Comments

The lack of willpower is a heuristic which doesn’t require the brain to explicitly track & prioritize & schedule all possible tasks, by forcing it to regularly halt tasks—“like a timer that says, ‘Okay you’re done now.’”

If one could override fatigue at will, the consequences can be bad. Users of dopaminergic drugs like amphetamines often note issues with channeling the reduced fatigue into useful tasks rather than alphabetizing one’s bookcase.

In more extreme cases, if one could ignore fatigue entirely, then analogous to lack of pain, the consequences could be severe or fatal: ultra-endurance cyclist Jure Robič would cycle for thousands of kilometers, ignoring such problems as elaborate hallucinations, and was eventually killed while cycling.

The ‘timer’ is implemented, among other things, as a gradual buildup of adenosine, which creates sleep homeostatic drive pressure and possibly physical fatigue during exercise (Noakes 2012, Martin et al 2018), leading to a gradually increasing subjectively perceived ‘cost’ of continuing with a task/staying awake/continuing athletic activities, which resets when one stops/sleeps/rests. (Glucose might work by gradually dropping over perceived time without rewards.)

Since the human mind is too limited in its planning and monitoring ability, it cannot be allowed to ‘turn off’ opportunity cost warnings and engage in hyperfocus on potentially useless things at the neglect of all other things; procrastination here represents a psychic version of pain.

Source: https://gwern.net/Backstop

What causes us to sometimes try harder? I play chess once in a while, and I've noticed that sometimes I play half-heartedly and end up losing. However, sometimes, I simply tell myself that I will try harder and end up doing really well. What's stopping me from trying hard all the time?

Wonderful, thank you!

Bad question, but curious why it's called "mechanistic"?

Let me try to apply this approach to my views on economic progress.

To do that, I would look at the evidence in favour of economic progress being a moral imperative (e.g. improvements in wellbeing) and against it (development of powerful military technologies), and then make a level-headed assessment that's proportional to the evidence.

It takes a lot of effort to keep my beliefs proportional to the evidence, but no one said rationality is easy.

Do you notice your beliefs changing over time to match whatever is most self-serving? I know that some of you enlightened LessWrong folks have already overcome your biases and biological propensities, but I notice that I haven't.

Four years ago, I was a poor university student struggling to make ends meet. I didn't have a high paying job lined up at the time, and I was very uncertain about the future. My beliefs were somewhat anti-big-business and anti-economic-growth.

However, now that I have a decent job, which I'm performing well at, my views have shifted towards pro-economic-growth. I notice myself finding Tyler Cowen's argument that economic growth is a moral imperative quite compelling because it justifies my current context.

Amazing. I look forward to getting myself a copy 😄

Will the Sequences Highlights become available in print on Amazon?

Have you come across the work of Yann LeCun on world models? LeCun is very interested in generality. He calls generality the "dark matter of intelligence". He thinks that to achieve a high degree of generality, the agent needs to construct world models.

Insects have highly simplified world models, and that could be part of the explanation for the high degree of generality exhibited by insects. For example, the fact the male Jewel Beetle fell in love with beer bottles mistaking them for females is strong evidence that beetles have highly simplified world models.

I see what you mean now. I like the example of insects. They certainly do have an extremely high degree of generality despite their very low level of intelligence.

Oh, I'm not making the argument that randomly permuting the Rubik's Cube will always solve it in a finite time, but that it might. I think it has a better chance of solving it than the chicken. The chicken might get lucky and knock the Rubik's Cube off the edge of a cliff and it might rotate by accident, but other than that, the chicken isn't going to do much work on solving it in the first place. Meanwhile, randomly permuting might solve it (or might not solve it in the worst case). I just think that random permutations have a higher chance of solving it than the chicken, but I can't formally prove that.

We can demonstrate this with a test.

- Get a Rubik's Cube-playing robotic arm, and ask it to flip a shuffled Rubik's Cube randomly until it's solved. How many years will it take until it's solved? It's some finite time, right? Millions of years? Billions of years?

- Get a chicken and give it a Rubik's Cube and ask it to solve it. I don't think it will perform better than our random robot above.

I just think that randomness is a useful benchmark for performance on accomplishing tasks.
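To put a rough number on the random robot, here is a back-of-the-envelope sketch. It assumes the robot applies uniformly random face turns, so the cube performs a random walk over its 43,252,003,274,489,856,000 reachable states. For such a walk the uniform distribution is stationary, and the expected number of moves to return to any particular state (such as the solved one) equals the number of states. The move rates below are hypothetical, and the expected time from a shuffled start is a slightly different quantity, though I would expect it to be of the same order of magnitude.

```python
# Number of reachable configurations of a standard 3x3x3 Rubik's Cube.
CUBE_STATES = 43_252_003_274_489_856_000

SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def expected_return_years(moves_per_second: float) -> float:
    """Mean years for a uniform random walk on the cube to return to one given state.

    With a uniform stationary distribution, the expected return time in moves
    equals the number of states (Kac's lemma).
    """
    expected_moves = CUBE_STATES
    return expected_moves / moves_per_second / SECONDS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical robot speeds, chosen only for illustration.
    for rate in (1, 1_000, 1_000_000):
        print(f"{rate:>9} moves/s -> about {expected_return_years(rate):.2e} years")
```

So under these assumptions the answer is finite on average, but at one move per second it comes out at roughly a trillion years rather than millions or billions.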

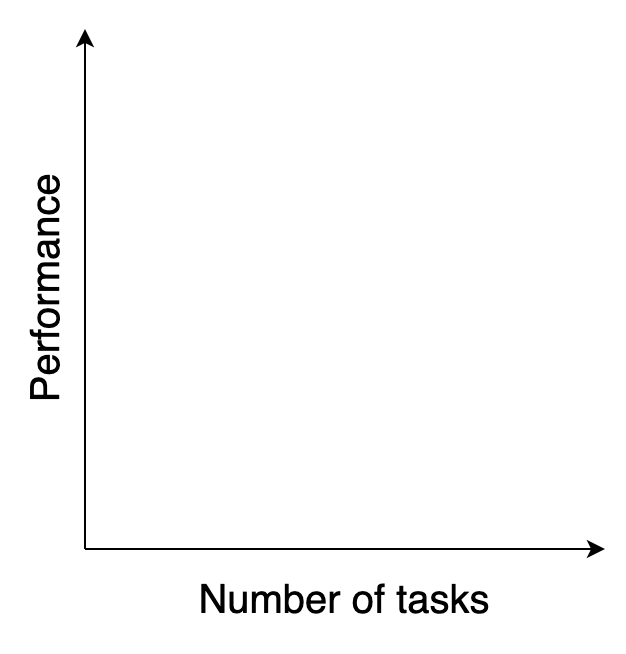

I imagine the relationship differently. I imagine a relationship between how well a system can perform a task and the number of tasks the same system can accomplish.

Does a chicken have a general intelligence? A chicken can perform a wide range of tasks with low performance, and performs most tasks worse than random. For example, could a chicken solve a Rubik's Cube? I think it would perform worse than random.

Generality to me seems like an aggregation of many specialised processes working together seamlessly to achieve a wide variety of tasks. Where do humans sit on my scale? I think we are pretty far along the x axis, but not too far up the y axis.

For your orthogonality thesis to be right, you have to demonstrate the existence of a system that's very far along the x axis but exactly zero on the y axis. I argue that such a system is equivalent to a system that sits at zero in both the x and y axes, and hence we have a counterexample.

Imagine a general intelligence (e.g. a human) with damage to a certain specialised part of their brain, e.g. short-term memory. They will have the ability to do a very wide variety of tasks, but they will struggle to play chess.

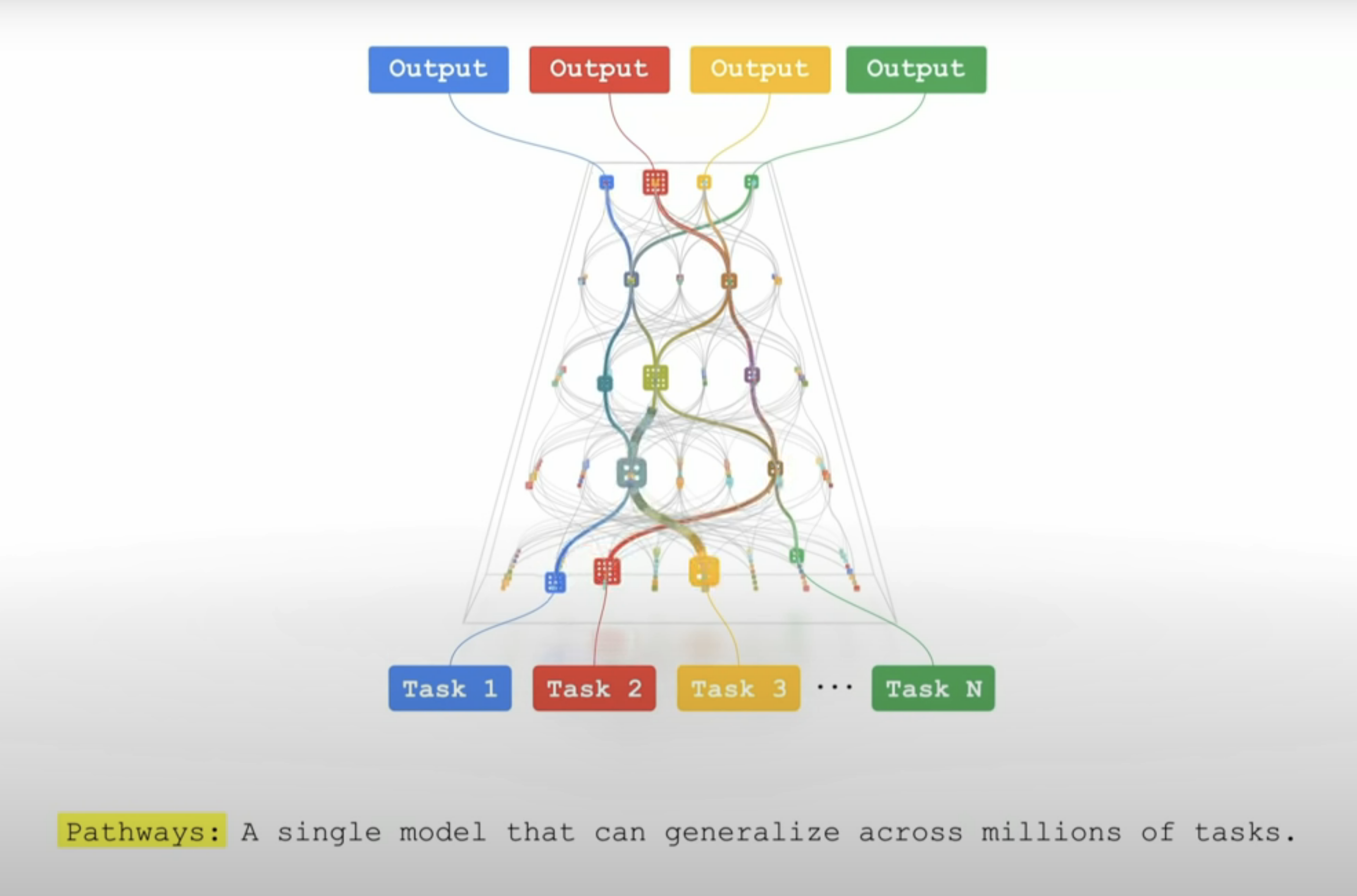

Jeff Dean has proposed a new approach to ML he calls System Pathways in which we connect many ML models together such that when one model learns, it can share its learnings with other models so that the aggregate system can be used to achieve a wide variety of tasks.

This would reduce duplicate learning. Sometimes two specialised models end up learning the same thing, but when those models are connected together, we only need to do the learning once and then share it.

If Turing Completeness turns out to be all we need to create a general intelligence, then I argue that any entity capable of creating computers is generally intelligent. The only living organism we know of that has succeeded in creating a computer is humans. Creating computers seems to be some kind of intelligence escape velocity. Once you create computers, you can create more intelligence (and maybe destroy yourself and those around you in the process).

When some people hear the words "economic growth" they imagine factories spewing smoke into the atmosphere:

This is a false image of what economists mean by "economic growth". Economic growth according to economists is about achieving more with less. It's about efficiency. It is about using our scarce resources more wisely.

The stoves of the 17th century had an efficiency of only 15%. Meanwhile, the induction cooktops of today achieve an efficiency of 90%. Pre-16th century kings didn't have toilets, but 54% of humans today have toilets all thanks to economic progress.

Efficiency, if harnessed safely, benefits us all. It means we can get more value for less scarce resources, thus increasing the overall pie.

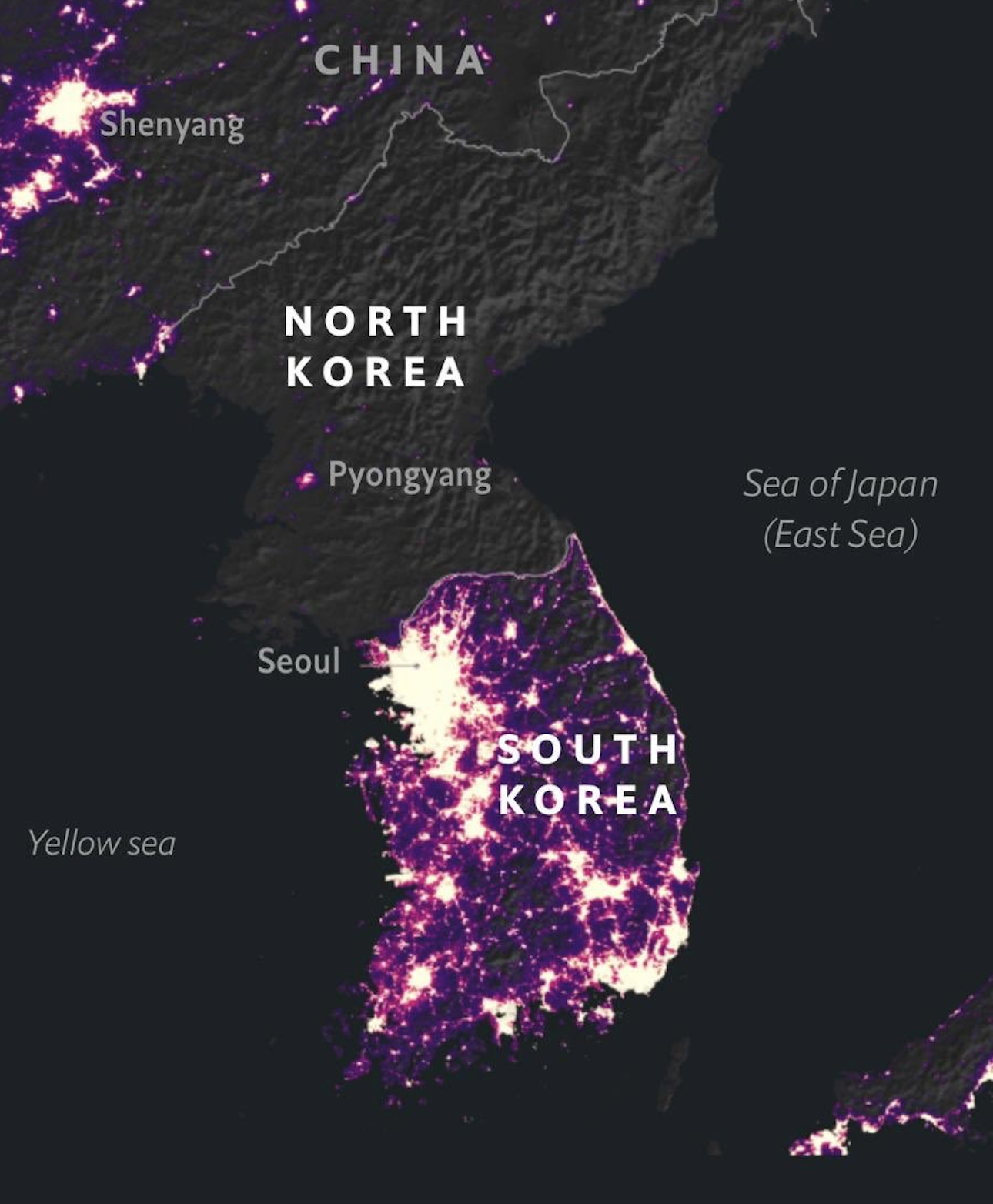

Will economic growth create more inequality due to rent seeking and wealth concentration? The evidence suggests that as GDP (not a perfect measure, but a decent one) grows, per capita incomes also grow. I’d rather be a peasant in South Korea than an average government employee in North Korea.

Hello, I have a question. I hope someone with more knowledge can help me answer it.

There is evidence suggesting that building an AGI requires plenty of computational power (at least early on) and plenty of smart engineers/scientists. The companies with the most computational power are Google, Facebook, Microsoft and Amazon. These same companies also have some of the best engineers and scientists working for them. A recent paper by Yann LeCun titled A Path Towards Autonomous Machine Intelligence suggests that these companies have a vested interest in actually building an AGI. Given these companies want to create an AGI, and given that they have the scarce resources necessary to do so, I conclude that one of these companies is likely to build an AGI.

If we agree that one of these companies is likely to build an AGI, then my question is this: is it most pragmatic for the best alignment researchers to join these companies and work on the alignment problem from the inside? Working alongside people like LeCun and demonstrating to them that alignment is a serious problem and that solving it is in the long-term interest of the company.

Assume that an independent alignment firm like Redwood or Anthropic actually succeeds in building an "alignment framework". Getting such a framework into Facebook and persuading Facebook to actually use it remains an unaddressed challenge. The fact that people like Chris Olah used to work at Google but left tells me that there is something crucial missing from my model. Could someone please enlighten me?

Additionally, your analogy doesn't map well to my comment. A more accurate analogy would be to say that active volcanoes are explicit and non-magical (similar to reason), while inactive volcanoes are mysterious and magical (similar to intuitions), when both phenomena have the same underlying physical architecture (rocks and pressure for volcanoes and brains for cognition), but manifest differently.

I just reckon that we are better off working on understanding how the black box actually works under the hood instead of placing arbitrary labels and drawing lines in the sand on things we don't understand, and then debating those things we don't understand with verve. Labelling some cognitive activities as reason and others as intuitions doesn't explain how either phenomenon actually works.

Thanks for your insights, Vladimir. I agree that Abstract Algebra, Topology and Real Analysis don’t require much in terms of prerequisites, but I think without sufficient mathematical maturity, these subjects will be rough going. I should’ve made clear that by “Sets and Logic” I didn’t mean a full-fledged course on Advanced Set Theory and Logic, but rather simple familiarity with the axiomatic method through books like Naive Set Theory by Halmos and Book of Proof by Hammack.

A map displaying the prerequisites of the areas of mathematics relevant to CS/ML:

A dashed line means this prerequisite is helpful but not a hard requirement.

Almost any technology has the potential to cause harm in the wrong hands, but with [superintelligence], we have the new problem that the wrong hands might belong to the technology itself.

Excerpt from "Artificial Intelligence: A Modern Approach" by Norvig and Russell.

I would define "physical" as the set of rigid rules governing reality that exist beyond our experience and that we cannot choose to change.

I can cause water to freeze to form ice using my agency, but I cannot change the fundamental rules governing water, such as its freezing point. These rules go beyond my agency and, in fact, constrain my agency.

Physics constrains everything else in a way that everything else does not constrain physics, and thus the primacy of physics.

if there's one superpower close to reaching the power level of everyone else combined, then everyone-else will ally to bring them down, maintaining a multipolar balance of power.

I hope they don't use nukes when they do that because that way, everyone loses.

I've read "The Elephant in the Brain", and it was certainly a breathtaking read. I should read it again.

That's an eloquent way of describing morality.

It would be so lovely if we lived in a world where any means of moneymaking helped us move uphill on the global morality landscape comprising the desires of all beings. That way, we could make money without feeling guilty about doing it. Designing a mechanism like this is probably impossible.

I wish moneymaking was by default aligned with optimising for the "good". That way, I can focus on making money without worrying too much about the messiness of morality. I wholly believe that existential risks are unequivocally the most critical issues of our time because the cost of neglecting them is so enormous, and my rational self would like to work directly on reducing them. However, I'm also profoundly programmed through millions of years of evolution to want a lovely house in the suburbs, a beautiful wife, some adorable children, lots of friends, etc. I do not claim that the two are impossible to reconcile. Instead, I argue that it's easier to achieve status maximising goals without caring too much about the morality of my career. I'd like to not feel guilty for my natural propensity toward earning more status. (When I say status, I don't mean rationality community status, I mean broader society status.)

I agree. I don't think this kind of behaviour is the worst thing in the world. I just think it is instrumentally irrational.

Premise: people are fundamentally motivated by the "status" rewarded to them by those around them.

I have experienced the phenomenon of demandingness described in your post, and you've elucidated it brilliantly. I regularly frequent in-person EA events, and I can visibly see status being rewarded according to impact, which is very different from how it's typically rewarded in the broader society. (This is not necessarily a bad thing.) The status hierarchy in EA communities goes something like this:

- People who've dedicated their careers to effective causes. Or philosophers at Oxford.

- People who facilitate people who've dedicated their careers to effective causes, e.g. research analysts.

- People who donate 99% of their income to effective causes.

- People who donate 98% of their income to effective causes.

- ...

- People who donate 1% of their income to effective causes.

- People who donate their time and money to ineffective causes.

- People who don't donate.

- People who think altruism is bad.

This hierarchy is very "visible" within the in-person circles I frequent, being enforced by a few core members. I recently convinced a non-EA friend to tag along, and following the event, they said, "I felt incredibly unwelcomed". Within 5 minutes, one of the organisers asked my friend, "What charities do you donate to?" My friend said, "I volunteer at a local charity, and my SO works in sexual health awareness." Following a bit of back and forth debate, the EA organiser looked disappointed and said "I'm confused.", then turned his back on my friend. [This is my vague recollection of what happened, it's not an exact description, and my friend had pre-existing anti-EA biases.]

Upholding the core principles of EA is necessary. Without upholding particular principles at the expense of the rest, the organisation ceases. However, the thing about optimisation and effectiveness is that if we're naively and greedily maximising, we're probably doing it wrong. If we are pushing people away from the cause by rewarding them with low status as soon as we meet them, we will not be winning many allies.

If we reward low status to people who don't donate as much as others, we might cause these people to halt their donations, quit our game, and instead play a different game in which they are rewarded with relatively more status.

I don't know how to solve this problem either, and I think it is hard. We can only do so much to "design" culture and influence how status is rewarded within communities. Culture is mostly a thing that just happens due to many agents interacting in a world.

I watched an interview with Toby Ord a while back, and during the Q&A session, the interviewer asked Ord:

Given your analysis of existential risks, do you think people should be donating purely to long-term causes?

Ord's response was fantastic. He said:

No. I do think this is very important, and there is a strong case to be made that this is the central issue of our time. And potentially the most cost-effective as well. Effective Altruism would be much the worse if it specialised completely in one area. Having a breadth of causes that people are interested in, united by their interest in effectiveness is central to the community's success. [...] We want to be careful not to get into criticising each other for supporting the second-best thing.

Extending this logic, let’s not get into criticising people for doing good. We can argue and debate how we can do good better, but let’s not attack people for doing whatever good they can and are willing to do.

I have seen snide comments about Planned Parenthood floating around rationalist and EA communities, and I find them distasteful. Yeah, sure, donating to malaria charities saves more lives. But again, the thing about optimisation is that if we are pushing people away from our cause by being parochial, then we're probably doing a lousy job at optimising.

Loved your comment, especially the “goodharting” interjections haha.

Your comment reminded me of “building” company culture. Managers keep trying to sculpt a company culture, but in reality managers have limited control over the culture. Company culture is more a thing that happens and evolves, and you as an individual can only do so much to influence it this way or that way.

Similarly, status is a thing that just happens and evolves in human society, and sometimes it has good externalities and other times it has bad externalities.

I quite liked “What You Do Is Who You Are” by Ben Horowitz. I thought it offered a practical perspective on creating company culture by focusing on embodying the values you’d like to see instead of just preaching them and hoping others embody them.

I recently read Will Storr's book "The Status Game" based on a LessWrong recommendation by user Wei_Dai. It's an excellent book, and I highly recommend it.

Storr asserts that we are all playing status games, including meditation gurus and cynics. Then he classifies the different kinds of status games we can play, arguing that "virtue dominance" games are the worst kinds of games, as they are the root of cancel culture.

Storr has a few recommendations for playing the Status Game to result in a positive-sum. First, view other people as being the heroes of their own life stories. If everyone else is the hero of their own story, which character do you want to be in their lives? Of course, you'd like to be a helpful character.

Storr distils what he believes to be "good" status games into three categories. They are:

- Warmth: When you are warm, you communicate, "I'm not going to play a dominance game with you." You imply that the other person will not get threats from you and that they are in a safe place around you.

- Sincerity: Sincerity is about levelling with other people and being honest with them. It signals to someone else that you will tell them when things are going badly and when things are going well. You will not be morally unfair to them or allow resentment to build up and then surprise them with a sudden burst of malice.

- Competence: Competence is just success and it signals that you can achieve goals and be helpful to the group.

I thought this book offered an interesting perspective on an integral aspect of being a human, status.

Hello, thank you for the post!

All images on this post are no longer available. I'm wondering if you're able to embed them directly into the rich text :)

This post has brilliantly articulated a crucial idea. Thank you!

Microfoundations for macroeconomics is a step in the right direction towards a gears-level understanding of economics. Still, our current understanding of cognition and human nature is primarily based on externally-visible behaviour and not on gears. Do you think we are progressing in the right direction within microeconomics towards more gears-level agent models?

I read the arguments against microfoundations, and some opponents point to "feedback loops". They claim that the arrow of causation is bidirectional between agent behaviour and macroeconomics. For example, agents anticipating an interest rate increase change how they behave. Curious to know what you think about this line of argumentation.

Causation goes from the lower levels to the higher levels. E.g. we cannot choose to change the laws of physics, but the laws of physics entirely cause everything we experience. Are these "feedback loops" an illusion created by our confusion and lack of gears-level causal understanding, or are they actual gears?

This reminds me of the book "Four Thousand Weeks". The core idea is that if you become productive at doing something, then society will want you to do more of that thing. For example, if you were good at responding to email, always prompt, and never missing an email, society would send you more emails because you had built a reputation of being good at responding to email.

Excellent post, thanks, Eli. You've captured some core themes and attitudes of rationalism quite well.

I find the "post" prefix unhelpful whenever I see it used. It implies a final state of whatever it is referring to.

What meaning of "rationality" does "post-rationality" refer to? Is "post-rationality" referring to "rationality" as a cultural identity, or is it referring to "rationality" as a process of optimisation towards achieving some desirable states of the world?

There is an important distinction between someone identifying as a rationalist but acting in self-defeating and antisocial ways and the abstract concept of optimisation itself.

I started attending in-person LessWrong meetups a few months ago, and I've found that they attract a wide range of people. Of course, there are the abrasive "truth-seekers" who won't miss an opportunity to make others feel terrible for saying anything that they deem to be factually or morally imperfect. However, on the whole, this is not much different from any other group of people I engage with. I fail to see how prefixing a word with "post" solves any problems.

Oh, it wouldn't eliminate all selection bias, but it certainly would reduce it. I said "avoid selection bias," but I changed it to "reduce selection bias" in my original post. Thanks for pointing this out.

It's tough to extract completely unbiased quasi-experimental data from the world. A frail elder dying from a heart attack during the volcanic eruption certainly contributes to selection bias.

A missing but essential detail: the government compensated these people and provided them with relocation services. Therefore, even the frail were able to relocate.

Recently I came across this brilliant example of reducing selection bias when extracting quasi-experimental data from the world, towards the beginning of the book "Good Economics for Hard Times" by Banerjee and Duflo.

The authors were interested in understanding the impact of migration on income. However, most data on migration contains plenty of selection bias. For example, people who choose to migrate are usually audacious risk-takers or have the physical strength, know-how, funds and connections to facilitate their undertaking.

To reduce these selection biases, the authors looked at people forced to relocate due to rare natural disasters, such as volcano eruptions.
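A toy simulation can make the difference concrete. This is only a sketch under made-up assumptions, not the authors' data or method: every number below (the income scale, the hypothetical `true_effect` of 10, the 30% displacement rate) is invented for illustration. A latent `ability` trait stands in for risk appetite, funds and connections. It raises income and also makes voluntary migration more likely, whereas a disaster displaces people regardless of it.

```python
import random
import statistics


def simulate(n=50_000, true_effect=10.0, seed=0):
    """Compare a naive migrant/non-migrant income gap with a forced-relocation gap."""
    rng = random.Random(seed)
    chosen_movers, chosen_stayers = [], []
    forced_movers, forced_stayers = [], []
    for _ in range(n):
        # Hypothetical latent trait: risk appetite, funds, connections.
        ability = rng.gauss(0, 1)
        base_income = 50 + 5 * ability + rng.gauss(0, 1)

        # Scenario 1: self-selected migration, more likely for high-ability people.
        moves_by_choice = ability + rng.gauss(0, 1) > 1
        income = base_income + (true_effect if moves_by_choice else 0.0)
        (chosen_movers if moves_by_choice else chosen_stayers).append(income)

        # Scenario 2: forced relocation, independent of ability.
        displaced = rng.random() < 0.3
        income = base_income + (true_effect if displaced else 0.0)
        (forced_movers if displaced else forced_stayers).append(income)

    naive = statistics.mean(chosen_movers) - statistics.mean(chosen_stayers)
    quasi = statistics.mean(forced_movers) - statistics.mean(forced_stayers)
    return naive, quasi


if __name__ == "__main__":
    naive, quasi = simulate()
    print(f"naive gap (self-selected migrants): {naive:.1f}")
    print(f"gap under forced relocation:        {quasi:.1f}")
```

In this setup the naive comparison overstates the effect of moving because movers were already better off, while the forced-relocation comparison lands close to the assumed true effect.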

Words cannot possibly express how thankful I am for you doing this!

I bet that most of them would replicate flawlessly. Boring lab techniques and protein structure dominate the list, nothing fancy or outlandish. Interestingly, the famous papers like relativity, expansion of the universe, the discovery of DNA etc. don't rank anywhere near the top 100. There is also a math paper on fuzzy sets among the top 100. Now that's a paper that definitely replicates!

Excellent article! I agree with your thesis, and you’ve presented it very clearly.

I largely agree that we cannot outsource knowledge. For example, you cannot outsource the knowledge to play the violin, and you must invest in countless hours of deliberate practice to learn to play the violin.

A rule of thumb I like is only to delegate things that you know how to do yourself. A successful startup founder is capable of comfortably stepping into the shoes of anyone they delegate work to. Otherwise, they would have no idea what high-quality work looks like and how long work is expected to take. The same perspective applies to wanting to cure ageing with an investment of a billion dollars. If you don’t know how to do the work yourself, you have little chance of successfully delegating that work.

Do you think outsourcing knowledge to experts would be more feasible if we had more accurate and robust mechanisms for distinguishing the real experts from the noise?

The orb-weaving spider. I updated my original post to include the name.

Excellent write-up. Thanks, Elizabeth.

I'm a software engineer at a company that implements a "20%". Every couple of months, we have a one (sometimes two) week sprint for the 20%. As you've pointed out, it works out to be less than 20%, and many engineers choose to keep working on their primary projects to catch up on delivery dates.

In the weeks leading up to the 20% sprint, we create a collaborative table in which engineers propose ideas and pitch those ideas in a meeting on the Monday morning of the sprint. Proposals fall into two categories:

- Reducing technical debt. E.g. deprecating the usage of an old library.

- Prototyping a new idea. E.g. trying out the performance of a new library.

I find the 20% sprints very valuable. A lot of the time, there is work I would like to be done that doesn't fit well within "normal" priorities. I believe such work to be valuable based on my experience and knowledge. However, it doesn't have sufficient visibility from the perspectives of the higher levels. Therefore, this sort of work would never make its way into our everyday work without the 20% sprint.

On the mating habits of the orb-weaving spider:

These spiders are a bit unusual: females have two receptacles for storing sperm, and males have two sperm-delivery devices, called palps. Ordinarily the female will only allow the male to insert one palp at a time, but sometimes a male manages to force a copulation with a juvenile female, during which he inserts both of his palps into the female’s separate sperm-storage organs. If the male succeeds, something strange happens to him: his heart spontaneously stops beating and he dies in flagrante. This may be the ultimate mate-guarding tactic: because the male’s copulatory organs are inflated, it is harder for the female (or any other male) to dislodge the dead male, meaning that his lifeless body acts as a very effective mating plug. In species where males aren’t prepared to go to such great lengths to ensure that they sire the offspring, then the uncertainty over whether the offspring are definitely his acts as a powerful evolutionary disincentive to provide costly parental care for them.

I think that you raise a crucial point. I find it challenging to explain to people that AI is likely very dangerous. It’s much easier to explain that pandemics, nuclear wars or environmental crises are dangerous. I think this is mainly due to the abstractness of AI and the concreteness of those other dangers, leading to availability bias.

The most common counterarguments I've heard from people about why AI isn't a serious risk are:

- AI is impossible, and it is just "mechanical" and lacks some magical properties only humans have.

- When we build AIs, we will not embed them with negative human traits such as hate, anger and vengeance.

- Technology has been the most significant driver of improvements in human well-being, and there's no solid evidence that this will change.

I have found that comparing the relationship between humans and chimpanzees with humans and hypothetical AIs is a good explanation that people find compelling. There's plenty of evidence suggesting that chimpanzees are pretty intelligent, but they are just not nearly intelligent enough to influence human decision making. This has resulted in chimps spending their lives in captivity across the globe.

Another good explanation is based on insurance. The probability that your house will be destroyed is small, but it's still prudent to buy home insurance. Suppose you believe that the likelihood that AI will be dangerous is small. Is it not wise that we insure ourselves by dedicating resources towards the development of safe AI?

This is a list of the top 100 most cited scientific papers. Reading all of them would be a fun exercise.

Speculating about this is a fun exercise. I argue that the answer is less probable.

The survivors might have a more substantial commitment to life affirmation given that the fragility of life is so fresh in their minds following Armageddon. I argue that this would have a minimal effect. We know that the dinosaurs went extinct, and we know that the average lifespan for a mammalian species is about one million years. We know that we have fought world wars, and we know that life is precious and unreasonably rare in the universe. Yet, in aggregate, we still don't care that much about safeguarding the future of life. Existential risk reduction doesn't factor heavily into our decision making and how we allocate our resources.

In terms of technological progress, I think a post-nuclear war civilisation would likely quickly bootstrap itself, given the abundance of already extracted raw materials and knowledge left behind. However, on the whole, Armageddon would be a severe hindrance to technological progress. Political, social and economic stability appears advantageous to the creation of knowledge.

Thanks for all the excellent writing on economic progress you've put out. I completed reading "Creating a Learning Society" by Joseph Stiglitz a few days ago, and I am in the process of writing a review of that book to share here on LessWrong. Your essays are providing me with a lot of insights that I hope to take into account in my review :D

The theory of ‘morality as cooperation’ (MAC) argues that morality is best understood as a collection of biological and cultural solutions to the problems of cooperation recurrent in human social life. MAC draws on evolutionary game theory to argue that, because there are many types of cooperation, there will be many types of morality. These include: family values, group loyalty, reciprocity, heroism, deference, fairness and property rights. Previous research suggests that these seven types of morality are evolutionarily-ancient, psychologically-distinct, and cross-culturally universal. The goal of this project is to further develop and test MAC, and explore its implications for traditional moral philosophy. Current research is examining the genetic and psychological architecture of these seven types of morality, as well as using phylogenetic methods to investigate how morals are culturally transmitted. Future work will seek to extend MAC to incorporate sexual morality and environmental ethics. In this way, the project aims to place the study of morality on a firm scientific foundation.

Source: https://www.lse.ac.uk/cpnss/research/morality-as-cooperation.

Yes, it looks isomorphic. Thanks for sharing your write-up. You've captured this idea well.

I appreciate how Toby Ord considers "knock-on effects" in his modelling of existential risks, as presented in "The Precipice". A catastrophe doesn't necessarily have to cause extinction for it to be considered an existential threat. The reason is knock-on effects, which would undoubtedly impair our preparedness for what comes next.