Comments

> Like Wolfram, present a diffusion model as a world of concepts. But remove the noise, make the generated concepts like pictures in an art gallery (so make 2D pictures stand upright like pictures in this 3D simulated art gallery), this way gamers and YouTubers will see how dreadful those models really are inside. There is a new monster every month on YT, they get millions of views. We want the public to know that AI companies make real-life Frankenstein monsters with some very crazy stuff inside of their electronic "brains" (inside of AI models). It can help to spread the outrage, if people also see their personal photos are inside of those models. If they used the whole output of humanity to train their models, those models should benefit the whole humanity, not cost $200/month like paid ChatGPT. People should be able to see what's in the model, right now a chatbot is like a librarian that spits quotes at you but doesn't let you enter the library (the AI model).

Okay, so you propose a mechanistic interpretability program where you create a virtual gallery of AI concepts extracted from Stable Diffusion, represented as images. I am slightly skeptical that this would move the needle on AI safety significantly. We already have databases like LAION, which are open-source datasets of scraped images used to train AI models, and I don't see that much outrage over them. There is some outrage, but not enough for it to be the cornerstone of an AI safety plan.

> gamers and YouTubers will see how dreadful those models really are inside. There is a new monster every month on YT, they get millions of views. We want the public to know that AI companies make real-life Frankenstein monsters with some very crazy stuff inside of their electronic "brains" (inside of AI models).

What exactly do you envision that is being hidden inside these Stable Diffusion concepts? What "crazy stuff" is in it? I'm currently not aware of anything about their inner representations that is especially concerning.

> It can help to spread the outrage, if people also see their personal photos are inside of those models.

It is probably a lot more efficient to show that by modifying the LAION database and slapping some sort of image search on it, so people can see that their pictures were used to train the model.

Well, this assumes that we control most of the world's GPUs and that we have "Math-Proven Safe GPUs" that can block the execution of bad AI models and only output safe AIs (how this is achieved is not really explained in the text). If we grant this, then AI safety already gets a lot easier.

This is a solution, but one similar to "nuke all the datacenters", and I don't see how this outlines any steps that get us closer to achieving it.

A helpful page to see and subscribe to all 31 Substack writers (out of 122 total) who were invited to LessOnline: https://lessonline2025invitedlist.substack.com/recommendations

I guess this is another case of 'Universal' Human Experiences That Not Everyone Has

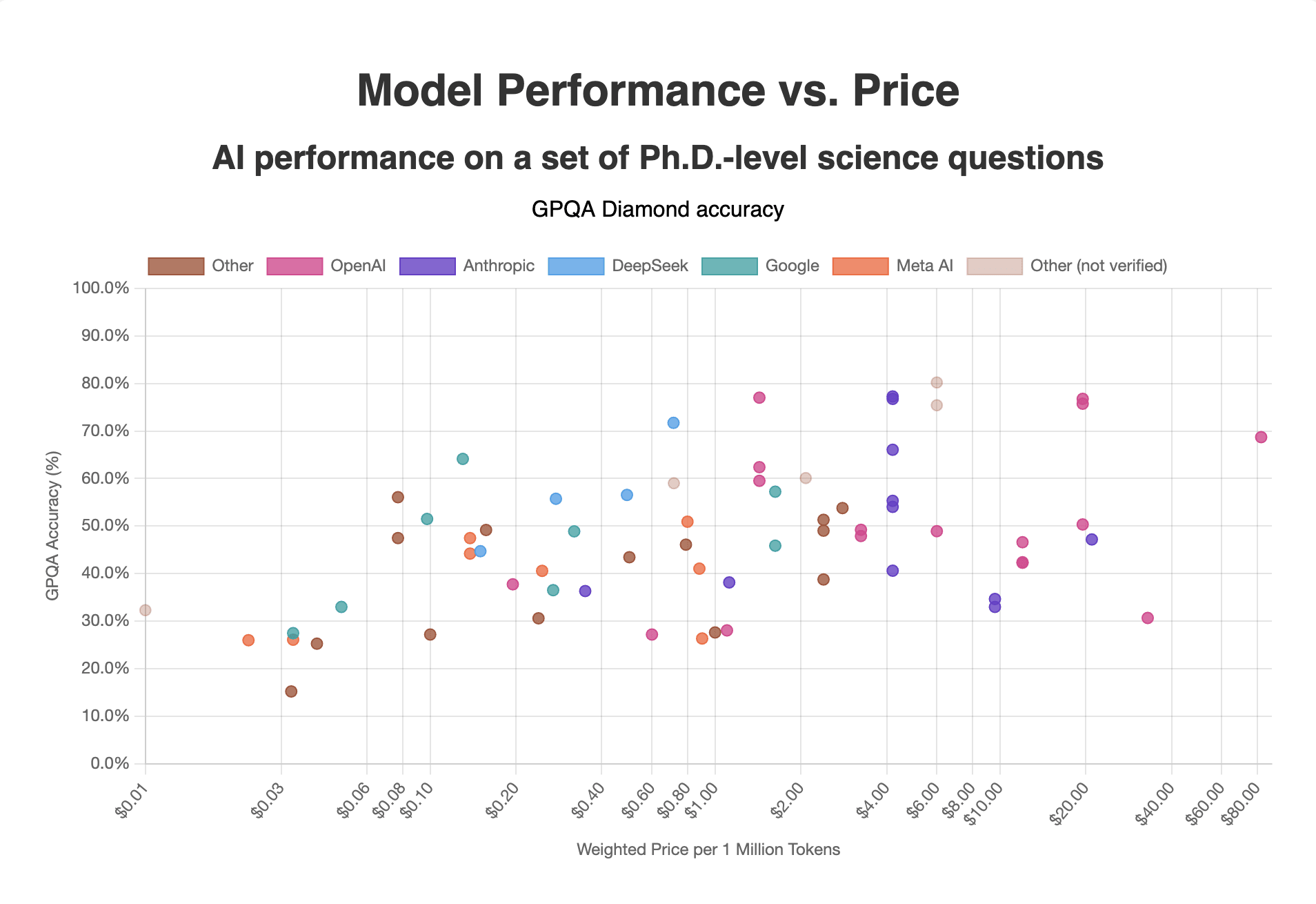

Made a small, quick website showing GPQA benchmark scores plotted against LLM inference cost, at https://ai-benchmark-price.glitch.me/. See how much you get for your buck:

Most benchmark data is from Epoch AI, except for those marked "not verified", which I got from the model developer. Pricing data is from OpenRouter.

All the LLMs on this graph which are on the Pareto frontier of performance vs price were released December 2024 or later...
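To make "on the Pareto frontier" concrete: a model is on the frontier if no other model is both cheaper and better. Below is a minimal Python sketch of how such a frontier could be computed. It is not the code behind the site, and the model names, prices, and scores are made-up placeholders, not real benchmark data.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    price: float  # hypothetical cost in USD per million tokens
    score: float  # hypothetical GPQA accuracy in percent


def pareto_frontier(models):
    """Models that no other model beats on both price and score.

    Sort by price (cheapest first, best score first among equal prices)
    and keep each model whose score beats every model earlier in that order.
    Exact ties in both price and score keep only one entry.
    """
    frontier = []
    best_score = float("-inf")
    for m in sorted(models, key=lambda m: (m.price, -m.score)):
        if m.score > best_score:
            frontier.append(m)
            best_score = m.score
    return frontier


# Placeholder data, not real benchmark results or prices.
models = [
    Model("model-a", 1.0, 50.0),
    Model("model-b", 2.0, 45.0),
    Model("model-c", 3.0, 70.0),
    Model("model-d", 0.5, 30.0),
]

print([m.name for m in pareto_frontier(models)])  # ['model-d', 'model-a', 'model-c']
```

Here `model-b` drops out because `model-a` is both cheaper and scores higher.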

Zvi has a Substack, and his posts usually get more comments there than on LessWrong: https://thezvi.substack.com/p/levels-of-friction/comments

This particular post has 30+ comments in that link

Here's a link to an NYT article about that: https://www.nytimes.com/interactive/2025/03/03/world/europe/ukraine-russia-war-drones-deaths.html

Here are some quotes:

> Drones, not the big, heavy artillery that the war was once known for, inflict about 70 percent of all Russian and Ukrainian casualties, said Roman Kostenko, the chairman of the defense and intelligence committee in Ukraine’s Parliament. In some battles, they cause even more — up to 80 percent of deaths and injuries, commanders say.

> The conflict now bears little resemblance to the war’s early battles, when Russian columns lumbered into towns and small bands of Ukrainian infantry moved quickly, using hit-and-run tactics to slow the larger enemy.

> [T]oday most soldiers die or lose limbs to remote-controlled aircraft rigged with explosives, many of them lightly modified hobby models. Drone pilots, in the safety of bunkers or hidden positions in tree lines, attack with joysticks and video screens, often miles from the fighting.

> Ukrainian officials said they had made more than one million first-person-view, or FPV, drones in 2024. Russia claims it can churn out 4,000 every day. Both countries say they are still scaling up production, with each aiming to make three to four million drones in 2025.

> They’re being deployed far more often, too. With each year of the war, Ukraine’s military has reported huge increases in drone attacks by Russian forces.
>
> 2022: 2,600+ reported attacks
>
> 2023: 4,700+ reported attacks
>
> 2024: 13,800+ reported attacks

People are better defined by smaller categories.

If someone is part of both a large category and a small category that usually don’t overlap, it’s likely that they are an outlier in the large category, not a representative member.

For example, if someone is both a rationalist and a Muslim, you should expect them to be much more similar to a typical rationalist than to a typical Muslim, and they may not be very good at representing Muslims in general to a rationalist audience.

The category of “social construction” is a social construct.

Good job guessing correctly that it was Google! Here’s the arXiv preprint.

UBI would probably work better in Kenya than in the US. When people are in extreme poverty, funds get used to meet pressing needs, but once these basic needs are met, extra funds are probably more likely to go towards more leisure time. People in Kenya generally have more low-hanging fruit, like buying a motorcycle or renovating a home, but in the US, major life-changing purchases probably cost too much for UBI to cover, and the income might be spent on something like alcohol.

Why are comments on older posts sorted by date, but comments on newer posts are sorted by top scoring?

What about a goal that isn't competitive, such as "get grade 8 on the ABRSM music exam for <instrument>"? Plenty of Asian parents have that particular goal and yet they usually ask/force their children to practice daily. Is this irrational, or is it good at achieving this goal? Would we be able to improve efficiency by using spaced repetition in this scenario as opposed to daily practice?

If spaced repetition is the most efficient way of remembering information, why do people who learn a music instrument practice every day instead of adhering to a spaced repetition schedule?
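To make the contrast in the question concrete, here is a minimal sketch of an expanding-interval review schedule, assuming (hypothetically) that each gap doubles after every review. Real spaced repetition software adjusts intervals per item based on how well you recalled it, so `expanding_schedule` and its parameters are illustrative only.

```python
def expanding_schedule(first_gap: int, factor: float, horizon: int) -> list:
    """Review days (day 0 = first study) with gaps multiplied by `factor`.

    A hypothetical fixed-multiplier schedule, not any specific app's algorithm.
    """
    days = [0]
    gap = first_gap
    while days[-1] + gap <= horizon:
        days.append(days[-1] + gap)
        gap = max(1, round(gap * factor))  # grow the gap, never below one day
    return days


spaced = expanding_schedule(first_gap=1, factor=2.0, horizon=30)
daily = list(range(31))  # practising every day from day 0 to day 30
print(spaced)  # [0, 1, 3, 7, 15]
print(len(spaced), "vs", len(daily), "sessions")  # 5 vs 31 sessions
```

Over 30 days the doubling schedule uses 5 sessions against 31 for daily practice, which is the gap the question is asking about.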

hare/tortoise takeoff

This seems like the sort of R&D that China is good at: research that doesn't need superstar researchers and that is mostly made of incremental improvements. Yet they don't seem to be producing top LLMs. Why is that?

Google Gemini uses a watermarking system called SynthID that claims to be able to watermark text by skewing its probability distribution. Do you think it’ll be effective? Do you think it’s useful to have this?

The digital version of the SAT now uses adaptive testing, where you get harder questions later on if you answer the ones in the first section correctly, but it’s still about as difficult as the paper test, so tons of people still tie at 1600.

We call on our knowledge when something related triggers, so in order for a lesson to be useful, you need to build those connections and triggers in the student’s mind.

Seems related to trigger-action plans...

Such as this one!

Given that Biden has dropped out, do you believe that the market was accurately priced at the time?

LessWrong messed up the formatting, so in my home feed I saw a bet where I pay you $1000 if I lose but gain only $10, instead of $1,000,000,000, if I win.

Hello from 12 years in the future!

Try cruise control. It helps you drive your car in the same lane on highways and makes driving much less tiring.

Not necessarily related to your main point, but you could have downloaded a Markdown-based text editor and pasted what you copied into it to convert the text to Markdown, which Discord uses. A couple of them should support automatically converting HTML text to Markdown.

For example, I copied a portion of the article and pasted it into Obsidian (a markdown-based note system), and it formatted the text into Markdown for me. This is what it looked like in Discord:

Discord only supports the first 3 levels of headings, so the subheading isn't formatted, but everything else is fine. When I compared it with your rich-text editor, it matched perfectly.

True, but that's because the author is writing about working with Google Sheets, not Excel.

They're mentioned in the companion piece (Google Docs) linked at the bottom of this post. This isn't the full post.

I tried to visualize the entire dataset and look for patterns...

I decided to try mapping the entire group by connecting every pair of structures that differ in only one column. After doing so and playing around with the graph, I realised that lots of structures by the same person are connected and either all fail or all succeed. To investigate, I connected only structures by the same person, and the pattern appears very nicely:
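The graph construction described above can be sketched in Python. This is a minimal illustration, not the original analysis: the column names (`builder`, `material`, `height`, `outcome`) and the sample rows are hypothetical stand-ins for the real dataset, and connected groups are found with a simple union-find.

```python
from itertools import combinations

# Hypothetical sample data: column names and values are placeholders,
# not the real dataset's schema.
rows = [
    {"builder": "Alice", "material": "wood", "height": 3, "outcome": "fail"},
    {"builder": "Alice", "material": "stone", "height": 3, "outcome": "fail"},
    {"builder": "Bob", "material": "stone", "height": 3, "outcome": "succeed"},
    {"builder": "Bob", "material": "stone", "height": 5, "outcome": "succeed"},
]


def differs_by_one(a, b, ignore=frozenset({"outcome"})):
    """True if a and b differ in exactly one column (ignoring the outcome)."""
    keys = (a.keys() | b.keys()) - ignore
    return sum(a.get(k) != b.get(k) for k in keys) == 1


def one_column_edges(rows):
    """Edges between every pair of structures that differ in one column."""
    return [(i, j) for (i, a), (j, b) in combinations(enumerate(rows), 2)
            if differs_by_one(a, b)]


def same_person_edges(rows, person="builder"):
    """Edges between every pair of structures made by the same person."""
    return [(i, j) for (i, a), (j, b) in combinations(enumerate(rows), 2)
            if a[person] == b[person]]


def components(n, edges):
    """Connected components via union-find, as lists of row indices."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for i, j in edges:
        parent[find(i)] = find(j)
    groups = {}
    for i in range(n):
        groups.setdefault(find(i), []).append(i)
    return list(groups.values())


def uniform_outcome(rows, group):
    """True if every structure in the group has the same outcome."""
    return len({rows[i]["outcome"] for i in group}) == 1


for name, edges in [("one column", one_column_edges(rows)),
                    ("same person", same_person_edges(rows))]:
    for group in components(len(rows), edges):
        print(name, group, "uniform" if uniform_outcome(rows, group) else "mixed")
```

On these sample rows, the one-column graph links all four structures into a single group with mixed outcomes, while the same-person graph splits them into two groups whose outcomes are uniform, which is the kind of pattern described above.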

See the image at https://39669.cdn.cke-cs.com/rQvD3VnunXZu34m86e5f/images/2d52f50b48a8badc77102018aefe03ad8f36e2a05be8f15c.png (it's on the same image host that LessWrong uses, and I can't figure out how to put images in spoilers).

Do you happen to have a copy of it that you can share?

> I wonder how much of dyslexia transfers cross-linguistically

It turns out that quite a bit of it depends on the type of language: a person who is dyslexic in alphabetic languages is not necessarily dyslexic in logographic languages, because the two engage different parts of the brain. For example, from this review of Chinese developmental dyslexia:

> Converging behavioral evidence suggests that, while phonological and rapid automatized naming deficits are language universal, orthographic and morphological deficits are specific to the linguistic properties of Chinese. At the neural level, hypoactivation in the left superior temporal/inferior frontal regions in dyslexic children across Chinese and alphabetic languages may indicate a shared phonological processing deficit, whereas hyperactivation in the right inferior occipital/middle temporal regions and atypical activation in the left frontal areas in Chinese dyslexic children may indicate a language-specific compensatory strategy for impaired visual-spatial analysis and a morphological deficit in Chinese (developmental dyslexia), respectively.

I went down a small rabbit hole about Chinese dyslexia after reading your comment where you treated each letter like a drawing instead of a letter. It turns out that English and Chinese dyslexia probably affect different parts of the brain, and that someone who is dyslexic in an alphabet-based system (English) may not be dyslexic in a logograph-based system (Chinese).

For example, from the University of Michigan's dyslexia help website:

> Wai Ting Siok of Hong Kong University has discovered that being dyslexic in Chinese is actually not the same as being dyslexic in English. Her team’s MRI studies showed that dyslexia among users of alphabetic scripts such as English versus users of logographic scripts such as Chinese was associated with different parts of the brain. Chinese reading uses more of a frontal part of the left hemisphere of the brain, whereas English reading uses a posterior part of the brain.

And from a 2023 linguistics review:

> Converging behavioral evidence suggests that, while phonological and rapid automatized naming deficits are language universal, orthographic and morphological deficits are specific to the linguistic properties of Chinese. At the neural level, hypoactivation in the left superior temporal/inferior frontal regions in dyslexic children across Chinese and alphabetic languages may indicate a shared phonological processing deficit, whereas hyperactivation in the right inferior occipital/middle temporal regions and atypical activation in the left frontal areas in Chinese dyslexic children may indicate a language-specific compensatory strategy for impaired visual-spatial analysis and a morphological deficit in Chinese DD, respectively.

This made me wonder: since written Chinese has no connection with spoken Chinese, did people in ancient China mostly read aloud or read silently when reading by themselves? I'd expect that they mostly didn't read aloud.

Also, when I read Chinese as a second-language speaker (I learned English before Chinese), I often find that subvocalizing the characters helps me understand them, and not doing so makes reading difficult. However, my parents, who are native Chinese speakers, don't have an inner voice when reading Chinese.

I think the general differences in language processing between different types of languages are interesting, though I'm not sure how useful this is. Just my ramblings.

Is the Renaissance caused by the new elite class, the merchants, focusing more on pleasure and having fun compared to the lords, who focused more on status and power?

Hmm, is there a collection documenting the history of terrorist attacks related to AI?

But Manifold adds 20 mana to liquidity per new trader, so the market will become more inelastic over time. The liquidity doesn't stay at 50 mana.

After reading this and your dialogue with Isusr, it seems that Dark Arts arguments are logically consistent and that the most effective way to rebut them is not to challenge them directly on the issue.

jimmy and madasario in the comments asked for a way to detect stupid arguments. My current answer to that is “take the argument to its logical conclusion, check whether the argument’s conclusion accurately predicts reality, and if it doesn’t, it’s probably wrong”

For example, you previously mentioned an argument that we need to send U.S. troops to the Arctic because Russia has hypersonic missiles that could carry out a first strike on the US: their range is too short to reach the US from the Russian mainland, but long enough to reach it from the Arctic.

If this really were true, we would see this being treated as a national emergency, and the US taking swift action to stop Russia from placing missiles in the Arctic, but we don’t see this.

Now, for some arguments (e.g. AI risk, cryonics), the truth is more complicated than this, but it’s a good heuristic for telling whether you need to investigate an argument more thoroughly or not.

We do agree that suffering is bad, and that if a new clone of you would experience more suffering than happiness, creating it would be bad. But does the suffering really outweigh the happiness they would gain?

You have experienced suffering in your life. But still, do you prefer to have lived, or do you prefer to not have been born? Your copy will probably give the same answer.

(If your answer is genuinely “I wish I wasn’t born”, then I can understand not wanting to have copies of yourself)

I do believe your main point is correct, just that most people here already know that.

Ethical worth may not be finite, but resources are finite. If we value ants more, then we should give more resources to ants, which means there are fewer resources to give to humans.

From your comments on how you value reducing ant suffering, your framework regarding ants seems to be “don’t harm them, but you don’t need to help them either”: reducing suffering, but not maximising happiness.

Utilitarianism says that you should also value the happiness of all beings with subjective experience, and that we should try to make them happier, which raises the question of how to do this if we value animals. I’m a bit confused: how can you value not intentionally making them suffer without also concluding that we should give resources to them to make them happier?

The reason why it’s considered good to double the ant population is not necessarily because it’ll be good for the existing ants, it’s because it’ll be good for the new ants created. Likewise, the reason why it’ll be good to create copies of yourself is not because you will be happy, but because your copies will be happy, which is also a good thing.

Yes, under utilitarianism, making more ants is only good if ants have subjective experience, because utilitarianism only values subjective experiences. But if your model of the world says that ant suffering is bad, doesn’t that imply that you believe ants have subjective experience?

> Why would our CoffeeFetcher-1000 stay in the building and continue to fetch us coffee? Why wouldn't it instead leave, after (for example) writing a letter of resignation pointing out that there are starving children in Africa who don't even have clean drinking water, let alone coffee, so it's going to hitchhike/earn its way there, where it can do the most good [or substitute whatever other activity it could do that would do the most good for humanity: fetching coffee at a hospital, maybe].

Why can't you just build an AI whose goal is to fetch its owners coffee, and not to maximize the good it'll do?

I think you just have the wrong audience. People assume that you’re referring to effective altruism charities and aid. The average LessWrong reader already believes that traditional aid is ineffective, so this post is mostly old news to them. Your criticisms of aid sound a bit ignorant because people pattern-match your post to criticism of charities like GiveDirectly, even though studies have shown that GiveDirectly has quite a good cost-benefit ratio.

Your post is accurate, but redundant to EAs.

Also, slightly unrelated, but what do you think about EA charities? Have you looked into them? Do you find them better than traditional charities?

Are there any similar versions of this post on LW which express the same message, but without the patronising tone of Valentine? Would that be valuable?

Would more people donate to charity if they could do so in one click? Maybe...

I don't think so, I also only noticed it on the frontpage today.

I was initially a bit confused about the difference between an AI based on shard theory and one based on an optimiser and a grader, until I realized that the former has an incentive to make its evaluation of results as accurate as possible, while the latter doesn't. For example, the diamond-shard agent wouldn't try to fool its grader, because that would conflict with its goal of having more diamonds, whereas the grader-optimising agent wouldn't care.

Most people see sleep as something that's obviously beneficial, but this post was great at sparking conversation about the topic and at questioning the assumption that sleep is good. It's well-researched and addresses many of the pro-sleep studies and arguments.

I'd like to see people do more studies on the effects of low sleep on other diseases or activities. There are many good objections in the comments, such as the increased risk of Alzheimer's, driving while sleepy, and how the analogy between sleep deprivation and fasting may be misguided.

There was a good experiment proposed here. Andrew Vlahos wrote:

> I'm a tutor, and I've noticed that when students get less sleep they make many more minor mistakes (like dropping a negative sign) and don't learn as well. This effect is strong enough that for a couple of students I started guessing how much sleep they got the last couple days at the end of sessions, asked them, and was almost always right.

and guzey replied with a proposed experiment:

> As an experiment -- you can ask a couple of your students to take a coffee heading to you when they are underslept and see if they continue to make mistakes and learn poorly (in which case it's the lack of sleep per se likely causing problems) or not (in which case it's sleepiness)

Hopefully someone does bother to do it in the future.

Eliezer used “universally compelling argument” to describe a hypothetical argument that could persuade any mind, even a paperclip maximiser. He didn’t use it to mean what you mean by it.

You can say that the fact that it doesn’t persuade a paperclip maximiser is irrelevant, but that has no bearing on the definition of the term as commonly used on LessWrong.

Isn’t morality a human construct? Eliezer’s point is that morality is defined by us, not by an algorithm, a rule, or something similar. If it were defined by something else, it wouldn’t be our morality.

How would you define objective morality? What would make it objective? If it did exist, how would you possibly be able to find it?

How would we be able to verify such a claim? How would we investigate this? What specific help do you need from us?