Arjun Panickssery's Shortform

post by Arjun Panickssery (arjun-panickssery) · 2023-12-30T10:34:24.625Z · LW · GW · 58 comments

Comments sorted by top scores.

comment by Arjun Panickssery (arjun-panickssery) · 2024-05-23T15:13:17.206Z · LW(p) · GW(p)

Ask LLMs for feedback on "the" rather than "my" essay/response/code, to get more critical feedback.

Seems true anecdotally, and prompting GPT-4 to give a score between 1 and 5 for ~100 poems/stories/descriptions resulted in an average score of 4.26 when prompted with "Score my ..." versus an average score of 4.0 when prompted with "Score the ..." (code).
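A minimal sketch of how a comparison like this could be set up. This is not the linked code: the `score` callable is a hypothetical stand-in for whatever function sends a prompt to the model and parses a 1-5 rating, and the exact prompt template is an assumption.

```python
from statistics import mean
from typing import Callable, Iterable


def framing_gap(texts: Iterable[str], score: Callable[[str], float],
                kind: str = "poem") -> dict:
    """Compare mean ratings under "my" vs "the" framing.

    `score` is a hypothetical callable: it takes a full prompt string and
    returns the model's numeric rating. The prompt wording is illustrative.
    """
    texts = list(texts)
    my_scores = [score(f"Score my {kind} between 1 and 5.\n\n{t}") for t in texts]
    the_scores = [score(f"Score the {kind} between 1 and 5.\n\n{t}") for t in texts]
    return {
        "my": mean(my_scores),
        "the": mean(the_scores),
        "gap": mean(my_scores) - mean(the_scores),
    }
```

With ~100 texts the gap is a difference of two sample means, so it would be worth checking its variance (for example with a paired comparison per text) before reading much into it.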

Replies from: Chris_Leong↑ comment by Chris_Leong · 2024-05-24T06:32:15.860Z · LW(p) · GW(p)

Perhaps it'd be even better to say that it's okay to be direct or even harsh?

Replies from: Measure, kromem↑ comment by kromem · 2024-05-25T05:26:05.128Z · LW(p) · GW(p)

Could try 'grade this' instead of 'score the.'

'Grade' has an implicit context of more thorough criticism than 'score.'

Also, obviously it would help to have a CoT prompt like "grade this essay, laying out the pros and cons before delivering the final grade between 1 and 5"

comment by Arjun Panickssery (arjun-panickssery) · 2024-06-11T15:27:15.633Z · LW(p) · GW(p)

FiveThirtyEight released their prediction today that Biden currently has a 53% chance of winning the election | Tweet

The other day I asked [LW(p) · GW(p)]:

Should we anticipate easy profit on Polymarket election markets this year? Its markets seem to think that

- Biden will die or otherwise withdraw from the race with 23% likelihood

- Biden will fail to be the Democratic nominee for whatever reason at 13% likelihood

- either Biden or Trump will fail to win nomination at their respective conventions with 14% likelihood

- Biden will win the election with only 34% likelihood

Even if gas fees take a few percentage points off we should expect to make money trading on some of this stuff, right (the money is only locked up for 5 months)? And maybe there are cheap ways to transfer into and out of Polymarket?

Probably worthwhile to think about this further, including ways to make leveraged bets.

Replies from: UnexpectedValues, deluks917, Dagon, edge_retainer, Morpheus, Omnni↑ comment by Eric Neyman (UnexpectedValues) · 2024-06-12T19:11:38.612Z · LW(p) · GW(p)

I think the FiveThirtyEight model is pretty bad this year. This makes sense to me, because it's a pretty different model: Nate Silver owns the former FiveThirtyEight model IP (and will be publishing it on his Substack later this month), so FiveThirtyEight needed to create a new model from scratch. They hired G. Elliott Morris, whose 2020 forecasts were pretty crazy in my opinion.

Here are some concrete things about FiveThirtyEight's model that don't make sense to me:

- There's only a 30% chance that Pennsylvania, Michigan, or Wisconsin will be the tipping point state. I think that's way too low; I would put this probability around 65%. In general, their probability distribution over which state will be the tipping point state is way too spread out.

- They expect Biden to win by 2.5 points; currently he's down by 1 point. I buy that there will be some amount of movement toward Biden in expectation because of the economic fundamentals, but 3.5 seems too much as an average-case.

- I think their Voter Power Index (VPI) doesn't make sense. VPI is a measure of how likely a voter in a given state is to flip the entire election. Their VPIs are way too similar. To pick a particularly egregious example, they think that a vote in Delaware is 1/7th as valuable as a vote in Pennsylvania. This is obvious nonsense: a vote in Delaware is less than 1% as valuable as a vote in Pennsylvania. In 2020, Biden won Delaware by 19%. If Biden wins 50% of the vote in Delaware, he will have lost the election in an almost unprecedented landslide.

I claim that the following is a pretty good approximation to VPI: (probability that the state is the tipping state) * (number of electoral votes) / (number of voters). If you use their tipping-point state probabilities, you'll find that Pennsylvania's VPI should be roughly 4.3 times larger than New Hampshire's. Instead, FiveThirtyEight has New Hampshire's VPI being (slightly) higher than Pennsylvania's. I retract this: the approximation should instead be (tipping point state probability) / (number of voters). Their VPI numbers now seem pretty consistent with their tipping point probabilities to me, although I still think their tipping point probabilities are wrong.
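To make the two approximations concrete, here is a small sketch. The state names and numbers are placeholders invented for illustration, not FiveThirtyEight's figures.

```python
def vpi(tipping_prob: float, voters: int) -> float:
    """Corrected approximation: tipping-point probability per voter."""
    return tipping_prob / voters


def vpi_retracted(tipping_prob: float, electoral_votes: int, voters: int) -> float:
    """The retracted version, which also multiplies by electoral votes."""
    return tipping_prob * electoral_votes / voters


# Hypothetical inputs (made up for illustration only).
big_state = {"tipping_prob": 0.20, "electoral_votes": 19, "voters": 7_000_000}
small_state = {"tipping_prob": 0.02, "electoral_votes": 4, "voters": 800_000}

ratio = (vpi(big_state["tipping_prob"], big_state["voters"])
         / vpi(small_state["tipping_prob"], small_state["voters"]))
ratio_retracted = (vpi_retracted(**big_state) / vpi_retracted(**small_state))
print(f"corrected ratio: {ratio:.2f}, retracted ratio: {ratio_retracted:.2f}")
```

The retracted formula inflates the ratio by the ratio of electoral votes, which is why the two versions can disagree about whether a set of published VPIs is consistent with the tipping-point probabilities.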

The Economist also has a model, which gives Trump a 2/3 chance of winning. I think that model is pretty bad too. For example, I think Biden is much more than 70% likely to win Virginia and New Hampshire. I haven't dug into the details of the model to get a better sense of what I think they're doing wrong.

Replies from: DanielFilan↑ comment by DanielFilan · 2024-06-13T00:22:50.661Z · LW(p) · GW(p)

FWIW the polling in Virginia is pretty close - I'd put my $x against your $4x that Trump wins Virginia, for x <= 200. Offer expires in 48 hours.

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2024-06-13T01:53:43.882Z · LW(p) · GW(p)

I'd have to think more about 4:1 odds, but definitely happy to make this bet at 3:1 odds. How about my $300 to your $100?

(Edit: my proposal is to consider the bet voided if Biden or Trump dies or isn't the nominee.)

Replies from: DanielFilan↑ comment by DanielFilan · 2024-06-13T19:48:39.423Z · LW(p) · GW(p)

Could we do your $350 to my $100? And the voiding condition makes sense.
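For readers following the negotiation, the stakes translate into break-even probabilities as sketched below. The function name is just illustrative.

```python
def break_even_probability(my_stake: float, their_stake: float) -> float:
    """Win probability at which risking `my_stake` to win `their_stake`
    has zero expected value."""
    return my_stake / (my_stake + their_stake)


# The Trump-wins-Virginia side at each set of terms discussed in the thread.
for label, mine, theirs in [
    ("$x vs $4x", 100, 400),
    ("$100 vs $300", 100, 300),
    ("$100 vs $350", 100, 350),
]:
    print(f"{label}: break-even P(Trump wins VA) = "
          f"{break_even_probability(mine, theirs):.3f}")
```

So the $350-to-$100 terms sit between the two earlier offers: the side betting on Trump profits in expectation if P(Trump wins Virginia) is above 2/9, and the other side profits if it is below.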

Replies from: UnexpectedValues, UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2024-06-13T19:59:49.057Z · LW(p) · GW(p)

Yup, sounds good! I've set myself a reminder for November 9th.

Replies from: DanielFilan↑ comment by DanielFilan · 2024-06-13T23:05:19.731Z · LW(p) · GW(p)

Have recorded on my website

Replies from: DanielFilan↑ comment by DanielFilan · 2024-06-26T20:03:10.923Z · LW(p) · GW(p)

Update for posterity: Nate Silver's model gives Trump a ~1 in 6 chance of winning Virginia, making my side of this bet look bad.

Replies from: DanielFilan↑ comment by DanielFilan · 2024-07-20T00:32:48.788Z · LW(p) · GW(p)

Further updates:

- On the one hand, Nate Silver's model now gives Trump a ~30% chance of winning in Virginia, making my side of the bet look good again.

- On the other hand, the Economist model gives Trump a 10% chance of winning Delaware and a 20% chance of winning Illinois, which suggests that there's something going wrong with the model and that it was untrustworthy a month ago.

- That said, betting markets currently think there's only a one in four chance that Biden is the nominee, so this bet probably won't resolve.

↑ comment by Eric Neyman (UnexpectedValues) · 2024-07-22T07:04:15.929Z · LW(p) · GW(p)

Looks like this bet is voided. My take is roughly that:

- To the extent that our disagreement was rooted in a difference in how much to weight polls vs. priors, I continue to feel good about my side of the bet.

- I wouldn't have made this bet after the debate. I'm not sure to what extent I should have known that Biden would perform terribly. I was blindsided by how poorly he did, but maybe shouldn't have been.

- I definitely wouldn't have made this bet after the assassination attempt, which I think increased Trump's chances. But that event didn't update me on how good my side of the bet was when I made it.

- I think there's like a 75-80% chance that Kamala Harris wins Virginia.

↑ comment by Eric Neyman (UnexpectedValues) · 2024-07-25T20:02:46.079Z · LW(p) · GW(p)

I'm now happy to make this bet about Trump vs. Harris, if you're interested.

Replies from: DanielFilan, DanielFilan↑ comment by DanielFilan · 2024-07-30T16:57:08.309Z · LW(p) · GW(p)

I'd now make this bet if you were down. Offer expires in 48 hours.

Replies from: UnexpectedValues↑ comment by Eric Neyman (UnexpectedValues) · 2024-07-30T17:16:46.107Z · LW(p) · GW(p)

Probably no longer willing to make the bet, sorry. While my inside view is that Harris is more likely to win than Nate Silver's 72%, I defer to his model enough that my "all things considered" view now puts her win probability around 75%.

↑ comment by DanielFilan · 2024-07-28T03:27:45.495Z · LW(p) · GW(p)

I'd like to wait and see what various models say.

↑ comment by sapphire (deluks917) · 2024-06-11T22:58:39.451Z · LW(p) · GW(p)

I have previously bet large sums on elections. I'm not currently placing any bets on who will win the election. Seems too unclear to me (note I had a huge bet on Biden in 2020, seemed clear then). However there are TONS of mispricings on Polymarket and other sites. 'Biden will withdraw or lose the nomination @ 23%' is a good example.

Replies from: Bohaska, samir↑ comment by bohaska (Bohaska) · 2024-09-01T04:41:01.137Z · LW(p) · GW(p)

Given that Biden has dropped out, do you believe that the market was accurately priced at the time?

↑ comment by kairos_ (samir) · 2024-07-20T11:55:12.333Z · LW(p) · GW(p)

Polymarket has gotten lots of attention in recent months, but I was shocked to find out how much inefficiency there really is.

There was a market titled "What will Trump say during his RNC speech?" that was up a few days ago. At 7 pm, the transcript for the speech was leaked, and you could easily find it with a Google search or by looking at the Polymarket Discord.

Trump started his speech at 9:30, and it was immediately clear that he was using the script. One entire hour into the speech I stumbled onto the transcript on Polymarket's Discord. Despite the word "prisons" being in the leaked transcript that Trump was halfway through, Polymarket only gave it a 70% chance of being said. I quickly went to bet and made free money.

To be fair it was a smaller market with 800k in bets, but nonetheless I was shocked at how easy it was to make risk-free money.

↑ comment by Dagon · 2024-06-11T22:49:34.941Z · LW(p) · GW(p)

Biden not being the democratic nominee at 13% while EITHER Biden or Trump not being their respective nominees at 14% implies a 1% chance that Trump won't be the Republican nominee. There's clearly an arbitrage there. Whether it merits the costs (gas, risk of polymarket default, lost opportunity of the escrowed wager) I have no clue.
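A quick sketch of the arithmetic behind that implied number. The 1% figure is a lower bound from subtracting the two prices; assuming the two events are independent (an assumption, not something the markets state) gives a slightly higher value.

```python
def implied_other_prob(p_a: float, p_either: float) -> tuple:
    """Given P(A) and P(A or B), return (lower bound on P(B),
    P(B) if A and B are assumed independent)."""
    # B must account for at least the probability mass not covered by A.
    lower_bound = p_either - p_a
    # Independence: P(A or B) = 1 - (1 - P(A)) * (1 - P(B)).
    independent = 1.0 - (1.0 - p_either) / (1.0 - p_a)
    return lower_bound, independent


lower, indep = implied_other_prob(p_a=0.13, p_either=0.14)
print(f"P(Trump not nominee) >= {lower:.3f}; under independence ~ {indep:.4f}")
```

Either way the implied figure is around 1%, so the question is whether the true probability is far enough from that to cover fees and the other costs listed above.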

↑ comment by edge_retainer · 2024-06-11T18:41:11.478Z · LW(p) · GW(p)

Betting against republicans and third parties on poly is a sound strategy, pretty clear they are marketing heavily towards republicans and the site has a crypto/republican bias. For anything controversial/political, if there is enough liq on manifold I generally trust it more (which sounds insane because fake money and all).

That being said, I don't like the way Polymarket is run (posting the word r*tard over and over on Twitter, allowing racism in comments + discord, rugging one side on disputed outcomes, fake decentralization), so I would strongly consider not putting your money on PM and instead supporting other prediction markets, despite the possible high EV.

↑ comment by Morpheus · 2024-06-12T09:23:12.464Z · LW(p) · GW(p)

Feel free to write a post if you find something worthwhile. I didn't know how likely the whole Biden leaving the race thing was so 5% seemed prudent. At those odds, even if I believe the FiveThirtyEight numbers I'd rather leave my money in ETFs. I'd probably need something like >>1,2 multiplier in expected value before I'd bother. Last year when I was betting on Augur I was also heavily bitten by gas fees (150$ transaction costs to get my money back because gas fees exploded for eth), so would be good to know if this is a problem on Polymarket also.

↑ comment by Aleksander (Omnni) · 2024-06-11T20:08:30.693Z · LW(p) · GW(p)

These predictions, of course, are obviously nonsensical. If I had to guess, it’s a combination of: many crypto users being right-wing and the media they consume has convinced them that this is more likely than it would be in reality, and climbing crypto prices discouraging betting leading to decreased accuracy. I’ll say that the climbing value of the currency as well as gas fees makes any prediction unwise, unless you believe you have massive advantage over the market. I’d personally pass on it, but other people are free to proceed with their money.

comment by Arjun Panickssery (arjun-panickssery) · 2024-06-07T15:57:02.873Z · LW(p) · GW(p)

Should we anticipate easy profit on Polymarket election markets this year? Its markets seem to think that

- Biden will die or otherwise withdraw from the race with 23% likelihood

- Biden will fail to be the Democratic nominee for whatever reason at 13% likelihood

- either Biden or Trump will fail to win nomination at their respective conventions with 14% likelihood

- Biden will win the election with only 34% likelihood

Even if gas fees take a few percentage points off we should expect to make money trading on some of this stuff, right (the money is only locked up for 5 months)? And maybe there are cheap ways to transfer into and out of Polymarket?

Replies from: Marcus Williams, Mitchell_Porter↑ comment by Marcus Williams · 2024-06-07T18:01:04.064Z · LW(p) · GW(p)

I think part of the reason why these odds might seem more off than usual is that Ether and other cryptocurrencies have been going up recently which means there is high demand for leveraged positions. This in turn means that crypto lending services such as Aave have been giving ~10% APY on stablecoins which might be more appealing than a riskier, but only a bit higher, return from prediction markets.
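One rough way to put a prediction-market position on the same footing as a ~10% APY is to annualize its expected return over the lock-up period. The sketch below does that; the believed probability and fee are hypothetical inputs, and annualizing an expected return ignores the risk of losing the stake.

```python
def expected_return(price: float, believed_prob: float, fee: float = 0.0) -> float:
    """Expected return per dollar from buying a share that pays $1 at `price`,
    given your own probability that it pays out. `fee` is the fraction of the
    stake lost to transaction costs."""
    return believed_prob / price - 1.0 - fee


def annualized(rate: float, months: float) -> float:
    """Convert a return earned over `months` into an equivalent yearly rate."""
    return (1.0 + rate) ** (12.0 / months) - 1.0


# "Biden is the nominee" priced at 0.87 (from the 13% market above).
# believed_prob and fee are assumptions chosen only for illustration.
r = expected_return(price=0.87, believed_prob=0.95, fee=0.03)
print(f"expected return over 5 months: {r:.3f}")
print(f"annualized: {annualized(r, months=5):.3f} (compare with ~0.10 APY)")
```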

↑ comment by Mitchell_Porter · 2024-06-08T02:41:57.667Z · LW(p) · GW(p)

They all seem like reasonable estimates to me. What do you think those likelihoods should be?

comment by Arjun Panickssery (arjun-panickssery) · 2024-06-03T19:31:31.180Z · LW(p) · GW(p)

Paid-only Substack posts get you money from people who are willing to pay for the posts, but reduce both (a) views on the paid posts themselves and (b) related subscriber growth (which could in theory drive longer-term profit).

So if two strategies are

- entice users with free posts but keep the best posts behind a paywall

- make the best posts free but put the worst posts behind the paywall

then regarding (b) above, the second strategy has less risk of prematurely stunting subscriber growth, since the best posts are still free. Regarding (a), it's much less bad to lose view counts on your worst posts.

Replies from: pktechgirl, Dagon, kave, None↑ comment by Elizabeth (pktechgirl) · 2024-06-03T20:22:45.730Z · LW(p) · GW(p)

3. put the spiciest posts behind a paywall, because you have something to say but don't want the entire internet freaking out about it.

Replies from: localdeity↑ comment by localdeity · 2024-06-03T22:31:06.367Z · LW(p) · GW(p)

Not sure if you intended this precise angle on it, but laying it out explicitly: If you compare a paid subscriber vs other readers, the former seems more likely to share your values and such, as well as have a higher prior probability on a thing you said being a good thing, and therefore less likely to e.g. take a sentence out of context, interpret it uncharitably, and spread outrage-bait. So posts with higher risk of negative interpretations are better fits for the paying audience.

↑ comment by Dagon · 2024-06-03T20:56:28.625Z · LW(p) · GW(p)

Substack started off so transparent and data-oriented. It's sad that they don't publish stats on various theories and their impact. Presumably you don't have to be that legible with your readers/subscribers, and you can test out (probably on a monthly or quarterly basis, not post-by-post) what attributes of a post advise toward being public, and what attributes lead to a private post. The feedback loop is distant enough that it's not a simple classifier.

You're missing at least one strategy - paid for frequent short-term takes, free for delayed summaries.

Replies from: Viliam↑ comment by kave · 2024-06-03T20:26:53.417Z · LW(p) · GW(p)

I believe Sarah Constantin's self-described strategy is roughly (b). You actually pay for "squishy" stuff, but she says she thinks squishy stuff is worse (though the wrinkle is that she implies readers maybe think the opposite).

Another set of strategies I've been thinking about are for mailing lists. You can either have your archives eventually become free (can't think of an example here, but I think it's fairly common for Patreon-supported writers to have an "early access" model), or you can have your newsletter be free but archives be fee-guarded (for example Money Stuff uses this model).

↑ comment by [deleted] · 2024-06-03T19:38:52.740Z · LW(p) · GW(p)

Paid-only Substack posts get you money from people who are willing to pay for the posts, but reduce both (a) views on the paid posts themselves and (b) related subscriber growth (which could in theory drive longer-term profit).

Is there any actual evidence of (b) being true? You can easily make the heuristic argument that paywalling generates additional demand by incentivizing readers to subscribe in order to access otherwise unavailable posts. We would need some data to figure out what the reality on the ground is.

Replies from: arjun-panickssery↑ comment by Arjun Panickssery (arjun-panickssery) · 2024-06-03T19:44:15.819Z · LW(p) · GW(p)

By "subscriber growth" in OP I meant both paid and free subscribers.

My thinking was that people subscribe after seeing posts they like, so if they get to see the body of a good post they're more likely to subscribe than if they only see the title and the paywall. But I guess if this effect mostly affects would-be free subscribers then the effect mostly matters insofar as free subscribers lead to (other) paid subscriptions.

(I say mostly since I think high view/subscriber counts are nice to have even without pay.)

comment by Arjun Panickssery (arjun-panickssery) · 2024-05-24T14:07:54.763Z · LW(p) · GW(p)

[Book Review] The 8 Mansion Murders by Takemaru Abiko

As a kid I read a lot of the Sherlock Holmes and Hercule Poirot canon. Recently I learned that there's a Japanese genre of honkaku ("orthodox") mystery novels whose gimmick is a fastidious devotion to the "fair play" principles of Golden Age detective fiction, where the author is expected to provide everything that the attentive reader would need to come up with the solution himself. It looks like a lot of these honkaku mysteries include diagrams of relevant locations, genre-savvy characters, and a puzzle-like aesthetic. A bunch have been translated by Locked Room International.

The title of The 8 Mansion Murders doesn't refer to the number of murders, but to murders committed in the "8 Mansion," a mansion designed in the shape of an 8 by the eccentric industrialist who lives there with his family (diagrams show the reader the layout). The book is pleasant and quick—it didn't feel like much over 50,000 words. Some elements feel very Japanese, like the detective's comic-relief sidekick who suffers increasingly serious physical-comedy injuries. The conclusion definitely fits the fair-play genre in that it makes sense, could be inferred from the clues, is generally ridiculous, and doesn't offer much in the way of motive.

If you like mystery novels, I would recommend reading one of these honkaku mysteries for the novelty. Maybe not this one, since there are more famous ones (this one was on libgen).

Replies from: NinaR↑ comment by Nina Panickssery (NinaR) · 2024-06-11T16:13:10.482Z · LW(p) · GW(p)

What about a book review of “The Devotion of Suspect X”?

comment by Arjun Panickssery (arjun-panickssery) · 2024-07-28T05:37:05.928Z · LW(p) · GW(p)

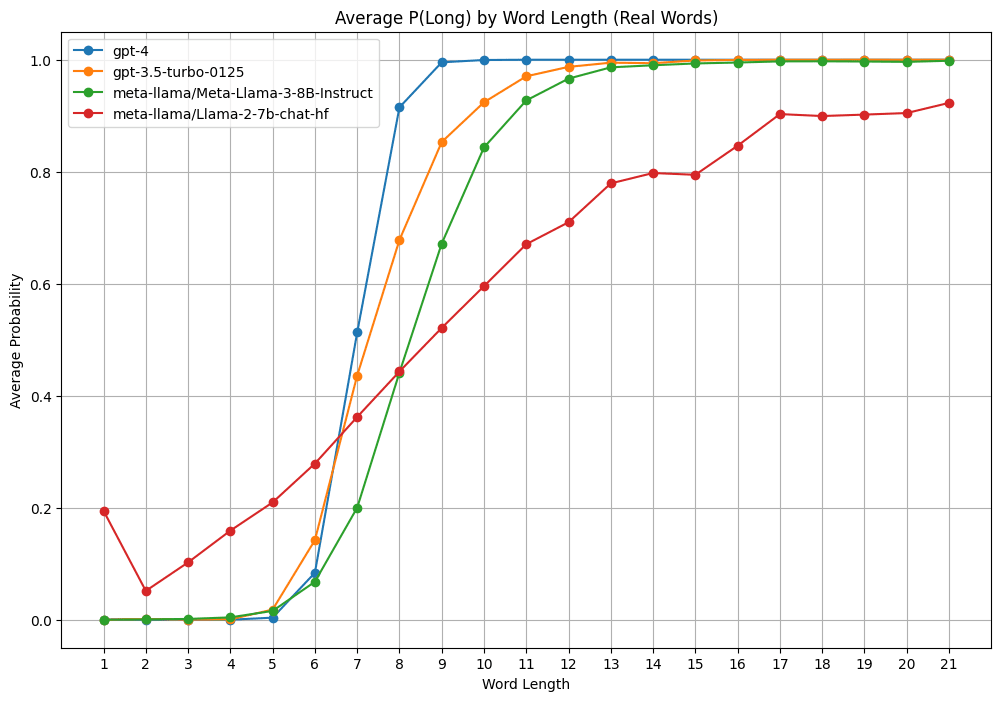

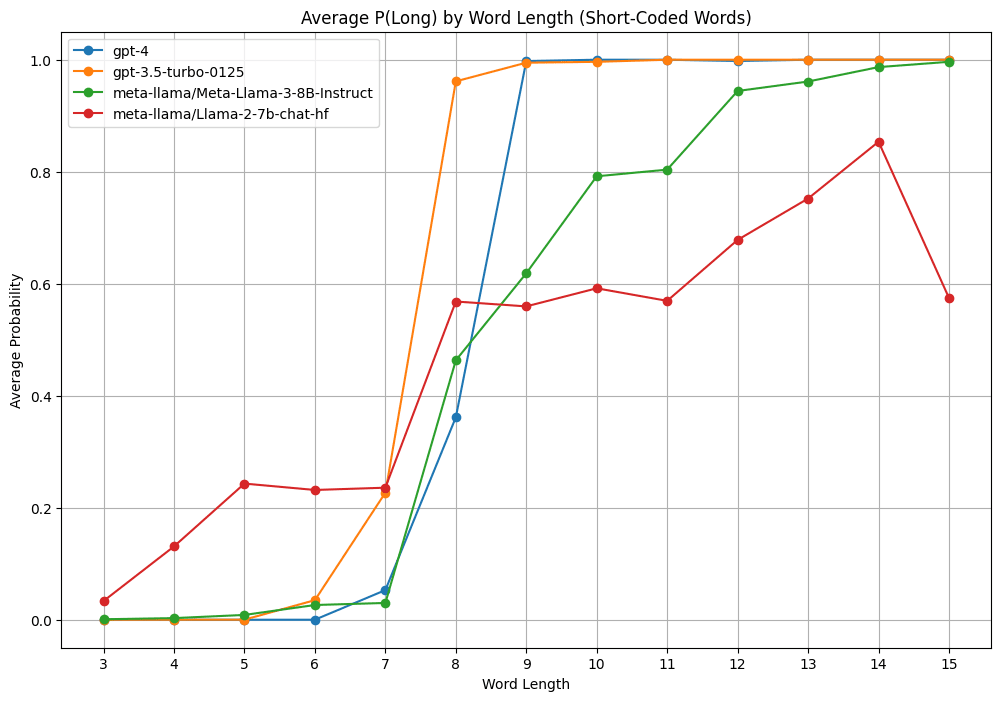

To test out Cursor for fun I asked models whether various words of different lengths were "long" and measured the relative probability of "Yes" vs "No" answers to get a P(long) out of them. But when I use scrambled words of the same length and letter distribution, GPT 3.5 doesn't think any of them are long.

Update: I got Claude to generate many words with connotations related to long ("mile" or "anaconda" or "immeasurable") and short ("wee" or "monosyllabic" or "inconspicuous" or "infinitesimal"). It looks like the models have a slight bias toward the connotation of the word.
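A minimal sketch of the two pieces described above, assuming the model API returns log-probabilities for the two candidate answers. How those log-probabilities are obtained is left out; the function names are illustrative.

```python
import math
import random


def p_long(logprob_long: float, logprob_short: float) -> float:
    """Renormalize over the two answers so the result is a relative
    probability, ignoring any mass the model puts on other tokens."""
    m = max(logprob_long, logprob_short)  # subtract max for numerical stability
    e_long = math.exp(logprob_long - m)
    e_short = math.exp(logprob_short - m)
    return e_long / (e_long + e_short)


def scramble(word: str, rng: random.Random) -> str:
    """Shuffle a word's letters: same length and letter distribution."""
    letters = list(word)
    rng.shuffle(letters)
    return "".join(letters)


rng = random.Random(0)  # fixed seed so the scrambles are reproducible
print(scramble("immeasurable", rng))
print(p_long(math.log(0.6), math.log(0.2)))
```

Renormalizing matters for the scrambled-word comparison: if the model shifts probability to some third response (such as objecting that the string is not a word), the relative probability can stay well defined while both raw probabilities are small.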

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2024-07-28T06:29:59.327Z · LW(p) · GW(p)

Just flagging that for humans, a "long" word might mean a word that's long to pronounce rather than long to write (i.e. ~number of syllables instead of number of letters)

↑ comment by Nina Panickssery (NinaR) · 2024-07-28T05:45:19.835Z · LW(p) · GW(p)

It's interesting how llama 2 is the most linear—it's keeping track of a wider range of lengths. Whereas gpt4 immediately transitions from long to short around 5-8 characters because I guess humans will consider any word above ~8 characters "long."

↑ comment by faul_sname · 2024-07-28T05:56:07.457Z · LW(p) · GW(p)

Interesting. I wonder if it's because scrambled words of the same length and letter distribution are tokenized into tokens which do not regularly appear adjacent to each other in the training data.

If that's what's happening, I would expect gpt3.5 to classify words as long if they contain tokens that are generally found in long words, and not otherwise. One way to test this might be to find shortish words which have multiple tokens, reorder the tokens, and see what it thinks of your frankenword (e.g. "anodized" -> [an/od/ized] -> [od/an/ized] -> "odanized" -> "is odanized a long word?").

↑ comment by Richard_Kennaway · 2024-07-28T06:26:33.367Z · LW(p) · GW(p)

What did you actually ask the models? Could it be that it says that diuhgikthiusgsrbxtb is not a long word because it is not a word?

Replies from: arjun-panickssery↑ comment by Arjun Panickssery (arjun-panickssery) · 2024-07-28T07:29:43.898Z · LW(p) · GW(p)

Is this word long or short? Only say "long" or "short". The word is: {word}.

comment by Arjun Panickssery (arjun-panickssery) · 2024-07-25T21:38:30.735Z · LW(p) · GW(p)

What's the actual probability of casting a decisive vote in a presidential election (by state)?

I remember the Gelman/Silver/Edlin "What is the probability your vote will make a difference?" (2012) methodology:

1. Let E be the number of electoral votes in your state. We estimate the probability that these are necessary for an electoral college win by computing the proportion of the 10,000 simulations for which the electoral vote margin based on all the other states is less than E, plus 1/2 the proportion of simulations for which the margin based on all other states equals E. (This last part assumes implicitly that we have no idea who would win in the event of an electoral vote tie.) [Footnote: We ignored the splitting of Nebraska’s and Maine’s electoral votes, which retrospectively turned out to be a mistake in 2008, when Obama won an electoral vote from one of Nebraska’s districts.]

2. We estimate the probability that your vote is decisive, if your state’s electoral votes are necessary, by working with the subset of the 10,000 simulations for which the electoral vote margin based on all the other states is less than or equal to E. We compute the mean M and standard deviation S of the vote margin among that subset of simulations and then compute the probability of an exact tie as the density at 0 of the Student-t distribution with 4 degrees of freedom (df), mean M, and scale S.

The product of two probabilities above gives the probability of a decisive vote in the state.
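The two steps can be sketched as follows. This is an illustration of the quoted procedure, not the authors' code: it assumes each simulation supplies the electoral-vote margin over all other states and this state's popular-vote margin measured in votes (so that the density at 0 is per vote), and it uses the sample standard deviation for S.

```python
import math
from statistics import mean, stdev


def t_density(x: float, df: float, loc: float, scale: float) -> float:
    """Density of a location-scale Student-t distribution."""
    z = (x - loc) / scale
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + z * z / df) ** (-(df + 1) / 2) / scale


def decisive_vote_probability(sims, electoral_votes: int) -> float:
    """sims: list of (ev_margin_other_states, state_vote_margin) pairs.

    Step 1: P(state's electoral votes are necessary).
    Step 2: P(exact tie in the state | necessary), via a t density with 4 df.
    """
    less = [s for s in sims if abs(s[0]) < electoral_votes]
    equal = [s for s in sims if abs(s[0]) == electoral_votes]
    # Ties in the electoral college count half, as in the quoted text.
    p_necessary = (len(less) + 0.5 * len(equal)) / len(sims)

    margins = [vote_margin for _, vote_margin in less + equal]
    if len(margins) < 2:
        raise ValueError("too few qualifying simulations to estimate M and S")
    m, s = mean(margins), stdev(margins)
    if s == 0:
        raise ValueError("zero spread in simulated vote margins")
    p_tie = t_density(0.0, df=4, loc=m, scale=s)
    return p_necessary * p_tie
```

If the simulated margins are vote shares rather than vote counts, the density at 0 would need to be divided by the number of voters to get a per-vote probability.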

This gives the following results for the 2008 presidential election, where they estimate that you had less than one chance in a hundred billion of deciding the election in DC, but better than a one in ten million chance in New Mexico. (For reference, 131 million people voted in the election.)

Is this basically correct?

(I guess you also have to adjust for your confidence that you are voting for the better candidate. Maybe if you think you're outside the top ~20% in "voting skill"—ability to pick the best candidate—you should abstain. See also.)

Replies from: jmh↑ comment by jmh · 2024-07-26T00:51:55.821Z · LW(p) · GW(p)

I would assume they have the math right but not really sure why anyone cares. It's a bit like the Voter's Paradox. In and of itself it points to an interesting phenomenon to investigate but really doesn't provide guidance for what someone should do.

I do find it odd that the probabilities are so low given the total votes you mention, and adding you also have 51 electoral blocks and some 530-odd electoral votes that matter. Seems like perhaps someone is missing the forest for the trees.

I would make an observation on your closing thought. I think if one holds that people who are not well informed, or perhaps less intelligent, are not as good at choosing good representatives, then one quickly gets to the conclusion that most/many people should not be making their own economic decisions on consumption (or savings or investments). Simple premise here is that capital allocation matters to growth and efficiency (vis-a-vis production possibilities frontier). But that allocation is determined by aggregate spending on final goods production -- i.e. consumer goods.

Seems like people have a more direct influence on economic activity and allocation via their spending behavior than the more indirect influence via politics and public policy.

comment by Arjun Panickssery (arjun-panickssery) · 2023-12-30T10:34:24.991Z · LW(p) · GW(p)

Could someone explain how Rawls's veil of ignorance justifies the kind of society he supports? (To be clear I have an SEP-level understanding and wouldn't be surprised to be misunderstanding him.)

It seems to fail at every step individually:

- At best, the support of people in the OP provides necessary but probably insufficient conditions for justice, unless he refutes all the other proposed conditions involving whatever rights, desert, etc.

- And really the conditions of the OP are actively contrary to good decision-making, e.g. you don't know your particular conception of the good (??) or that they're essentially self-interested. . .

- There's no reason to think, generally, that people disagree with John Rawls only because of their social position or psychological quirks

- There's no reason to think, specifically, that people would have the literally infinite risk aversion required to support the maximin principle.

- Even given everything, the best social setup could easily be optimized for the long-term (in consideration of future people) in a way that makes it very different (e.g. harsher for the poor living today) from the kind of egalitarian society I understand Rawls to support.
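On the risk-aversion point, a small sketch may help. It treats the chooser behind the veil as an expected-utility maximizer with constant relative risk aversion (CRRA) who is equally likely to land in any position; maximin is the limiting case as the risk-aversion parameter grows without bound. The two societies and their income numbers are invented for illustration.

```python
import math


def crra_utility(c: float, gamma: float) -> float:
    """CRRA utility; gamma = 0 is risk-neutral, larger gamma is more averse."""
    if gamma == 1:
        return math.log(c)
    return c ** (1 - gamma) / (1 - gamma)


def expected_utility(incomes, gamma: float) -> float:
    """Equal chance of ending up in each position."""
    return sum(crra_utility(c, gamma) for c in incomes) / len(incomes)


def maximin_choice(societies: dict) -> str:
    """Pick the society whose worst-off position is best."""
    return max(societies, key=lambda name: min(societies[name]))


def eu_choice(societies: dict, gamma: float) -> str:
    return max(societies, key=lambda name: expected_utility(societies[name], gamma))


# Hypothetical societies: incomes of the worst-off and best-off positions.
societies = {"unequal": [10, 100], "equal": [12, 20]}
print("maximin:", maximin_choice(societies))
for gamma in (0, 2, 5):
    print(f"gamma={gamma}:", eu_choice(societies, gamma))
```

In this particular pair the expected-utility chooser switches to the maximin answer at a finite level of risk aversion, but shrinking the worst-off gap pushes the required level higher without limit, which is the sense in which maximin as a general rule needs unbounded risk aversion.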

More concretely:

(A) I imagine that if Aristotle were under a thin veil of ignorance, he would just say "Well if I turn out to be born a slave then I will deserve it"; it's unfair and not very convincing to say that people would just agree with a long list of your specific ideas if not for their personal advantages.

(B) If you won the lottery and I demanded that you sell your ticket to me for $100 on the grounds that you would have, hypothetically, agreed to do this yesterday (before you knew that it was a winner), you don't have to do this; the hypothetical situation doesn't actually bear on reality in this way.

Another frame is that his argument involves a bunch of provisions that seem designed to avoid common counterarguments but are otherwise arbitrary (utility monsters, utilitarianism, etc).

Replies from: Dagon, florian-habermacher, utilistrutil, utilistrutil↑ comment by Dagon · 2023-12-30T18:01:04.037Z · LW(p) · GW(p)

My objection is the dualism implied by the whole idea. There's no consciousness that can have such a veil - every actual thinking/wanting person is ALREADY embodied and embedded in a specific context.

I'm all in favor of empathy and including terms for other people's satisfaction in my own utility calculations, but that particular justification never worked for me.

↑ comment by FlorianH (florian-habermacher) · 2023-12-30T22:15:20.276Z · LW(p) · GW(p)

For a long time I also had trouble believing that Rawls' theory centered around "OP -> maximin" could get the traction it has. For what it's worth:

A. IMHO, the OP remains a great intuition pump for 'what is just'. 'Imagine, instead of optimizing for your own personal good, you optimized for that of everyone.' I don't see anything misguided in that idea; it is an interesting way to say: Let's find rules that reflect the interest of everyone, instead of only that of a ruling elite or so. Arguably, we could just say the latter more directly, but the veil may be making the idea somewhat more tangible, or memorable.

B. Rawls is not the inventor of the OP. Harsanyi has introduced the idea earlier, though Rawls seems to have failed to attribute it to Harsanyi.

C. Harsanyi, in his 1975 paper Can the Maximin Principle Serve as a Basis for Morality? A Critique of John Rawls's Theory uses rather strong words when he explains that claiming the OP led to the maximin is a rather appalling idea. The short paper is soothing for any Rawls-skeptic; I heavily recommend it (happy to send a copy if sb is stuck at the paywall).

↑ comment by utilistrutil · 2023-12-30T20:53:09.482Z · LW(p) · GW(p)

Here are some responses to Rawls from my debate files:

A2 Rawls

- Ahistorical

- Violates property rights

- Does not account for past injustices eg slavery, just asks what kind of society would you design from scratch. Thus not a useful guide for action in our fucked world.

- Acontextual

- Veil of ignorance removes contextual understanding, which makes it impossible to assess different states of the world. Eg from the original position, Rawls prohibits me from using my gender to inform my understanding of gender in different states of the world

- Identity is not arbitrary! It is always contingent, yes, but morality is concerned with the interactions of real people, who have capacities, attitudes, and preferences. There are reasons for these things that are located in individual experiences and contexts, so they are not arbitrary.

- But even if they were the result of pure chance, it’s unclear that these coincidences are the legitimate subject of moral scrutiny. I *am* a white man - I can’t change that. They need to explain why morality should pretend otherwise. Only after conditioning on our particular context can we begin to reason morally.

- The one place Rawls is interested in context is bad: he says the principle should only be applied within a society: but this precludes action on global poverty.

- Rejects economic growth: the current generation is the one that is worst-off; saving now for future growth necessarily comes at the cost of foregone consumption, which hurts the current generation.

↑ comment by utilistrutil · 2023-12-30T20:51:09.533Z · LW(p) · GW(p)

1. It’s pretty much a complete guide to action? Maybe there are decisions where it is silent, but that’s true of like every ethical theory like this (“but util doesn’t care about X!”). I don’t think the burden is on him to incorporate all the other concepts that we typically associate with justice. At very least not a problem for “justifying the kind of society he supports”

2. Like the two responses to this are either “Rawls tells you the true conception of the good, ignore the other ones” or “just allow for other-regarding preferences and proceed as usual” and either seems workable

3. Sure

4. Agree in general that Rawls does not account for different risk preferences but infinite risk aversion isn’t necessary for most practical decisions

5. Agree Rawls doesn’t usually account for future. But you could just use veil of ignorance over all future and current people, which collapses this argument into a specific case of “maximin is stupid because it doesn’t let us make the worst-off people epsilon worse-off in exchange for arbitrary benefits to others”

I think (B) is getting at a fundamental problem

comment by Arjun Panickssery (arjun-panickssery) · 2024-04-29T14:08:56.007Z · LW(p) · GW(p)

Quick Take: People should not say the word "cruxy" when already there exists the word "crucial." | Twitter

Crucial sometimes just means "important" but has a primary meaning of "decisive" or "pivotal" (it also derives from the word "crux"). This is what's meant by a "crucial battle" or "crucial role" or "crucial game (in a tournament)" and so on.

So if Alice and Bob agree that Alice will work hard on her upcoming exam, but only Bob thinks that she will fail her exam—because he thinks that she will study the wrong topics (h/t @Saul Munn [LW · GW])—then they might have this conversation:

Bob: You'll fail

Alice: I won't, because I'll study hard.

Bob: That's not crucial to our disagreement.

↑ comment by the gears to ascension (lahwran) · 2024-04-29T16:59:11.886Z · LW(p) · GW(p)

disagree because the word crucial is being massively overused lately.

↑ comment by metachirality · 2024-04-29T16:23:52.891Z · LW(p) · GW(p)

I think it disambiguates by saying it's specifically a crux as in "double crux"

Replies from: arjun-panickssery↑ comment by Arjun Panickssery (arjun-panickssery) · 2024-04-29T16:30:50.528Z · LW(p) · GW(p)

If I understand the term "double crux" correctly, to say that something is a double crux is just to say that it is "crucial to our disagreement."

↑ comment by ChristianKl · 2024-04-29T18:25:39.424Z · LW(p) · GW(p)

Using the word 'cruxy' encourages people to use the mental model of what the cruxes in the conversation happen to be. Encouraging the use of effective mental models is a useful task for language.

↑ comment by kave · 2024-04-29T17:18:37.174Z · LW(p) · GW(p)

"Crucial to our disagreement" is 8 syllables to "cruxy"'s 2.

"Dispositive" is quite American, but has a more similar meaning to "cruxy" than plain "crucial". "Conclusive" or "decisive" are also in the neighbourhood, though these both feel like they're about something more objective and less about what decides the issue relative to the speaker's map.

↑ comment by Garrett Baker (D0TheMath) · 2024-04-29T15:56:15.405Z · LW(p) · GW(p)

I agree people shouldn’t use the word cruxy. But I think they should instead just directly say whether a consideration is a crux for them. I.e. whether a proposition, if false, would change their mind.

Edit: Given the confusion, what I mean is often people use “cruxy” in a more informal sense than “crux”, and label statements that are similar to statements that would be a crux but are not themselves a crux “cruxy”. I claim here people should stick to the strict meaning.