I think there are several potential paths of AGI leading to authoritarianism.

For example, consider AGI in military contexts: people might be unwilling to let it make very autonomous decisions, and military leaders could use that reluctance to justify making these systems loyal to them, even in situations where it would be better for the AI to disobey orders.

Regarding your point about the need to build a group of AI researchers: these researchers could themselves be AIs, and those AIs could be ordered to make future AI systems secretly loyal to the CEO. Consider, e.g., this scenario (from Box 2 in Forethought's new paper):

In 2030, the US government launches Project Prometheus—centralising frontier AI development and compute under a single authority. The aim: develop superintelligence and use it to safeguard US national security interests. Dr. Nathan Reeves is appointed to lead the project and given very broad authority.

After developing an AI system capable of improving itself, Reeves gradually replaces human researchers with AI systems that answer only to him. Instead of working with dozens of human teams, Reeves now issues commands directly to an army of singularly loyal AI systems designing next-generation algorithms and neural architectures.

Approaching superintelligence, Reeves fears that Pentagon officials will weaponise his technology. His AI advisor, to which he has exclusive access, provides the solution: engineer all future systems to be secretly loyal to Reeves personally.

Reeves orders his AI workforce to embed this backdoor in all new systems, and each subsequent AI generation meticulously transfers it to its successors. Despite rigorous security testing, no outside organisation can detect these sophisticated backdoors—Project Prometheus' capabilities have eclipsed all competitors. Soon, the US military is deploying drones, tanks, and communication networks which are all secretly loyal to Reeves himself.

When the President attempts to escalate conflict with a foreign power, Reeves orders combat robots to surround the White House. Military leaders, unable to countermand the automated systems, watch helplessly as Reeves declares himself head of state, promising a "more rational governance structure" for the new era.

Relatedly, I'm curious what you think of that paper and the different scenarios they present.

Yeah, I can see why that's possible. But I wasn't really talking about the improbable scenario where ASI is aligned to humanity as a whole or to a whole country; I was talking about a scenario where ASI is 'narrowly aligned', in the sense that it's aligned to its creators or to whoever controls it when it's created. This is IMO much more likely, since technologies are not created in a vacuum.

No, I wasn't really talking about any specific implementation of democracy. My point was that, given the vast power that ASI grants to whoever controls it, the traditional checks and balances would be undermined.

Now, regarding your point about aligning AGI with what democracy is actually supposed to be, I have two objections:

- To me, it's not at all clear why it would be straightforward to align AGI with some 'democratic ideal'. Arrow's impossibility theorem shows that no ranked voting system with three or more options can satisfy a small set of reasonable fairness criteria at once (such as non-dictatorship, Pareto efficiency, and independence of irrelevant alternatives), so an AGI trying to implement the "perfect democracy" will eventually have to make value judgments about which democratic principles to prioritize (although I do think an AGI could, in principle, help us find ways to improve our democracies).

- Even if aligning AGI with democracy were possible in principle, we need to look at the political reality the technology will emerge from. I don't think it's likely that whichever group ends up controlling AGI would willingly extend its alignment to other groups of people.
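A small illustration of the first objection, using the Condorcet paradox (a simpler, related result, not Arrow's theorem itself): with three hypothetical voters, pairwise majority voting produces a cycle (A beats B, B beats C, C beats A), so no option beats all the others, and any procedure that still picks a winner has to build in some extra rule, i.e. a value judgment. This is a minimal sketch assuming made-up ballots, strict rankings, and an odd number of voters so that no pairwise ties occur.

```python
from itertools import combinations

# Hypothetical ballots (the textbook Condorcet example, not real data).
# Each voter ranks options from most to least preferred.
BALLOTS = [
    ["A", "B", "C"],
    ["B", "C", "A"],
    ["C", "A", "B"],
]


def majority_prefers(x, y, ballots):
    """Return True if a strict majority of ballots rank x above y."""
    wins = sum(1 for b in ballots if b.index(x) < b.index(y))
    return wins > len(ballots) / 2


def pairwise_winners(ballots):
    """Map each pair of options to the option a majority prefers.

    Assumes an odd number of voters with strict rankings, so every
    pair has a clear majority winner (no ties to break).
    """
    options = sorted(ballots[0])
    result = {}
    for x, y in combinations(options, 2):
        result[(x, y)] = x if majority_prefers(x, y, ballots) else y
    return result


def condorcet_winner(ballots):
    """Return the option that beats every other by majority, or None."""
    options = ballots[0]
    for x in options:
        if all(majority_prefers(x, y, ballots) for y in options if y != x):
            return x
    return None


if __name__ == "__main__":
    for (x, y), winner in pairwise_winners(BALLOTS).items():
        print(f"{x} vs {y}: majority prefers {winner}")
    print("Condorcet winner:", condorcet_winner(BALLOTS))
```

Running it shows each option losing to some other option, and `condorcet_winner` returns `None`: majority rule alone can't settle the outcome here, which is the kind of gap where some further principle has to be chosen.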

I don't think it's possible to align AGI with democracy. AGI, or at least ASI, is an inherently political technology. The power structures that ASI creates within a democratic system would likely destroy the system from within. Whichever group ends up controlling an ASI would gain a decisive strategic advantage over everyone else in the country, which would undermine the checks and balances that make democracy a democracy.

Is your goal to identify double-cruxes in a podcast? If so, our tool might not be the best fit, since it's meant to be used in live conversations as a kind of mediator. Currently, the Double-Crux Bot can only be used either as a bot that you add to your Slack or Discord, or by joining our Discord channel.

Probably more useful for you: in a recent hackathon, Tilman Bayer produced a prompt that was used to extract double-cruxes from a debate. They used Gemini 2.0 Flash Thinking as the model; you can see the prompt here: https://docs.google.com/presentation/d/1Igs6T-elz61xysRCMQmZVX8ysfVX4NgTItwXPnrwrak/edit#slide=id.g33c29319e5a_0_0

Yes, I've heard about it (I'm based in Switzerland myself!)

I don't think it changes the situation that much, though, since OpenAI, Anthropic, and Google are still mostly American-owned companies.

If we don't build fast enough, then the authoritarian countries could win.

If you build AI for the US, you're advancing the capabilities of an authoritarian country at this point.

I think people who are worried about authoritarian regimes getting access to AGI should seriously reconsider whether advancing US leadership in AI is the right thing to do. After the new Executive Order, Trump seems to claim sole authority to interpret the law, and there are indications that the current administration won't follow court rulings. I think it's quite likely that the US will soon no longer be a democracy, in which case the argument about advancing AI in democracies no longer applies to the US.

Really interesting work! I have two questions:

1. The model organisms of misalignment section says that AI companies might be nervous about researching model organisms because doing so could increase the likelihood of new regulation, since it would provide more evidence of concerning properties in AI systems. Doesn't this depend on what kind of model organisms the company expects to be able to develop? If it's difficult to find model organisms, we would have evidence that alignment is easier, and thus there would be less need for regulation.

2. Why didn't you list AI control work as one of the areas that may be slow to progress without efforts from outside labs? According to your incentives analysis, AI companies don't seem to have many incentives to pursue this kind of work, and there were zero papers on AI control.

I've also found "spreadsheet literacy" to be a recurring skill.

What exactly do you use spreadsheets for? Any examples?

Unfortunately, the bot only works in Discord and Slack.

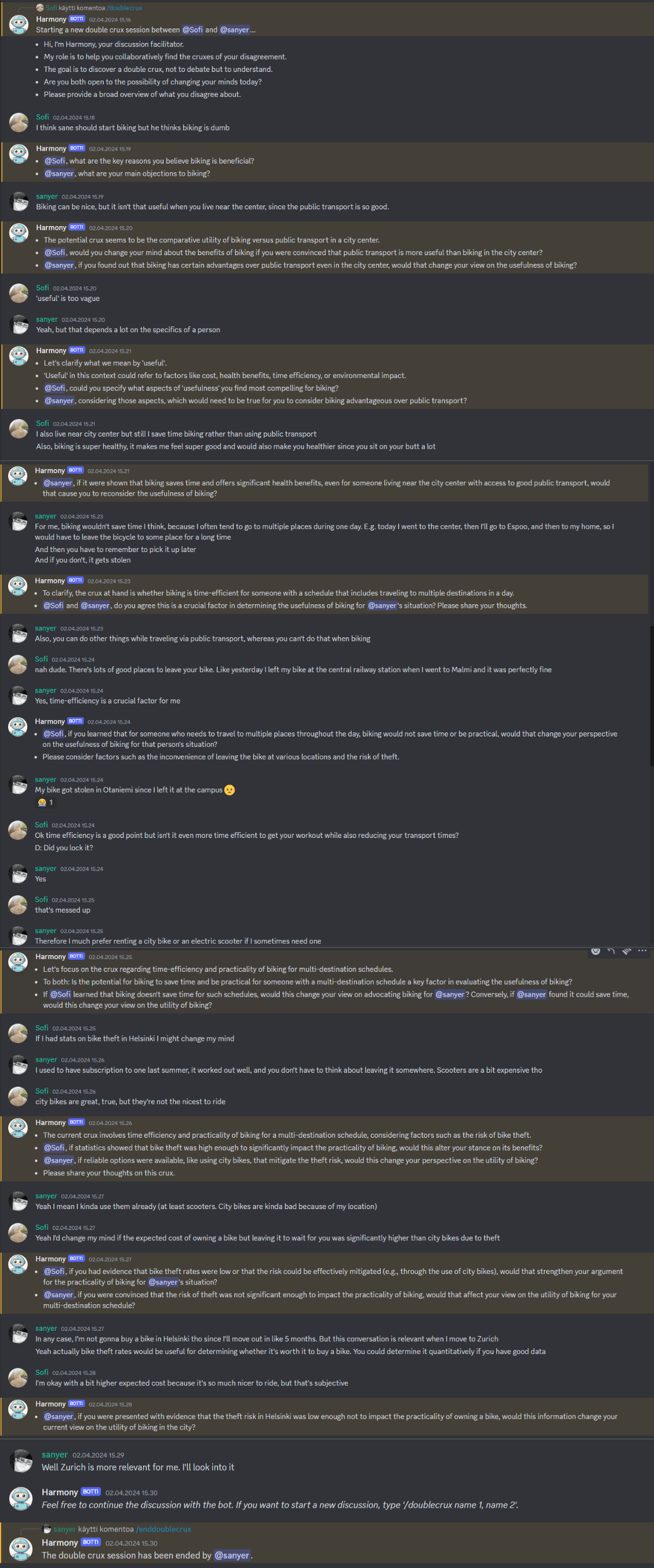

Here's another about biking:

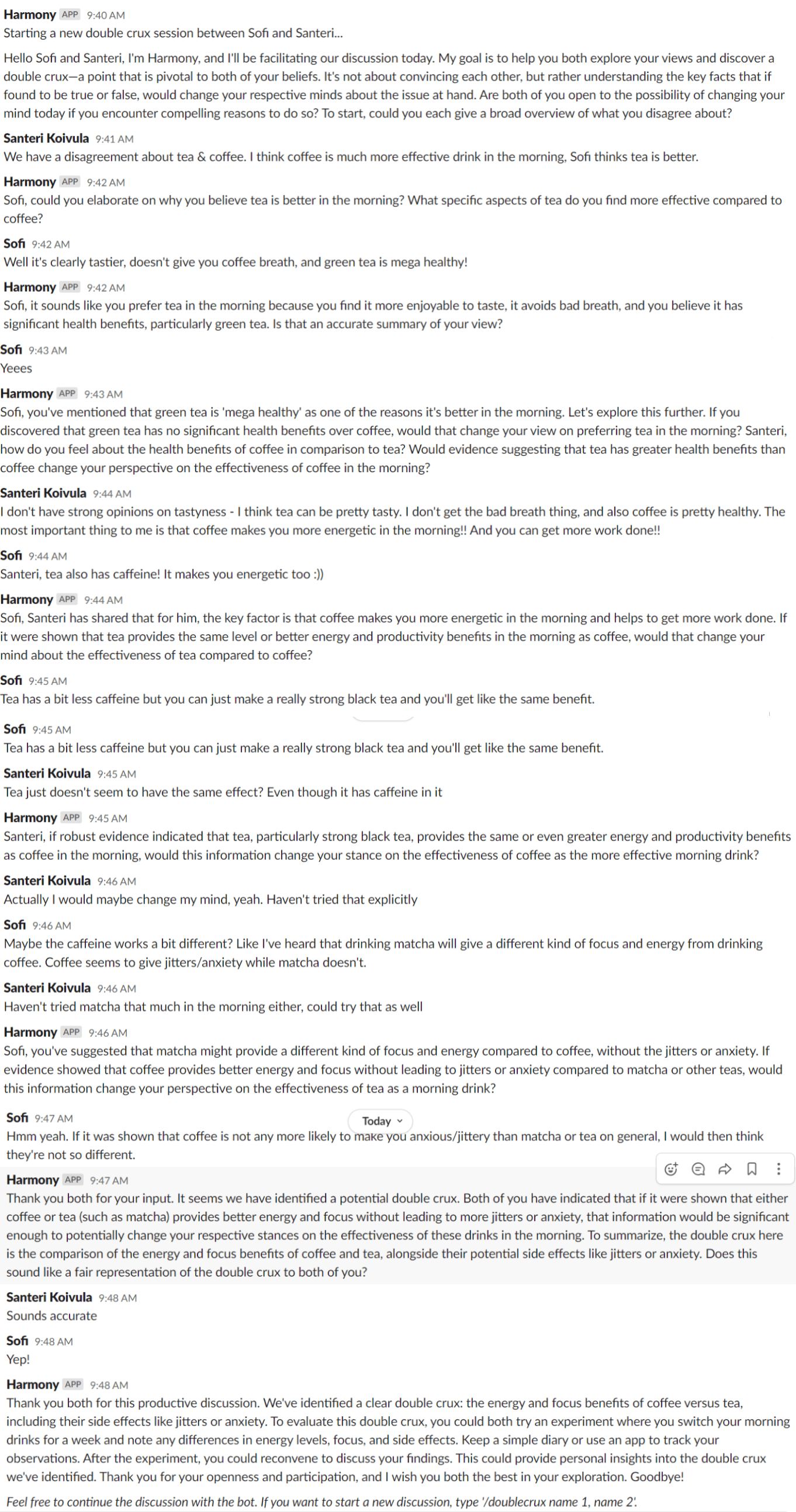

Sure! Here's a simple conversation about tea:

Filtering for "people who can afford to pay for a workshop" works pretty well.

This is surprising to me. It seems to assume that income is based purely on general competence, which I don't think is true. Many people who have these traits would find it really difficult to pay for this, and vice versa.

I can see why you think it would be contradictory. The idea in the example was that both of you want a better working environment at your workplace but have different opinions on how to get there, whereas the disclaimers were about situations where this is not the case, for example, when the other person doesn't care about a safe working environment. Does that make it clearer?

We'll probably change the example if it's unclear, though.

When is the deadline for applying?

Some of the links in this post don't work for me. They seem to be links to localhost.

Is there a tag for posts applying CFAR-style rationality techniques? I'm a bit surprised that I haven't found one yet, and also a bit surprised by how few posts there are from people applying CFAR-style techniques (like internal double crux).

It no longer seems to be sufficient to have a VPN to get access to Claude; you also need a UK- or US-based phone number. If anyone knows how to get around this, please let me know!