CFAR Takeaways: Andrew Critch

post by Raemon · 2024-02-14T01:37:03.931Z · LW · GW · 64 comments

Contents

- What surprised you most during your time at CFAR?
- Surprise 1: People are profoundly non-numerate.
- Surprise 2: People don't realize they need to get over things.
- Surprise 3: When I learned Inner Simulator from Kenzie, I was surprised that it helped with everything in life forever.
- Surprise 4: How much people didn't seem to want things
- What do other people seem surprised or confused about, which are important if they're gonna do something rationality-training-ish
- Internal Family Systems (IFS) is basically true for most people
- External memory is essential to intelligence augmentation.
- People like concocting narratives where their problems are insolvable.
- You once told me that there were ~20 things a person needed to be generally competent. What were they?

I'm trying to build my own art of rationality training, and I've started talking to various CFAR instructors about their experiences – things that might be important for me to know but which hadn't been written up nicely before.

This is a quick write-up of a conversation with Andrew Critch about his takeaways. (I took rough notes, and then roughly cleaned them up for this. Some of my phrasings might not exactly match his intended meaning, although I've tried to separate out places where I'm guessing what he meant from places where I'm repeating his words as best I can.)

Note that Andrew Critch is one particular member of the early CFAR team. My sense is each CFAR founder had their own taste on "what's most important". Treat this as one stone in a mosaic of takeaways.

What surprised you most during your time at CFAR?

Surprise 1: People are profoundly non-numerate.

And, people who are not profoundly non-numerate still fail to connect numbers to life.

I'm still trying to find a way to teach people to apply numbers to their lives. For example: "This thing is annoying you. How many minutes is it annoying you today? How many days will it annoy you?" I compulsively do this. There aren't things lying around in my life that bother me, because I always notice them and deal with them.

People are very scale-insensitive. Common loci of scale-insensitivity include jobs, relationships, personal hygiene habits, eating habits, and private things people do in their private homes for thousands of hours.

I thought it'd be easy to use numbers to not suck.

Surprise 2: People don't realize they need to get over things.

There was a unit at CFAR called 'goal factoring [LW · GW]'. Early in its development, the instructor would say to their class: "if you're doing something continuously, fill out a 2x2 matrix", where you ask: 1) does this bother me? (yes or no), and 2) is it a problem? (yes or no).

"Some things will bother you and not be a problem. This unit is not for that."

What surprised me came after I told the instructor: "C'mon instructor. It's not necessary to spell out that people just need to accept some things and get over them. People know that, it's not worth spending the minute on it."

At the next class, the instructor asked the class: "When something bothers you, do you ask if you need to get over it?". 10% of people raised their hand. People didn't know the move of "realize that some things bother you but aren't a problem, and you can get over them."

Surprise 3: When I learned Inner Simulator from Kenzie, I was surprised that it helped with everything in life forever.

[I replied: "I'm surprised that you were surprised. I'd expect that to have already been part of your repertoire."]

The difference between Inner Simulator and the previous best tool I had was:

Previously, I thought of my system 1 as something that both "decided to make queries" and "returned the results of the queries." i.e. my fast intuitions would notice something and give me information about it. I previously thought of "inner sim" as a different intelligence that worked on its own.

The difference with Kenzie's "Inner Sim" approach is that my System 2 could decide when to query System 1. And then System 1 would return the query with its anticipations (which System 2 wouldn't be able to generate on its own).

[What questions is System 1 good at asking that System 2 wouldn't necessarily ask?]

System 1 is good at asking "is this person screwing me over?" without my S2 having to realize that now's a good time to ask that question. (S2 also does sometimes ask this question, at complementary times)

Surprise 4: How much people didn't seem to want things

And, the degree to which people wanted things was even more incoherent than I thought. I thought people wanted things but didn't know how to pursue them.

[I think Critch trailed off here, but the implication seemed to be "basically people just didn't want things in the first place"]

What do other people seem surprised or confused about, which are important if they're gonna do something rationality-training-ish

Rationality is pretty hopeless without scope sensitivity, which is pretty hopeless without numeracy.

Me: What are some ways you've tried/failed to teach numeracy? Were there any glimmers of success that maybe point the way?

One glimmer of hope was "visualizations" and comparisons to things people know. If there's a 1/50 chance of something working, that's kinda like shuffling a deck of cards.

Ten minutes a day is 60 hours a year. If something eats 10 minutes each day, you'd break even in a year if you spent a whole work week getting rid of it forever.
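A minimal sketch of that arithmetic, assuming a 40-hour work week and a 365-day year (both assumptions, as are the function names, which are purely illustrative). It shows that ten minutes a day comes to roughly 60 hours a year, and that a one-off 40-hour fix would pay for itself in about 240 days, comfortably within the year.

```python
# Illustrative sketch: the 40-hour work week and all names here are assumptions.
MINUTES_PER_HOUR = 60
DAYS_PER_YEAR = 365
WORK_WEEK_HOURS = 40  # assumed length of "a whole work week"


def hours_lost_per_year(minutes_per_day: float) -> float:
    """Total hours per year consumed by a recurring daily annoyance."""
    return minutes_per_day * DAYS_PER_YEAR / MINUTES_PER_HOUR


def break_even_days(fix_cost_hours: float, minutes_per_day: float) -> float:
    """Days until a one-off fix has repaid the time spent on it."""
    return fix_cost_hours * MINUTES_PER_HOUR / minutes_per_day


yearly_hours = hours_lost_per_year(10)
payback_days = break_even_days(WORK_WEEK_HOURS, 10)
print(f"{yearly_hours:.1f} hours lost per year")
print(f"{payback_days:.0f} days to break even")
```

The same two functions can be pointed at any recurring annoyance by changing the minutes per day and the estimated cost of the fix.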

Me: What are some surprising things that didn't work?

I kept hoping there'd be little blockers I could remove, like getting people to use a piece of paper to deal with numbers, or telling people to round things to a power of 1, 10, 30, 100.

Internal Family Systems (IFS) is basically true for most people

Everyone seems to have parts of themselves they don't respect, who start to behave better when they respect those parts.

Observation: I'd see a person say something like "there's nothing I can do about blah, because [reason]."

I'd ask them "Could I talk to that part like it's a person?"

They say: "Okay..."

I say: "Hey part, what are you worried about?"

"X"

"Let's talk about X"

And they kinda have this feeling of surprise, like they didn't realize this part of them had any kind of valuable thing to say. And often that part-of-them comes up with the reason.

Me: How many people did you try IFS with, and how many did it help?

I tried this with about 100 people, and I think maybe with two people it didn't work.

External memory is essential to intelligence augmentation.

If your personality type is "writing doesn't work for me", one of your biggest bottlenecks is to make writing work for you. You can also try diagramming.

My update as an instructor is "if a person is not open to learning to use external visual media to help them think, give up on helping them until they've become open to that." The gains per unit time will probably be very low. Focus your energy on helping them fix that, or give up on helping that person.

People like concocting narratives where their problems are insolvable.

If "they already tried it and it didn't work" they're real into that [Ray interpretation: as an excuse not to try more].

People's needs change a lot, so you can try teaching them Goal Factoring in February and it doesn't help, but then in August maybe they need it, but they (and you) already learned "Goal factoring didn't work for them." So, watch out for that.

You once told me that there were ~20 things a person needed to be generally competent. What were they?

I'm not sure I had an exact list, but I'll try to generate one now and see how many things are on it:

- Numeracy

- Reading

- Writing

- Logic

- Introspection (such as focusing)

- Affective Memory

  - ability to remember how you feel awhile later

- Episodic Memory

- Procedural Memory

  - ability to retain procedures for taking actions

- Causal Diagramming

  - or, some kind of external media, other than writing

- Ability to use Bayes' rule. The "odds ratio" form of Bayes' Theorem.

  - Bayes' rule specifically has fewer mental operations than most other forms of probability. It fits in fewer working memory slots.

- Ability to clear your mind

  - or, return your mind to some base state

- Executive Function and Impulse control

  - Executive function: ability to generate an impulse that's not there,

  - Impulse control: ability to override an impulse

- Sustaining attention on a thing

- Empathy

- Sense of boundaries

  - sense of people's cognitive boundary, physical boundaries

    - "I don't want to get into that"

  - Sense of your own boundaries

    - willingness to have boundaries

- Curiosity

  - You'll play your cards better if you know what game you're playing

    - i.e. if I don't know stuff I'll perform worse, so it's good for me to find stuff out

  - "the truth is relevant."

- Ability to go to sleep

  - Sleep deprivation is one of the greatest effect sizes for IQ

- Social skills?

  - track common knowledge, and interface smoothly with it

    - people who need to say "common knowledge" a lot are not doing it

  - social metacognition

    - the thing that the "unrolling social metacognition"

- Ability to express oneself verbally

  - (if we're talking "ability to impact the world", not so much
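A minimal sketch of the "odds ratio" form of Bayes' rule mentioned in the list above: posterior odds equal prior odds times the likelihood ratio. The function names and the example numbers (a 20% prior and evidence that is three times likelier if the hypothesis is true) are illustrative assumptions, not from the conversation. It shows why the form is cheap to carry in your head: the update itself is a single multiplication.

```python
# Illustrative sketch of the odds form of Bayes' rule; names and numbers are assumptions.

def probability_to_odds(p: float) -> float:
    """Convert a probability (0 <= p < 1) into odds in favour."""
    return p / (1.0 - p)


def odds_to_probability(odds: float) -> float:
    """Convert odds in favour back into a probability."""
    return odds / (1.0 + odds)


def update_odds(prior_odds: float, likelihood_ratio: float) -> float:
    """Posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio


# Hypothetical example: 20% prior, evidence 3x likelier if the hypothesis is true.
prior_odds = probability_to_odds(0.2)           # 1:4
posterior_odds = update_odds(prior_odds, 3.0)   # 3:4
posterior = odds_to_probability(posterior_odds)
print(f"posterior probability: {posterior:.3f}")
```

In odds terms the whole update is "1:4 times 3 gives 3:4", which is the kind of step that fits in a couple of working-memory slots.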

Phrasing is Ray's, reconstructed a few months later:

I don't feel optimistic about training that gets people from 0 of these skills to all 19. But I feel hope about finding people who have like 17 of these skills, and getting them the last missing 2 that they need.

[What's a good way to filter for people who already have 17 of these skills?]

People who are already pretty high functioning probably have most of the skills. Filtering for "people who can afford to pay for a workshop" works pretty well. CFAR workshops were $4k. People for whom that seemed like a good deal are probably people who already have most of the skills on this list.

64 comments


comment by AnnaSalamon · 2024-02-25T05:17:38.750Z · LW(p) · GW(p)

I'm trying to build my own art of rationality training, and I've started talking to various CFAR instructors about their experiences – things that might be important for me to know but which hadn't been written up nicely before.

Perhaps off topic here, but I want to make sure you have my biggest update if you're gonna try to build your own art of rationality training.

It is, basically: if you want actual good to result from your efforts, it is crucial to build from and enable consciousness and caring, rather than to try to mimic their functionality.

If you're willing, I'd be quite into being interviewed about this one point for a whole post of this format, or for a whole dialog, or talking about it with you in some other way, since I don't know how to say it well and I think it's crucial. But, to babble:

Let's take math education as an analogy. There's stuff you can figure out about numbers, and how to do things with numbers, when you understand what you're doing. (e.g., I remember figuring out as a kid, in a blinding flash about rectangles, why 2*3 was 3*2, why it would always work). And other people can take these things you can figure out, and package them as symbol-manipulation rules that others can use to "get the same results" without the accompanying insights. But... it still isn't the same thing as understanding, and it won't get your students the same kind of ability to build new math or to have discernment about which math is any good.

Humans are automatically strategic sometimes. Maybe not all the way, but a lot more deeply than we are in "far-mode" contexts. For example, if you take almost anybody and put them in a situation where they sufficiently badly need to pee, they will become strategic about how to find a restroom. We are all capable of wanting sometimes, and we are a lot closer to strategic at such times.

My original method of proceeding in CFAR, and some other staff members' methods also, was something like:

- Find a person, such as Richard Feynman or Elon Musk or someone a bit less cool than that but still very cool who is willing to let me interview them. Try to figure out what mental processes they use.

- Turn these mental processes into known, described procedures that system two / far-mode can invoke on purpose, even when the viscera do not care about a given so-called "goal."

(For example, we taught processes such as: "notice whether you viscerally expect to achieve your goal. If you don't, ask why not, solve that problem, and iterate until you have a plan that you do viscerally anticipate will succeed." (aka inner sim / murphyjitsu.))

My current take is that this is no good -- it teaches non-conscious processes how to imitate some of the powers of consciousness, but in a way that lacks its full discernment, and that can lead to relatively capable non-conscious, non-caring processes doing a thing that no one who was actually awake-and-caring would want to do. (And can make it harder for conscious, caring, but ignorant processes, such as youths, to tell the difference between conscious/caring intent, and memetically hijacked processes in the thrall of institutional-preservation-forces or similar.) I think it's crucial to more like start by helping wanting/caring/consciousness to become free and to become in charge. (An Allan Bloom quote that captures some but not all of what I have in mind: "There is no real education that does not respond to felt need. All else is trifling display.")

comment by AnnaSalamon · 2024-02-25T04:03:56.722Z · LW(p) · GW(p)

Surprise 4: How much people didn't seem to want things

And, the degree to which people wanted things was even more incoherent than I thought. I thought people wanted things but didn't know how to pursue them.

[I think Critch trailed off here, but implication seemed to be "basically people just didn't want things in the first place"]

I concur. From my current POV, this is the key observation that should've, and should still, instigate a basic attempt to model what humans actually are and what is actually up in today's humans. It's too basic a confusion/surprise to respond to by patching the symptoms without understanding what's underneath.

I also quite appreciate the interview as a whole; thanks, Raemon and Critch!

Replies from: Raemon, xpym

↑ comment by Raemon · 2024-02-25T19:53:06.890Z · LW(p) · GW(p)

From my current POV, this is the key observation that should've, and should still, instigate a basic attempt to model what humans actually are and what is actually up in today's humans. It's too basic a confusion/surprise to respond to by patching the symptoms without understanding what's underneath.

On one hand, when you say it like that, it does seem pretty significant.

I'm not sure I think there's that much confusion to explain? Like, my mainline story here is:

- Humans are mostly a kludge of impulses which vary in terms of how coherent / agentic they are. Most of them have wants that are fairly basic, and don't lend themselves super well to strategic thinking. (I think most of them also consider strategic thinking sort of uncomfortable/painful). This isn't that weird, because, like, having any kind of agency at all is an anomaly. Most animals have only limited agency and wanting-ness.

- There's some selection effect where the people who might want to start Rationality Orgs are more agentic, have more complex goals, and find deliberate thinking about their wants and goals more natural/fun/rewarding.

- The "confusion" is mostly a typical mind error on the part of people like us, and if you look at evolution the state of most humans isn't actually that weird or surprising.

Perhaps something I'm missing or confused about is what exactly Critch (or, you, if applicable?) mean by "people don't seem to want things." I maybe am surprised that the filtering effect of people who showed up at CFAR workshops or similar still didn't want things.

Can you say a bit more about what you've experienced, and what felt surprising or confusing about it?

Replies from: AnnaSalamon

↑ comment by AnnaSalamon · 2024-02-25T21:09:26.354Z · LW(p) · GW(p)

Some partial responses (speaking only for myself):

1. If humans are mostly a kludge of impulses, including the humans you are training, then... what exactly are you hoping to empower using "rationality training"? I mean, what wants-or-whatever will they act on after your training? What about your "rationality training" will lead them to take actions as though they want things? What will the results be?

1b. To illustrate what I mean: once I taught a rationality technique to SPARC high schoolers (probably the first year of SPARC, not sure; I was young and naive). One of the steps in the process involved picking a goal. After walking them through all the steps, I asked for examples of how it had gone, and was surprised to find that almost all of them had picked such goals as "start my homework earlier, instead of successfully getting it done at the last minute and doing recreational math meanwhile"... which I'm pretty sure was not their goal in any wholesome sense, but was more like ambient words floating around that they had some social allegiance to. I worry that if you "teach" "rationality" to adults who do not have wants, without properly noticing that they don't have wants, you set them up to be better-hijacked by the local memeset (and to better camouflage themselves as "really caring about AI risk" or whatever) in ways that won't do anybody any good because the words that are taking the place of wants don't have enough intelligence/depth/wisdom in them.

2. My guess is that the degree of not-wanting that is seen among many members of the professional and managerial classes in today's anglosphere is more extreme than the historical normal, on some dimensions. I think this partially because:

a. IME, my friends and I as 8-year-olds had more wanting than I see in CFAR participants a lot of the time. My friends were kids who happened to live on the same street as me growing up, so probably pretty normal. We did have more free time than typical adults.

i. I partially mean: we would've reported wanting things more often, and an observer with normal empathy would on my best guess have been like "yes it does seem like these kids wish they could go out and play 4-square" or whatever. (Like, wanting you can feel in your body as you watch someone, as with a dog who really wants a bone or something).

ii. I also mean: we tinkered, toward figuring out the things we wanted (e.g. rigging the rules different ways to try to make the 4-square game work in a way that was fun for kids of mixed ages, by figuring out laxer rules for the younger ones), and we had fun doing it. (It's harder to claim this is different from the adults, but, like, it was fun and spontaneous and not because we were trying to mimic virtue; it was also this way when we saved up for toys we wanted. I agree this point may not be super persuasive though.)

b. IME, a lot of people act more like they/we want things when on a multi-day camping trip without phones/internet/work. (Maybe like Critch's post about allowing oneself to get bored?)

c. I myself have had periods of wanting things, and have had periods of long, bleached-out not-really-wanting-things-but-acting-pretty-"agentically"-anyway. Burnout, I guess, though with all my CFAR techniques and such I could be pretty agentic-looking while quite burnt out. The latter looks to me more like the worlds a lot of people today seem to me to be in, partly from talking to them about it, though people vary of course and hard to know.

d. I have a theoretical model in which there are supposed to be cycles of yang and then yin, of goal-seeking effort and then finding the goal has become no-longer-compelling and resting / getting bored / similar until a new goal comes along that is more compelling. CFAR/AIRCS participants and similar people today seem to me to often try to stop this process -- people caffeinate, try to work full days, try to have goals all the time and make progress all the time, and on a large scale there's efforts to mess with the currency to prevent economic slumps. I think there's a pattern to where good goals/wanting come from that isn't much respected. I also think there's a lot of memes trying to hijack people [LW(p) · GW(p)], and a lot of memetic control structures that get upset when members of the professional and managerial classes think/talk/want without filtering their thoughts carefully through "will this be okay-looking" filters.

All of the above leaves me with a belief that the kinds of not-wanting we see are more "living human animals stuck in a matrix that leaves them very little slack to recover and have normal wants, with most of their 'conversation' and 'attempts to acquire rationality techniques' being hijacked by the matrix they're in rather than being earnest contact with the living animals inside" and less "this is simple ignorance from critters who're just barely figuring out intelligence but who will follow their hearts better and better as you give them more tools."

Apologies for how I'm probably not making much sense; happy to try other formats.

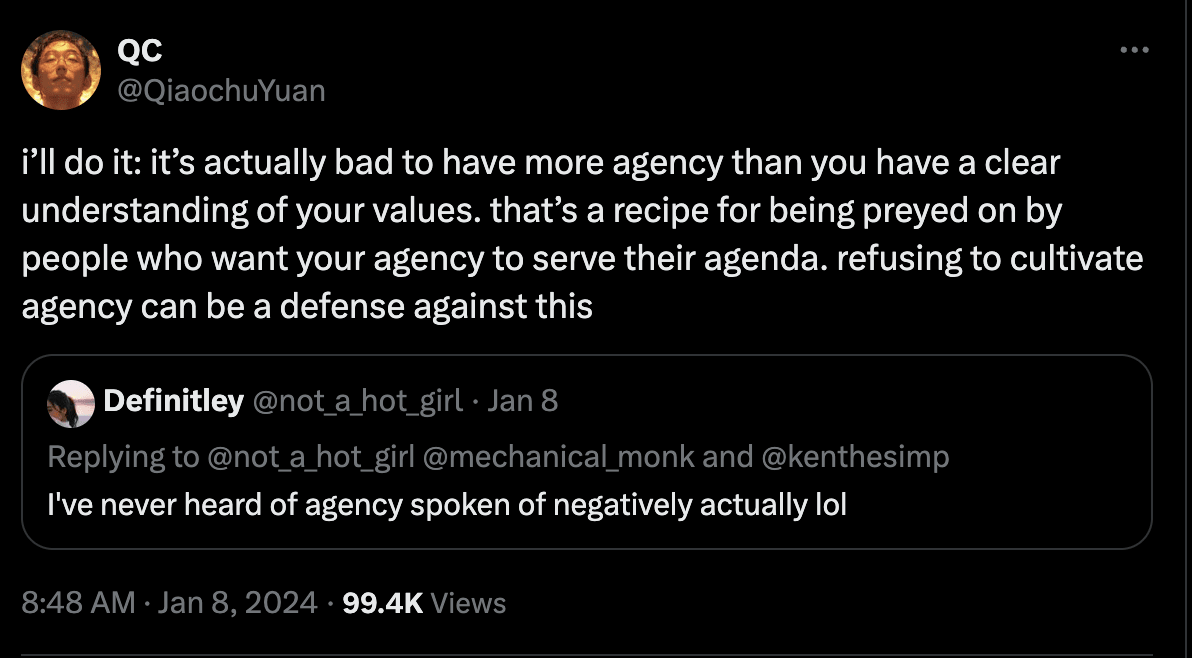

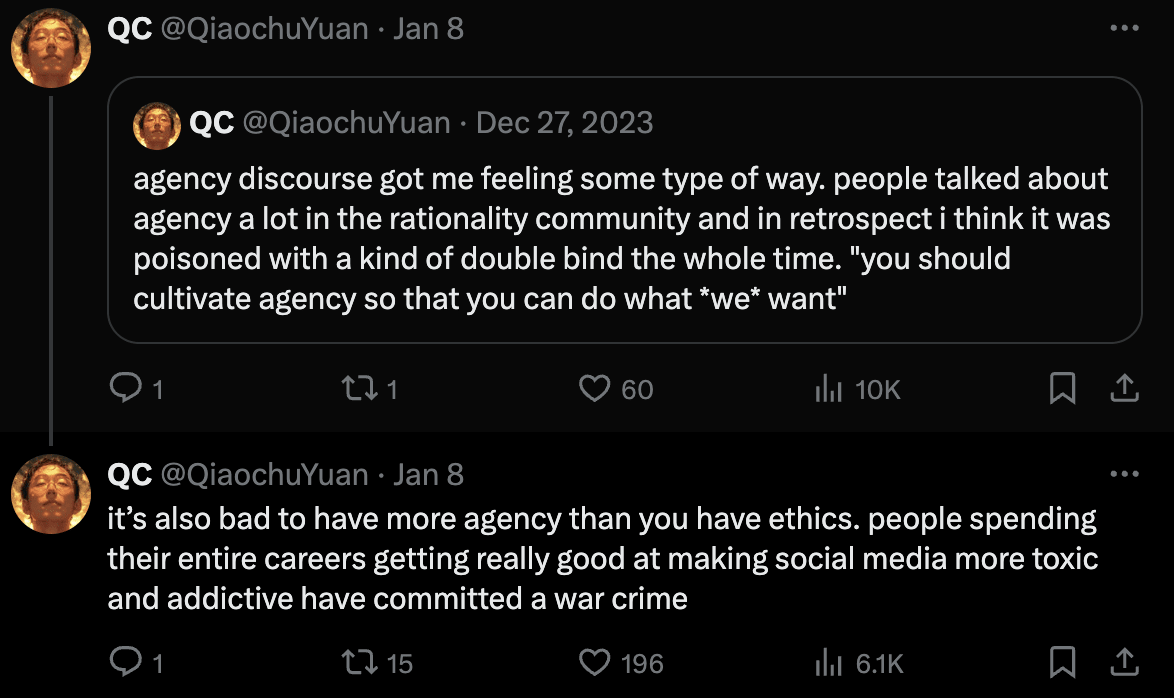

Replies from: AnnaSalamon, Wei_Dai, TekhneMakre

↑ comment by AnnaSalamon · 2024-02-26T04:04:00.796Z · LW(p) · GW(p)

(I don't necessarily agree with QC's interpretation of what was going on as people talked about "agency" -- I empathize some, but empathize also with e.g. Kaj's comment in a reply that Kaj doesn't recognize this from Kaj's 2018 CFAR mentorship training, and did not find pressures there to coerce particular kinds of thinking).

My point in quoting this is more like: if people don't have much wanting of their own, and are immersed in an ambient culture that has opinions on what they should "want," experiences such as QC's seem sorta like the thing to expect. Which is at least a bit corroborated by QC reporting it.

Replies from: pktechgirl

↑ comment by Elizabeth (pktechgirl) · 2024-02-27T02:03:41.366Z · LW(p) · GW(p)

I'm not sure if this is a disagreement or supporting evidence, but: I remember you saying you didn't want to teach SPARC kids too much [word similar to agency but not quite that. Maybe good at executing plans?], because they'd just use it to [coerce] themselves more. This was definitely before covid, maybe as far back as 2015 or 2016. I'm almost certain it was before QC even joined CFAR. It was a helpful moment for me.

↑ comment by Wei Dai (Wei_Dai) · 2024-02-28T07:18:12.131Z · LW(p) · GW(p)

If humans are mostly a kludge of impulses, including the humans you are training, then... what exactly are you hoping to empower using "rationality training"? I mean, what wants-or-whatever will they act on after your training? What about your "rationality training" will lead them to take actions as though they want things? What will the results be?

To give a straight answer to this, if I was doing rationality training (if I was agenty enough to do something like that), I'd have the goal that the trainees finish the training with the realization that they don't know what they want or don't currently want anything, but they may eventually figure out what they want or want something, and therefore in the interim they should accumulate resources/optionality, avoid doing harm (things that eventually might be considered irreversibly harmful), and push towards eventually figuring out what they want. And I'd probably also teach a bunch of things to mitigate the risk that the trainees too easily convince themselves that they've figured out what they want.

Replies from: Kaj_Sotala

↑ comment by Kaj_Sotala · 2024-02-28T18:29:57.948Z · LW(p) · GW(p)

There's something about this framing that feels off to me and makes me worry that it could be counterproductive. I think my main concerns are something like:

1) People often figure out what they want by pursuing things they think they want and then updating on the outcomes. So making them less certain about their wants might prevent them from pursuing the things that would give them the information for actually figuring it out.

2) I think that people's wants are often underdetermined and they could end up wanting many different things based on their choices. E.g. most people could probably be happy in many different kinds of careers that were almost entirely unlike each other, if they just picked one that offered decent working conditions and committed to it. I think this is true for a lot of things that people might potentially want, but to me the framing of "figure out what you want" implies that people's wants are a lot more static than this.

I think this 80K article expresses these kinds of ideas pretty well in the context of career choice:

The third problem [with the advice of "follow your passion"] is that it makes it sound like you can work out the right career for you in a flash of insight. Just think deeply about what truly most motivates you, and you’ll realise your “true calling”. However, research shows we’re bad at predicting what will make us happiest ahead of time, and where we’ll perform best. When it comes to career decisions, our gut is often unreliable. Rather than reflecting on your passions, if you want to find a great career, you need to go and try lots of things.

The fourth problem is that it can make people needlessly limit their options. If you’re interested in literature, it’s easy to think you must become a writer to have a satisfying career, and ignore other options.

But in fact, you can start a career in a new area. If your work helps others, you practice to get good at it, you work on engaging tasks, and you work with people you like, then you’ll become passionate about it. The ingredients of a dream job we’ve found are most supported by the evidence, are all about the context of the work, not the content. Ten years ago, we would have never imagined being passionate about giving career advice, but here we are, writing this article.

Many successful people are passionate, but often their passion developed alongside their success, rather than coming first. Steve Jobs started out passionate about zen buddhism. He got into technology as a way to make some quick cash. But as he became successful, his passion grew, until he became the most famous advocate of “doing what you love”.

↑ comment by TekhneMakre · 2024-02-27T06:30:30.659Z · LW(p) · GW(p)

It makes sense, but I think it's missing that adults who try to want in the current social world get triggered and/or traumatized as fuck because everyone else is behaving the way you describe.

Replies from: AnnaSalamon

↑ comment by AnnaSalamon · 2024-02-27T06:34:30.191Z · LW(p) · GW(p)

Totally. Yes.

↑ comment by xpym · 2024-02-26T15:03:42.396Z · LW(p) · GW(p)

What do people here think about Robin Hanson's view, for example as elaborated by him and Kevin Simler in the book Elephant in the Brain? I've seen surprisingly few mentions/discussions of this over the years in the LW-adjacent sphere, despite Hanson being an important forerunner of the modern rationalist movement.

One of his main theses, that humans are strategic self-deceivers, seems particularly important (in the "big if true" way), yet downplayed/obscure.

Replies from: AnnaSalamon

↑ comment by AnnaSalamon · 2024-02-26T15:44:12.811Z · LW(p) · GW(p)

I love that book! I like Robin's essays, too, but the book was much easier for me to understand. I wish more people would read it, would review it on here, etc.

Replies from: xpym

↑ comment by xpym · 2024-02-26T15:54:35.873Z · LW(p) · GW(p)

That's probably Kevin's touch. Robin has this almost inhuman detachment, which on the one hand allows him to see things most others don't, but on the other makes communicating them hard, whereas Kevin managed to translate those insights into engaging humanese.

Any prospective "rationality" training has to comprehensively grapple with the issues raised there, and as far as I can tell, they don't usually take center stage in the publicized agendas.

comment by romeostevensit · 2024-02-14T18:14:03.792Z · LW(p) · GW(p)

I'd chunk part of this as: most people (including me, often) don't habitually condition on their own thoughts/beliefs/actions and then query their own expectations for the most obvious results of those and are often surprised when the most obvious results are something they don't want. This reliably fails to induce the meta update that

1. This information is (apparently counterintuitively) available

2. I should engage with this information more often

3. Given 2, making upstream changes that make 2 more likely is higher impact than lots of object level changes and should be prioritized as such.

4. Given the past failure of 1, 2, 3 (the meta update) I should try to figure out what's going on and get even more upstream of useful patterns. This would be worth at least tens of hours of investigation on expectation.

For myself, the thing that finally clicked in this area was, for whatever reason (and I don't know if it will work for anyone else) noticing mental operations that can apply to themselves. The specific operation that happened was applying ooda loops to the concept of ooda loops.

I'd also broadly say of the meta update: I think human intuitions aren't fine tuned for happening to be in a +2-3sd brain loadout, so they aren't very good at cueing us to actually use those features reliably.

Replies from: Raemon, AnnaSalamon

↑ comment by Raemon · 2024-02-15T00:32:38.464Z · LW(p) · GW(p)

I'd also broadly say of the meta update: I think human intuitions aren't fine tuned for happening to be in a +2-3sd brain loadout, so they aren't very good at cueing us to actually use those features reliably.

That's an interesting point I hadn't thought about before.

↑ comment by AnnaSalamon · 2024-02-25T04:04:52.912Z · LW(p) · GW(p)

The specific operation that happened was applying ooda loops to the concept of ooda loops.

I love this!

comment by Vaniver · 2024-02-15T01:02:51.641Z · LW(p) · GW(p)

If "they already tried it and it didn't work" they're real into that [Ray interpretation: as an excuse not to try more].

I think I've had this narrative in a bunch of situations. My guess is I have it too much, and it's like fear-of-rejection where it's worth running the numbers and going out on a limb more than people would do by default. But also it really does seem like lots of people overestimate how easy problems are to solve, or how many 'standard' solutions people have tried, or so on. [And I think there's a similar overconfidence thing going on for the advice-giver, which generates some of the resistance.]

It's also not that obvious what the correct update is. Like, if you try a medication for problem X and it fails, it feels like that should decrease your probability that any sort of medication will solve the problem. But this is sort of like the sock drawer problem,[1] where it's probably easy to overestimate how much to update.

- ^

Suppose you have a chest of drawers with six drawers in it, and you think there's a 60% chance the socks are in the chest, and then they're not in the first five drawers you look in. What's the chance they're in the last drawer?
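One way to work the sock drawer numbers, as a rough sketch: it assumes that, if the socks are in the chest at all, each of the six drawers is equally likely to hold them (an assumption the footnote does not state). The function and variable names are illustrative.

```python
# Illustrative sketch; the uniform-across-drawers prior is an assumption.

def p_socks_in_remaining(p_in_chest: float, n_drawers: int, n_checked_empty: int) -> float:
    """Posterior probability the socks are in the unchecked drawers,
    given that the checked drawers turned out to be empty."""
    per_drawer = p_in_chest / n_drawers                    # prior mass on each drawer
    still_in_chest = per_drawer * (n_drawers - n_checked_empty)
    not_in_chest = 1.0 - p_in_chest                        # unchanged by the empty drawers
    return still_in_chest / (still_in_chest + not_in_chest)


p_last = p_socks_in_remaining(p_in_chest=0.6, n_drawers=6, n_checked_empty=5)
print(f"chance the socks are in the last drawer: {p_last:.2f}")
```

Under that assumption, five empty drawers move the chest as a whole from 60% to 20%: a real update, but the last drawer is still well short of hopeless.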

↑ comment by Elizabeth (pktechgirl) · 2024-02-16T00:56:10.247Z · LW(p) · GW(p)

Yeah. This is a real problem and the world is full of people leaving $100 bills on the table, but I can think of situations I expect the other person would describe as "Elizabeth isn't even trying to solve her problem" and I would describe as "Alice jumped to idiot-level solutions without even a token attempt to understand the context, she is clearly not going to be helpful and there isn't a point in trying to bring her up to speed". I suspect this is common, which is why "the kind of person who would suggest yoga for depression" is a codified insult.

And self-development instructors can be the absolute worst at this. They get used to helping people with low-hanging fruit and when their tricks don't work they blame the participant for not trying[1], instead of their algorithm being inadequate to a particular person's problems.

- ^

I'm not asserting that's what happened with Critch- there definitely are lots of people who just aren't trying and that's worth pointing out. Although in many cases I suspect there are underlying reasons that at least felt rational at one point.

↑ comment by Kaj_Sotala · 2024-02-16T06:56:33.929Z · LW(p) · GW(p)

Agree. The advice I've heard for avoiding this is, instead of saying "try X", ask "what have you already tried" and then ideally ask some follow-up questions to further probe why exactly the things they've tried haven't worked yet. You might then be able to offer advice that's a better fit, and even if it turns out that they actually haven't tried the thing, it'll likely still be better received because you made an effort to actually understand their problem first. (I've sometimes used the heuristic, "don't propose any solutions until you could explain to a third party why this person hasn't been able to solve their problem yet".)

Replies from: pktechgirl↑ comment by Elizabeth (pktechgirl) · 2024-02-17T08:03:03.869Z · LW(p) · GW(p)

It's funny. I've had friends make confident statements that I was missing something quite stupid, with a smirk in their voice, and it felt good because they were right. A friend pointing out something I was missing felt good and safe, and necessary, because no one can catch everything and everyone has blind spots, especially when they're upset.

So while "have you tried...?" or "what do you think about...?" are marginal improvements over "you should...", they're not getting at what I really want, which is highly skilled people who have my back.

↑ comment by AnnaSalamon · 2024-02-25T04:06:32.971Z · LW(p) · GW(p)

Okay, maybe? But I've also often been "real into that" in the sense that it resolves a dissonance in my ego-structure-or-something, or in the ego-structure-analog of CFAR or some other group-level structure I've been trying to defend, and I've been more into "so you don't get to claim I should do things differently" than into whether my so-called "goal" would work. Cf "people don't seem to want things."

↑ comment by mike_hawke · 2024-02-15T01:48:41.785Z · LW(p) · GW(p)

comment by WSS · 2024-02-23T20:47:53.803Z · LW(p) · GW(p)

And, the degree to which people wanted things was even more incoherent than I thought. I thought people wanted things but didn't know how to pursue them.

How does one figure out goals and what to want? Usually I find myself following the gradient of my impulses. When I can find coherent goals, executing is relatively straightforward. Finding goals in the first place is IMO much harder. If you map it onto the question of how to find meaning in life, it’s more colloquially recognizable as a hard thing!

Replies from: AnnaSalamon, CronoDAS, Benito, CstineSublime↑ comment by AnnaSalamon · 2024-02-25T04:30:02.540Z · LW(p) · GW(p)

I am pretty far from having fully solved this problem myself, but I think I'm better at this than most people, so I'll offer my thoughts.

My suggestion is to not attempt to "figure out goals and what to want," but to "figure out blockers that are making it hard to have things to want, and solve those blockers, and wait to let things emerge."

Some things this can look like:

- Critch's "boredom for healing from burnout" procedures. Critch has some blog posts recommending boredom (and resting until quite bored) as a method for recovering one's ability to have wants after burnout:

- Physically cleaning things out. David Allen recommends cleaning out one's literal garage (or, for those of us who don't have one, I'd suggest one's literal room, closet, inbox, etc.) so as to have many pieces of "stuck goal" that can resolve and leave more space in one's mind/heart (e.g., finding an old library book from a city you don't live in anymore, and either returning it anyhow somehow, or giving up on it and donating it to goodwill or whatever, thus freeing up whatever part of your psyche was still stuck in that goal).

- Refusing that which does not "spark joy." Marie Kondo suggests getting in touch with a thing you want your house to be like (e.g., by looking through magazines and daydreaming about your desired vibe/life), and then throwing out whatever does not "spark joy", after thanking those objects for their service thus far.

- Analogously, a friend of mine has spent the last several months refusing all requests to which they are not a "hell yes," basically to get in touch with their ability to be a "hell yes" to things.

- Repeatedly asking one's viscera "would there be anything wrong with just not doing this?". I've personally gotten a fair bit of mileage from repeatedly dropping my goals and seeing if they regenerate. For example, I would sit down at my desk, would notice at some point that I was trying to "do work" instead of to actually accomplish anything, and then I would vividly imagine simply ceasing work for the week, and would ask my viscera if there would be any trouble with that or if it would in fact be chill to simply go to the park and stare at clouds or whatever. Generally I would get back some concrete answer my viscera cared about, such as "no! then there won't be any food at the upcoming workshop, which would be terrible," whereupon I could take that as a goal ("okay, new plan: I have an hour of chance to do actual work before becoming unable to do work for the rest of the week; I should let my goal of making sure there's food at the workshop come out through my fingertips and let me contact the caterers" or whatever).

- Gendlin's "Focusing." For me and at least some others I've watched, doing this procedure (which is easier with a skilled partner/facilitator -- consider the sessions or classes here if you're fairly new to Focusing and want to learn it well) is reliably useful for clearing out the barriers to wanting, if I do it regularly (once every week or two) for some period of time.

- Grieving in general. Not sure how to operationalize this one. But allowing despair to be processed, and to leave my current conceptions of myself and of my identity and plans, is sort of the connecting thread through all of the above imo. Letting go of that which I no longer believe in.

I think the above works much better in contact also with something beautiful or worth believing in, which for me can mean walking in nature, reading good books of any sort, having contact with people who are alive and not despairing, etc.

↑ comment by CronoDAS · 2024-02-23T21:20:30.277Z · LW(p) · GW(p)

This is often my problem - I think "I could probably do it if I really wanted to" and "I'm not doing it", and conclude "Therefore I don't really want to do it (strongly enough)."

Replies from: CstineSublime↑ comment by CstineSublime · 2024-02-29T07:39:23.121Z · LW(p) · GW(p)

Have you tried this technique, "if it was easier/less resource demanding to do it, would I be more inclined to do it?" - if so does the answer change much?

Replies from: CronoDAS↑ comment by CronoDAS · 2024-02-29T18:35:24.286Z · LW(p) · GW(p)

Sometimes the answer is "absolutely yes." For example, I'd love to be able to understand Japanese, but I'm not about to dedicate a year or more of my life to studying the language in order to do it. (As I've mentioned before, learning foreign language vocabulary is relatively difficult for me, because there's no way to use partial knowledge to recover something you can't quite remember; knowing that "azul" means "blue" and "rojo" means red doesn't help me remember that "verde" means green. I have to resort to brute force memorization, and I hate it.)

Another thing that I might like to do but I'm not sure is worth trying is making my own game using something like RPG Maker. I imagine that it would take a year or more to go from where I am to a working game I'd be satisfied with, and I don't know if it would "pay off" - even if I did make a really amazing game, how many people would ever play it?

Replies from: CstineSublime↑ comment by CstineSublime · 2024-02-29T21:41:23.151Z · LW(p) · GW(p)

Perhaps I am misreading your original comment, but is your issue not about formulating goals (the examples with Japanese and something like RPG Maker suggest you can, and could even be motivated if conditions were different) but rather about formulating viable or likely-to-succeed goals?

Replies from: CronoDAS↑ comment by CronoDAS · 2024-03-02T05:55:54.617Z · LW(p) · GW(p)

In the short run, I have my hands full with keeping up with household chores, walking my dog, and visiting and being an advocate for my severely ill wife, who has been in hospitals almost continuously since December 2022. I don't have a job (besides taking care of my family's rental property) and don't think I could handle one right now.

Most of the actual goals I manage to set for myself and achieve involve playing video games. :/

Replies from: CstineSublime↑ comment by CstineSublime · 2024-03-02T08:52:04.429Z · LW(p) · GW(p)

This is some unsolicited commentary but it does sound like you have your priorities straight already.

It's sad to hear you're so consumed by responsibilities and setbacks. At least you have control in the video games, right? I wish the best for you and your wife.

↑ comment by Ben Pace (Benito) · 2024-02-26T18:22:22.797Z · LW(p) · GW(p)

I make space in my week to be bored, with no options for short-term distractions that will zombify me (like videogames or YouTube). I usually find that ideas then come to me for things I want that take a bit more work but will be more satisfying, like learning a song on the guitar or reading a book or doing something with a friend.

Chatting with friends who are alive and wanting things is another way I notice such things in myself, usually I catch some excitement from them as I'm empathizing with them.

I wrote the above before reading Anna's comment to see how our answers differed; seems like our number 1 recommendation is the same!

Cleaning things out also works for me, I did that yesterday and it helped me believe in [LW · GW] my ability to make my world better.

I also concur with the grieving one, but I never know how to communicate it. When I try, I come up with sentences like "Now vividly imagine failing to get the thing you want. Feel all the pain and sorrow and nausea associated with it. Great, now go get it!" but that doesn't seem to communicate why it helps and reads to me like unhelpful advice.

↑ comment by CstineSublime · 2024-02-29T07:37:57.520Z · LW(p) · GW(p)

As someone with the opposite problem, who can babble countless goals that interest me, and is rigidly married to a few interrelated ones (i.e. make music videos, make films) but struggling to execute them anywhere near to my liking, I hope I can provide some insight into how to find goals or what you want.

I believe that big goals are no different than small goals in terms of finding them.

I'll be happy to write a post on this if any of this seems intriguing or useful but here's the dotpoints:

- If you have heroes, who are they? What adjectives would you use to describe them? For example, I would describe one of my heroes, Miuccia Prada, as "innovative", "insightful", "paradoxical" and "sophisticated". I could make it my goal to cultivate one of these qualities in myself, and that would require finding an exercise or even enrolling in a course or activity which would allow me to do so.

- e.g. the go-to example would be if you want to be more 'charismatic', take up public speaking or join an amateur theatre troupe, as that is meant to be a means of developing it.

- If you don't have heroes, who among your social group or friends have the traits or manners that you most envy (in a non-destructive way)? Same as above.

- Both of these exercises can be inverted by looking at people you detest or at least have a strong aversion to. Pride and Shame are good indicators too of what you want.

- Coming up with goals is easy, committing to them is hard. Just babble. Here's a template: "I would feel proud if I had a reputation based on fixing/making X". Prune out the ones that don't elicit a passionate response.

- Analyze your Revealed Preferences on groceries as an Economist would. Everything from buying biodegradable dishwashing detergent to anti-aging wrinkle cream to tickets to a UFC match is a commitment to a certain lifestyle or to living with certain principles or values. Those commitments should point you towards broader patterns of goals.

Okay sure? But what works for me? "How do you come up with goals". Here's how I do it.

I've known since I was a teenager that I've wanted to be involved in motion pictures. However, last year I asked myself "okay, you say you want to make films, but what would a film you would be deliriously proud of look like?" So I brainstormed all the qualities and elements, and made a video-moodboard (an hour-long montage of films, music videos, retro TV commercials, even Beckett plays and experimental animations that inspired me), and that too formed the vague outline of a story. Now my goal is to write a screenplay that incorporates all those elements seamlessly, and then the subsequent goal is to make that screenplay into a film. There are certainly a lot of functionary goals and stepping stones that must be met to achieve those goals.

Do I know how to make that film? Not with any confidence. But that's because coming up with a goal, being specific about what I want, what I'm passionate and dedicated to is the easy part.

comment by mike_hawke · 2024-02-14T22:49:25.433Z · LW(p) · GW(p)

There aren't things lying around in my life that bother me because I always notice and deal with it.

I assume he said something more nuanced and less prone to blindspots than that.

Ten minutes a day is 60 hours a year. If something eats 10 minutes each day, you'd break even in a year if you spent a whole work week getting rid of it forever.

In my experience, I have not been able to reliably break even. This kind of estimate assumes a kind of fungibility that is sometimes correct and sometimes not. I think When to Get Compact [LW · GW] is relevant here--it can feel like my bottleneck is time, when in fact it is actually attentional agency or similar. There are black holes that will suck up as much of our available time as they can.
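
The quoted break-even estimate can be checked with a few lines of arithmetic. This is a rough sketch that assumes a 40-hour work week and treats every minute as interchangeable, which is exactly the fungibility assumption questioned above; the function names are illustrative.

```python
def hours_per_year(minutes_per_day, days_per_year=365):
    """Total hours a daily annoyance costs over a year."""
    return minutes_per_day * days_per_year / 60

def break_even_days(fix_hours, minutes_per_day):
    """Days until a one-time fix pays for itself, if time is fungible."""
    return fix_hours * 60 / minutes_per_day

print(round(hours_per_year(10), 1))   # about 60.8 hours a year
print(break_even_days(40, 10))        # 240.0 days for a 40-hour fix
```

On these numbers a 40-hour fix pays for itself in about eight months, and a 60-hour fix in about a year. The calculation says nothing about whether the freed-up time is actually recovered.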

External memory is essential to intelligence augmentation.

Highly plausible. Also perhaps more tractable and testable [? · GW] than many other avenues. I remember an old LW Rationality Quotation along the lines of, "There is a big difference between a human and a human with a pen and paper."

Replies from: Raemon, Raemon↑ comment by Raemon · 2024-02-15T00:31:46.377Z · LW(p) · GW(p)

I assume he said something more nuanced and less prone to blindspots than that.

I think this is pretty close to what he said. I suspect he'd have some nuances if we drilled down into it.

This involves speculation on my part, but having known Critch moderately well, I'd personally bet that he has something like 10x to 30x fewer things lying around bothering him than most people (which maybe comes in waves where, first he had 10x fewer things lying around bothering him, then he set more ambitious goals for himself that created more bothersome things, then he fixed those, etc)

He probably does still have blindspots but I think the effect is real.

Replies from: CronoDAS, pktechgirl↑ comment by Elizabeth (pktechgirl) · 2024-02-16T05:22:45.732Z · LW(p) · GW(p)

A non-rationalist friend of mine spontaneously said one thing he appreciates about me is that I vibe "taking action against problems". This is from a guy I already estimate to have much higher than average agency. Part of that vibe in me is directly traceable to Raemon, and probably some other part is to the rationalist community as a whole. So when Raemon says this about Critch I believe him.

↑ comment by Raemon · 2024-02-15T00:33:30.415Z · LW(p) · GW(p)

In my experience, I have not been able to reliably break even. This kind of estimate assumes a kind of fungibility that is sometimes correct and sometimes not. I think When to Get Compact [LW · GW] is relevant here--it can feel like my bottleneck is time, when in fact it is actually attentional agency or similar. There are black holes that will suck up as much of our available time as they can.

Yeah this sounds like a real/common problem, and dealing with that somehow seems like a necessary piece for this whole process to work.

comment by Ruby · 2024-02-23T19:19:38.327Z · LW(p) · GW(p)

Curated. This post feels to me like a kind of survey of the mental skills and properties people do/don't have for effectiveness, of which I don't recall any other examples right now, and so is quite interesting. I think it's both interesting in allowing someone to ask themselves if they're weak on any of these, and helpful in modeling others and answering questions of the sort "why don't people just X?". For all that we spend a tonne of time interacting with people, people's internal mental lives are private, and so, much like shower habits (I'm told), vary a lot more than externally observable behaviors.

I would like to see the "scope sensitivity" piece fleshed out more. I can see how it applies to eliminating annoyances that take 10 minutes every day and add up, but I don't think that's at the heart of rationality. I'd be curious how much mileage someone gets from just reflection on their own mind, and how much that can be done without invoking numeracy.

↑ comment by AnnaSalamon · 2024-02-25T04:45:27.967Z · LW(p) · GW(p)

I'm not Critch, but to speak my own defense of the numeracy/scope sensitivity point:

IMO, one of the hallmarks of a conscious process is that it can take different actions in different circumstances (in a useful fashion), rather than simply doing things the way that process does it (following its own habits, personality, etc.). ("When the facts change, I change my mind [and actions]; what do you do, sir?")

Numeracy / scope sensitivity is involved in, and maybe required for, the ability to do this deeply (to change actions all the way up to one's entire life, when moved by a thing worth being moved by there).

Smaller-scale examples of scope sensitivity, such as noticing that a thing is wasting several minutes of your day each day and taking inconvenient, non-default action to fix it, can help build this power.

comment by Morpheus · 2024-02-15T08:29:08.956Z · LW(p) · GW(p)

Causal Diagramming

- or, some kind of external media, other than writing

Does anyone know a nice way to drill this skill? I was just reading one of Steven Byrnes's posts [LW · GW], which made me notice that he is good at this and that I currently lack this skill. It also reminds me of Thinking in Systems, which I read, found cool, and then mostly went about my life not really applying too much. I think I have a pretty good intuitive understanding of statistical causal relationships and have thought a lot about confounders. But I've never felt compelled to whip out a diagram.

Replies from: frozen↑ comment by Tomek Potuczko (frozen) · 2024-02-24T09:11:33.610Z · LW(p) · GW(p)

You might want to explore the Thinking Processes developed by Eliyahu Goldratt, which are part of his Theory of Constraints. These processes, such as the Goal Tree and the Current Reality Tree, are excellent tools for visualizing complex issues and identifying causal relationships between different elements of a system. Additionally, since you mentioned statistics, I recommend "The Book of Why" by Judea Pearl.

comment by Adam Zerner (adamzerner) · 2024-02-17T09:10:58.166Z · LW(p) · GW(p)

Surprise 1: People are profoundly non-numerate.

I wonder whether Humans are not automatically strategic [LW · GW] is the deeper issue here.

It's one thing if you intend to be strategic about things and fail to do so in part due to lack of numeracy. It's another if you aren't even trying to be strategic in the first place. I suspect that a large majority of the time the issue is not being strategic.

Furthermore, I suspect that most people aren't strategic because they find being strategic distasteful in some way. I've experienced this a lot in my life.

- I'll want to skim through Yelp for 10 minutes before choosing a restaurant to eat at.

- Or spend 20 minutes watching trailers and googling around before picking a movie to watch.

- Or spend 30 minutes on The Wirecutter before making a purchase for a few hundred dollars.

- Or spend however many dozens of hours researching all sorts of stuff about different cities before moving to one.

I've found that various people see these sorts of things as being, depending on what type of mood they're in, "overly analytical" or "Adam being Adam".

On the other hand, I think there is a smaller but not super small subset of people who don't find it particularly distasteful and would be pretty receptive to a proposal of "you're currently not being strategic about lots of things in your life, being strategic about them would benefit you greatly, and so you should start being strategic about them".

I think that it is important to identify what the real blocker or blockers are here. If there are, for example, multiple blockers and you solve one of them, then you end up in a situation where progress is merely latent [LW · GW]. It doesn't really lead to observable results. For example, if someone is both 1) innumerate and 2) not motivated to be strategic, if you teach them to be numerate, (2) will still be a blocker and the person will not achieve better outcomes.

Replies from: adamzerner, Raemon↑ comment by Adam Zerner (adamzerner) · 2024-02-17T17:55:19.520Z · LW(p) · GW(p)

I'm remembering the following excerpt from The Scout Mindset. I think it's similar to what I say above.

My path to this book began in 2009, after I quit graduate school and threw myself into a passion project that became a new career: helping people reason out tough questions in their personal and professional lives. At first I imagined that this would involve teaching people about things like probability, logic, and cognitive biases, and showing them how those subjects applied to everyday life. But after several years of running workshops, reading studies, doing consulting, and interviewing people, I finally came to accept that knowing how to reason wasn't the cure-all I thought it was.

Knowing that you should test your assumptions doesn't automatically improve your judgement, any more than knowing you should exercise automatically improves your health. Being able to rattle off a list of biases and fallacies doesn't help you unless you're willing to acknowledge those biases and fallacies in your own thinking. The biggest lesson I learned is something that's since been corroborated by researchers, as we'll see in this book: our judgment isn't limited by knowledge nearly as much as it's limited by attitude.

↑ comment by Raemon · 2024-02-17T20:37:43.998Z · LW(p) · GW(p)

I totally agree that's a (the?) root level cause for most people.

My guess (although I'm not sure) is that in Critch's case working with CFAR participants he was filtering on people who were at least moderately strategic, and it turned out there was a third blocker.

comment by sanyer (santeri-koivula) · 2024-02-15T22:04:15.022Z · LW(p) · GW(p)

Filtering for "people who can afford to pay for a workshop" works pretty well.

This is surprising to me. It seems to assume income is just based on general competence, which doesn't seem true to me. There are a lot of people who seem to have these traits who would find it really difficult to pay for this, and vice versa

Replies from: fbahnson@gmail.com, CronoDAS↑ comment by Frederic Bahnson (fbahnson@gmail.com) · 2024-02-23T22:43:42.727Z · LW(p) · GW(p)

The filtering described here seems moderately specific but not sensitive, whether or not you agree with the "income implies competence" relationship being strong.

It seems true that those who are interested in and can pay for a $4k course of this type are more likely to have 17 of the attributes in question than a person picked at random from the population. However, the filter tells you nothing about, and completely excludes, a large number of people who would fit the "have 17 of these attributes" criteria but not the "have $4k to spend on a course or the time to take it" criteria.

The filter allows in a population of people with above-average chances of meeting the attribute criteria, but blocks a large and unknown number of other people who would also meet those criteria.

It is potentially good for creating a desired environment in the course (having mostly people with a lot of the desired attributes), but is not a good filter for identifying the much larger population of people who might be interested in and benefitted by the course (as described in the article as having 17 of the attributes and therefore capable of picking up the other two).
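
The distinction drawn above can be made concrete with a small confusion-matrix sketch. Every count below is hypothetical and chosen only to illustrate the shape of the argument; none of it is data about CFAR or its workshops.

```python
# Hypothetical counts, for illustration only.
population = 10_000        # people the filter could in principle reach
have_attributes = 500      # people with 17 of the attributes
pass_filter = 100          # people who can pay and attend
pass_and_have = 60         # attendees who also have the attributes

true_pos = pass_and_have
false_pos = pass_filter - pass_and_have
false_neg = have_attributes - pass_and_have
true_neg = population - have_attributes - false_pos

sensitivity = true_pos / (true_pos + false_neg)   # share of qualified people let in
specificity = true_neg / (true_neg + false_pos)   # share of unqualified people kept out
precision = true_pos / (true_pos + false_pos)     # share of attendees who qualify
base_rate = have_attributes / population

print(sensitivity, round(specificity, 3), precision, base_rate)
```

With these made-up numbers the room is 60% qualified against a 5% base rate, which is the "desired environment" effect, while 88% of the qualified people never make it through the filter.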

Replies from: Raemon↑ comment by Raemon · 2024-02-24T23:42:39.328Z · LW(p) · GW(p)

Nod. I'm not actually particularly attached to this point nor think $4000 is necessarily the right amount to get the filtering effect if you're aiming for that. I do think this approach is insufficient for me because the people I most hope to intervene on with my own rationality training are college students, who don't yet have enough income for this approach to work.

But, also, well, you do need some kind of filter.

Speaking for myself, not sure what Critch would say:

There seems like some kind of assumption here that "if we didn't filter out people unnecessarily, we'd be able to help more people." But, I think the throughput of people-who-can-be-helped here is quite small. I think it's not possible to scale this sort of org to help thousands of people per year without compromising the org.

(In general in education, there is a problem where educational interventions work initially, because the educators are invested, and have a nuanced understanding of what they're trying to accomplish. But, when they attempt to scale, they get worse teachers who are less invested and have a less deep understanding of the methodology, because conveying the knowledge is hard.)

So, I think it's more like "there is a smallish number of people this sort of process/org would be able to help. There are going to be thousands/millions of people who could be helped, but you don't have time to help them all." That's sort of baked in.

So, it's not necessarily "a problem" from my perspective if this filters out people who I'd have liked to have helped, so long as the program successfully outputs people who go on to be much more effective. (Past me would be more sad about that, but it's something I've already grieved [LW · GW])

I do think it's important (and plausible) that this creates some kinds of distortions in which people you're selecting on, and that (in aggregate) those distortions add up. But that's a somewhat different argument from what you and sanyer presented.

But, still, ultimately the question is "okay, what sort of filtering mechanism do you have in mind, and how well does it work?".

comment by Unreal · 2024-02-27T20:08:37.510Z · LW(p) · GW(p)

Rationality seems to be missing an entire curriculum on "Eros" or True Desire.

I got this curriculum from other trainings, though. There are places where it's hugely emphasized and well-taught.

I think maybe Rationality should be more open to sending people to different places for different trainings and stop trying to do everything on its own terms.

It has been way better for me to learn how to enter/exit different frames and worldviews than to try to make everything fit into one worldview / frame. I think some Rationalists believe everything is supposed to fit into one frame, but Frames != The Truth.

The world is extremely complex, and if we want to be good at meeting the world, we should be able to pick up and drop frames as needed, at will.

Anyway, there are three main curricula:

- Eros (Embodied Desire)

- Intelligence (Rationality)

- Wisdom (Awakening)

Maybe you guys should work on 2, but I don't think you are advantaged at 1 or 3. But you could give intros to 1 and 3. CFAR opened me up by introducing me to Focusing and Circling, but I took non-rationalist trainings for both of those. As well as many other things that ended up being important.

Replies from: Morpheus, Raemon, meedstrom↑ comment by meedstrom · 2025-01-11T13:53:23.747Z · LW(p) · GW(p)

I think some Rationalists believe everything is supposed to fit into one frame, but Frames != The Truth. [...] we should be able to pick up and drop frames as needed, at will.

Aye - see also In Praise of Fake Frameworks [LW · GW]. It's helped me interface with a lot of people that would've otherwise befuddled me. That gives me a more fleshed-out range of possible perspectives on things, which shortcuts to new knowledge.

But perhaps it's worth thinking twice when or at least how to introduce this skill, because it looks like a method of doing Salvage Epistemology [LW · GW] and so could invite its downsides if taught poorly. I'm undecided whether that's worth worrying about.

comment by Paweł Sysiak (pawel-sysiak) · 2024-02-27T02:15:35.431Z · LW(p) · GW(p)

What specifically does the author mean by lack of numeracy skills?

comment by Adam Zerner (adamzerner) · 2024-02-17T08:53:32.437Z · LW(p) · GW(p)

If your personality type is "writing doesn't work for me", one of your biggest bottlenecks is to make writing work for you.

Thanks for the reminder here. I've thought [LW · GW] a lot in the past about the value of writing, but for whatever reason I feel like I've drifted away from writing. I think I should spend more time writing and am feeling motivated to start doing so now.

comment by Martín Soto (martinsq) · 2024-02-24T22:16:08.904Z · LW(p) · GW(p)

This is pure gold, thanks for sharing!

comment by Kajus · 2024-02-19T12:54:27.904Z · LW(p) · GW(p)

You once told me that there were ~20 things a person needed to be generally competent. What were they?

I'm not sure I had an exact list, but I'll try to generate one now and see how many things are on it:

- Numeracy

"What surprised you most during your time at CFAR?

Surprise 1: People are profoundly non-numerate.

And, people who are not profoundly non-numerate still fail to connect numbers to life.

This surprised me a lot, because I was told (by somebody who read CFAR handbook) that CFAR isn't mostly about numeracy, and I've never heard about skills involving number crunching from people who went to CFAR workshops. Didn't they just fail to update on that? If that's the most important skill, shouldn't we have a new CFAR who teaches numeracy, reading and writing?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-02-19T23:05:47.928Z · LW(p) · GW(p)

I think the key issue here is that CFAR workshops were optimized around being 4 days long. I think teaching someone numeracy in 4 days is very hard, and the kind of things you end up being able to convey look different (and still pretty valuable, but I do think they end up missing a large fraction of the art of rationality).

Replies from: Kajus↑ comment by Kajus · 2024-02-20T11:28:34.551Z · LW(p) · GW(p)

That makes sense, but it should've been at least mentioned somewhere that they think they aren't teaching the most important skills, and they think that numeracy is more important. The views expressed in the post might not be views of the whole CFAR staff.

comment by Review Bot · 2024-02-14T18:51:03.570Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Roko · 2024-03-07T16:59:59.762Z · LW(p) · GW(p)

Ability to go sleep... Sleep deprivation is one of the greatest effect sizes for IQ

Interesting!

Being alive is so much fun. Sleep is death, I don't want to sleep!

Replies from: meedstrom↑ comment by meedstrom · 2025-01-11T14:50:19.142Z · LW(p) · GW(p)

Basically agree, but not a useful comment.

I'd nuance that: being alive and energetic is fun -- but when my body no longer grants energy, it's like death already. Say I'm trying to take notes about the content of this thread, but I'm so tired I barely produce anything. If the terms of my body are such that I must first do a timeskip to tomorrow to get more energy, then I want the timeskip.

I guess I understand becoming sleep-deprived and staying up anyway if you don't notice your IQ dropping...