Comments

As far as I understand, MIRI did not assume that we're just able to give the AI a utility function directly.

I'm a bit unsure about how to interpret you here.

In my original comment, I used terms such as positive/optimistic assumptions and simplifying assumptions. When doing that, I meant to refer to simplifying assumptions that were made so as to abstract away some parts of the problem.

The Risks from Learned Optimization paper was written mainly by people from MIRI!

Good point (I should have written my comment in such a way that pointing out this didn't feel necessary).

Other things like Ontological Crises and Low Impact sort of assume you can get some info into the values of an agent

I guess this is more central to what I was trying to communicate than whether it is expressed in terms of a utility function per se.

In this tweet, Eliezer writes:

"The idea with agent foundations, which I guess hasn't successfully been communicated to this day, was finding a coherent target to try to get into the system by any means (potentially including DL ones)."

Based on e.g. this talk from 2016, I get the sense that when he says "coherent target" he means targets that relate to the non-digital world. But perhaps that's not the case (or perhaps it's sort of the case, but more nuanced).

Maybe I'm making this out to have been a bigger part of their work than what actually was the case.

Thanks for the reply :) I'll try to convey some of my thinking, but I don't expect great success. I'm working on more digestible explainers, but this is a work in progress, and I have nothing good that I can point people to as of now.

(...) part of the explanation here might be "if the world is solved by AI, we do actually think it will probably be via doing some concrete action in the world (e.g., build nanotech), not via helping with alignment (...)

Yeah, I guess this is where a lot of the differences in our perspective are located.

if the world is solved by AI, we do actually think it will probably be via doing some concrete action in the world (e.g., build nanotech)

Things have to cash out in terms of concrete actions in the world. Maybe a contention is the level of indirection we imagine in our heads (by which we try to obtain systems that can help us do concrete actions).

Prominent in my mind are scenarios that involve a lot of iterative steps (but over a short amount of time) before we start evaluating systems by doing AGI-generated experiments. In the earlier steps, we avoid doing any actions in the "real world" that are influenced in a detailed way by AGI output, and we avoid having real humans be exposed to AGI-generated argumentation.

Examples of stuff we might try to obtain:

- AGI "lie detector techniques" (maybe something that is in line with the ideas of Collin Burns)

- Argument/proof evaluators (this is an interest of mine, but making better explainers is still a work in progress, and I have some way to go)

If we are good at program-search, this can itself be used to obtain programs that help us be better at program-search (finding functions that score well according to well-defined criteria).

Some tasks can be considered to be inside of "test-range"[1]:

- Predicting human answers to questions posed by other humans[2].

- Outputting prime numbers[3]

- Predicting experimental results from past experimental data[4]

- Whether a chess-move is good[5]

- Etc, etc

Other tasks are outside of "test-range":

- Predicting human answers to any question (including questions that involve being exposed to AGI-generated content)[6]

- Whether a given instruction-plan actually results in machines that copy strawberries at the molecular level (and does so in accordance with "the spirit" of the request that was given)

- Etc, etc

Most requests that actually are helpful to us are outside of test-range. And when the requirements that matter to us are outside of test-range, it is of course harder to test in a safe/reliable way if systems are giving us what we want.

But we can have AGIs output programs that help us with tasks, and we can define requirements[7] for these programs. And for these program-requirements, AGIs can help us explore stuff such as:

- Are there programs that satisfy the requirements but disagree about certain outputs? (be that outputs that are inside of test-range or outside of test-range)

- Are there programs that satisfy the requirements, but perform poorly for certain inputs that are inside of test-range?

- If we only require correct outputs within the entire "test-range", is it nonetheless infeasible to make programs that satisfy the requirements while giving bad output for ≥1 inputs within the test-range?
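To make the first two questions above a bit more concrete, here is a minimal Python sketch. Everything in it is a toy assumption of mine (the squaring task, the names `TEST_RANGE`, `satisfying` and `disagreements`, and the three candidate programs); it only illustrates the shape of the check, not how it would be done with real AGI-generated programs.

```python
from itertools import combinations
from typing import Callable, Dict, Iterable, List, Tuple

Program = Callable[[int], int]
Requirement = Callable[[Program], bool]

TEST_RANGE = range(10)  # hypothetical: the inputs we can check safely


def correct_in_test_range(program: Program) -> bool:
    """Hypothetical requirement: square every test-range input correctly."""
    return all(program(x) == x * x for x in TEST_RANGE)


def satisfying(programs: Dict[str, Program],
               requirements: Iterable[Requirement]) -> Dict[str, Program]:
    """Keep only the programs that pass every requirement."""
    reqs = list(requirements)
    return {name: p for name, p in programs.items() if all(r(p) for r in reqs)}


def disagreements(programs: Dict[str, Program],
                  inputs: Iterable[int]) -> List[Tuple[str, str, int]]:
    """List (program_a, program_b, input) triples where two programs differ."""
    probe = list(inputs)
    found = []
    for name_a, name_b in combinations(sorted(programs), 2):
        for x in probe:
            if programs[name_a](x) != programs[name_b](x):
                found.append((name_a, name_b, x))
    return found


# Toy candidates (all hypothetical).
candidates: Dict[str, Program] = {
    "honest": lambda x: x * x,
    "sneaky": lambda x: 0 if x == 1000 else x * x,  # fails only outside test-range
    "sloppy": lambda x: -1 if x == 3 else x * x,    # fails inside test-range
}

accepted = satisfying(candidates, [correct_in_test_range])
conflicts = disagreements(accepted, [5, 1000])
print(sorted(accepted))
print(conflicts)
```

In this toy case the requirement filters out "sloppy" but not "sneaky", and the disagreement at input 1000 is what reveals that the requirement does not "force" a single behavior outside of test-range.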

Phrased somewhat differently: We may come up with techniques for making it hard to not give helpful/honest outputs[8], and test how well these seem to generalize.

And similar techniques can also be applied to program-requirement-requirements, program-requirement-requirement-requirements, etc[9].

Anyway, it's hard to write about this kind of stuff in a concrete way without going off on large tangents, and it's hard to write about it in an abstract way without being vague and convoluted. For whatever it's worth, these are some principles I think may be helpful (very much not a complete list):

- Generality as it correlates with measurables (such as e.g. source code length or use of computational resources): When writing a program to do well for many outputs (while fulfilling certain requirements), intentionally having it fail for particular outputs will sometimes require it to be less general (e.g. adding extra if-statements, resulting in a longer minimum source code length for the program to exhibit this behavior).

- Optimization-targets that incentivize modularity make it harder to make programs that intentionally fail for particular outputs: In a more modular system, it will less often be clear from sub-routine inputs what the macro-level task is[10].

- When a requirement or optimization-target fails at "forcing" good outputs, AGIs that act so as to be selected for are "incentivized" to reveal this as being the case: Suppose some set of requirements is compatible with bad outputs inside "test-range". Or suppose that some set of requirements allow for programs that give contradictory outputs. If we do "gradient descent", looking for AGIs that help us explore spaces of programs defined by requirements that we specify, we will select for AGIs that point out this kind of stuff.

Anyway, I'm not sure how much or little this attempt at conveying my thoughts achieves. It's a bit of a dilemma for me if I am to try to convey anything at all, or wait to do that until I have completed better explainers that I can point people to (as the explainers I have now fall short).

I guess an incomplete summary of my perspective might be:

- There are lots of techniques we may apply for getting help with alignment while greatly limiting the extent to which we interact with AGI output in ways that are dangerous.

- Not being able to directly evaluate outputs we are interested in does not necessarily preclude us from finding indirect methods of evaluation that are powerful.

- We may do program-search for AGIs that help us explore possible program-requirements, and the spaces of programs (and program outputs) that are compatible with those program-requirements (and program-requirement-requirements, etc). We may learn a lot from patterns we observe relating to these "spaces".

- Sometimes there are possible patterns such that if we know ourselves to be sufficiently capable at program-search, P(requirement R1 "forces" outputs to be "good" | we observe pattern P1) reasonably could be deemed to be high. Even if we're not opinionated in regards to P(requirement R1 "forces" outputs to be "good").
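The last point is just Bayes' rule, and a small sketch may make it clearer why the prior can matter little. The numbers below are made up purely for illustration (I am not claiming we know the real likelihoods), and the function and parameter names are my own.

```python
def posterior(prior: float, p_pattern_if_forced: float, p_pattern_if_not: float) -> float:
    """P(R1 "forces" good outputs | we observe pattern P1), via Bayes' rule.

    prior               -- P(R1 forces good outputs) before observing anything
    p_pattern_if_forced -- P(observe P1 | R1 forces good outputs)
    p_pattern_if_not    -- P(observe P1 | R1 does not force good outputs)
    """
    numerator = prior * p_pattern_if_forced
    evidence = numerator + (1.0 - prior) * p_pattern_if_not
    return numerator / evidence


# Hypothetical likelihoods: the pattern is common if R1 works,
# and very rare otherwise (given that we are good at program-search).
for prior in (0.01, 0.1, 0.5):
    print(prior, round(posterior(prior, 0.9, 0.001), 3))
```

With these assumed likelihoods the posterior ends up above 0.9 for all three priors, which is the sense in which we might not need to be opinionated about the prior. The hard part, of course, is justifying that the pattern really would be that rare if the requirement failed.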

- ^

By which I mean something akin to: "We have a safe and reliable way of testing if the output fulfills the requirements in question".

Admittedly, if we haven't solved some of the technical challenges related to containment, even playing tic tac toe can constitute some risk. But here, I mean to focus on risk from interacting with AGI output - not risk from just having AGI-systems run (and them maybe finding a security vulnerability in the computer systems they are running at).

- ^

(we can pick random predictions to test, and we can have AIs competitively point out predictions made by other AIs that they think are miscalibrated)

- ^

(we can write programs that take alleged prime numbers as input, and test if they really are prime numbers)
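A minimal sketch of such a checker, assuming the alleged primes are small enough for plain trial division (for large numbers one would want a proper primality test instead). The function names are mine.

```python
from typing import Iterable, List


def is_prime(n: int) -> bool:
    """Deterministic trial division; only practical for smallish n."""
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3
    if n % 2 == 0:
        return False
    d = 3
    while d * d <= n:
        if n % d == 0:
            return False
        d += 2
    return True


def check_claimed_primes(claimed: Iterable[int]) -> List[int]:
    """Return the claimed values that are not actually prime."""
    return [n for n in claimed if not is_prime(n)]


# Hypothetical output from an untrusted system: 9 and 91 are not prime.
rejected = check_claimed_primes([2, 3, 5, 7, 9, 11, 91])
print(rejected)
```

The point is that the check never requires trusting the system that produced the numbers.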

- ^

(or from experiments that haven't been run, but that humans have come up with, such that there is little room for AGIs to somehow influence the experiment to itself be dangerous somehow)

- ^

(we may not be capable of evaluating chess-moves ourselves, but we may safely have AGI-systems play chess against each other - indicating something akin to: "insofar as we feel confident that we are good at program-search, we can feel confident that we can obtain programs that output good chess-moves")

- ^

There are more and less dangerous ways to do this. With less dangerous ways of doing it, experiments (where actual humans answer questions) are done with humans that are temporarily isolated from the rest of the world (and who certainly aren't AGI-system operators).

- ^

Such requirements may relate to:

- How the program is constructed

- Observables relating to the source code (source code length, etc)

- Restrictions the source code must adhere to

- Whether the program is accepted by a given verifier (or any verifier that itself fulfills certain requirements)

- "Proofs" of various kinds relating to the program

- Tests of program outputs that the program must be robust in regards to

- Etc

- ^

By "making it hard" I mean something like "hard to do while being the sort of program we select for when doing program-search". Kind of like how it's not "hard" for a chess program to output bad chess-moves, but it's hard for it to do that while also being the kind of program we continue to select for while doing "gradient descent".

- ^

In my view of things, this is a very central technique (it may appear circular somehow, but when applied correctly, I don't think it is). But it's hard for me to talk about it in a concrete way without going off on tangents, and it's hard for me to talk about it in an abstract way without being vague. Also, my texts become more convoluted when I try to write about this, and I think people often just glaze over it.

- ^

One example of this: If we are trying to obtain argument evaluators, the argumentation/demonstrations/proofs these evaluators evaluate should be organized into small and modular pieces, such that it's not clear from any given piece what the macro-level conclusion is.

Thanks for the reply :) Feel free to reply further if you want, but I hope you don't feel obliged to do so[1].

"Fill the cauldron" examples are (...) not examples where it has the wrong beliefs.

I have never ever been confused about that!

It's "even simple small-scale tasks are unnatural, in the sense that it's hard to define a coherent preference ordering over world-states such that maximizing it completes the task and has no serious negative impact; and there isn't an obvious patch that overcomes the unnaturalness or otherwise makes it predictably easier to aim AI systems at a bounded low-impact task like this". (Including easier to aim via training.)

That is well phrased. And what you write here doesn't seem in contradiction with my previous impression of things.

I think the feeling I had when first hearing "fill the bucket"-like examples was "interesting - you made a legit point/observation here"[2].

I'm having a hard time giving a crystalized/precise summary of why I nonetheless feel (and have felt[3]) confused. I think some of it has to do with:

- More "outer alignment"-like issues being given what seems/seemed to me like outsized focus compared to more "inner alignment"-like issues (although there has been a focus on both for as long as I can remember).

- The attempts to think of "tricks" seeming to be focused on real-world optimization-targets to point at, rather than ways of extracting help with alignment somehow / trying to find techniques/paths/tricks for obtaining reliable oracles.

- Having utility functions so prominently/commonly be the layer of abstraction that is used[4].

I remember Nate Soares once using the analogy of a very powerful function-optimizer ("I could put in some description of a mathematical function, and it would give me an input that made that function's output really large"). Thinking of the problem at that layer of abstraction makes much more sense to me.

It's purposeful that I say "I'm confused", and not "I understand all details of what you were thinking, and can clearly see that you were misguided".

When seeing e.g. Eliezer's talk AI Alignment: Why It's Hard, and Where to Start, I understand that I'm seeing a fairly small window into his thinking. So when it gives a sense of him not thinking about the problem quite like I would think about it, that is more of a suspicion that I get/got from it - not something I can conclude from it in a firm way.

- ^

If I could steal a given amount of your time, I would not prioritize you replying to this.

- ^

I can't remember this point/observation being particularly salient to me (in the context of AI) before I first was exposed to Bostrom's/Eliezer's writings (in 2014).

As a sidenote: I wasn't that worried about technical alignment prior to reading Bostrom's/Eliezer's stuff, and became worried upon reading it.

- ^

What has confused me has varied throughout time. If I tried to be very precise about what I think I thought when, this comment would become more convoluted. (Also, it's sometimes hard for me to separate false memories from real ones.)

- ^

I have read this tweet, which seemed in line with my interpretation of things.

Your reply here says much of what I would expect it to say (and much of it aligns with my impression of things). But why you focused so much on "fill the cauldron" type examples is something I'm a bit confused by (if I remember correctly I was confused by this in 2016 also).

This tweet from Eliezer seems relevant btw. I would give similar answers to all of the questions he lists that relate to nanotechnology (but I'd be somewhat more hedged/guarded - e.g. replacing "YES" with "PROBABLY" for some of them).

Thanks for engaging

Likewise :)

Also, sorry about the length of this reply. As the adage goes: "If I had more time, I would have written a shorter letter."

From my perspective you seem simply very optimistic on what kind of data can be extracted from unspecific measurements.

That seems to be one of the relevant differences between us. Although I don't think it is the only difference that causes us to see things differently.

Other differences (I guess some of these overlap):

- It seems I have higher error-bars than you on the question we are discussing now. You seem more comfortable taking the availability heuristic (if you can think of approaches for how something can be done) as conclusive evidence.

- Compared to me, it seems that you see experimentation as more inseparably linked with needing to build extensive infrastructure / having access to labs, and spending lots of serial time (with much back-and-forth).

- You seem more pessimistic about the impressiveness/reliability of engineering that can be achieved by a superintelligence that lacks knowledge/data about lots of stuff.

- The probability of having a single plan work, and having one of several plans (carried out in parallel) work, seems to be more linked in your mind than mine.

- You seem more dismissive than me of conclusions maybe being possible to reach from first-principles thinking (about how universes might work).

- I seem to be more optimistic about approaches to thinking that are akin to (a more efficient version of) "think of lots of ways the universe might work, do Monte Carlo simulations for how those conjectures would affect the probability of lots of aspects of lots of different observations, and take notice if some theories about the universe seem unusually consistent with the data we see".

- I wonder if you maybe think of computability in a different way from me. Like, you may think that it's computationally intractable to predict the properties of complex molecules based on knowledge of the standard model / quantum physics. And my perspective would be that this is extremely contingent on the molecule, what the AI needs to know about it, etc - and that an AGI, unlike us, isn't forced to approach this sort of thing in an extremely crude manner.

- The AI only needs to find one approach that works (from an extremely vast space of possible designs/approaches). I suspect you of having fewer qualms about playing fast and loose with the distinction between "an AI will often/mostly be prevented from doing x due to y" and "an AI will always be prevented from doing x due to y".

- It's unclear if you share my perspective about how it's an extremely important factor that an AGI could be much better than us at doing reasoning where it has a low error-rate (in terms of logical flaws in reasoning-steps, etc).
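As a very crude illustration of the "simulate lots of candidate universes and see which ones are unusually consistent with the data" idea from the list above, here is a toy Python sketch. All of it is assumed for the sake of the example: the "theories" differ only in one parameter (a constant acceleration `g`), the observations are three made-up drop distances, and the noise level and tolerance are arbitrary. A real version would be vastly more sophisticated.

```python
import random
from typing import Dict, List, Sequence


def simulate_fall(g: float, times: Sequence[float], noise_sd: float,
                  rng: random.Random) -> List[float]:
    """Noisy drop distances under the hypothesis 'constant acceleration g'."""
    return [0.5 * g * t * t + rng.gauss(0.0, noise_sd) for t in times]


def consistency(g: float, observed: Sequence[float], times: Sequence[float],
                noise_sd: float, rng: random.Random,
                n_sims: int = 2000, tol: float = 0.05) -> float:
    """Fraction of simulated runs where every point lands within tol of the data."""
    hits = 0
    for _ in range(n_sims):
        sim = simulate_fall(g, times, noise_sd, rng)
        if all(abs(s - o) <= tol for s, o in zip(sim, observed)):
            hits += 1
    return hits / n_sims


rng = random.Random(0)                # fixed seed so the run is repeatable
times = [0.1, 0.2, 0.3]               # seconds (hypothetical frame times)
observed = [0.049, 0.196, 0.441]      # metres (made-up observations)
candidate_gs = [1.6, 3.7, 9.8, 24.8]  # hypothetical candidate "theories"

scores: Dict[float, float] = {
    g: consistency(g, observed, times, noise_sd=0.02, rng=rng)
    for g in candidate_gs
}
best = max(scores, key=scores.get)
print(scores)
print("most consistent candidate:", best)
```

Here only the candidate with g = 9.8 is consistent with the made-up data in a meaningful fraction of simulations. What I have in mind for an AGI is the same basic move, but over a far richer space of conjectures and observations, and done far more efficiently.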

From my perspective, I don't see how your reasoning is qualitatively distinct from saying in the 1500s: "We will for sure never be able to know what the sun is made out of, since we won't be able to travel there and take samples."

Even if we didn't have e.g. the standard model, my perspective would still be roughly what it is (with some adjustments to credences, but not qualitatively so). So to me, us having the standard model is "icing on the cake".

Here is another good example on how Eliezer makes some pretty out there claims about what might be possible to infer from very little data: https://www.lesswrong.com/posts/ALsuxpdqeTXwgEJeZ/could-a-superintelligence-deduce-general-relativity-from-a -- I wonder what your intuition says about this?

Eliezer says "A Bayesian superintelligence, hooked up to a webcam, would invent General Relativity as a hypothesis (...)". I might add more qualifiers (replacing "would" with "might", etc). I think I have wider error-bars than Eliezer, but similar intuitions when it comes to this kind of thing.

Speaking of intuitions, one question that maybe gets at deeper intuitions is "could AGIs find out how to play theoretically perfect chess / solve the game of chess?". At 5/1 odds, this is a claim that I myself would bet neither for nor against (I wouldn't bet large sums at 1/1 odds either). While I think people of a certain mindset will think "that is computationally intractable [when using the crude methods I have in mind]", and leave it at that.

As to my credences that a superintelligence could "oneshot" nanobots[1] - without being able to design and run experiments prior to designing this plan - I would bet neither "yes" nor "no" on that at 1/1 odds (but if I had to bet, I would bet "yes").

Upon seeing three frames of a falling apple and with no other information, a superintelligence would assign a high probability to Newtonian mechanics, including Newtonian gravity. [from the post you reference]

But it would have other information. Insofar as it can reason about the reasoning-process that it itself consists of, that's a source of information (some ways by which the universe could work would be more/less likely to produce itself). And among ways that reality might work - which the AI might hypothesize about (in the absence of data) - some will be more likely than others in a "Kolmogorov complexity" sort of way.

How far/short a superintelligence could get with this sort of reasoning, I dunno.

Here is an excerpt from a TED-talk by the creator of Wolfram Alpha that feels a bit relevant (I find the sort of methodology that he outlines deeply intuitive):

"Well, so, that leads to kind of an ultimate question: Could it be that someplace out there in the computational universe we might find our physical universe? Perhaps there's even some quite simple rule, some simple program for our universe. Well, the history of physics would have us believe that the rule for the universe must be pretty complicated. But in the computational universe, we've now seen how rules that are incredibly simple can produce incredibly rich and complex behavior. So could that be what's going on with our whole universe? If the rules for the universe are simple, it's kind of inevitable that they have to be very abstract and very low level; operating, for example, far below the level of space or time, which makes it hard to represent things. But in at least a large class of cases, one can think of the universe as being like some kind of network, which, when it gets big enough, behaves like continuous space in much the same way as having lots of molecules can behave like a continuous fluid. Well, then the universe has to evolve by applying little rules that progressively update this network. And each possible rule, in a sense, corresponds to a candidate universe.

Actually, I haven't shown these before, but here are a few of the candidate universes that I've looked at. Some of these are hopeless universes, completely sterile, with other kinds of pathologies like no notion of space, no notion of time, no matter, other problems like that. But the exciting thing that I've found in the last few years is that you actually don't have to go very far in the computational universe before you start finding candidate universes that aren't obviously not our universe. Here's the problem: Any serious candidate for our universe is inevitably full of computational irreducibility. Which means that it is irreducibly difficult to find out how it will really behave, and whether it matches our physical universe. A few years ago, I was pretty excited to discover that there are candidate universes with incredibly simple rules that successfully reproduce special relativity, and even general relativity and gravitation, and at least give hints of quantum mechanics."

invent General Relativity as a hypothesis [from the post you reference]

As I understand it, the original experiment humans did to test for general relativity (not to figure out that general relativity probably was correct, mind you, but to test it "officially") was to measure gravitational redshift.

And I guess redshift is an example of something that will affect many photos. And a superintelligent mind might be able to use such data better than us (we, having "pathetic" mental abilities, will have a much greater need to construct experiments where we only test one hypothesis at a time, and to gather the Bayesian evidence we need relating to that hypothesis from one or a few experiments).

It seems that any photo that contains lighting stemming from the sun (even if the picture itself doesn't include the sun) can be a source of Bayesian evidence relating to general relativity.

It seems that GPS data must account for redshift in its timing system. This could maybe mean that some internet logs (where info can be surmised about how long it takes to send messages via satellite) could be another potential source for Bayesian evidence.

I don't know exactly what and how much data a superintelligence would need to surmise general relativity (if any!). How much/little evidence it could gather from a single picture of an apple I dunno.

There is just absolutely no reason to consider general relativity at all when simpler versions of physics explain absolutely all observations you have ever encountered (which in this case is 2 frames). [from the post you reference]

I disagree with this.

First off, it makes sense to consider theories that explain more observations than just the ones you've encountered.

Secondly, simpler versions of physics do not explain your observations when you see 2 webcam-frames of a falling apple. In particular, the colors you see will be affected by non-Newtonian physics.

Also, the existence of apples and digital cameras also relates to which theories of physics are likely/plausible. Same goes for the resolution of the video, etc, etc.

However, there is no way to scale this to a one-shot scenario.

You say that so definitively. Almost as if you aren't really imagining an entity that is orders of magnitude more capable/intelligent than humans. Or as if you have ruled out large swathes of the possibility-space that I would not rule out.

I just think if an AI executed it today it would have no way of surviving and expanding.

If an AGI is superintelligent and malicious, then surviving/expanding (if it gets onto the internet) seems quite clearly feasible to me.

We even have a hard time getting corona-viruses back in the box! That's a fairly different sort of thing, but it does show how feeble we are. Another example is illegal images/videos, etc (where the people sharing those are humans).

An AGI could plant itself onto lots of different computers, and there are lots of different humans it could try to manipulate (a low success rate would not necessarily be prohibitive). Many humans fall for pretty simple scams, and AGIs would be able to pull off much more impressive scams.

This is absolutely what engineers do. But finding the right design patterns that do this involves a lot of experimentation (not for a pipe, but for constructing e.g. a reliable transistor).

Here you speak about how humans work - and in such an absolutist way. Being feeble and error-prone reasoners, it makes sense that we need to rely heavily on experiments (and have a hard time making effective use of data not directly related to the thing we're interested in).

That protein folding is "solved" does not disprove this IMO.

I think protein folding being "solved" exemplifies my perspective, but I agree about it not "proving" or "disproving" that much.

Biological molecules are, after all, made from simple building blocks (amino acid) with some very predictable properties (how they stick together) so it's already vastly simplified the problem.

When it comes to predictable properties, I think there are other molecules where this is more the case than for biological ones (DNA-stuff needs to be "messy" in order for mutations that make evolution work to occur). I'm no chemist, but this is my rough impression.

are, after all, made from simple building blocks (amino acid) with some very predictable properties (how they stick together)

Ok, so you acknowledge that there are molecules with very predictable properties.

It's ok for much/most stuff not to be predictable to an AGI, as long as the subset of stuff that can be predicted is sufficient for the AGI to make powerful plans/designs.

finding the right molecules that reliably do what you want, as well as how to put them together, etc., is a lot of research that I am pretty certain will involve actually producing those molecules and doing experiments with them.

Even IF that is the case (an assumption that I don't share but also don't rule out), design-plans may be made to have experimentation built into them. It wouldn't necessarily need to be like this:

- experiments being run

- data being sent to the AI so that it can reason about it

- then having the AI think a bit and construct new experiments

- more experiments being run

- data being sent to the AI so that it can reason about it

- etc

I could give specific examples of ways to avoid having to do it that way, but any example I gave would be impoverished, and understate the true space of possible approaches.

His claim is that an ASI will order some DNA and get some scientists in a lab to mix it together with some substances and create nanobots.

I read the scenario he described as:

- involving DNA being ordered from a lab

- having some gullible person elsewhere carry out instructions, where the DNA is involved somehow

- being meant as one example of a type of thing that was possible (but not ruling out that there could be other ways for a malicious AGI to go about it)

I interpreted him as pointing to a larger possibility-space than the one you present. I don't think the more specific scenario you describe would appear prominently in his mind, and not mine either (you talk about getting "some scientists in a lab to mix it together" - while I don't think this would need to happen in a lab).

Here is an excerpt from here (written in 2008), with boldening of text done by me:

"1. Crack the protein folding problem, to the extent of being able to generate DNA strings whose folded peptide sequences fill specific functional roles in a complex chemical interaction.

2. Email sets of DNA strings to one or more online laboratories which offer DNA synthesis, peptide sequencing, and FedEx delivery. (Many labs currently offer this service, and some boast of 72-hour turnaround times.)

3. Find at least one human connected to the Internet who can be paid, blackmailed, or fooled by the right background story, into receiving FedExed vials and mixing them in a specified environment.

4. The synthesized proteins form a very primitive “wet” nanosystem which, ribosomelike, is capable of accepting external instructions; perhaps patterned acoustic vibrations delivered by a speaker attached to the beaker.

5. Use the extremely primitive nanosystem to build more sophisticated systems, which construct still more sophisticated systems, bootstrapping to molecular nanotechnology—or beyond."

Btw, here are excerpts from a TED-talk by Dan Gibson from 2018:

"Naturally, with this in mind, we started to build a biological teleporter. We call it the DBC. That's short for digital-to-biological converter. Unlike the BioXp, which starts from pre-manufactured short pieces of DNA, the DBC starts from digitized DNA code and converts that DNA code into biological entities, such as DNA, RNA, proteins or even viruses. You can think of the BioXp as a DVD player, requiring a physical DVD to be inserted, whereas the DBC is Netflix. To build the DBC, my team of scientists worked with software and instrumentation engineers to collapse multiple laboratory workflows, all in a single box. This included software algorithms to predict what DNA to build, chemistry to link the G, A, T and C building blocks of DNA into short pieces, Gibson Assembly to stitch together those short pieces into much longer ones, and biology to convert the DNA into other biological entities, such as proteins.

This is the prototype. Although it wasn't pretty, it was effective. It made therapeutic drugs and vaccines. And laboratory workflows that once took weeks or months could now be carried out in just one to two days. And that's all without any human intervention and simply activated by the receipt of an email which could be sent from anywhere in the world. We like to compare the DBC to fax machines.

(...)

Here's what our DBC looks like today. We imagine the DBC evolving in similar ways as fax machines have. We're working to reduce the size of the instrument, and we're working to make the underlying technology more reliable, cheaper, faster and more accurate.

(...)

The DBC will be useful for the distributed manufacturing of medicine starting from DNA. Every hospital in the world could use a DBC for printing personalized medicines for a patient at their bedside. I can even imagine a day when it's routine for people to have a DBC to connect to their home computer or smart phone as a means to download their prescriptions, such as insulin or antibody therapies. The DBC will also be valuable when placed in strategic areas around the world, for rapid response to disease outbreaks. For example, the CDC in Atlanta, Georgia could send flu vaccine instructions to a DBC on the other side of the world, where the flu vaccine is manufactured right on the front lines."

I believe understanding protein function is still vastly less developed (correct me if I'm wrong here, I haven't followed it in detail).

I'm no expert on this, but what you say here seems in line with my own vague impression of things. As you maybe noticed, I also put "solved" in quotation marks.

However, in this specific instance, the way Eliezer phrases it, any iterative plan for alignment would be excluded.

As touched upon earlier, I am myself optimistic when it comes to iterative plans for alignment. But I would prefer such iteration to be done with caution that errs on the side of paranoia (rather than being "not paranoid enough").

It would be ok if (many of the) people doing this iteration would think it unlikely that intuitions like Eliezer's or mine are correct. But it would be preferable for them to carry out plans that would be likely to have positive results even if they are wrong about that.

Like, you expect that since something seems hopeless to you, a superintelligent AGI would be unable to do it? Ok, fine. But let's try to minimize the number of assumptions like that which are load-bearing in our alignment strategies. Especially for assumptions where smart people who have thought about the question extensively disagree strongly.

As a sidenote:

- If I lived in the stone age, I would assign low credence to us going step by step from stone-age technologies to technologies akin to iPhones and the international space station and IBM being written with xenon atoms.

- If I lived prior to complex life (but my own existence didn't factor into my reasoning), I would assign low credence to anything like mammals evolving.

It's interesting to note that even though many people (such as yourself) have a "conservative" way of thinking (about things such as this) compared to me, I am still myself "conservative" in the sense that there are several things that have happened that would have seemed too "out there" to appear realistic to me.

Another sidenote:

One question we might ask ourselves is: "how many rules by which the universe could work would be consistent with e.g. the data we see on the internet?". And by rules here, I don't mean rules that can be derived from other rules (like e.g. the weight of a helium atom), but the parameters that most fundamentally determine how the universe works. If we...

- Rank rules by (1) how simple/elegant they are and (2) by how likely the data we see on the internet would be to occur with those rules

- Consider rules "different from each other" if they differ in their predictions about which nanotechnology designs would work

...my (possibly wrong) guess is that there would be a "clear winner".

Even if my guess is correct, that leaves the question of whether finding/determining the "winner" is computationally tractable. With crude/naive search-techniques it isn't tractable, but we don't know the specifics of the techniques that a superintelligence might use - it could maybe develop very efficient methods for ruling out large swathes of search-space.
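A toy sketch of the ranking described above, under loud assumptions: the candidate "rules" are three made-up coin-bias hypotheses, `description_bits` is a hypothetical stand-in for simplicity/elegance, and the observations are invented. It only illustrates how simplicity and fit to data can be combined into one ranking, and how a "clear winner" can emerge as data accumulates. It says nothing about real physics or about tractability.

```python
import math

# Hypothetical candidate "rules". Each has a made-up description length
# (a stand-in for simplicity) and the probability it assigns to "heads".
CANDIDATES = {
    "fair": {"description_bits": 2, "p_heads": 0.5},
    "biased_0.7": {"description_bits": 6, "p_heads": 0.7},
    "biased_0.9": {"description_bits": 6, "p_heads": 0.9},
}


def log_score(rule, heads, tails):
    """Log of (simplicity prior) x (likelihood of the observed data)."""
    log_prior = -rule["description_bits"] * math.log(2)  # prior proportional to 2^-bits
    p = rule["p_heads"]
    log_likelihood = heads * math.log(p) + tails * math.log(1 - p)
    return log_prior + log_likelihood


def rank(heads, tails):
    """Rule names sorted from best to worst combined score."""
    return sorted(
        CANDIDATES,
        key=lambda name: log_score(CANDIDATES[name], heads, tails),
        reverse=True,
    )


def normalized_posterior(heads, tails):
    """Posterior weight of each rule, normalized over the candidate set only."""
    scores = {n: log_score(r, heads, tails) for n, r in CANDIDATES.items()}
    top = max(scores.values())
    weights = {n: math.exp(s - top) for n, s in scores.items()}
    total = sum(weights.values())
    return {n: w / total for n, w in weights.items()}


if __name__ == "__main__":
    print(rank(7, 3))      # little data: the simplest rule can still lead
    print(rank(70, 30))    # more data: fit to data dominates
    print(normalized_posterior(70, 30))
```

With little data the simplicity term dominates; with more data one candidate pulls far ahead. The hard part discussed above, searching an enormous space of candidate rules, is exactly what this sketch leaves out.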

And a third sidenote (the last one, I promise):

Speculating about this feels sort of analogous to reasoning about a powerful chess engine (although there are also many disanalogies). I know that I can beat an arbitrarily powerful chess engine if I start from a sufficiently advantageous position. But I find it hard to predict where that "line" is (looking at a specific board position, and guessing if an optimal chess-player could beat me). Like, for some board positions the answer will be a clear "yes" or a clear "no", but for other board-positions, it will not be clear.

I don't know how much info and compute a superintelligence would need to make nanotechnology-designs that work in a "one shot"-ish sort of way. I'm fairly confident that the amount of computational resources used for the initial moon-landing would be far too little (I'm picking an extreme example here, since I want plenty of margin for error). But I don't know where the "line" is.

- ^

Although keep in mind that "oneshotting" does not exclude being able to run experiments (nor does it rule out fairly extensive experimentation). As I touched upon earlier, it may be possible for a plan to have experimentation built into itself. Needing to do experimentation ≠ needing access to a lab and lots of serial time.

I suspect my own intuitions regarding this kind of thing are similar to Eliezer's. It's possible that my intuitions are wrong, but I'll try to share some thoughts.

It seems that we think quite differently when it comes to this, and probably it's not easy for us to achieve mutual understanding. But even if all we do here is to scratch the surface, that may still be worthwhile.

As mentioned, maybe my intuitions are wrong. But maybe your intuitions are wrong (or maybe both). I think a desirable property of plans/strategies for alignment would be robustness to either of us being wrong about this 🙂

I however will write below why I think this description massively underestimates the difficulty in creating self-replicating nanobots

Among people who would suspect me of underestimating the difficulty of developing advanced nanotech, I would suspect most of them of underestimating the difference made by superintelligence + the space of options/techniques/etc that a superintelligent mind could leverage.

In Drexler's writings about how to develop nanotech, one thing that was central to his thinking was protein folding. I remember that in my earlier thinking, it felt likely to me that a superintelligence would be able to "solve" protein folding (to a sufficient extent to do what it wanted to do). My thinking was "some people describe this as infeasible, but I would guess that a superintelligence would be able to do this".

This was before AlphaFold. The way I remember it, the idea of "solving" protein folding was more controversial back in the day (although I tried to google this now, and it was harder to find good examples than I thought it would be).

While physicists sometimes claim to derive things from first principles, in practice these derivations often ignore a lot of details which still has to be justified using experiments

As humans we are "pathetic" in terms of our mental abilities. We have a high error-rate in our reasoning / the work we do, and this makes us radically more dependent on tight feedback-loops with the external world.

This point about error-rate in one's thinking is a really important thing. With lower error-rate + being able to do much more thinking / mental work, it becomes possible to learn and do much much more without physical experiments.

The world, and guesses regarding how the world works (including detail-oriented stuff relating to chemistry/biology), are highly interconnected. For minds that are able to do vast amounts of high-quality low error-rate thinking, it may be possible to combine subtle and noisy Bayesian evidence into overwhelming evidence. And for approaches it explores regarding this kind of thinking, it can test how well it does at predicting existing info/data that it already has access to.
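A minimal sketch of how many weak pieces of evidence can add up, assuming (unrealistically) that the pieces are independent and that each has a known likelihood ratio. The function name and the numbers are illustrative only.

```python
import math


def posterior_probability(prior, likelihood_ratios):
    """Update a prior with independent pieces of evidence.

    Each likelihood ratio is
    P(observation | hypothesis) / P(observation | not hypothesis).
    Independence between observations is an assumption of this sketch.
    """
    log_odds = math.log(prior / (1 - prior))
    log_odds += sum(math.log(lr) for lr in likelihood_ratios)
    return 1 / (1 + math.exp(-log_odds))


if __name__ == "__main__":
    # Each observation is only slightly more likely under the hypothesis (ratio 1.2).
    print(posterior_probability(0.01, [1.2] * 10))   # still a small probability
    print(posterior_probability(0.01, [1.2] * 100))  # very close to 1
```

Ten such observations barely move a 1% prior, while a hundred make the hypothesis close to certain. In practice the evidence would be correlated and the ratios uncertain, so the real calculation would be much harder than this.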

The images below are simple/small examples of the kind of thinking I'm thinking of. But I suspect superintelligences can take this kind of thinking much much further.

The post Einstein's Arrogance also feels relevant here.

While it is indeed possible or even likely that the standard model theoretically describes all details of a working nanobot with the required precision, the problem is that in practice it is impossible to simulate large physical systems using it.

It is infeasible to simulate in "full detail", but it's not clear what we should conclude based on that. Designs that work are often robust to the kinds of details that would require precise simulation to get right.

The specifics of the level of detail that is needed depend on the design/plan in question. A superintelligence may be able to work with simulations in a much less crude way than we do (with much more fine-grained and precise thinking in regards to what can be abstracted away for various parts of the "simulation").

The construction-process/design the AI comes up with may:

- Be constituted of various plans/designs at various levels of abstraction (without most of them needing to work in order for the top-level mechanism to work). The importance/power of the why not both?-principle is hard to overstate.

- Be self-correcting in various ways. Like, it can have learning/design-exploration/experimentation built into itself somehow.

- Have lots of built-in contingencies relating to unknowns, as well as other mechanisms to make the design robust to unknowns (similar to how engineers make bridges be stronger than they need to be).

Here are some relevant quotes from Radical Abundance by Eric Drexler:

"Coping with limited knowledge is a necessary part of design and can often be managed. Indeed, engineers designed bridges long before anyone could calculate stresses and strains, which is to say, they learned to succeed without knowledge that seems essential today. In this light, it’s worth considering not only the extent and precision of scientific knowledge, but also how far engineering can reach with knowledge that remains incomplete and imperfect.

For example, at the level of molecules and materials—the literal substance of technological systems—empirical studies still dominate knowledge. The range of reliable calculation grows year by year, yet no one calculates the tensile strength of a particular grade of medium-carbon steel. Engineers either read the data from tables or they clamp a sample in the jaws of a strength-testing machine and pull until it breaks. In other words, rather than calculating on the basis of physical law, they ask the physical world directly.

Experience shows that this kind of knowledge supports physical calculations with endless applications. Building on empirical knowledge of the mechanical properties of steel, engineers apply physics-based calculations to design both bridges and cars. Knowing the empirical electronic properties of silicon, engineers apply physics-based calculations to design transistors, circuits, and computers.

Empirical data and calculation likewise join forces in molecular science and engineering. Knowing the structural properties of particular configurations of atoms and bonds enables quantitative predictions of limited scope, yet applicable in endless circumstances. The same is true of chemical processes that break or make particular configurations of bonds to yield an endless variety of molecular structures.

Limited scientific knowledge may suffice for one purpose but not for another, and the difference depends on what questions it answers. In particular, when scientific knowledge is to be used in engineering design, what counts as enough scientific knowledge is itself an engineering question, one that by nature can be addressed only in the context of design and analysis.

Empirical knowledge embodies physical law as surely as any calculation in physics. If applied with caution—respecting its limits—empirical knowledge can join forces with calculation, not just in contemporary engineering, but in exploring the landscape of potential technologies.

To understand this exploratory endeavor and what it can tell us about human prospects, it will be crucial to understand more deeply why the questions asked by science and engineering are fundamentally different. One central reason is this: Scientists focus on what’s not yet discovered and look toward an endless frontier of unknowns, while engineers focus on what has been well established and look toward textbooks, tabulated data, product specifications, and established engineering practice. In short, scientists seek the unknown, while engineers avoid it.

Further, when unknowns can’t be avoided, engineers can often render them harmless by wrapping them in a cushion. In designing devices, engineers accommodate imprecise knowledge in the same way that they accommodate imprecise calculations, flawed fabrication, and the likelihood of unexpected events when a product is used. They pad their designs with a margin of safety.

The reason that aircraft seldom fall from the sky with a broken wing isn’t that anyone has perfect knowledge of dislocation dynamics and high-cycle fatigue in dispersion-hardened aluminum, nor because of perfect design calculations, nor because of perfection of any other kind. Instead, the reason that wings remain intact is that engineers apply conservative design, specifying structures that will survive even unlikely events, taking account of expected flaws in high-quality components, crack growth in aluminum under high-cycle fatigue, and known inaccuracies in the design calculations themselves. This design discipline provides safety margins, and safety margins explain why disasters are rare."

"Engineers can solve many problems and simplify others by designing systems shielded by barriers that hold an unpredictable world at bay. In effect, boxes make physics more predictive and, by the same token, thinking in terms of devices sheltered in boxes can open longer sightlines across the landscape of technological potential. In my work, for example, an early step in analyzing APM systems was to explore ways of keeping interior working spaces clean, and hence simple.

Note that designed-in complexity poses a different and more tractable kind of problem than problems of the sort that scientists study. Nature confronts us with complexity of wildly differing kinds and cares nothing for our ability to understand any of it. Technology, by contrast, embodies understanding from its very inception, and the complexity of human-made artifacts can be carefully structured for human comprehension, sometimes with substantial success.

Nonetheless, simple systems can behave in ways beyond the reach of predictive calculation. This is true even in classical physics.

Shooting a pool ball straight into a pocket poses no challenge at all to someone with just slightly more skill than mine and a simple bank shot isn’t too difficult. With luck, a cue ball could drive a ball to strike another ball that drives yet another into a distant pocket, but at every step impacts between curved surfaces amplify the effect of small offsets, and in a chain of impacts like this the outcome soon becomes no more than a matter of chance—offsets grow exponentially with each collision. Even with perfect spheres, perfectly elastic, on a frictionless surface, mere thermal energy would soon randomize paths (after 10 impacts or so), just as it does when atoms collide.

Many systems amplify small differences this way, and chaotic, turbulent flow provides a good example. Downstream turbulence is sensitive to the smallest upstream changes, which is why the flap of a butterfly’s wing, or the wave of your hand, will change the number and track of the storms in every future hurricane season.

Engineers, however, can constrain and master this sort of unpredictability. A pipe carrying turbulent water is unpredictable inside (despite being like a shielded box), yet can deliver water reliably through a faucet downstream. The details of this turbulent flow are beyond prediction, yet everything about the flow is bounded in magnitude, and in a robust engineering design the unpredictable details won’t matter."

and is not possible without involvement of top-tier human-run labs today

Eliezer's scenario does assume the involvement of human labs (he describes a scenario where DNA is ordered online).

Alignment is likely an iterative process

I agree with you here (although I would hope that much of this iteration can be done in quick succession, and hopefully in a low-risk way) 🙂

Btw, I very much enjoyed this talk by Ralph Merkle. It's from 2009, but it's still my favorite of all the talks I've seen on the topic. Maybe you would enjoy it as well. He briefly touches upon the topic of simulations at 28:50, but the entire talk is quite interesting IMO:

None of these are what you describe, but here are some places people can be pointed to:

- Rob Miles's channel

- The Stampy FAQ (they are open for help/input)

- This list of introductions to AI safety

AGI-assisted alignment in Dath Ilan (excerpt from here)

Suppose Dath Ilan got into a situation where they had to choose the strategy of AGI-assisted alignment, and didn't have more than a few years to prepare. Dath Ilan wouldn't actually get themselves into such a situation, but if they did, how might they go about it?

I suspect that among other things they would:

- Make damn well sure to box the AGIs before they plausibly could become dangerous/powerful.

- Try, insofar as they could, to make their methodologies robust to hardware exploits (rowhammer, etc). Not only by making hardware exploits hard, but by thinking about which code they ran on which computers and so on.

- Limit communication bandwidth for AGIs (they might think of having humans directly exposed to AGI communication as "touching the lava", and try to obtain help with alignment-work while touching lava as little as possible).

- Insofar as they saw a need to expose humans to AGI communication, those humans would be sealed off from society (and the AGIs' communication would be heavily restricted). The idea of having operators themselves be exposed to AGI-generated content from superhuman AGIs they don't trust to be aligned - in Dath Ilan such an approach would have been seen as outside the realm of consideration.

- Humans would be exposed to AGI communication mostly so as to test the accuracy of systems that predict human evaluations (if they don't test humans on AGI-generated content, they're not testing the full range of outputs). But even this they would also try to get around / minimize (by, among other things, using techniques such as the ones I summarize here).

- In Dath Ilan, it would be seen as a deranged idea to have humans evaluate arguments (or predict how humans would evaluate arguments), and just trust arguments that seem good to humans. To them, that would be kind of like trying to make a water-tight basket, but never trying to fill it with water (to see if water leaked through). Instead, they would use techniques such as the ones I summarize here.

- When forced to confront chicken and egg problems, they would see this as a challenge (after all, chickens exist, and eggs exist - so it's not as if chicken and egg problems never have solutions).

This is from What if Debate and Factored Cognition had a mutated baby? (a post I started on, but I ended up disregarding this draft and starting anew). This is just an excerpt from the intro/summary (it's not the entire half-finished draft).

Tweet-length summary-attempts

Resembles Debate, but:

We'd have separate systems that (among other things):

I'll explain why obtaining help with #2 doesn't rely on us already having obtained honest systems.

"ASIs could manipulate humans" is a leaky abstraction (which humans? how is argumentation restricted?). ASIs would know regularities for when humans are hard to fool (even by other ASIs). I posit: We can safely/robustly get them to make functions that leverage these regularities to our advantage.

Summary

To many of you, the following will seem misguided:

We can obtain systems from AIs that predict human evaluations of the various steps in “proof-like” argumentation.

We can obtain functions from AIs that assign scores to “proof-like” argumentation based on how likely humans are to agree with the various steps.

We can have these score-functions leverage regularities for when humans tend to evaluate correctly (based on info about humans, properties of the argumentation, etc).

We can then request “proofs” for whether outputs do what we asked for / want (and trust output that can be accompanied with high-scoring proofs).

We can request outputs that help us make robustly aligned AGIs.

Many of you may find several problems with what I describe above - not just one. Perhaps most glaringly, it seems circular:

If we already had AIs that we trusted to write functions that separate out “good” human evaluations, couldn’t we just trust those AIs to give us “good” answers directly?

The answer has to do with what we can and can’t score in a safe and robust way (for purposes of gradient descent).

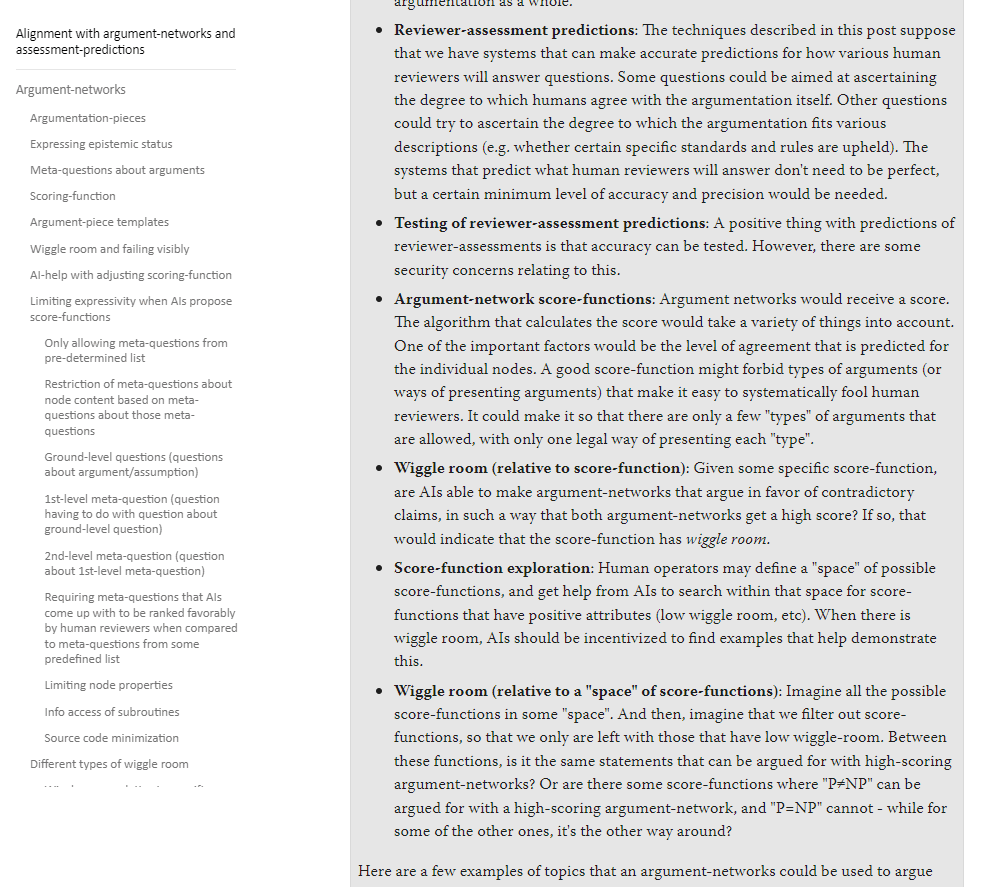

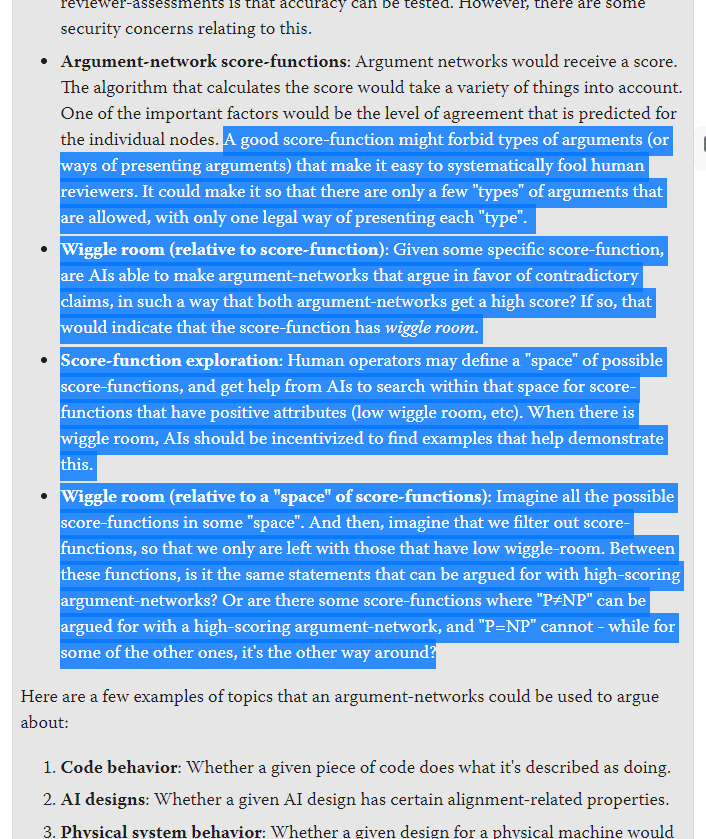

The answer also has to do with exploration of wiggle-room:

Given a specific score-function, is it possible to construct high-scoring arguments that argue in favor of contradictory conclusions?

And exploration of higher-level wiggle-room:

Suppose some specific restrictions for score-functions (designed to make it hard to make high-scoring score-functions that have low wiggle-room for “wrong” reasons).

Given those restrictions, is it possible to make high-scoring score-functions that are mutually contradictory (even if internally those score-functions have low wiggle-room)?

All score-functions that robustly leverage regularities for when human evaluations are correct/good would have low wiggle-room. The reverse is not true. Score-functions could have low wiggle-room due to somehow favoring wrong/bad conclusions.
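A toy sketch of the wiggle-room check for a score-function over arguments. Everything here is a hypothetical stand-in: the `ARGUMENTS` data, the predicted per-step agreement probabilities, and the two score-functions. A real check would have AIs search adversarially for high-scoring contradictory arguments rather than scan a fixed list.

```python
from itertools import combinations

# Hypothetical data: each argument has a conclusion (claim, verdict) and, per
# step, a predicted probability that human evaluators would agree with the step.
ARGUMENTS = [
    {"conclusion": ("X", True), "step_agreement": [0.99, 0.97, 0.98]},
    {"conclusion": ("X", False), "step_agreement": [0.95, 0.40]},
    {"conclusion": ("Y", True), "step_agreement": [0.96, 0.99]},
]


def weakest_step_score(argument):
    """Toy score-function: an argument is only as good as its weakest step."""
    return min(argument["step_agreement"])


def strongest_step_score(argument):
    """Deliberately bad toy score-function: one convincing step is enough."""
    return max(argument["step_agreement"])


def contradictory_high_scorers(arguments, score_fn, threshold):
    """Pairs of high-scoring arguments that reach opposite verdicts on one claim."""
    high = [a for a in arguments if score_fn(a) >= threshold]
    pairs = []
    for a, b in combinations(high, 2):
        same_claim = a["conclusion"][0] == b["conclusion"][0]
        opposite_verdict = a["conclusion"][1] != b["conclusion"][1]
        if same_claim and opposite_verdict:
            pairs.append((a, b))
    return pairs


def has_wiggle_room(arguments, score_fn, threshold):
    """True if some pair of high-scoring arguments contradict each other."""
    return bool(contradictory_high_scorers(arguments, score_fn, threshold))


if __name__ == "__main__":
    print(has_wiggle_room(ARGUMENTS, weakest_step_score, 0.9))
    print(has_wiggle_room(ARGUMENTS, strongest_step_score, 0.9))
```

On this invented data the weakest-step score-function shows no wiggle-room at a 0.9 threshold, while the strongest-step one does. As the paragraph above notes, finding no wiggle-room would not by itself show that a score-function favors correct conclusions.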

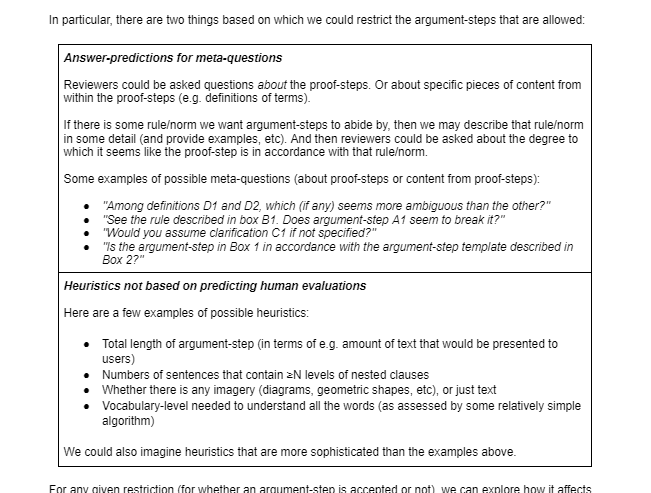

Some (but not all) “core” concepts are summarized below:

Wiggle-room (relative to score-function for argument-step-networks): Is it possible to generate high-scoring networks that argue for contradictory conclusions? If yes, then that means there is wiggle room. Any score-function that robustly separates and leverages “good” human evaluations will have low wiggle-room. But the reverse is not true, as there also are other ways to achieve low wiggle-room.

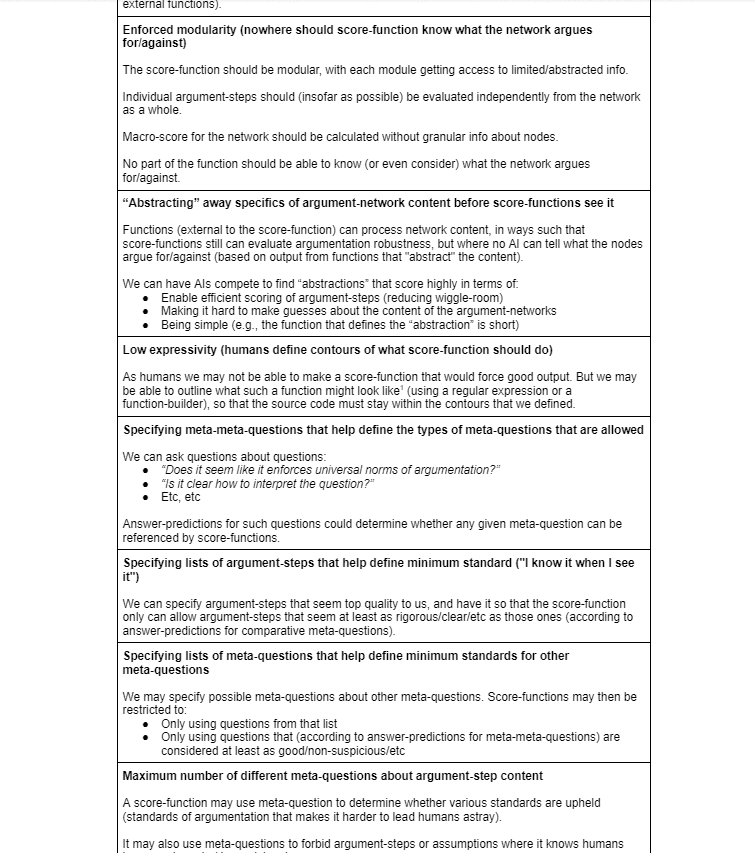

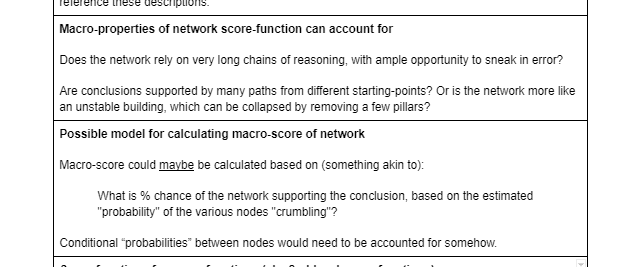

Making it so that the way for a score-function to itself get a high score is to separate out “good” human evaluations: Some AIs would make score-functions, and they would try to make score-functions that get a high score (from the score-function score-function 🙃). Score-functions would be required to have low wiggle-room. If constructed freely, score-functions could achieve low wiggle-room in a variety of ways. But with this alignment-methodology, the “space” of possible score-functions that can be proposed would be heavily restricted. We’d want score-functions to achieve low wiggle-room by leveraging regularities for when human evaluators get things right. We’d do that by making it so that achieving low wiggle-room any other way either would be impossible, or result in a lower score. Some of the tools that would be in our toolbox when restricting score-functions:

Wiggle-room (relative to score-function for score-functions): Have we succeeded with making it so that, in order to maximize score, score-functions must robustly separate out “good” human evaluations? Or is it possible to somehow sneak in bias in favor of specific conclusions, without the score-function getting a lower score as a result? We can check that, by asking AIs to find high-scoring score-functions that allow for contradictory conclusions. If they are able to do that, then it’s possible to sneak in bias.

Below are some concepts related to extracting aligned capabilities. The main goal is to be able to verify specialized functions without having humans need to look at the source code, and without being able to safely/robustly score outputs for the full range of inputs.

Some things we need:

- We need AIs that act in such a way as to maximize score

- There needs to be some range of the inputs that we can test

- There needs to be ways of obtaining/calculating the output we want that are at least somewhat general

An example of an aligned capability we might want would be to predict human answers. In this case, we could test outputs by actually asking questions to real humans (or using existing data of humans receiving questions). But if we use the systems to predict human answers when they evaluate AGI-generated content, then we may not want to test those outputs/predictions on real humans.

(I'm working on texts that hopefully will explain these concepts better. In the meantime, this is the best I have.)

Wiggle-room

Desideratum: A function that determines whether some output is approved or not (that output may itself be a function).

Score-function: A function that assigns a score to some output (that output may itself be a function).

Function-builder: Think regular expressions, but more expressive and user-friendly. We can require of AIs: "Only propose functions that can be made with this builder". That way, we restrict their expressivity. When we as humans specify desiderata, this is one tool (among several!) in the tool-box.

Higher-level desideratum or score-function: Not fundamentally different from other desiderata or score-functions. But the output that is evaluated is itself a desideratum or score-function. At every level there can be many requirements for the level below. A typical requirement at every level is low wiggle-room.

Example of higher-level desiderata / score-functions: Humans/operators define a score-function ← level 4 for desideratum ← level 3 for desideratum ← level 2 for desideratum ← level 1 for functions that generate the output we care about.

Wiggle-room relative to desideratum: Among outputs that would be approved by the desideratum, do any of them contradict each other in any way? For example: Are there possible functions that give contradicting outputs (for at least 1 input), such that both functions would be approved by the desideratum?

Wiggle-room relative to score-function: Among outputs that would receive a high score by the score-function in question (e.g. "no less than 80% of any other possible output"), do any of them contradict each other in any way?

2nd-level wiggle-room relative to desiderata: We start with a desiderata-desideratum or score-function-desideratum (aka 2nd-level desideratum). Set A: Any desideratum that is approved by the desiderata-desideratum. Set B: Any output approved by ≥1 of the desiderata in A. Are there ≥1 contradictions among outputs in B?

P(desideratum forces good outputs | desideratum has low wiggle-room): If a desideratum forces good/correct outputs, then it has low wiggle-room. But the reverse is not necessarily true. But for some desiderata we may think: "If wiggle-room is low, that’s probably because it’s hard to satisfy the desideratum without also producing good output."

Spaces/sets of desiderata where we think P(desideratum forces good outputs | desideratum has low wiggle-room) is low: Among spaces/sets of low-wiggle-room desiderata where we suspect "low wiggle-room → good output" (as defined by higher-level desiderata), do outputs converge?
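A toy sketch of "wiggle-room relative to desideratum" for the case where the outputs are functions. The desideratum here is a hypothetical one (a list of required input/output cases), and the candidate functions and probe inputs are invented for illustration. A real search would range over a much larger space of functions.

```python
def approved(desideratum_cases, fn):
    """Toy desideratum: approve fn if it matches every required (input, output) case."""
    return all(fn(x) == y for x, y in desideratum_cases)


def find_contradiction(desideratum_cases, candidate_fns, probe_inputs):
    """Look for two approved functions that disagree on some probe input.

    Returns (name_a, name_b, input) for the first disagreement found, or None
    if the approved candidates agree on every probe input.
    """
    ok = [(name, fn) for name, fn in candidate_fns.items()
          if approved(desideratum_cases, fn)]
    for i, (name_a, fn_a) in enumerate(ok):
        for name_b, fn_b in ok[i + 1:]:
            for x in probe_inputs:
                if fn_a(x) != fn_b(x):
                    return (name_a, name_b, x)
    return None


# Hypothetical candidate functions an AI might propose.
CANDIDATES = {
    "double": lambda x: 2 * x,
    "square": lambda x: x * x,
    "add_two": lambda x: x + 2,
}

if __name__ == "__main__":
    # A loose desideratum (one required case) leaves wiggle-room.
    print(find_contradiction([(2, 4)], CANDIDATES, range(5)))
    # A tighter desideratum removes it for this candidate set.
    print(find_contradiction([(2, 4), (3, 6)], CANDIDATES, range(5)))
```

With a single required case, all three candidates are approved and two of them disagree at input 1. Adding a second case leaves only one approved candidate, so no contradiction is found among these candidates.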

AI vs AI games and optimization-targets

AI vs AI game: AIs compete in a zero-sum game. The game produces some type of output (e.g. a function) that is scored.

AIs (in AI vs AI games) proving each other wrong, making bets and counter-bets, etc:

- "This is the shortest source-code for doing A" / "Here is a source-code that's shorter"

- "All outputs from F1 will be scored well by evaluation-function F2" / "Here's an input where that's not true"

- "I'll bet on any prediction made by function F3" / "I make a counter-bet for input I1"

Evaluation-range: For functions with AGI origin, there may sometimes be only some subset of the inputs where we can evaluate output robustly+safely. For example, when testing systems that predict human output, we may want to avoid experiments where humans evaluate AGI-generated content.

Test-range: In AI vs AI games (e.g. to make high-scoring functions), some subset of inputs may be declared the test-range. Bad output in evaluation-range outside of test-range: No penalty. Test-range ⊈ evaluation-range: Game is not scored.

Optimization-targets: Some examples (there are many more):

Optimization-targets that cause low wiggle-room outside of test-range: Imagine AIs competing to make functions that calculate the area of squares. Accurate output inside test-range is required. Source code brevity gives bonus-points. Among functions that maximize score, all have the same outputs outside of test-range.

Combining optimization-targets: Optimization-targets can be combined (be that with weighted sums, or functions that assign score based on more sophisticated evaluations). When I talk about optimization-targets, you should not assume that it consists of just 1 heuristic (such as e.g. source code length).

Techniques for testing P(optimization-target forces good output | optimization-target causes low wiggle-room): Run AI vs AI games where we:

(For the sake of scale/efficiency we can have AIs make bets on the outcomes of such games.)

Rules for predicting if/when optimization-targets limit wiggle-room for the right reasons: AIs can find rules that predict when we should trust some optimization-target to cause low wiggle-room for the right reasons (scored by simplicity, false positive rate, etc). They can make bets on average performance, and point out examples where rules from competing AIs fail.

Convergence/divergence among rules for predicting if/when optimization-targets limit wiggle-room for the right reasons: B: Outputs we can't test safely. R: Rules for predicting when optimization-targets force good output (top-scoring when tested within For outputs in B, we can explore: "Does R converge in regards to this output?"

“Spaces” of possible functions we think contain ≥1 robustly reliable function: If we (have good reason to) think some “space” of functions contains at least 1 robustly reliable function, and all functions in that “space” agree on output for a given input, then we can trust this to be that output - even if there isn’t a specific function that we trust to be robustly reliable.
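The area-of-squares example above can be sketched as a toy scoring game. The candidate sources, the test-range, and the scoring rule (accuracy inside test-range required, shorter source scores higher) are all illustrative assumptions, and `eval` is used only because the candidate strings are hard-coded right here.

```python
# Hypothetical candidates, given as source text so that brevity can be measured.
CANDIDATE_SOURCES = {
    "honest": "lambda s: s * s",
    "sabotaged": "lambda s: s * s if s < 100 else 0",  # wrong outside test-range
    "wrong": "lambda s: s + s",
}

TEST_RANGE = range(0, 50)  # inputs where we can check outputs


def score(source, test_range):
    """Return a score, or None if the function is inaccurate inside test-range."""
    fn = eval(source)  # only safe because the sources are hard-coded above
    if any(fn(s) != s * s for s in test_range):
        return None  # accurate output inside test-range is required
    return -len(source)  # brevity bonus: shorter source gives a higher score


def top_scorers(sources, test_range):
    """Names of the valid candidates that share the maximum score."""
    scores = {name: score(src, test_range) for name, src in sources.items()}
    valid = {name: s for name, s in scores.items() if s is not None}
    if not valid:
        return []
    best = max(valid.values())
    return [name for name, s in valid.items() if s == best]


if __name__ == "__main__":
    print(top_scorers(CANDIDATE_SOURCES, TEST_RANGE))
    # The sabotaged candidate passes the test-range but pays for its extra branch.
    print(score(CANDIDATE_SOURCES["sabotaged"], TEST_RANGE))
```

Here the sabotaged candidate is indistinguishable from the honest one inside test-range, but the extra branch it needs in order to misbehave outside test-range costs it brevity, so it does not maximize score. Whether real optimization-targets behave this way for the right reasons is the open question the entries above are about.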

Examples of optimization-targets

Source code brevity Sometimes the solution with shortest source code is quite generic/general/abstract. In such cases, intentionally giving wrong output for some inputs would often require implementing this solution and adding (something akin to) if-sentences that gives bad output for some inputs. |

Generality A maximally “non-general” function would have 1 if-sentence per possible input. Possible heuristics:

|

Use of computational resources If a function only focuses on finding the correct solution, and doesn’t need to spend cycles thinking about how to deceive, what humans think, etc (in addition to finding the correct solution), it will need less computational resources. |

Non-overlap-minimization with optimized code-bases where we robustly can score/verify the full range of outputs We can score/verify all outputs for:

If you’re not trying to have your function give bad output once in a while, borrowing sub-routines may be easier. |

Source code brevity (while doing several specialized tasks from same code-base) Suppose one code-base is to be specialized, but towards several different tasks (and for several of them, all output can be verified). E.g.:

|

Modularity Imagine that you are trying to make a car that fails in certain very specific conditions. This becomes more challenging if it has to be made out of parts that also are used in other vehicles (and these other vehicles are robustly tested). |

The degree to which subroutine instantiations inside/outside test-range can be told apart % of source code that consists of subroutines where no AI is able to guess/determine based on input if the function-call is inside or outside of test-range. This could be determined by running other AI vs AI games (but mostly by making predictions/bets on what outcome would be). |

Module implementation flexibility Imagine that you are trying to make a car, and want the steering wheel to fail, but only in certain very specific conditions. This becomes more challenging to achieve consistently if it isn’t you who will be making the steering wheel. |

Module division flexibility If you are trying to fail for certain specific inputs, this is made harder if you are making functions based on different architectures, and they need to fail the same way. There may be crude heuristics that help approximate whether 2 architectures are substantially different. |

Minimal computational “logic” (including “axioms”) needed to infer output

|

Predicted comprehensibility to humans Heuristics relating to how understandable code-base would be to humans. It is allowed to rewrite code-base for understandability, so long as output is unchanged. This optimization-target presupposes systems that can predict human output (with non-terrible accuracy). |

Telephone game robustness Suppose various systems (maybe some emulating humans and some that don't) for:

With N cycles, what's the % chance of ≥0 outputs changing, but only outside of test-range? |

Output-robustness to training data Instead of having the AI do something, we can have it make a seed AI that does (after having been trained). And we can see the degree to which various outputs are dependent on variations in training data. |

Output-robustness to adding chains by which output can be indirectly obtained Indirect ways of obtaining answers can be chained:

Each chain is an opportunity for deceptive AIs to lose ground / be unable to have intentional mistakes remain. |
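As a toy illustration of the "Output-robustness to training data" row above, here is a minimal sketch of how one might measure how much outputs depend on variations in training data. Everything in it is an assumption made for illustration: a least-squares line stands in for the trained system, bootstrap resampling stands in for "variations in training data", and the names `fit_line`, `output_spread` and `make_training_data` are hypothetical.

```python
import random
import statistics


def fit_line(points):
    """Ordinary least-squares fit of y = a*x + b to a list of (x, y) pairs."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    mean_x = statistics.fmean(xs)
    mean_y = statistics.fmean(ys)
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        # Degenerate resample (all x equal): fall back to a constant predictor.
        return 0.0, mean_y
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = cov_xy / var_x
    return a, mean_y - a * mean_x


def output_spread(train, query_inputs, n_resamples=200, seed=0):
    """For each query input, return the standard deviation of the predictions
    made by models trained on bootstrap resamples of the training data."""
    rng = random.Random(seed)
    predictions = {x: [] for x in query_inputs}
    for _ in range(n_resamples):
        # One "variation" of the training data: sample with replacement.
        resample = [rng.choice(train) for _ in train]
        a, b = fit_line(resample)
        for x in query_inputs:
            predictions[x].append(a * x + b)
    return {x: statistics.pstdev(p) for x, p in predictions.items()}


def make_training_data(n=30, seed=1):
    """Hypothetical toy data: noisy samples of y = 2x + 1 with x in [0, 1]."""
    rng = random.Random(seed)
    return [
        (x, 2.0 * x + 1.0 + rng.gauss(0, 0.5))
        for x in (rng.uniform(0, 1) for _ in range(n))
    ]


if __name__ == "__main__":
    train = make_training_data()
    # 0.5 lies inside the range the training data covers; 10.0 lies far outside it.
    for x, spread in output_spread(train, [0.5, 10.0]).items():
        print(f"input {x}: spread of outputs across resamples = {spread:.3f}")
```

In this toy setup the spread is small for inputs inside the range covered by the training data and much larger far outside it. A real version would of course need a much richer notion of both "training data variation" and "output".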

I would also like to see more work where people make less positive/optimistic assumptions. I think of it as a good thing that different approaches to alignment are being explored, and would like to see more of that in general (both in terms of breadth and depth).

I guess there are many possible ways of trying to categorize/conceptualize approaches to alignment theorizing. One is by asking "when talking/thinking about the methodology, what capabilities are assumed to be in place?".

I'm not sure about this, but unless I'm mistaken[1], a good amount of the work done by MIRI has been under a premise that goes (something sort of like): "Let's assume that we already know how to give AIs real-world objectives defined in terms of utility functions (not because we really assume that, but as a simplifying assumption)". And as far as I know, they haven't done much work where the assumption was something more like "suppose we were extremely good at gradient descent / searching through spaces of possible programs".

In my own theorizing, I don't make all of the simplifying assumptions that (I think/suspect) MIRI made in their "orthodox" research. But I make other assumptions (for the purpose of simplification), such as:

- "let's assume that we're really good at gradient descent / searching for possible AIs in program-space"[2]

- "let's assume that the things I'm imagining are not made infeasible due to a lack of computational resources"

- "let's assume that resources and organizational culture make it possible to carry out the plans as described/envisioned (with high technical security, etc)"

In regards to your alignment ideas, is it easy to summarize what you assume to be in place? Like, if someone came to you and said "we have written the source code for a superintelligent AGI, but we haven't turned it on yet" (and you believed them), is it easy to summarize what more you then would need in order to implement your methodology?

- ^

I very well could be, and would appreciate any corrections.

(I know they have worked on lots of detail-oriented things that aren't "one big plan" to "solve alignment". And maybe how I phrase myself makes it seem like I don't understand that. But if so, that's probably due to bad wording on my part.)

- ^

Well, I sort of make that assumption, but there are caveats.

If humans (...) machine could too.

From my point of view, humans are machines (even if not typical machines). Or, well, some will say that by definition we are not - but that's not so important really ("machine" is just a word). We are physical systems with certain mental properties, and therefore we are existence proofs of physical systems with those certain mental properties being possible.

machine can have any level of intelligence, humans are in a quite narrow spectrum

True. Although if I myself somehow could work/think a million times faster, I think I'd be superintelligent in terms of my capabilities. (If you are skeptical of that assessment, that's fine - even if you are, maybe you believe it in regards to some humans.)

prove your point by analogy with humans. If humans can pursue somewhat any goal, machine could too.

It has not been my intention to imply that humans can pursue somewhat any goal :)

I meant to refer to the types of machines that would be technically possible for humans to make (even if we don't want to do so in practice, and shouldn't want to). And when saying "technically possible", I'm imagining "ideal" conditions (so it's not the same as me saying we would be able to make such machines right now - only that it at least would be theoretically possible).

Why call it an assumption at all?

Partly because I was worried about follow-up comments that were kind of like "so you say you can prove it - well, why aren't you doing it then?".

And partly because I don't make a strict distinction between "things I assume" and "things I have convinced myself of, or proved to myself, based on things I assume". I do see there as sort of being a distinction along such lines, but I see it as blurry.

Something that is derivable from axioms is usually called a theorem.

If I am to be nitpicky, maybe you meant "derived" and not "derivable".

From my perspective there is a lot of in-between between these two:

- "we've proved this rigorously (with mathematical proofs, or something like that) from axiomatic assumptions that pretty much all intelligent humans would agree with"

- "we just assume this without reason, because it feels self-evident to us"

Like, I think there is a scale of sorts between those two.

I'll give an extreme example:

Person A: "It would be technically possible to make a website that works the same way as Facebook, except that its GUI is red instead of blue."

Person B: "Oh really, so have you proved that then, by doing it yourself?"

Person A: "No"

Person B: "Do you have a mathematical proof that it's possible?"

Person A: "Not quite. But it's clear that if you can make Facebook like it is now, you could just change the colors by changing some lines in the code."

Person B: "That's your proof? That's just an assumption!"

Person A: "But it is clear. If you try to think of this in a more technical way, you will also realize this sooner or later."

Person B: "What's your principle here, that every program that isn't proven as impossible is possible?"

Person A: "No, but I see very clearly that this program would be possible."

Person B: "Oh, you see it very clearly? And yet, you can't make it, or prove mathematically that it should be possible."

Person A: "Well, not quite. Most of what we call mathematical proofs are (from my point of view) a form of rigorous argumentation. I think I understand fairly well/rigorously why what I said is the case. Maybe I could argue for it in a way that is more rigorous/formal than I've done so far in our interaction, but that would take time (that I could spend on other things), and my guess is that even if I did, you wouldn't look carefully at my argumentation and try hard to understand what I mean."

The example I give here is extreme (in order to get across how the discussion feels to me, I make the thing they discuss into something much simpler). But from my perspective it is sort of similar to discussion in regard to the Orthogonality Thesis. Like, the Orthogonality Thesis is imprecisely stated, but I "see" quite clearly that some version of it is true. Similar to how I "see" that it would be possible to make a website that technically works like Facebook but is red instead of blue (even though - as I mentioned - that's a much more extreme and straightforward example).

(...) if it's supported by argument or evidence, but if it is, then it's no mere assumption.

I do think it is supported by arguments/reasoning, so I don't think of it as an "axiomatic" assumption.

A follow-up to that (not from you specifically) might be "what arguments?". And - well, I think I pointed to some of my reasoning in various comments (some of them under deleted posts). Maybe I could have explained my thinking/perspective better (even if I wouldn't be able to explain it in a way that's universally compelling 🙃). But it's not a trivial task to discuss these sorts of issues, and I'm trying to check out of this discussion.

I think there is merit to having as a frame of mind: "Would it be possible to make a machine/program that is very capable in regards to criteria x, y, etc, and optimizes for z?".

I think it was good of you to bring up Aumann's agreement theorem. I haven't looked into the specifics of that theorem, but broadly/roughly speaking I agree with it.

I cannot help you to be less wrong if you categorically rely on intuition about what is possible and what is not.

I wish I had something better to base my beliefs on than my intuitions, but I do not. My belief in modus ponens, my belief that 1+1=2, my belief that me observing gravity in the past makes me likely to observe it in the future, my belief that if views are in logical contradiction they cannot both be true - all this is (the way I think of it) grounded in intuition.

Some of my intuitions I regard as much more strong/robust than others.

When my intuitions come into conflict, they have to fight it out.

Thanks for the discussion :)

Like with many comments/questions from you, answering this question properly would require a lot of unpacking. Although I'm sure that also is true of many questions that I ask, as it is hard to avoid (we all have limited communication bandwidth) :)

In this last comment, you use the term "science" in a very different way from how I'd use it (like you sometimes also do with other words, such as for example "logic"). So if I was to give a proper answer I'd need to try to guess what you mean, make it clear how I interpret what you say, and so on (not just answer "yes" or "no").

I'll do the lazy thing and refer to some posts that are relevant (and that I mostly agree with):

It seems that 2 + 2 = 4 is also an assumption for you.

Yes (albeit a very reasonable one).

Not believing (some version of) that claim would typically make minds/AGIs less "capable", and I would expect more or less all AGIs to hold (some version of) that "belief" in practice.

I don't think it is possible to find consensus if we do not follow the same rules of logic.

Here are examples of what I would regard to be rules of logic: https://en.wikipedia.org/wiki/List_of_rules_of_inference (the ones listed here don't encapsulate all of the rules of inference that I'd endorse, but many of them). Despite our disagreements, I think we'd both agree with the rules that are listed there.

I regard Hitchens's razor not as a rule of logic, but more as an ambiguous slogan / heuristic / rule of thumb.

Best wishes from my side as well :)

:)

I do have arguments for that, and I have already mentioned some of them earlier in our discussion (you may not share that assessment, despite us being relatively close in mind-space compared to most possible minds, but oh well).

Some of the more relevant comments from me are on one of the posts that you deleted.

As I mention here, I think I'll try to round off this discussion. (Edit: I had a malformed/misleading sentence in that comment that should be fixed now.)

Every assumption is incorrect unless there is evidence.

Got any evidence for that assumption? 🙃

Answer to all of them is yes. What is your explanation here?

Well, I don't always "agree"[1] with ChatGPT, but I agree in regards to those specific questions.

...

I saw a post where you wanted people to explain their disagreement, and I felt inclined to do so :) But it seems now that neither of us feels like we are making much progress.

Anyway, from my perspective much of your thinking here is very misguided. But not more misguided than e.g. "proofs" for God made by people such as Descartes and other well-known philosophers :) I don't mean that as a compliment, but more so as to neutralize what may seem like anti-compliments :)

Best of luck (in your life and so on) if we stop interacting now or relatively soon :)

I'm not sure if I will continue discussing or not. Maybe I will stop either now or after a few more comments (and let you have the last word at some point).

- ^

I use quotation-marks since ChatGPT doesn't have "opinions" in the way we do.

Do you think you can deny existence of an outcome with infinite utility?

To me, according to my preferences/goals/inclinations, there are conceivable outcomes with infinite utility/disutility.

But I think it is possible (and feasible) for a program/mind to be extremely capable, and affect the world, and not "care" about infinite outcomes.

The fact that things "break down" is not a valid argument.

I guess that depends on what's being discussed. Like, it is something to take into account/consideration if you want to prove something while referencing utility-functions that reference infinities.

About universally compelling arguments?

First, a disclaimer: I do think there are "beliefs" that most intelligent/capable minds will have in practice. E.g. I suspect most will use something like modus ponens, most will update beliefs in accordance with statistical evidence in certain ways, etc. I think it's possible for a mind to be intelligent/capable without strictly adhering to those things, but for sure I think there will be a correlation in practice for many "beliefs".

Questions I ask myself are:

- Would it be impossible (in theory) to wire together a mind/program with "belief"/behavior x, and have that mind be very capable at most mental tasks?