Comments

It's the first official day of the AI Safety Action Summit, and thus it's also the day that the Seoul Commitments (made by sixteen companies last year to adopt an RSP/safety framework) have come due.

I've made a tracker/report card for each of these policies at www.seoul-tracker.org.

I plan to keep this updated for the foreseeable future as policies get released/modified. Don't take the grades too seriously: think of it as one opinionated take on the quality of the commitments as written and, in cases where there is evidence, as implemented. Do feel free to share feedback if anything you see surprises you, or if you think the report card misses something important.

My personal takeaway is that both compliance and quality for these policies are much worse than I would have hoped. I believe many people's theories of change for these policies gesture at something about a race to the top, where companies are eager to outcompete each other on safety to win talent and public trust, but I don't sense much urgency or rigor here. Another theory of change is that this is a sort of laboratory for future regulation, where companies can experiment now with safety practices and the best ones could be codified. But most of the diversity between policies here is in how vague they can be while claiming to manage risks :/

I'm really hoping this changes as AGI gets closer and companies feel they need to do more to prove to govts/public that they can be trusted. Part of my hope is that this report card makes clear to outsiders that not all voluntary safety frameworks are equally credible.

Two more disclaimers from both policies that worry me:

Meta writes:

Security Mitigations - Access is strictly limited to a small number of experts, alongside security protections to prevent hacking or exfiltration insofar as is technically feasible and commercially practicable.

"Commercially practicable" is so load-bearing here. With a disclaimer like this, why not publicly commit to writing million-dollar checks to anyone who asks for one? It basically means "We'll do this if it's in our interest, and we won't if it's not." Which is like, duh. That's the decision procedure for everything you do.

I do think the public intention setting is good, and it might support the codification of these standards, but it does not a commitment make.

Google, on the other hand, has this disclaimer:

The safety of frontier AI systems is a global public good. The protocols here represent our current understanding and recommended approach of how severe frontier AI risks may be anticipated and addressed. Importantly, there are certain mitigations whose social value is significantly reduced if not broadly applied to AI systems reaching critical capabilities. These mitigations should be understood as recommendations for the industry collectively: our adoption of them would only result in effective risk mitigation for society if all relevant organizations provide similar levels of protection, and our adoption of the protocols described in this Framework may depend on whether such organizations across the field adopt similar protocols.

I think it's funny these are coming out this week as an implicit fulfillment of the Seoul commitments. Did I miss some language in the Seoul commitments saying "we can abandon these promises if others are doing worse or if it's not in our commercial interest?"

And, if not, do policies with disclaimers like these really count as fulfilling a commitment? Or is it more akin to Anthropic's non-binding anticipated safeguards for ASL-3? If it's the latter, then fine, but I wish they'd label it as such.

I recently created a simple workflow to allow people to write to the Attorneys General of California and Delaware to share thoughts + encourage scrutiny of the upcoming OpenAI nonprofit conversion attempt.

I think this might be a high-leverage opportunity for outreach. Both AG offices have already begun investigations, and Attorneys General are elected officials who are primarily tasked with protecting the public interest, so they should care what the public thinks and prioritizes. Unlike e.g. congresspeople, I don't think AGs often receive grassroots outreach (I found ~0 examples of this in the past), and an influx of polite and thoughtful letters may have some influence, especially from CA and DE residents, although I think anyone impacted by their decision should feel comfortable contacting them.

Personally I don't expect the conversion to be blocked, but I do think the value and nature of the eventual deal might be significantly influenced by the degree of scrutiny on the transaction.

Please consider writing a short letter — even a few sentences is fine. Our partner handles the actual delivery, so all you need to do is submit the form. If you want to write one on your own and can't find contact info, feel free to dm me.

OpenAI has finally updated the "o1 system card" webpage to include evaluation results from the o1 model (or, um, a "near final checkpoint" of the model). Kudos to Zvi for first writing about this problem.

They've also made a handful of changes to the system card PDF, including an explicit acknowledgment of the fact that they did red teaming on a different version of the model from the one that was released (text below). They don't mention o1 pro, except to say "The content of this card will be on the two checkpoints outlined in Section 3 and not on the December 17th updated model or any potential future model updates to o1."

Practically speaking, with o3 just around the corner, these are small issues. But I see the current moment as the dress rehearsal for truly powerful AI, where the stakes and the pressure will be much higher, and thus acting carefully + with integrity will be much more challenging. I'm frustrated OpenAI is already struggling to adhere to its preparedness framework and generally act in transparent and legible ways.

I've uploaded a full diff of the changes to the system card, both web and PDF, here. I'm also frustrated that these changes were made quietly, and that the "last updated" timestamp on the website still reads "December 5th."

As part of our commitment to iterative deployment, we continuously refine and improve our models. The evaluations described in this System Card pertain to the full family of o1 models, and exact performance numbers for the model used in production may vary slightly depending on system updates, final parameters, system prompt, and other factors.

More concretely, for o1, evaluations on the following checkpoints [1] are included:

• o1-near-final-checkpoint

• o1-dec5-release

Between o1-near-final-checkpoint and the releases thereafter, improvements included better format following and instruction following, which were incremental post-training improvements (the base model remained the same). We determined that prior frontier testing results are applicable for these improvements. Evaluations in Section 4.1, as well as Chain of Thought Safety and Multilingual evaluations were conducted on o1-dec5-release, while external red teaming and Preparedness evaluations were conducted on o1-near-final-checkpoint [2]

[1] "OpenAI is constantly making small improvements to our models and an improved o1 was launched on December 17th. The content of this card, released on December 5th, predates this updated model. The content of this card will be on the two checkpoints outlined in Section 3 and not on the December 17th updated model or any potential future model updates to o1"

[2] "Section added after December 5th on 12/19/2024" (In reality, this appears on the web archive version of the PDF between January 6th and January 7th.)

What should I read if I want to really understand (in an ITT-passing way) how the CCP makes and justifies its decisions around censorship and civil liberties?

I agree with your odds, or perhaps mine are a bit higher (99.5%?). But if there were foul play, I'd sooner point the finger at the national security establishment than OpenAI. As far as I know, intelligence agencies committing murder is much more common than companies doing so. And OpenAI's progress is seen as critically important to both.

Lucas gives GPT-o1 the homework for Harvard’s Math 55, it gets a 90%

The linked tweet makes it look like Lucas also had an LLM doing the grading... taking this with a grain of salt!

I've used both data center and rotating residential proxies :/ But I am running it on the cloud. Your results are promising so I'm going to see how an OpenAI-specific one run locally works for me, or else a new proxy provider.

Thanks again for looking into this.

Ooh this is useful for me. The pastebin link appears broken - any chance you can verify it?

I definitely get 403s and captchas pretty reliably for OpenAI and OpenAI alone (and notably not google, meta, anthropic, etc.) with an instance based on https://github.com/dgtlmoon/changedetection.io. Will have to look into cookie refreshing. I have had some success with randomizing IPs, but maybe I don't have the cookies sorted.

Sorry, I might be missing something: subdomains are subdomain.domain.com, whereas ChatGPT.com is a unique top-level domain, right? In either case, I'm sure there are benefits to doing things consistently — both may be on the same server, subject to the same attacks, beholden to the same internal infosec policies, etc.

So I do believe they have their own private reasons for it. Didn't mean to imply that they've maliciously done this to prevent some random internet guy's change tracking or anything. But I do wish they would walk it back on the openai.com pages, or at least in their terms of use. It's hypocritical, in my opinion, that they are so cautious about automated access to their own site while relying on such access so completely from other sites. Feels similar to when they tried to press copyright claims against the ChatGPT subreddit. Sure, it's in their interest for potentially nontrivial reasons, but it also highlights how weird and self-serving the current paradigm (and their justifications for it) is.

ChatGPT is only accessible for free via chatgpt.com, right? Seems like it shouldn't be too hard to restrict it to that.

A (somewhat minor) example of hypocrisy from OpenAI that I find frustrating.

For context: I run an automated system that checks for quiet/unannounced updates to AI companies' public web content including safety policies, model documentation, acceptable use policies, etc. I also share some findings from this on Twitter.

Part of why I think this is useful is that OpenAI in particular has repeatedly made web changes of this nature without announcing or acknowledging it (e.g. 1, 2, 3, 4, 5, 6). I'm worried that they may continue to make substantive changes to other documents, e.g. their preparedness framework, while hoping it won't attract attention (even just a few words, like if they one day change a "we will..." to a "we will attempt to...").

This process requires very minimal bandwidth/requests to the web server (it checks anywhere from once a day to once a month per monitored page).
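For illustration only, here is a minimal sketch of how a monitor like this could flag a quiet wording change between two snapshots of a page. It is not the actual system described above: the function names and the sample policy sentences are hypothetical, and fetching and storing pages is left out entirely. It only shows the comparison step, using Python's standard `hashlib` and `difflib` modules.

```python
import difflib
import hashlib


def fingerprint(text: str) -> str:
    """Return a SHA-256 hex digest of the text with whitespace normalized."""
    normalized = " ".join(text.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def describe_change(old: str, new: str) -> list[str]:
    """Return unified-diff lines between two snapshots, or [] if unchanged."""
    # Cheap check first: identical fingerprints mean nothing substantive changed.
    if fingerprint(old) == fingerprint(new):
        return []
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="previous",
            tofile="current",
            lineterm="",
        )
    )


# Hypothetical snapshots of one monitored page, taken on different days.
previous = "We will pause deployment if risk thresholds are crossed."
current = "We will attempt to pause deployment if risk thresholds are crossed."

for line in describe_change(previous, current):
    print(line)
```

Normalizing whitespace before hashing is a design choice that keeps pure formatting changes from triggering alerts, while even a few changed words produce a different digest and a readable diff.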

But letting this system run on OpenAI's website is complicated as (1) they are incredibly proactive at captcha-walling suspected crawlers (better than any other website I've encountered, and I've run this on thousands of sites in the past) and (2) their terms of use technically forbid any automated data collection from their website (although it's unclear whether this is legal/enforceable in the US).

The irony should be immediately obvious — not only is their whole data collection pipeline reliant on web scraping, but they've previously gotten in hot water for ignoring other websites' robots.txt and not complying with the GDPR rules on web scraping. Plus, I'm virtually certain they don't respect other websites with clauses in the terms of use that forbid automated access. So what makes them so antsy about automated access to their own site?

I wish OpenAI would change one of these behaviors: either stop making quiet, unannounced, and substantive changes to your publicly-released content, or else stop trying so hard to keep automated website monitors from accessing your site to watch for these changes.

I've asked similar questions before and heard a few things. I also have a few personal thoughts that I thought I'd share here unprompted. This topic is pretty relevant for me so I'd be interested in what specific claims in both categories people agree/disagree with.

Things I've heard:

- There's some skepticism about how well-positioned xAI actually is to compete with leading labs, because although they have a lot of capital and ability to fundraise, lots of the main bottlenecks right now can't simply be solved by throwing more money at the problem. E.g. building infrastructure, securing power contracts, hiring top engineers, accessing huge amounts of data, and building on past work are all pretty limited by non-financial factors, and therefore the incumbents have lots of advantages. That being said, it's placed alongside Meta and Google in the highest-liquidity prediction market I could find asking which labs will be "top 3" in 2025.

- There's some optimism about their attitude to safety since Elon has been talking about catastrophic risks from AI in no uncertain terms for a long time. There's also some optimism coming from the fact that he/xAI opted to appoint Dan Hendrycks as an advisor.

Personal thoughts:

- I'm not that convinced that they will take safety seriously by default. Elon's personal beliefs seem to be hard to pin down/constantly shifting, and honestly, he hasn't seemed to be doing that well to me recently. He's long had a belief that the SpaceX project is all about getting humanity off Earth before we kill ourselves, and I could see a similar attitude leading to the "build ASI asap to get us through the time of perils" approach that I know others at top AI labs have (if he doesn't feel this way already).

- I also think (~65%) it was a strategic blunder for Dan Hendrycks to take a public position there. If there's anything I took away from the OpenAI meltdown, it's a greater belief in something like "AI Safety realpolitik;" that is, when the chips are down, all that matters is who actually has the raw power. Fancy titles mean nothing, personal relationships mean nothing, heck, being a literal director of the organization means nothing; all that matters is where the money and infrastructure and talent are. So I don't think the advisor position will mean much, and I do think it will terribly complicate CAIS' efforts to appear neutral, lobby via their 501c4, etc. I have no special insight here so I hope I'm missing something, or that the position does lead to a positive influence on their safety practices that wouldn't have been achieved by unofficial/ad-hoc advising.

- I think most AI safety discourse is overly focused on the top 4 labs (OpenAI, Anthropic, Google, and Meta) and underfocused on international players, traditional big tech (Microsoft, Amazon, Apple, Samsung), and startups (especially those building high-risk systems like highly-technical domain specialists and agents). Similarly, I think xAI gets less attention than it should.

Magic.dev has released an initial evaluation + scaling policy.

It's a bit sparse on details, but it's also essentially a pre-commitment to implement a full RSP once they reach a critical threshold (50% on LiveCodeBench or, alternatively, a "set of private benchmarks" that they use internally).

I think this is a good step forward, and more small labs making high-risk systems like coding agents should have risk evaluation policies in place.

Also wanted to signal boost that my org, The Midas Project, is running a public awareness campaign against Cognition (another startup making coding agents) asking for a policy along these lines. Please sign the petition if you think this is useful!

Thank you for sharing — I basically share your concerns about OpenAI, and it's good to talk about it openly.

I'd be really excited about a large, coordinated, time-bound boycott of OpenAI products that is (1) led by a well-known organization or individual with a recruitment and media outreach strategy and (2) accompanied by a set of specific grievances like the one you provide.

I think that something like this would (1) mitigate some of the costs that @Zach Stein-Perlman alludes to since it's time-bound (say only for a month), and (2) retain the majority of the benefits, since I think the signaling value of any boycott (temporary or permanent) will far, far exceed the material value in ever-so-slightly withholding revenue from OpenAI.

I don't mean to imply that this is opposed to what you're doing — your personal boycott basically makes sense to me, plus I suspect (but correct me if I'm wrong) that you'd also be equally excited about what I describe above. I just wanted to voice this in case others feel similarly or someone who would be well-suited to organizing such a boycott reads this.

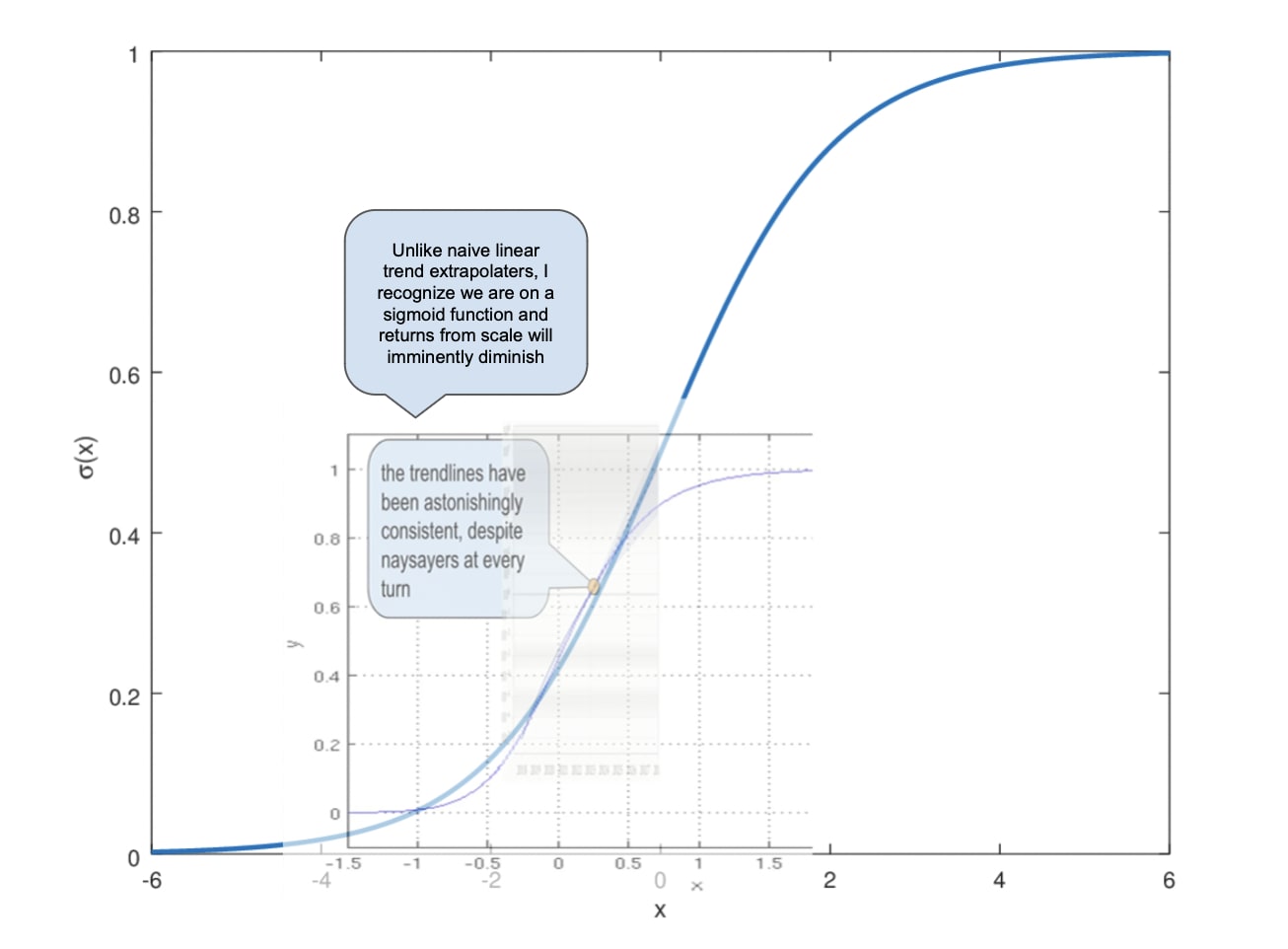

Sorry, I'm kinda lumping your meme in with a more general line of criticism I've seen that casts doubt on the whole idea of extrapolating an exponential trend, on account of the fact that we should eventually expect diminishing returns. But such extrapolation can still be quite informative, especially in the short term! If you had done it in 2020 to make guesses about where we'd end up in 2024, it would have served you well.

The sense in which it's straw-manning, in my mind, is that even the trend-extrapolators admit that we can expect diminishing returns eventually. The question is where exactly we are on the curve, and that much is very uncertain. Given this, assuming the past rate of growth will hold constant for three more years isn't such a bad strategy (especially if you can tell plausible stories about how we'll e.g. get enough power to support the next 3 OOMs).

It's true — we might run into issues with data — but we also might solve those issues. And I don't think this is just mumbling and hand waving. My best guess is that there are real, promising efforts going on to solve this within labs, but we shouldn't expect to hear them in convincing detail because they will be fiercely guarded as a point of comparative advantage over other companies hitting the wall, hence why it just feels like mumbling from the outside.

I'm not sure what you want me to see by looking at your image again. Is it about the position of 2027 on the X-axis of the graph from Leopold's essay? If so, yeah, I assumed that placement wasn't rigorous or intentional and the goal of the meme was to suggest that extrapolating the trend in a linear fashion through 2027 was naive.

Unless...

Made with love. I just think a lot of critics of the "straight line go up" thought process are straw-manning the argument. The question is really when we start to hit diminishing returns, and I (like the author of this post) don't know if anyone has a good answer to that. I do think the data wall would be a likely cause if it is the case that progress slows in the coming years. But progress continuing in a roughly linear fashion between now and 2027 seems, to me, totally "strikingly plausible."

Yeah, I think you're kind of right about why scaling seems like a relevant term here. I really like that RSPs are explicit about different tiers of models posing different tiers of risks. I think larger models are just likely to be more dangerous, and dangerous in new and different ways, than the models we have today. And that the safety mitigations that apply to them need to be more rigorous than what we have today. As an example, this framework naturally captures the distinction between "open-sourcing is great today" and "open-sourcing might be very dangerous tomorrow," which is roughly something I believe.

But in the end, I don't actually care what the name is, I just care that there is a specific name for this relatively specific framework to distinguish it from all the other possibilities in the space of voluntary policies. That includes newer and better policies — i.e. even if you are skeptical of the value of RSPs, I think you should be in favor of a specific name for it so you can distinguish it from other, future voluntary safety policies that you are more supportive of.

I do dislike that "responsible" might come off as implying that these policies are sufficient, or that scaling is now safe. I could see "risk-informed" having the same issue, which is why "iterated/tiered scaling policy" seems a bit better to me.

My only concern with "voluntary safety commitments" is that it seems to encompass too much, when the RSPs in question here are a pretty specific framework with unique strengths I wouldn't want overlooked.

I've been using "iterated scaling policy," but I don't think that's perfect. Maybe "evaluation-based scaling policy"? or "tiered scaling policy"? Maybe even "risk-informed scaling policy"?

Sidebar: For what it's worth, I don't argue in my comment that "it's not worth worrying" about nuance. I argue that nuance isn't more important for public advocacy than, for example, in alignment research or policy negotiations — and that the opposite might be true.

Just came across an article from agricultural economist Jayson Lusk, who proposes something just like this. A few quotes:

"Succinctly put, a market for animal welfare would consist of giving farmers property rights over an output called animal well-being units (AWBUs) and providing an institutional structure or market for AWBUs to be bought and sold independent of the market for meat."

"Moreover, a benefit of a market for AWBUs, as compared to process regulations, is that an AWBUs approach provides incentives for producers to improve animal well-being at the lowest possible cost by focusing producer and consumer attention on an outcome (animal well-being) rather than a particular process or technology (i.e., cages, stalls, etc.). Those issues for which animal activists can garner the largest political support (e.g., elimination of cages) may not be those that can provide the largest change in animal well-being per dollar spent. By focusing on outcomes and letting producers worry about how to achieve the outcome, markets could enable innovation in livestock production methods and encourage producers to seek cost-effective means of improving animal well-being."

"Animal rights activists often decry the evils of the capitalist, market-based economy. Greedy corporate farms and agribusinesses have enslaved and mistreated billions of animals just to earn a few more dollars—or so the story goes. Market forces are powerful and no doubt the economic incentives that farmers have faced in the last 50 years have contributed to a reduction in animal well-being. But markets are only a means, not an end. Rather than demonizing the market, animal advocates could harness its power to achieve a worthwhile end."

I have major concerns with this class of ideas, but just wanted to share Lusk's proposal in case anyone looks into it further.