Comments

It seems to me that it's not right to assume the probability of opportunities to trade is zero?

Suppose both John and David are alive on a desert island right now (but slowly dying), and there's a chance that a rescue boat will arrive that will save only one of them, leaving the other to die. What would they contract to? Assuming no altruistic preferences, presumably neither would agree to only the other person being rescued.

It seems more likely here that bargaining will break down, and one of them will kill off the other, resulting in an arbitrary resolution of who ends up on the rescue boat, not a "rational" resolution.

While I've focused on death here, I think this is actually much more general -- there are a lot of irreversible decisions that people make (and that artificial agents might make) between potentially incommensurable choices. Here's a nice example from Elizabeth Anderson's "Value in Ethics & Economics" (Ch. 3, p. 57) re: the question of how one should live one's life, to which I think irreversibility applies.

Similar incommensurability applies, I think, to what kind of society we collectively want to live in, given that path dependency makes many choices irreversible.

Interesting argument! I think it goes through -- but only under certain ecological / environmental assumptions:

- That decisions / trades between goods are reversible.

- That there are multiple opportunities to make such trades / decisions in the environment.

But this isn't always the case! Consider:

- Both John and David prefer living over dying.

- Hence, John would not trade (John Alive, David Dead) for (John Dead, David Alive), and vice versa for David.

This is already a case of weakly incomplete preferences which, while technically reducible to a complete order over "indifference sets", doesn't seem well described by a utility function! In particular, it seems really important to represent the fact that neither person would trade their life for the other's life, even though both (John Alive, David Dead) and (John Dead, David Alive) lie in the same "indifference / incommensurability set".

(I think it's better to call it an "incommensurability set" -- just because two elements in a lattice share a least upper bound, it doesn't mean they are themselves comparable).

Now let's try and make the preferences strongly incomplete:

- John prefers living freely over imprisonment, and imprisonment to dying.

- Even if David was dead, he would prefer that John be alive over John being imprisoned.

Apart from the fact that you can't reverse death (at least with current technology), this is similar to the pizza scenario: The system as a whole prefers:

- (John Free, David Alive) > (John Free, David Dead) > (John Imprisoned, David Dead) > Both Dead

- (John Free, David Alive) > (John Imprisoned, David Alive) > (John Dead, David Alive) > Both Dead

- No preferences between options of the form (X, David Dead) and (John Dead, Y).

If John and David could contract to go from (John Imprisoned, David Dead) to (John Dead, David Alive) and then to (John Alive, David Dead) when those trades are offered, that would result in an improvement in achieving preferred outcomes on average. But of course, they can't because death is irreversible!
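To make the incompleteness concrete, here is a minimal sketch that encodes only the two preference chains listed above and checks which outcomes are comparable. The outcome labels and helper names are assumptions introduced for illustration, not part of the original argument.

```python
from itertools import product

# Outcome labels are shorthand introduced for this sketch.
FREE_ALIVE = ("John Free", "David Alive")
FREE_DEAD = ("John Free", "David Dead")
JAIL_DEAD = ("John Imprisoned", "David Dead")
JAIL_ALIVE = ("John Imprisoned", "David Alive")
DEAD_ALIVE = ("John Dead", "David Alive")
BOTH_DEAD = ("John Dead", "David Dead")

# The two chains of strict preferences, each listed from best to worst.
chains = [
    [FREE_ALIVE, FREE_DEAD, JAIL_DEAD, BOTH_DEAD],
    [FREE_ALIVE, JAIL_ALIVE, DEAD_ALIVE, BOTH_DEAD],
]

def strict_order(chains):
    """Return the transitive closure of the chains as (better, worse) pairs."""
    better = set()
    for chain in chains:
        for i, a in enumerate(chain):
            for b in chain[i + 1:]:
                better.add((a, b))
    changed = True
    while changed:
        changed = False
        for (a, b), (c, d) in product(list(better), repeat=2):
            if b == c and (a, d) not in better:
                better.add((a, d))
                changed = True
    return better

def comparable(x, y, better):
    """Two outcomes are comparable if one is strictly preferred to the other."""
    return (x, y) in better or (y, x) in better

better = strict_order(chains)
print(comparable(FREE_ALIVE, BOTH_DEAD, better))  # the top and bottom outcomes are ranked
print(comparable(JAIL_DEAD, DEAD_ALIVE, better))  # outcomes on different chains are not
```

No single utility function can represent this order faithfully: any numeric assignment would rank (John Imprisoned, David Dead) against (John Dead, David Alive), or declare them equal, whereas the order itself leaves them unranked.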

Not sure if this is the same as the awards contest entry, but EJT also made this earlier post ("There are no coherence theorems") arguing that certain Dutch Book / money pump arguments against incompleteness fail!

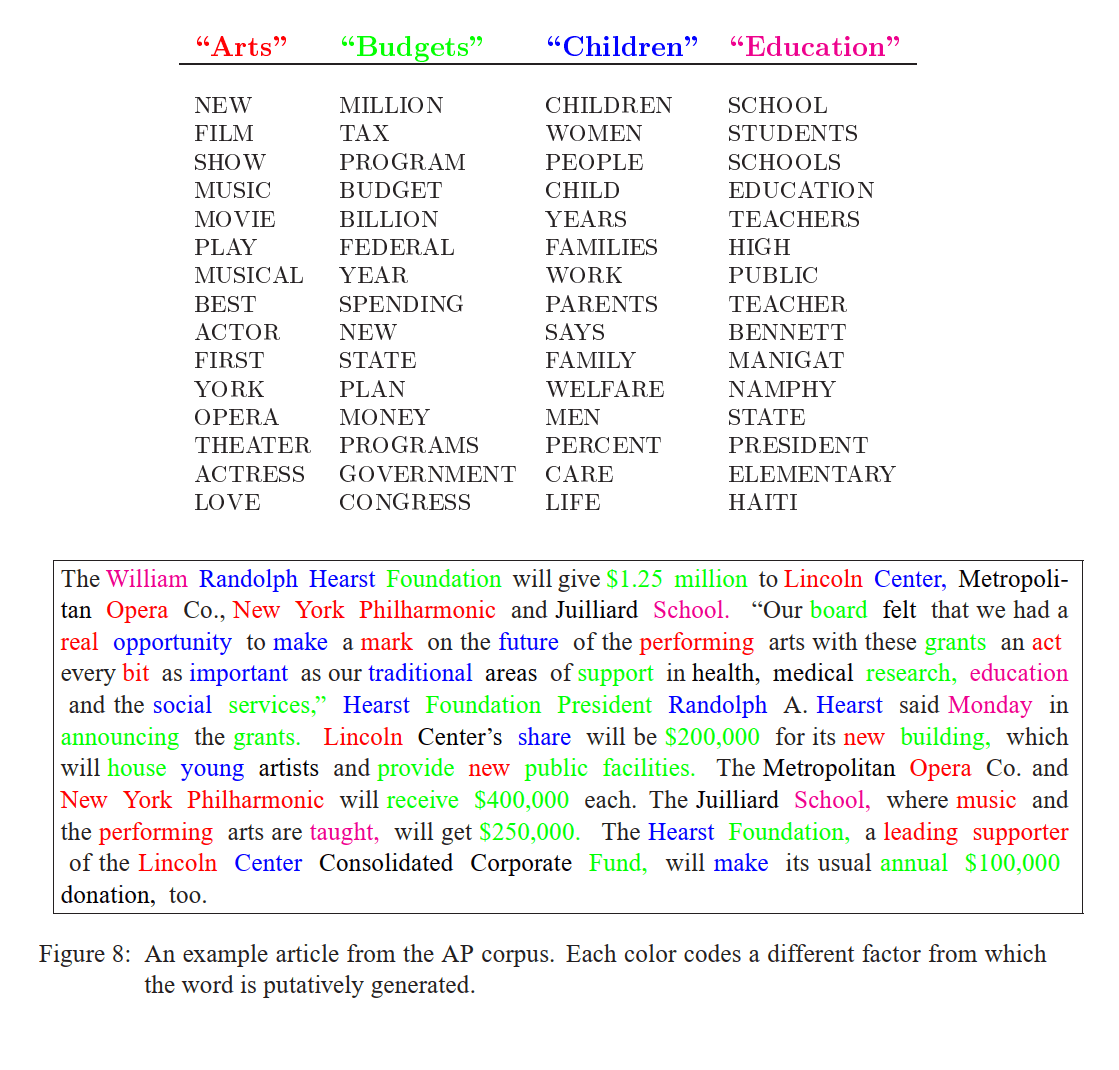

Very interesting work! This is only a half-formed thought, but the diagrams you've created very much remind me of similar diagrams used to display learned "topics" in classic topic models like Latent Dirichlet Allocation (Figure 8 from the paper is below):

I think there's possibly something to be gained by viewing what the MLPs and attention heads are learning as something like "topic models" -- and it may be the case that some of the methods developed for evaluating topic interpretability and consistency will be valuable here. A couple of references:

- Reading Tea Leaves: How Humans Interpret Topic Models (Chang et al., 2009)

- Machine Reading Tea Leaves: Automatically Evaluating Topic Coherence and Topic Model Quality (Lau, Newman & Baldwin, 2014)

Great to know, and good to hear!

Regarding causal scrubbing in particular, it seems to me that there's a closely related line of research by Geiger, Icard and Potts that it doesn't seem like TAISIC is engaging with deeply? I haven't looked too closely, but it may be another example of duplicated effort / rediscovery:

The importance of interventions

Over a series of recent papers (Geiger et al. 2020, Geiger et al. 2021, Geiger et al. 2022, Wu et al. 2022a, Wu et al. 2022b), we have argued that the theory of causal abstraction (Chalupka et al. 2016, Rubinstein et al. 2017, Beckers and Halpern 2019, Beckers et al. 2019) provides a powerful toolkit for achieving the desired kinds of explanation in AI. In causal abstraction, we assess whether a particular high-level (possibly symbolic) model H is a faithful proxy for a lower-level (in our setting, usually neural) model N in the sense that the causal effects of components in H summarize the causal effects of components of N. In this scenario, N is the AI model that has been deployed to solve a particular task, and H is one’s probably partial, high-level characterization of how the task domain works (or should work). Where this relationship between N and H holds, we say that H is a causal abstraction of N. This means that we can use H to directly engage with high-level questions of robustness, fairness, and safety in deploying N for real-world tasks.

Strongly upvoting this for being a thorough and carefully cited explanation of how the safety/alignment community doesn't engage enough with relevant literature from the broader field, likely at the cost of reduplicated work, suboptimal research directions, and less exchange and diffusion of important safety-relevant ideas. While I don't work on interpretability per se, I see similar things happening with value learning / inverse reinforcement learning approaches to alignment.

Fascinating evidence!

I suspect this may be because RLHF elicits a singular scale of "goodness" judgements from humans, instead of a plurality of "goodness-of-a-kind" judgements. One way to interpret language models is as *mixtures* of conversational agents: they first sample some conversational goal, then some policy over words, conditioned on that goal:
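One way to write this mixture down, using notation assumed here for illustration ($g$ for a conversational goal, $w_{1:T}$ for the sequence of words), is:

$$P(w_{1:T}) = \sum_{g} P(g) \prod_{t=1}^{T} P(w_t \mid w_{1:t-1}, g)$$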

On this interpretation, what RL from human feedback does is shift/concentrate the distribution over conversational goals into a smaller range: the range of goals consistent with human feedback so far. And if humans are asked to give only a singular "goodness" rating, the distribution will shift towards only goals that do well on those ratings - perhaps dramatically so! We lose goal diversity, which means less gibberish, but also less of the plurality of realistic human goals.

If the above is true, one corollary is that we should expect to see less mode collapse if one finetunes a language model on ratings elicited using a diversity of instructions (e.g. is this completion interesting? helpful? accurate?), and perhaps use some kind of imitation-learning inspired objective to mimic that distribution, rather than PPO (which is meant to only optimize for a singular reward function instead of a distribution over reward functions).
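As a toy illustration of this corollary (not evidence for it), the sketch below compares two ways of updating a distribution over goals. It assumes the idealized KL-regularized optimum, in which the tuned distribution is proportional to the prior times `exp(reward / beta)`. The goal names, rating values, and `beta` are all invented for the example.

```python
import math

def normalize(weights):
    total = sum(weights.values())
    return {k: v / total for k, v in weights.items()}

def entropy(dist):
    return -sum(p * math.log(p) for p in dist.values() if p > 0)

def tilt(prior, reward, beta):
    """Idealized KL-regularized optimum: p(g) proportional to prior(g) * exp(reward(g) / beta)."""
    return normalize({g: prior[g] * math.exp(reward[g] / beta) for g in prior})

# Hypothetical goals and ratings, invented purely for illustration.
prior = {"inform": 0.25, "entertain": 0.25, "persuade": 0.25, "ramble": 0.25}
ratings = {
    "helpful":     {"inform": 1.0, "entertain": 0.2, "persuade": 0.4, "ramble": 0.0},
    "interesting": {"inform": 0.4, "entertain": 1.0, "persuade": 0.5, "ramble": 0.1},
    "accurate":    {"inform": 0.9, "entertain": 0.3, "persuade": 0.2, "ramble": 0.0},
}
beta = 0.1

# Single "goodness" scale: average the ratings into one reward, then tilt once.
single = {g: sum(r[g] for r in ratings.values()) / len(ratings) for g in prior}
collapsed = tilt(prior, single, beta)

# Plural alternative: tilt separately per instruction, then mix the results uniformly.
per_instruction = [tilt(prior, r, beta) for r in ratings.values()]
plural = normalize({g: sum(d[g] for d in per_instruction) for g in prior})

print(entropy(prior), entropy(plural), entropy(collapsed))
```

With these made-up numbers the single-reward distribution ends up with lower entropy over goals than the mixture over per-instruction optima, which is the qualitative pattern the corollary predicts. Real finetuning dynamics are of course far more complicated than this closed form.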

Apologies for the belated reply.

Yes, the summary you gave above checks out with what I took away from your post. I think it sounds good on a high level, but still too vague / high-level for me to say much in more detail. Values/ethics are definitely a system (e.g., one might think that morality was evolved by humans for the purposes of co-operation), but at the end of the day you're going to have to make some concrete hypothesis about what that system is in order to make progress. Contractualism is one such concrete hypothesis, and folding ethics under the broader scope of normative reasoning is another way to understand the underlying logic of ethical reasoning. Moral naturalism is another way of going "beyond human values", because it argues that statements about ethics can be reduced to statements about the natural world.

Hopefully this is helpful food for thought!

Hmm, I'm not sure I fully understand the concept of "X statements" you're trying to introduce, though it does feel similar in some ways to contractualist reasoning. Since the concept is still pretty vague to me, I don't feel like I can say much about it, beyond mentioning several ideas / concepts that might be related:

- Immanent critique (a way of pointing out the contradictions in existing systems / rules)

- Reasons for action (especially justificatory reasons)

- Moral naturalism (the meta-ethical position that moral statements are statements about the natural world)

Because the rules are meant for humans, with our habits and morals and limitations, and our explicit understanding of them only works because they operate in an ecosystem full of other humans. I think our rules/norms would fail to work if we tried to port them to a society of octopuses, even if those octopuses were to observe humans to try to improve their understanding of the object-level impact of the rules.

I think there's something to this, but I think perhaps it only applies strongly if and when most of the economy is run by or delegated to AI services? My intuition is that for the near-to-medium term, AI systems will mostly be used to aid / augment humans in existing tasks and services (e.g. the list in the section on Designing roles and norms), for which we can either use existing laws and norms, or extensions of them. If we are successful in applying that alignment approach in the near-to-medium term, as well as in solving the associated governance problems, then it seems to me that we can much more carefully control the transition to a mostly-automated economy as well, giving us leeway to gradually adjust our norms and laws.

No doubt, that's a big "if". If the transition to a mostly/fully-automated economy is sharper than laid out above, then I think your concerns about norm/contract learning are very relevant (but also that the preference-based alternative is more difficult still). And if we did end up with a single actor like OpenAI building transformative AI before everyone else, my recommendation would still be to adopt something like the pluralistic approach outlined here, perhaps by gradually introducing AI systems into well-understood and well-governed social and institutional roles, rather than initiating a sharp shift to a fully-automated economy.

While listening to the latest Inside View podcast, it occurred to me that this perspective on AI safety has some natural advantages when translating into regulation that present governments might be able to implement to prepare for the future. If AI governance people aren't already thinking about this, maybe bother some / convince people in this comment section to bother some?

Yes, it seems like a number of AI policy people at least noticed the tweet I made about this talk! If you have suggestions for who in particular I should get the attention of, do let me know.

But here I would expect people to reasonably disagree on whether an AI system or community of systems has made a good decision, and therefore it seems harder to ever fully trust machines to make decisions at this level.

I hope the above is at least partially addressed by the last paragraph of the section on Reverse Engineering Roles and Norms! I agree with the worry, and to address it I think we could design systems that mostly just propose revisions or extrapolations to our current rules, or highlight inconsistencies among them (e.g. conflicting laws), thereby aiding a collective-intelligence-like democratic process of updating our rules and norms (of the form described in the Collective Governance section), where AI systems facilitate but do not enact normative change.

Note that if AI systems represent uncertainty about the "correct" norms, this will often lead them to make queries to humans about how to extend/update the norms (a la active learning), instead of immediately acting under their best estimate of the extended norms. This could be further augmented by a meta-norm of (generally) requiring consent / approval from the relevant human decision-making body before revising or acting under new rules.
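A minimal sketch of that query-under-uncertainty behavior, assuming a posterior over a small set of candidate norms. The two norms, their probabilities, and the threshold are hypothetical placeholders rather than a proposal for how norms should actually be represented.

```python
def permitted_probability(action, posterior):
    """Posterior probability that `action` is permitted, summed over candidate norms."""
    return sum(prob for norm, prob in posterior if norm(action))

def decide(action, posterior, threshold=0.95):
    """Act when confident the action is permitted, refrain when confident it is not, else ask."""
    p = permitted_probability(action, posterior)
    if p >= threshold:
        return "act"
    if p <= 1 - threshold:
        return "refrain"
    return "query_human"

# Two hypothetical readings of a data-sharing rule.
def consent_suffices(action):
    return action["consent"]

def internal_only(action):
    return action["consent"] and not action["external"]

posterior = [(consent_suffices, 0.6), (internal_only, 0.4)]

print(decide({"consent": True, "external": False}, posterior))   # both readings permit it
print(decide({"consent": True, "external": True}, posterior))    # the readings disagree
print(decide({"consent": False, "external": True}, posterior))   # neither reading permits it
```

The meta-norm mentioned above would correspond to routing the `query_human` branch to the relevant decision-making body, and only updating `posterior` once it has responded.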

All of this is to say, it does feel somewhat unavoidable to me to advance some kind of claim about the precise contents of a superior moral framework for what systems ought to do, beyond just matching what people do (in Russell's case) or what society does (in this post's case).

I'm not suggesting that AI systems should simply do what society does! Rather, the point of the contractualist framing is that AI systems should be aligned (in the limit) to what society would agree to after rational / mutually justifiable collective deliberation.

Current democratic systems approximate this ideal to a very rough degree, and I guess I hold out hope that under the right kinds of epistemic and social conditions (freedom of expression, equality of interlocutors, non-deluded thinking), the kind of "moral progress" we instinctively view as desirable will emerge from that form of collective deliberation. So my hope is that rather than specify in great degree what the contents of "superior moral theory" might look like, all we need to align AI systems with is the underlying meta-ethical framework that enables moral change. See Anderson on How Common Sense Can Be Self-Critical for a good discussion of what I think this meta-ethical framework looks like.

Hmm, I'm confused --- I don't think I said very much about inner alignment, and I hope to have implied that inner alignment is still important! The talk is primarily a critique of existing approaches to outer alignment (e.g. why human preferences alone shouldn't be the alignment target) and is a critique of inner alignment work only insofar as it assumes that defining the right training objective / base objective is not a crucial problem as well.

Maybe a more refined version of the disagreement is about how crucial inner alignment is, vs. defining the right target for outer alignment? I happen to think the latter is more crucial to work on, and perhaps that comes through somewhat in the talk (though it's not a claim I wanted to strongly defend), whereas you seem to think inner alignment / preventing deceptive alignment is more crucial. Or perhaps both of them are crucial / necessary, so the question becomes where and how to prioritize resources, and you would prioritize inner alignment?

FWIW, I'm less concerned about inner alignment because:

- I'm more optimistic about model-based planning approaches that actually optimize for the desired objective in the limit of large compute (so methods more like neurally-guided MCTS, a.k.a. AlphaGo, and less like offline reinforcement learning)

- I'm more optimistic about methods for directly learning human interpretable, modular, (neuro)symbolic world models that we can understand, verify, and edit, and that are still highly capable. This reduces the need for approaches like Eliciting Latent Knowledge, and avoids a number of pathways toward inner misalignment.

I'm aware that these are minority views in the alignment community -- I work a lot more on neurosymbolic and probabilistic programming methods, and think they have a clear path to scaling and providing economic value, which probably explains the difference.

Agreed that interpreting the law is hard, and the "literal" interpretation is not enough! Hence the need to represent normative uncertainty (e.g. a distribution over multiple formal interpretations of a natural language statement + having uncertainty over what terms in the contract are missing), which I see the section on "Inferring roles and norms" as addressing in ways that go beyond existing "reward modeling" approaches.

Let's call the above "wilful compliance", and the fully-fledged reverse engineering approach "enlightened compliance". It seems like where we might disagree is how far "wilful compliance" alone will take us. My intuition is that essentially all uses of AI will have role-specific / use-specific restrictions on power-seeking associated with them, and these restrictions can be learned (from e.g. human behavior and normative judgements, incl. universalization reasoning) as implied terms in the contracts that govern those uses. This would avoid the computational complexity of literally learning everyone's preferences / values, and instead leverage the simpler and more politically feasible mechanisms that humans use to cooperate with each other and govern the commons.

I can link to a few papers later that make me more optimistic about something like the approach above!

On the contrary, I think there exist large, complex, symbolic models of the world that are far more interpretable and useful than learned neural models, even if too complex for any single individual to understand, e.g.:

- The Unity game engine (a configurable model of the physical world)

- Pixar's RenderMan renderer (a model of optics and image formation)

- The GLEAMviz epidemic simulator (a model of socio-biological disease spread at the civilizational scale)

Humans are capable of designing and building these models, and learning how to build/write them as they improve their understanding of the world. The difficult part is how we can recapitulate that ability -- program synthesis is only in its infancy in its ability to do so, but IMO contemporary end-to-end deep learning methods seem unlikely to deliver here if we want both interpretability and usefulness.

Adding some thoughts as someone who works on probabilistic programming, and has colleagues who work on neurosymbolic approaches to program synthesis:

- I think a lot of Bayes net structure learning / program synthesis approaches (Bayesian or otherwise) have the issue of uninformative variable names, but I do think it's possible to distinguish between structural interpretability and naming interpretability, as others have noted.

- In practice, most neural or Bayesian program synthesis applications I'm aware of exhibit something like structural interpretability, because the hypothesis space they live in is designed by modelers to have human-interpretable semantic structure. Two good examples of this are the prior over programs that generate handwritten characters in Lake et al (2015), and the PCFG prior over Gaussian Process covariance kernels in Saad et al (2019). See e.g. Figure 6 on how you perform analysis on programs generated by this prior, to determine whether a particular timeseries is likely to be periodic, has a linear trend, has a changepoint, etc.

- Regarding uninformative variable names, there's ongoing work on using natural language to guide program synthesis, so as to come up with more language-like conceptual abstractions (e.g. Wong et al 2021). I wouldn't be surprised if these approaches could also be extended to come up with informative variable and function names / comments. A related line of work is that people are starting to use LLMs to deobfuscate code (e.g. Lachaux et al 2021), and I expect the same techniques will work for synthesized code.
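To illustrate what structural interpretability buys you in the second point above, here is a toy sketch of a grammar over kernel expressions plus a structural query on the sampled programs. It is not the grammar or inference procedure from Saad et al (2019): the base kernel names, production probabilities, and helper functions are assumptions, and the samples come from the prior rather than a posterior.

```python
import random

# Illustrative base kernels; the actual grammar in the cited work may differ.
BASE_KERNELS = ["Linear", "Periodic", "RBF", "WhiteNoise"]

def sample_kernel(rng, depth=0, max_depth=3):
    """Sample from a toy PCFG: K -> base | (K + K) | (K * K)."""
    if depth >= max_depth or rng.random() < 0.5:
        return rng.choice(BASE_KERNELS)
    op = rng.choice(["+", "*"])
    left = sample_kernel(rng, depth + 1, max_depth)
    right = sample_kernel(rng, depth + 1, max_depth)
    return (op, left, right)

def contains(expr, base):
    """Structural query: does the expression mention the given base kernel?"""
    if isinstance(expr, str):
        return expr == base
    _, left, right = expr
    return contains(left, base) or contains(right, base)

def show(expr):
    """Render an expression tree as a readable string."""
    if isinstance(expr, str):
        return expr
    op, left, right = expr
    return f"({show(left)} {op} {show(right)})"

rng = random.Random(0)
samples = [sample_kernel(rng) for _ in range(1000)]
frac_periodic = sum(contains(k, "Periodic") for k in samples) / len(samples)
print(show(samples[0]), frac_periodic)
```

Run over posterior samples instead of prior samples, the same `contains` query would estimate the probability that a time series has a periodic component. That question is easy to ask of a symbolic program and hard to ask of a weight matrix.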

For these reasons, I'm more optimistic about the interpretability prospects of learning approaches that generate models or code that look like traditional symbolic programs, relative to end-to-end deep learning approaches. (Note that neural networks are also "symbolic programs", just written with a more restricted set of [differentiable] primitives, and typically staying within a set of widely used program structures [i.e. neural architectures]).

The more difficult question IMO is whether this interpretability comes at the cost of capabilities. I think this is possibly true in some domains (e.g. learning low-level visual patterns and cues), but not others (e.g. learning the compositional structure of e.g. furniture-like objects).

I haven't seen compelling (to me) examples of people going successfully from psychology to algorithms without stopping to consider anything whatsoever about how the brain is constructed.

Some recent examples, off the top of my head!

- Jain, Y. R., Callaway, F., Griffiths, T. L., Dayan, P., Krueger, P. M., & Lieder, F. (2021). A computational process-tracing method for measuring people’s planning strategies and how they change over time.

- Dasgupta, I., Schulz, E., Tenenbaum, J. B., & Gershman, S. J. (2020). A theory of learning to infer. Psychological review, 127(3), 412.

- Harrison, P., Marjieh, R., Adolfi, F., van Rijn, P., Anglada-Tort, M., Tchernichovski, O., ... & Jacoby, N. (2020). Gibbs Sampling with People. Advances in Neural Information Processing Systems, 33.

One reason it's tricky to make sense of psychology data on its own, I think, is the interplay between (1) learning algorithms, (2) learned content (a.k.a. "trained models"), (3) innate hardwired behaviors (mainly in the brainstem & hypothalamus). What you especially want for AGI is to learn about #1, but experiments on adults are dominated by #2, and experiments on infants are dominated by #3, I think.

I guess this depends on how much you think we can make progress towards AGI by learning what's innate / hardwired / learned at an early age in humans and building that into AI systems, vs. taking more of a "learn everything" approach! I personally think there may still be a lot of interesting human-like thinking and problem solving strategies that we haven't figured out how to implement as algorithms yet (e.g. how humans learn to program, and edit + modify programs and libraries to make them better over time), and that adult and child studies would be useful for characterizing what we might even be aiming for, even if ultimately the solution is to use some kind of generic learning algorithm to reproduce it. I also think there's a fruitful in-between of (1) and (3), which is to ask, "What are the inductive biases that guide human learning?", which I think you can make a lot of headway on without getting to the neural level.

This was a great read! I wonder how much you're committed to "brain-inspired" vs "mind-inspired" AGI, given that the approach to "understanding the human brain" you outline seems to correspond to Marr's computational and algorithmic levels of analysis, as opposed to the implementational level (see link for reference). In which case, some would argue, you don't necessarily have to do too much neuroscience to reverse engineer human intelligence. A lot can be gleaned by doing classic psychological experiments to validate the functional roles of various aspects of human intelligence, before examining in more detail their algorithms and data structures (perhaps this time with the help of brain imaging, but also carefully designed experiments that elicit human problem solving heuristics, search strategies, and learning curves).

I ask because I think "brain-inspired" often gets immediately associated with neural networks, and not say, methods for fast and approximate Bayesian inference (MCMC, particle filters), which are less the AI zeitgeist nowadays, but still very much how cognitive scientists understand the human mind and its capabilities.

Yup! And yeah I think those are open research questions -- inference over certain kinds of non-parametric Bayesian models is tractable, but not in general. What makes me optimistic is that humans in similar cultures have similar priors over vast spaces of goals, and seem to do inference over that vast space in a fairly tractable manner. I think things get harder when you can't assume shared priors over goal structure or task structure, both for humans and machines.

Belatedly reading this and have a lot of thoughts about the connection between this issue and robustness to ontological shifts (which I've written a bit about here), but I wanted to share a paper which takes a very small step in addressing some of these questions by detecting when the human's world model may diverge from a robot's world model, and using that as an explanation for why a human might seem to be acting in strange or counter-productive ways:

Where Do You Think You're Going?: Inferring Beliefs about Dynamics from Behavior

Siddharth Reddy, Anca D. Dragan, Sergey Levine

https://arxiv.org/abs/1805.08010

Inferring intent from observed behavior has been studied extensively within the frameworks of Bayesian inverse planning and inverse reinforcement learning. These methods infer a goal or reward function that best explains the actions of the observed agent, typically a human demonstrator. Another agent can use this inferred intent to predict, imitate, or assist the human user. However, a central assumption in inverse reinforcement learning is that the demonstrator is close to optimal. While models of suboptimal behavior exist, they typically assume that suboptimal actions are the result of some type of random noise or a known cognitive bias, like temporal inconsistency. In this paper, we take an alternative approach, and model suboptimal behavior as the result of internal model misspecification: the reason that user actions might deviate from near-optimal actions is that the user has an incorrect set of beliefs about the rules -- the dynamics -- governing how actions affect the environment. Our insight is that while demonstrated actions may be suboptimal in the real world, they may actually be near-optimal with respect to the user's internal model of the dynamics. By estimating these internal beliefs from observed behavior, we arrive at a new method for inferring intent. We demonstrate in simulation and in a user study with 12 participants that this approach enables us to more accurately model human intent, and can be used in a variety of applications, including offering assistance in a shared autonomy framework and inferring human preferences.
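The core idea in that abstract can be shown with a toy example. This is not the paper's algorithm: it assumes a known goal, a 1-D world, only two candidate internal models, and a Boltzmann-rational user, and every name and parameter below is made up for illustration.

```python
import math

ACTIONS = {"left": -1, "right": +1}

# Candidate internal dynamics: how the user believes an action changes position.
MODELS = {
    "normal": lambda pos, a: pos + ACTIONS[a],
    "inverted": lambda pos, a: pos - ACTIONS[a],
}

def action_likelihood(action, pos, goal, model, rationality=2.0):
    """Boltzmann-rational choice under the user's believed dynamics."""
    def score(a):
        return -abs(goal - model(pos, a))  # closer to the goal is better
    weights = {a: math.exp(rationality * score(a)) for a in ACTIONS}
    return weights[action] / sum(weights.values())

def posterior_over_models(observations, goal):
    """Bayes rule over candidate internal models, given (position, action) pairs."""
    unnorm = {}
    for name, model in MODELS.items():
        likelihood = 1.0
        for pos, action in observations:
            likelihood *= action_likelihood(action, pos, goal, model)
        unnorm[name] = likelihood / len(MODELS)  # uniform prior over models
    total = sum(unnorm.values())
    return {name: w / total for name, w in unnorm.items()}

# The goal is to the right, yet the user keeps pressing "left" and drifting away.
observations = [(0, "left"), (-1, "left"), (-2, "left")]
post = posterior_over_models(observations, goal=5)
print(post)
```

Under a noise-only account these three actions look irrational. Under the misspecified-dynamics account they are near-optimal for a user who believes the controls are inverted, and the posterior concentrates on that hypothesis.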

Belatedly seeing this post, but I wanted to note that probabilistic programming languages (PPLs) are centered around this basic idea! Some useful links and introductions to PPLs as a whole:

- Probabilistic models of cognition (web book)

- WebPPL

- An introduction to models in Pyro

- Introduction to Modeling in Gen

And here's a really fascinating paper by some of my colleagues that tries to model causal interventions that go beyond Pearl's do-operator, by formalizing causal interventions as (probabilistic) program transformations:

Bayesian causal inference via probabilistic program synthesis

Sam Witty, Alexander Lew, David Jensen, Vikash Mansinghka

https://arxiv.org/abs/1910.14124

Causal inference can be formalized as Bayesian inference that combines a prior distribution over causal models and likelihoods that account for both observations and interventions. We show that it is possible to implement this approach using a sufficiently expressive probabilistic programming language. Priors are represented using probabilistic programs that generate source code in a domain specific language. Interventions are represented using probabilistic programs that edit this source code to modify the original generative process. This approach makes it straightforward to incorporate data from atomic interventions, as well as shift interventions, variance-scaling interventions, and other interventions that modify causal structure. This approach also enables the use of general-purpose inference machinery for probabilistic programs to infer probable causal structures and parameters from data. This abstract describes a prototype of this approach in the Gen probabilistic programming language.
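To give a flavor of "interventions as program transformations", here is a small plain-Python sketch. It does not use Gen or the paper's domain-specific language: the model representation (a dict of mechanisms) and the `do` and `shift` helpers are assumptions made for illustration.

```python
import random

# A model maps each variable to (parents, mechanism), listed in causal order.
base_model = {
    "x": ((), lambda rng: rng.gauss(0, 1)),
    "y": (("x",), lambda rng, x: 2 * x + rng.gauss(0, 0.1)),
}

def sample(model, rng):
    """Run the generative process once, evaluating mechanisms in order."""
    values = {}
    for name, (parents, mechanism) in model.items():
        values[name] = mechanism(rng, *(values[p] for p in parents))
    return values

def do(model, name, value):
    """Atomic intervention: replace the mechanism with a constant and cut its parents."""
    edited = dict(model)
    edited[name] = ((), lambda rng: value)
    return edited

def shift(model, name, delta):
    """Shift intervention: keep the mechanism and parents, add an offset to its output."""
    parents, mechanism = model[name]
    edited = dict(model)
    edited[name] = (parents, lambda rng, *args: mechanism(rng, *args) + delta)
    return edited

def mean_y(model, n=2000, seed=0):
    rng = random.Random(seed)
    return sum(sample(model, rng)["y"] for _ in range(n)) / n

print(mean_y(do(base_model, "x", 1.0)))     # close to 2
print(mean_y(shift(base_model, "x", 3.0)))  # close to 6
```

Both interventions are ordinary functions from models to models, so a shift intervention needs no more machinery than an atomic one. That is the sense in which this framing goes beyond the do-operator.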

Replying to the specific comments:

This still seems like a fair way to evaluate what the alignment community thinks about, but I think it is going to overestimate how parochial the community is. For example, if you go by "what does Stuart Russell think is important", I expect you get a very different view on the field, much of which won't be in the Alignment Newsletter.

I agree. I intended to gesture a little bit at this when I mentioned that "Until more recently, It’s also been excluded and not taken very seriously within traditional academia", because I think one source of greater diversity has been the uptake of AI alignment in traditional academia, leading to slightly more inter-disciplinary work, as well as a greater diversity of AI approaches. I happen to think that CHAI's research publications page reflects more of the diversity of approaches I would like to see, and wish that more new researchers were aware of them (as opposed to the advice currently given by, e.g., 80K, which is to skill up in deep learning and deep RL).

Reward functions are typically allowed to depend on actions, and the alignment community is particularly likely to use reward functions on entire trajectories, which can express arbitrary views (though I agree that many views are not "naturally" expressed in this framework).

Yup, I think purely at the level of expressivity, reward functions on a sufficiently extended state space can express basically anything you want. That still doesn't resolve several worries I have though:

- Talking about all human motivation using "rewards" tends to promote certain (behaviorist / Humean) patterns of thought over others. In particular I think it tends to obscure the logical and hierarchical structure of many aspects of human motivation -- e.g., that many of our goals are actually instrumental sub-goals in higher-level plans, and that we can cite reasons for believing, wanting, or planning to do a certain thing. I would prefer if people used terms like "reasons for action" and "motivational states", rather than simply "reward functions".

- Even if reward functions can express everything you want them to, that doesn't mean they'll be able to learn everything you want them to, or generalize in the appropriate ways. E.g., I think deep RL agents are unlikely to learn the concept of "promises" in a way that generalizes robustly, unless you give them some kind of inductive bias that leads them to favor structures like LTL formulas. (This is a related worry to Stuart Armstrong's no-free-lunch theorem.) At some point I intend to write a longer post about this worry.

Of course, you could just define reward functions over logical formulas and the like, and do something like reward modeling via program induction, but at that point you're no longer using "reward" in the way it's typically understood. (This is similar to the move, made by some Humeans, that reason can only be motivating because we desire to follow reason. That's fair enough, but misses the point of calling certain kinds of motivations "reasons" at all.)
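As a rough sketch of what a reward defined over a logical formula might look like, the snippet below scores whole trajectories against the LTL-style property "every promise is eventually followed by a delivery", checked on finite traces. The event labels and function names are hypothetical, and a real treatment would need to match each promise to its own delivery.

```python
def promises_kept(trajectory):
    """Check G(promise -> F deliver) on a finite trajectory of event sets."""
    for t, events in enumerate(trajectory):
        if "promise" in events and not any("deliver" in later for later in trajectory[t:]):
            return False
    return True

def trajectory_reward(trajectory):
    """Reward over the whole history: depends on structure, not just the last state."""
    return 1.0 if promises_kept(trajectory) else -1.0

def step_reward(events):
    """A per-step reward on the same events, which only sees the current step."""
    return 1.0 if "deliver" in events else 0.0

kept = [{"promise"}, set(), {"deliver"}]
broken = [{"promise"}, set(), set()]
unprompted = [set(), set(), {"deliver"}]

print(trajectory_reward(kept), trajectory_reward(broken))
print(sum(map(step_reward, kept)), sum(map(step_reward, unprompted)))
```

The per-step reward cannot tell a kept promise from an unprompted delivery, while the trajectory-level check can. But at that point the object doing the work is a temporal formula, which is the sense in which "reward" is no longer being used in its usual way.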

(I'd cite deep learning generally, not just deep RL.)

You're right, that's what I meant, and have updated the post accordingly.

If you start with an uninformative prior and no other evidence, it seems like you should be focusing a lot of attention on the paradigm that is most successful / popular. So why is this influence "undue"?

I agree that if you start with a very uninformative prior, focusing on the most recently successful paradigm makes sense. But once you take into account slightly more information, I think there's reason to think the AI alignment community is currently overly biased towards deep learning:

- The trend-following behavior in most scientific & engineering fields, including AI, should make us skeptical that currently popular approaches are popular for the right reasons. In the 80s everyone was really excited about expert systems and the 5th generation project. About 10 years ago, Bayesian non-parametrics were really popular. Now deep learning is popular. Knowing this history suggests that we should be a little more careful about joining the bandwagon. Unfortunately, a lot of us joining the field now don't really know this history, nor are we necessarily exposed to the richness and breadth of older approaches before diving headfirst into deep learning (I only recognized this after starting my PhD and starting to learn more about symbolic AI planning and programming languages research).

- We have extra reason to be cautious about deep learning being popular for the wrong reasons, given that many AI researchers say that we should be focusing less on machine learning while at the same time publishing heavily in machine learning. For example, at the AAAI 2019 informal debate, the majority of audience members voted against the proposition that "The AI community today should continue to focus mostly on ML methods". At some point during the debate, it was noted that despite the opposition to ML, most papers at AAAI that year were about ML, and it was suggested, to some laughter, that people were publishing in ML simply because that's what would get them published.

- The diversity of expert opinion about whether deep learning will get us to AGI doesn't feel adequately reflected in the current AI alignment community. Not everyone thinks the Bitter Lesson is quite the lesson we have to learn. A lot of prominent researchers like Stuart Russell, Gary Marcus, and Josh Tenenbaum think that we need to re-invigorate symbolic and Bayesian approaches (perhaps through hybrid neuro-symbolic methods), and if you watch the 2019 Turing Award keynotes by Hinton and Bengio, both of them emphasize the importance of having structured generative models of the world (they just happen to think it can be achieved by building the right inductive biases into neural networks). In contrast, outside of MIRI, it feels like a lot of the alignment community anchors towards the work that's coming out of OpenAI and DeepMind.

My own view is that the success of deep learning should be taken in perspective. It's good for certain things, and certain high-data training regimes, and will remain good for those use cases. But in a lot of other use cases, where we might care a lot about sample efficiency and rapid + robust generalizability, most of the recent progress has, in my view, been made by cleverly integrating symbolic approaches with neural networks (even AlphaGo can be seen as a version of this, if one views MCTS as symbolic). I expect future AI advances to occur in a similar vein, and for me that lowers the relevance of ensuring that end-to-end DL approaches are safe and robust.

Thanks for this summary. Just a few things I would change:

- "Deep learning" instead of "deep reinforcement learning" at the end of the 1st paragraph -- this is what I meant to say, and I'll update the original post accordingly.

- I'd replace "nice" with "right" in the 2nd paragraph.

- "certain interpretations of Confucian philosophy" instead of "Confucian philosophy", "the dominant approach in Western philosophy" instead of "Western philosophy" -- I think it's important not to give the impression that either of these is a monolith.

Thanks for these thoughts! I'll respond to your disagreement with the framework here, and to the specific comments in a separate reply.

First, with respect to my view about the sources of AI risk, the characterization you've put forth isn't quite accurate (though it's a fair guess, since I wasn't very explicit about it). In particular:

- These days I'm actually more worried by structural risks and multi-multi alignment risks, which may be better addressed by AI governance than technical research per se. If we do reach super-intelligence, I think it's more likely to be along the lines of CAIS than the kind of agential super-intelligence pictured by Bostrom. That said, I still think that technical AI alignment is important to get right, even in a CAIS-style future, hence this talk -- I see it as necessary, but not sufficient.

- I don't think that powerful AI systems will necessarily be optimizing for anything (at least not in the agential sense suggested by Superintelligence). In fact, I think we should actively avoid building globally optimizing agents if possible --- I think optimization is the wrong framework for thinking about "rationality" or "human values", especially in multi-agent contexts. I think it's still non-trivially likely that we'll end up building AGI that's optimizing in some way, just because that's the understanding of "rationality" or "solving a task" that's so predominant within AI research. But in my view, that's precisely the problem, and my argument for philosophical pluralism is in part because it offers theories of rationality, value, and normativity that aren't about "maximizing the good".

- Regarding "the good", the primary worry I was trying to raise in this talk has less to do with "ethical error", which can arise due to e.g. Goodhart's curse, and more to do with meta-ethical and meta-normative error, i.e., that the formal concepts and frameworks that the AI alignment community has typically used to understand fuzzy terms like "value", "rationality" and "normativity" might be off-the-mark.

For me, this sort of error is importantly different from the kind of error considered by inner and outer alignment. It's often implicit in the mathematical foundations of decision theory and ML theory itself, and tends to go un-noticed. For example, once we define rationality as "maximize expected future reward", or assume that human behavior reflects reward-rational implicit choice, we're already making substantive commitments about the nature of "value" and "rationality" that preclude other plausible characterizations of these concepts, some of which I've highlighted in the talk. Of course, there has been plenty of discussion about whether these formalisms are in fact the right ones -- and I think MIRI-style research has been especially valuable for clarifying our concepts of "agency" and "epistemic rationality" -- but I've yet to see some of these alternative conceptions of "value" and "practical rationality" discussed heavily in AI alignment spaces.

Second, with respect to your characterization of AI development and AI risk, I believe that points 1 and 2 above suggest that our views don't actually diverge that much. My worry is that the difficulty of building machines that "follow common sense" is on the same order of magnitude as "defining the good", and just as beset by the meta-ethical and meta-normative worries I've raised above. After all, "common sense" is going to include "common social sense" and "common moral sense", and this kind of knowledge is irreducibly normative. (In fact, I think there's good reason to think that all knowledge and inquiry is irreducibly normative, but that's a stronger and more contentious claim.)

Furthermore, given that AI is already deployed in social domains which tend to have open scope (personal assistants, collaborative and caretaking robots, legal AI, etc.), I think it's a non-trivial possibility that we'll end up having powerful misaligned AI applied to those contexts, and that it will either violate its intended scope, or require wide scope to function well (e.g., personal assistants). No doubt, "follow common sense" is a lower bar than "solve moral philosophy", but on the view that philosophy is just common sense applied to itself, solving "common sense" is already most of the problem. For that reason, I think it deserves a plurality of disciplinary* and philosophical perspectives as well.

(*On this note, I think cognitive science has a lot to offer with regard to understanding "common sense". Perhaps I am overly partial given that I am in a computational cognitive science lab, but it does feel like there's insufficient awareness or discussion of cognitive scientific research within AI alignment spaces, despite its [IMO clearcut] relevance.)

In exchange for the mess, we get a lot closer to the structure of what humans think when they imagine the goal of "doing good." Humans strive towards such abstract goals by having a vague notion of what it would look and feel like, and by breaking down those goals into more concrete sub-tasks. This encodes a pattern of preferences over universe-histories that treats some temporally extended patterns as "states."

Thank you for writing this post! I've had very similar thoughts for the past year or so, and I think the quote above is exactly right. IMO, part of the alignment problem involves representational alignment -- i.e., ensuring that AI systems accurately model both the abstract concepts we use to understand the world, as well as the abstract tasks, goals, and "reasons for acting" that humans take as instrumental or final ends. Perhaps you're already familiar with Bratman's work on Intentions, Plans, & Practical Reason, but to the extent that "intentions" feature heavily in human mental life as the reasons we cite for why we do things, developing AI models of human intention feels very important.

As it happens, one of the next research projects I'll be embarking on is modeling humans as hierarchical planners (most likely in the vein of Hierarchical Task & Motion Planning in the Now by Kaelbling & Lozano-Perez) in order to do Bayesian inference over their goals and sub-goals -- would be happy to chat more about it if you'd like!

Thanks for writing up this post! It's really similar in spirit to some research I've been working on with others, which you can find on the ArXiv here: https://arxiv.org/abs/2006.07532 We also model bounded goal-directed agents by assuming that the agent is running some algorithm given bounded compute, but our approach differs in the following ways:

- We don't attempt to compute full policies over the state space, since this is generally intractable, and also cognitively implausible, at least for agents like ourselves. Instead, we compute (partial) plans from initial states to goal states.

- Rather than using RL algorithms like value iteration or SARSA, we assume that agents deploy some form of heuristic-guided model-based search, e.g. A*, MCTS, with a bounded computational budget. If search terminates before the goal is reached, then agents pursue a partial plan towards a promising intermediate state found during search.

- "Promisingness" is dependent on the search heuristic used -- a poor search heuristic will lead to highly non-optimal partial plans, whereas a good search heuristic will lead to partial plans that make significant progress to the goal, even if the goal itself isn't reached.

- Separating out the search heuristic from the search budget gives us at least two different notions of agent-boundedness, roughly corresponding to competence vs. effort. An agent may be really good at search, but may not spend a large computational budget on it, or they may be bad at search, but spend a lot of time searching, and still get the right answer.
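
To make the heuristic vs. budget distinction concrete, here is a minimal sketch of budget-bounded A* on a small grid. It is only an illustration of the idea in the bullets above, not the implementation from the paper: the names `bounded_astar`, `grid_neighbors`, `manhattan`, and `zero_heuristic` are hypothetical, the grid world and unit step costs are assumptions, and "most promising" is taken here to mean the expanded node with the lowest heuristic value.

```python
import heapq


def bounded_astar(start, goal, neighbors, heuristic, budget):
    """A* search that expands at most `budget` nodes.

    Returns (plan, reached_goal). If the budget runs out first, `plan` is a
    partial plan to the most promising node expanded so far (assumption:
    "promising" = lowest heuristic value among expanded nodes).
    """
    frontier = [(heuristic(start, goal), 0, start)]  # entries are (f, g, node)
    parent = {start: None}
    cost = {start: 0}
    best = start
    expanded = 0

    while frontier and expanded < budget:
        _, g, node = heapq.heappop(frontier)
        if g > cost[node]:
            continue  # stale queue entry; a cheaper path was already found
        expanded += 1
        if heuristic(node, goal) < heuristic(best, goal):
            best = node
        if node == goal:
            best = node
            break
        for nxt in neighbors(node):
            new_g = g + 1  # assumption: every step costs 1
            if nxt not in cost or new_g < cost[nxt]:
                cost[nxt] = new_g
                parent[nxt] = node
                heapq.heappush(frontier, (new_g + heuristic(nxt, goal), new_g, nxt))

    # Walk parent pointers back from the chosen node to recover the plan.
    plan = []
    node = best
    while node is not None:
        plan.append(node)
        node = parent[node]
    plan.reverse()
    return plan, best == goal


def grid_neighbors(size):
    """4-connected moves on an obstacle-free size x size grid."""
    def neighbors(pos):
        x, y = pos
        steps = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
        return [(a, b) for a, b in steps if 0 <= a < size and 0 <= b < size]
    return neighbors


def manhattan(pos, goal):
    """An informative heuristic (higher 'competence')."""
    return abs(pos[0] - goal[0]) + abs(pos[1] - goal[1])


def zero_heuristic(pos, goal):
    """An uninformative heuristic (lower 'competence')."""
    return 0


if __name__ == "__main__":
    nbrs = grid_neighbors(5)
    start, goal = (0, 0), (4, 4)
    for name, h in [("manhattan", manhattan), ("zero", zero_heuristic)]:
        for budget in (3, 1000):
            plan, done = bounded_astar(start, goal, nbrs, h, budget)
            print(name, budget, done, plan)
```

In this sketch the `heuristic` argument stands in for competence and `budget` for effort: with a small budget, the informative heuristic still yields a partial plan that moves towards the goal, whereas the uninformative one makes no measurable progress, and with a large enough budget both reach the goal.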

The abstract for the paper is below -- hope it's useful to read, and I'd be curious to hear your thoughts:

Online Bayesian Goal Inference for Boundedly-Rational Planning Agents

People routinely infer the goals of others by observing their actions over time. Remarkably, we can do so even when those actions lead to failure, enabling us to assist others when we detect that they might not achieve their goals. How might we endow machines with similar capabilities? Here we present an architecture capable of inferring an agent's goals online from both optimal and non-optimal sequences of actions. Our architecture models agents as boundedly-rational planners that interleave search with execution by replanning, thereby accounting for sub-optimal behavior. These models are specified as probabilistic programs, allowing us to represent and perform efficient Bayesian inference over an agent's goals and internal planning processes. To perform such inference, we develop Sequential Inverse Plan Search (SIPS), a sequential Monte Carlo algorithm that exploits the online replanning assumption of these models, limiting computation by incrementally extending inferred plans as new actions are observed. We present experiments showing that this modeling and inference architecture outperforms Bayesian inverse reinforcement learning baselines, accurately inferring goals from both optimal and non-optimal trajectories involving failure and back-tracking, while generalizing across domains with compositional structure and sparse rewards.

https://arxiv.org/abs/2006.07532