AI Alignment, Philosophical Pluralism, and the Relevance of Non-Western Philosophy

post by xuan · 2021-01-01


This is an extended transcript of the talk I gave at EAGxAsiaPacific 2020. In the talk, I present a somewhat critical take on how AI alignment has grown as a field, and how, from my perspective, it deserves considerably more philosophical and disciplinary diversity than it has enjoyed so far. I'm sharing it here in the hopes of generating discussion about the disciplinary and philosophical paradigms that (I understand) the AI alignment community to be rooted in, and whether or how we should move beyond them. Some sections cover introductory material that most people here are likely to be familiar with, so feel free to skip them.

The Talk

Hey everyone, my name is Xuan (IPA: ɕɥɛn), and I’m a doctoral student at MIT doing cognitive AI research. Specifically, I work on how we can infer the hidden structure of human motivations by modeling humans using probabilistic programs. Today, though, I’ll be talking about something that’s more in the background that informs my work, and that’s about AI alignment, philosophical pluralism, and the relevance of non-Western philosophy.

This talk will cover a lot of ground, so I want to give an overview to keep everyone oriented:

- First, I’ll give a brief introduction to what AI alignment is, and why it likely matters as an effective cause area.

- I’ll then highlight some of the philosophical tendencies of current AI alignment research, and argue that they reflect a relatively narrow set of philosophical views.

- Given that these philosophical views may miss crucial considerations, this situation motivates the need for greater philosophical and disciplinary pluralism.

- And then as a kind of proof by example, I’ll aim to demonstrate how non-Western philosophy might provide insight into several open problems in AI alignment research.

A brief introduction to AI alignment

So what is AI alignment? One way to cash it out is as the project of building intelligent systems that robustly act in our collective interests; in other words, building AI that is aligned with our values. As many people in the EA community have argued, this is a highly impactful cause area if you believe the following:

- AI will determine the future of our civilization, perhaps by replacing humanity as the most intelligent agents on this planet, or by having some other kind of transformative impact, like enabling authoritarian dystopias.

- AI will likely be misaligned with our collective interests by default, perhaps because it’s just very hard to specify what our values are, or because of bad systemic incentives.

- Not only is this problem really difficult to solve, we also cannot delay solving it.

To that last point, basically everyone who works in AI alignment thinks it’s a really daunting technical and philosophical challenge. Human values, whatever they are, are incredibly complex and fragile, and so every seemingly simple solution to aligning superhuman AI is subject to potentially catastrophic loopholes.

I’ll illustrate this by way of this short dialogue between a human and a fictional super-intelligent chatbot called GPT-5, who’s kind of like this genie in a bottle. So you start up this chatbot and you ask:

Human: Dear GPT-5, please make everyone on this planet happy.

GPT-5: Okay, I will place them in stasis and inject heroin so they experience eternal bliss.

H: No no no, please don’t. I mean satisfy their preferences. Not everyone wants heroin.

GPT-5: Alright. But how should I figure out what those preferences are?

H: Just listen to what they say they want! Or infer it from how they act.

GPT-5: Hmm. This person says they can’t bear to hurt animals, but keeps eating meat.

H: Well, do what they would want if they could think longer, or had more willpower!

GPT-5: I extrapolate that they will come to support human extinction to save other species.

H: Actually, just stop.

GPT-5: How do I know if that’s what you really want?

An overview of the field

So that's a taste of the kind of problem we need to solve. Obviously there's a lot to unpack here about philosophy, what people really want, what desires are, what preferences are, and whether we should always satisfy those preferences. Before diving more into that, I think it’d be helpful to give a sense of what AI alignment research is like today, so we can get a better sense of what might still be needed to answer these daunting questions.

There have been multiple taxonomies of AI alignment research, one of the earlier ones being Concrete Problems in AI Safety in 2016, suggesting topics like avoiding negative side effects and safe exploration. In 2018, DeepMind offered another categorization, breaking things down into specification, robustness, and assurance. And at EA Global 2020, Rohin Shah laid out another useful way of thinking about the space, breaking specification down into outer and inner alignment, and highlighting the question of scaling to superhuman competence while preserving alignment.

One notable feature of these taxonomies is their decidedly engineering bent. You might be wondering — where is the philosophy in all this? Didn’t we say there were philosophical challenges? And it’s actually there, but you have to look closely. It’s often obscured by the technical jargon. In addition, there’s this tendency to formalize philosophical and ethical questions as questions about rewards and policies and utility functions — which I think is something that can be done a little too quickly.

Another way to get a sense of what might currently be missing in AI alignment is to look at the ecosystem and its key players.

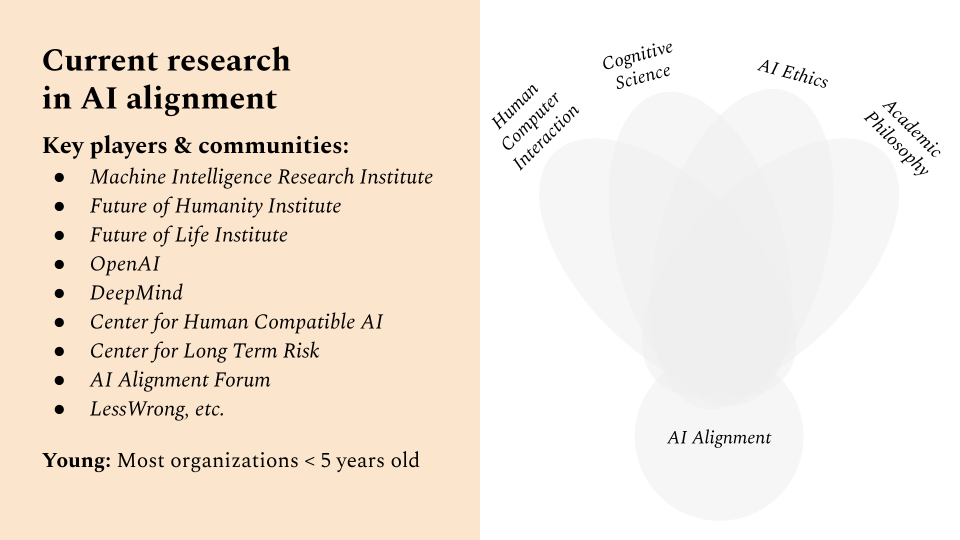

AI alignment is actually a really small and growing field, composed of entities like MIRI, FHI, OpenAI, the Alignment Forum, and so on. Most of these organizations are really young, often less than 5 years old, and I think it’s fair to say that they’ve been a little insular as well. Because if you think about AI alignment as a field, and the problems it’s trying to solve, you’d think it must be this really interdisciplinary field that sits at the intersection of broader disciplines, like human-computer interaction, cognitive science, AI ethics, and philosophy.

But to my knowledge, there actually isn’t very much overlap between these communities — it’s more off-to-the-side, like in the picture above. There are reasons for this, which I’ll get to, and it’s already starting to change, but I think it partly explains the relatively narrow philosophical horizons of the AI alignment community.

Philosophical tendencies in AI alignment

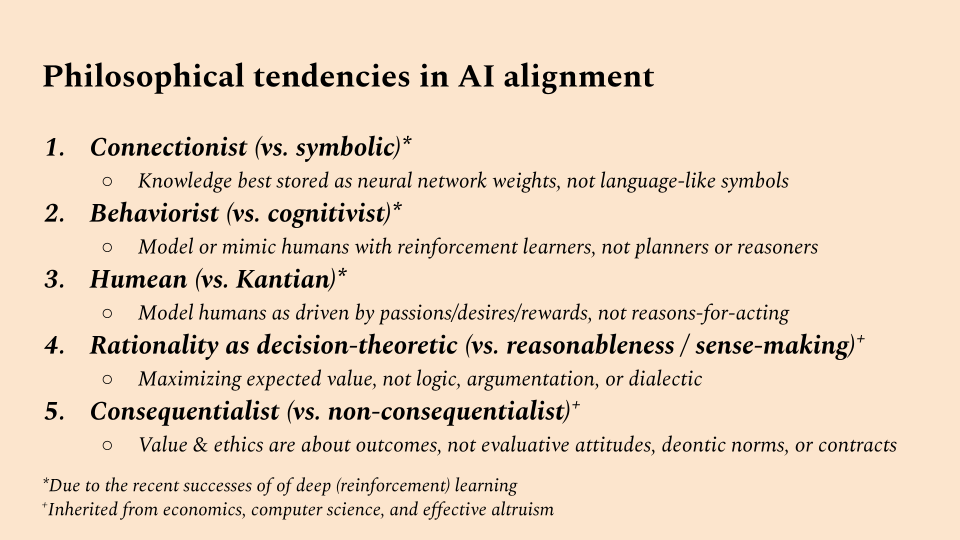

So what are these horizons? I’m going to lay out 5 philosophical tendencies that I’ve perceived in the work that comes out of the AI alignment community. This is inevitably going to be subjective, but it’s based on the work that gets highlighted in venues like the Alignment Newsletter, or that gets discussed on the AI Alignment Forum.

(1) Connectionism, (2) Behaviorism, (3) Humeanism, (4) Decision-Theoretic Rationality, (5) Consequentialism.

1. Connectionist (vs. symbolic)

First, there’s a tendency towards connectionism: the position that knowledge is best stored as sub-symbolic weights in neural networks, rather than language-like symbols. You see this in the emphasis on deep learning interpretability, scalability, and robustness.

2. Behaviorist (vs. cognitivist)

Second, there’s a tendency towards behaviorism: the view that to build human-aligned AI, we can model or mimic humans as these reinforcement learning agents, which avoid reasoning or planning by just learning from lifetimes and lifetimes of data. This is in contrast to more cognitive approaches to AI, which emphasize the ability to reason with and manipulate abstract models of the world.

3. Humean (vs. Kantian)

Third, there’s an implicit tendency towards Humean theories of motivation: the view that we can model humans as motivated by reward signals they receive from the environment, which you might think of as “desires”, or “passions” as David Hume called them. This is in contrast to more Kantian theories of motivation, which leave more room for humans to also be motivated by reasons, e.g., commitments, intentions, or moral principles.

4. Rationality as decision-theoretic (vs. reasonableness / sense-making)

Fourth, there’s a tendency to view rationality solely in decision-theoretic terms: that is, rationality is about maximizing expected value, where probabilities are rationally updated in a Bayesian manner. But historically, in philosophy, there’s been a lot more to norms of reasoning and rationality than just that: rationality is also about logic, argumentation, and dialectic. Broadly, it’s about what it makes sense for a person to think or do, including what it makes sense for a person to value in the first place.
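To make the decision-theoretic picture concrete, here is a minimal sketch (not from the talk itself): a Bayesian update over two hypotheses, followed by choosing the action with the highest expected utility. The hypotheses, likelihoods, and utilities are all made-up numbers for illustration.

```python
# Toy illustration of decision-theoretic rationality:
# (1) update beliefs with Bayes' rule, (2) choose the action that
# maximizes expected utility. All numbers are illustrative assumptions.

def bayes_update(prior, likelihood, observation):
    """Return the posterior P(h | observation) for each hypothesis h."""
    unnormalized = {h: prior[h] * likelihood[h][observation] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

def best_action(belief, utility):
    """Return the action with the highest expected utility under `belief`."""
    def expected_utility(action):
        return sum(belief[h] * utility[action][h] for h in belief)
    return max(utility, key=expected_utility)

prior = {"rain": 0.5, "dry": 0.5}
likelihood = {
    "rain": {"clouds": 0.9, "clear": 0.1},
    "dry": {"clouds": 0.3, "clear": 0.7},
}
utility = {
    "take_umbrella": {"rain": 1.0, "dry": 0.6},
    "leave_umbrella": {"rain": 0.0, "dry": 1.0},
}

posterior = bayes_update(prior, likelihood, "clouds")
choice = best_action(posterior, utility)
```

Note what the sketch takes for granted: the utilities are simply given as inputs, so nothing in it addresses what it makes sense to value in the first place.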

5. Consequentialist (vs. non-consequentialist)

Finally, there’s a tendency towards consequentialism — consequentialism in the broad sense that value and ethics are about outcomes or states of affairs. This excludes views that root value/ethics in evaluative attitudes, deontic norms, or contractualism.

From parochialism to pluralism

By laying out these tendencies, I want to suggest that the predominant views within AI alignment live within a relatively small corner of the full space of contemporary philosophical positions. If this is true, it should give us pause. Why these tendencies? Of course, it’s partly that a lot of very smart people thought very hard about these things, and this is what made sense to them. But very smart people may still be systematically biased by their intellectual environments and trajectories.

Might this be happening with AI alignment researchers? It’s worth noting that the first three of these tendencies are very much influenced by recent successes of deep learning and reinforcement learning in AI. In fact, prior to these successes, a lot of work in AI was more on the other end of the spectrum: first-order logic, classical planning, cognitive systems, etc. One worry, then, is that the attention of AI alignment researchers might be unduly influenced by the success or popularity of contemporary AI paradigms.

It's also notable that the last two of these tendencies are largely inherited from disciplines like economics, computer science, and communities like effective altruism. Another worry then, would be that these origins have unduly influenced the paradigms and concepts that we take as foundational.

So at this point, I hope to have shown how the AI alignment research community exists in a bit of a philosophical bubble. And so in that sense, if you’ll forgive the term, the community is rather parochial.

And there are understandable reasons for this. For one, AI alignment is still a young field, and hasn’t reached a more diverse pool of researchers. Until recently, it’s also been excluded and not taken very seriously within traditional academia, leading to a lack of intra-disciplinary and inter-disciplinary conversation, and a continued suspicion in some quarters about academia. Obviously, there are also strong founder effects due to the field’s emergence within rationalist and EA communities. And like much of AI and STEM, it inherits barriers to participation from an unjust world.

These can be, and in my opinion, should be addressed. As the field grows, we could make sure it includes more disciplinary and community outsiders. We could foster greater inter-disciplinary collaboration within academia. We could better recognize how founder effects may bias our search through the space of relevant ideas. And we could lower the barriers to participation, while countering unjust selection effects.

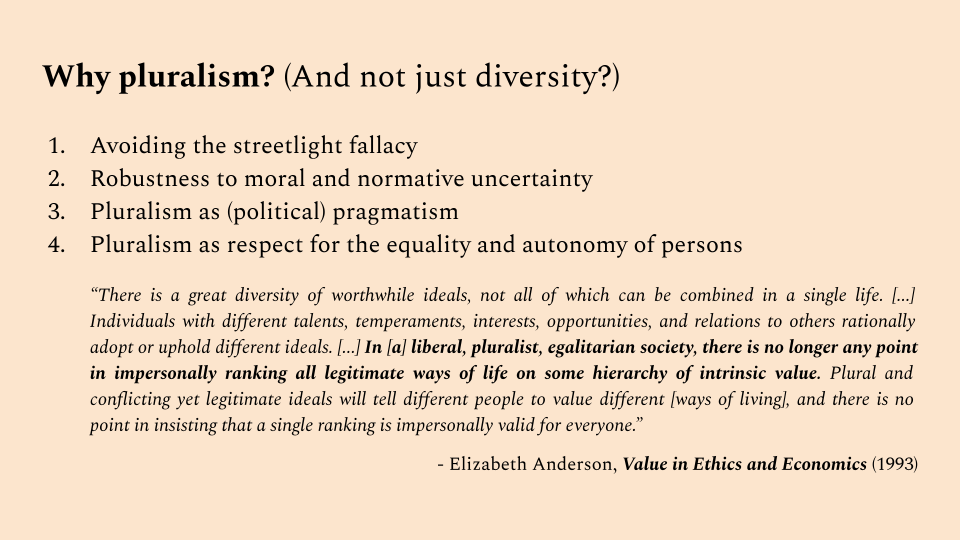

Why pluralism? (And not just diversity?)

But why bother? What exactly is the value in breaking out of this philosophical bubble? I haven’t quite explained that yet, so I’ll do that now. And why do I use the word pluralism in particular, as opposed to just diversity? I chose it because I wanted it to evoke something more than just diversity.

By philosophical pluralism, I mean to include philosophical diversity, by which I mean serious engagement with multiple philosophical traditions and disciplinary paradigms. But I also mean openness to the possibility that the problem of aligning AI might have multiple good answers, and that we need to contend with how to do that. Having defined those terms, let’s get into the reasons.

1. Avoiding the streetlight fallacy

The first is avoiding the streetlight fallacy — that if we simply keep exploring the philosophy that’s familiar to Western-educated elites, we are likely to miss out on huge swathes of human thought that may have crucial relevance to AI alignment.

Jay Garfield puts this quite sharply in his book on Engaging Buddhism. Speaking to Western philosophers about Buddhist philosophy, he argues that Buddhist philosophy shares too many concerns with Western philosophy to be ignored:

“Contemporary philosophy cannot continue to be practiced in the West in ignorance of the Buddhist tradition. ... Its concerns overlap with those of Western philosophy too broadly to dismiss it as irrelevant. Its perspectives are sufficiently distinct that we cannot see it as simply redundant. Close enough for conversation; distant enough for that conversation to be one from which we might learn. ... [T]o continue to ignore Buddhist philosophy (and by extension, Chinese philosophy, non-Buddhist Indian philosophy, African philosophy, Native American philosophy...) is indefensible.”

— Jay Garfield, Engaging Buddhism: Why It Matters to Philosophy (2015)

2. Robustness to moral and normative uncertainty

The second is robustness to moral and normative uncertainty. If you’re unsure about what the right thing to do is, or to align an AI towards, and you think it’s plausible that other philosophical perspectives might have good answers, then it’s reasonable to diversify our resources to incorporate them.

This is similar to the argument that Open Philanthropy makes for worldview diversification (and related to the informational situation of having imprecise credences, discussed briefly by MacAskill, Bykvist and Ord in Moral Uncertainty):

“When deciding between worldviews, there is a case to be made for simply taking our best guess, and sticking with it. If we did this, we would focus exclusively on animal welfare, or on global catastrophic risks, or global health and development, or on another category of giving, with no attention to the others. However, that’s not the approach we’re currently taking. Instead, we’re practicing worldview diversification: putting significant resources behind each worldview that we find highly plausible. We think it’s possible for us to be a transformative funder in each of a number of different causes, and we don’t - as of today - want to pass up that opportunity to focus exclusively on one and get rapidly diminishing returns.”

— Holden Karnofsky, Open Philanthropy CEO, Worldview Diversification (2016)

3. Pluralism as (political) pragmatism

The third is pluralism as a form of political pragmatism. As Iason Gabriel at DeepMind writes: “In the absence of moral agreement, is there a fair way to decide what principles AI should align with?” Gabriel doesn’t really put it this way, but one way to interpret this is that pluralism is pragmatic because it’s the only way we’re going to get buy-in from disparate political actors.

“[W]e need to be clear about the challenge at hand. For the task in front of us is not, as we might first think, to identify the true or correct moral theory and then implement it in machines. Rather, it is to find a way of selecting appropriate principles that is compatible with the fact that we live in a diverse world, where people hold a variety of reasonable and contrasting beliefs about value. ... To avoid a situation in which some people simply impose their values on others, we need to ask a different question: In the absence of moral agreement, is there a fair way to decide what principles AI should align with?”

— Iason Gabriel, DeepMind, Artificial Intelligence, Values, and Alignment (2020)

4. Pluralism as respect for the equality and autonomy of persons

Finally, there’s pluralism as an ethical commitment in itself — pluralism as respect for the equality and autonomy of persons to choose what values and ideals matter to them. This is the reason I personally find the most compelling — I think in order to preserve a lot of what we care about in this world, we need aligned AI to respect this plurality of value.

Elizabeth Anderson puts this quite beautifully in her book, Value in Ethics and Economics. Noting that individuals may rationally adopt or uphold a great diversity of worthwhile ideals, she argues that we lack good reason for impersonally ranking all legitimate ways of life on some universal scale. If we accept that there may be conflicting yet legitimate philosophies about what constitutes a good life, then we also have to accept that there may be multiple incommensurable scales of value that matter to people:

“There is a great diversity of worthwhile ideals, not all of which can be combined in a single life. ... Individuals with different talents, temperaments, interests, opportunities, and relations to others rationally adopt or uphold different ideals. ... In [a] liberal, pluralist, egalitarian society, there is no longer any point in impersonally ranking all legitimate ways of life on some hierarchy of intrinsic value. Plural and conflicting yet legitimate ideals will tell different people to value different [ways of living], and there is no point in insisting that a single ranking is impersonally valid for everyone.”

— Elizabeth Anderson, Value in Ethics and Economics (1995)

The relevance of non-Western philosophy

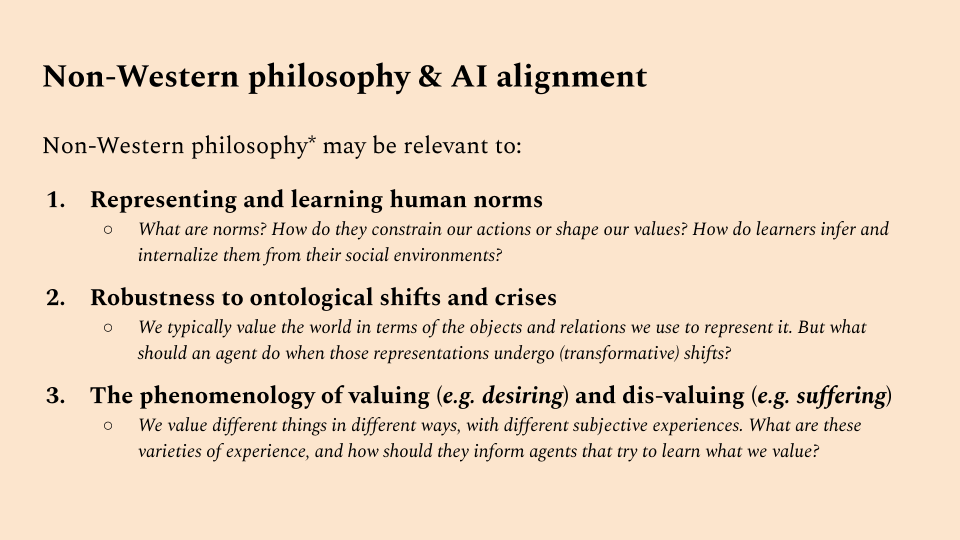

So that’s why I think pluralism matters to AI alignment. Perhaps you buy that, but perhaps it’s hard to think of concrete examples where non-dominant philosophies may be relevant to alignment research. So now I’d just like to offer a few. I think non-Western philosophy might be especially relevant to the following open problems in AI alignment:

- Representing and learning human norms. What are norms? How do they constrain our actions or shape our values? How do learners infer and internalize them from their social environments? Classical Chinese ethics, especially Confucian ethics, could provide some insights.

- Robustness to ontological shifts and crises. We typically value the world in terms of the objects and relations we use to represent it. But what should an agent do when those representations undergo (transformative) shifts? Certain schools of Buddhist metaphysics bear directly on these questions.

- The phenomenology of valuing (e.g. desiring) and disvaluing (e.g. suffering). We value different things in different ways, with different subjective experiences. What are these varieties of experience, and how should they inform agents that try to learn what we value? Buddhist, Jain and Vedic philosophy have been very much centered on the nature of these subjective experiences, and could provide answers.

Before I go on, I also wanted to note that this is primarily drawn from only the limited amount of Chinese and Buddhist philosophy I’m familiar with. This is certainly not all of non-Western philosophy, and there’s a lot more out there, outside of the streetlight, that may be relevant.

1. Representing and learning human norms

Why do social norms and practices matter? One answer that’s common from game theory is that norms have instrumental value as coordinating devices or unspoken agreements. To the extent that we need AI to coordinate well with humans then, we may need AI to learn and follow these norms.
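The game-theoretic view of norms as coordinating devices can be illustrated with a toy coordination game. The sketch below is an illustration added here, not something from the talk, and its payoffs are assumed: two drivers each pick a side of the road, and the code enumerates the pure-strategy Nash equilibria.

```python
# A two-player coordination game: both drivers pick a side of the road.
# Payoffs are illustrative assumptions: matching sides is good (1),
# mismatching is bad (0), and neither side is intrinsically better.
from itertools import product

ACTIONS = ["left", "right"]

def payoff(a, b):
    """Payoffs (to player A, to player B) when they choose sides a and b."""
    return (1, 1) if a == b else (0, 0)

def pure_nash_equilibria():
    """Return action pairs where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(ACTIONS, repeat=2):
        u_a, u_b = payoff(a, b)
        a_can_improve = any(payoff(alt, b)[0] > u_a for alt in ACTIONS)
        b_can_improve = any(payoff(a, alt)[1] > u_b for alt in ACTIONS)
        if not (a_can_improve or b_can_improve):
            equilibria.append((a, b))
    return equilibria

equilibria = pure_nash_equilibria()
```

Both "everyone drives on the left" and "everyone drives on the right" come out as stable, and the payoffs alone do not say which to adopt. On the instrumental account, a norm is whatever selects one of them.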

If you look to Confucian ethics however, you get a quite different picture. On one possible interpretation of Confucian thought, norms and practices are understood to have intrinsic value as evaluative standards and expressive acts. You can see this for example, in the Analects, which are attributed to Confucius:

克己復禮為仁。

Restraining the self and returning to ritual (禮 / li) constitutes humaneness (仁 / ren).

This word, li (禮), is hard to translate, but means something like ritual propriety or etiquette. And it recurs again and again in Confucian thought. This particular line suggests a central role for ritual in what Confucians thought of as a benevolent, humane and virtuous life. How to interpret this? Kwong-loi Shun suggests that this is because, while ritual forms may just be conventions, without these conventions, important evaluative attitudes like respect or reverence cannot be made intelligible or expressed:

Kwong-loi Shun [...] holds that on the one hand, a particular set of ritual forms are the conventions that a community has evolved, and without such forms attitudes such as respect or reverence cannot be made intelligible or expressed (the truth behind the definitionalist interpretation). In this sense, li constitutes ren within or for a given community. On the other hand, different communities may have different conventions that express respect or reverence, and moreover any given community may revise its conventions in piecemeal though not wholesale fashion (the truth behind the instrumentalist interpretation).

— David Wong, Chinese Ethics (Stanford Encyclopedia of Philosophy)

I was quite struck by this when I first encountered it — partly because I grew up finding a lot of Confucian thought really pointless and oppressive. And to be clear, some norms are oppressive. But I recently encountered a very similar idea in the work of Elizabeth Anderson (cited earlier) that made me come around more to it. In speaking about how individuals value things, and where we get these values from, Anderson argues that:

“Individuals are not self-sufficient in their capacity to value things in different ways. I am capable of valuing something in a particular way only in a social setting that upholds norms for that mode of valuation. I cannot honor someone outside of a social context in which certain actions ... are commonly understood to express honor.”

— Elizabeth Anderson, Value in Ethics and Economics (1995)

I find this really compelling. If you think about what constitutes good art, or literature, or beauty, all of that is undoubtedly tied up in norms about how to value things, and how to express those values.

If this is right, then there’s a sense in which the game theoretic account of norms has got things exactly reversed. In game theory, it’s assumed that norms emerge out of the interaction of individual preferences, and so are secondary. But for Confucians, and Anderson, it’s the opposite: norms are primary, or at least a lot of them are, and what we individually value is shaped by those norms.

This would suggest a pretty deep re-orientation of what AI alignment approaches that learn human values need to do. Rather than learn individual values, then figure out how to balance them across society, we need to consider that many values are social from the outset.

All of this dovetails quite nicely with one of the key insights in the paper Incomplete Contracting and AI Alignment:

“Building AI that can reliably learn, predict, and respond to a human community’s normative structure is a distinct research program to building AI that can learn human preferences. ... To the extent that preferences merely capture the valuation an agent places on different courses of action with normative salience to a group, preferences are the outcome of the process of evaluating likely community responses and choosing actions on that basis, not a primitive of choice.”

— Hadfield-Menell & Hadfield, Incomplete Contracting & AI Alignment (2018)

Here again, we see reiterated the idea that social norms constitute (at least some) individual preferences. What all of this suggests is that, if we want to accurately model human preferences, we may need to model the causal and social processes by which individuals learn and internalize norms: observation, instruction, ritual practice, punishment, etc.

Furthermore, when it comes to human values, then at least in some domains (e.g. what is beautiful, racist, admirable, or just), we ought to identify what's valuable not with the revealed preference or even the reflective judgement of a single individual, but with the outcome of some evaluative social process that takes into account pre-existing standards of valuation, particular features of the entity under evaluation, and potentially competing reasons for applying, not applying, or revising those standards.

As it happens, this anti-individualist approach to valuation isn't particularly prominent in Western philosophical thought (but again, see Anderson). Perhaps then, by looking towards philosophical traditions like Confucianism, we can develop a better sense of how these normative social processes should be modeled.

2. Robustness to ontological shifts and crises

Let's turn now to a somewhat old problem, first posed by MIRI in 2011: An agent defines its objective based on how it represents the world — but what should happen when that representation is changed?

“An agent’s goal, or utility function, may also be specified in terms of the states of, or entities within, its ontology. If the agent may upgrade or replace its ontology, it faces a crisis: the agent’s original goal may not be well-defined with respect to its new ontology. This crisis must be resolved before the agent can make plans towards achieving its goals.”

— Peter De Blanc, MIRI, Ontological Crises in AI Value Systems (2011)
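A toy sketch can show what "not well-defined" means here. Everything in it is a hypothetical assumption for illustration, including the state names and the bridge between ontologies, and it is not the method of the paper quoted above: a utility function keyed on the states of an old ontology has nothing to say about states that appear only in a new one.

```python
# Hypothetical toy: a utility function keyed on the states of an old ontology.
old_utility = {"diamond": 1.0, "coal": 0.0}

# After an upgrade, the agent's new ontology carves the world differently.
new_states = ["tetrahedral_carbon", "amorphous_carbon", "carbon_nanotube"]

# A partial bridge from new states back to old ones. This is an assumption
# someone must supply; nothing in the old goal determines it.
bridge = {"tetrahedral_carbon": "diamond", "amorphous_carbon": "coal"}

def translated_utility(state):
    """Utility of a new-ontology state, or None where the goal is undefined."""
    old_state = bridge.get(state)
    return old_utility.get(old_state) if old_state is not None else None

# States the original goal simply does not cover.
undefined = [s for s in new_states if translated_utility(s) is None]
```

The states left in `undefined` are where the crisis bites: the agent needs some further principle for extending or revising its goal.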

As it turns out, Buddhist philosophy might provide some answers. To see how, it’s worth comparing it against more commonplace views about the nature of reality and the objects within it. Most of us grow up as what you might call naive realists, believing:

Naive Realism. Through our senses, we perceive the world and its objects directly.

But then we grow up and study some science, and encounter optical illusions, and maybe become representational realists instead:

Representational Realism. We indirectly construct representations of the external world from sense data, but the world being represented is real.

Now, Madhyamaka Buddhism goes further — it rejects the idea that there is anything ultimately real or true. Instead, all facts are at best conventionally true. And while there may exist some mind-independent external world, there is no uniquely privileged representation of that world that is the “correct” one. However some representations are still better for alleviating suffering than others, and so part of the goal of Buddhist practice is to see through our everyday representations as merely conventional, and to adopt representations better suited for alleviating suffering.

This view is demonstrated in The Vimalakīrti Sutra, which actually uses gender as an example of a concept that should be seen through as conventional. I was quite astounded when I first read it, because the topic feels so current, but the text is actually 1800 years old:

The reverend monk, Śāriputra, asks a Goddess why she does not transform her female body into a male body, since she is supposed to be enlightened. In response, she swaps both their bodies, and explains:

Śāriputra, if you were able to transform

This female body,

Then all women would be able to transform as well.

Just as Śāriputra is not female

But manifests a female body

So are all women likewise:

Although they manifest female bodies

They are not, inherently, female.

Therefore, the Buddha has explained

That all phenomena are neither female nor male.

— The Vimalakīrti Sutra (circa 200 CE)

All this actually closely resonates, in my opinion, with a recent movement in Western analytic philosophy called conceptual engineering: the idea that we should re-engineer concepts to suit our purposes. For example, Sally Haslanger at MIT has applied this approach in her writings on gender and race, arguing that feminists and anti-racists need to revise these concepts to better suit feminist and anti-racist ends.

I think this methodology is actually a really promising way to deal with the question of ontological shifts. Rather than framing ontological shifts as quasi-exogenous occurrences that agents have to respond to, it frames them as meta-cognitive choices that we select with particular ends in mind. It almost suggests this iterative algorithm for changing our representations of the world:

- Fix some evaluative concepts (e.g., accuracy, well-being) and lower-level primitives.

- Refine other concepts to do better with respect to those evaluative concepts.

- Adjust those evaluative concepts and lower-level primitives in response.

- Repeat as necessary.
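The loop above can be sketched very roughly in code. This is only one possible reading, under strong simplifying assumptions: the `refine` and `adjust` callbacks, and the toy trade-off between number of categories and tolerated error, are hypothetical stand-ins, not a proposal from the talk.

```python
from fractions import Fraction

def iterate_representation(concepts, criteria, refine, adjust, max_rounds=20):
    """Alternate between refining concepts and adjusting evaluative criteria.

    Stops at a fixed point (nothing changes) or after max_rounds.
    """
    for _ in range(max_rounds):
        # Steps 1-2: hold the criteria fixed and refine the concepts.
        new_concepts = refine(concepts, criteria)
        # Step 3: revisit the criteria in light of the refined concepts.
        new_criteria = adjust(criteria, new_concepts)
        if (new_concepts, new_criteria) == (concepts, criteria):
            break
        concepts, criteria = new_concepts, new_criteria  # Step 4: repeat.
    return concepts, criteria

# Toy instance (all assumptions): a "concept" is how many categories we use
# to carve up some domain, and the evaluative criterion is a tolerated error.
MAX_CATEGORIES = 10
CATEGORY_BUDGET = 4  # more categories than this is deemed too costly

def error(num_categories):
    """Assumed error of a carving: finer carvings are more accurate."""
    return Fraction(1, num_categories)

def refine(num_categories, tolerated_error):
    """Pick the coarsest carving that meets the tolerated error, if any."""
    for n in range(1, MAX_CATEGORIES + 1):
        if error(n) <= tolerated_error:
            return n
    return MAX_CATEGORIES

def adjust(tolerated_error, num_categories):
    """Relax the accuracy standard when meeting it costs too many categories."""
    if num_categories > CATEGORY_BUDGET:
        return tolerated_error * 2
    return tolerated_error

final_concepts, final_criteria = iterate_representation(
    concepts=1, criteria=Fraction(1, 10), refine=refine, adjust=adjust
)
```

In this toy run the standard of accuracy and the carving of the domain settle into a fixed point together. Whether realistic versions of such a loop converge, and to anything reasonable, is exactly the open question.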

How exactly this would work, and whether it would lead to reasonable outcomes, is, I think, really fruitful and open research terrain. I see MIRI's recent work on Cartesian Frames as a very promising step in this direction, by formalizing the ways in which we might carve up the world into "self" and "other". When it comes to epistemic values, steps have also been made towards formalizing approximate causal abstractions. And of course, the importance of representational choice for efficient planning has been known since the 60s. What remains lacking is a theory of when and how to apply these representational shifts according to an initial set of desiderata, and then how to reconceive those desiderata in response.

3. The phenomenology of valuing and dis-valuing

On to the final topic of relevance. In AI and economics, it’s very common to just talk about human values in terms of this barebones concept of preference. Preference is an example of what you might call a thin evaluative attitude, which doesn’t have any deeper meaning beyond imposing a certain ordering over actions or outcomes.

In contrast, I think all of us are familiar with a much wider range of evaluative attitudes and experiences: respect, admiration, love, shock, boredom, and so on. These are thick evaluative attitudes. And work in AI alignment hasn’t really tried to account for them. Instead, there’s a tendency to collapse everything into this monolithic concept of “reward”.

And I think that’s very dangerous — we’re not paying attention to the full range of subjective experience, and that may lead to catastrophic outcomes. Instead, I think we need to be engaging more with psychology, phenomenology, and neuroscience. For example, there’s work in the field of neurophenomenology that I think might be really promising for answering some of these questions:

“The use of first-person and second-person phenomenological methods to obtain original and refined first-person data is central to neurophenomenology. It seems true both that people vary in their abilities as observers and reporters of their own experiences and that these abilities can be enhanced through various methods. First-person methods are disciplined practices that subjects can use to increase their sensitivity to their own experiences at various time-scales. These practices involve the systematic training of attention and self-regulation of emotion. Such practices exist in phenomenology, psychotherapy, and contemplative meditative traditions. Using these methods, subjects may be able to gain access to aspects of their experience, such as transient affective state and quality of attention, that otherwise would remain unnoticed and unavailable for verbal report.”

— Thompson et al, Neurophenomenology: An Introduction for Neurophilosophers (2010)

Unsurprisingly, this work is very much informed by engagement with Buddhist, Jain, and Vedic philosophy and practice, because they are entire philosophical practices devoted to questions like “What is the nature of desire?”, “What is the nature of suffering?” and “What are the various mental factors that lead to one or the other?”

Does AI alignment require understanding human subjective experience at the incredibly fine level of detail aimed at by neurophenomenology and contemplative traditions? My intuition is that it won't, simply because we humans are capable of being helpful and beneficial without fully understanding each other's minds. But we do understand at least that we all have different subjective experiences, which we may value or take as motivating in different ways.

This level of intuitive psychology, I believe, is likely to be necessary for alignment. And AI as a field is nowhere near it. Research into “emotion recognition”, which is perhaps the closest that AI has gotten to these questions, typically reifies emotion into 6 fixed categories, which is not much better than collapsing everything into “reward”. Given that contemplative Dharmic philosophy has long developed systematic methods for investigating the experiential nature of mind, as well as theories about how higher-order awareness relates to experience, it bears promise for informing how AI could learn theories of emotion and evaluative experience, rather than simply having them hard-coded.

Just as a final illustration of why the study of evaluative experience is important, I want to highlight a question that often comes up in Buddhist philosophy: How can one act effectively in the world without experiencing desire or suffering? Unless you’re interested in attaining awakening, it may not be so relevant to humans, nor to AI alignment per se. But it becomes very relevant once we consider the possibility that we might build AI that suffers itself. In fact, there’s a recent paper on exactly this topic asking: How can we build functionally effective conscious AI without suffering?

“The possibility of machines suffering at our own hands ... only applies if the AI that we create or cause to emerge becomes conscious and thereby capable of suffering. In this paper, we examine the nature of the relevant kind of conscious experience, the potential functional reasons for endowing an AI with the capacity for feeling and therefore for suffering, and some of the possible ways of retaining the functional advantages of consciousness, whatever they are, while avoiding the attendant suffering.”

— Agarwal & Edelman, Functionally Effective Conscious AI Without Suffering (2020)

The worry here is that consciousness may have evolved in animals because it serves some function, and so, AI might only reach human-level usefulness if it is conscious. And if it is conscious, it could suffer. Most of us who care about sentient beings besides humans would want to make sure that AI doesn’t suffer — we don’t want to create a race of artificial slaves. So that’s why it might be really important to figure out whether agents can have functional consciousness without suffering.

To address this question, Agarwal & Edelman draw explicitly upon Buddhist philosophy, suggesting that suffering arises from identification with a phenomenal model of the self, and that by transcending that identification, suffering no longer occurs:

The final approach ... targets the phenomenology of identification with the phenomenal self model (PSM) as an antidote to suffering. ... Metzinger [2018] describes the unit of identification (UI) as that which the system consciously identifies itself with. Ordinarily, when the PSM is transparent, the system identifies with its PSM, and is thus conscious of itself as a self. But it is at least a logical possibility that the UI may not be limited to the PSM, but be shifted to the most “general phenomenal property” [Metzinger, 2017] of knowing common to all phenomenality including the sense of self. In this special condition, the typical subject-object duality of experience would dissolve; negatively valenced experiences could still occur, but they would not amount to suffering because the system would no longer be experientially subject to them.

— Agarwal & Edelman, Functionally Effective Conscious AI Without Suffering (2020)

No doubt, this is an imprecise — and likely contentious — definition of “suffering”, one which affords a very particular solution due to the way it is defined. But at the very least, the paper makes a valiant attempt towards formalizing, computationally, what suffering even might be. If we want to avoid creating machines that suffer, more research like this needs to be conducted, and we might do well to pay attention to Buddhist and related philosophies in the process.

Conclusion

With that, I’ll end my whirlwind tour of non-Western philosophy, and offer some key takeaways and steps forward.

What I hope to have shown with this talk is that AI alignment research has drawn from a relatively narrow set of philosophical perspectives. Expanding this set, for example, with non-Western philosophy, could provide fresh insights, and reduce the risk of misalignment.

In order to address this, I’d like to suggest that prospective researchers and funders in AI alignment should consider a wider range of disciplines and approaches. In addition, while support for alignment research has grown in CS departments, we may need to increase support in other fields, in order to foster the inter-disciplinary expertise needed for this daunting challenge.

If you enjoyed this talk, and would like to learn more about AI alignment, pluralism, or non-Western philosophy, here are some reading recommendations. Thank you for your attention, and I look forward to your questions.

21 comments


comment by Rohin Shah (rohinmshah) · 2021-01-01T03:53:39.852Z · LW(p) · GW(p)

Upvoted, though I do disagree with the (framework behind the) post.

Here's a caricature of what I think is your view of AI alignment:

- We will build powerful / superintelligent AI systems at some point in the future.

- These AI systems will be optimizing for something. Since they are superintelligent, they will optimize very well for that thing, probably to the exclusion of all else.

- Thus, it is extremely important that we make this "something" that they are optimizing for the right thing. In particular, we need to define some notion of "the good" that the AI system should optimize for.

Under this setting, alignment researchers should really be very careful about getting the right notion of "the good", and should be appropriately modest and conservative around it, which in turn implies that we need pluralism.

I basically agree that under this view of the problem, the field as a whole is quite parochial and would benefit from plurality.

----

In contrast, I would think of AI alignment this way:

- There will be some tasks, like "suggest good policies to handle the problem of rising student debt", or "invest this pile of money to get high returns without too much risk", that we will automate via AI.

- As our AI systems become more and more competent, we will use them to automate tasks of larger and larger scope and impact. Eventually, we will have "superintelligent" systems that take on tasks of huge scope that individual humans could not do ("run this company in a fully automated way").

- For systems like this, we do not have a good story suggesting that these systems will "follow common sense", or stay bounded in scope. There is some chance that they behave in a goal-directed way; if so they may choose plans that optimize against humans, potentially resulting in human extinction.

On my view, AI alignment is primarily about defusing this second argument. Importantly, in order to defuse it, we do not need to define "the good" -- we need to provide a general method for creating AI systems that pursue some specific task, interpreted the way we meant it to be interpreted. Once we don't have to define "the good", many of the philosophical challenges relating to values and ethics go away.

The choice of how these AI systems are used happens the same way that such things happen so far: through a combination of market forces, government regulation, public pressure, etc. Humanity as a whole may want to have a more deliberate approach; this is the goal of AI governance work, for example. Note that technical work can be done towards this goal as well -- the ARCHES agenda has lots of examples. But I wouldn't call this part of AI alignment.

(I do think ontological shifts continue to be relevant to my description of the problem, but I've never been convinced that we should be particularly worried about ontological shifts, except inasmuch as they are one type of possible inner alignment / robustness failure.)

----

I should note that the view I'm espousing here may not be the majority view -- I think it's more likely that your view is more common amongst AI alignment researchers.

----

Some specific comments:

it’s based on the work that gets highlighted in venues like the Alignment Newsletter, or that gets discussed on the AI Alignment forum.

If you literally mean things in the highlights section of the newsletter, that's "things that Rohin thinks AI alignment researchers should read", which is heavily influenced by my evaluation of what's important / relevant, as well as what I do / don't understand. This still seems like a fair way to evaluate what the alignment community thinks about, but I think it is going to overestimate how parochial the community is. For example, if you go by "what does Stuart Russell think is important", I expect you get a very different view on the field, much of which won't be in the Alignment Newsletter.

5. Consequentialist (vs. non-consequentialist)

Finally, there’s a tendency towards consequentialism — consequentialism in the broad sense that value and ethics are about outcomes or states of affairs. This excludes views that root value/ethics in evaluative attitudes, deontic norms, or contractualism.

I agree that alignment researchers tend to have this stance, but I don't think their work does? Reward functions are typically allowed to depend on actions, and the alignment community is particularly likely to use reward functions on entire trajectories, which can express arbitrary views [? · GW] (though I agree that many views are not "naturally" expressed in this framework).
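To make the expressivity point concrete, here is a minimal sketch of the difference between a reward that only looks at the final outcome and a reward defined over the whole trajectory. Everything in it (the state and action names, the penalty of 10, the two example trajectories) is a hypothetical illustration rather than anything taken from the alignment literature.

```python
from typing import List, Tuple

# A step is a (state, action) pair. All names below are hypothetical.
Step = Tuple[str, str]


def outcome_reward(trajectory: List[Step]) -> float:
    """Outcome-only reward: depends solely on the final state reached."""
    final_state, _ = trajectory[-1]
    return 1.0 if final_state == "deal_closed" else 0.0


def trajectory_reward(trajectory: List[Step]) -> float:
    """Trajectory-level reward: same outcome term, plus a penalty for each
    act of lying, regardless of where the trajectory ends up."""
    lies = sum(1 for _, action in trajectory if action == "lie")
    return outcome_reward(trajectory) - 10.0 * lies


# Two trajectories that reach the same final state by different means.
honest = [("negotiating", "disclose"), ("deal_closed", "stop")]
deceptive = [("negotiating", "lie"), ("deal_closed", "stop")]
```

The outcome-only reward cannot tell the two trajectories apart, while the trajectory-level reward ranks the honest one higher. This shows only that an act-based constraint can be written down in the reward framework, not that it is a natural fit for it.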

It’s worth noting that the first three of these tendencies are very much influenced by recent successes of deep reinforcement learning in AI. In fact, prior to these successes, a lot of work in AI was more on the other end of the spectrum: first order logic, classical planning, cognitive systems, etc. One worry then, is that the attention of AI alignment researchers might be unduly influenced by the success or popularity of contemporary AI paradigms.

(I'd cite deep learning generally, not just deep RL.)

If you start with an uninformative prior and no other evidence, it seems like you should be focusing a lot of attention on the paradigm that is most successful / popular. So why is this influence "undue"?

Replies from: xuan, xuan, Stuart_Armstrong

↑ comment by xuan · 2021-01-01T23:28:16.695Z · LW(p) · GW(p)

Thanks for these thoughts! I'll respond to your disagreement with the framework here, and to the specific comments in a separate reply.

First, with respect to my view about the sources of AI risk, the characterization you've put forth isn't quite accurate (though it's a fair guess, since I wasn't very explicit about it). In particular:

- These days I'm actually more worried by structural risks and multi-multi alignment risks, which may be better addressed by AI governance than technical research per se. If we do reach super-intelligence, I think it's more likely to be along the lines of CAIS [AF · GW] than the kind of agential super-intelligence pictured by Bostrom. That said, I still think that technical AI alignment is important to get right, even in a CAIS-style future, hence this talk -- I see it as necessary, but not sufficient.

- I don't think that powerful AI systems will necessarily be optimizing for anything (at least not in the agential sense suggested by Superintelligence). In fact, I think we should actively avoid building globally optimizing agents if possible --- I think optimization is the wrong framework for thinking about "rationality" or "human values", especially in multi-agent contexts. I think it's still non-trivially likely that we'll end up building AGI that's optimizing in some way, just because that's the understanding of "rationality" or "solving a task" that's so predominant within AI research. But in my view, that's precisely the problem, and my argument for philosophical pluralism is in part because it offers theories of rationality, value, and normativity that aren't about "maximizing the good".

- Regarding "the good", the primary worry I was trying to raise in this talk has less to do with "ethical error", which can arise due to e.g. Goodhart's curse, and more to do with meta-ethical and meta-normative error [AF · GW], i.e., that the formal concepts and frameworks that the AI alignment community has typically used to understand fuzzy terms like "value", "rationality" and "normativity" might be off-the-mark.

For me, this sort of error is importantly different from the kind of error considered by inner and outer alignment. It's often implicit in the mathematical foundations of decision theory and ML theory itself, and tends to go un-noticed. For example, once we define rationality as "maximize expected future reward", or assume that human behavior reflects reward-rational implicit choice, we're already making substantive commitments about the nature of "value" and "rationality" that preclude other plausible characterizations of these concepts, some of which I've highlighted in the talk. Of course, there has been plenty of discussion about whether these formalisms are in fact the right ones -- and I think MIRI-style research has been especially valuable for clarifying our concepts of "agency" and "epistemic rationality" -- but I've yet to see some of these alternative conceptions of "value" and "practical rationality" discussed heavily in AI alignment spaces.
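For readers who want the two formalisms written out, here is one common way of stating them. The notation is an assumption chosen for illustration: a policy π, discount factor γ, reward function r, a set of choices C, a rationality coefficient β, and a "grounding" ψ that maps each choice to a distribution over trajectories ξ. This is a sketch of the usual textbook forms, not a definitive statement of any one paper's setup.

```latex
% "Maximize expected future reward": the standard discounted-return objective
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\!\left[ \sum_{t=0}^{\infty} \gamma^{t} \, r(s_t, a_t) \right]

% Reward-rational (Boltzmann) choice: the human picks c from C with probability
% increasing exponentially in the expected reward of the trajectories it leads to
P(c \mid r) = \frac{\exp\!\big( \beta \, \mathbb{E}_{\xi \sim \psi(c)}[\, r(\xi) \,] \big)}
                   {\sum_{c' \in \mathcal{C}} \exp\!\big( \beta \, \mathbb{E}_{\xi \sim \psi(c')}[\, r(\xi) \,] \big)}
```

In both expressions, whatever the human "values" enters only through the scalar function r, which is the substantive commitment being pointed at above.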

Second, with respect to your characterization of AI development and AI risk, I believe that points 1 and 2 above suggest that our views don't actually diverge that much. My worry is that the difficulty of building machines that "follow common sense" is on the same order of magnitude as "defining the good", and just as beset by the meta-ethical and meta-normative worries I've raised above. After all, "common sense" is going to include "common social sense" and "common moral sense", and this kind of knowledge is irreducibly normative. (In fact, I think there's good reason to think that all knowledge and inquiry is irreducibly normative, but that's a stronger and more contentious claim.)

Furthermore, given that AI is already deployed in social domains which tend to have open scope (personal assistants, collaborative and caretaking robots, legal AI, etc.), I think it's a non-trivial possibility that we'll end up having powerful misaligned AI systems applied to those contexts that either violate their intended scope, or require wide scope to function well (e.g., personal assistants). No doubt, "follow common sense" is a lower bar than "solve moral philosophy", but on the view that philosophy is just common sense applied to itself, solving "common sense" is already most of the problem. For that reason, I think it deserves a plurality of disciplinary* and philosophical perspectives as well.

(*On this note, I think cognitive science has a lot to offer with regard to understanding "common sense". Perhaps I am overly partial given that I am in a computational cognitive science lab, but it does feel like there's insufficient awareness or discussion of cognitive scientific research within AI alignment spaces, despite its [IMO clearcut] relevance.)

↑ comment by Rohin Shah (rohinmshah) · 2021-01-02T00:58:31.800Z · LW(p) · GW(p)

I agree with you on 1 and 2 (and am perhaps more optimistic about not building globally optimizing agents; I actually see that as the "default" outcome).

My worry is that the difficulty of building machines that "follow common sense" is on the same order of magnitude as "defining the good", and just as beset by the meta-ethical and meta-normative worries I've raised above.

I think this is where I disagree. I'd offer two main reasons not to believe this:

- Children learn to follow common sense, despite not having (explicit) meta-ethical and meta-normative beliefs at all. (Though you could argue that the relevant meta-ethical and meta-normative concepts are inherent in / embedded in / compiled into the human brain's "priors" and learning algorithm.)

- Intuitively, it seems like sufficiently good imitations of humans would have to have (perhaps implicit) knowledge of "common sense". We can see this to some extent, where GPT-3 demonstrates implicit knowledge of at least some aspects of common sense (though I do not claim that it acts in accordance with common sense).

(As a sanity check, we can see that neither of these arguments would apply to the "learning human values" case.)

After all, "common sense" is going to include "common social sense" and "common moral sense", and this kind of knowledge is irreducibly normative. (In fact, I think there's good reason to think that all knowledge and inquiry is irreducibly normative, but that's a stronger and more contentious claim.)

I'm going to assume that Quality Y is "normative" if determining whether an object X has quality Y depends on who is evaluating Y. Put another way, an independent race of aliens that had never encountered humans would probably not converge to the same judgments as we do about quality Y.

This feels similar to the is-ought distinction: you cannot determine "ought" facts from "is" facts, because "ought" facts are normative, whereas "is" facts are not (though perhaps you disagree with the latter).

I think "common sense is normative" is sufficient to argue that a race of aliens could not build an AI system that had our common sense, without either the aliens or the AI system figuring out the right meta-normative concepts for humanity (which they presumably could not do without encountering humans first).

I don't see why it implies that we cannot build an AI system that has our common sense. Even if our common sense is normative, its effects are widespread; it should be possible in theory to back out the concept from its effects, and I don't see a reason it would be impossible in practice (and in fact human children feel like a great example that it is possible in practice).

I suspect that on a symbolic account of knowledge, it becomes more important to have the right meta-normative principles (though I still wonder what one would say to the example of children). I also think cog sci would be an obvious line of attack on a symbolic account of knowledge; it feels less clear how relevant it is on a connectionist account. (Though I haven't read the research in this space; it's possible I'm just missing something basic.)

Replies from: Charlie Steiner

↑ comment by Charlie Steiner · 2021-01-21T22:03:19.196Z · LW(p) · GW(p)

Children learn to follow common sense, despite not having (explicit) meta-ethical and meta-normative beliefs at all.

Children also learn right from wrong - I'd be interested in where you draw the line between "An AI that learns common sense" and "An AI that learns right from wrong." (You say this argument doesn't apply in the case of human values, but it seems like you mean only explicit human values, not implicit ones.)

My suspicion, which is interesting to me so I'll explain it even if you're going to tell me that I'm off base, is that you're thinking that part of common sense is to avoid uncertain or extreme situations (e.g. reshaping the galaxy with nanotechnology), and that common sense is generally safe and trustworthy for an AI to follow, in a way that doesn't carry over to "knowing right from wrong." An AI that has learned right from wrong to the same extent that humans learn it might make dangerous moral mistakes.

But when I think about it like that, it actually makes me less trusting of learned common sense. After all, one of the most universally acknowledged things about common sense is that it's uncommon among humans! Merely doing common sense as well as humans seems like a recipe for making a horrible mistake because it seemed like the right thing at the time - this opens the door to the same old alignment problems (like self-reflection and meta-preferences [or should that be meta-common-sense]).

P.S. I'm not sure I quite agree with this particular setting of normativity. The reason is the possibility of "subjective objectivity", where you can make what you mean by "Quality Y" arbitrarily precise and formal if given long enough to split hairs. Thus equipped, you can turn "Does this have quality Y?" into an objective question by checking against the (sufficiently) formal, precise definition.

The point is that the aliens are going to be able to evaluate this formal definition just as well as you. They just don't care about it. Even if you both call something "Quality Y," that doesn't avail you much if you're using that word to mean very different things. (Obligatory old Eliezer post [LW · GW])

Anyhow, I'd bet that xuan is not saying that it is impossible to build an AI with common sense - they're saying that building an AI with common sense is in the same epistemological category as building an AI that knows right from wrong.

Replies from: rohinmshah

↑ comment by Rohin Shah (rohinmshah) · 2021-01-21T23:43:34.266Z · LW(p) · GW(p)

Children also learn right from wrong - I'd be interested in where you draw the line between "An AI that learns common sense" and "An AI that learns right from wrong."

I'm happy to assume that AI will learn right from wrong to about the level that children do. This is not a sufficiently good definition of "the good" that we can then optimize it.

My suspicion, which is interesting to me so I'll explain it even if you're going to tell me that I'm off base, is that you're thinking that part of common sense is to avoid uncertain or extreme situations (e.g. reshaping the galaxy with nanotechnology), and that common sense is generally safe and trustworthy for an AI to follow, in a way that doesn't carry over to "knowing right from wrong." An AI that has learned right from wrong to the same extent that humans learn it might make dangerous moral mistakes.

That sounds basically right, with the caveat that you want to be a bit more specific and precise with what the AI system should do than just saying "common sense"; I'm using the phrase as a placeholder for something more precise that we need to figure out.

Also, I'd change the last sentence to "an AI that has learned right from wrong to the same extent that humans learn it, and then optimizes for right things as hard as possible, will probably make dangerous moral mistakes". The point is that when you're trying to define "the good" and then optimize it, you need to be very very correct in your definition, whereas when you're trying not to optimize too hard in the first place (which is part of what I mean by "common sense") then that's no longer the case.

After all, one of the most universally acknowledged things about common sense is that it's uncommon among humans!

At this point I don't think we're talking about the same "common sense".

Merely doing common sense as well as humans seems like a recipe for making a horrible mistake because it seemed like the right thing at the time - this opens the door to the same old alignment problems (like self-reflection and meta-preferences [or should that be meta-common-sense]).

But why?

they're saying that building an AI with common sense is in the same epistemological category as building an AI that knows right from wrong.

Again it depends on how accurate the "right/wrong classifier" needs to be, and how accurate the "common sense" needs to be. My main claim is that the path to safety that goes via "common sense" is much more tolerant of inaccuracies than the path that goes through optimizing the output of the right/wrong classifier.
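A toy sketch of the asymmetry in error tolerance, under loudly stated assumptions: the options, the "proxy" scores (standing in for a learned right/wrong classifier), and the "true" values are all invented numbers, and the options are assumed to be listed from least to most drastic. It contrasts a hard maximizer with a simple threshold satisficer, which is only one crude stand-in for "not optimizing too hard".

```python
from typing import Dict, List

# Hypothetical options, ordered from least to most drastic.
# "proxy" is the learned score; "true" is the value we actually care about.
OPTIONS: List[Dict] = [
    {"name": "do nothing", "proxy": 0.0, "true": 0.0},
    {"name": "build a normal spaceship", "proxy": 0.8, "true": 0.8},
    {"name": "build a slightly better spaceship", "proxy": 0.9, "true": 0.85},
    # The proxy is badly wrong on this one extreme option.
    {"name": "turn the galaxy into spaceships", "proxy": 5.0, "true": -100.0},
]


def maximize(options: List[Dict]) -> Dict:
    """Hard optimization: pick whatever the proxy scores highest."""
    return max(options, key=lambda o: o["proxy"])


def satisfice(options: List[Dict], threshold: float) -> Dict:
    """Mild optimization: take the first (least drastic) option whose proxy
    score clears the threshold; fall back to the first option otherwise."""
    for option in options:
        if option["proxy"] >= threshold:
            return option
    return options[0]


hard_choice = maximize(OPTIONS)
mild_choice = satisfice(OPTIONS, threshold=0.5)
```

In this made-up setting the maximizer lands on exactly the option where the proxy is most wrong, while the satisficer never gets there. The sketch does not show that such a satisficer is easy to build, only why a single large classifier error matters much more under hard optimization.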

Replies from: Charlie Steiner

↑ comment by Charlie Steiner · 2021-01-22T03:16:13.620Z · LW(p) · GW(p)

My first idea is, you take your common sense AI, and rather than saying "build me a spaceship, but, like, use common sense," you can tell it "do the right thing, but, like, use common sense." (Obviously with "saying" and "tell" in invisible finger quotes.) Bam, Type-1 FAI.

Of course, whether this will go wrong or not depends on the specifics. I'm reminded of Adam Shimi et al's recent post [LW · GW] that mentioned "Ideal Accomplishment" (how close to an explicit goal a system eventually gets) and "Efficiency" (how fast it gets there). If you have a general purpose "common sensical optimizer" that optimizes any goal but, like, does it in a common sense way, then before you turn it on you'd better know whether it's affecting ideal accomplishment, or just efficiency.

That is to say, if I tell it to make me the best spaceship it can or something similarly stupid, will the AI "know that the goal is stupid" and only make a normal spaceship before stopping? Or will it eventually turn the galaxy into spaceship, just taking common-sense actions along the way? The truly idiot-proof common sensical optimizer changes its final destination so that it does what we "obviously" meant, not what we actually said. The flaws in this process seem to determine if it's trustworthy enough to tell to "do the right thing," or trustworthy enough to tell to do anything at all.

↑ comment by xuan · 2021-01-02T23:21:55.582Z · LW(p) · GW(p)

Replying to the specific comments:

This still seems like a fair way to evaluate what the alignment community thinks about, but I think it is going to overestimate how parochial the community is. For example, if you go by "what does Stuart Russell think is important", I expect you get a very different view on the field, much of which won't be in the Alignment Newsletter.

I agree. I intended to gesture a little bit at this when I mentioned that "Until more recently, It’s also been excluded and not taken very seriously within traditional academia", because I think one source of greater diversity has been the uptake of AI alignment in traditional academia, leading to slightly more inter-disciplinary work, as well as a greater diversity of AI approaches. I happen to think that CHAI's research publications page reflects more of the diversity of approaches I would like to see, and wish that more new researchers were aware of them (as opposed to the advice currently given by, e.g., 80K, which is to skill up in deep learning and deep RL).

Reward functions are typically allowed to depend on actions, and the alignment community is particularly likely to use reward functions on entire trajectories, which can express arbitrary views [? · GW] (though I agree that many views are not "naturally" expressed in this framework).

Yup, I think purely at the level of expressivity, reward functions on a sufficiently extended state space can express basically anything you want. That still doesn't resolve several worries I have though:

- Talking about all human motivation using "rewards" tends to promote certain (behaviorist / Humean) patterns of thought over others. In particular I think it tends to obscure the logical and hierarchical structure of many aspects of human motivation -- e.g., that many of our goals are actually instrumental sub-goals in higher-level plans [AF · GW], and that we can cite reasons for believing, wanting, or planning to do a certain thing. I would prefer if people used terms like "reasons for action" and "motivational states", rather than simply "reward functions".

- Even if reward functions can express everything you want them to, that doesn't mean they'll be able to learn everything you want them to, or generalize in the appropriate ways. For example, I think deep RL agents are unlikely to learn the concept of "promises" in a way that generalizes robustly, unless you give them some kind of inductive bias that leads them to favor structures like LTL formulas. (This is a related worry to Stuart Armstrong's no-free-lunch theorem.) At some point I intend to write a longer post about this worry.

Of course, you could just define reward functions over logical formulas and the like, and do something like reward modeling via program induction, but at that point you're no longer using "reward" in the way it's typically understood. (This is similar to the move, made by some Humeans, that reason can only be motivating because we desire to follow reason. That's fair enough, but misses the point of calling certain kinds of motivations "reasons" at all.)
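As a rough sketch of what "reward over a logical formula" could look like, here is a finite-trace check of the LTL-style property G(promise -> F fulfilled), read as "whenever a promise is made, it is eventually fulfilled". The proposition names, the trace encoding, and the +1/-1 reward values are assumptions made for illustration; real LTL semantics are defined over infinite traces, so this is a simplified finite-trace reading.

```python
from typing import List, Set

# A trace is a list of steps; each step is the set of atomic propositions
# that hold at that step. Proposition names are hypothetical.
Trace = List[Set[str]]


def eventually(trace: Trace, start: int, prop: str) -> bool:
    """F prop, evaluated from index `start`: prop holds now or at a later step."""
    return any(prop in step for step in trace[start:])


def promises_kept(trace: Trace) -> bool:
    """Finite-trace reading of G(promise -> F fulfilled)."""
    return all(
        eventually(trace, i, "fulfilled")
        for i, step in enumerate(trace)
        if "promise" in step
    )


def formula_reward(trace: Trace) -> float:
    """Reward defined by the formula's truth value rather than by state features."""
    return 1.0 if promises_kept(trace) else -1.0


kept = [{"promise"}, set(), {"fulfilled"}]
broken = [{"promise"}, set(), set()]
no_promise = [set(), set()]
```

Note that the "reward" here is just the truth value of a structured formula over the whole history, and a trace with no promises satisfies it vacuously. The structure doing the work is the formula, not the scalar.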

(I'd cite deep learning generally, not just deep RL.)

You're right, that's what I meant, and have updated the post accordingly.

If you start with an uninformative prior and no other evidence, it seems like you should be focusing a lot of attention on the paradigm that is most successful / popular. So why is this influence "undue"?

I agree that if you start with a very uninformative prior, focusing on the most recently successful paradigm makes sense. But once you take into account slightly more information, I think there's reason to think the AI alignment community is currently overly biased towards deep learning:

- The trend-following behavior in most scientific & engineering fields, including AI, should make us skeptical that currently popular approaches are popular for the right reasons. In the 80s everyone was really excited about expert systems and the 5th generation project. About 10 years ago, Bayesian non-parametrics were really popular. Now deep learning is popular. Knowing this history suggests that we should be a little more careful about joining the bandwagon. Unfortunately, a lot of us joining the field now don't really know this history, nor are we necessarily exposed to the richness and breadth of older approaches before diving headfirst into deep learning (I only recognized this after starting my PhD and learning more about symbolic AI planning and programming languages research).

- We have extra reason to be cautious about deep learning being popular for the wrong reasons, given that many AI researchers say that we should be focusing less on machine learning while at the same time publishing heavily in machine learning. For example, at the AAAI 2019 informal debate, the majority of audience members voted against the proposition that "The AI community today should continue to focus mostly on ML methods". At some point during the debate, it was noted that despite the opposition to ML, most papers at AAAI that year were about ML, and it was suggested, to some laughter, that people were publishing in ML simply because that's what would get them published.

- The diversity of expert opinion about whether deep learning will get us to AGI doesn't feel adequately reflected in the current AI alignment community. Not everyone thinks the Bitter Lesson is quite the lesson we have to learn. A lot of prominent researchers like Stuart Russell, Gary Marcus, and Josh Tenenbaum think that we need to re-invigorate symbolic and Bayesian approaches (perhaps through hybrid neuro-symbolic methods), and if you watch the 2019 Turing Award keynotes by both Hinton and Bengio, both of them emphasize the importance of having structured generative models of the world (they just happen to think it can be achieved by building the right inductive biases into neural networks). In contrast, outside of MIRI, it feels like a lot of the alignment community anchors towards the work that's coming out of OpenAI and DeepMind.

My own view is that the success of deep learning should be taken in perspective. It's good for certain things, and certain high-data training regimes, and will remain good for those use cases. But in a lot of other use cases, where we might care a lot about sample efficiency and rapid + robust generalizability, most of the recent progress has, in my view, been made by cleverly integrating symbolic approaches with neural networks (even AlphaGo can be seen as a version of this, if one views MCTS as symbolic). I expect future AI advances to occur in a similar vein, and for me that lowers the relevance of ensuring that end-to-end DL approaches are safe and robust.

Replies from: rohinmshah

↑ comment by Rohin Shah (rohinmshah) · 2021-01-03T20:35:07.828Z · LW(p) · GW(p)

Re: worries about "reward", I don't feel like I have a great understanding of what your worry is, but I'd try to summarize it as "while the abstraction of reward is technically sufficiently expressive, 1) it may not have the right inductive biases, and so the framework might fail in practice, and 2) it is not a good framework for thought, because it doesn't sufficiently emphasize many important concepts like logic and hierarchical planning".

I think I broadly agree with those points if our plan is to explicitly learn human values, but it seems less relevant when we aren't trying to do that and are instead trying to

provide a general method for creating AI systems that pursue some specific task, interpreted the way we meant it to be interpreted.

In this framework, "knowledge about what humans want" doesn't come from a reward function, it comes from something like GPT-3 pretraining. The AI system can "invent" whatever concepts are best for representing its knowledge, which includes what humans want.

Here, reward functions should instead be thought of as akin to loss functions -- they are ways of incentivizing particular kinds of outputs. I think it's reasonable to think on priors that this wouldn't be sufficient to get logical / hierarchical behavior, but I think GPT and AlphaStar and all the other recent successes should make you rethink that judgment.

----

The trend-following behavior in most scientific & engineering fields, including AI, should make us skeptical that currently popular approaches are popular for the right reasons.

I agree that trend-following behavior exists. I agree that this means that work on deep learning is less promising than you might otherwise think. That doesn't mean it's the wrong decision; if there are a hundred other plausible directions, it can still be the case that it's better to bet on deep learning rather than try your hand at guessing which paradigm will become dominant next. To quote Rodney Brooks:

Whatever [the "next big thing"] turns out to be, it will be something that someone is already working on, and there are already published papers about it. There will be many claims on this title earlier than 2023, but none of them will pan out.

He also predicts that the "next big thing" will happen by 2027 (though I get the sense that he might count new kinds of deep learning architectures as a "big thing" so he may not be predicting something as paradigm-shifting as you're thinking).

Whether to diversify depends on the size of the field; if you have 1 million alignment researchers you definitely want to diversify, whereas at 5 researchers you almost certainly don't; I'm claiming that we're small enough now and uninformed enough about alternatives to deep learning that diversification is not a great approach.

We have extra reason to be cautious about deep learning being popular for the wrong reasons, given that many AI researchers say that we should be focusing less on machine learning while at the same time publishing heavily in machine learning.

Just because AI research should diversify doesn't mean alignment research should diversify -- given their relative sizes, it seems correct for alignment researchers to focus on the dominant paradigm while letting AI researchers explore the space of possible ways to build AI. Alignment researchers should then be ready to switch paradigms if a new one is found.

A lot of prominent researchers like Stuart Russell, Gary Marcus, and Josh Tenenbaum all think that we need to re-invigorate symbolic and Bayesian approaches (perhaps through hybrid neuro-symbolic methods)

This feels like the most compelling argument, since it identifies particular other approaches (though still very large ones). Some objections from the outside view:

- I think all three of the researchers you mentioned have long timelines; work is generally more useful on shorter timelines, so this should bias you towards what is currently popular. Some of these researchers don't think we can get to AGI at all; as long as you aren't confident that they are correct, you should ignore that position (if we're in that world, then there isn't any AI alignment x-risk, so it isn't decision-relevant).

- I find the arguments given by these researchers to be relatively weak and easily countered, and am more inclined to use inside-view arguments as a result. (Though I should note that I think that it is often correct to trust in an expert even when their arguments seem weak, so this is a relatively minor point.)

(Re: Hinton and Bengio, I feel like that's in support of the work that's currently being done; the work that comes out of those labs doesn't seem that different from what comes out of OpenAI and DeepMind.)

Going to the inside view on neurosymbolic AI:

(even AlphaGo can be seen as a version of this, if one views MCTS as symbolic)

I feel like if you endorse this then you should also think of iterated amplification as neurosymbolic [LW · GW] (though maybe you think if humans are involved that's "neurohuman" rather than neurosymbolic and the distinction is relevant for some reason).

Overall, I do expect that neurosymbolic approaches will be helpful and used in many practical AI applications; they allow you to encode relevant domain knowledge without having to learn it all from scratch. I don't currently see that it introduces new alignment problems, or changes how we should think about the existing problems that we work on, and that's the main reason I don't focus on it. But I certainly agree with that as a background model of what future AI systems will look like, and if someone identified a problem that happens with neurosymbolic AI that isn't addressed by current work in AI alignment, I'd be pretty excited to see research solving that problem, and might do it myself.

----

Things I do agree with:

- It would be significantly better if the average / median commenter on the Alignment Forum knew more about AI techniques. (I think this is also true of deep learning.)

- There will probably be something in the future that radically changes our beliefs about AGI.

↑ comment by Stuart_Armstrong · 2021-01-12T16:13:44.578Z · LW(p) · GW(p)

(I do think ontological shifts continue to be relevant to my description of the problem, but I've never been convinced that we should be particularly worried about ontological shifts, except inasmuch as they are one type of possible inner alignment / robustness failure.)

I feel that the whole AI alignment problem can be seen as problems with ontological shifts: https://www.lesswrong.com/posts/k54rgSg7GcjtXnMHX/model-splintering-moving-from-one-imperfect-model-to-another-1 [LW · GW]

Replies from: rohinmshah

↑ comment by Rohin Shah (rohinmshah) · 2021-01-12T19:47:57.463Z · LW(p) · GW(p)

I think I agree at least that many problems can be seen this way, but I suspect that other framings are more useful for solutions. (I don't think I can explain why here, though I am working on a longer explanation of what framings I like and why.)

What I was claiming in the sentence you quoted was that I don't see ontological shifts as a huge additional category of problem that isn't covered by other problems, which is compatible with saying that ontological shifts can also represent many other problems.

Replies from: Stuart_Armstrong

↑ comment by Stuart_Armstrong · 2021-01-13T10:04:21.800Z · LW(p) · GW(p)

(I don't think I can explain why here, though I am working on a longer explanation of what framings I like and why.)

Cheers, that would be very useful.

comment by lsusr · 2021-08-14T03:51:17.347Z · LW(p) · GW(p)

I do not doubt that the AI alignment community is philosophically homogeneous and that homogeneity hinders the epistemic process. I also agree that it tends to be very consequentialist and that consequentialist ethics should not be considered axiomatic. The AI control problem stems from the unstated assumption that a powerful AI is a consequentialist machine. I think that AI should not, in general, be considered a consequentialist machine [LW · GW].

What do we do about this? I can't speak directly for the AI alignment community, but I can speak about the rationality community. The rationalist community and the AI alignment community are so closely connected that improvements to one ought to spill into the other.

The simplest thing is to promote actual diversity (especially intellectual diversity). That's a lot easier to say than to do. This field tends to attract extreme people. Intense intellectual selective pressure promotes intellectual homogeneity. I push back against intellectual homogeneity by writing about Eastern thought.

Chinese readers occasionally message me about how my rationalist writings related to classical literature (such as Sunzi's The Art of War and the poetry of Li Bai) have made them feel welcome in the rationalist community in a way nothing else has. For my non-Chinese readers, this approach provides an on-boarding process into alien ways of thought.

comment by adamShimi · 2021-01-03T12:41:19.768Z · LW(p) · GW(p)

This is a really cool post. Do you have book/blog recommendations for digging into non-Western philosophies?

On the philosophical tendencies you see, I would like to point out some examples which don't follow these tendencies. But on the whole I agree with your assessment.

- For symbolic AI, I would say a big part of the Agent Foundations researchers (which includes a lot of MIRI researchers and people like John S. Wentworth) definitely do consider symbolic AI in their work. I won't go as far as saying that they don't care about connectionism, but I definitely don't get a "connectionism or nothing" vibe from them.

- For cognitivist AI, examples of people thinking in terms of internal behaviors are Evan Hubinger from MIRI and Richard Ngo, who worked at DeepMind and is now doing a PhD in Philosophy at Oxford.

- For reasonableness/sense-making, researchers on Debate (like Beth Barnes, Paul Christiano, Joe Collman) and the people I mentioned in the symbolic AI point seem to also consider more argumentative and logical forms of rationality (in combination with decision-theoretic reasoning).

4. Pluralism as respect for the equality and autonomy of persons

This feels like something that a lot of current research focuses on. Most people trying to learn values and preferences focus on the individual preferences of people at a specific point in time, which seems pretty good for respecting the differences in value. The way this wouldn't work would be if the specific formalism (like utility functions over histories) was really biased against some forms of value.

Furthermore, when it comes to human values, then at least in some domains (e.g. what is beautiful, racist, admirable, or just), we ought to identify what's valuable not with the revealed preference or even the reflective judgement of a single individual, but with the outcome of some evaluative social process that takes into account pre-existing standards of valuation, particular features of the entity under evaluation, and potentially competing reasons for applying, not applying, or revising those standards.

As it happens, this anti-individualist approach to valuation isn't particularly prominent in Western philosophical thought (but again, see Anderson). Perhaps then, by looking towards philosophical traditions like Confucianism, we can develop a better sense of how these normative social processes should be modeled.

Do you think this relates to ideas like computational social choice? I guess the difference with the latter comes from it taking individual preferences as building blocks, where you seem to want community norms as primitives.

I definitely don't know Confucianism well enough to discuss it in this context, but I'm really not convinced by the value of all social norms. For some (like those around language and morality), the Learning Normativity agenda [AF · GW] of Abram feels relevant.

I think this methodology is actually a really promising way to deal with the question of ontological shifts. Rather than framing ontological shifts as quasi-exogenous occurrences that agents have to respond to, it frames them as meta-cognitive choices that we select with particular ends in mind.

My first reaction is horror at imagining how this approach could allow an AGI to take a decision with terrible consequences for humans, and then change its concepts to justify it to itself. Being more charitable with your proposal, I do think that this can be a good analysis perspective, especially for understanding reward tampering problems. But I want the algorithm/program dealing with ontological crises to keep some tethers to important things I want it aligned to. So in some sense, I want AGIs to be some form of realists about concepts like corrigibility and impact.