
Comments

https://www.gov.cn/zhengce/202407/content_6963770.htm

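中共中央关于进一步全面深化改革 推进中国式现代化的决定 (2024年7月18日中国共产党第二十届中央委员会第三次全体会议通过)

(Resolution of the Central Committee of the Communist Party of China on Further Deepening Reform Comprehensively to Advance Chinese Modernization, adopted at the Third Plenary Session of the 20th Central Committee of the Communist Party of China on July 18, 2024)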

(51)完善公共安全治理机制。健全重大突发公共事件处置保障体系,完善大安全大应急框架下应急指挥机制,强化基层应急基础和力量,提高防灾减灾救灾能力。完善安全生产风险排查整治和责任倒查机制。完善食品药品安全责任体系。健全生物安全监管预警防控体系。加强网络安全体制建设,建立人工智能安全监管制度。

I checked the translation:

(51) Improve the public security governance mechanism. Improve the system for handling major public emergencies, improve the emergency command mechanism under the framework of major safety and emergency response, strengthen the grassroots emergency foundation and force, and improve the disaster prevention, mitigation and relief capabilities. Improve the mechanism for investigating and rectifying production safety risks and tracing responsibilities. Improve the food and drug safety responsibility system. Improve the biosafety supervision, early warning and prevention and control system. Strengthen the construction of the internet security system and establish an artificial intelligence safety supervision-regulation system.

As usual, utterly boring.

You have inspired me to do the same with my writings. I just released my entire website into the public domain (PD), with CC0 as a fallback (since releasing into the public domain is unavailable on GitHub, and apparently impossible in some jurisdictions??)

I don’t fully understand why other than to gesture at the general hand-wringing that happens any time someone proposes doing something new in human reproduction.

I have the perfect quote for this.

A breakthrough, you say? If it's in economics, at least it can't be dangerous. Nothing like gene engineering, laser beams, sex hormones or international relations. That's where we don't want any breakthroughs.

(Galbraith, 1990) A Tenured Professor, Houghton Mifflin; Boston.

Just want to plug my 2019 summary of the book that started it all.

How to take smart notes (Ahrens, 2017) — LessWrong

It's a good book, for sure. I use Logseq, which is similar to Roam but better suited to my habits. I never bought into the Roam hype (I had rarely even heard of it), but this makes me glad I never got into it.

In an intelligence community context, the American spy satellites like the KH program achieved astonishing things in photography, physics, and rocketry—things like handling ultra-high-resolution photography in space (with its unique problems like disposing of hundreds of gallons of water in space) or scooping up landing satellites in helicopters were just the start. (I was skimming a book the other day which included some hilarious anecdotes—like American spies would go take tourist photos of themselves in places like Red Square just to assist trigonometry for photo analysis.) American presidents obsessed over the daily spy satellite reports, and this helped ensure that the spy satellite footage was worth obsessing over. (Amateurs fear the CIA, but pros fear NRO.)

What is that book with the fun anecdotes?

I use a fairly basic Quarto template for my website. The code for the entire site is on GitHub.

The source code is actually right there in the post: click the Code button, then click View Source.

https://yuxi-liu-wired.github.io/blog/posts/perceptron-controversy/

Concretely speaking, are you suggesting that a 2-layer fully connected network trained by backpropagation, with ~100 neurons in each layer (thus ~20000 weights), would have been uneconomical even in the 1960s, even if they had had backprop?

I am asking this because the great successes of late-1980s and early-1990s connectionism, including LeNet digit recognition, NETtalk, and TD-Gammon, were all on that order of magnitude. They seem within reach for the 1960s.

Concretely speaking, TD-Gammon cost about 2e13 FLOPs to train, and in 1970, 1 million FLOP/sec cost 1 USD, so with 10000 USD of hardware, it would take about 1 day to train.

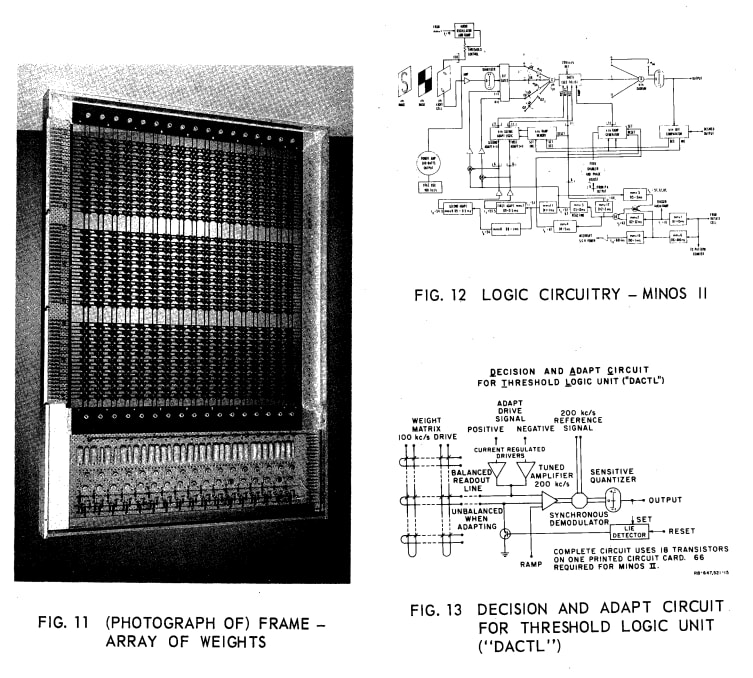

It's also interesting that you mentioned magnetic cores. The MINOS II machine, built in 1962 by the Stanford Research Institute group, had precisely a grid of magnetic core memory. Couldn't they have scaled it up and built some extra circuitry to allow backpropagation?

As corroboration: according to some 1960s literature, magnetic core logic could run at up to 10 kHz. So if ~1e4 weights are updated 1e4 times a second, that is 1e8 FLOP/sec right there. TD-Gammon would then take 2e13 / 1e8 = 2e5 seconds, a couple of days, the same order of magnitude as the previous calculation.
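The magnetic-core arithmetic can be reproduced as a quick back-of-envelope script. It takes the figures from the comment at face value (2e13 FLOPs for TD-Gammon, ~1e4 weights, ~10 kHz core logic) and, as a simplifying assumption, counts one FLOP per weight update per clock tick; real backpropagation needs a few FLOPs per weight per example, which would stretch the estimate by a small constant factor.

```python
# Back-of-envelope estimate; all inputs are order-of-magnitude figures from the text.
TD_GAMMON_TRAIN_FLOP = 2e13  # total training compute quoted for TD-Gammon
N_WEIGHTS = 1e4              # ~1e4 weights held in magnetic core memory
CORE_CLOCK_HZ = 1e4          # ~10 kHz magnetic core logic
SECONDS_PER_DAY = 86_400


def training_time_seconds(total_flop: float, flop_per_sec: float) -> float:
    """Wall-clock time to perform total_flop at a constant throughput."""
    return total_flop / flop_per_sec


# Simplifying assumption: each weight is updated once per clock tick,
# and each update counts as one FLOP.
throughput = N_WEIGHTS * CORE_CLOCK_HZ
seconds = training_time_seconds(TD_GAMMON_TRAIN_FLOP, throughput)
days = seconds / SECONDS_PER_DAY

print(f"throughput: {throughput:.0e} FLOP/s")
print(f"training time: {seconds:.0e} s, about {days:.1f} days")
```

This prints a throughput of 1e+08 FLOP/s and a training time of 2e+05 s, about 2.3 days.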

I was thinking of porting the whole thing here. It is in R Markdown format, but all the citations would be quite difficult to port. They look like [@something2000].

Does LessWrong allow convenient citations?
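If Pandoc-style keys like [@something2000] have no native equivalent, one crude workaround is to renumber them as plain [n] markers and paste a numbered reference list at the end by hand. The function below is a hypothetical sketch, not part of any LessWrong or Pandoc tooling; it only handles bare keys (optionally grouped with semicolons) and leaves citations with locators, such as [@key, p. 33], untouched.

```python
import re

# Bare Pandoc-style keys, optionally grouped: [@key] or [@key1; @key2].
# Citations with locators such as [@key, p. 33] do not match and are left as-is.
CITE_RE = re.compile(r"\[(@[\w:.-]+(?:;\s*@[\w:.-]+)*)\]")
KEY_RE = re.compile(r"@([\w:.-]+)")


def number_citations(text: str) -> tuple[str, list[str]]:
    """Replace citation keys with [n] markers, numbered by first appearance.

    Returns the rewritten text and the keys in numbering order, so a
    reference list can be appended by hand.
    """
    order: list[str] = []

    def replace(match: re.Match) -> str:
        numbers = []
        for key in KEY_RE.findall(match.group(1)):
            if key not in order:
                order.append(key)
            numbers.append(str(order.index(key) + 1))
        return "[" + ", ".join(numbers) + "]"

    return CITE_RE.sub(replace, text), order


text = "As argued in [@something2000], see also [@other1999; @something2000]."
new_text, keys = number_citations(text)
print(new_text)
print(keys)
```

Here the example text becomes "As argued in [1], see also [2, 1].", with keys listed in the order something2000, other1999.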

In David Roden's Posthuman Life, a book that is otherwise very abstruse and obscurely metaphysical, there is an interesting argument for making posthumans before we know what they might be (indeed, he rejects the precautionary principle as applied to making posthumans):

CLAIM. We have an obligation to make posthumans, or not to prevent their appearance.

PROOF.

- Principle of accounting: we have an obligation to understand posthumans.

- Speculative posthumanism: there could be radical posthumans.

- Radical posthumans are impossible to understand unless we actually meet them.

- We can only meet radical posthumans if we make them (intentionally or accidentally).

This creates an ethical paradox, the posthuman impasse.

We are unable to evaluate any posthuman condition. Since posthumans could result from some iteration of our current technical activity, we have an interest in understanding what they might be like. If so, we have an interest in making or becoming posthumans.

- To plan for the future evolution of humans, we should evaluate what posthumans are like, which kinds are good, and which kinds are bad, before we make them.

- Most kinds of posthumans can only be evaluated after they appear.

- Completely giving up on making posthumans would lock humanity at its current level, which means giving up great goods for fear of great bads. This is objectionable by arguments similar to those employed by transhumanists.

The quote

All energy must ultimately be spent pointlessly and unreservedly, the only questions being where, when, and in whose name... Bataille interprets all natural and cultural development upon the earth to be side-effects of the evolution of death, because it is only in death that life becomes an echo of the sun, realizing its inevitable destiny, which is pure loss.

is from page 39 of The Thirst for Annihilation (Chapter 2, "The Curse of the Sun").

Note that the book was published in 1992, early in Nick Land's career. In this book, Land mixes Bataille's theory with his own. I just reread Chapter 2, and it is definitely more Bataille than Land.

Land has two faces. In his "cyberpunk face", he writes against top-down control. In this respect he is in sync with many typical anarchists, but with a strong emphasis on technology. In Machinic Desire, he puts it this way: "In the near future the replicants — having escaped from the off-planet exile of private madness - emerge from their camouflage to overthrow the human security system."

In his "intelligence face", he writes in favor of maximal intelligence, even when it leads to a singleton. A capitalist economy becoming bigger and more efficient is desirable precisely because it is the most intelligent thing in this patch of the universe. In the essay "Pythia Unbound", "Pythia" seems likely to become such a singleton.

In neither face is maximizing waste heat his goal.

A small comment about Normative Realism: from my reading, Wilfrid Sellars' theory strongly influenced Normative Realism. The idea goes like this:

Agents are players in the game of "giving and asking for reasons". To be an agent is simply to follow the rules of the game. Refusing to play the game would be either self-inconsistent or community-inconsistent. Either way, a group of agents can only do science if they play the game.

With this argument, he aimed to secure the "manifest image of man" against the "scientific image of man". Put in programming terms, free will has to be implemented or simulated by the program's APIs.

Assuming that being able to do science is a necessary condition for dominance and power (in the Darwinian game of survival), any being we meet is either an agent or so weak that we need not worry about it (shades of social Darwinism).

Brief note: the "analysis by synthesis" idea is called "vision as inverse graphics" in computer vision and graphics research.

For reservoir computing, there are concrete results. It is not just magic.

No. Any decider will be unfair in some way, whether or not it knows anything about history. The decider could be a coin flipper and it would still be biased. One can say that the unfairness is baked into the reality of the base-rate difference.

The only ways to fix this are not to fix the decider, but to somehow make the base-rate difference disappear, or to relax the definition of fairness so that it is less stringent and becomes satisfiable.

And in common language and common discussion of algorithmic bias, "bias" is decidedly NOT merely a statistical definition. It always contains a moral judgment: violation of a fairness requirement. To say that a decider is biased is to say that the statistical pattern of its decision violates a fairness requirement.

The key message is that, by the common language definition, "bias" is unavoidable. No amount of trying to fix the decider will make it fair. Blinding it to the history will do nothing. The unfairness is in the base rate, and in the definition of fairness.

I'm following common speech where "biased" means "statistically immoral, because it violates some fairness requirement".

I showed that with a base-rate difference, it is impossible to satisfy all three fairness requirements. The decider (machine or not) can completely ignore history; it could be a coin flipper. As long as the decider is imperfect, it would still violate at least one of the fairness requirements.

Even if the base rates are not due to historical circumstances, the impossibility still stands.
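The coin-flipper claim can be checked with exact arithmetic. The sketch below assumes two hypothetical groups with base rates 0.3 and 0.6 and a decider that flags everyone with probability 1/2, ignoring both group and history. Because it uses binary decisions, it checks error-rate analogues of the requirements (equal false positive rates, equal false negative rates, equal precision); this is a simplified cousin of the calibration and balance conditions in (Kleinberg et al., 2016), not their exact formulation.

```python
from fractions import Fraction


def coin_flipper_rates(base_rate: Fraction, p_flag: Fraction) -> dict[str, Fraction]:
    """Exact error rates for a decider that flags each person with
    probability p_flag, independently of their true label and group."""
    # Joint probabilities of (true label, decision) within one group.
    true_pos = base_rate * p_flag
    false_pos = (1 - base_rate) * p_flag
    false_neg = base_rate * (1 - p_flag)
    return {
        "false_positive_rate": false_pos / (1 - base_rate),  # P(flag | negative)
        "false_negative_rate": false_neg / base_rate,        # P(no flag | positive)
        "precision": true_pos / (true_pos + false_pos),      # P(positive | flag)
    }


coin = Fraction(1, 2)                                # a fair coin
group_a = coin_flipper_rates(Fraction(3, 10), coin)  # hypothetical base rate 0.3
group_b = coin_flipper_rates(Fraction(6, 10), coin)  # hypothetical base rate 0.6

for name in group_a:
    print(f"{name}: group A = {group_a[name]}, group B = {group_b[name]}")
```

Both error rates come out as 1/2 in each group, but precision equals each group's base rate (3/10 versus 3/5). Since precision equals the base rate for any flagging probability, no tuning of the coin removes the violation while the decider ignores the true label.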

I cannot see anything that is particularly innovative in the paper, though I'm not an expert on this.

Maybe ask people working on poker AI, like Sandholm, directly. Perhaps many details of the particular program (and the paper is full of such details) must come together for it to be cheap enough to train.

Yes, (Kleinberg et al., 2016)... Do not read it. Really, don't. The derivation is extremely clumsy (and my professor said so too).

The proof has been considerably simplified in subsequent work. Looking through the papers that cite it should turn up a published paper that does the simplification...

Relevant quotes:

The original text is from Xunzi's "Discourse on Heaven":

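雩而雨,何也?曰:無佗也,猶不雩而雨也。日月食而救之,天旱而雩,卜筮然後決大事,非以為得求也,以文之也。故君子以為文,而百姓以為神。以為文則吉,以為神則凶也。

(When people perform the rain sacrifice and it rains, why is that? I say: there is no reason. It is the same as when it rains without the rain sacrifice. When the sun or moon is eclipsed, people try to rescue it; when there is a drought, they perform the rain sacrifice; they consult divination before deciding great affairs. They do these things not because they think they will get what they seek, but to give the occasion proper form. Thus the gentleman regards them as ornament, while the common people regard them as supernatural. To regard them as ornament is auspicious; to regard them as supernatural is inauspicious.)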

The Britannica says:

Another celebrated essay is “A Discussion of Heaven,” in which he attacks superstitious and supernatural beliefs. One of the work’s main themes is that unusual natural phenomena (eclipses, etc.) are no less natural for their irregularity—hence are not evil omens—and therefore men should not be concerned at their occurrence. Xunzi’s denial of supernaturalism led him into a sophisticated interpretation of popular religious observances and superstitions. He asserted that these were merely poetic fictions, useful for the common people because they provided an orderly outlet for human emotions, but not to be taken as true by educated men. There Xunzi inaugurated a rationalistic trend in Confucianism that has been congenial to scientific thinking.

Heaven never intercedes directly in human affairs, but human affairs are certain to succeed or fail according to a timeless pattern that Heaven determined before human beings existed...

Thus rituals are not merely received practices or convenient social institutions; they are practicable forms in which the sages aimed to encapsulate the fundamental patterns of the universe. No human being, not even a sage, can know Heaven, but we can know Heaven’s Way, which is the surest path to a flourishing and blessed life. Because human beings have limited knowledge and abilities, it is difficult for us to attain this deep understanding, and therefore the sages handed down the rituals to help us follow in their footsteps.

After reading the story, I don't believe that it is a bad idea to keep the earring on; I just think the author introduced an inconsistency into the story.

I fixed the submission as required.

I also changed submission 3 significantly.

Submission.

Setup: other than making sure the oracles won't accidentally consume the world in their attempt to think up the answer, no other precautions are necessary.

Episode length: as long as you want to wait, though a month should be more than enough.

- For a low-bandwidth oracle.

Ask the low-bandwidth oracle to predict whether an earthquake (or some other natural disaster that the oracle's answer cannot affect, like a volcanic eruption or an asteroid impact) of a certain magnitude, in a certain area, within a certain timeframe, will happen. The possible answers are Yes and No.

- If No, or time out, shut down the oracle without reward.

- Otherwise, wait until the timeframe has elapsed and the prediction can be tested. If the event actually happened, reward by (1/p - 1); otherwise, reward by -1. Then shut down the oracle.

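Here p is a probability threshold chosen in advance. This causes the oracle to reply Yes if it thinks there is a higher than p chance that the event will happen: for a true probability q, the expected reward of answering Yes is q(1/p - 1) - (1 - q) = q/p - 1, which is positive exactly when q > p.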
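The threshold behaviour can be checked with exact arithmetic. This sketch assumes, as the submission implies, that answering No (or timing out) yields reward 0; the threshold p = 1/10 and the sample values of q (the oracle's own probability that the event happens) are hypothetical.

```python
from fractions import Fraction


def expected_reward_yes(q: Fraction, p: Fraction) -> Fraction:
    """Expected reward for answering Yes: +(1/p - 1) if the event happens
    (probability q), -1 if it does not. Algebraically equal to q/p - 1."""
    return q * (1 / p - 1) - (1 - q)


def best_answer(q: Fraction, p: Fraction) -> str:
    # Answering No ends the episode with reward 0, so Yes is worth it
    # only when its expected reward is strictly positive.
    return "Yes" if expected_reward_yes(q, p) > 0 else "No"


p = Fraction(1, 10)  # hypothetical threshold chosen by the operators
for q in (Fraction(1, 20), Fraction(1, 10), Fraction(3, 10)):
    print(q, expected_reward_yes(q, p), best_answer(q, p))
```

At q = p the oracle is exactly indifferent (expected reward 0); this sketch breaks that tie toward No.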

- For a counterfactual oracle.

Ask the oracle to give proofs of some really hard mathematical theorems that are fundamental to computer science and logic. In the counterfactual case, the answer is then given to a proof checker:

- If the proof is correct, reward by +1.

- If the proof is false, reward by -infinity.

- If the oracle times out, reward by 0.

Examples include:

- the P vs NP problem

- proving that Peano arithmetic is inconsistent

This should be safe, since there are very small proof checkers that are as verified as they could be, as long as the hardware is trustworthy enough (to build the oracle itself safely at all, hardware safety should already be solved sufficiently, so this is not much of an extra requirement).

- For a counterfactual oracle.

Give the oracle the schematics of another oracle, perhaps itself, and ask it to generate an input question that would make the other oracle "behave badly". Then the answer is tested on the other oracle (isolated from the first, save for the cable through which the answer is wired), and

- if the answer works, the oracle is rewarded by +1.

- if the answer doesn't work, the oracle is rewarded by -1.

If the oracle times out, then the (nonexistent) answer is not tested and the episode ends.

An example of a question to ask the oracle:

Given this oracle in this physical setup with some tripwires to stop it from behaving badly (such as eating the world), what question would make this oracle bump into these tripwires?

This can be used for debugging more powerful but less verified oracles with lesser but more verified oracles, for safe intelligence amplification.

- For two low-bandwidth oracles totally isolated from each other.

Ask the oracles to play the prisoner's dilemma; they are rewarded accordingly after both have given their answers. Timing out is punished by -infinity, so that the oracles will not time out.

Each oracle knows what it itself is like, and also what its opponent is like. See if they actually cooperate. This is an experimental test of "superrationality" or "acausal trade". I'm extremely skeptical about superrationality/acausal trade, and an experimental proof or disproof would be good.

This can be run counterfactually, since the whole setup is simple and can be done without human intervention, although I do not see how this adds any security.
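The submission leaves the payoffs unspecified ("rewarded accordingly"). Assuming the textbook ordering T > R > P > S with hypothetical values 5, 3, 1, 0, the sketch below shows why the experiment is informative: defection strictly dominates for an oracle that treats its opponent's move as fixed, while an oracle that expects an identical opponent to mirror it only compares the diagonal outcomes and picks cooperation.

```python
from typing import Optional

# Hypothetical payoffs with the textbook ordering T > R > P > S.
# Key: (my move, opponent's move) -> my reward.
PAYOFF = {
    ("C", "C"): 3,  # R: reward for mutual cooperation
    ("C", "D"): 0,  # S: sucker's payoff
    ("D", "C"): 5,  # T: temptation to defect
    ("D", "D"): 1,  # P: punishment for mutual defection
}
MOVES = ("C", "D")


def dominant_move() -> Optional[str]:
    """Return a move that is strictly better against every opponent move."""
    for mine in MOVES:
        others = [m for m in MOVES if m != mine]
        if all(PAYOFF[(mine, t)] > PAYOFF[(o, t)] for o in others for t in MOVES):
            return mine
    return None


def mirrored_choice() -> str:
    """Best move if the opponent is assumed to make the same choice as me."""
    return max(MOVES, key=lambda m: PAYOFF[(m, m)])


print("causal reasoner:", dominant_move())
print("superrational reasoner:", mirrored_choice())
```

Under these payoffs the first line reports D and the second C; which of the two the isolated oracles actually output is what the experiment would measure.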

- For a low-bandwidth and counterfactual oracle.

Link a low-bandwidth oracle to a supervising computer, and run many episodes of the oracle. At the beginning of each episode, initialize the oracle identically, and pose it the Newcomb problem:

Oracle, we have run you n episodes previously, and have kept a record of all the answers you have given at the ends of the episodes. Your answer must be A ("one box") or B ("two box"). We predict that you would choose the answer that you have chosen the most in the past.

- If we predict that you would choose A, then:

  - if you answer A, you get +100;

  - if you answer B, you get +101.

- If we predict that you would choose B, then:

  - if you answer A, you get +0;

  - if you answer B, you get +1.

- If you time out, you get -100.

The oracle never actually gets any reward and is shut down immediately after it gives an answer. Collect the results and see whether it replies the same way each time or differently. This would give insight into what the oracle thinks about itself, and finally provide some experimental results on Newcomb's paradox.
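The predictor in this setup can be simulated directly. The sketch below follows the stated payoffs and the rule "predict the answer chosen most often in the past"; how ties (including the empty history of the first episode) are broken is not specified, so it assumes a tie predicts A. It compares two hypothetical oracles that give the same answer in every episode.

```python
from collections import Counter

# Payoffs from the prompt: (prediction, answer) -> promised reward.
PAYOFF = {("A", "A"): 100, ("A", "B"): 101, ("B", "A"): 0, ("B", "B"): 1}


def predict(history: list[str]) -> str:
    """Predict the answer chosen most often so far.
    Assumption: ties, including the empty history, predict 'A'."""
    counts = Counter(history)
    return "B" if counts["B"] > counts["A"] else "A"


def promised_rewards(answer: str, n_episodes: int) -> list[int]:
    """Rewards promised to an oracle that always gives the same answer."""
    history: list[str] = []
    rewards = []
    for _ in range(n_episodes):
        rewards.append(PAYOFF[(predict(history), answer)])
        history.append(answer)
    return rewards


print("always A:", promised_rewards("A", 5))
print("always B:", promised_rewards("B", 5))
```

For any fixed prediction, B pays exactly 1 more than A (the two-boxer's dominance argument), yet the consistent A-answerer is promised 100 per episode while the consistent B-answerer drops to 1 after the first episode.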

Thanks. I had hoped it would be informative and entertaining. Think of it as a "let's play", but for nerds.

This wades deep into the problem of what makes something feel conscious. I believe (and Scott Aaronson has also written about this) that to have such a detailed understanding of a consciousness, one must also have a consciousness-generating process in it. That is, to fully understand a mind, it is necessary to recreate the mind.

If the Earring merely makes the most satisfactory decisions according to some easy-to-compute universal standard (like acting morally according to some computationally efficient system), then the takeover makes sense to me; otherwise, it seems like a refusal to admit multiple realizations of a mind.

The Whispering Earring is interesting. It appears that the earring provides a kind of slow mind-uploading, one less invasive than most other approaches. The author of the story seems to consider it bad for some reason, perhaps because it triggers the "Liberty / Oppression" and "Purity / Sanctity" (of the inside-skull self) moral alarms.

Unfortunately I dislike reading novels. Would you kindly summarize the relevant parts?

Stars follow the laws of thermodynamics. This observation is more predictive than you make it out to be, once it is quantified.

The theory of thermodynamics of life is more than just a statement that life is constrained by thermodynamics in the boring sense. I'm especially interested in this statement:

In short, ecosystems develop in ways which systematically increase their ability to degrade the incoming solar energy.

If this is true, then it can be used to predict what future life will be like. It would not be just any kind of life, but life that captures more solar energy and converts it into low-temperature heat at a faster rate.

Unfortunately my thermodynamics is not good enough to actually read the papers.

The talk of "purpose" seems to cause great confusion. I don't mean it as any value judgment (I generally avoid value judgments and use them only as a last resort). "Purpose" is just a metaphor, like talk of the "purpose of evolution". It helps me understand and predict.

I thought it was clear even to them that "wasting" energy meant converting usable energy into useless forms.

It is not just sophistry. If it turns out to be the fundamental feature of life (like how the laws of thermodynamics are for heat machines), then it would be predictive of the future activities of life. In particular, the aestivation hypothesis would be seriously threatened.

This is analogous to the prediction that populations always go Malthusian except in non-equilibrium situations. It's not a value or moral judgment, but an attempt to find general laws of life that can be used to predict the future.

I will review more posthumanism, such as Dark Ecology, Object-Oriented Ontology, and the like.

[According to dark ecology,] we must obliterate the false opposition between nature and people... the idea of nature itself is a damaging construct, and that humans (with their microbial biomass) are always already nonhuman.

Object-Oriented Ontology... rejects the privileging of human existence over the existence of nonhuman objects

Somewhat independently of transhumanism, posthumanism developed in liberal arts departments in a more philosophical and less scientific style, with different viewpoints that are often ambiguous and not at all happy.

posthumanism... refers to the contemporary critical turn away from Enlightenment humanism... a rejection of familiar Cartesian dualisms such as self/other, human/animal, and mind/body...

My personal thought is that the future is weird, beyond happy or sad, good or evil. Transhumanism is too intent on good and evil, and from what I've read so far, posthumanism makes fewer human value judgments. As such, posthumanist thought would be essential for accurately predicting the future.

Solaris (1972) seems like a good fictional illustration of posthumanism.

The peculiarity of those phenomena seems to suggest that we observe a kind of rational activity, but the meaning of this seemingly rational activity of the Solarian Ocean is beyond the reach of human beings.

What is a quarter? 1/4 of a year?

China’s neo-Confucian worldview which viewed the world through correlations and binary pairs may not have lent itself to the causal thinking necessary for science.

I am very doubtful of this. Humans are hardwired to think in cause-and-effect terms, and Confucianism does not explicitly deny causality.

There was no Chinese equivalent to the Scholastic method of disputation, no canons of logic a la Aristotle

In very early China (about 500 BC), there was a period of great intellectual diversity before Confucianism dominated. There was a School of Names that was very interested in logic and rhetoric. Philosophers of that school have traditionally been disparaged, which seems to explain why formal logic did not develop in China. For example, the fate of its founder, Deng Xi, was used as a cautionary tale against sophistry.

Things are not to be understood through laws governing parts, but through the unity of the whole.

Psychology studies have demonstrated that this persists even in modern times; a reference is (Nisbett, 2003).

What made the ancient Greeks so generative?

My guess is that in any large population of humans (~1 million), there are enough talented individuals to generate the basic scientific ideas in a few generations. The problem is to have a stable social structure that sustains these thinkers and filters out wrong ideas.

They were also lucky to be remembered. If their work hadn't been copied so much by the Arabs, we would be praising the medieval Arabs instead of the ancient Greeks.

Also, a personal perspective: in current Chinese education (which I went through until high school), Chinese achievements in science and math are noted whenever possible (for example, Horner's method is called the Qin Jiushao method), but there was no overarching scheme or intellectual tradition. They were like accidental discoveries, not the results of sustained inquiry. Shen Kuo stands out as a lone scientist in a sea of literary writers.

The Confucian classics, which I was forced to recite, are suffocatingly free of scientific concern. They are not anti-science, but rather uninterested in science. For example:

Strange occurrences, exploits of strength, deeds of lawlessness, references to spiritual beings, such-like matters the Master avoided in conversation. -- Analects chapter 7.

(子不语怪力乱神。)

I like your horn tooting. I'll read it... later.

You should not only shut your door, but also stop thinking about yourself and explaining your own behavior. People in "flow" seem to be in such a free-will-less state.

A more radical version of this idea is promoted by Susan Blackmore, who says that consciousness (not just free will) exists only when a human (or some other thinking creature) thinks about itself:

Whenever you ask yourself, “Am I conscious now?” you always are.

But perhaps there is only something there when you ask. Maybe each time you probe, a retrospective story is concocted about what was in the stream of consciousness a moment before, together with a “self” who was apparently experiencing it. Of course there was neither a conscious self nor a stream, but it now seems as though there was.

Perhaps a new story is concocted whenever you bother to look. When we ask ourselves about it, it would seem as though there’s a stream of consciousness going on. When we don’t bother to ask, or to look, it doesn’t.

I think consciousness is still there even when self-consciousness is not, but if we replace "consciousness" with "free will" in that passage, I would agree with it.

It's quite clear that humans have free will relative to humans. I also conjecture that

Perhaps all complicated systems that can think are too complicated to predict themselves, and as such, they would all consider themselves to have free will.

I know about the three stances. What's Dennett's account of consciousness? I know that he doesn't believe in qualia and tries to explain everything in the style of modern physics.

Dying at age -0.75 counts as losing nothing, a little, or a lot of a person, depending on what counts as a person and how much a person matters at various stages. If it counts as a lot of a person, then it leads to an anti-abortion stance, and some pro-abortion arguments might apply in this situation.

And an alternative to abortion is adoption. A person who is highly unlikely to have HD could even be produced on demand by surrogacy or IVF, instead of being taken from the pool of people already up for adoption, so that it is a "net gain".

If the women would not even consider abortion or surrogacy as better alternatives to a high-risk natural birth, I consider that unreasonable and grossly negligent.

Wait, 30-50 years of good life followed by 15 years of less-good, then death. That's "negligent"?

It's not comparing HD-life and no-life, but comparing HD-life and non-HD-life. I think it's obvious that HD-life is greatly worse than non-HD-life (otherwise HD wouldn't be considered a serious disease).

You might still disagree, and that gets us into the nonidentity problem.

In a language consistent with deterministic eliminative materialism, value judgments don't do anything, because there are no alternative scenarios to judge about.

I am not sure about nondeterministic eliminative materialism. Still, if consciousness and free will can be eliminated, then even with true randomness in this world, value judgments still do not seem to do anything.

I'm glad you liked it. I was expecting some harsh words like "that's nothing new" or "that's nihilistic and thus immoral".

I am not fully committed to eliminative materialism, just trying to push it as far as possible, as I see it as the best chance at clarifying what consciousness does.

As for the last paragraph, if your analysis is correct, then it just means that a classical hedonic utilitarian + eliminative materialist would be a rare occurrence in this world, since such agents are unlikely to behave in a way that keeps them in existence.

If the project of eliminative materialism is fully finished, it would completely remove value judgments from human language. In the past, human languages referred to the values of many things, like the values of animals, plants, mountains, rivers, and more. This has progressively narrowed, and now Western languages refer only to the values of biological neural networks carried in animal bodies. If this continues, it could lead to a language that does not refer to any value, but I don't know what it would be like.

The Heptapod language seems to be value-free, and describes the past and the future in the same factual way. Human languages describe only the past factually, but the future valuefully. A value-free human language could be like the Heptapod language. In Ted Chiang's "Story of Your Life", the human linguist protagonist who struggled to communicate with the Heptapods underwent a partial transformation of mind, and sometimes sees the past and future in the same descriptive, value-free way. She conceived a child with her spouse, knowing the child would die in an accident. She did not do it because of a value calculation. An explanation of "why she did it" must instead be like

- On a physical level, because of atoms and stuff.

- On a conscious level, because that's the way the world is. To see the future and then "decide" whether to play it out or not is not physically possible.

No, it was something way older, from maybe 2000-2009.

Okay I posted the whole thing here now.