Torture and Dust Specks and Joy--Oh my! or: Non-Archimedean Utility Functions as Pseudograded Vector Spaces

post by Louis_Brown · 2019-08-23T11:11:52.802Z · 29 comments


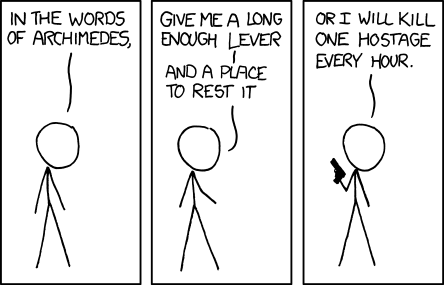

A dozen years ago, Eliezer Yudkowsky asked us which was less (wrong?) bad:

- 3^^^3 people each getting a dust speck in their eyes,

or

- 1 person getting horribly tortured continually for 50 years.

He cheekily ended the post with "I think the answer is obvious. How about you?"

To this day, I do not have a clue which answer he thinks is obviously true, but I strongly believe that the dust specks are preferable. Namely, I don't think there's any number we can increase 3^^^3 to that would change the answer, because I think the disutility of dust specks is fundamentally incomparable with that of torture. If you disagree with me, I don't care--that's not actually the point of this post. Below, I'll introduce a generalized type of utility function which allows for making this kind of statement with mathematical rigor.

Linearity and Archimedes

A utility function is called linear if it respects scaling--5 people getting dust specked is 5 times as bad as a single dust specker. This is a natural and common assumption for what utility functions should look like.

An ordered algebraic structure (number system) is called Archimedean if for any two positive values $a$, $b$, there exist natural numbers $m$, $n$ such that $ma > b$ and $nb > a$. In other words, adding either value to itself a finite number of times will eventually outweigh the other. Any pair $a$, $b$ for which this property holds of $|a|$ and $|b|$ is called comparable. Note that we're taking the absolute value because we care about magnitude and not sign here: of course any positive number of people enjoying a sunset is better than any positive number of people being tortured, but the question remains whether there is some number $n$ of sunset-views which would outweigh a year of torture (in the sense that a world with $n$ additional sunset watchers and 1 additional year of torture would be preferable to a world with no more of either).
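For ordinary rational magnitudes, the witnesses $m$ and $n$ in this definition can always be computed, which is why a single real-valued utility scale makes every pair of utilities comparable. A minimal sketch (the function name `archimedes_witness` is our own, not from any library), using exact `Fraction` arithmetic so that floating-point rounding cannot spoil the inequality:

```python
from fractions import Fraction
import math


def archimedes_witness(a, b) -> int:
    """Return the smallest natural number m with m * |a| > |b|.

    Uses exact rational arithmetic (Fraction) so that floating-point
    rounding cannot make the returned witness fail.
    """
    a, b = abs(Fraction(a)), abs(Fraction(b))
    if a == 0:
        raise ValueError("a must be non-zero")
    # floor(|b| / |a|) copies of |a| add up to at most |b|,
    # so one more copy is the first to exceed it.
    return math.floor(b / a) + 1


# A pair is comparable when witnesses exist in both directions.
m = archimedes_witness(Fraction(1, 1000), 50)  # 50001
n = archimedes_witness(50, Fraction(1, 1000))  # 1
```

In the reals such witnesses always exist; the rest of this post is about number systems where, for some pairs, they do not.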

Another way to think about comparability is that both values are relevant to an analysis of world state preference: a duster does not need to know how many dust specks are in world 1 or world 2 if they know that world 2 has more torture than world 1, because the preference is already decided by the torture differential.

Convicted dusters now face a dilemma: a linear, Archimedean utility function necessarily yields some number of dust specks to which torture is preferable. One resolution is to reject linearity: very smart people have discussed this at length. There is another option, though, which as far as I can tell hardly ever gets proper attention: rejecting Archimedean utilities. This is what I'll explore in this post.

Comparability

Comparability defines an equivalence relation on world states (and their utilities):

- Any world state is comparable to itself (reflexivity),

- if state 1 is comparable to state 2, then state 2 is comparable to state 1 (symmetry), and

- if state 1 is comparable to state 2 and state 2 is comparable to state 3, then state 1 is comparable to state 3 (transitivity).

These properties follow immediately from the definition of comparability provided above (for transitivity: if $m|a| > |b|$ and $k|b| > |c|$, then $km|a| > |c|$, and symmetrically in the other direction). Now we have a partition of world states into comparability classes. Note that the comparability classes are not closed under addition or subtraction, so they are not vector subspaces: 1 torture + 1 dust speck is comparable to 1 torture, but their difference, 1 dust speck, is not comparable to either.

As I said before, I don't care if you disagree about dust specks. If you think there is any pair of utilities which are in some way qualitatively distinct or otherwise incomparable (take your pick from: universe destruction, death, having corn stuck between one's teeth, the simple joy of seeing a friend, etc.--normative claims about which utilities are comparable are outside the scope of this post), then there is more than one comparability class and the utility function needs a non-Archimedean codomain to reflect this.

If you staunchly believe that every pair of utilities is comparable, that's fine--there will be only one comparability class containing all world states, and the construction below will boringly return your existing Archimedean utility function.

Pseudograded Vector Spaces

We can assign a total ordering (severity) to our collection of comparability classes: $[A] \succ [B]$ means that, for any world states in classes $[A], [B]$ with utilities $a, b$ respectively, $|a| > n|b|$ for all $n \in \mathbb{N}$. It is clear from the construction of comparability classes that this does not depend on which representatives are chosen. Note that severity does not distinguish between severe positive and severe negative utilities: the classes are defined by magnitude and not sign. Thus, the framework is agnostic on such matters as negative utilitarianism: if one believes that reducing disutility of various forms takes priority over expanding positive utility, then that will be reflected in the severity levels of these comparability classes--perhaps a large portion of the most severe classes will be disutility-reduction based, and only after that do we encounter classes prioritizing positive utility world states.

Then we can think of utilities as being vectors, where each component records the amount of utility of a given severity level. We'll write the components with most severe first (since those are the most significant ones for comparing world states). Thus, if an agent believes that there are exactly two comparability classes:

- a severe class which contains e.g. 50 years of torture, and

- a milder class containing e.g. being hit with a dust speck,

then world A with 1 person being tortured and 100 dust-speckers would have utility $(-1, -100)$, measuring each component in units of its example (one 50-year torture, one dust speck). Choosing between this and world B with no torture and 3^^^3 dust speckers is now easy: world B has utility $(0, -3\uparrow\uparrow\uparrow 3)$, and since the first component is greater in world B ($0 > -1$), it is preferable no matter what the second component is. If this seems unreasonable to the reader, it is not a fault of the mathematics but of the normative assumption that dust specks are incomparable to torture--if comparability classes are constructed correctly, then this sort of lexicographic ordering on utilities necessarily follows.
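Python compares tuples lexicographically, which is exactly the ordering described above, so the two-class example can be checked directly. A minimal sketch, assuming utilities are stored as `(torture, dust speck)` tuples with the most severe component first. Because 3^^^3 is far too large to compute, it uses $10^{100}$ as a stand-in; this cannot matter, since the second component is never consulted when the first components differ.

```python
# Utility vectors, most severe component first:
# (units of 50-years-of-torture, units of one dust speck).
# 3^^^3 cannot be represented, so 10**100 is a stand-in.
HUGE = 10**100

world_a = (-1, -100)  # 1 person tortured, 100 dust specks
world_b = (0, -HUGE)  # no torture, "3^^^3" dust specks


def prefer(u, v):
    """Return True if utility vector u is strictly preferable to v.

    Python compares tuples lexicographically: the first differing
    component decides, and later components are never consulted.
    """
    return u > v


print(prefer(world_b, world_a))  # True
```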

This is what we refer to as a pseudograded vector space: writing $I$ for the set of comparability classes, the utility space consists of $|I|$-dimensional vectors, which are $I$-pseudograded by severity, meaning that for any non-zero vector we can identify its severity as the comparability class of its first non-zero component. Equivalently, utility values are functions from $I$ to $\mathbb{R}$, and thus utility functions have type $W \to \mathbb{R}^I$, where $W$ is the world state space (this interpretation becomes important if we would like to consider the possibility of infinitely many comparability classes).

Formally, the utility vector space admits a grading function $g$, defined on non-zero vectors, which gives the index of the first (most severe) non-zero coordinate of the input vector. In our example, writing $u_A = (-1, -100)$ and $u_B = (0, -3\uparrow\uparrow\uparrow 3)$, $g(u_A)$ is the torture class and $g(u_B)$ is the dust speck class. In general, if we're comparing two world states with different severity gradings, we need only check which world has greater severity, and then consult the sign of $u(g(u))$ for that world's utility $u$ (that's a function evaluation, not a product!) to determine whether it's preferable to the alternative (the sign will be non-zero by definition of $g$). More generally, we compare worlds with utilities $u_1 \neq u_2$ by checking the sign of $(u_1 - u_2)(g(u_1 - u_2))$. Note that two utility vectors satisfy $u_1 > u_2$ exactly when $u_1 - u_2 > 0$, so the lexicographic ordering is determined fully by which utilities are preferable to 0, the utility of an empty world. We will refer to such utility vectors as positive, keeping in mind that this only means that the most severe non-zero coefficient is positive and not that the whole vector is.
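A sketch of the grading function and the sign test on the difference vector, assuming finitely many classes stored as a list with the most severe class at index 0 (so here the torture class is index 0 and the dust speck class is index 1). The names `grade` and `compare` are hypothetical:

```python
from typing import Sequence


def grade(u: Sequence[float]) -> int:
    """Index of the first (most severe) non-zero coordinate of u.

    Coordinates are listed most severe first, so index 0 is the most
    severe class. The zero vector has no grade.
    """
    for i, x in enumerate(u):
        if x != 0:
            return i
    raise ValueError("the zero vector has no grade")


def compare(u1: Sequence[float], u2: Sequence[float]) -> int:
    """Return +1 if u1 is preferable, -1 if u2 is, and 0 if they are equal.

    Takes the difference d = u1 - u2 and reads off the sign of d at its
    own grade, i.e. the sign of d's most severe non-zero coefficient.
    """
    if len(u1) != len(u2):
        raise ValueError("utility vectors must have the same length")
    d = [a - b for a, b in zip(u1, u2)]
    if not any(d):
        return 0
    return 1 if d[grade(d)] > 0 else -1


u_A = [-1, -100]
u_B = [0, -10**100]  # stand-in for 3^^^3 dust specks
print(grade(u_A), grade(u_B), compare(u_B, u_A))  # 0 1 1
```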

Expected utility computations work exactly as in Archimedean utility functions: averages are performed component-wise. Thus, existing work with utilities and decision theory should import easily to this generalization.
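Since expectations are taken component-wise, an expected utility vector can be computed and then compared lexicographically like any other utility vector. A sketch with hypothetical lotteries over `(torture, dust speck)` vectors, using exact fractions for probabilities. In this example a 1-in-1000 chance of torture ranks below a certain million dust specks, because its expectation has a non-zero (negative) torture component:

```python
from fractions import Fraction


def expected_utility(lottery):
    """Component-wise expectation of utility vectors.

    `lottery` is a list of (probability, utility_vector) pairs. The
    probabilities must sum to 1 and all vectors must share one length,
    with the most severe component first.
    """
    if sum(p for p, _ in lottery) != 1:
        raise ValueError("probabilities must sum to 1")
    dim = len(lottery[0][1])
    return tuple(sum(p * u[i] for p, u in lottery) for i in range(dim))


# Hypothetical lotteries over (torture, dust speck) utility vectors.
gamble = [(Fraction(1, 1000), (-1, 0)), (Fraction(999, 1000), (0, 0))]
sure_thing = [(1, (0, -10**6))]

eu_gamble = expected_utility(gamble)    # (-1/1000, 0)
eu_sure = expected_utility(sure_thing)  # (0, -1000000)
# Tuples compare lexicographically, so the sure thing is preferred.
print(eu_sure > eu_gamble)  # True
```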

Observe that $g$ satisfies ultrametric-like conditions (recalling those found in $p$-adic norms):

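- For any non-zero scalar $c$ and non-zero utility vector $u$, $g(cu) = g(u)$.

- For any pair of non-zero utility vectors $u, v$ with $u + v \neq 0$, we have $g(u+v) \preceq \max(g(u), g(v))$, with equality if $g(u) \neq g(v)$ (where $\preceq$ and $\max$ are taken with respect to severity).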
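Both conditions (scaling by a non-zero constant leaves the grade unchanged; the grade of a sum is no more severe than the more severe of the two summands' grades, with equality when those grades differ) can be spot-checked numerically. A randomized sketch, assuming finite-dimensional integer vectors with index 0 as the most severe class, so "more severe" means "smaller index"; `check_ultrametric` is a hypothetical helper:

```python
import random


def grade(u):
    """Index of the first (most severe) non-zero coordinate, or None for 0."""
    return next((i for i, x in enumerate(u) if x != 0), None)


def check_ultrametric(trials=10_000, dim=4, seed=0):
    """Randomly test both ultrametric-like conditions on integer vectors."""
    rng = random.Random(seed)
    for _ in range(trials):
        # Small integer entries make exact cancellations (u + v having a
        # less severe grade than either summand) reasonably common.
        u = [rng.randint(-2, 2) for _ in range(dim)]
        v = [rng.randint(-2, 2) for _ in range(dim)]
        c = rng.choice([-3, -1, 2, 5])  # arbitrary non-zero scalars
        gu, gv = grade(u), grade(v)
        if gu is None or gv is None:
            continue  # the zero vector has no grade
        # Condition 1: scaling by a non-zero constant keeps the grade.
        assert grade([c * x for x in u]) == gu
        # Condition 2: smaller index = more severe, so the "maximum"
        # severity of g(u), g(v) is the minimum index.
        gs = grade([a + b for a, b in zip(u, v)])
        most_severe = min(gu, gv)
        if gs is not None:  # u + v = 0 has no grade
            assert gs >= most_severe
        if gu != gv:
            assert gs == most_severe
    return True


print(check_ultrametric())  # True
```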

One may reasonably be concerned about the well-definedness of $g$ if they believe there are infinitely many comparability classes: isn't it possible that $I$ is not well-ordered under severity, meaning that some vectors may not have a first non-zero element? [Technically we are considering the well-orderedness of the reverse ordering on $I$, since we want to find the most severe non-zero utility and not the least severe.]

Consider for instance a smooth function on the positive reals whose output oscillates in sign infinitely many times as its input approaches 0: for all its smoothness, it has no least input with a non-zero output, and, what's worse, because of the oscillation we aren't prepared to weigh a world state with such a utility against an empty world with utility 0. Luckily, our setting is actually a special case of the Hahn Embedding Theorem for ordered abelian groups, which guarantees that any utility vector can only have non-zero entries on a well-ordered set of indices (thanks to Sam Eisenstat for pointing this out to me), and thus $g$ is necessarily well-defined. The resulting utility space is the space of all functions $I \to \mathbb{R}$ which each only have non-zero values on a well-ordered subset of $I$, and thus in particular all have a first non-zero value.
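With infinitely many severity classes, one simple way to represent utilities in code is to store only finitely many non-zero coefficients. A finite support is always well-ordered, so this is a special case of the well-ordered supports above (it cannot represent utilities with infinitely many non-zero entries). In the hypothetical sketch below, classes are indexed by rational numbers, a dense order with no "next" class, and a larger index means a more severe class:

```python
from fractions import Fraction

# Hypothetical sparse encoding: {severity index: coefficient}, where the
# indices are rationals and a larger index is a more severe class.
# Any finite set of indices has a most severe element, so the grade
# below is always defined for non-zero vectors.


def grade(u: dict) -> Fraction:
    """Most severe index carrying a non-zero coefficient."""
    support = [k for k, x in u.items() if x != 0]
    if not support:
        raise ValueError("the zero vector has no grade")
    return max(support)


def subtract(u: dict, v: dict) -> dict:
    keys = set(u) | set(v)
    return {k: u.get(k, 0) - v.get(k, 0) for k in keys}


def is_preferable(u: dict, v: dict) -> bool:
    """True if u > v in the lexicographic (severity) ordering."""
    d = subtract(u, v)
    if all(x == 0 for x in d.values()):
        return False
    return d[grade(d)] > 0


u = {Fraction(1, 2): 1, Fraction(1, 3): -1000}
v = {Fraction(1, 2): 1, Fraction(2, 5): 1}
# The classes 1/2 agree; 2/5 is more severe than 1/3, and v wins there.
print(is_preferable(v, u), is_preferable(u, v))  # True False
```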

Basis Independence

Working with vector spaces, we often think of the vectors as lists of (or functions from the index set to) numbers, but this is a convenient lie--in doing so, we are fixing a basis with which the vector space was not intrinsically equipped. Abstractly, a vector space is coordinate-free, and we merely pick bases for ease of visualization and computation. However, it is important for our purposes here that the lexicographic ordering on utilities is "basis-independent", for a suitable notion of basis (otherwise, our world state preferences would depend on arbitrary choices made in representing the utility space). Note that classical graded vector spaces have distinguished homogeneous elements of grading $i$ (e.g. polynomials which only have degree-$i$ monomials, rather than mixed polynomials with max degree $i$, in the graded vector space of polynomials), but this is too much structure and would be unnatural to apply to our setting, hence pseudograded vector spaces.

The standard definition of basis (a linearly independent set of vectors which spans the space) isn't sufficient here, since we have extra structure we'd like to encode in our bases--namely, the severity grading. Instead, we'll define a graded basis to be a choice of one positive utility vector for each grading (i.e., a map $s$ from $I$ to the utility space such that $g(s(i)) = i$ for every $i \in I$--in categorical terms, $s$ is a section of the grading function $g$).

Then suppose Alice and Bob agree that there are two severity levels, and even agree on which world states fall into each, but have picked different graded bases. Letting X=50 years of torture, and Y=1 dust speck in someone's eye, perhaps Alice's basis has and , but for some reason Bob thinks the sensible choice of representatives is and --he agrees with Alice that , but factors the world state differently (while Bob may seem slightly ridiculous here, there are certainly world states with multiple natural factorizations).

Then Alice's utility for world A in her basis is and her , while Bob writes (since ) and . Note that they will both prefer world B, since the change in graded basis did not affect the lexicographic ordering on utilities. Specifically, the transformation from Alice's coordinates to Bob's is induced by left multiplication by the matrix

We observe two key facts about this change-of-basis matrix:

- it is lower triangular (all entries above the main diagonal are 0), and

- it has positive diagonal entries.

These will be true of any transformation between graded bases of the same utility space: lower triangularity follows from the ultrametric property of the grading $g$, and positive diagonals follow from our insistence that the graded basis vectors all have positive utilities. In general (when $I$ is infinite), it won't make sense to view the transformation as a matrix, but we will have a linear transformation operator with the same properties.

Recalling that the ordering on utilities is determined by the positive cone of utilities (since $u_1 > u_2$ iff $u_1 - u_2 > 0$), we simply observe that a lower triangular linear transformation $T$ with positive diagonal entries preserves the positive cone: if the first non-zero entry of $u$ is $u_i > 0$, then $Tu$ will also have 0 for all entries before index $i$ (since lower triangularity means changes only propagate downstream), and its $i$-th entry will be $T_{ii} u_i$, the positive diagonal element times $u_i$, which is again positive. Thus, our ordering is independent of graded basis and depends only upon the severity classes and comparisons within them.
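The positive-cone argument can be spot-checked numerically: apply random lower triangular matrices with positive diagonal entries to random integer vectors and confirm that lexicographic positivity never changes. A sketch assuming finitely many classes with index 0 most severe; the matrices are randomly generated illustrations (via the hypothetical helper `random_graded_change_of_basis`), not Alice's or Bob's actual change of basis:

```python
import random


def is_positive(u):
    """Lexicographically positive: the first non-zero entry is > 0."""
    for x in u:
        if x != 0:
            return x > 0
    return False  # the zero vector is not positive


def mat_vec(T, u):
    """Matrix-vector product T u (T given as a list of rows)."""
    return [sum(t * x for t, x in zip(row, u)) for row in T]


def random_graded_change_of_basis(n, rng):
    """Lower triangular n x n matrix with positive diagonal entries.

    Index 0 is the most severe class, so entries above the diagonal
    (column j > row i) are zero: each coordinate only picks up
    contributions from coordinates at least as severe as itself.
    """
    T = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i):
            T[i][j] = rng.randint(-9, 9)
        T[i][i] = rng.randint(1, 5)
    return T


def check_cone_preserved(trials=5_000, n=4, seed=1):
    rng = random.Random(seed)
    for _ in range(trials):
        T = random_graded_change_of_basis(n, rng)
        u = [rng.randint(-3, 3) for _ in range(n)]
        assert is_positive(mat_vec(T, u)) == is_positive(u)
    return True


print(check_cone_preserved())  # True
# Without lower triangularity the ordering can flip:
print(mat_vec([[1, 1], [0, 1]], [1, -5]))  # [-4, -5]
```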

What's the point?

I think many LW folk (and almost all non-philosophers) are firm dusters and have felt dismayed at their inability to justify this within Archimedean utility theory, or have thrown up their hands and given up linearity believing it to be the only option. While we will never encounter a world with 3^^^3 people in it to be dusted, it's important to understand the subtleties of how our utility functions actually work, especially if we would like to use them to align AI systems that will have vastly more ability than us to consider many small utilities and aggregate them in ways that we don't necessarily believe they should.

Moreover, the framework is very practical in terms of computational efficiency: choosing the best course of action or comparing world states requires only strong information on the most severe utility levels at stake, rather than losing one's mind (or overclocking one's CPU) trying to consider all the tiny utilities which might add up. Indeed, this is how most people operate in daily life; while some may counter that this is a failure of rationality, perhaps it is in fact because people understand that there are some tiny impacts which can never accumulate to more importance than the big stuff, and it can't all be chalked up to scope neglect.
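The efficiency claim can be illustrated with a small decision procedure that consults milder severity levels only to break exact ties at more severe ones. This is a sketch under that assumption (each severity level can be scored per option on its own); the names `choose`, `torture_level` and `speck_level` are hypothetical:

```python
from typing import Callable, Sequence


def choose(options: Sequence[str],
           levels: Sequence[Callable[[str], float]]) -> str:
    """Pick the best option under a lexicographic (severity) ordering.

    `levels` holds one scoring function per severity class, most severe
    first. A level is only evaluated for the options still tied after all
    more severe levels, so milder levels are skipped entirely once a
    single option remains. Remaining ties go to the earliest option.
    """
    if not options:
        raise ValueError("need at least one option")
    candidates = list(options)
    for score in levels:
        if len(candidates) == 1:
            break
        scores = {c: score(c) for c in candidates}
        best = max(scores.values())
        candidates = [c for c in candidates if scores[c] == best]
    return candidates[0]


# Hypothetical worlds from the example: A has torture, B only specks.
speck_evaluations = []


def torture_level(world):
    return {"A": -1, "B": 0}[world]


def speck_level(world):
    speck_evaluations.append(world)  # record that this level was consulted
    return {"A": -100, "B": -10**100}[world]


print(choose(["A", "B"], [torture_level, speck_level]))  # B
print(speck_evaluations)  # []
```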

Finally, completely aside from all ethical questions, I think there's some interesting math going on and I wanted people to look at it. Thanks for looking at it!

This is my first blog post and I look forward to feedback and discussion (I'm sure there are many issues here, and I hope that I will not be alone in trying to solve them). Thanks to everyone at MSFP with whom I talked about this--even if you don't think you gave me any ideas, being organic rubber ducks was very productive for my ability to formulate these thoughts in semi-coherent ways.

29 comments


comment by Hoagy · 2019-08-23T17:43:05.276Z

Apologies if this is not the discussion you wanted, but it's hard to engage with comparability classes without a framework for how their boundaries are even minimally plausible.

Would you say that all types of discomfort are comparable with higher quantities of themselves? Is there always a marginally worse type of discomfort for any given negative experience? So long as both of these are true (and I struggle to deny them) then transitivity seems to connect the entire spectrum of negative experience. Do you think there is a way to remove the transitivity of comparability and still have a coherent system? This, to me, would be the core requirement for making dust specks and torture incomparable.


↑ comment by Louis_Brown · 2019-08-23T18:09:26.023Z

I agree that delineating the precise boundaries of comparability classes is a uniquely challenging task. Nonetheless, it does not mean they don't exist--to me your claim feels along the same lines as classical induction "paradoxes" involving classifying sand heaps. While it's difficult to define exactly what a sand heap is, we can look at many objects and say with certainty whether or not they are sand heaps, and that's what matters for living in the world and making empirical claims (or building sandcastles anyway).

I suspect it's quite likely that experiences you may be referring to as "higher quantities of themselves" within a single person are in fact qualitatively different and no longer comparable utilities in many cases. Consider the dust specks: they are assumed to be minimally annoying and almost undetectable to the bespeckèd. However, if we even slightly upgrade them so as to cause a noticeable sting in their targeted eye, they appear to reach a whole different level. I'd rather spend my life plagued by barely noticeable specks (assuming they have no interactions) than have one slightly burn my eyeball.

↑ comment by Hjalmar_Wijk · 2019-08-23T18:44:31.094Z

Theron Pummer has written about this precise thing in his paper on Spectrum Arguments, where he touches on this argument for "transitivity=>comparability" (here notably used as an argument against transitivity rather than an argument for comparability) and its relation to 'Sorites arguments' such as the one about sand heaps.

Personally I think the spectrum arguments are fairly convincing for making me believe in comparability, but I think there's a wide range of possible positions here and it's not entirely obvious which are actually inconsistent. Pummer even seemed to think rejecting transitivity and comparability could be a plausible position and that the math could work out in nice ways still.

↑ comment by Slider · 2019-08-25T14:48:57.256Z

Surreals have multiples and are ordered, yet they contain multiple different Archimedean fields. You can have, for all r in the reals and all s in the surreals, that r*s exists and that there is another surreal that is greater than all of the r*s. Arbitrarily large finite is a different thing than an infinitely large value. You can't "inch" your way to infinity. If you have a single bad experience and "inch" around it, you will only reach one Archimedean field, but how do you know that you have covered the whole space of bad experience?

comment by Dacyn · 2019-08-23T21:06:14.249Z

Once you introduce any meaningful uncertainty into a non-Archimedean utility framework, it collapses into an Archimedean one. This is because even a very small difference in the probabilities of some highly positive or negative outcome outweighs a certainty of a lesser outcome that is not Archimedean-comparable. And if the probabilities are exactly aligned, it is more worth your time to do more research so that they will be less aligned, than to act on the basis of a hierarchically less important outcome.

For example, if we cared infinitely more about not dying in a car crash than about reaching our destination, we would never drive, because there is a small but positive probability of crashing (and the same goes for any degree of horribleness you want to add to the crash, up to and including torture -- it seems reasonable to suppose that leaving your house at all very slightly increases your probability of being tortured for 50 years).

For the record, EY's position (and mine) is that torture is obviously preferable. It's true that there will be a boundary of uncertainty regardless of which answer you give, but the two types of boundaries differ radically in how plausible they are:

- if SPECKS is preferable to TORTURE, then for some N and some level of torture X, you must prefer 10N people to be tortured at level X than N to be tortured at a slightly higher level X'. This is unreasonable, since X' is only slightly higher than X, while you are forcing 10 times as many people to suffer the torture.

- On the other hand, if TORTURE is preferable to SPECKS, then there must exist some number of specks N such that N-1 specks is preferable to torture, but torture is preferable to N+1 specks. But this is not very counterintuitive, since the fact that torture costs around N specks means that N-1 specks is not much better than torture, and torture is not much better than N+1 specks. So knowing exactly where the boundary is isn't necessary to get approximately correct answers.

↑ comment by Slider · 2019-08-25T15:51:51.660Z

Well, small positive probabilities need not be finite if we have a non-Archimedean utility framework.

An infinitesimal times an infinite number might yield a finite number that would be on equal footing with familiar expected values that would trade sensibly.

And it might help that the infinitesimals might compare mostly against each other. You compare the danger of driving against the dangers of being in a kitchen. If you find that driving is twice as dangerous, it means driving is worth it only if it gets something done in half the time it would take in the kitchen, rather than that you should categorically always do things in a kitchen.

I guess the relevance of waste might be important. If you could choose 0 chance of death you would take that. But given that you are unable to choose that you choose among the death optimums. Sometimes further research is not possible.

↑ comment by Andrew Jacob Sauer (andrew-jacob-sauer) · 2019-08-24T04:48:15.823Z

Regarding your comments on SPECKS preferable to TORTURE, I think that misses the argument they made. The reason you have to prefer 10N at X to N at X' at some point, is that a speck counts as a level of torture. That's exactly what OP was arguing against.

↑ comment by Dacyn · 2019-08-24T16:00:17.893Z

The OP didn't give any argument for SPECKS>TORTURE, they said it was "not the point of the post". I agree my argument is phrased loosely, and that it's reasonable to say that a speck isn't a form of torture. So replace "torture" with "pain or annoyance of some kind". It's not the case that people will prefer arbitrary non-torture pain (e.g. getting in a car crash every day for 50 years) to a small amount of torture (e.g. 10 seconds), so the argument still holds.

↑ comment by Louis_Brown · 2019-08-26T04:59:38.184Z

> Once you introduce any meaningful uncertainty into a non-Archimedean utility framework, it collapses into an Archimedean one. This is because even a very small difference in the probabilities of some highly positive or negative outcome outweighs a certainty of a lesser outcome that is not Archimedean-comparable. And if the probabilities are exactly aligned, it is more worth your time to do more research so that they will be less aligned, than to act on the basis of a hierarchically less important outcome.

I don't think this is true. As an example, when I wake up in the morning I make the decision between granola and cereal for breakfast. Universe Destruction is undoubtedly high up on the severity scale (certainly higher than crunch satisfaction utility), so your argument suggests that I should spend time researching which choice is more likely to impact that. However, the difference in expected impact in these options is so averse to detection that, despite the fact that I literally act on this choice every single day of my life, it would never be worth the time to research breakfast foods instead of other choices which have stronger (i.e. measurable by the human mind) impacts on Universe Destruction.

This is not a bug, but an incredible feature of the non-Archimedean framework. It allows you to evaluate choices only on the highest severity level at which they actually occur, which is in fact how humans seem to make their decisions already, to some approximation.

As for the car example, your analysis seems sound (assuming there's no positive expected utility at or above the severity level of car crash injuries to counterbalance it, which is not necessarily the case--e.g. driving somewhere increases the chance that you meet more people and consequently find the love(s) of your life, which may well be worth a broken limb or two. Alternatively, if you are driving to a workshop on AI risk then you may believe yourself to be reducing the expected disutility from unaligned AI, which appears to be incomparable with a car crash). But, forgiving my digression and argument of the hypothetical: the claim that not driving is (often) preferable to driving feels much more reasonable to me than the claim that some number of dust specks is worse than torture.

> if SPECKS is preferable to TORTURE, then for some N and some level of torture X, you must prefer 10N people to be tortured at level X than N to be tortured at a slightly higher level X'. This is unreasonable, since X is only slightly higher than X', while you are forcing 10 times as many people to suffer the torture.

I'm not sure I understand this properly. To clarify, I don't believe that any non-torture suffering is incomparable with torture, merely that dust specks are. I think "slightly higher level" is potentially misleading here--if it's in a different severity class, then by definition there is nothing slight about it. Depending on the order type, there may not even be a level immediately above torture X, and it may be that there are infinitely many severity classes sitting between X and any distinct X' (think: a dense order such as $\mathbb{Q}$ or $\mathbb{R}$).

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2019-08-29T15:16:05.990Z

If you're choosing between granola and cereal, then it might be that the granola manufacturer is owned by a multinational conglomerate that also has vested interests in the oil industry, and therefore, buying granola contributes to climate change that has consequences of much larger severity than your usual considerations for granola vs. cereal. Of course there might also be arguments in favor of cereal. But, if you take your non-Archimedean preferences seriously, it is absolutely worth the time to write down all of the arguments in favor of both options, and then choose the side which has the advantage in the largest severity class, however microscopic that advantage is.

↑ comment by Louis_Brown · 2019-08-29T16:10:32.406Z

I agree that small decisions have high-severity impacts; my point was that it is only worth the time to evaluate that impact if there aren't other decisions I could spend that time making which have much greater impact and for which my decision time will be more effectively allocated. This is a comment about how using the non-Archimedean framework goes in practice. Certainly, if we had infinite compute and time to make every decision, we should focus on the most severe impacts of those decisions, but that is not the world we are in (and if we were, it would change quite a lot of other things too, effectively eliminating empirical uncertainty and the need to take expectations at all).

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2019-08-29T16:39:03.355Z

Alright, so if you already know any fact which connects cereal/granola to high severity consequences (however slim the connection is) then you should choose based on those facts only.

My intuition is, you will never have a coherent theory of agency with non-Archimedean utility functions. I will change my mind when I see something that looks like the beginning of such a theory (e.g. some reasonable analysis of reinforcement learning for non-Archimedean utility).

If we had infinite compute, that would not eliminate empirical uncertainty. There are many things you cannot compute because you just don't have enough information. This is why in learning theory sample complexity is distinct from computational complexity, and applies to algorithms with unlimited computational resources. So, you would definitely still need to take expectations.

↑ comment by Louis_Brown · 2019-09-01T23:44:15.926Z

Why does this pose an issue for reinforcement learning? Forgive my ignorance, I do not have a background in the subject. Though I don't believe that I have information which distinguishes cereal/granola in terms of which has stronger highest-severity consequences (given the smallness of those numbers and my inability to conceive of them, I strongly suspect anything I could come up with would exclusively represent epistemic and not aleatoric uncertainty), even if I accept it then the theory would tell me, correctly, that I should act based on that level. If that seems wrong, then it's evidence we've incorrectly identified an implicit severity class in our imagination of the hypothetical, not that severity classes are incoherent (i.e. if I really have reason to believe that eating cereal even slightly increases the chance of Universe Destruction compared to eating granola, shouldn't that make my decision for me?)

I would argue that many actions are sufficiently isolated such that, while they'll certainly have high-severity ripple effects, we have no reason to believe that on expectation the high-severity consequences are worse than they would have been for a different action.

If the non-Archimedean framework really does "collapse" to an Archimedean one in practice, that's fine with me. It exists to respond to a theoretical question about qualitatively different forms of utility, without biting a terribly strange bullet. Collapsing the utility function would mean assigning weight 0 to all but the maximal severity level, which seems very bad in that we certainly prefer no dust specks in our eyes to dust specks (ceteris paribus), and this should be accurately reflected in our evaluation of world states, even if the ramped function does lead to the same action preferences in many/most real-life scenarios for a sufficiently discerning agent (which maybe AI will be, but I know I am not).

> If we had infinite compute, that would not eliminate empirical uncertainty. There are many things you cannot compute because you just don't have enough information. This is why in learning theory sample complexity is distinct from computational complexity, and applies to algorithms with unlimited computational resources. So, you would definitely still need to take expectations.

Thanks for letting me know about this! Another thing I haven't studied.

↑ comment by Slider · 2019-08-29T18:30:33.801Z

Reinforcement learning with rewards or punishments that can have an infinite magnitude would seem to make intuitive sense to me. The buck is then kicked to reasoning whether it's ever reasonable to give a sample a post-finite reward. Say that there are pictures labeled as either "woman", "girl", "boy" or "man", and labeling a boy a man or a man a boy would get you a Small reward, while labeling a man a man would get you a Large reward, where Large is infinite with respect to Small. With a finite version, some "boy" vs "girl" weight could overcome a "man" vs "girl" weight, which might be undesirable behaviour (if you strictly care about gender discrimination with no tradeoff for age discrimination).

comment by Vanessa Kosoy (vanessa-kosoy) · 2019-08-24T13:20:22.409Z

Two comments:

- The thing you called "pseudograding" is normally called "filtration".

- In practice, because of the complexity of the world, and especially because of the presence of probabilistic uncertainty, an agent following a non-Archimedean utility function will always consider only the component corresponding to the absolute maximum of $I$, since there will never be a choice between A and B such that these components just happen to be exactly equal. So it will be equivalent to an Archimedean agent whose utility is this worst component. (You can have an $I$ without an absolute maximum but I don't think it's possible to define any reasonable utility function like that, where by "reasonable" I roughly mean that it's possible to build some good theory of reinforcement learning out of it.)

↑ comment by Louis_Brown · 2019-08-26T05:56:34.277Z

The thing you called "pseudograding" is normally called "filtration".

Ah, thanks! I knew there had to be something for that, just couldn't remember what it was. I was embarrassed posting with a made-up word, but I really did look (and ask around) and couldn't find what I needed.

...Although, reading the definition, I'm not sure it's exactly the same...the severity classes aren't nested, and I think this is probably an important distinction to the conceptual framing, even if the math is equivalent. If I start with a filtration proper, I need to extract the severity classes in a way that seems slightly more convoluted than what I did.

In practice, because of the complexity of the world, and especially because of the presence of probabilistic uncertainty, an agent following a non-Archimedean utility function will always consider only the component corresponding to the absolute maximum of I, since there will never be a choice between A and B such that these components just happen to be exactly equal. So it will be equivalent to an Archimedean agent whose utility is this worst component.

See my response to Dacyn.

↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2019-08-29T15:09:50.245Z

If I understand what you do correctly, the severity classes are just the set differences $F_i \setminus \bigcup_{j<i} F_j$, where $\{F_i\}_{i \in I}$ is the filtration. I think that you also assume that the quotient $F_i / \bigcup_{j<i} F_j$ is one-dimensional and equipped with a choice of "positive" direction.

↑ comment by Louis_Brown · 2019-08-29T16:04:22.553Z

Yes! This is all true. I thought set differences of infinite unions and quotients would only make the post less accessible for non-mathematicians though. I also don't see a natural way to define the filtration without already having defined the severity classes.

comment by Donald Hobson (donald-hobson) · 2019-08-23T18:07:07.127Z

Firstly, I will focus on the most wrong part: the claim that non-Archimedean utilities are more efficient. In the real world there aren't 3^^^3 little impacts to add up. If the number of little impacts is a few hundred, and they are a trillion times smaller, then the little impacts make up less than a billionth of your utility. Usually you should be using less than a billionth of your compute to deal with them. For agents without vast amounts of compute, this means forgetting them altogether. This can be understood as an approximation strategy to maximize a normal Archimedean utility.
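The arithmetic in this paragraph can be checked directly. The concrete values (300 little impacts, a ratio of one trillion, one big impact normalized to 1) are illustrative picks for "a few hundred" and "a trillion times smaller", not figures from the comment.

```python
# Back-of-the-envelope check of the "less than a billionth" claim.
n_small = 300      # "a few hundred" little impacts (illustrative value)
ratio = 1e-12      # each is "a trillion times smaller" than the big impact
big = 1.0          # size of the single big impact (normalized)

small_total = n_small * ratio * big
share = small_total / (small_total + big)  # fraction of total utility at stake
print(share)           # about 3e-10
print(share < 1e-9)    # True: less than a billionth
```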

There is also the question of different severity classes. If we can construct a sliding scale between specks and torture then we find the need for a weird cut off point, like a broken arm being in a different severity class than a broken toe.

↑ comment by JakeArgent · 2019-08-23T20:07:40.057Z

Intuitively speaking broken arm and broken toe are comparable. Broken arm is worse, broken toe is still bad. I'd rather get a broken arm than torture for 50 years, or even torture for 1 day.

For sliding scale of severities: there's a very difficult to compute but intuitively satisfying emphasis that can be imposed so the scale can't slide. It's the idea of "bouncing back". If you can't bounce back from an action that imparts negative utility, it forms a distinct class of utilities. Compare broken arm with torn-off toe. Compare both of those to 50 years of torture.

P.S: If you're familiar with Taleb's idea of "antifragility", that's the notion I'm basing these on.

↑ comment by Donald Hobson (donald-hobson) · 2019-08-24T04:29:00.694Z

The idea is that we can take a finite list of items like this

Torture for 50 years

Torture for 40 years

...

Torture for 1 day

...

Broken arm

Broken toe

...

Papercut

Sneeze

Dust Speck

Presented with such a list, you must insist that two items on this list are incomparable. In fact you must claim that some item is incomparably worse than the next item. I don't think that any number of broken toes is better than a broken arm. A million broken toes is clearly worse. Follow this chain of reasoning for each pair of items on the list. Claiming incomparability is a claim that no matter how much I try to subdivide my list, one item will still be infinitely worse than the next.
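The structure of this chain argument can be sketched in Python. As a hypothetical model, give each adjacent pair on the list an exchange rate (how many of the milder item it takes to outweigh one of the worse item), with `math.inf` marking a pair that is incomparable. The rates below are made up. Because a product of finitely many finite rates is finite, the two ends of the list can only be incomparable if some single adjacent step is, which is the claim the comment is pressing on.

```python
import math

def endpoint_rate(adjacent_rates):
    """Exchange rate between the first and last items of a chain, obtained
    by multiplying the (positive) rates of the adjacent steps.
    math.inf marks a step with no finite exchange rate (incomparable)."""
    rate = 1
    for r in adjacent_rates:
        rate *= r
    return rate

def first_incomparable_step(adjacent_rates):
    """Index of the first adjacent step with no finite exchange rate, or None."""
    for i, r in enumerate(adjacent_rates):
        if math.isinf(r):
            return i
    return None

# Hypothetical rates: every step finite, so the endpoints are comparable.
finite_chain = [10, 1000, 50, 2]
print(endpoint_rate(finite_chain))              # 1000000
print(first_incomparable_step(finite_chain))    # None

# One infinite step is the only way to make the endpoints incomparable.
broken_chain = [10, math.inf, 50, 2]
print(endpoint_rate(broken_chain))              # inf
print(first_incomparable_step(broken_chain))    # 1
```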

The idea of bouncing back is also not useful. Firstly, it isn't a sharp boundary: you can mostly recover but still be somewhat scarred. Secondly, you can replace an injury with something that takes twice as long to bounce back from, and they still seem comparable. Something that takes most of a lifetime to bounce back from is comparable to something that you don't bounce back from. This breaks if you assume immortality, or that bouncing back 5 seconds before you drop dead is of morally overwhelming significance, such that doing so is incomparable to not doing so.

↑ comment by JakeArgent · 2019-08-25T13:50:22.717Z

Broken arms vs toes: I agree that any number of broken toes wouldn't be better than a broken arm. But that's the point, these are _comparable_.

Incomparable breaks occur where you put the ellipses in your list. Torture for 40-50 years vs torture for 1 day is qualitatively distinct. I imagine a human being can bounce back from torture for 1 day, carry scars but still manage to prosper. That would be hellishly more difficult with torture for 40 years. We could count torture by day, from day 1 to day 365*40, and there would be a point of no return somewhere in there: a duration of torture from which a person can't bounce back. It would depend on the person, what happens during and after, etc., which is why it's not possible to compute that day. That doesn't mean we should ignore how humans work.

Here's the main beef I have with Dust Specks vs Torture: statements like "1 million broken toes" or "3^^^3 dust specks" disregard human experience. That many dust specks on one person is torture. One on each is _practically nothing_. I'm simulating people experiencing these, and the result I arrive at is this: choose the best outcome from (0 utils * 3^^^3) vs (-3^^^3 utils). This is easy to answer.

You may say "but 1 dust speck on a person isn't 0 utils, it's a very small negative utility" and yes, technically you're correct. But before doing the sum over people, take a look at the people. *Distribution matters.*

Humans don't work like linear sensory devices. Utility can't work linearly either.
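One way to see why distribution can matter is to make per-person disutility nonlinear. The sketch below is only an illustration of that idea, not JakeArgent's own model: it assumes a hypothetical `perception_threshold` at or below which specks count as 0 utils, and quadratic growth above it; both choices are arbitrary. With linear per-person disutility the two distributions would give the same total; here they do not.

```python
def person_disutility(specks, perception_threshold=1):
    """Hypothetical per-person disutility of receiving `specks` dust specks:
    zero at or below the threshold, then growing quadratically."""
    if specks <= perception_threshold:
        return 0
    return (specks - perception_threshold) ** 2

def total_disutility(distribution):
    """Sum per-person disutility over a list of speck counts, one entry per person."""
    return sum(person_disutility(s) for s in distribution)

n = 1000
spread_out = [1] * n    # one speck each for n people
concentrated = [n]      # all n specks on one person

print(total_disutility(spread_out))    # 0
print(total_disutility(concentrated))  # 998001
```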

↑ comment by Dagon · 2019-08-25T16:27:58.616Z

I like this insight - not only nonlinear but actually discontinuous. There are some marginal instants of torture that are hugely negative, mixed in with those that are more mildly negative. This is due to something that's often forgotten in these discussions: ongoing impact of a momentary experience.

Being "broken" by torture may make it impossible to ever recover enough for any future experiences to be positive. There may be a few quanta of brokenness, but it's not the case that every marginal second is all that bad, only some of them.

↑ comment by JakeArgent · 2019-08-25T22:28:53.593Z

To me, all the talk about utilities seems broken at a fundamental level. An implication of Timeless Decision Theory should be that an agent running TDT calculates utilities as an integral over time, so final values don't have any explicit time dependence.

This fixes a lot of things, especially when combined with the texture of human experience. Utility should be a function of the states of the world it affects integrated over time. Since we don't get to make that calculation in detail, we can approximate by choosing the kinds of actions that minimize the future bad impacts and maximize good ones.
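A discretized reading of "utility integrated over time" might look like the following. The trajectories are invented for illustration: a harm of the same immediate size either fades linearly over a hypothetical `recovery_steps` window (bouncing back) or persists for the whole horizon. The left Riemann sum stands in for the integral; nothing here is specific to Timeless Decision Theory.

```python
def integrated_utility(utilities, dt=1.0):
    """Approximate the time integral of utility with a left Riemann sum."""
    return sum(u * dt for u in utilities)

def harm_trajectory(initial_harm, recovery_steps, horizon):
    """Utility over time after a harm of size `initial_harm` (negative).
    If recovery_steps is None the person never bounces back;
    otherwise the harm shrinks linearly to zero over recovery_steps."""
    trajectory = []
    for t in range(horizon):
        if recovery_steps is None:
            trajectory.append(initial_harm)
        else:
            remaining = max(0.0, 1.0 - t / recovery_steps)
            trajectory.append(initial_harm * remaining)
    return trajectory

horizon = 100
bounce_back = harm_trajectory(-1.0, recovery_steps=10, horizon=horizon)
no_bounce_back = harm_trajectory(-1.0, recovery_steps=None, horizon=horizon)

print(integrated_utility(bounce_back))     # about -5.5
print(integrated_utility(no_bounce_back))  # -100.0
```

Both events start at the same momentary utility of -1; only their lasting effects differ, and that difference dominates the integral.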

This is the only view of utility that I can think of that preserves the "wisdom of the elders" point of view. It's strange how often they turn out to be right as one ages, in saying "only care for the ones caring for you", "focus on bettering yourself and not wallowing in bad circumstances", etc. These are the kind of actions that incorporate the notion that life is ongoing. A person only realizes these in an experiential way after having dozens of years of memories to reflect on.

Consequentialism (and utilitarianism as well IMO) is broad enough to incorporate both the necessity of universality and the view of virtue ethics if one thinks in the timeless utility perspective.

↑ comment by Donald Hobson (donald-hobson) · 2019-08-26T00:45:13.814Z

What if I make each time period in the "..." one nanosecond shorter than the previous one?

You must believe that there is some length of time, t>most of a day, such that everyone in the world being tortured for t-1 nanosecond is better than one person being tortured for t.

Suppose there was a strong clustering effect in human psychology, such that less than a week of torture left people's minds in one state, and more than a week left them broken. I would still expect the possibility of some intermediate cases on the borderlines. For something as messy as human psychology, I would expect there not to be a perfectly sharp black-and-white cutoff. If we zoom in enough, we find that the space of possible quantum wavefunctions is continuous.

There is a sense in which specks and torture feel incomparable, but I don't think this is your sense of incomparability; to me it feels like moral uncertainty about which huge number of specks to pick. I would also say that "Don't torture anyone" and "don't commit atrocities based on convoluted arguments" are good ethical injunctions. If you think that your own reasoning processes are not very reliable, and you think philosophical thought experiments rarely happen in real life, then implementing the general rule "If I think I should torture someone, go to the nearest psych ward" is a good idea. However, I would want a perfectly rational AI which never made mistakes to choose torture.

↑ comment by Louis_Brown · 2019-08-26T05:24:23.790Z

we find the need for a weird cut off point, like a broken arm

For the cut-off point on a broken arm, I recommend the elbow [not a doctor].

Suppose there was a strong clustering effect in human psychology, such that less than a week of torture left people's minds in one state, and more than a week left them broken. I would still expect the possibility of some intermediate cases on the borderlines. For something as messy as human psychology, I would expect there not to be a perfectly sharp black-and-white cutoff. If we zoom in enough, we find that the space of possible quantum wavefunctions is continuous.

I agree! You've made my point for me: it is precisely this messiness which grants us continuity on average. Some people will take longer than others to suffer qualitatively incomparable damage from torture, and as such the expected impact of any significant torture will have a component on the severity level of 50 years of torture. Hence, comparable (in expectation).

comment by Andrew Jacob Sauer (andrew-jacob-sauer) · 2019-08-24T04:46:21.274Z

Non-Archimedean utility functions seem kind of useless to me. Since no action is going to avoid moving the probability of any outcome by more than 1/3^^^3, absolutely any action is important only insomuch as it impacts the highest lexical level of utility. So you might as well just call that your utility function.

comment by romeostevensit · 2019-08-23T18:00:54.314Z

I've tried intuitive approaches to thinking along these lines, which failed, so it's really nice to see a serious approach. I see this as key anti-Moloch tech and want to use it to think about rivalrous and non-rivalrous goods.