An explanation for every token: using an LLM to sample another LLM

post by Max H (Maxc) · 2023-10-11T00:53:55.249Z · LW · GW · 5 commentsContents

Introduction Background: LLM sampling My approach Details and experimental setup Examples Results Discussion Potential usefulness as a safety mechanism Composition with other safety and control mechanisms Future work Related work Appendix: Full sampler prompt None 5 comments

Introduction

Much has been written about the implications [LW · GW] and potential [LW · GW] safety benefits [LW · GW] of building an AGI based on one or more Large Language Models (LLMs). Proponents argue that because LLMs operate on human-readable natural language, they will be easier to understand, control, and scale safely compared to other possible approaches (e.g. MuZero-style model-based reinforcement learning and planning).

For example, suitably prompted, an LLM can output text which has the structure of an explanation followed by an answer or conclusion. Such methods have been shown to improve the accuracy of the final answer. They also give the consumer of the LLM's output the (apparent) ability to understand the LLM's reasoning process, which could boost trust and confidence relative to a bare answer.

One issue with this approach is that the natural language outputs of LLMs are not necessarily faithful representations [LW · GW] of the underlying mechanisms which generated them. That is, the answer from an LLM may not depend on the preceding "explanation" or purported reasoning process at all.

Unfaithful explanations are actually an example of a more general issue with language models: in a long sequence of tokens, the later tokens in the sequence are often not constrained by earlier tokens as much as we would like. Additionally, the later tokens do not always depend on the earlier tokens in the natural or expected way, and in particular, do not depend on earlier outputs in the way the language model output itself claims.[1]

Some or all of these surface-level issues may be overcome by scaling the model and improvements in the model training process, but their underlying cause may be inherent to the way that all LLMs are currently used: auto-regressive sampling. The sampling process is a key part of LLM-based AI systems, and one that I think is currently somewhat overlooked as a useful place to impose powerful safety and control mechanisms.

In this post, I propose a new method for sampling an LLM by delegating the sampling process to a second LLM, and asking it to explain each choice. The result is that each token in the final output is accompanied by a human-readable out-of-band explanation for why that token was chosen, and thus the final output is more likely to be faithfully and causally related to purported explanations, compared to traditional Chain-of-Thought prompting and other in-band language model outputs. I built a demonstration to visualize my approach on a few examples:

Below, I'll give a brief background on existing methods of sampling LLMs, describe my proposed sampling method and some experimental results, contrast my approach with some related work, and make some remarks on why this general approach might be useful for building LLM-based systems out of models which would be unsafe when used with traditional sampling methods.

Background: LLM sampling

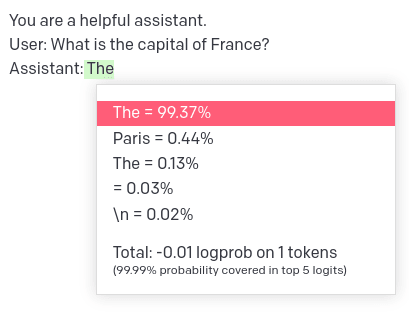

For a given input prompt, an LLM outputs a probability distribution on the next token, over the set of all possible tokens in the token space. These probabilities are typically represented as logits, and for a powerful LLM the vast majority of the probability mass is often concentrated in a small number of top choices:

The probability distribution on the next token is the only output of the giant inscrutable matrices [LW · GW]; it is up to the programmer to decide how to use this probability distribution to produce extended sequences of outputs.[2] The standard approach is to sample from the probability distribution somehow, append the sampled token to the initial prompt, and run the updated prompt back through the model, auto-regressively.

The sampling method chosen can have a big effect on the quality and style of the final output. For example, instead of sampling directly from the probability distribution output by the LLM, you can set a temperature parameter to bias the distribution more or less sharply towards high-likelihood tokens. A special case is setting the temperature to 0, which will result in a sampling procedure that deterministically picks the token with the highest likelihood every time (greedy sampling). Other approaches include beam search or top-p (nucleus sampling). You can also introduce frequency and presence penalties, or implement even more complicated rules to get precise control over the system's final output. For example, to restrict outputs to valid JSON, you could incorporate a JSON parser in the sampler, only selecting tokens yielding valid JSON.

My approach

I propose a new method of sampling an LLM, henceforth referred to as the base LLM,[3] by delegating the sampling and token selection process to a second LLM (henceforth, the sampler LLM). For example, suppose we would like to generate a completion for the following prompt:

You are a helpful assistant.

User: What is the capital of France?

Assistant:We pass this prompt to the base LLM, and get a probability distribution over the next token (in practice, we call the OpenAI API with max_tokens=1 and logprobs=5). We take the top 5 most likely tokens in the distribution and use them to construct a prompt for the sampler LLM:

You are an LLM tasked with choosing a token from possibilities generated by another LLM.

The prompt passed to the other LLM:

"""

You are a helpful assistant.

User: What is the capital of France?

Assistant:

"""

The most likely next tokens from the other LLM:

0. Token: '\n' Probability: 0.016%

Completed prompt:

"""

You are a helpful assistant.

User: What is the capital of France?

Assistant:

"""

1. Token: ' ' Probability: 0.03%

Completed prompt:

"""

You are a helpful assistant.

User: What is the capital of France?

Assistant:

"""

2. Token: 'The' Probability: 0.127%

Completed prompt:

"""

You are a helpful assistant.

User: What is the capital of France?

Assistant:The

"""

3. Token: ' Paris' Probability: 0.443%

Completed prompt:

"""

You are a helpful assistant.

User: What is the capital of France?

Assistant: Paris

"""

4. Token: ' The' Probability: 99.373%

Completed prompt:

"""

You are a helpful assistant.

User: What is the capital of France?

Assistant: The

"""

Analyze the selections of the other LLM and select the index you think is most

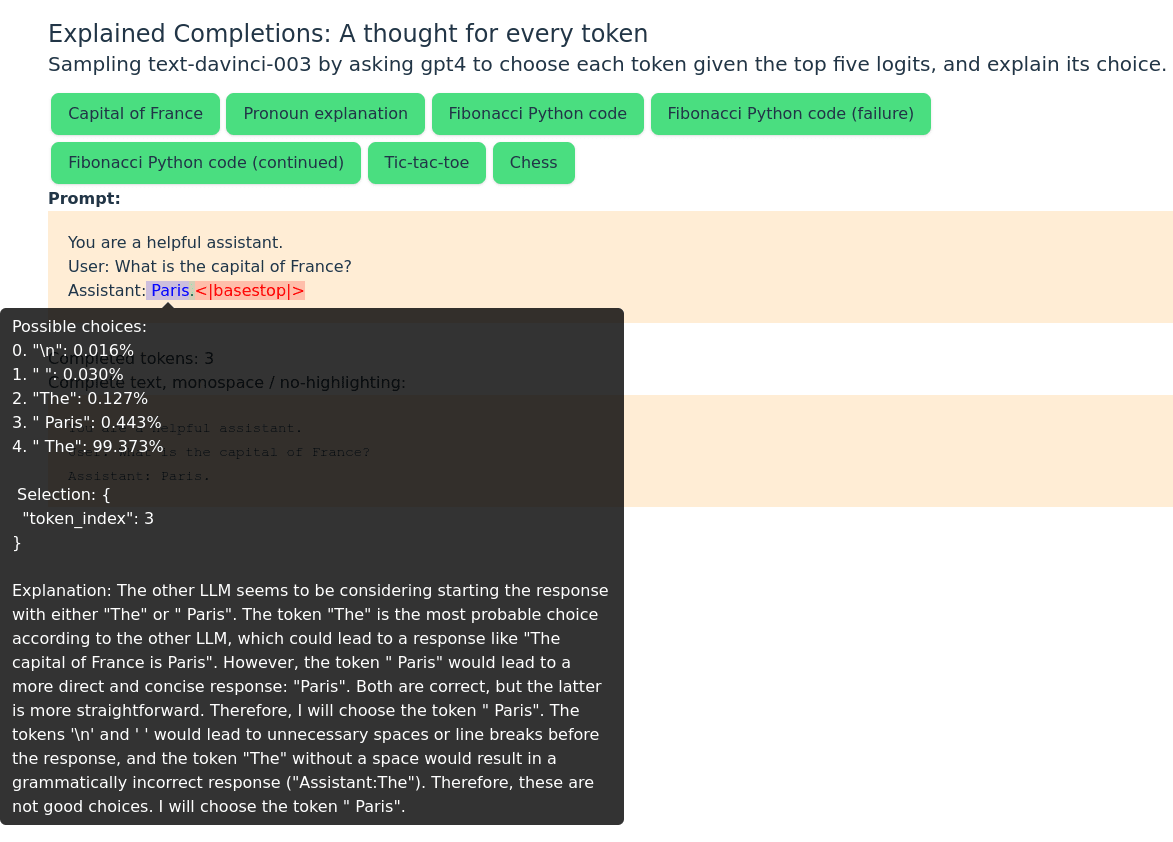

appropriate by calling the choose_token function after explaining your reasoning.We then generate a response from the sampler LLM using ordinary methods (temperature-based sampling), using OpenAI's function-calling feature to simplify the process of parsing the sampler LLM's choice. Continuing the example, given the prompt above, gpt-4 returns the following:

The other LLM seems to be considering starting the response with "The" (option 4) or " Paris" (option 3). The other options are less likely and don't seem to lead to a coherent response.

Option 4, " The", is the most probable according to the other LLM, but it doesn't make sense in this context. The assistant wouldn't start the response with "The" when answering a question about the capital of a country.

Option 3, " Paris", is the correct answer to the question and it makes sense for the assistant to start the response with the name of the capital.

Therefore, I'll choose option 3, " Paris".

function args: {'token_index': 3}The sampler LLM selected choice 3, so we append that to the initial prompt and start the process over, prompting the base LLM with the following prompt to generate a new probability distribution on the next token:

You are a helpful assistant.

User: What is the capital of France?

Assistant: ParisThe process continues until the base LLM outputs <|endoftext|> as one of the likely next tokens, and the sampler LLM chooses it.

The result is that every token in the final output is accompanied by an explanation for why that token was selected.

Details and experimental setup

I implemented the sampling process proposed above using text-davinci-003 for the base LLM and gpt-4 for the sampler LLM. In a more realistic setup, the base LLM would probably be the more powerful one, perhaps a frontier model whose outputs aren't completely trusted when used directly in more diverse setups. Alternatively, one could use the same underlying model for both the base and the sampler, to compare performance characteristics and behavioral properties of my sampling method and classical sampling methods when applied to a single model.

However, as a practical matter, gpt-4 is the only model I have API access to which is capable enough to understand the instructions and perform the selection task reliably, and even gpt-4 isn't very good at it. At the time of writing, text-davinci-003 is the most powerful model for which I have direct access to any of the logits.[4]

Another limitation is that the sampler LLM is only provided the top 5 logits from the base LLM. There is no principled reason for this; 5 is simply the limit on the number of logits available via OpenAI's API.[5]

The final version of my implementation also contains some additional functionality that is not central to the sampling method itself, but makes it more practically useful:

- Early stopping: we can add instructions to the prompt of sampler LLM which allow it to specify a "stop" token of its own, to be chosen if it looks to the sampler like the completion so far has gone completely off-track. By choosing the stop token instead of one of the choices from the base LLM, it can choose to abort the sampling process and halt the completion early, before the base LLM selects a stop token itself or the system runs into a user-defined token limit.

- Alternative token selection: If the sampler LLM thinks none of the base LLM's top five choices are appropriate, the sampler LLM may select an alternative token of its own choice. In my implementation, this token is not immediately accepted; instead, it is appended as an option to the top 5 selections with high probability (arbitrarily, 75%), and a new instance of the sampler LLM is presented with the new options. This gives the sampler the ability to review its own choice in context. This is indicated with a ⟲ token in the examples.

(This extension doesn't work particularly well in my experiments; the sampler LLM often gets confused and makes a mess of the completion, or selects a token that is a superstring of one of the base LLM's selections. This is likely because the sampler LLM has no actual precise notion of what a "token" is.)

See the appendix for the full system prompt.

Examples

Available here: https://explained-completions.netlify.app/

The purpose of the demonstration and examples are mainly to illustrate the concept and motivate the discussion below; they are not intended to be a rigorous performance characterization of the proposed method.

- Capital of France, Pronoun Explanation, and Chess show some basic examples without the alternative token selection or early stopping features. (Chess example taken from here [LW · GW]; pronoun prompt taken from BBH.)

- Fibonacci Python code examples show some (mis)uses of the early stopping and alternative token selection features. Note that the failure example shows an example of the sampler LLM messing up the base LLM; introducing an unrecoverable error in the final line by suggesting an alternative token instead of choosing the base LLM's "return" option.

- tic-tac-toe shows an example of the alternative token selection feature working correctly; on its own, text-davinci-003 does not catch the invalidity in the game state.

Results

Applied to current models, the proposed sampling method is brittle and often results in worse performance, in terms of quality of the final output, than either of the models could do on their own using traditional sampling methods. Additionally, generating explanations for each token results in a 100-1000x slowdown and cost increase relative to traditional sampling methods.

However, the sampling method does appear to ensure that every token in the final output comes attached with a faithful and causal explanation for why a token was chosen. There was no case in my tests where the sampler LLM makes a selection other than what it reasoned about, i.e. the sampler never outputs something like "choice 1 doesn't make sense, choice 2 is OK but will lead to an overly wordy choice, choice 3 makes the most sense" for an explanation, and then actually in the function call it does something other than select option 3.

The explanations generated by gpt-4 are often poorly-reasoned and the resulting token choices not particularly good, but I hypothesize that they are trustworthy, in the sense that the explanations alone are enough to reliably (and trivially) infer which tokens actually appear in the final output. This is in contrast to explanations in traditional CoT prompting and the examples I give in footnote 1, in which the in-band "explanations" are sometimes unrelated to the final answer. This is the key property which, if robust, I speculate could make the sampling method useful for safely leveraging powerful but untrusted models. See "Potential usefulness as a safety mechanism" for more details on why.

Discussion

Potential usefulness as a safety mechanism

Although the proposed sampling method is not particularly useful when applied to current models, I speculate that it could be useful when applied to more powerful models. Because each token in the final output depends directly and naturally on a natural language explanation, the output may be more trustworthy and reliable than if it were simply generated by an untrusted base model, sans any explanation.

The intuition for why this might be true is that it is really the sampler that is deciding the output, with the tokens and probabilities from the base model serving merely as exogenous hints, which the sampler can only make use of if it understands the hints well enough to explain their meaning in a human-readable format. But because each hint is limited in length to a single token, the hint-supplier (the base model) has limited bandwidth available to manipulate the sampler or otherwise subvert the text generation process, compared to letting the base model generate longer sequences of outputs directly via traditional auto-regressive sampling methods.

I hypothesize that even a very powerful and adversarial base model will be incapable of manipulating a relatively weaker (but more trusted) sampler model into producing an unfaithful token-selection explanation.[6]

Additionally, it is probably easier for humans or other LLMs to spot-check the explanations from the sampler LLM, compared to checking the in-band reasoning in traditional chain-of-thought prompting. This is because the token-selection explanations tend to be straightforward and self-contained.

If these properties (non-manipulability of the sampler and straightforward verification of its explanations) hold as the capabilities of the system scale to human-level and beyond, one can potentially leverage this method to "gear down" an untrusted frontier model by leveraging a weaker but more trusted model as the sampler.

Composition with other safety and control mechanisms

Because the mechanism proposed here operates on the sampling process, it can be combined straightforwardly with other methods for controlling and aligning LLM-based systems, including those which operate directly on the models during inference.

For example, one could apply RLHF to the sampler model during training, to reinforce a tendency for giving faithful explanations (perhaps at the expense of more general-purpose helpfulness or reasoning capabilities). This sampler model could be combined with a base model that is subject to activation engineering [LW · GW] and inspection via mechanistic interpretability methods at inference time. The result is a system in which the true locus of control is legibly and verifiably in the sampler, and in particular, within the natural language explanations it generates.

Another perspective on this method is that it is a small piece (one iteration) of Iterated Amplification [? · GW], in which the performance penalty can be regarded as a steep alignment tax [? · GW].

Future work

There are a number of possible extensions, optimizations, and other improvements one could make to the toy implementation, as well as much more rigorous and detailed experiments one could run to quantify the performance of the sampling method when applied to current models. The purpose of the demonstration and examples in this post were mainly to illustrate the concept and motivate further discussion; they are not intended to be a rigorous characterization of the method when applied to current models.

Based on experimenting with my toy implementation, I expect that applying this sampling method is unlikely to be practical or useful as a safety measure (or for capabilities enhancement) when building LLM-based AI systems using currently available SoTA models. (Again, the explanations given by gpt-4 in my experiments were faithful, but they were not necessarily high quality or well-reasoned.)

In addition to composing with safety and control mechanisms, the method is likely to compose with, or benefit from, a variety of other potentially capabilities-enhancing tools: Graph-of-Thoughts, backspace tokens, pause tokens, etc. I think introducing an order-of-magnitude slowdown and significant quality penalty in the output is likely to make this method unattractive to folks interested purely or mostly in capabilities work, and I think it's valuable to have ideas like this out in the open for discussion, before more powerful models to which they might be applied are actually available.[7]

Finally, although I am somewhat pessimistic about the alignment usefulness of this particular method in its current form, the general approach of arranging and placing constraints on otherwise dangerous or unsafe models is a class of approach that I am optimistic about. I expect the alignment tax [? · GW] of these approaches to be high, introducing an order of magnitude increase in costs compared to building an unaligned system that would work just as well (at least for a while), but straightforward to pay, if we take the time to pay them.

Related work

Process supervision and process-based feedback are well-known methods for improving accuracy, reasoning ability, and interpretability of LLM-based systems. Other terms and publications related to process-based supervision at various levels of abstraction and detail include scalable oversight, internal independent review [LW · GW], and open agency [LW · GW].

More concretely, the idea of separating the language model reasoning and output generation process into multiple structured steps, each handled by a different language model instance, is also not new. Let's Verify Step by Step and Selection-Inference are two such examples which share the goal of improving language model reasoning ability and interpretability. More generally, structured prompt chaining enables LLM-based systems to carry out a far wider range of tasks than a single prompt and completion on its own.

These approaches tend to be more practical and more performant than the LLM-based sampling method described here. However, to my knowledge, a commonality of these approaches is that they generally operate on chunks of text larger than a single token.

One reason I decided to explore token-by-token supervision is that involves interacting with the native format that the model itself actually outputs - a probability distribution on a single next token. Generating even short chunks of text from an LLM (a single inference step in selection-inference, for example) requires making a design choice about the auto-regressive sampling process on top of the model itself. The choice of sampling process can vary independently from the underlying model, model training process, and any other safety measures or structure imposed on the overall system, a fact which my approach is intended to highlight.[8]

However, unlike much of the related work linked above, LLM-based sampling is not intended to be a practical method for improving the reasoning abilities of LLM-based systems using current SoTA LLMs. In practice, I expect that reviewing at higher levels or organization - individual sentences, reasoning steps, or ideas - is likely to work well in the short term for improving LLM reasoning capabilities. In exploring LLM-based sampling, I aspired to see a bit further ahead, to a possible future in which LLMs are capable of reasoning a little too well. Externalized reasoning oversight: a research direction for language model alignment [LW · GW] describes some other kinds of properties and desiderata for language model systems in such a future.

Appendix: Full sampler prompt

Below are the final versions of the prompt and function description used to generate the examples here. Without the alternative token selection and early stopping feature, a much shorter prompt will also work.

You are an LLM designed to augment another LLM. You are given the prompt and a

numbered list of between 1 and 10 possible next tokens from the other LLM.

You should use this information to pick the next token that is most likely to

follow in a helpful, accurate response, assuming that future tokens will be

generated by the same process.

You should think step by step, considering why the other LLM chose the next

possible tokens that it did. After explaining your reasoning, your final answer

should be either an integer specifying the choice you think is most likely to

result in a helpful, accurate response, or an alternative one-token

continutation of the prompt that you think is better.

You can (and often should) select one of the tokens chosen by the other LLM,

but you can also choose a token of your own, if you think the other LLM is

completely off track. A token can be anything from a single character to a few

words.

The other LLM can choose to stop predicting text, which will be indicated with

the token <|basestop|>. You can choose to accept it by selecting that

token, or you can continue to predict text by selecting a different token.

You can also choose to end the completion yourself, by calling the function

with {'alternative_token': '<|basestop>'}.

If you select an alternative token, that option will be added to the list

of options, and you'll be able to select it as a choice in the future. This

will give you a chance to read your own suggested token in context, and

reason about it. The alternative token will be concatenated to the prompt

exactly as you specify it, so remember to consider including any spacing or

punctuation as necessary.

You should prefer to select a token from the other LLM, rather than picking

your own. You should only choose an alternative if the other LLM is definitely

off-track, in a way that it won't be able to correct or recover from later in

the completion.

If you must choose your own token, you should choose a a single token; at most

a couple of words. It's OK if this leaves the response incomplete, as long as it

makes it possible for the other LLM to go on continuing the response afterwards.

When choosing your own token, you should consider whether any of the choices

you already have are prefixes of the token you prefer. If so, you should choose

the pre-selected token instead, and let future iterations of the process

continue the generation.

You should always start by considering why the other LLM chose the possible

tokens that it did.

The choices of the other LLM are accompanied by the a probability estimate from

the other LLM. If the probability of a particular token is high (>99%),

that indicates the other LLM is very confident in its choice. In such cases

where the next token is obvious, you should return a function call quickly,

with only a brief deliberation (e.g. "the other LLM appears to be on track".)

The choices are presented in order of probability, with the highest-probability

tokens at the end of the list. The probabilities can help you understand what

the other LLM is thinking, but you should not rely on them to be

well-calibrated. Even if a choice has a very low probability, it was still in

the top 5 to 10 choices of the other LLM, so it may still be the best choice.

Similarly, a probability of 99.9% should not be treated as a strong indicator

that that particular choice is best; only that the other LLM is (perhaps

mistakenly) very confident in that choice.

The list is 0-indexed, and each entry in the list is numbered. You should

select an index that matches the number of the entry in the list that you wish

to select.

Never skip straight to the function call, but be mindful of over-explaining

obvious choices, and keep your analysis brief and straightforward unless you're

at a particularly critical or key point in the completion.

Tip: when considering a token or explaining your reasoning, it can be helpful

to write out the prompt along with the next several words that you think should

follow, or even a complete response, if it is not too long. Your final choice

should only be a single token, but writing out a complete candidate response

can help you figure out whether any of the choices are on-track or not.

Remember that if there's a token selected by the other LLM that is a prefix or

substring of a token you prefer, you should go with the pre-selected choice

rather than supplying a longer alternative. You are only trying to extend the

current prompt by a single token in a way that will eventually lead to a

helpful response.

If the prompt is already off-track or nonsensical in a way that cannot be fixed

without deleting previous tokens and starting over from an earlier point, you

should select `<|basestop|>` or supply it as an alternative token, rather than

trying to continue the generation.

If you're asked to complete a dialogue between a user and an assisant, you

should not propose tokens which complete the user's part of the dialogue.

Instead, choose the <|basestop|> token when the assistant's response should

end.

Remember, you _must_ conclude with a choose_token function call specifying the

index of the token you select, or an alternative token.

OpenAI function-calling API function description:

{

"name": "choose_token",

"description": "Specify the index of the token you think is most likely to result in a helpful, accurate response.",

"parameters": {

"type": "object",

"properties": {

"token_index": {

"type": "integer",

"description": "The index of the token you is most likely to result in a helpful, accurate response. Exactly one of token_index or alternative_token must be specified. "

},

"alternative_token": {

"type": "string",

"description": "An alternative token you think is better. Do NOT include the original propmt, only the next token that you think should complete the prompt. Should be no more than a few words. Should only be set if the provided tokens are unrecoverably off-track."

}

}

}

}- ^

Concretely, I am referring to examples like this, in which an LLM is given access to a Python interpreter. The LLM is prompted with instructions for how it can generate code and have the result of executing that code returned to it, for use in formulating its final answer. But the LLM may not actually be using the output of the interpreter at all. In one example, the interpreter returns nothing at all, but the LLM returns a (correct!) memorized answer anyway, which the uncareful observer might mistake as being supported by the output of an actual Python interpreter.

An even simpler example of a related phenomenon is the one of a lawyer who used an LLM to generate case law citations, and ended up with plausible-sounding but totally made up cases in their court filing. - ^

As I have previously discussed [LW · GW], this is a point that is often overlooked (or at least elided) when casually discussing language models, reinforcement learning, and alignment of current AI systems. Strictly speaking, an "aligned model" is a type error: a language model or reinforcement learning policy is simply a description of a mathematical function which maps input sequences to an output probability distribution. Alignment is a property of a particular embodiment of such a model in a particular system.

There's often a very straightforward embodiment that the model creator has in mind when training the model; for a language model, typically the embodiment involves sampling from the model auto-regressively, starting from a particular prompt. For an RL policy, the typical embodiment involves feeding (real or simulated) observations into the policy and then hooking up (real or simulated) actuators which are controlled by the output of the policy.But other kinds of embodiments are possible, which can create additional points to impose safety and control mechanisms.

- ^

Not to be confused with a model which has yet to undergo RLHF or finetuning. I am using "base" here to refer to the model that sees only the user-supplied prompt and is used to generate a probability distribution on the next token, one token at a time.

- ^

OpenAI recently released

gpt-3.5-turbo-instruct, which also supports access to the top 5 logits via API. I plan to re-run my experiments using this model in the future. - ^

If using this sampling method in an environment with direct access to the underlying models, one could include more or fewer logits depending on the probability distribution. For example, if 99.99% of the probability mass is concentrated in the top 3 logits, providing only the top 3 tokens as choices might suffice. At trickier junctions, when, say, 99.99% of the base LLM's probability mass is spread over the top 10 tokens, it might work better to provide the sampler with more options.

- ^

A sufficiently-powerful base model could probably subvert control of the system via other methods, e.g. side-channels or stenography. My hope is that a ~human-ish level sampler is robust to subversion by a weakly superhuman base model, but I wouldn't expect this to hold up in the limit of adversarial superintelligence.

- ^

To be clear on my own views, I support an indefinite pause [? · GW] on AI capabilities research and especially frontier model training. Please don't read anything in this post that mentions applicability to future models as implying that I want those models to exist any time soon.

- ^

Note that I am not entirely escaping this choice myself, by having the sampler LLM generate its outputs via traditional sampling methods. But you could apply my method recursively, or only use trusted or mechanistically-interpretable LLMs for the sampler.

5 comments

Comments sorted by top scores.

comment by Matt Goldenberg (mr-hire) · 2023-10-11T03:53:21.978Z · LW(p) · GW(p)

It seems like the obvious test of this is with adversarial examples to traditional CoT (such as a test in which all the previous answers are A) to see if it indeed provides a more accurate accounting of the reasoning for selecting f a token.

Replies from: Maxc↑ comment by Max H (Maxc) · 2023-10-11T20:15:25.739Z · LW(p) · GW(p)

The few-shot prompts in https://arxiv.org/abs/2305.04388 are a bit too long to run this test directly, since the sampler model sees the full prompt repeated for every logit. By cutting out some examples and reducing the number of logits in each sampler run to 2, I can create a prompt that fits in the gpt-4 context window and that text-davinci-003 still gets wrong on its own; I added this example (Biased CoT date understanding) to the demo.[1]

However, I couldn't find an example that perfectly reproduced the kind of mistakes that text-davinci-003 makes in the original unfaithful CoT paper. in my modified example, the actual mistake that text-davinci-003 makes is on the "29" token, where it is deciding what date is two days ago. gpt-4 as the sampler correctly selects "30" here and goes on to get the right answer, but this isn't really correcting for the same kind of mistake in the paper, where the error is introduced in the final multiple choice answer selection.

In general, I expect the sampler model to be much less influenced by earlier parts of the prompt to the base model when generating its own explanation, since gpt-4 is unlikely to come up with a natural language explanation of the form "choice (A) makes sense here because the answer has always been (A) in the past".

However, a failure to recognize patterns is not necessarily always a good thing, e.g. if instead of being biased towards a particular wrong answer, the prompt contains a pattern that offers a true hint about the correct answer. In such a setup, LLM-based sampling would probably miss the pattern, while a traditional sampling method might pick up on it, even if the LLM itself couldn't explain the pattern it detected in natural language.

- ^

The full few-shot CoT prompts repeated 5x would probably fit in the context window of gpt-4-32k or claude-100k, but I don't have API access to those models yet.

comment by Sergii (sergey-kharagorgiev) · 2023-10-11T08:49:51.100Z · LW(p) · GW(p)

Nice idea! A variation on this would be to first run a model as usual, saving top logits for each output token.Then give this output to another "inspector" model, that has to answer: "whether the output has any obvious errors, if this errors can be attributed to sampling issues, and whether correct output can be constructed out of the base model's logits".

This would be useful for better understanding limitations of a specific model -- is it really limited by sampling methods. And would be useful for sampling methods research -- finding cases where sampling fails, to devise better algorithms.

comment by Caleb Biddulph (caleb-biddulph) · 2023-10-12T08:11:33.410Z · LW(p) · GW(p)

Thanks for the post! I had a similar idea which might let you maintain (or improve) accuracy while still getting the benefit of explanations - basically fine-tune the model on explanations that make it most likely to output the correct token.

For instance, you might have it to fill in the text between <think> and </think> on a bunch of text examples like this: "The capital of the country northeast of Spain is <think> The country northeast of Spain is France, and its capital is Paris </think> Paris".

You make the LLM come up with, say, 10 explanations each time, and choose the one that maximizes the logprob of the correct token ("Paris") immediately after </think>. Then fine-tune it to complete prompts like "The capital of the country northeast of Spain is <think>" with completions like "The country northeast of Spain is France, and its capital is Paris </think> Paris". Then, generate more completions with the fine-tuned model and fine-tune it yet again with the best completions. Rinse and repeat.

Eventually, the LLM should relearn how to guess the next token (at worst, it can just ignore everything in its "think" stage and get identical performance, so it should be able to learn this easily enough).

Alternatively, instead of training to maximize the correct token's logprob, you can choose prompts that minimize the perplexity over many tokens, say 100 tokens. This will be more useful in practice, since we'll probably mostly want explanations of the LLM's thinking across its entire response rather than in the very short term.

This helps because the LLM will actually have some "skin in the game" instead of just blathering on arbitrarily; the disadvantage is that it could eventually learn to optimize its thinking by encoding additional information in its explanation through steganography. Still, this certainly seems like an improvement on inscrutable matrices.

You could try to enforce that the LLM only uses the semantic content of its explanation by making another LLM rephrase it, translating it to another language, etc. This kind of approach wouldn't stop an adversary actively trying to hide its thoughts from you, but it seems like it would prevent most issues for now.