Decision Theory with the Magic Parts Highlighted

post by moridinamael · 2023-05-16T17:39:55.038Z · LW · GW · 24 comments

I. The Magic Parts of Decision Theory

You are throwing a birthday party this afternoon and want to decide where to hold it. You aren't sure whether it will rain or not. If it rains, you would prefer not to have committed to throwing the party outside. If it's sunny, though, you will regret having set up inside. You also have a covered porch which isn't quite as nice as being out in the sun would be, but confers some protection from the elements in case of bad weather.

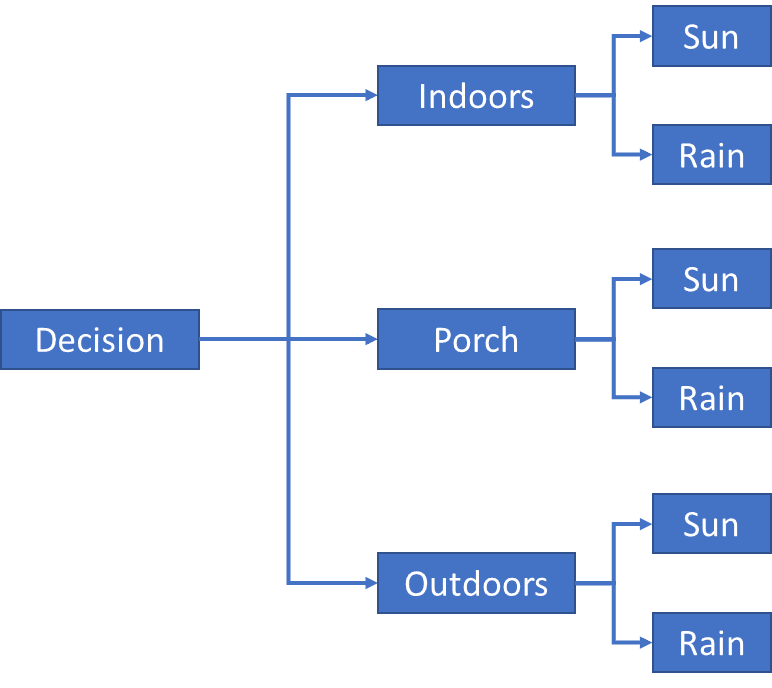

You break this problem down into a simple decision tree. This operation requires magic[1], to avert the completely intractable combinatorial explosion inherent in the problem statement. After all, what does "Rain" mean? A single drop of rain? A light sprinkling? Does it only count as "Rain" if it's a real deluge? For what duration? In what area? Just in the back yard? What if it looks rainy but doesn't rain? What if there's lightning but not rain? What if it's merely overcast and humid? Which of these things count as Rain?

And how crisply did you define the Indoors versus Porch versus Outdoors options? What about the option of setting up mostly outside but leaving the cake inside, just in case? There are about ten billion different permutations of what "Outdoors" could look like, after all - how did you determine which options need to be explicitly represented? Why not include Outside-With-Piñata and Outside-Without-Piñata as two separate options? How did you determine that "Porch" doesn't count as "Outdoors" since it's still "outside" by any sensible definition?

Luckily you're a human being, so you used ineffable magic to condense the decision tree with a hundred trillion leaf nodes down into a tree with only six.

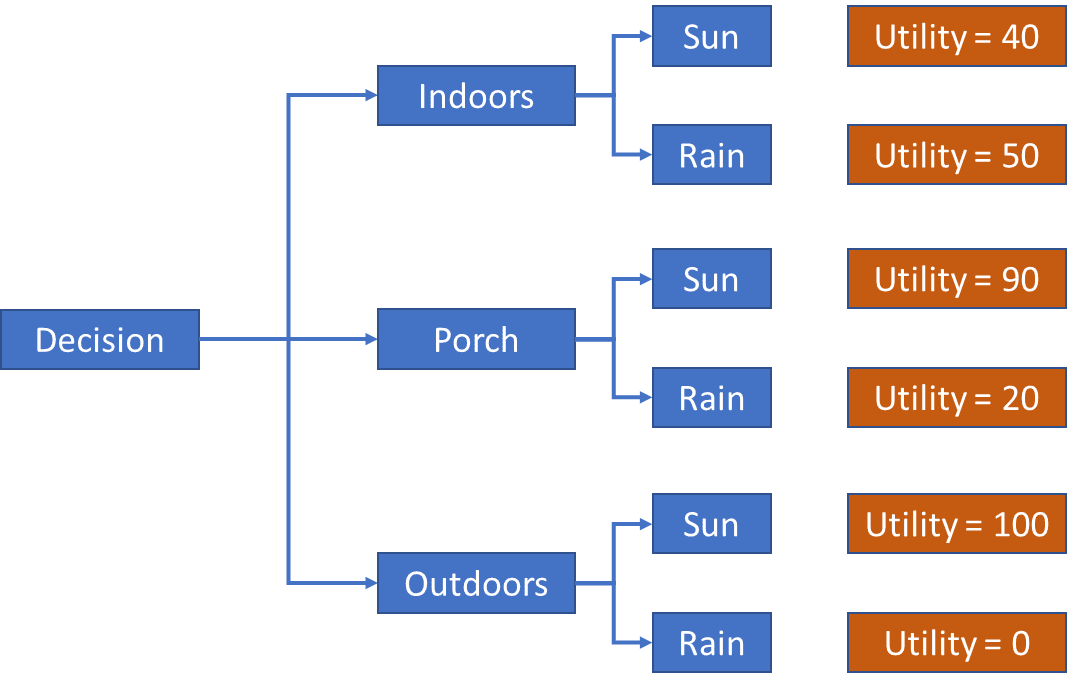

You're a rigorous thinker, so the next step, of course, is to assign utilities to each outcome, scaled from 0 to 100, in order to represent your preference ordering and the relative weight of these preferences. Maybe you do this explicitly with numbers, maybe you do it by gut feel. This step also requires magic; an enormously complex set of implicit understandings comes into play, which allows you to simply know how and why the party would probably be a bit better if you were on the Porch in Sunny weather than Indoors in Rainy weather.

Be aware that there is not some infinitely complex True Utility Function that you are consulting or sampling from; you are simply served with automatically-arising emotions and thoughts upon asking yourself these questions about relative preference, resulting in a consistent ranking and utility valuation.

Nor are these emotions and thoughts approximations of a secret, hidden True Utility Function; you do not have one of those, and if you did, how on Earth would you actually use it in this situation? How would you use it to calculate relative preference of Porch-with-Rain versus Indoors-with-Sun unless it already contained exactly that comparison of world-states somewhere inside it?

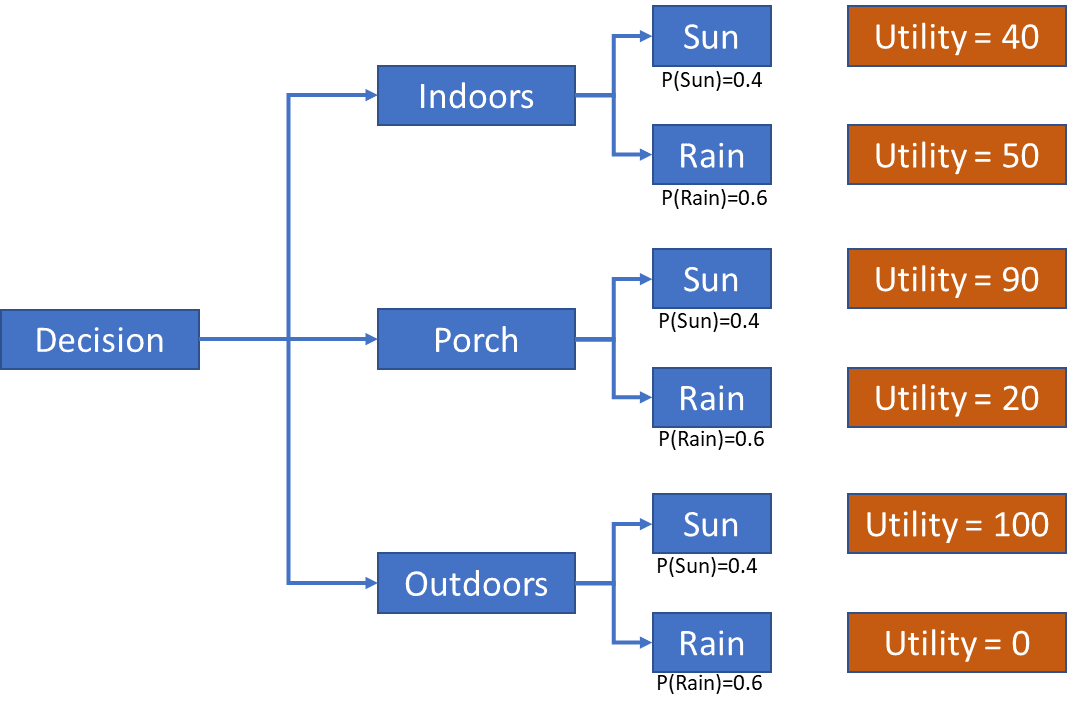

Next you perform the trivial-to-you act of assigning probability of Rain versus Sun, which of course requires magic. You have to rely on your previous, ineffable distinction of what Rain versus Sun means in the first place, and then aggregate vast amounts of data, including what the sky looks like and what the air feels like (with your lifetime of experience guiding how you interpret what you see and feel), what three different weather reports say weighted by ineffable assignments of credibility, and what that implies for your specific back yard, plus the timing of the party, into a relatively reliable probability estimate.

What's that, you say? An ideal Bayesian reasoner would be able to do this better? No such thing exists; it is "ideal" because it is pretend. For very simple reasons of computational complexity, you cannot even really approach "ideal" at this sort of thing. What sources of data would this ideal Bayesian reasoner consult? All of them? Again you have a combinatorial explosion, and no reliable rule for constraining it. Instead you just use magic: You do what you can with what you've got, guided by heuristics and meta-heuristics baked in from Natural Selection and life experience, projected onto a simplified tree-structure so that you can make all the aforementioned magical operations play together in a convenient way.

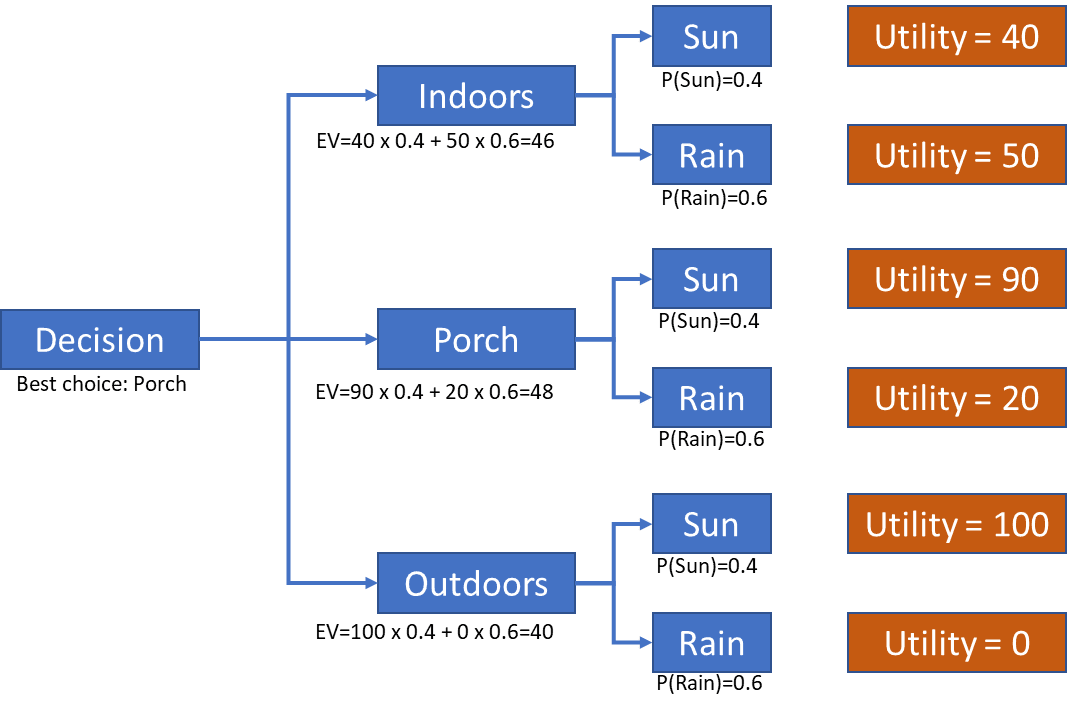

Finally you perform the last step, which is an arithmetic calculation to determine the option with the highest expected value. This step does not require any magic.
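As a minimal sketch of that last, magic-free step: the utilities and the rain probability below are made-up placeholders (the actual numbers are in the figures, not the text), and the names `UTILITY`, `P_RAIN`, `expected_value`, and `best_option` are hypothetical. Everything hard has already happened by the time this code runs.

```python
# Illustrative assumption: these numbers are placeholders, not the post's own.
P_RAIN = 0.4

# Utility (0-100) of each (location, weather) leaf of the six-leaf tree.
UTILITY = {
    ("Outdoors", "Sun"): 100, ("Outdoors", "Rain"): 0,
    ("Porch", "Sun"): 90,     ("Porch", "Rain"): 20,
    ("Indoors", "Sun"): 40,   ("Indoors", "Rain"): 50,
}


def expected_value(location, p_rain=P_RAIN):
    """Probability-weighted average utility of holding the party at `location`."""
    sunny = (1 - p_rain) * UTILITY[(location, "Sun")]
    rainy = p_rain * UTILITY[(location, "Rain")]
    return sunny + rainy


def best_option(p_rain=P_RAIN):
    """Return the location with the highest expected value."""
    locations = sorted({location for location, _ in UTILITY})
    return max(locations, key=lambda location: expected_value(location, p_rain))


if __name__ == "__main__":
    for location in ("Outdoors", "Porch", "Indoors"):
        print(location, expected_value(location))
    print("Choose:", best_option())
```

With these placeholder numbers the Porch narrowly beats Outdoors (roughly 62 versus 60), and a rain probability below one third flips the answer to Outdoors. The arithmetic is trivial; the answer is only as good as the magic that produced its inputs.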

II. Alignment by Demystifying the Magic

It was never clear to me how people ever thought we were going to build an AI out of the math of Logic and Decision Theory. There are too many places where magic would be required. Often, in proposals for such GOFAI-adjacent systems, there is a continual deferral to the notion that there will be meta-rules that govern the object-level rules, which would suffice for the magic required for situations like the simple one described above. These meta-rules never quite manage to work in practice. If they did, we would already have powerful and relatively general AI systems built out of Logic and Decision Theory, wouldn't we? "Graphs didn't work, we need hypergraphs. No we need meta-hypergraphs. Just one more layer of meta, bro. Just one more layer of abstraction."

Of course, now we do have magic. Magic turned out to be deep neural networks, providing computational units flexible enough to represent any arbitrary abstraction by virtue of their simplicity, plus Attention, to help curtail the combinatorial explosion. These nicely mirror the way humans do our magic. This might be enough; GPT-4 can do all of the magic required in the problem above. GPT-4 can break the problem down into reasonable options and outcomes, assign utilities based on its understanding of likely preferences, and do arithmetic. GPT-4, unlike any previous AI, is actually intelligent enough that it can do the required magic.

To review, the magical operations required to make a decision are the following:

- Break the problem down into sensible discrete choices and outcomes, making reasonable assumptions to curtail the combinatorial explosion of potential choices and outcomes inherent in reality.

- Assign utility valuations to different outcomes, applying latent preference information to a new and never-before-seen situation.

- Assign probabilities to different outcomes in a situation which has no exact precedent, reflecting expectations gleaned from a black-box predictive model of the universe.
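One way to read the three operations above is as opaque inputs to an otherwise mechanical procedure. The sketch below is only an illustration under that reading: `decide` and the three callables it takes are hypothetical names, and the stub implementations are hand-written stand-ins for whatever actually performs the magic (a human, or a language model).

```python
from typing import Callable, Dict, List


def decide(
    situation: str,
    abstract: Callable[[str], Dict[str, List[str]]],  # magic 1: choices and outcomes
    utility: Callable[[str, str], float],             # magic 2: value of (choice, outcome)
    probability: Callable[[str, str], float],         # magic 3: P(outcome | choice)
) -> str:
    """Pick the choice with the highest expected utility; no magic happens in here."""
    tree = abstract(situation)
    choices, outcomes = tree["choices"], tree["outcomes"]

    def expected_utility(choice: str) -> float:
        return sum(probability(choice, o) * utility(choice, o) for o in outcomes)

    return max(choices, key=expected_utility)


# Hand-written stubs standing in for the three black boxes (all values made up).
def stub_abstract(situation: str) -> Dict[str, List[str]]:
    return {"choices": ["Indoors", "Outdoors"], "outcomes": ["Rain", "Sun"]}


def stub_utility(choice: str, outcome: str) -> float:
    table = {("Outdoors", "Sun"): 100.0, ("Outdoors", "Rain"): 0.0,
             ("Indoors", "Sun"): 40.0, ("Indoors", "Rain"): 60.0}
    return table[(choice, outcome)]


def stub_probability(choice: str, outcome: str) -> float:
    # The weather does not depend on where the party is held.
    return 0.25 if outcome == "Rain" else 0.75


choice = decide("birthday party this afternoon", stub_abstract, stub_utility, stub_probability)
print(choice)
```

The design point is that `decide` itself is a few lines long; all of the difficulty lives in the three arguments passed to it.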

One could quibble over whether GPT-4 does these three things well, but I would contend that it does them about as well as a human, which is an important natural threshold for general competency.

This list of bullets maps onto certain parts of the AI Alignment landscape:

- State Space Abstraction is an open problem even before we consider that we would prefer that artificial agents cleave the state spaces in ways that are intuitive and natural for humans. GPT-4 does okay at this task, though the current short context window means that it's impossible to give the model a truly complete understanding of any situation beyond the most simplistic.

- Value Learning was originally the idea that AI would need to be taught human values explicitly. It turns out that GPT-4 got us surprisingly far in the direction of having a model that can represent human values accurately, without necessarily being compelled to adhere to them.

- Uncertainty Estimation is, allegedly, something that GPT-4 was better at before it was subjected to Reinforcement Learning from Human Feedback (RLHF). This makes sense; it is the sort of thing that I would expect an AI to be better at by default, since its expectations are stored as numbers instead of inarticulable hunches. The Alignment frontier here would be getting the model to explain why it provides the probability that it does.

Obviously the Alignment landscape is bigger than what I have described here. My aim is to provide a useful mental framework for organizing the main ideas of alignment in the context of the fundamentals of decision theory and fundamental human decision-making processes.

Thanks to the Guild of the Rose Decision Theory Group for providing feedback on drafts of this article.

[1] The intent of this usage is to illustrate that we don't know how this works at anything like sufficient granularity. At best, we have very coarse conceptual models that capture small parts of the problem in narrow, cartoon scenarios.

24 comments


comment by gwern · 2023-05-17T17:48:47.399Z · LW(p) · GW(p)

Probably the single biggest blackbox in classic decision theory is the Cartesian assumption of an 'agent' which is an atomic entity sealed inside a barrier which is communicating with/acting in an environment which has no access to or prediction about the agent. This is a black box not for convenience, nor for generality, but because if you open it, you just see a little symbol labeled 'agent', and beyond that, you just have to shrug and say, 'magic'.

The 'agent' node is where a lot of reflexive problems like Newcomb or smoking lesion or AIXI wireheading come in. In comparison to the problem that 'we have no way at all to define an "agent" in a rigorous way reducible to atoms and handling correctly cases like the environment screwing with the agent's brain', problems like "where do we get a probability" are much easier - standard reductionist moves like 'the brain is just a gigantic Bayesian model/deep ensemble and that's how you can assign probabilities to everything you can think of' look like they work, whereas for defining an agent with permeable boundaries & which is made up of the exact same parts as the environment, we're left struggling to even say what a solution might look like.

(It would need to handle all of the dynamics inside and outside an agent, from memes colonizing a brain, to clonal lineages of blood or immune cells colonizing your body over a lifetime, to commensals like gut bacteria, to bacteriophages deciding whether to lyse or lie quiescent inside them, to infections evolving within an individual such as the COVID strains that emerged within immuno-compromised individuals, to passive latent infections waiting for immune weakness or old age to spring back to life, to 'neural darwinism' of neurons & connections, to transmission-biasing genes or jumping transposons or sex-biased genes or antagonistic pleiotropic genes or... And this is just a small selection of the stuff which is 'under the skin'! Where is the 'agent' which emerges out of all these different levels & units of analysis to which one could potentially apply Price's equation or Bellman equations or VNM coherency? We get away with ignoring this most of the time and modeling the 'obvious' agents the 'obvious' way - but why does this work so well, why do you sometimes in fact have to unsee an 'agent' as a bunch of sub-agents, and what justifies each particular level of analysis, beyond just the obvious pragmatic response of 'this particular approach seems to be what's useful in this case'?)

Hence, Agent Foundations and trying to nail down workable abstractions which can handle, say, cellular automata agents, and which can be composed and copied while preserving agency or identity.

↑ comment by lemonhope (lcmgcd) · 2024-02-23T07:09:33.247Z · LW(p) · GW(p)

Seems unlikely to me but I do wonder: after we break down the agent all the way to atoms and build it back up level by level, will we realize that the physics sim was all we needed all along? Perhaps just a coarser sim for bigger stuff?

↑ comment by M. Y. Zuo · 2023-05-18T10:46:35.172Z · LW(p) · GW(p)

> Probably the single biggest blackbox in classic decision theory is the Cartesian assumption of an 'agent' which is an atomic entity sealed inside a barrier which is communicating with/acting in an environment which has no access to or prediction about the agent.

I've always understood this to be the equivalent of spherical cows.

Of course it doesn't, and can't actually, exist in the real world.

Considering that for real humans even something as simple as severing the connection between left and right hemispheres of the brain causes identity to break down, there likely are no human 'agents' in the strict sense.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-05-16T19:45:32.610Z · LW(p) · GW(p)

You had me at the title. :) I feel like I've been waiting for ten years for someone to make this point. (Ten years ago was roughly when I first heard about and started getting into game and decision theory.) I'm not sure I like the taxonomy of alignment approaches you make, but I still think it's valuable to highlight the 'magic parts' that are so often glossed over and treated as unimportant.

↑ comment by moridinamael · 2023-05-16T20:53:59.086Z · LW(p) · GW(p)

I spent way too many years metaphorically glancing around the room, certain that I must be missing something that is obvious to everyone else. I wish somebody had told me that I wasn't missing anything, and these conceptual blank spots are very real and very important.

As for the latter bit, I am not really an Alignment Guy. The taxonomy I offer is very incomplete. I do think that the idea of framing the Alignment landscape in terms of "how does it help build a good decision tree? what part of that process does it address or solve?" has some potential.

↑ comment by Vaniver · 2023-05-18T07:04:21.865Z · LW(p) · GW(p)

Huh, this feels like it lines up with my view from 11 years ago, but I definitely didn't state it as crisply as I remember stating it. (Maybe it was in a comment I couldn't find?) Like, the math is trivial and the difficulties are all in problem formulation and model elicitation (of both preferences and dynamics).

comment by Dagon · 2023-05-16T23:57:28.809Z · LW(p) · GW(p)

I think there's hidden magic in the setup as well - knowing whether to assign probabilities or calculate EV in a given column (alternately, knowing whether to branch location to weather or weather to location). Understanding causality of "decision" and "outcome" is the subject of much discussion on the topic.

↑ comment by moridinamael · 2023-05-17T00:30:28.509Z · LW(p) · GW(p)

Good point. You could also say that even having the intuition for which problems are worth the effort and opportunity cost of building decision trees, versus just "going with what feels best", is another bit of magic.

comment by romeostevensit · 2023-05-20T18:08:50.407Z · LW(p) · GW(p)

Previously: https://slatestarcodex.com/2014/11/21/the-categories-were-made-for-man-not-man-for-the-categories/

comment by Said Achmiz (SaidAchmiz) · 2023-05-17T17:43:19.529Z · LW(p) · GW(p)

Excellent post! I agree with Daniel; this is a post which I feel should’ve been made long ago (which is about as high a level of praise as I can think of).

comment by cubefox · 2023-05-24T17:35:54.634Z · LW(p) · GW(p)

A bit late, a related point. Let me start with probability theory. Probability theory is considerably more magic than logic, since only the latter is "extensional" or "compositional", the former is not. Which just means the truth values of $A$ and $B$ determine the truth value of complex statements like $A \land B$ ("A and B"). The same is not the case for probability theory: The probabilities of $A$ and $B$ do not determine the probability of $A \land B$, they only constrain it to a certain range of values.

For example, if $A$ and $B$ have probabilities 0.6 and 0.5 respectively, the probability of the conjunction, $A \land B$, is merely restricted to be somewhere between 0.1 and 0.5. This is why we can't do much "probabilistic deduction" as opposed to logical deduction. In propositional logic, all the truth values of complex statements are determined by the truth values of atomic statements.
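The range quoted above follows from the standard Fréchet inequalities, $\max(0, P(A) + P(B) - 1) \le P(A \land B) \le \min(P(A), P(B))$. A small sketch, with hypothetical function names, that computes the bounds and shows that any value inside them corresponds to a perfectly valid joint distribution:

```python
def conjunction_bounds(p_a, p_b):
    """Lowest and highest values P(A and B) can take, given only P(A) and P(B)."""
    lower = max(0.0, p_a + p_b - 1.0)
    upper = min(p_a, p_b)
    return lower, upper


def joint_from_conjunction(p_a, p_b, p_ab):
    """Joint distribution over the four (A, B) worlds implied by a chosen P(A and B)."""
    return {
        (True, True): p_ab,
        (True, False): p_a - p_ab,
        (False, True): p_b - p_ab,
        (False, False): 1.0 - p_a - p_b + p_ab,
    }


if __name__ == "__main__":
    low, high = conjunction_bounds(0.6, 0.5)
    print(low, high)
    # Two different joints, both consistent with P(A) = 0.6 and P(B) = 0.5.
    print(joint_from_conjunction(0.6, 0.5, 0.2))
    print(joint_from_conjunction(0.6, 0.5, 0.4))
```

Both joints printed at the end agree on the marginals and disagree on the conjunction, which is exactly the sense in which the marginals alone settle nothing.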

In probability theory we need much more given information than in logic: we require a "probability distribution" over all statements, including the complex ones (which grow exponentially with the number of atomic statements), and only require them to not violate the rather permissive axioms of probability theory. In essence, probability theory requires most inference questions to be already settled in advance. By magic.
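To put rough numbers on that growth, here is a small counting sketch (function names are hypothetical). With $n$ atomic statements there are $2^n$ truth assignments ("worlds"), a full distribution over them has $2^n - 1$ free parameters, and there are $2^{2^n}$ distinct complex statements up to logical equivalence.

```python
from itertools import product


def possible_worlds(atoms):
    """Every assignment of True/False to the given atomic statements."""
    return [dict(zip(atoms, values))
            for values in product([False, True], repeat=len(atoms))]


def free_parameters(n_atoms):
    """Numbers needed to pin down a distribution over all worlds (they must sum to 1)."""
    return 2 ** n_atoms - 1


def distinct_propositions(n_atoms):
    """Complex statements up to logical equivalence: one per subset of worlds."""
    return 2 ** (2 ** n_atoms)


if __name__ == "__main__":
    print(len(possible_worlds(["rain", "wind", "cold"])))
    for n in (1, 2, 3, 10):
        print(n, free_parameters(n))
```

Logic only asks for the $n$ truth values of the atoms; a probability assignment asks for everything in the second column.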

This already means a purely "Bayesian" AI can't be built, as magic doesn't exist, and some other algorithmic means is required to generate a probability distribution in the first place. After all, probability distributions are not directly given by observation.

(Though while logic allows for inference, it ultimately also fails as an AI solution, partly because purely deductive logical inference is not sufficient, or even very important, for intelligence, and partly also because real world inputs and outputs of an AI do not usually come in the form of discrete propositional truth values. Nor as probabilities, for that matter.)

The point about probability theory generalizes to utility theory. Utility functions (utility "distributions") are not extensional either. Nor are preference orderings extensional in any sense. A preference order between atomic propositions implies hardly anything about preferences between complex propositions. We (as humans) can easily infer that someone who likes lasagna better than pizza, and lasagna better than spaghetti, probably also likes lasagna better than pizza AND spaghetti. Utility theory doesn't allow for such "inductive" inferences.

But while these theories are not theories that solve the general problem of inductive algorithmic inference (i.e., artificial intelligence), they at least set, for us humans, some weak coherence constraints on rational sets of beliefs and desires. They are useful for the study of rationality, if not for AI.

↑ comment by moridinamael · 2023-05-24T18:44:16.383Z · LW(p) · GW(p)

Great points. I would only add that I’m not sure the “atomic” propositions even exist. The act of breaking a real-world scenario into its “atomic” bits requires magic, meaning in this case a precise truncation of intuited-to-be-irrelevant elements.

↑ comment by cubefox · 2023-05-24T19:45:23.618Z · LW(p) · GW(p)

Yeah. In logic it is usually assumed that sentences are atomic when they do not contain logical connectives like "and". And formal (Montague-style) semantics makes this more precise, since logic may be hidden in linguistic form. But of course humans don't start out with language. We have some sort of mental activity, which we somehow synthesize into language, and similar thoughts/propositions can be expressed alternatively with an atomic or a complex sentence. So atomic sentences seem definable, but not abstract atomic propositions as objects of belief and desire.

comment by the gears to ascension (lahwran) · 2023-05-17T02:15:24.252Z · LW(p) · GW(p)

I was looking forward to seeing decision theory explained in a new, more complete and concise and readable way, but find myself disappointed. This is a solid post and I've upvoted but I want a post that more strictly adheres to the title.

comment by Shmi (shminux) · 2023-05-16T21:35:48.291Z · LW(p) · GW(p)

I am confused as to what work the term "magic" does here. Seems like you use it for two different but rather standard operations: "listing possible worlds" and "assigning utility to each possible world". Is the "magic" part that we have to defer to a black-box human judgment there?

↑ comment by moridinamael · 2023-05-16T22:10:17.518Z · LW(p) · GW(p)

Short answer, yes, it means deferring to a black-box.

Longer answer, we don't really understand what we're doing when we do the magic steps, and nobody has succeeded in creating an algorithm to do the magic steps reliably. They are all open problems, yet humans do them so easily that it's difficult for us to believe that they're hard. The situation reminds me of back when people thought that object recognition from images ought to be easy to do algorithmically, because we do it so quickly and effortlessly.

Maybe I'm misunderstanding your specific point, but the operations of "listing possible worlds" and "assigning utility to each possible world" are simultaneously "standard" in the sense that they are basic primitives of decision theory and "magic" in the sense that we haven't had any kind of algorithmic system that was remotely capable of doing these tasks until GPT-3 or -4.

↑ comment by Shmi (shminux) · 2023-05-16T23:38:36.062Z · LW(p) · GW(p)

Ah, thank you, that makes sense. I agree that we definitely need some opaque entity to do these two operations. Though maybe not as opaque as magic, unless you consider GPT-4 magic. As you say, "GPT-4 can do all of the magic required in the problem above." In which case you might as well call everything an LLM does "magic", which would be fair, but not really illuminating.

GPT-4 analysis, for reference:

One possible decision tree for your problem is:

```mermaid
graph TD
    A[Will it rain?] -->|Yes| B[Throw party inside]
    A -->|No| C[Throw party outside]
    B --> D[Enjoyment: 0.8, Cost: 0.6]
    C --> E[Enjoyment: 1.0, Cost: 0.4]
```

This decision tree assumes that you have a binary choice between throwing the party inside or outside, and that you have some estimates of the enjoyment and cost of each option. The enjoyment and cost are normalized between 0 and 1, where higher values are better. The decision tree also assumes that you know the probability of rain, which you can estimate from weather forecasts or historical data.

If you want to include the option of throwing the party on the covered porch, you can modify the decision tree as follows:

```mermaid
graph TD
    A[Will it rain?] -->|Yes| B[Throw party on porch]
    A -->|No| C[Throw party outside]
    B --> D[Enjoyment: 0.9, Cost: 0.5]
    C --> E[Enjoyment: 1.0, Cost: 0.4]
    D --> F[Probability of rain: 0.3]
    F --> G[Party ruined: 0.1]
    F --> H[Party saved: 0.9]
    G --> I[Enjoyment: 0.2, Cost: 0.7]
    H --> J[Enjoyment: 0.9, Cost: 0.5]
```

This decision tree adds another layer of uncertainty to the problem, as throwing the party on the porch depends on the probability of rain and the chance of ruining or saving the party if it rains. You can adjust these values based on your own preferences and expectations.

To use a decision tree to make a decision, you can calculate the expected value of each option by multiplying the enjoyment and cost by their respective probabilities and adding them up. For example, the expected value of throwing the party outside is:

```
EV(outside) = Enjoyment * Probability + Cost * Probability
EV(outside) = 1.0 * (1 - Probability of rain) + 0.4 * (1 - Probability of rain)
EV(outside) = 1.4 * (1 - Probability of rain)
```

Similarly, you can calculate the expected value of throwing the party on the porch as:

```
EV(porch) = Enjoyment * Probability + Cost * Probability
EV(porch) = (0.9 * Probability of rain + 0.2 * Probability of rain * Party ruined + 0.9 * Probability of rain * Party saved) + (0.5 * Probability of rain + 0.7 * Probability of rain * Party ruined + 0.5 * Probability of rain * Party saved)
EV(porch) = (0.9 + 0.5) * Probability of rain + (0.2 + 0.7 - 0.9 - 0.5) * Probability of rain * Party ruined + (0.9 + 0.5 - 0.9 - 0.5) * Probability of rain * Party saved
EV(porch) = 1.4 * Probability of rain + (-0.4) * Probability of rain * Party ruined + (0) * Probability of rain * Party saved
```

You can then compare the expected values of each option and choose the one that maximizes your utility.

↑ comment by moridinamael · 2023-05-17T00:27:46.717Z · LW(p) · GW(p)

I probably should have listened to the initial feedback on this post along the lines that it wasn't entirely clear what I actually meant by "magic" and was possibly more confusing than illuminating, but, oh well. I think that GPT-4 is magic in the same way that the human decision-making process is magic: both processes are opaque, we don't really understand how they work at a granular level, and we can't replicate them except in the most narrow circumstances.

One weakness of GPT-4 is it can't really explain why it made the choices it did. It can give plausible reasons why those choices were made, but it doesn't have the kind of insight into its motives that we do.

↑ comment by pjeby · 2023-05-18T00:33:25.572Z · LW(p) · GW(p)

> it doesn't have the kind of insight into its motives that we do

Wait, human beings have insight into their own motives that's better than GPTs have into theirs? When was the update released, and will it run on my brain? ;-)

Joking aside, though, I'd say the average person's insight into their own motives is most of the time not much better than that of a GPT, because it's usually generated in the same way: i.e. making up plausible stories.

↑ comment by Drewdrop · 2023-05-18T11:00:32.845Z · LW(p) · GW(p)

I guess it is ironic, but there are important senses of magic that I read into the piece which are not disambiguated by that.

A black box can mean arbitrary code that you are not allowed to know. Let's call this more tame style "formulaic". A black box can also mean a part you do not know what it does. Let's call this style "mysterious".

Incompleteness and embeddedness style argumentation points in a direction where an agent can only partially have a formulaic understanding of itself. Things built from "tame things up" can be completely non-mysterious. But what we often do is find ourselves with the capacity to make decisions and take actions, and then reflect on what that is all about.

I think there was some famous physicist who opined that the human brain is material but non-algorithmic, and that the lurking place of the weirdness would supposedly be in microtubules.

It is easy to see that math is very effective for the formulaic part. But do you need to tackle any non-formulaic parts of the process, and if so, how? Any algorithm specification is only going to give you a formulaic handle. Thus where we cannot speak we must be silent.

In recursive relevance realization lingo, you have a salience landscape, you do not calculate one. How come you initially come to feel some affordance as possible in the first place? Present yet ineffable elements are involved, thus the appreciation of magic.

comment by Review Bot · 2024-02-20T09:09:58.927Z · LW(p) · GW(p)

The LessWrong Review runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Iknownothing · 2023-07-17T11:10:23.643Z · LW(p) · GW(p)

On the porch/outside/indoors thing: maybe that's not a great example, because having the numbers there seems to add nothing of value to me, other than maybe clarifying to yourself how you feel about certain ideas/outcomes, but that's something that anyone with decent thinking does anyway.

↑ comment by moridinamael · 2023-07-17T14:12:44.264Z · LW(p) · GW(p)

The Party Problem is a classic example taught as an introductory case in decision theory classes; that was the main reason why I chose it.