Forecasting Thread: Existential Risk

post by Amandango · 2020-09-22T03:44:28.622Z · LW · GW · 13 comments

This is a question post.

Contents

- How to participate

- How to add an image to your comment

- Questions to consider as you're making your prediction

- Comparisons and aggregations

- Answers

- 11 Owain_Evans

- 10 matthew.vandermerwe

- 8 steven0461

- 6 elifland

- 6 Daniel Kokotajlo

- 3 VermillionStuka

- 13 comments

This is a thread for displaying your probabilities of an existential catastrophe that causes extinction or the destruction of humanity’s long-term potential.

Every answer to this post should be a forecast showing your probability of an existential catastrophe happening at any given time.

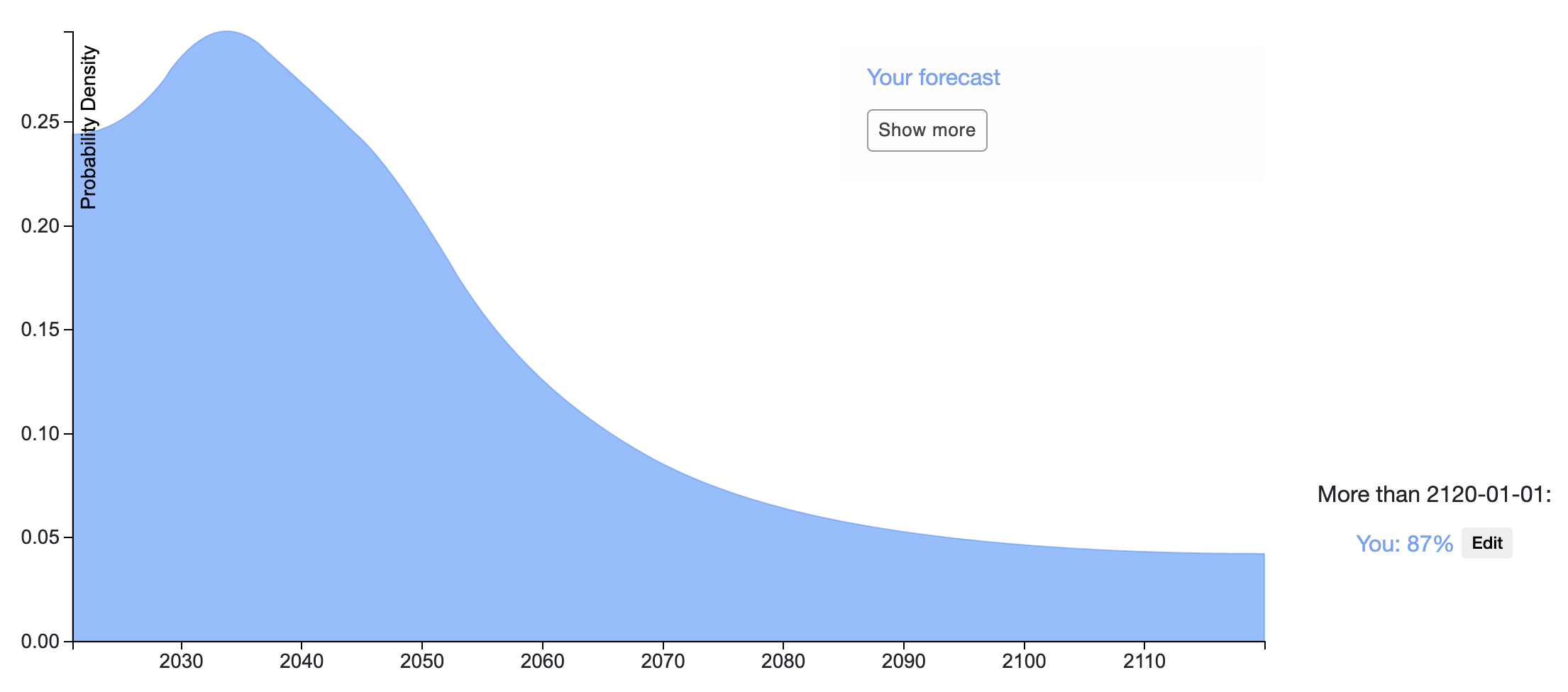

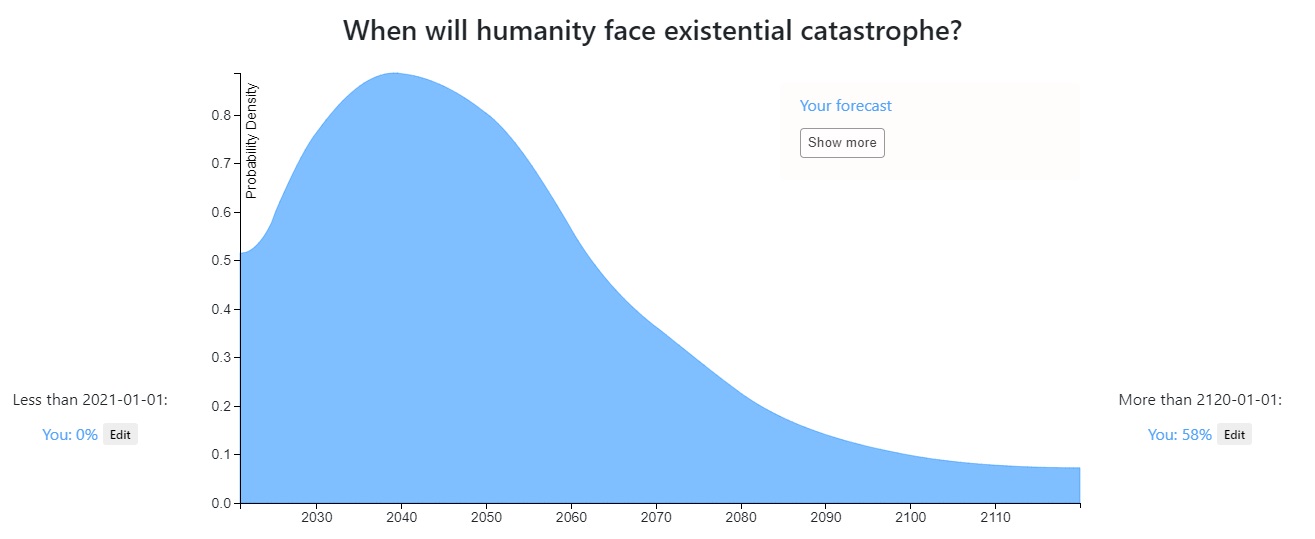

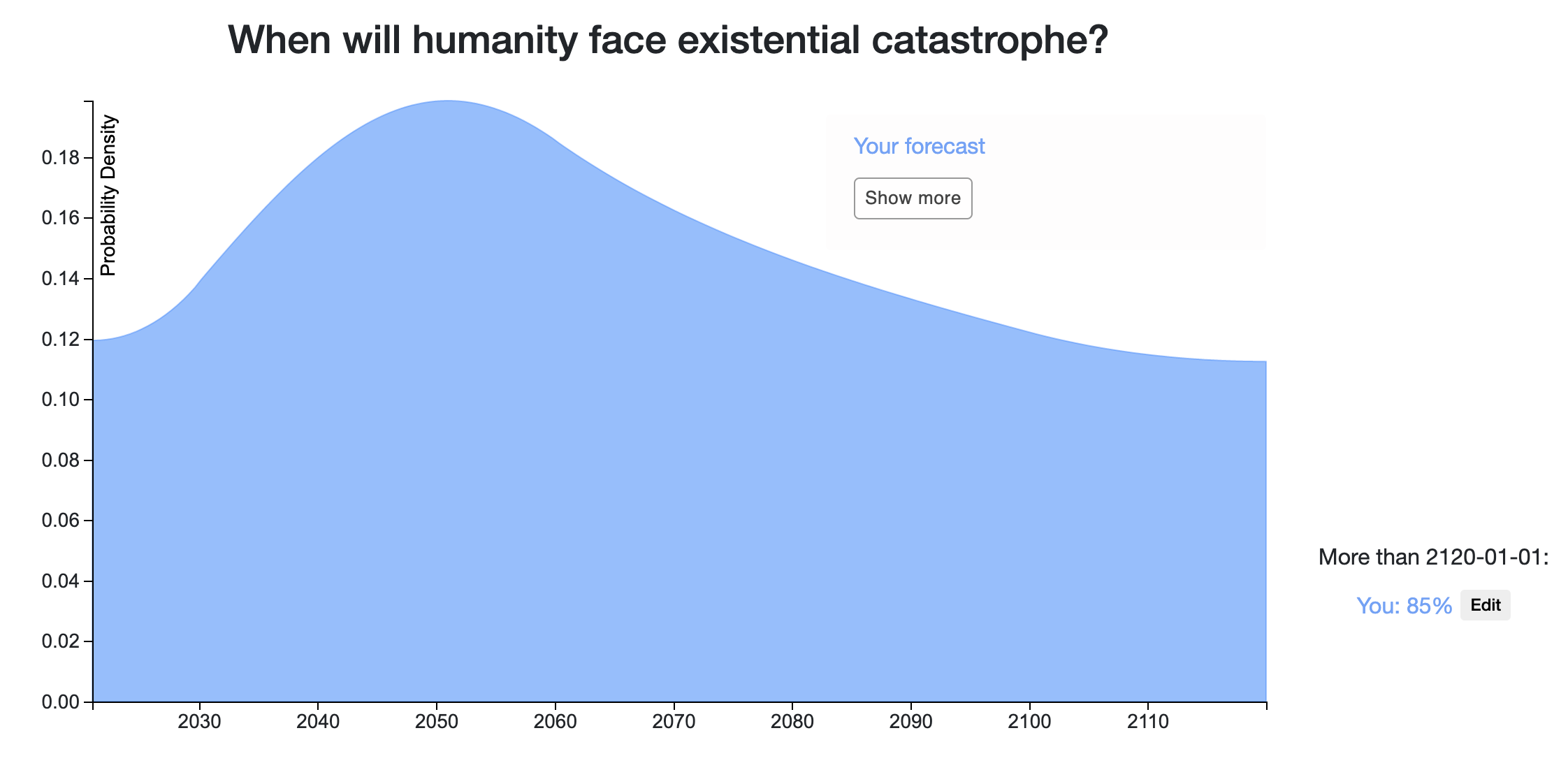

For example, here is Michael Aird’s timeline:

The goal of this thread is to create a set of comparable, standardized x-risk predictions, and to facilitate discussion on the reasoning and assumptions behind those predictions. The thread isn’t about setting predictions in stone – you can come back and update at any point!

How to participate

- Go to this page

- Create your distribution

- Specify an interval using the Min and Max bin, and put the probability you assign to that interval in the probability bin.

- You can specify a cumulative probability by leaving the Min box blank and entering the cumulative value in the Max box.

- To put probability on never, assign probability above January 1, 2120 using the edit button to the right of the graph. Specify your probability for never in the notes, to distinguish this from putting probability on existential catastrophe occurring after 2120.

- Click 'Save snapshot' to save your distribution to a static URL

- A timestamp will appear below the 'Save snapshot' button. This links to the URL of your snapshot.

- Make sure to copy it before refreshing the page, otherwise it will disappear.

- Click ‘Log in’ to automatically show your snapshot on the Elicit question page

- You don’t have to log in, but if you do, Elicit will:

- Store your snapshot in your account history so you can easily access it.

- Automatically add your most recent snapshot to the x-risk question page under ‘Show more’. Other users will be able to import your most recent snapshot from the dropdown, shown below.

- We’ll set a default name that your snapshot will be shown under – if you want to change it, you can do so on your profile page.

- If you’re logged in, your snapshots for this question will be publicly viewable.

- Copy the snapshot timestamp link and paste it into your LessWrong comment

- You can also add a screenshot of your distribution in your comment using the instructions below.

Here's an example of how to make your distribution:

How to add an image to your comment

- Take a screenshot of your distribution

- Then do one of two things:

- If it worked, you will see the image in the comment before hitting submit.

If you have any bugs or technical issues, reply to Ben from the LW team or Amanda (me) from the Ought team in the comment section, or email me at amanda@ought.org.

Questions to consider as you're making your prediction

- What definitions are you using? It’s helpful to specify them.

- What evidence is driving your prediction?

- What are the main assumptions that other people might disagree with?

- What evidence would cause you to update?

- How is the probability mass allocated amongst x-risk scenarios?

- Would you bet on these probabilities?

Comparisons and aggregations

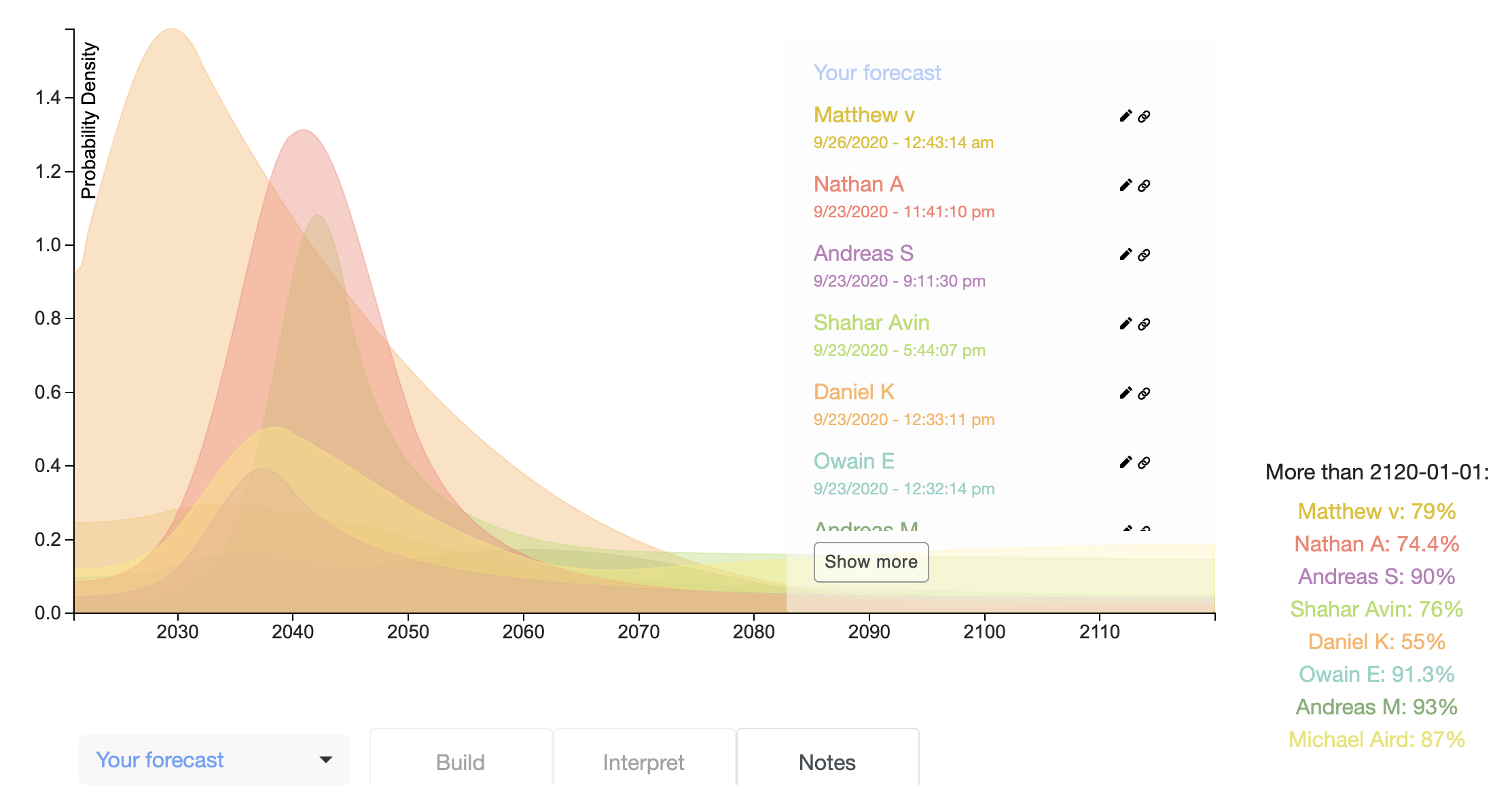

Here's a comparison of the 8 predictions made so far (last updated 9/26/20).

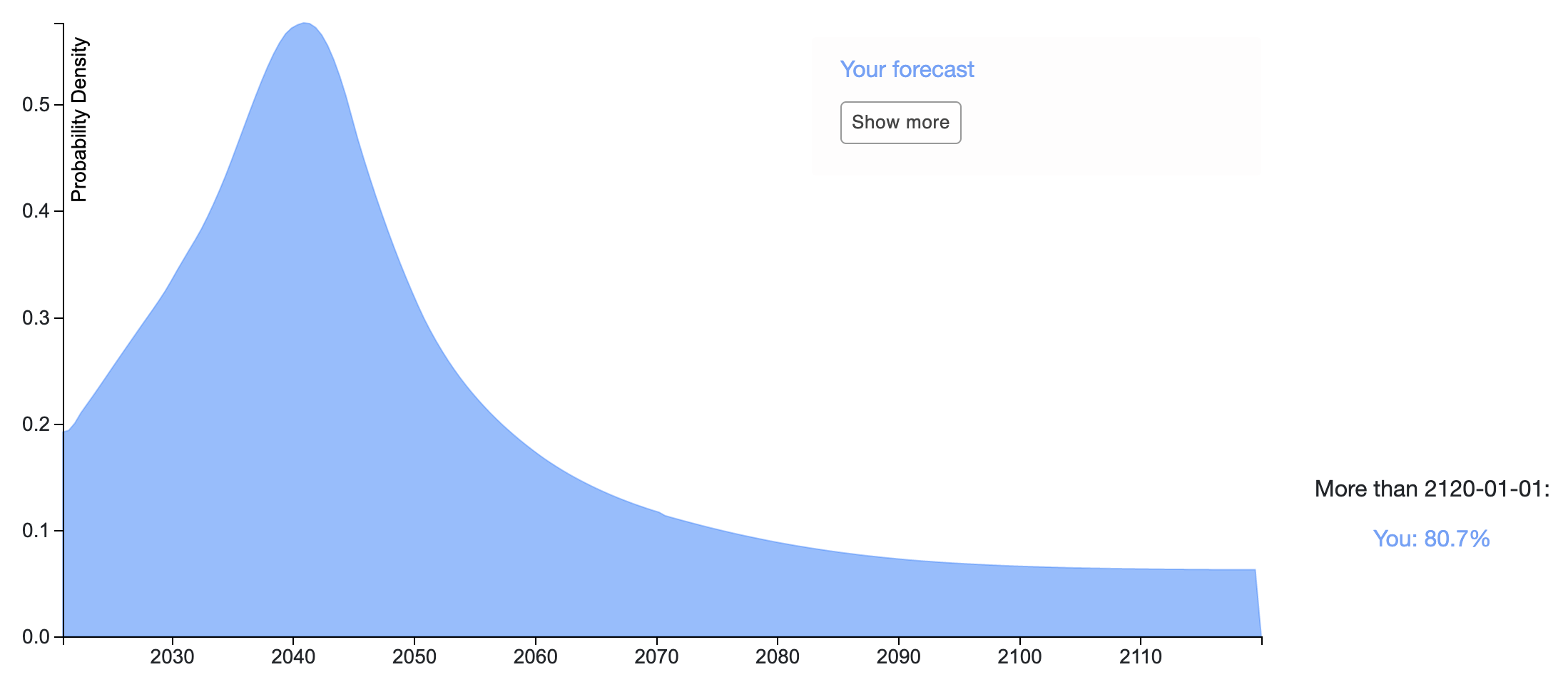

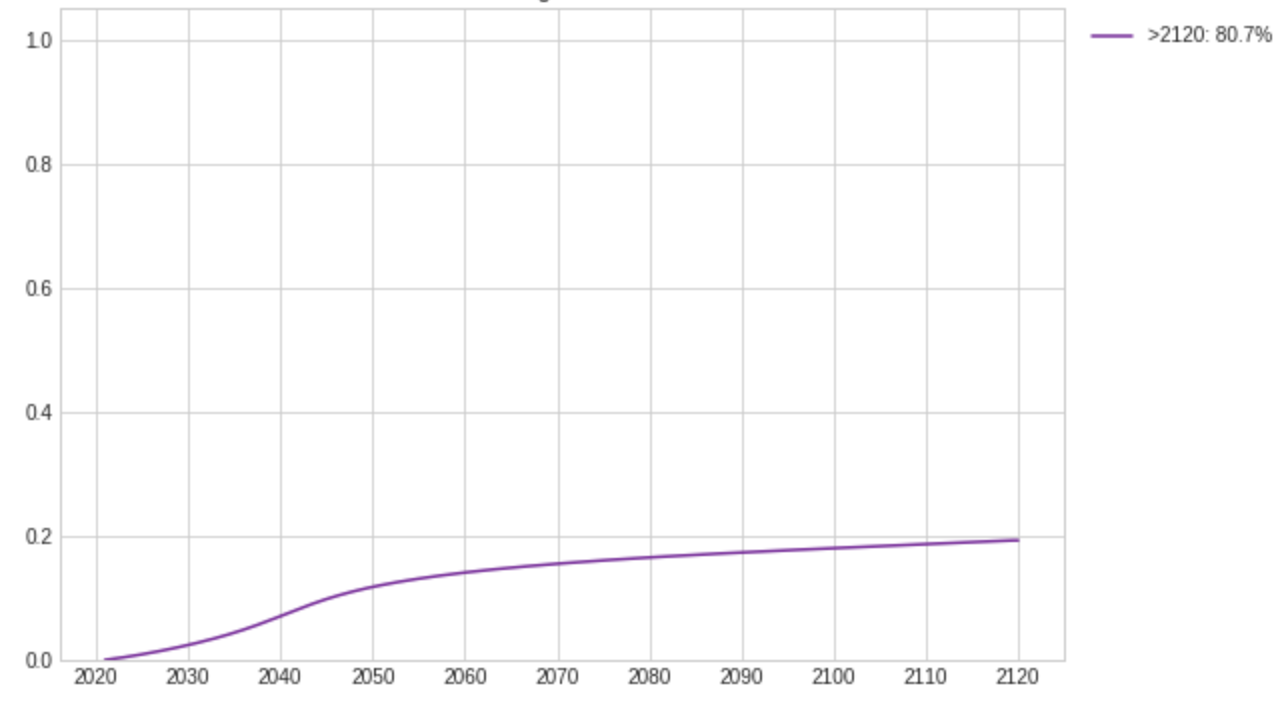

Here's a distribution averaging all the predictions (last updated 9/26/20). The averaged distribution puts 19.3% probability before 2120 and 80.7% after 2120. The year within 2021-2120 with the greatest risk is 2040.
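As a rough illustration of what "averaging all the predictions" can mean, here is a minimal sketch that takes an equal-weight average of several forecasts expressed over the same bins. The bin edges, forecaster names, and probabilities are hypothetical placeholders, not the thread's data, and Elicit's own aggregation may work differently.

```python
# Hypothetical forecasts: one probability per bin, with the last bin
# standing for "after 2120 or never". Illustrative numbers only.
BINS = ["2021-2040", "2041-2060", "2061-2080", "2081-2120", "after 2120"]

forecasts = {
    "forecaster_a": [0.05, 0.10, 0.05, 0.05, 0.75],
    "forecaster_b": [0.02, 0.04, 0.03, 0.03, 0.88],
    "forecaster_c": [0.10, 0.08, 0.04, 0.03, 0.75],
}


def average_forecasts(forecasts):
    """Return the equal-weight average of the bin probabilities."""
    rows = list(forecasts.values())
    n_bins = len(rows[0])
    for row in rows:
        if len(row) != n_bins:
            raise ValueError("all forecasts must use the same bins")
        if abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("each forecast must sum to 1")
    return [sum(row[i] for row in rows) / len(rows) for i in range(n_bins)]


averaged = average_forecasts(forecasts)
p_before_2120 = sum(averaged[:-1])  # everything except the final bin

for name, p in zip(BINS, averaged):
    print(f"{name}: {p:.3f}")
print(f"before 2120: {p_before_2120:.3f}")
```

An equal-weight average is only one choice; weighting forecasters differently would change the result.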

Here's a CDF of the averaged distribution:

Answers

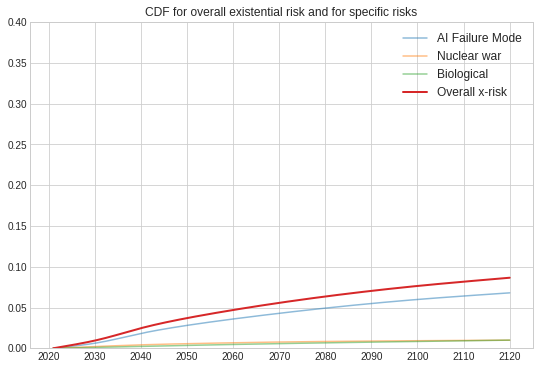

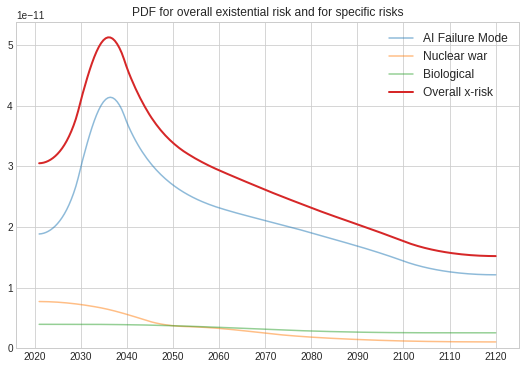

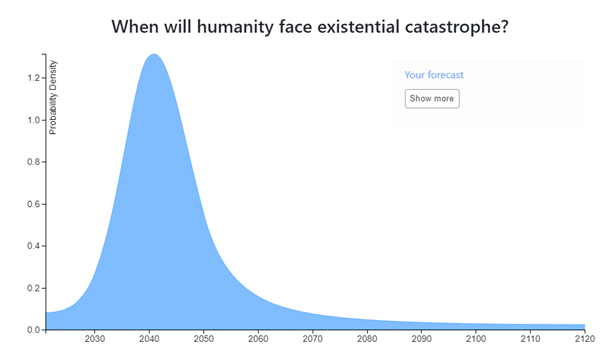

I've made a distribution based on the Metaculus community distributions:

(I used this Colab notebook for generating the plots from Elicit distributions over specific risks. My Elicit snapshot is here).

In 2019, Metaculus posted the results of a forecasting series on catastrophic risk (>95% of humans die) by 2100. The overall risk was 9.2% for the community forecast (with 7.3% for AI risk). To convert this to a forecast for existential risk (100% dead), I assumed 6% risk from AI, 1% from nuclear war, and 0.4% from biological risk. To get timelines, I used Metaculus forecasts for when the AI catastrophe occurs and for when great power war happens (as a rough proxy for nuclear war). I put my own uninformative distribution on biological risk.

This shouldn't be taken as the "Metaculus" forecast, as I've made various extrapolations. Moreover, Metaculus has a separate question about x-risk, where the current forecast is 2% by 2100. This seems to me hard to reconcile with the 7% chance of AI killing >95% of people by 2100, and so I've used the latter as my source.

Technical note: I normalized the timeline pdfs based on the Metaculus binary probabilities in this table, and then treated them as independent sources of x-risk using the Colab. This inflates the overall x-risk slightly. However, this could be fixed by re-scaling the cdfs.
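For readers who want to see what "treating them as independent sources" means for the headline numbers, here is a minimal sketch using only the three by-2100 figures stated above (6%, 1%, 0.4%). It ignores the timelines entirely and is not the Colab's code; the function name is hypothetical.

```python
# By-2100 extinction probabilities assumed in the answer above.
risks = {"AI": 0.06, "nuclear war": 0.01, "biological": 0.004}


def combine_independent(probabilities):
    """P(at least one source causes catastrophe), assuming independence."""
    survival = 1.0
    for p in probabilities:
        survival *= 1.0 - p  # chance this source does NOT cause catastrophe
    return 1.0 - survival


total = combine_independent(risks.values())
print(f"combined risk by 2100: {total:.2%}")
```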

↑ comment by MichaelA · 2020-09-23T20:16:34.680Z · LW(p) · GW(p)

The overall risk was 9.2% for the community forecast (with 7.3% for AI risk). To convert this to a forecast for existential risk (100% dead), I assumed 6% risk from AI, 1% from nuclear war, and 0.4% from biological risk

I think this implies you think:

- AI is ~4 or 5 times (6% vs 1.3%) as likely to kill 100% of people as to kill between 95 and 100% of people

- Everything other than AI is roughly equally likely (1.5% vs 1.4%) to kill 100% of people as to kill between 95% and 100% of people

Does that sound right to you? And if so, what was your reasoning?

I ask out of curiosity, not because I disagree. I don't have a strong view here, except perhaps that AI is the risk with the highest ratio of "chance it causes outright extinction" to "chance it causes major carnage" (and this seems to align with your views).

↑ comment by Owain_Evans · 2020-09-24T10:17:26.581Z · LW(p) · GW(p)

The Metaculus community forecast has chance of >95% dead (7.5%) close to chance of >10% dead (9.7%) for AI. Based on this and my own intuition about how AI risks "scale", I extrapolated to 6% for 100% dead. For biological and nuclear war, there's a much bigger drop off from >10% to >95% from the community. It's hard to say what to infer from this about the 100% case. There are good arguments that 100% is unlikely from both, but some of those arguments would also cut against >95%. I didn't do a careful examination and so take all these numbers with a grain of salt.

↑ comment by MichaelA · 2020-09-23T20:10:18.842Z · LW(p) · GW(p)

Very interesting, thanks for sharing! This seems like a nice example of combining various existing predictions to answer a new question.

a forecast for existential risk (100% dead)

It seems worth highlighting that extinction risk (risk of 100% dead) is a (big) subset [EA · GW] of existential risk (risk of permanent and drastic destruction of humanity's potential), rather than those two terms being synonymous. If your forecast was for extinction risk only, then the total existential risk should presumably be at least slightly higher, due to risks of unrecoverable collapse or unrecoverable dystopia.

(I think it's totally ok and very useful to "just" forecast extinction risk. I just think it's also good to be clear about what one's forecast is of.)

↑ comment by Owain_Evans · 2020-09-24T10:06:37.224Z · LW(p) · GW(p)

Good points. Unfortunately it seems even harder to infer "destruction of potential" from the Metaculus forecasts. It seems plausible that AI could cause destruction of potential without any deaths at all, and so this wouldn't be covered by the Metaculus series.

↑ comment by MichaelA · 2020-09-25T06:35:34.107Z · LW(p) · GW(p)

Yeah, totally agreed.

I also think it's easier to forecast extinction in general, partly because it's a much clearer threshold, whereas there are some scenarios that some people might count as an "existential catastrophe" and others might not. (E.g., Bostrom's "plateauing — progress flattens out at a level perhaps somewhat higher than the present level but far below technological maturity".)

Big thanks to Amanda, Owain, and others at Ought for their work on this!

My overall forecast is pretty low confidence — particularly with respect to the time parameter.

Snapshot is here: https://elicit.ought.org/builder/uIF9O5fIp

(Please ignore any other snapshots in my name, which were submitted in error)

My calculations are in this spreadsheet

For my prediction (which I forgot to save as a linkable snapshot before refreshing, oops) roughly what I did was take my distribution for AGI timing [LW(p) · GW(p)] (which ended up quite close to the thread average), add an uncertain but probably short delay for a major x-risk factor (probably superintelligence) to appear as a result, weight it by the probability that it turns out badly instead of well (averaging to about 50% because of what seems like a wide range of opinions among reasonable well-informed people, but decreasing over time to represent an increasing chance that we'll know what we're doing), and assume that non-AI risks are pretty unlikely to be existential and don't affect the final picture very much. To an extent, AGI can stand in for highly advanced technology in general.
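The procedure described above can be sketched roughly as follows. Every number here is a hypothetical placeholder (the AGI timing distribution, the delay distribution, and the schedule for the probability of a bad outcome are not the author's actual inputs), and applying the bad-outcome probability at the date the risk factor appears is an assumption.

```python
# Per-decade probability that AGI arrives (hypothetical; the remaining
# mass is "later than the horizon or never").
decades = [2020, 2030, 2040, 2050, 2060, 2070, 2080, 2090, 2100, 2110]
agi_pmf = [0.05, 0.20, 0.15, 0.10, 0.08, 0.06, 0.05, 0.04, 0.03, 0.02]

# Delay between AGI and the major x-risk factor, in decades (hypothetical):
# mostly negligible on this scale, occasionally one decade.
delay_pmf = [0.8, 0.2]

# Probability that things turn out badly, decreasing over time (hypothetical).
p_bad = [0.60, 0.58, 0.55, 0.52, 0.50, 0.48, 0.45, 0.43, 0.42, 0.40]


def shift_by_delay(pmf, delay_pmf):
    """Convolve the arrival pmf with the delay pmf; mass pushed past the
    horizon is dropped (it belongs to the 'after the horizon' bucket)."""
    out = [0.0] * len(pmf)
    for i, p in enumerate(pmf):
        for k, q in enumerate(delay_pmf):
            if i + k < len(out):
                out[i + k] += p * q
    return out


risk_factor_pmf = shift_by_delay(agi_pmf, delay_pmf)
catastrophe_pmf = [a * b for a, b in zip(risk_factor_pmf, p_bad)]
total_risk = sum(catastrophe_pmf)

for decade, p in zip(decades, catastrophe_pmf):
    print(f"{decade}s: {p:.3f}")
print(f"total within horizon: {total_risk:.3f}")
```

Non-AI risks are left out, matching the assumption above that they do not change the picture much.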

If I start with a prior where the 2030s and the 2090s are equally likely, it feels kind of wrong to say I have the 7-to-1 evidence for the former that I'd need for this distribution. On the other hand, if I made the same argument for the 2190s and the 2290s, I'd quickly end up with an unreasonable distribution. So I don't know.

↑ comment by MichaelA · 2020-09-23T12:32:32.325Z · LW(p) · GW(p)

Interesting, thanks for sharing.

an uncertain but probably short delay for a major x-risk factor (probably superintelligence) to appear as a result

I had a similar thought, though ultimately was too lazy to try to actually represent it. I'd be interested to hear what size of delay you used, and what your reasoning for that was.

averaging to about 50% because of what seems like a wide range of opinions among reasonable well-informed people

Was your main input into this parameter your perceptions of what other people would believe about this parameter? If so, I'd be interested to hear whose beliefs you perceive yourself to be deferring to here. (If not, I might not want to engage in that discussion, to avoid seeming to try to pull an independent belief towards average beliefs of other community members, which would seem counterproductive in a thread like this.)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-23T13:45:21.810Z · LW(p) · GW(p)

IMO the point where it's too late for us to influence the future will come shortly after human-level AGI, partly because superintelligence might happen shortly after HLAGI and partly because even if it doesn't we might lose control of the future anyway -- for world takeover, a godlike advantage is not necessary. [LW · GW]

↑ comment by steven0461 · 2020-09-23T17:40:26.470Z · LW(p) · GW(p)

I'd be interested to hear what size of delay you used, and what your reasoning for that was.

I didn't think very hard about it and just eyeballed the graph. Probably a majority of "negligible on this scale" and a minority of "years or (less likely) decades" if we've defined AGI too loosely and the first AGI isn't a huge deal, or things go slowly for some other reason.

Was your main input into this parameter your perceptions of what other people would believe about this parameter?

Yes, but only because those other people seem to make reasonable arguments, so that's kind of like believing it because of the arguments instead of the people. Some vague model of the world is probably also involved, like "avoiding AI x-risk seems like a really hard problem but it's probably doable with enough effort and increasingly many people are taking it very seriously".

If so, I'd be interested to hear whose beliefs you perceive yourself to be deferring to here.

MIRI people and Wei Dai for pessimism (though I'm not sure it's their view that it's worse than 50/50), Paul Christiano and other researchers for optimism.

↑ comment by MichaelA · 2020-09-23T20:04:02.427Z · LW(p) · GW(p)

Thanks for those responses :)

MIRI people and Wei Dai for pessimism (though I'm not sure it's their view that it's worse than 50/50), Paul Christiano and other researchers for optimism.

It does seem odd to me that, if you aimed to do something like average over these people's views (or maybe taking a weighted average, weighting based on the perceived reasonableness of their arguments), you'd end up with a 50% credence on existential catastrophe from AI. (Although now I notice you actually just said "weight it by the probability that it turns out badly instead of well"; I'm assuming by that you mean "the probability that it results in existential catastrophe", but feel free to correct me if not.)

One MIRI person (Buck Shlegeris) has indicated they think there's a 50% chance of that. One other MIRI-adjacent person gives estimates for similar outcomes in the range of 33-50%. I've also got general pessimistic vibes from other MIRI people's writings, but I'm not aware of any other quantitative estimates from them or from Wei Dai. So my point estimate for what MIRI people think would be around 40-50%, and not well above 50%.

And I think MIRI is widely perceived as unusually pessimistic (among AI and x-risk researchers; not necessarily among LessWrong users). And people like Paul Christiano give something more like a 10% chance of existential catastrophe from AI. (Precisely what he was estimating was a little different, but similar.)

So averaging across these views would seem to give us something closer to 30%.
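The arithmetic behind that figure can be sketched as below, assuming the midpoint of the 40-50% range for MIRI and equal weights on the two camps. Both choices are assumptions for illustration; the comment itself does not specify weights.

```python
# Point estimates taken from the comment above, as probabilities.
miri_low, miri_high = 0.40, 0.50
christiano = 0.10

miri_mid = (miri_low + miri_high) / 2               # midpoint of the MIRI range
equal_weight_average = (miri_mid + christiano) / 2  # equal weights (assumed)

print(f"{equal_weight_average:.1%}")
```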

Personally, I'd also probably include various other people who seem thoughtful on this and are actively doing AI or x-risk research - e.g., Rohin Shah, Toby Ord - and these people's estimates seem to usually be closer to Paul than to MIRI (see also [EA · GW]). But arguing for doing that would be arguing for a different reasoning process, and I'm very happy with you using your independent judgement to decide who to defer to; I intend this comment to instead just express confusion about how your stated process reached your stated output.

(I'm getting these estimates from my database of x-risk estimates [EA · GW]. I'm also being slightly vague because I'm still feeling a pull to avoid explicitly mentioning other views and thereby anchoring this thread.)

(I should also note that I'm not at all saying to not worry about AI - something like a 10% risk is still a really big deal!)

↑ comment by steven0461 · 2020-10-07T23:58:42.376Z · LW(p) · GW(p)

Yes, maybe I should have used 40% instead of 50%. I've seen Paul Christiano say 10-20% elsewhere. Shah and Ord are part of whom I meant by "other researchers". I'm not sure which of these estimates are conditional on superintelligence being invented. To the extent that they're not, and to the extent that people think superintelligence may not be invented, that means they understate the conditional probability that I'm using here. I think lowish estimates of disaster risks might be more visible than high estimates because of something like social desirability, but who knows.

↑ comment by MichaelA · 2020-10-08T06:56:26.788Z · LW(p) · GW(p)

I'm not sure which of these estimates are conditional on superintelligence being invented. To the extent that they're not, and to the extent that people think superintelligence may not be invented, that means they understate the conditional probability that I'm using here.

Good point. I'd overlooked that.

I think lowish estimates of disaster risks might be more visible than high estimates because of something like social desirability, but who knows.

(I think it's good to be cautious about bias arguments, so take the following with a grain of salt, and note that I'm not saying any of these biases are necessarily the main factor driving estimates. I raise the following points only because the possibility of bias has already been mentioned.)

I think social desirability bias could easily push the opposite way as well, especially if we're including non-academics who dedicate their jobs or much of their time to x-risks (which I think covers the people you're considering, except that Rohin is sort-of in academia). I'd guess the main people listening to these people's x-risk estimates are other people who think x-risks are a big deal, and higher x-risk estimates would tend to make such people feel more validated in their overall interests and beliefs.

I can see how something like a bias towards saying things that people take seriously and that don't seem crazy (which is perhaps a form of social desirability bias) could also push estimates down. I'd guess that that effect is stronger the closer one gets to academia or policy. I'm not sure what the net effect of the social desirability bias type stuff would be on people like MIRI, Paul, and Rohin.

I'd guess that the stronger bias would be selection effects in who even makes these estimates. I'd guess that people who work on x-risks have higher x-risk estimates than people who don't and who have thought about odds of x-risk somewhat explicitly. (I think a lot of people just wouldn't have even a vague guess in mind, and could swing from casually saying extinction is likely in the next few decades to seeing that idea as crazy depending on when you ask them.)

Quantitative x-risk estimates tend to come from the first group, rather than the latter, because the first group cares enough to bother to estimate this. And we'd be less likely to pay attention to estimates from the latter group anyway, if they existed, because they don't seem like experts - they haven't spent much time thinking about the issue. But they haven't spent much time thinking about it because they don't think the risk is high, so we're effectively selecting who to listen to the estimates of based in part on what their estimates would be.

I'd still do similar myself - I'd pay attention to the x-risk "experts" rather than other people. And I don't think we need to massively adjust our own estimates in light of this. But this does seem like a reason to expect the estimates are biased upwards, compared to the estimates we'd get from a similarly intelligent and well-informed group of people who haven't been pre-selected for a predisposition to think the risk is somewhat high.

↑ comment by steven0461 · 2020-10-08T17:06:56.287Z · LW(p) · GW(p)

Mostly I only start paying attention to people's opinions on these things once they've demonstrated that they can reason seriously about weird futures, and I don't think I know of any person who's demonstrated this who thinks risk is under, say, 10%. (edit: though I wonder if Robin Hanson counts)

↑ comment by MichaelA · 2020-10-09T06:57:11.159Z · LW(p) · GW(p)

I don't think I know of any person who's demonstrated this who thinks risk is under, say, 10%

If you mean risk of extinction or existential catastrophe from AI at the time AI is developed, it seems really hard to say, as I think that that's been estimated even less often than other aspects of AI risk (e.g. risk this century) or x-risk as a whole.

I think the only people (maybe excluding commenters who don't work on this professionally) who've clearly given a greater than 10% estimate for this are:

- Buck Shlegeris (50%)

- Stuart Armstrong (33-50% chance humanity doesn't survive AI)

- Toby Ord (10% existential risk from AI this century, but 20% for when the AI transition happens)

Meanwhile, people who I think have effectively given <10% estimates for that (judging from estimates that weren't conditioning on when AI was developed; all from my database):

- Very likely MacAskill (well below 10% for extinction as a whole in the 21st century)

- Very likely Ben Garfinkel (0-1% x-catastrophe from AI this century)

- Probably the median FHI 2008 survey respondent (5% for AI extinction in the 21st century)

- Probably Pamlin & Armstrong in a report (0-10% for unrecoverable collapse extinction from AI this century)

- But then Armstrong separately gave a higher estimate

- And I haven't actually read the Pamlin & Armstrong report

- Maybe Rohin Shah (some estimates in a comment thread)

(Maybe Hanson would also give <10%, but I haven't seen explicit estimates from him, and his reduced focus on and "doominess" from AI may be because he thinks timelines are longer and other things may happen first.)

I'd personally consider all the people I've listed to have demonstrated at least a fairly good willingness and ability to reason seriously about the future, though there's perhaps room for reasonable disagreement here. (With the caveat that I don't know Pamlin and don't know precisely who was in the FHI survey.)

↑ comment by MichaelA · 2020-10-09T06:35:02.114Z · LW(p) · GW(p)

Mostly I only start paying attention to people's opinions on these things once they've demonstrated that they can reason seriously about weird futures

[tl;dr This is an understandable thing to do, but does seem to result in biasing one's sample towards higher x-risk estimates]

I can see the appeal of that principle. I partly apply such a principle myself (though in the form of giving less weight to some opinions, not ruling them out).

But what if it turns out the future won't be weird in the ways you're thinking of? Or what if it turns out that, even if it will be weird in those ways, influencing it is too hard, or just isn't very urgent (i.e., the "hinge of history" is far from now), or is already too likely to turn out well "by default" (perhaps because future actors will also have mostly good intentions and will be more informed).

Under such conditions, it might be that the smartest people with the best judgement won't demonstrate that they can reason seriously about weird futures, even if they hypothetically could, because it's just not worth their time to do so. In the same way, I haven't demonstrated my ability to reason seriously about tax policy, because I think reasoning seriously about the long-term future is a better use of my time. Someone who starts off believing tax policy is an overwhelmingly big deal could then say "Well, Michael thinks the long-term future is what we should focus on instead, but why should I trust Michael's view on that when he hasn't demonstrated he can reason seriously about the importance and consequences of tax policy?"

(I think I'm being inspired here by Trammell's interesting post "But Have They Engaged With The Arguments?" There's some LessWrong discussion - which I haven't read - of an early version here [LW · GW].)

I in fact do believe we should focus on long-term impacts, and am dedicating my career to doing so, as influencing the long-term future seems sufficiently likely to be tractable, urgent, and important. But I think there are reasonable arguments against each of those claims, and I wouldn't be very surprised if they turned out to all be wrong. (But I think currently we've only had a very small part of humanity working intensely and strategically on this topic for just ~15 years, so it would seem too early to assume there's nothing we can usefully do here.)

And if so, it would be better to try to improve the short-term future, which further future people can't help us with, and then it would make sense for the smart people with good judgement to not demonstrate their ability to think seriously about the long-term future. So under such conditions, the people left in the sample you pay attention to aren't the smartest people with the best judgement, and are skewed towards unreasonably high estimates of the tractability, urgency, and/or importance of influencing the long-term future.

To emphasise: I really do want way more work on existential risks and longtermism more broadly! And I do think that, when it comes to those topics, we should pay more attention to "experts" who've thought a lot about those topics than to other people (even if we shouldn't only pay attention to them). I just want us to be careful about things like echo chamber effects and biasing the sample of opinions we listen to.

Epistemic status: extremely uncertain

I created my Elicit forecast by:

- Slightly adjusting down the 1/6 estimate of existential risk during the next century made in The Precipice

- Making the shape of the distribution roughly give a little more weight to time periods when AGI is currently forecasted to be more likely to come [LW(p) · GW(p)]

[I work for Ought.]

For my prediction, like those of others, I basically just went with my AGI timeline multiplied by 50% (representing my uncertainty about how dangerous AGI is; I feel like if I thought a lot more about it the number could go up to 90% or down to 10%) and then added a small background risk rate from everything else combined (nuclear war, bio stuff, etc.)
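To show how much the 50% figure matters, here is a minimal sketch of this recipe evaluated at 10%, 50%, and 90%. The AGI timeline and the background rate are hypothetical placeholders rather than the author's numbers, and adding the background rate directly is an approximation that ignores overlap between the two sources.

```python
# Hypothetical per-decade probability that AGI arrives.
agi_pmf = {2020: 0.10, 2030: 0.30, 2040: 0.20, 2050: 0.10, 2060: 0.05}

# Hypothetical small background risk per decade from everything else.
BACKGROUND_PER_DECADE = 0.002


def xrisk_by_decade(agi_pmf, p_catastrophe_given_agi, background):
    """Scale the AGI timeline by P(catastrophe | AGI), then add background risk."""
    return {
        decade: p * p_catastrophe_given_agi + background
        for decade, p in agi_pmf.items()
    }


# Total risk over the horizon for low, central, and high danger estimates.
totals = {
    p: sum(xrisk_by_decade(agi_pmf, p, BACKGROUND_PER_DECADE).values())
    for p in (0.10, 0.50, 0.90)
}

for p, total in totals.items():
    print(f"P(catastrophe | AGI) = {p:.0%}: total risk = {total:.1%}")
```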

I didn't spend long on this so my distribution probably isn't exactly reflective of my views, but it's mostly right.

Note that I'm using a definition of existential catastrophe where the date it happens is the date it becomes too late to stop it happening, not the date when the last human dies.

For some reason I can't drag-and-drop images into here; when I do it just opens up a new window.

↑ comment by MichaelA · 2020-09-23T12:46:13.938Z · LW(p) · GW(p)

(Just a heads up that the link leads back to this thread, rather than to your Elicit snapshot :) )

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-23T13:41:59.605Z · LW(p) · GW(p)

Oops, thanks!

Elicit prediction: https://elicit.ought.org/builder/0n64Yv2BE

Epistemic Status: High degree of uncertainty, thanks to AI timeline prediction and unknowns such as unforeseen technologies and power of highly developed AI.

My Existential Risk (ER) probability mass is almost entirely formed from the risk of unfriendly Artificial Super Intelligence (ASI) and so is heavily influenced by my predicted AI timelines. (I think AGI is most likely to occur around 2030 +-5 years, and will be followed within 0-4 years by ASI, with a singularity soon after that; see my AI timelines post: https://www.lesswrong.com/posts/hQysqfSEzciRazx8k/forecasting-thread-ai-timelines?commentId=zaWhEdteBG63nkQ3Z [LW(p) · GW(p)] ).

I do not think any conventional threat such as nuclear war, super pandemic or climate change is likely to be an ER, and super volcanoes or asteroid impacts are very unlikely. I think this century is unique and will constitute 99% of the bulk of ER, with the last <1% being from more unusual threats such as simulation being turned off, false vacuum collapse, or hostile alien ASI, as well as from unforeseen or unimagined threats.

I think the most likely decade for the creation of ASI will be the 30’s, with an 8% ER chance (From not being able to solve the control problem or coordinate to implement it even if solved).

Considering AI timeline uncertainty, as well as how long an ASI takes to acquire the techniques or technologies necessary to wipe out or lock out humanity, I estimate an 11% ER chance for the 40’s. Part of the reason this is higher than the 30’s ER estimate is to accommodate the possibility of a delayed treacherous turn.

Once past the 50’s I think we will be out of most of the danger (only 6% for the rest of the century), and potential remaining ER’s such as runaway nanotech or biotech will not be a very large risk as ASI would be in firm control of civilisation by then. Even then though some danger remains for the rest of the century from unforeseen black ball technologies, however interstellar civilisational spread (ASI high percent of speed of light probes) by early next century should have reduced nearly all threats to less than ERs.

So overall I think the 21st Century will pose a 25.6% chance of ER. See the Elicit post for the individual decade breakdowns.

Note: I made this prediction before looking at the Effective Altruism Database of Existential Risk Estimates.

↑ comment by MichaelA · 2020-09-23T12:44:46.723Z · LW(p) · GW(p)

(Minor & meta: I'd suggest people take screenshots which include the credence on "More than 2120-01-01" on the right, as I think that's a quite important part of one's prediction. But of course, readers can still find that part of your prediction by reading your comment or clicking the link - it's just not highlighted as immediately.)

↑ comment by MichaelA · 2020-09-23T12:37:53.599Z · LW(p) · GW(p)

I do not think any conventional threat such as nuclear war, super pandemic or climate change is likely to be an ER

Are you including risks from advanced biotechnology in that category? To me, it would seem odd to call that a "conventional threat"; that category sounds to me like it would refer to things we have a decent amount of understanding of and experience with. (Really this is more of a spectrum, and our understanding of and experience with risks from nuclear war and climate change is of course limited in key ways as well. But I'd say it's notably less limited than is the case with advanced biotech or advanced AI.)

with the last <1% being from more unusual threats such as simulation being turned off, false vacuum collapse, or hostile alien ASI. But also, for unforeseen or unimagined threats.

It appears to me that there are some important risks that have been foreseen and imagined which you're not accounting for. Let me know if you want me to say more; I hesitate merely because I'm wary of pulling independent views towards community views in a thread like this, not for infohazard [LW · GW] reasons (the things I have in mind are widely discussed and non-exotic).

Note: I made this prediction before looking at the Effective Altruism Database of Existential Risk Estimates.

I think it's cool that you made this explicit, to inform how and how much people update on your views if they've already updated on views in that database :)

↑ comment by Vermillion (VermillionStuka) · 2020-09-23T23:23:20.213Z · LW(p) · GW(p)

I'm not including advanced biotech in my conventional threat category; I really should have elaborated more on what I meant: Conventional risks are events that already have a background chance of happening (as of 2020 or so) and do not include future technologies.

I make the distinction because I think that we don’t have enough time left before ASI to develop such advanced tech ourselves, so an ASI would be overseeing their development and deployment, which I think reduces their threat massively (even if used by a rogue AI, I would say the ER was from the AI, not the tech). That time limit applies not just to tech development but also to runaway optimisation processes and societal forces (i.e., suboptimal value lock-in), as a friendly ASI should have enough power to bring them to heel.

My list of threats wasn’t all-inclusive; I paid lip service to some advanced tech and some of the more unusual scenarios, but generally I just thought that past ASI nearly nothing would pose a real threat, so I didn’t focus on it. I am going to read through the database of existential threats though; does it include what you were referring to? (“important risks that have been foreseen and imagined which you're not accounting for”).

Thanks for the feedback :)

Replies from: MichaelA↑ comment by MichaelA · 2020-09-24T06:41:47.906Z · LW(p) · GW(p)

Conventional risks are events that already have a background chance of happening (as of 2020 or so) and do not include future technologies.

Yeah, that aligns with how I'd interpret the term. I asked about advanced biotech because I noticed it was absent from your answer unless it was included in "super pandemic", so I was wondering whether you were counting it as a conventional risk (which seemed odd) or excluding it from your analysis (which also seems odd to me, personally, but at least now I understand your short-AI-timelines-based reasoning for that!).

I am going to read through the database of existential threats though; does it include what you were referring to?

Yeah, I think all the things I'd consider most important are in there. Or at least "most" - I'd have to think for longer in order to be sure about "all".

There are scenarios that I think aren't explicitly addressed in any estimates in that database, like things to do with whole-brain emulation or brain-computer interfaces, but these are arguably covered by other estimates. (I also don't have a strong view on how important WBE or BCI scenarios are.)

13 comments

Comments sorted by top scores.

comment by Owain_Evans · 2020-09-22T17:57:18.202Z · LW(p) · GW(p)

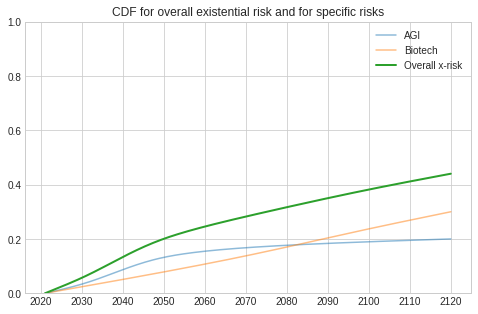

We made a Colab notebook that lets you forecast total x-risk as a combination of specific risks. For example, you construct pdfs for x-risk from AGI and biotech, and the notebook will calculate a pdf for overall risk. This pdf is ready to display as an answer. (Note: this example assumes AGI and biotech are the main risks and are statistically independent.)

The notebook will also display the cdf for each specific risk and for overall risk:

As a bonus, you can use this notebook to turn your Elicit pdf for overall x-risk into a cdf. Just paste your snapshot URL into the first URL box and run the cell below.
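For readers who want to see the arithmetic behind combining independent risks, here is a minimal sketch. It is not the notebook's actual code: the function names and the per-decade numbers are hypothetical, and it assumes both pdfs are given as lists of probabilities over the same time bins, with any probability on "never" simply left out of the lists.

```python
from itertools import accumulate


def pdf_to_cdf(pdf):
    """Running total of per-bin probabilities."""
    return list(accumulate(pdf))


def combine_independent(pdf_a, pdf_b):
    """Return (pdf, cdf) for the first catastrophe from either of two
    risks, assuming the two risks are statistically independent."""
    if len(pdf_a) != len(pdf_b):
        raise ValueError("both pdfs must use the same time bins")
    cdf_a, cdf_b = pdf_to_cdf(pdf_a), pdf_to_cdf(pdf_b)
    # Surviving to the end of bin i means surviving both risks, so the
    # survival probabilities multiply.
    cdf_total = [1 - (1 - a) * (1 - b) for a, b in zip(cdf_a, cdf_b)]
    # Differencing the combined cdf recovers the per-bin pdf.
    pdf_total = [c - p for c, p in zip(cdf_total, [0.0] + cdf_total[:-1])]
    return pdf_total, cdf_total


# Hypothetical per-decade probabilities, for illustration only.
agi = [0.02, 0.05, 0.05, 0.03]
biotech = [0.01, 0.01, 0.02, 0.02]

pdf_total, cdf_total = combine_independent(agi, biotech)
print([round(p, 4) for p in pdf_total])
print(round(cdf_total[-1], 4))
```

Note that the combined total is slightly less than the sum of the two individual totals, because a world that has already suffered one catastrophe cannot suffer the other.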

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-23T08:55:10.704Z · LW(p) · GW(p)

I'm having some technical difficulties I think. When I run the last thing that's supposed to generate my combined distribution, I get the error "NameError: name 'labels' is not defined"

Replies from: Owain_Evans↑ comment by Owain_Evans · 2020-09-23T10:10:10.187Z · LW(p) · GW(p)

You need to first run all the cells in "Setup Code" (e.g. by selecting "Runtime > Run before"). Then run the cell with the form input ("risk1label", "risk1url", etc), and then run the cell that plots your pdf/cdf. It sounds like you're running the last cell without having run the middle one.

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-23T11:54:45.929Z · LW(p) · GW(p)

Thanks!

comment by Rohin Shah (rohinmshah) · 2020-09-22T15:49:42.107Z · LW(p) · GW(p)

How do you tell at what particular point in time humanity's long-term potential has been destroyed?

For example, suppose that some existing leader uses an increasing number of AI-enabled surveillance techniques + life-extension technology to enable robust totalitarianism forever, and we stay stuck on Earth and never use the cosmic endowment. When did the existential catastrophe happen?

Similarly, when did the existential catastrophe happen in Part 1 of What Failure Looks Like [AF · GW]?

Replies from: daniel-kokotajlo, Benito, Angela Pretorius↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-22T21:16:05.779Z · LW(p) · GW(p)

IMO, the answer is: "At the point where it's too late for us longtermist EAs to do much of anything about it."

(EDIT: Where "much of anything" is relative to other periods between now and then.)

↑ comment by Rohin Shah (rohinmshah) · 2020-09-22T21:44:36.365Z · LW(p) · GW(p)

Yeah, I think that's a plausible answer, though note that it is still pretty vague and I wouldn't expect agreement on it even in hindsight. Think about how people disagree about the extent to which key historical events were predetermined. For example, I feel like there would be a lot of disagreement on "at what point was it too late for <smallish group of influential people> to prevent World War 1?"

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-09-23T07:45:10.076Z · LW(p) · GW(p)

I agree it's vague and controversial, but that's OK in this case because it's an important variable we probably use in our decisions anyway. We seek to maximize our impact, so we do some sort of search over possible plans, and a good heuristic is to constrain our search to plans that take effect before it is too late. (Of course, this gets things backwards in an important sense: whether or not it is too late depends on whether there are any viable plans, not the other way round. But I think it's still valid, because we can learn facts about the world that make it very likely that it's too late, saving us the effort of considering specific plans.)

↑ comment by Ben Pace (Benito) · 2020-09-22T22:24:31.543Z · LW(p) · GW(p)

Suppose there's a point where it's clearly possible that humanity explores the universe and flourishes. Suppose there's a point where it's clearly no longer possible that humanity explores the universe and flourishes. Assign 0% of existential risk at the first point, and 100% at the second point. Draw a straight line between.

Then make everything proportional to the likelihood of things going off the rails at that time period.
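One possible reading of this proposal, sketched in code: a straight-line cdf between the two points is the same as putting equal probability on every year in between, and "making everything proportional" then means reweighting those years by how likely things are to go off the rails in each one and renormalizing. The function name, the years, and the weights below are all hypothetical, and other readings of the proposal are possible.

```python
def spread_catastrophe(start_year, end_year, weights=None):
    """Spread 100% of the catastrophe over the years in [start_year, end_year).

    With no weights this is the straight-line version: the cdf rises
    linearly from 0 to 1, so every year gets the same probability.
    With weights, each year's share is proportional to its weight.
    """
    years = list(range(start_year, end_year))
    if weights is None:
        weights = [1.0] * len(years)
    if len(weights) != len(years):
        raise ValueError("need exactly one weight per year")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return {year: w / total for year, w in zip(years, weights)}


# Hypothetical window: flourishing is clearly possible at the start of 2030
# and clearly impossible by the start of 2035.
uniform = spread_catastrophe(2030, 2035)

# Hypothetical relative likelihoods of things going off the rails each year.
weighted = spread_catastrophe(2030, 2035, weights=[1, 1, 2, 4, 2])

print(uniform)
print(weighted)
```

This still leaves open how to pick the two endpoints, which is the hard part of the original question.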

↑ comment by Angela Pretorius · 2020-09-22T18:17:25.598Z · LW(p) · GW(p)

I agree. I mean, when would you say that the existential catastrophe happens in the following scenario?

Suppose that technological progress starts to slow down and, as a result, economic growth fails to keep pace with population growth. Living standards decline over the next several decades until the majority of the world's population is living in extreme poverty. For a few thousand years the world remains in a Malthusian trap. Then there is a period of rapid technological progress for a few hundred years which allows a significant portion of the population to escape poverty and achieve a comfortable standard of living. Then technological progress starts to slow down again. The whole cycle repeats many times until some fluke event causes human extinction.

comment by MichaelA · 2020-09-22T06:28:24.759Z · LW(p) · GW(p)

Here are a couple sources people might find useful for guiding how they try to break this question down and reason about it:

- An analysis and evaluation of methods currently used to quantify the likelihood of existential hazards by Beard et al. (Beard being a CSER researcher)

- Quantifying the Probability of Existential Catastrophe: A Reply to Beard et al. by Seth Baum of GCRI

↑ comment by MichaelA · 2020-09-23T07:37:27.758Z · LW(p) · GW(p)

I'll also hesitantly mention my database of existential risk estimates [EA · GW].

I hesitate because I suspect it's better if most people who are willing to just make a forecast here do so without having recently looked at the predictions in that database, so we get a larger collection of more independent views.

But I guess people can make their own decision about whether to look at the database, perhaps for cases where:

- People just feel too unsure where to start with forecasting this to bother trying, but if they saw other people's forecasts they'd be willing to come up with their own forecast that does more than just totally parroting the existing forecasts

- And it's necessary to do more than just parroting, as the existing forecasts are about % chance by a given date, not the % chance at each date over a period

- People could perhaps come up with clever ways to decide how much weight to give each forecast and how to translate them into an Elicit snapshot

- People make their own forecast, but then want to check the database and consider making tweaks before posting it here (ideally also showing here what their original, independent forecast was)
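As a rough illustration of translating a "% chance by a given date" estimate into a "% chance at each date" distribution, here is a minimal sketch. It assumes a constant annual hazard rate, which is a simplifying assumption of this example rather than anything the database or Elicit prescribes, and the 10%-within-100-years figure is hypothetical.

```python
def per_year_from_cumulative(p_by_deadline, n_years):
    """Split a cumulative probability over n_years into per-year
    probabilities, assuming the same hazard rate in every year."""
    if not 0 <= p_by_deadline < 1:
        raise ValueError("p_by_deadline must be in [0, 1)")
    # Solve 1 - (1 - hazard) ** n_years == p_by_deadline for the hazard.
    hazard = 1 - (1 - p_by_deadline) ** (1 / n_years)
    # Catastrophe in year k requires surviving the k earlier years first.
    return [(1 - hazard) ** k * hazard for k in range(n_years)]


# Hypothetical estimate: 10% chance of existential catastrophe within 100 years.
per_year = per_year_from_cumulative(0.10, 100)
print(round(per_year[0], 6), round(per_year[-1], 6))
print(round(sum(per_year), 6))
```

Under this assumption the per-year probability declines slowly over time, since later years are conditional on having survived the earlier ones. A forecaster who expects risk to be concentrated around particular developments would want a non-constant hazard instead.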

comment by MichaelA · 2020-09-22T06:21:11.402Z · LW(p) · GW(p)

Thanks for making this thread!

I should say that I'd give very little weight to both my forecast and my reasoning. Reasons for that include that:

- I'm not an experienced forecaster

- I don't have deep knowledge on relevant specifics (e.g., AI paradigms, state-of-the-art in biotech)

- I didn't spend a huge amount of time on my forecast, and used pretty quick-and-dirty methods

- I drew on existing forecasts to some extent (in particular, the LessWrong Elicit AI timelines thread [LW · GW] and Ord's x-risk estimates). So if you updated on those forecasts and then also updated on my forecast as if it was independent of them, you'd be double-counting some views and evidence

So I'm mostly just very excited to see other people's forecasts, and even more excited to see how they reason about and break down the question!