On (Not) Reading Papers

post by Jan (jan-2) · 2021-12-21T09:57:19.416Z · LW · GW · 10 commentsThis is a link post for https://universalprior.substack.com/p/on-not-reading-papers

Contents

The emperor's new paper. And yet it moves. “Not reading papers” is a fix, not a solution. None 11 comments

The emperor's new paper.

In a recent lunch conversation, a colleague of mine explained her system for reading scientific papers. She calls the system tongue-in-cheek "not reading the paper". It goes as follows:

- Read the title and predict what the abstract will say. If you are correct, stop reading the paper.

- Otherwise, read the abstract and predict what the main results will be. If you are correct, stop reading the paper.

- Read the last paragraph of the introduction or the first paragraph of the discussion and predict the details of the results. If you are correct, stop reading the paper.

- etc.

I love this system, mostly because of the flippant name, but also because it points out that "the emperor is naked". It is a bit of an open secret that "not reading the paper" is the only feasible strategy for staying on top of the ever-increasing mountain of academic literature. But while everybody knows this, not everybody knows that everybody knows this [? · GW]. On discussion boards, some researchers claim with a straight face to be reading 100 papers per month. Skimming 100 papers per month might be possible, but reading them is not.

So why is this not common knowledge? A likely cause is the stinging horror that overcomes me every time I think about how science really shouldn't work. Our institutions are stuck in inadequate equilibria [LW · GW], the scientific method is too weak, statistical hypothesis testing is broken, nothing replicates, there is rampant fraud,... and now scientists don't even read academic papers? That's just what we needed.

And yet it moves. For some reason, we still get some new insight out of this mess that calls itself science. Even though scientists don't read papers, the entire edifice does not collapse. How is that? In this post, I will go through some obvious and less obvious problems that come from "not reading the paper", and how those turn out to not be that bad. In the end, I tee up a better system that I will expand on in part two of this post.

And yet it moves.

Argyle: Okay, level with me here. How can you just not read the paper? How do you know what's written in it?

Belka: Well, when I was new at this, I did read a lot of papers very carefully. I got stuck a lot, redid a lot of the calculations, double-checked the methodology, asked my colleagues...

Argyle: But you don't anymore?

Belka: Sometimes I do! That's what journal clubs and coffee breaks are good for. But I don't have the time to do this for most papers anymore. I also want to produce new research. And I also notice that, from experience, I usually have a pretty good idea of "where a paper is going". I usually have seen a poster presentation of the research somewhere, or read a thread on Twitter. Or I know the work that usually comes out of the senior author's lab and that lets me fill in the blanks.

Argyle: But those are only rules of thumb. What if you're wrong?

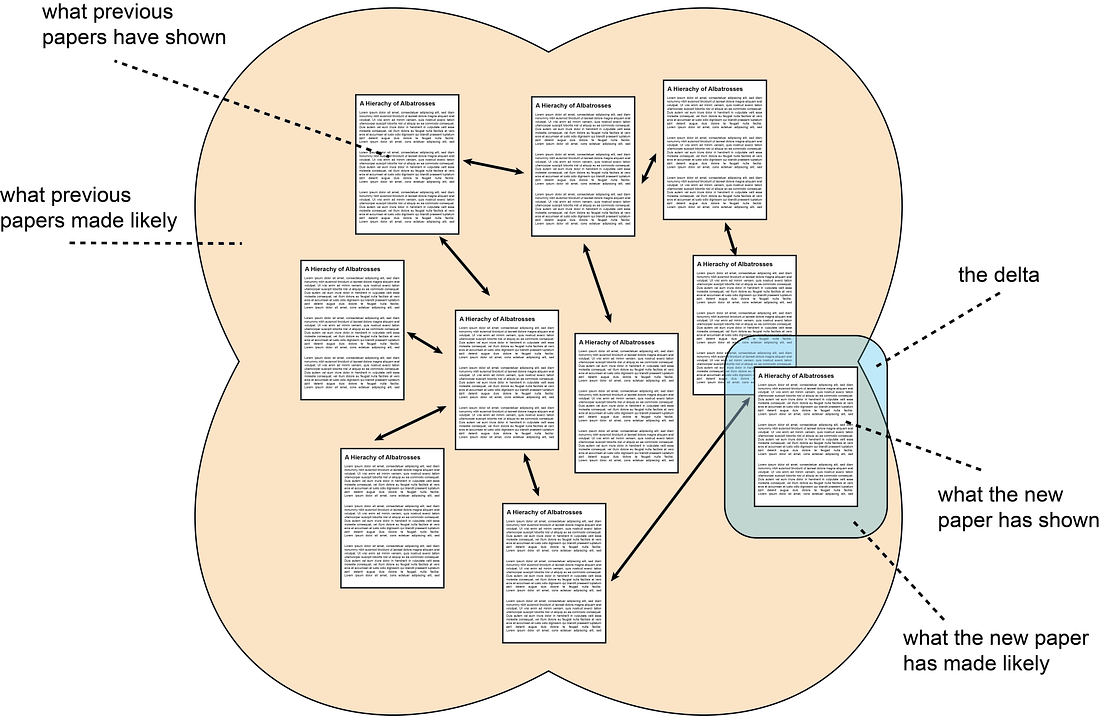

Belka: I do still look at the paper, and if it's substantially different from what I anticipate, I do read it more carefully. Also, if it's really surprising, then it'll bubble up on Twitter. I've become very efficient at identifying the delta between what I already know and what a paper adds.

Argyle: I'm not convinced, but that's not even my only issue. Let's assume you are able to roughly predict “the delta”. How are you able to assess reliability of their method? How do you know their study isn't fundamentally flawed?

Belka: I'd like to say that that's what peer-review is for, but I probably couldn't keep a straight face while saying that. Not all is lost though. You don't even have to dig into the methodology a lot, with just 2.5 minutes of reading and a smidgeon of common sense you can get a decent estimate of the probability that a study will replicate. And beyond that, you should probably always include substantial uncertainty in your assessment of any single study. Before you see a well-done meta-analysis of dozens of studies that find the same thing, you should probably not include the results in your mental database of facts. The trick is, I guess, to get a quick first-order approximation and to assume low reliability by default.

Argyle: But you are part of the problem! If people did read papers carefully then weak methodology would be noticed much faster and the solid studies could stand out.

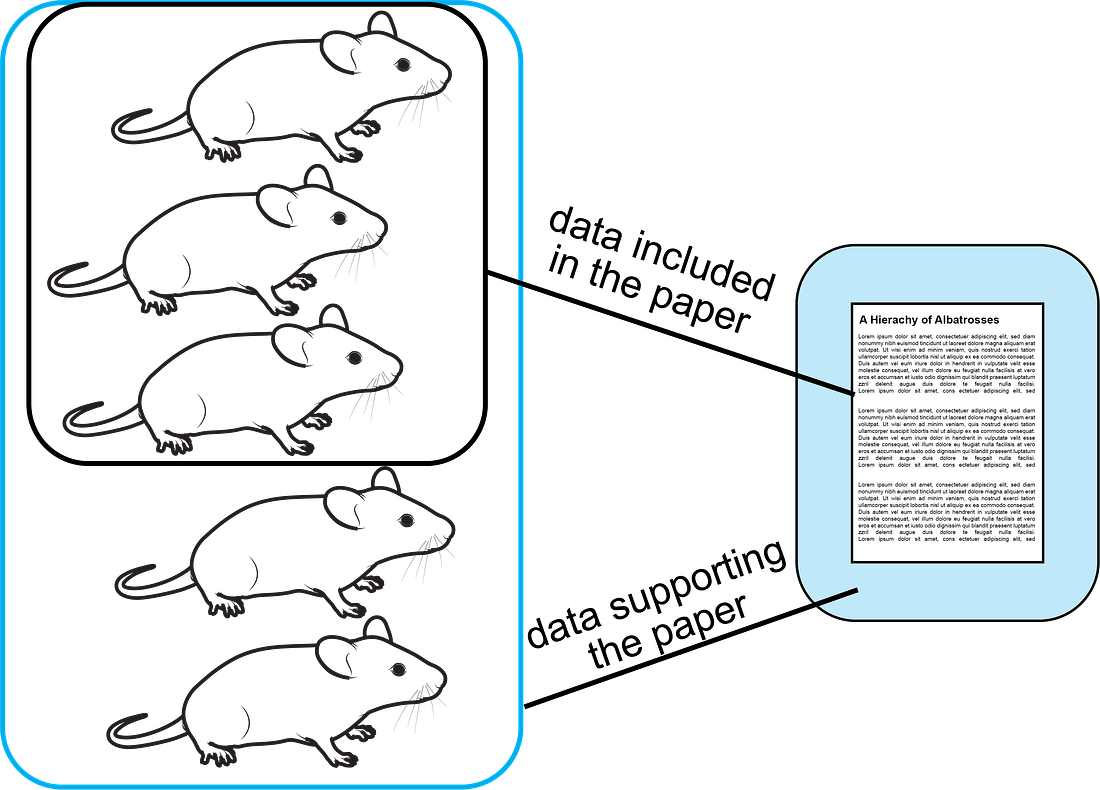

Belka: Ah, yes, that would be great, but the problem runs deeper. Even if you read every word carefully, who says that the authors included all the relevant information? Researchers routinely add antibiotics to their cultures to combat infections, but don't usually mention this fact in papers because it is seen as a red flag for a "dirty lab environment". Whether mice are housed in an enriched environment (with toys etc.) can have substantial effects on brain anatomy and function, but this is also usually not reported. And even if researchers wanted to report everything, "high impact journals" often reward extreme brevity and don't allow more than 2000-2500 words to summarize years of research. The list goes on and on. And even if the incentives weren't so misaligned, part of the problem is that we don't even know which factors are important. So a proper reliability estimate might simply be impossible given just what is written in the text.

Argyle: Oh boy, I'm getting dizzy.

Belka: Take a seat, it's normal. You'll get used to it.

Argyle: But... How can any of this work? Is there a benevolent science god that makes sure that everything turns out alright?

Belka: Ahahahahaha, that would be nice, wouldn't it? I think we have two non-divine factors on our side that help push science forward. One is the discrepancy between what you are "allowed to write in a paper" and the "actual reasons that lead you to believe something". When you do an experiment, your sample size is probably twice as large as what you report, but half of those animals cannot be included in the paper because there was something wrong with the animal, the procedure didn't work, there was bleeding, imaging quality is subpar... Those data points are not reported, but the experimenter still uses them update their beliefs [? · GW] about what is going on. The same goes for theoretical studies, where a lot of the auxiliary results just don't make it into the paper, but they still support the main message. There is a kind of narrative that emerges from all of those pieces and it rhymes in a way that's hard to put on paper, but it's still fortifying your hypothesis. The inferences in a scientific paper are usually supported by multiple strands of logic and converging evidence, not all of which are included in the paper.

Argyle: That's scant consolation. Aren't humans infamously good at deceiving themselves? Perhaps I can trust all those studies a bit more, but most of them will likely still turn out to be wrong?

Belka: You shouldn't underestimate the importance of a good narrative. There is some really weird link between beauty and truth that I don't quite understand, and it might also apply to "beautiful stories"...

Argyle: Not convinced.

Belka: I didn't think you would be. So yes, you are right, most things will probably turn out to be wrong (or at least not quite right). But this brings me to the second non-divine factor that helps science: it marches on. Entire subdisciplines of science can turn out to be baseless, trends and fads come and go, according to an urban legend a doctoral student in mathematics wrote their thesis about a mathematical object that has no non-trivial examples, and all those things are water under the bridge. There are millions of researchers doing varying degrees of sensible research, and science is just self-correcting enough to eventually get rid of bad strands of research. "Truth" has a certain inviolability that false science cannot fake indefinitely. If you actually know how the universe works, this will give you an edge that eventually lets you win. Academia's size ensures that a portion of it is research is solid. This portion will outlast the confused and wrong parts.

Argyle: You say "you", but you don't actually mean me, right? What happens if I don't happen to be lucky enough to pick a fruitful research question?

Belka: Not quite, the outcome is also partially up to you. There are some strategies for how to improve your chances in situations of very high uncertainty: do multiple different research project, switch research fields multiple times, don't get stuck, get 80% of outcomes from 20% of effort. Be an albatross. Don't read papers.

Argyle: Okay, you've lost me completely.

Belka: That's fine, this fictive dialogue is nearing its end anyways.

Argyle: What am I supposed to believe about "not reading papers" now? You haven't convinced me that it's actually better than reading a paper carefully.

Belka: That was never my aim, I also would prefer reading them carefully. But that's not really an option. My point is just that "not reading papers" is not as bad as it sounds.

Argyle: Hmm, that's fair, I guess, although I'm still wondering if you are just lazy and are using a lot of words to cover that up.

Belka: Fair. I guess it's really hard to know where my beliefs really come from.

Argyle: Hmm. Belka: Hmm.

Argyle: I wonder what happens to us when this fictive dialogue is over.

Belka: We can take a swim in the pool and watch the sunset. Argyle: I'd like that.

“Not reading papers” is a fix, not a solution.

As useful as the method might be, it is borne out of necessity, not because it is the optimal way of conducting research. The scientific paper, as it exists, has one central shortcoming: The author(s) do not know the reader. When writing a paper the author(s) try to break down their explanations to the smallest common denominator of hypothetical readers. As a consequence, no actual reader is served in an ideal way. A beginner might require a lot more background. An expert might care for more raw data and for more speculative discussions. A researcher from an adjacent field might need translations for certain terminology. A reader from the future might want to know how the study connects to future work. None of those get what they want from the average paper.

It would be so much better to just always have the chance to talk to the author instead of reading their paper. You could go quickly over the easy parts and go deep on the parts you find difficult. I think this is what is supposed to happen in question sessions after a talk, but when there are more than three people in the audience the idea rapidly falls apart. I know that this is what usually happens at conferences and workshops during tea time, where the senior researchers talk with each other to get actually useful descriptions of a research project from each other.

This clearly does not scale. What would be a better solution? Arbital tried something in this direction by offering explanations with different “speeds”, but the platform has become defunct. Elicit from Ought might have something like this on the menu at some point in the future, but at the moment results are not personalized (afaict). What we ideally might want is a “researcher in a box” that can answer questions about their field of expertise on demand. Whenever the original researcher has finished a project, the new insights become available from the box. This sounds implausible, after all, you can’t expect the researcher to hang around all day, can you? But I think there is a way to make it work with language models. In the next post in this series, I will outline my progress on this question. Stay tuned!

10 comments

Comments sorted by top scores.

comment by gabrielrecc (pseudobison) · 2021-12-21T13:46:31.281Z · LW(p) · GW(p)

The number of experiences I've had of reading an abstract and later finding that the results provided extraordinarily poor evidence for the claims (or alternatively, extraordinarily good evidence -- hard to predict what I will find if I haven't read anything by the authors before...) makes this system suspect. This seems partially conceded in the fictive dialogue ("You don't even have to dig into the methodology a lot") - but it helps to look at it at least a little. I knew a senior academic whose system was as follows: read the abstract (to see if the topic of the paper is of any interest at all) but don't believe any claims in it; then skim the methodology and results and update based on that. This makes a bit more sense to me.

comment by interstice · 2021-12-21T17:18:25.108Z · LW(p) · GW(p)

I didn't read the whole post, just the introduction and the bolded lines of the dialogue -- but from what I read, nice post! I think I agree. ETA: For things on the internet, I've also adopted the heuristic of reading the top few comments between reading the introduction and the body of the post.

comment by Charlie Steiner · 2021-12-23T20:14:25.557Z · LW(p) · GW(p)

An important modification in physics: look at the figures. This is such an important shortcut that it should be the absolute first thing on your mind when designing the figures for your paper.

Replies from: jan-2↑ comment by Jan (jan-2) · 2022-01-16T09:44:43.033Z · LW(p) · GW(p)

Agreed! The figures are also super important in neuroscience, and they are how I remember papers. It would be great to have something like CLIP for scientific figures...

comment by Elizabeth (pktechgirl) · 2021-12-21T10:29:05.171Z · LW(p) · GW(p)

This seems like it trusts the authors to be summarizing their own work accurately. Is that correct? Do you/your friend endorse that assumption?

Replies from: jan-2↑ comment by Jan (jan-2) · 2022-01-16T09:56:18.510Z · LW(p) · GW(p)

I'd make a distinction between: "The author believes (almost everything of) what they are writing." and "The experiments conducted for the paper provide sufficient evidence for what the author writes."

The first one is probably true more frequently than the second. But the first one is also more useful than the second: The beliefs of the author are not only formed by the set of experiments leading to the paper but also through conversations with colleagues, explored dead-ends, synthesizing relevant portions of the literature, ...

In contrast, the bar for making the second statement true is incredibly high.

So as a result, I don't take what's written in a paper for granted. Instead, I add it to my model of "Author X believes that ...". And I invest a lot more work before I translate this into "I believe that ...".

comment by Pattern · 2021-12-21T18:07:36.266Z · LW(p) · GW(p)

On discussion boards, some researchers claim with a straight face to be reading 100 papers per month.

That would be a feat even for someone like Nicolas Bourbaki.

And even if researchers wanted to report everything, "high impact journals" often reward extreme brevity and don't allow more than 2000-2500 words to summarize years of research.

Might be interesting to compare with twitter.

Argyle: What am I supposed to believe about "not reading papers" now? You haven't convinced me that it's actually better than reading a paper carefully.

Belka: That was never my aim, I also would prefer reading them carefully. But that's not really an option. My point is just that "not reading papers" is not as bad as it sounds

Read the important papers carefully. If it's obviously not relevant to what you're working on and you don't care, then don't read it, obviously. "Not reading papers" is obviously a strategy for figuring out what paper you should read next. If you know that already, then just read the paper.

comment by bideup · 2023-10-22T11:26:00.819Z · LW(p) · GW(p)

Sometimes I think trying to keep up with the endless stream of new papers is like watching the news - you can save yourself time and become better informed by reading up on history (ie classic papers/textbooks) instead.

This is a comforting thought, so I’m a bit suspicious of it. But also it’s probably more true for a junior researcher not committed to a particular subfield than someone who’s already fully specialised.

comment by papetoast · 2023-10-22T08:14:51.486Z · LW(p) · GW(p)

"high impact journals" often reward extreme brevity

I read the linked article and I don't think it supports your claim. The author referenced a few examples of extremely short abstracts and papers written with the intention of setting records for brevity, then talks about a conversation with his friend that shorter papers have been proliferating. The article does not provide a strong argument that high impact journals reward extreme brevity in general.