AGI is easier than robotaxis

post by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T17:00:29.901Z · 30 comments

[Epistemic status: Hot take I wrote in 1 hour. We'll see in the comments how well it holds up.]

[Update: OK, seems like people hate this. I guess it was probably too hot and sloppy in retrospect. I should have framed it as "People seem to have a prior that AGI is a grand challenge that's way more difficult than mundane AI tech like self-driving cars, but I don't think this is justified, I think people aren't thinking through the difficulties involved and are instead basically going AGI-is-exciting-therefore-difficult, robotaxis-are-less-exciting-therefore-easier."]

Who would win in a race: AGI, or robotaxis? Which will be built first?

There are two methods:

- Tech companies build AGI/robotaxis themselves.

- First they build AI that can massively accelerate AI R&D, then they bootstrap to AGI and/or robotaxis.

The direct method

Definitions: By AGI I mean a computer program that functions as a drop-in replacement for a human remote worker, except that it's better than the best humans at every important task (that can be done via remote workers). (h/t Ajeya Cotra for this language) And by robotaxis I mean at least a million fairly normal taxi rides a day are happening without any human watching ready to take over. (So e.g. if the Boring Company gets working at scale, that wouldn't count, since all those rides are in special tunnels.)

1. Scale advantage for AGI:

Robotaxis are subject to crippling hardware constraints, relative to AGI. According to my rough estimates, Teslas would cost tens of thousands of dollars more per vehicle, and have 6% less range, if they scaled up the parameter count of their neural nets by 10x. Scaling up by 100x is completely out of the question for at least a decade, I'd guess.

Meanwhile, scaling up GPT-4 is mostly a matter of purchasing the necessary GPUs and networking them together. It's challenging but it can be done, has been done, and will be done. We'll see about 2 OOMs of compute scale-up in the next four years, I say, and then more to come in the decade after that.

This is a big deal because roughly half of AI progress historically came from scaling up compute, and because there are reasons to think it's impossible or almost-impossible for a neural net small enough to run on a Tesla to drive as well as a human, no matter how long it is trained. (It's about the size of an ant's brain. An ant is driving your car! Have you watched ants? They bump into things all the time!)
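The "2 OOMs of compute scale-up in the next four years" forecast above is the author's guess. The sketch below only shows what that guess implies per year, assuming smooth exponential growth (an assumption added here, not a claim from the post); the function names are illustrative.

```python
import math

def annual_growth_factor(ooms: float, years: float) -> float:
    """Constant yearly multiplier that compounds to 10**ooms over `years` years."""
    return 10 ** (ooms / years)

def doubling_time_years(factor_per_year: float) -> float:
    """Years needed to double at a constant yearly multiplier."""
    return math.log(2) / math.log(factor_per_year)

factor = annual_growth_factor(ooms=2, years=4)  # the post's guess: ~2 OOMs in four years
print(f"{factor:.2f}x per year")                 # 3.16x per year
print(f"doubling every {doubling_time_years(factor):.2f} years")  # doubling every 0.60 years
```

So the forecast amounts to roughly tripling compute every year, or doubling about every seven months.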

2. Stakes advantage for AGI:

When a robotaxi messes up, there's a good chance someone will die. Robotaxi companies basically have to operate under the constraint that this never happens, or happens only once or twice. That would be like DeepMind training AlphaStar except that the whole training run gets shut down after the tenth game is lost. Robotaxi companies can compensate by doing lots of training in simulation, and doing lots of unsupervised learning on real-world camera recordings, but still. It's a big disadvantage.

Moreover, the vast majority of tasks involved in being an AGI are 'forgiving' in the sense that it's OK to fail. If you send a weirdly worded message to a user, or make a typo in your code, it's OK, you can apologize and/or fix the error. Only in a few very rare cases are failures catastrophic. Whereas with robotaxis, the opportunity for catastrophic failure is omnipresent. As a result, I think arguably being a safe robotaxi is just inherently harder than most of the tasks involved in being an AGI. (Analogy: Suppose that cars and people were indestructible, like in a video game, so that they just bounced off each other when they collided. Then I think we'd probably have robotaxis already; sure, it might take you 20% longer to get to your destination due to all the crashes, but it would be so much cheaper! Meanwhile, suppose that if your chatbot threatens or insults >10 users, you'd have to close down the project. Then Microsoft Bing would have been shut down, along with every other chatbot ever.)

Finally, from a regulatory perspective, there are ironically much bigger barriers to building robotaxis than building AGI. If you want to deploy a fleet of a million robotaxis there is a lot of red tape you need to cut through, because the public and regulators are justifiably scared that you'll kill people. If you want to make AGI and give it the keys to your datacenter and connect it to the internet... you currently aren't even required to report this. In the future there will be red tape, but I cynically predict that it will remain less than what the robotaxis face.

3. No similarly massive advantage for robotaxis:

Here's where I'm especially keen to see the comments. Maybe there are arguments I haven't thought of. Based on the small amount of thought I've put into it, there isn't any advantage for robotaxis that is similarly massive to the two advantages for AGI described above.

The best I can do is this: Suppose that being a good robotaxi involves being good at N skills. Probably, being a good AGI involves being good at M skills, where M>>N. After all, human remote workers can do so many different things! Whereas driving a car is just one thing, or maybe a few things, depending on how you count... So there are probably at least a few skills necessary for AGI that are harder than all the skills necessary for robotaxis, so even if most AGI-skills are easier (due to being lower-stakes or whatever), actually getting AGI will be harder.
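One way to make the M>>N argument above precise is a toy model, added here as an assumption rather than taken from the post: treat the difficulties of all M+N skills as i.i.d. draws from one continuous distribution. Then "at least k AGI skills are harder than every robotaxi skill" is the same event as "the k hardest of all M+N skills are AGI skills", whose probability is C(M,k)/C(M+N,k). The function names and skill counts below are hypothetical.

```python
import random
from math import comb

def p_top_k_are_agi(m: int, n: int, k: int) -> float:
    """Exact P(at least k of m AGI skills are harder than all n robotaxi skills),
    assuming all m+n difficulties are i.i.d. and continuous (so no ties)."""
    return comb(m, k) / comb(m + n, k)

def simulate(m: int, n: int, k: int, trials: int = 20_000, seed: int = 0) -> float:
    """Monte Carlo check of the formula, drawing difficulties from Uniform(0, 1)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        hardest_taxi_skill = max(rng.random() for _ in range(n))
        harder_agi_skills = sum(rng.random() > hardest_taxi_skill for _ in range(m))
        if harder_agi_skills >= k:
            hits += 1
    return hits / trials

# Hypothetical sizes, chosen only for illustration.
print(p_top_k_are_agi(1000, 10, 3))
print(p_top_k_are_agi(20, 5, 1), simulate(20, 5, 1))
```

The i.i.d. assumption is exactly what the stakes argument disputes: if most AGI skills are drawn from an easier, lower-stakes distribution, this toy model overstates how likely the hardest skills are to be AGI skills.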

My reply to this argument is: OK, but I'd like to think this through more, I'm not confident in it yet. More importantly, by the time we are automating most of the skills involved in AGI, plausibly we'll be getting some serious AI R&D acceleration, and that moves us into the next section of this post...

The indirect method

One way we could get AGI and/or robotaxis is by automating most or all of the tasks involved in AI R&D, massively accelerating AI R&D, and then using the awesome power of all those newer better AIs and AI training methods and so forth to very quickly build AGI and/or robotaxis.

I think this is the default path, in fact. The tasks involved in AI R&D are already starting to get automated a little bit (e.g. ChatGPT and Copilot are speeding up coding) and whereas in the abstract I sorta bought the argument that there have got to be some tasks involved in AGI that are harder than driving cars safely, when I focus more narrowly on the tasks involved in AI R&D, none of them seem harder than driving cars safely. Maybe I'm wrong here -- maybe concept formation or coming up with novel insights or understanding ML papers is inherently a lot harder than driving cars? Maybe. I would have been a lot more sympathetic to this four years ago, before AIs learned common sense and started commenting intelligently on ML papers and producing lots of useful code.

If we go via this indirect path, whether robotaxis or AGI come first depends heavily on whether the powers that be decide to direct all that super-advanced post-singularity AI research power to make robotaxis, or AGI, first.

Here I think it's pretty plausible that the answer will be "AGI." For one thing, there'll plausibly be more red tape and physical constraints on robotaxis. For another, there'll be so much more incentive to make AGI than make robotaxis, that probably the company that has successfully automated AI R&D will want to make AGI next instead of robotaxis.

30 comments

comment by Matthew Barnett (matthew-barnett) · 2023-08-15T03:53:13.379Z

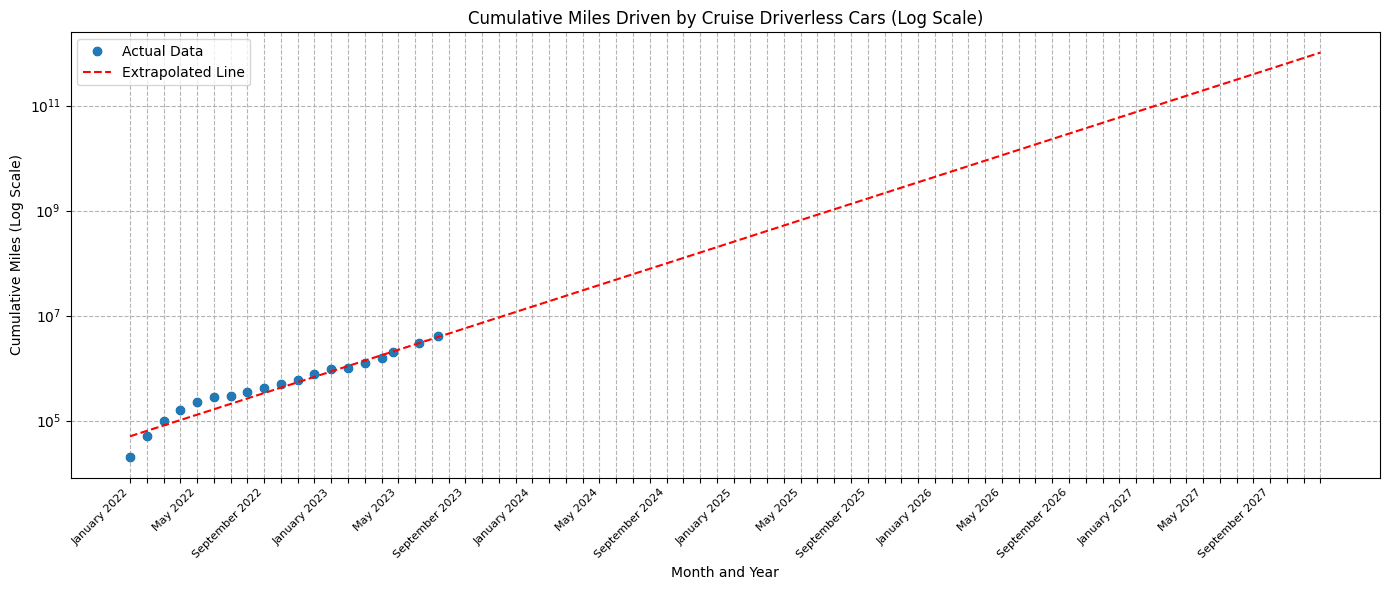

According to data that I grabbed from Cruise, my (admittedly wildly speculative) projection of their growth reveals that driverless cars may become near-ubiquitous by the end of 2027. More specifically, my extrapolation is for the cumulative number of miles driven by Cruise cars by the end of 2027 to approach one trillion, which can be compared to the roughly 3 trillion miles driven per year by US drivers. Now obviously, we might get AGI before that happens. And maybe (indeed it's likely) that Cruise's growth will slow down at some point before they hit the trillion mile mark. Nonetheless, it seems that if current trends hold, we might get driverless cars almost everywhere in the US in the near-term future. As for whether that milestone comes before AGI, I think that may depend heavily on your definition of AGI.
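The comparison above sets a cumulative total against an annual one. Assuming the cumulative total keeps growing by a constant factor each year (the ~1 OOM/year trend cited elsewhere in the thread; an assumption, and one Barnett himself expects to break), the miles driven in the final year are the cumulative total times (1 - 1/growth). A minimal sketch with illustrative names:

```python
def miles_in_final_year(cumulative_at_end: float, growth_per_year: float) -> float:
    """Miles driven during the final year when the cumulative total grows by a constant
    factor each year: the total one year earlier was cumulative_at_end / growth_per_year."""
    return cumulative_at_end * (1 - 1 / growth_per_year)

US_MILES_PER_YEAR = 3e12  # "roughly 3 trillion miles driven per year by US drivers"
annual = miles_in_final_year(1e12, growth_per_year=10)  # ~1 trillion cumulative, ~1 OOM/year
print(f"{annual:.1e} miles in the final year, {annual / US_MILES_PER_YEAR:.0%} of annual US driving")
```

Under these assumptions, one trillion cumulative miles would mean about 0.9 trillion miles driven that year, roughly 30% of US driving.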

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-15T04:02:25.981Z

Awesome. I must admit I wasn't aware of this trend & it's an update for me. Hooray! Robotaxis are easier than I thought! Thanks.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-11-03T19:13:08.571Z

Update:

G.M. has spent an average of $588 million a quarter on Cruise over the past year, a 42 percent increase from a year ago. Each Chevrolet Bolt that Cruise operates costs $150,000 to $200,000, according to a person familiar with its operations.

Half of Cruise’s 400 cars were in San Francisco when the driverless operations were stopped. Those vehicles were supported by a vast operations staff, with 1.5 workers per vehicle. The workers intervened to assist the company’s vehicles every 2.5 to 5 miles, according to two people familiar with its operations. In other words, they frequently had to do something to remotely control a car after receiving a cellular signal that it was having problems.

It's behind a paywall so I can't verify it, but I'm told this is a quote from the New York Times article "Cruise Grew Fast and Angered Regulators. Now It’s Dealing With the Fallout."

If these numbers are accurate, it seems my original take was correct after all. 2.5 to 5 miles per intervention is significantly worse than Tesla IIRC. And 1.5 employees per car, remotely operating the vehicle when it gets into trouble, is not robotaxi material.
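To see why these figures fall short of the "no human watching" definition, here is a back-of-the-envelope sketch. The fleet size, staffing ratio, and intervention interval come from the quoted report; `MILES_PER_CAR_PER_DAY` is a hypothetical placeholder, not a reported figure, and the function name is illustrative.

```python
def remote_ops_load(fleet_size: int, workers_per_vehicle: float,
                    miles_per_intervention: float, miles_per_car_per_day: float):
    """Return (support staff, remote interventions per day) for a fleet."""
    staff = fleet_size * workers_per_vehicle
    interventions_per_day = fleet_size * miles_per_car_per_day / miles_per_intervention
    return staff, interventions_per_day

MILES_PER_CAR_PER_DAY = 100  # hypothetical utilization, for illustration only
for miles_per_intervention in (2.5, 5.0):  # reported range: one intervention every 2.5 to 5 miles
    staff, per_day = remote_ops_load(400, 1.5, miles_per_intervention, MILES_PER_CAR_PER_DAY)
    print(f"every {miles_per_intervention} mi: {staff:.0f} staff, {per_day:,.0f} interventions/day")
```

Whatever the true daily mileage, interventions scale linearly with miles driven, so the ratio of 20 to 40 interventions per 100 miles is the number that would have to fall toward zero.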

↑ comment by SandXbox (PandaFusion) · 2023-10-26T08:11:42.443Z

And maybe (indeed it's likely) that Cruise's growth will slow down at some point before they hit the trillion mile mark.

An update: Cruise has been suspended by the California DMV.

↑ comment by Matthew Barnett (matthew-barnett) · 2023-10-26T19:14:13.603Z

That's unfortunate. Thankfully, this suspension is not a ban, but looks to be more of a temporary pause.

comment by Shmi (shminux) · 2023-08-13T17:44:48.040Z

Robotaxis are already here and expanding through multiple countries relatively quickly. AGI is nowhere close.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T18:30:34.140Z

When do you think they'll reach 1 million rides per day?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T18:37:59.334Z

My impression is that the growth rate is pretty underwhelming. But I don't have hard data on the growth rate and this would totally change my mind if e.g. the population of self-driving cars was 10xing every year, or even doubling. (Currently it's like, what, 500? And they only operate during certain hours in certain geofenced locations?)

↑ comment by Jsevillamol · 2023-08-14T07:01:29.623Z

Here is some data via Matthew Barnett and Jess Riedl:

The cumulative number of miles driven by Cruise's autonomous cars is growing exponentially, at roughly 1 OOM per year.

https://twitter.com/MatthewJBar/status/1690102362394992640

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-14T19:48:55.182Z

Oh shit! So, seems like my million rides per day metric will be reached sometime in 2025? That is indeed somewhat faster than I expected. Updating, updating...

Thanks!
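How fast a milestone like 1M rides/day arrives depends mostly on the growth factor, as the sketch below shows. The starting value of 10,000 rides/day is hypothetical (no such figure appears in the thread), and applying the cumulative-miles trend to daily rides assumes both grow at the same steady exponential rate.

```python
import math

def years_to_reach(current: float, target: float, growth_per_year: float) -> float:
    """Years of constant exponential growth needed to go from `current` to `target`."""
    if current <= 0 or growth_per_year <= 1:
        raise ValueError("need a positive starting value and a growth factor above 1")
    return math.log(target / current) / math.log(growth_per_year)

# Hypothetical starting point: 10,000 rides/day (not a figure from the thread).
for label, g in (("10x per year", 10), ("doubling per year", 2)):
    print(label, round(years_to_reach(10_000, 1_000_000, g), 1), "years")
```

At 10x per year the gap of two orders of magnitude closes in 2 years; at mere doubling it takes about 6.6 years, which is why the growth rate matters so much to the forecast.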

↑ comment by leogao · 2023-08-13T18:53:51.605Z

I know that Cruise is operating ~300 vehicles here in SF (I was previously under the impression this was a hard legal cap until the approval a few days ago, but I'm no longer sure of this). The geofence and hours vary by user, but my understanding is that the highest tier of users (maybe just employees?) has access to Cruise 24/7 with a geofence encompassing almost all of SF, and then there are lower tiers of users with various restrictions like tighter geofences and 9pm-5:30am hours. I don't know what their growth plans look like now that they've been granted permission to expand.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T19:11:49.262Z

OK, thanks. I'll be curious to see how fast they grow. I guess I should admit that it does seem like ants are driving cars fairly well these days, so to speak. Any ideas on what tasks could be necessary for AI R&D automation, that are a lot harder than driving cars? So far I've got things like 'coming up with new paradigms' and 'having good research taste for what experiments to run.' That and long-horizon agency, though long-horizon agency doesn't seem super necessary.

↑ comment by Shmi (shminux) · 2023-08-13T19:37:15.086Z

Why this particular number?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T19:43:18.672Z

I chose it not particularly carefully, as a milestone that to me signified "it's actually a profitable regular business now, it really works, there isn't any 'catch' anymore like there has been for so many years."

You said "AGI is nowhere close" but then I could be like "Have you heard of GPT-4?" and you'd be like "that doesn't count for reasons X, Y, and Z" and I'd be like "I agree, GPT-4 sure is quite different in those ways from what was long prophesied in science fiction. Similarly, what Waymo is doing now in San Francisco is quite different from the dream of robotaxis."

↑ comment by Shmi (shminux) · 2023-08-13T20:04:55.810Z

GPT-4 is an AGI, but not a stereotypical example of AGI as generally discussed on this forum for about a decade (no drive to do anything, no ability to self-improve); it is basically an Oracle/tool AI, as per Karnofsky's original proposal in his discussion with Eliezer. The contrast between the apparent knowledge and the lack of drive to improve is confusing. If you recall, the main argument against a Tool AI was "to give accurate responses it will have to influence the world, not just remain passive". GPT shows nothing of the sort. It will complete the next token the best it can, hallucinate pretty often, and not care if it hallucinates even when it knows it does. I don't think even untuned models like Sydney showed any interest in changing anything about their inputs. GPT is not the agentic AGI that we all had in mind for years, and not clearly close to being one.

In contrast, Waymo robotaxis are precisely the stereotypical example of a robotaxi: a vehicle that gets you from point A to point B without a human driver. It will be a profitable business, and how soon depends mostly on regulations, not on capabilities. There are still ways to go to improve reliability in edge cases, but it is already better than a human driver most of the time.

One argument is that state-of-the-art generative models might become a lot more agentic if given real-world sensors and actuators... say, put inside a robotaxi. Who knows, but it does not look obviously so at this point. There is some sort of AI controlling most autonomous vehicles already; I have no idea how advanced it is compared to GPT-4.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-15T03:47:18.088Z

I feel like you are being unnecessarily obtuse -- what about AutoGPT-4 with code execution and browsing etc.? It's not an oracle/tool, it's an agent.

Also, hallucinations are not an argument in favor of it being an oracle. Oracles supposedly just told you what they thought, they didn't think one thing and say another.

I agree that there are differences between AutoGPT-4 and classic AGI, and if you feel like they are bigger than the differences between current Waymo and classic robotaxi dreams, fair enough. But the difference in difference size ain't THAT big, I say. I think it's wrong to say "Robotaxis are already here... AGI is nowhere in sight."

↑ comment by Shmi (shminux) · 2023-08-15T04:45:46.571Z

I feel like you are being unnecessarily obtuse

I guess there is no useful discussion possible after a statement like that.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T19:44:41.285Z

(I edited the OP to be a bit more moderate & pointed at what I'm really interested in. My apologies for being so hasty and sloppy.)

comment by Stephen McAleese (stephen-mcaleese) · 2023-08-13T20:22:28.861Z

I highly recommend this interview with Yann LeCun which describes his view on self-driving cars and AGI.

Basically, he thinks that self-driving cars are possible with today's AI but would require immense amounts of engineering (e.g. hard-wired behavior for corner cases) because today's AI (e.g. CNNs) tends to be brittle and lacks an understanding of the world.

My understanding is that Yann thinks we basically need AGI to solve autonomous driving in a reliable and satisfying way because the car would need to understand the world like a human to drive reliably.

comment by Tomás B. (Bjartur Tómas) · 2023-08-14T16:18:24.162Z

One argument I've had for self-driving being hard is: humans drive many millions of miles before they get in fatal accidents. Would it be that surprising if there were AGI-complete problems within this long tail? My understanding is Waymo and Cruise both use teleoperation in these cases. And one could imagine automating this, a God advising the ant in your analogy. But still, at that point you're just doing AGI research.

↑ comment by ryan_greenblatt · 2023-08-15T04:41:17.589Z

Driving optimally might be AGI-complete, but you don't necessarily need to drive optimally; it should be sufficient to beat typical human drivers for safety (this will depend on the regulatory regime, of course).

It might be that situations where avoiding an accident is AGI-complete occur less often per mile than situations where typical human drivers make dumb mistakes due to inattentiveness and worse sensors.

comment by Htarlov (htarlov) · 2023-08-13T22:10:53.359Z

Both seem around the corner for me.

For robo-taxis it is more a society-based problem than a technical one.

- Robo-taxis have problems with edge cases (some situations in some places under some bad circumstances). Usually these are cases where human drivers have even worse problems (like pedestrians wearing black on the road at night in rainy weather; robo-taxis at least have LIDAR to detect objects in bad visibility). Robo-taxis are also sometimes vulnerable to attacks on object detection (stickers put on signs, paintings on the road, etc.). In general, they have fewer problems than human drivers.

- Robo-taxis have a public trust problem. Any more serious accident hits the news and propagates distrust, even if they are already safer than human drivers in general.

- Robo-taxis, and self-driving cars in general, move responsibility from the driver to the producer. This is responsibility the producer does not want and has to count toward its costs, which makes investors cautious.

What is missing so we would have robo-taxis is mostly public trust and more investments.

For AGI we already have the basic building blocks. We just need to scale them up and connect them into a proper system. Which building blocks? These:

- Memory, duh. It has been around for a long time, with many high-performance solutions that support indexing.

- Thought generation. Now we have LLMs that can generate thoughts based on instructions and context. They can easily be made to interact with other models and memory. A more complex system can be built from several LLMs with different instructions interacting with each other.

- Structuring the system and communication within it. It can be done with normal code.

- Loop of thoughts (stream of thoughts). It can be easily achieved by looping LLM(s).

- Vision. Image and video processing. We have a lot of transformer models and image-processing techniques. There are already sensible image-to-text models, even LLM-based ones (which can answer questions about images).

- Actuators and movement. We have models built for movement on different machines, including much of humanoid movement. We even have models capable of one-shot or few-shot learning of movements for attached machines.

- Learning of new abilities. LLMs are able to write code, so they can write code for themselves that builds more complex procedures from more basic commands. In one project, an LLM explored and learned Minecraft starting from only very basic procedures. It wrote code for more complex operations and used what it wrote to move around, do things, and build stuff.

- Connection to external interfaces (even GUIs). These can be translated into a basic API that the system can explore, memorize, and call, and from which it can build more complex operations for itself.
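The list above describes gluing memory, looped LLM calls, and several differently-instructed LLMs together with ordinary code. Here is a minimal sketch of that wiring. `Memory`, `thought_loop`, and `stub_llm` are hypothetical names, the stub stands in for a real model so the example runs offline, and none of this is any particular framework's API.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical interface: an "LLM" is any function from prompt text to generated text.
LLM = Callable[[str], str]

@dataclass
class Memory:
    """Append-only store of past thoughts (a real system would add indexing and search)."""
    items: List[str] = field(default_factory=list)

    def add(self, item: str) -> None:
        self.items.append(item)

    def recent(self, n: int) -> List[str]:
        # Guard n <= 0: items[-0:] would return the whole list.
        return self.items[-n:] if n > 0 else []

def thought_loop(agents: List[Tuple[str, str, LLM]], memory: Memory,
                 steps: int, window: int = 3) -> Memory:
    """Agents take turns; each sees its own instructions plus the last `window` thoughts,
    and its output is stored so the next agent can build on it (a 'stream of thoughts')."""
    for step in range(steps):
        name, instructions, llm = agents[step % len(agents)]
        context = "\n".join(memory.recent(window))
        thought = llm(f"{instructions}\n\nRecent thoughts:\n{context}")
        memory.add(f"{name}: {thought}")
    return memory

def stub_llm(tag: str) -> LLM:
    """Deterministic placeholder so the sketch runs without any model or API."""
    def llm(prompt: str) -> str:
        return f"{tag} reply to a {len(prompt)}-char prompt"
    return llm

agents = [
    ("planner", "Propose the next step.", stub_llm("plan")),
    ("critic", "Find flaws in the last step.", stub_llm("critique")),
]
mem = thought_loop(agents, Memory(), steps=4)
for line in mem.items:
    print(line)
```

Swapping `stub_llm` for a real model call is where the performance, cost, and context-size problems discussed next would show up.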

What is missing for AGI:

- Performance. LLMs process input data quickly, but generating output is much slower. Inference also does not scale very well (though better than humans). Complex multi-model systems built on current LLMs would be either slow, somewhat dumb, and prone to many mistakes (open-source fast models, even GPT-3.5) or very slow but better.

- Cost-effectiveness. For sporadic use like "write me this or that" it is cost-effective, but for a continuous stream of thoughts, especially with several models, it does not compare well to a remote human worker. It needs some further advancements, maybe dedicated hardware.

- Learning, refining, and testing are very slow and costly with these models. This puts a cap on anyone wanting to build something sensible, so the main players are taking rather slow steps toward AGI.

- The scope (context window) of the models is rather short currently. The best powerful models have a scope of about 32 thousand tokens. Some models trade quality for being able to operate on more tokens, but those are not the best ones. 32k seems like a lot, but it becomes a problem when you need a lot of context and information to form coherent thoughts on non-trivial topics not rooted in the model's training data. This is the case with streams of thoughts if you need the system to analyze instructions, analyze context and inputs, propose a strategy, refine it, propose the current tactic, refine it, propose next moves and decisions, refine them, generate instructions for the current task at hand, and also process learning to add new procedures, code, memories, etc. to reuse later. Some modern LLMs are technically capable of all that, but the scope is a roadblock for anything non-trivial here.
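To make the context-size constraint concrete, here is a sketch of one simple way such a system might budget its prompt: always keep the instructions and current inputs, then add memories newest-first until the budget is spent. The word-count token estimate and the function names are assumptions for illustration; real tokenizers count differently.

```python
from typing import List

CONTEXT_LIMIT = 32_000  # roughly the 32k-token scope mentioned above

def approx_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())

def fit_to_context(fixed_parts: List[str], memories: List[str],
                   limit: int = CONTEXT_LIMIT) -> List[str]:
    """Keep every fixed part, then add memories newest-first until the next one would
    overflow the budget; return everything in chronological order."""
    used = sum(approx_tokens(p) for p in fixed_parts)
    if used > limit:
        raise ValueError("instructions and inputs alone exceed the context limit")
    kept: List[str] = []
    for memory in reversed(memories):  # newest first
        cost = approx_tokens(memory)
        if used + cost > limit:
            break  # stop at the first overflow so the kept memories stay a contiguous recent window
        kept.append(memory)
        used += cost
    return fixed_parts + kept[::-1]

prompt = fit_to_context(["analyze these instructions"],
                        ["old memory one", "newer memory", "newest memory here"], limit=8)
print(prompt)
```

Every step of the strategy/tactic/refinement chain described above competes for the same budget, so older memories are the first thing to fall out.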

If I had to guess, I would say that AGI will arrive at scale sooner, just because there is hype, there are big investments, and the main problems are currently less like "we need a breakthrough" and more like "we need refinements". For robo-taxis we still need a lot more investment and some breakthroughs in areas of public trust or law.

comment by Dagon · 2023-08-14T17:02:50.548Z

I think these problems are much more similar than you do. Perhaps not purely equivalent, but the hard parts of each are very much the same.

Note that there's a lot of fuzz in the definition of AGI, which could make it easier than, harder than, or orthogonal to self-driving taxis.

a computer program that functions as a drop-in replacement for a human remote worker, except that it's better than the best humans at every important task (that can be done via remote workers).

"a human worker": one, some, some parts of some jobs, only script-based call-center work, with liberal escalation to humans available, or some other criterion? One could argue that a whole lot of jobs from the last millennium have been automated away, and that doesn't really qualify as AGI. It might be useful to instead compare on the same dimensions as self-driving cars: amount of output with minimal human operational intervention for a type of job.

But that problem aside, the thing that's going to be difficult in both branches is the long tail. Human cognition is massive overkill for 99% of things that humans actually do. But the small number of unexpected situations or highly variable demands are REALLY hard to handle. The reason humans are hired for office/knowledge/remote-able jobs is that there are undocumented, non-obvious, and changing requirements for the tools in use and the other people involved in the enterprise. The old joke that goes "why would I pay you just to push buttons?" being answered with "you don't, you pay me to push only the right buttons at the right times" points to this very difficult-to-define capability.

This difficulty of definition applies to training, documenting, and performing the tasks, of course. But it ALSO applies to even identifying how to measure how well an agent (human or AGI) is capable of handling it. Or even noticing that it's the crux of why a human is there.

Further, both branches have the extra hurdle of human-level performance being insufficient for success. Self-driving cars are ALREADY better than (guesses, no cite) the 75th-percentile human driver, in 90% of conditions. But that's not good enough to make the switch; they need to be near-perfect, including the decisions of when to break rules in order to get something done. Chatbots are ALREADY better than the median level-one customer support agent, and that's not good enough either: the ability to decide to go beyond, or to escalate, needs to be way BETTER than human before the switch gets made.

comment by [deleted] · 2023-08-14T02:56:39.002Z

Here's a post I made on this in reply to one of your colleagues:

https://www.greaterwrong.com/posts/ZRrYsZ626KSEgHv8s/self-driving-car-bets/comment/Koe2D7QLRCbH8erxM

To summarize: AGI may be easier or harder than robotaxis, but that's not precisely the relevant parameter.

What matters is how much human investment goes into solving the problem, and whether the problem is solvable with the technical methods that we know exist.

In the post above and following posts, I define a transformative AI as a machine that can produce more resources of various flavors than its own costs. It need not be as comprehensive as a remote worker or top expert; it merely has to produce more than it costs, and things get crazy once it can perform robotic tasks to supply most of its own requirements.

You might note that robotaxis do not produce more than they cost; they need a very large minimum scale to be profitable. That's a crucial difference. Others have pointed out that to equal OAI's total annual spending (say it's 1 billion USD), ChatGPT would need about 4 million subscribers. It only has to be useful enough to pay $20 a month for. That's a very low bar.

Each robotaxi, by contrast, only earns the delta between its operating costs and what it can charge for a ride, which is slightly less than the cost of an Uber or Lyft at first but over time has to go much lower. At small scales that delta is negative: operating costs will be high, partly from all the hardware and partly simply because of scale. To run robotaxis there are fixed costs with each service/deployment center, with the infrastructure and servers, with the crew of remote customer service/operators, etc.
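The contrast in the two paragraphs above can be written as two small formulas: subscribers needed = annual spend / (12 × monthly price), and break-even fleet size = fixed costs / per-vehicle margin. In the sketch below, the $1B spend and $20/month price come from the comment; the robotaxi inputs are made-up placeholders chosen only to show the shape of the calculation, and the function names are illustrative.

```python
import math

def subscribers_needed(annual_spend_usd: float, monthly_price_usd: float) -> float:
    """Subscribers whose yearly payments add up to the given annual spend."""
    return annual_spend_usd / (12 * monthly_price_usd)

def break_even_fleet(fixed_costs_per_year: float, revenue_per_vehicle_year: float,
                     operating_cost_per_vehicle_year: float) -> float:
    """Fleet size at which per-vehicle margins cover fixed costs; inf if margins are <= 0."""
    margin = revenue_per_vehicle_year - operating_cost_per_vehicle_year
    if margin <= 0:
        return math.inf  # every extra vehicle loses money, so no fleet size breaks even
    return fixed_costs_per_year / margin

print(f"{subscribers_needed(1e9, 20):,.0f} subscribers")  # 4,166,667 subscribers
# Hypothetical robotaxi inputs: $50M/yr fixed costs, $100k revenue and $80k operating cost per vehicle-year.
print(break_even_fleet(50e6, 100e3, 80e3))  # 2500.0
```

The `math.inf` branch captures the comment's point that at small scale the per-vehicle margin is negative, so no amount of growth at current unit costs reaches profitability.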

As for whether the AGI problem is solvable, I think yes, for certain subtasks, but not necessarily all subtasks. Many jobs humans do are not good RL problems and are not solvable with current techniques. Some of those jobs are done by remote workers (your definition) or top human experts (Paul's definition). These aren't the parameters that matter for transformative AI.

comment by xiann · 2023-08-15T00:43:38.208Z

"Normally when Cruise cars get stuck, they ask for help from HQ, and operators there give the vehicles advice or escape routes. Sometimes that fails or they can’t resolve a problem, and they send a human driver to rescue the vehicle. According to data released by Cruise last week, that happens about an average of once/day though they claim it has been getting better."

From the Forbes write-up of GM Cruise's debacle this weekend. I think this should update people downward somewhat in FSD % complete. I think commenters here are being too optimistic about current AI, particularly in the physical world. We will likely need to get closer to AGI for economically-useful physical automation to emerge, given how pre-trained humans seem to be for physical locomotion and how difficult the problem is for minds without that pre-training.

So in my opinion, there actually is some significant probability that we get AGI before or very soon after robotaxis, at least in the without-a-hitch, no-weird-errors, slide-deck-presentation form that the average person imagines when someone says "robotaxis".

comment by Christopher King (christopher-king) · 2023-08-14T17:05:04.568Z

Note that even if robotaxis are easier, they're not much easier: the gap is at most the materials and manufacturing cost of the physical taxi. That's because of your definition:

By AGI I mean a computer program that functions as a drop-in replacement for a human remote worker, except that it's better than the best humans at every important task (that can be done via remote workers).

Assume that creating robo-taxis is humanly possible. I can just run a couple AGIs and have them send a design to a factory for the robo-taxi, self-driving software included.

comment by samshap · 2023-08-13T17:45:35.010Z

Counterpoint, robotaxis already exist: https://www.nytimes.com/2023/08/10/technology/driverless-cars-san-francisco.html

You should probably update your priors.

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-13T18:31:01.216Z

From the OP:

And by robotaxis I mean at least a million fairly normal taxi rides a day are happening without any human watching ready to take over.

I agree that robotaxis are pretty close. I think that AGI is also pretty close.

↑ comment by Akram Choudhary (akram-choudhary) · 2023-10-28T14:28:17.492Z

You would have to have ridiculous AI timelines for AGI to be closer than robotaxis. Closer than 2027?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-10-29T13:07:53.798Z

My AGI timelines are currently 50% by 2027. After writing this post I realized (thanks to the comments) that robotaxis were progressing faster than I thought. I still think it's unclear which will happen first. (Remember my definition of robotaxis = 1M rides per day without human oversight.)