Self-driving car bets

post by paulfchristiano · 2023-07-29T18:10:01.112Z · LW · GW · 44 comments


This month I lost a bunch of bets.

Back in early 2016 I bet at even odds that self-driving ride sharing would be available in 10 US cities by July 2023. Then I made similar bets a dozen times because everyone disagreed with me.

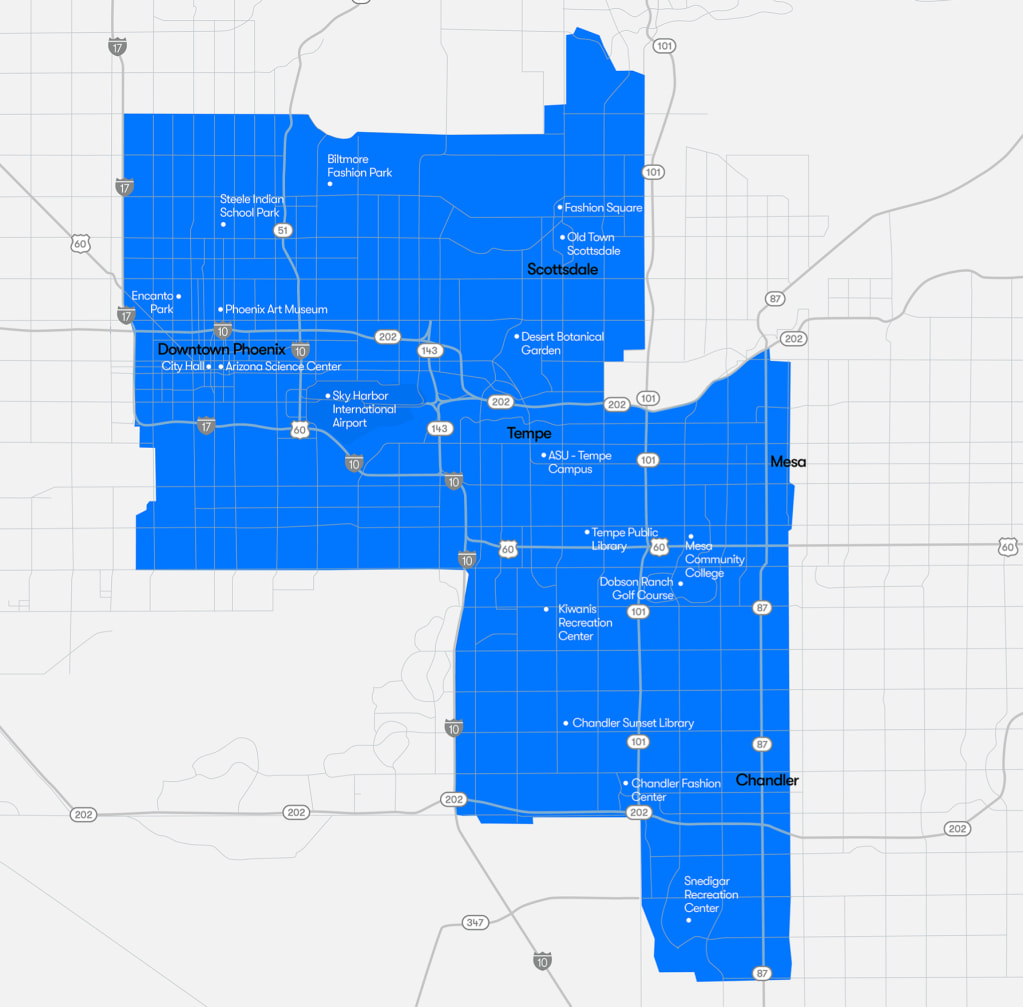

The first deployment to potentially meet our bar was Phoenix in 2022. I think Waymo is close to offering public rides in SF, and there are a few more cities being tested, but it looks like it will be at least a couple of years before we get 10 cities even if everything goes well.

Back in 2016 it looked plausible to me that the technology would be ready in 7 years. People I talked to in tech, in academia, and in the self-driving car industry were very skeptical. After talking with them it felt to me like they were overconfident. So I was happy to bet at even odds as a test of the general principle that 7 years is a long time and people are unjustifiably confident in extrapolating from current limitations.

In April of 2016 I gave a 60% probability to 10 cities. The main point of making the bets was to stake out my position and maximize volume; I was obviously not trying to extract profit, given that I was giving myself very little edge. In mid 2017 I said my probability was 50-60%, and by 2018 I was under 50%.

If 34-year-old Paul was looking at the same evidence that 26-year-old Paul had in 2016 I think I would have given it a 30-40% chance instead of a 60% chance. I had only 10-20 hours of information about the field, and while it’s true that 7 years is a long time it’s also true that things take longer than you’d think, 10 cities is a lot, and expert consensus really does reflect a lot of information about barriers that aren’t easy to articulate clearly. 30% still would have made me more optimistic than a large majority of people I talked to, and so I still would have lost plenty of bets, but I would have made fewer bets and gotten better odds.
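As a rough illustration of how little edge an even-odds bet offers at these probabilities, here is a minimal sketch. The function name and the 1-unit stake are hypothetical; only the probabilities (60% in 2016, 50% later, 30% in hindsight) come from the post.

```python
def even_odds_expected_profit(p_win: float, stake: float = 1.0) -> float:
    """Expected profit of an even-odds bet.

    You win `stake` with probability p_win and lose `stake` otherwise.
    """
    if not 0.0 <= p_win <= 1.0:
        raise ValueError("p_win must be a probability between 0 and 1")
    return p_win * stake - (1.0 - p_win) * stake


# Probabilities quoted in the post; the 1-unit stake is an assumption.
for p in (0.60, 0.50, 0.30):
    profit = even_odds_expected_profit(p)
    print(f"p = {p:.2f}: expected profit per unit staked = {profit:+.2f}")
```

On these assumptions, a 60% belief gives only a modest expected gain per unit staked, while a 30% belief makes the same even-odds bet clearly unfavorable, which fits the remark about wanting fewer bets and better odds.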

But I think 10% would have been about as unreasonable a prediction as 60%. The technology and regulation are mature enough to make deployment possible, so exactly when we get to 10 cities looks very contingent. If the technology was better then deployment would be significantly faster, and I think we should all have wide error bars about 7 years of tech progress. And the pandemic seems to have been a major setback for ride hailing. I’m not saying I got unlucky on any of these—my default guess is that the world we are in right now is the median—but all of these events are contingent enough that we should have had big error bars.

Lessons

People draw a lot of lessons from our collective experience with self-driving cars:

- Some people claim that there was wild overoptimism, but this does not match up with my experience. Investors were optimistic enough to make a bet on a speculative technology, but it seems like most experts and people in tech thought the technology was pretty unlikely to be ready by 2023. Almost everyone I talked to thought 50% was too high, and the three people I talked to who actually worked on self-driving cars went further and said it seemed crazy. The evidence I see for attributing wild optimism seems to be valuations (which could be justified even by a modest probability of success), vague headlines (which make no attempt to communicate calibrated predictions), and Elon Musk saying things.

- Relatedly, people sometimes treat self-driving as if it’s an easy AI problem that should be solved many years before e.g. automated software engineering. But I think we really don’t know. Perceiving and quickly reacting to the world is one of the tasks humans have evolved to be excellent at, and driving could easily be as hard as (or harder than) being an engineer or scientist. This isn’t some post hoc rationalization: the claim that being a scientist is clearly hard and perception is probably easy was somewhat common in the mid 20th century but was out of fashion way before 2016 (see: Moravec’s paradox).

- Some people conclude from this example that reliability is really hard and will bottleneck applications in general. I think this is probably overindexing on a single example. Even for humans, driving has a reputation as a task that is unusually dependent on vigilance and reliability, where most of the minutes are pretty easy and where not messing up in rare exciting circumstances is the most important part of the job. Most jobs aren’t like that! Expecting reliability to be as much of a bottleneck for software engineering as for self-driving seems pretty ungrounded, given how different the job is for humans. Software engineers write tests and think carefully about their code; they don’t need to make a long sequence of snap judgments without being able to check their work. To the extent that exceptional moments matter, it’s more about being able to take opportunities than avoid mistakes.

- I think one of the most important lessons is that there’s a big gap between “pretty good” and “actually good enough.” This gap is much bigger than you’d guess if you just eyeballed the performance on academic benchmarks and didn’t get pretty deep into the weeds. I think this will apply all throughout ML; I think I underestimated the gap when looking in at self-driving cars from the outside, and made a few similar mistakes in ML prior to working in the field for years. That said, I still think you should have pretty broad uncertainty over exactly how long it will take to close this gap.

- A final lesson is that we should put more trust in skeptical priors. I think that’s right as far as it goes, and does apply just as well to impactful applications of AI more generally, but I want to emphasize that in absolute terms this is a pretty small update. When you think something is even odds, it’s pretty likely to happen and pretty likely not to happen. And most people had probabilities well below 50% and so they are even less surprised than I am. Over the last 7 years I’ve made quite a lot of predictions about AI, and I think I’ve had a similar rate of misses in both directions. (I also think my overall track record has been quite good, but you shouldn’t believe that.) Overall I’ve learned from the last 7 years to put more stock in certain kinds of skeptical priors, but it hasn’t been a huge effect.

The analogy to transformative AI

Beyond those lessons, I find the analogy to AI interesting. My bottom line is kind of similar in the two cases: I think 34-year-old Paul would have given roughly a 30% chance to self-driving cars in 10 cities by July 2023, and 34-year-old Paul now assigns roughly a 30% chance to transformative AI by July 2033. (By which I mean: systems as economically impactful as low-cost simulations of arbitrary human experts, which I think is enough to end life as we know it one way or the other.)

But my personal situation is almost completely different in the two cases: for self-driving cars I spent 10-20 hours looking into the issue and 1-2 hours trying to make a forecast, whereas for transformative AI I’ve spent thousands of hours thinking about the domain and hundreds on forecasting.

And the views of experts are similarly different. In self-driving cars, people I talked to in the field tended to think that 30% by July 2023 was too high. Whereas the researchers working in AGI who I most respect (and who I think have the best track records over the last 10 years) tend to think that 30% by July 2033 is too low. The views of the broader ML community and public intellectuals (and investors) seem similar in the two cases, but the views of people actually working on the technology are strikingly different.

The update from self-driving cars, and more generally from my short lifetime of seeing things take a surprisingly long time, has tempered my AI timelines. But not enough to get me below 30% of truly crazy stuff within the next 10 years.

44 comments

Comments sorted by top scores.

comment by Chipmonk · 2023-07-29T18:58:05.358Z · LW(p) · GW(p)

Cruise is also operating publicly (though with a public waitlist) in a few cities: SF, Austin, Phoenix. They recently announced Miami and Nashville, too. I have access.

Edit: also Houston and Dallas. Also probably Atlanta and other locations on their jobs page

↑ comment by No77e (no77e-noi) · 2023-07-30T13:12:28.036Z · LW(p) · GW(p)

You mention eight cities here. Do they count for the bet?

↑ comment by O O (o-o) · 2023-07-30T16:22:07.935Z · LW(p) · GW(p)

Arguably SF, and possibly other cities, don’t count. In SF, Waymo and Cruise require you to get on a relatively exclusive waitlist. I don’t see how that can be considered “publicly available”. Furthermore, Cruise is very limited in SF. It’s only available at 10pm-5am in half the city for a lot of users, including myself. I can’t comment on Waymo as it has been months since I signed up for the waitlist.

↑ comment by Dan Weinand (dan-weinand) · 2023-07-31T21:56:58.568Z · LW(p) · GW(p)

In regard to Waymo (and Cruise, although I know less there) in San Francisco, the last CPUC meeting for allowing Waymo to charge for driverless service had the vote delayed. Waymo operates in more areas and times of day than Cruise in SF last I checked.

https://abc7news.com/sf-self-driving-cars-robotaxis-waymo-cruise/13491184/

I feel like Paul's right that the only crystal clear 'yes' is Waymo in Phoenix, and the other deployments are more debatable (due to scale and scope restrictions).

comment by M. Y. Zuo · 2023-07-29T20:06:26.123Z · LW(p) · GW(p)

Thanks for taking the time to write out these reflections.

I'm curious about your estimates for self driving cars in the next 5 years, would you take the same bet at 50:50 odds for a 2028 July date?

↑ comment by paulfchristiano · 2023-07-29T20:58:34.806Z · LW(p) · GW(p)

Yes. My median is probably 2.5 years to have 10 of the 50 largest US cities where a member of the public can hail a self-driving car (though emphasizing that I don't know anything about the field beyond the public announcements).

Some of these bets had a higher threshold of covering >50% of the commutes within the city, i.e. multiplying fraction of days where it can run due to weather, and fraction of commute endpoints in the service zone. I think Phoenix wouldn't yet count, though a deployment in SF likely will immediately. If you include that requirement then maybe my median is 3.5 years. (My 60% wasn't with that requirement and was intended to count something like the current Phoenix deployment.)

(Updated these numbers in the 60 seconds after posting, from (2/2.5) to (2.5/3.5). Take that as an indication of how stable those forecasts are.)
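The stricter threshold described above (covering more than 50% of commutes) can be sketched as a product of two fractions. This is a minimal sketch under my reading of the comment; the function names and the example numbers are hypothetical and are not data about any real deployment.

```python
def commute_coverage(fraction_of_days_operating: float,
                     fraction_of_endpoints_in_zone: float) -> float:
    """Coverage as described in the comment: the fraction of days the
    service can run (e.g. given weather) multiplied by the fraction of
    commute endpoints inside the service zone."""
    for value in (fraction_of_days_operating, fraction_of_endpoints_in_zone):
        if not 0.0 <= value <= 1.0:
            raise ValueError("fractions must be between 0 and 1")
    return fraction_of_days_operating * fraction_of_endpoints_in_zone


def meets_stricter_bar(coverage: float, threshold: float = 0.5) -> bool:
    """True if coverage exceeds the >50% threshold used in some bets."""
    return coverage > threshold


# Hypothetical cities, purely for illustration.
examples = {
    "good weather, small zone": (0.95, 0.40),
    "worse weather, larger zone": (0.90, 0.60),
}
for label, (days, endpoints) in examples.items():
    coverage = commute_coverage(days, endpoints)
    print(f"{label}: coverage = {coverage:.2f}, "
          f"meets bar = {meets_stricter_bar(coverage)}")
```

Because the two fractions multiply, a deployment that runs almost every day can still fall short if its service zone is small.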

comment by boazbarak · 2023-07-30T01:11:58.765Z · LW(p) · GW(p)

There is a general phenomenon in tech that has been expressed many times of people over-estimating the short-term consequences and under-estimating the longer term ones (e.g., "Amara's law").

I think that often it is possible to see that current technology is on track to achieve X, where X is widely perceived as the main obstacle for the real-world application Y. But once you solve X, you discover that there is a myriad of other "smaller" problems Z_1 , Z_2 , Z_3 that you need to resolve before you can actually deploy it for Y.

And of course, there is always a huge gap between demonstrating you solved X on some clean academic benchmark, vs. needing to do so "in the wild". This is particularly an issue in self-driving where errors can be literally deadly but arises in many other applications.

I do think that one lesson we can draw from self-driving is that there is a huge gap between full autonomy and "assistance" with human supervision. So, I would expect we would see AI be deployed as (increasingly sophisticated) "assistants" way before AI systems actually are able to function as "drop-in" replacements for current human jobs. This is part of the point I was making here. [LW · GW]

↑ comment by lemonhope (lcmgcd) · 2023-08-18T22:15:47.493Z · LW(p) · GW(p)

Do you know of any compendiums of such Z_Ns? Would love to read one

↑ comment by lemonhope (lcmgcd) · 2023-08-18T22:17:33.221Z · LW(p) · GW(p)

I know of one: the steam engine was "working" and continuously patented and modified for a century (iirc) before someone used it in boats at scale. https://youtu.be/-8lXXg8dWHk

↑ comment by boazbarak · 2023-08-19T09:41:59.661Z · LW(p) · GW(p)

See also my post https://www.lesswrong.com/posts/gHB4fNsRY8kAMA9d7/reflections-on-making-the-atomic-bomb [LW · GW]

the Manhattan project was all about taking something that’s known to work in theory and solving all the Z_n’s

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-05T11:33:46.078Z · LW(p) · GW(p)

One IMO important thing that isn't mentioned here is scaling parameter count. Neural nets can be fairly straightforwardly improved simply by making them bigger. For LLMs and AGI, there's plenty of room to scale up, but for the neural nets that run on cars, there isn't. Tesla's self-driving hardware, for example, has to fit on a single chip and has to consume a small amount of energy (otherwise it'll impact the range of the car.) They cannot just add an OOM of parameters, much less three.

↑ comment by Foyle (robert-lynn) · 2024-09-11T08:55:16.806Z · LW(p) · GW(p)

They cannot just add an OOM of parameters, much less three.

How about 2 OOM's?

HW2.5: 21 Tflops; HW3: 72x2 = 72 Tflops (redundant); HW4: 3x72 = 216 Tflops (not sure about redundancy); and Elon said in June that the next-gen AI5 chip for FSD would be about 10x faster, say ~2 Pflops.

By rough approximation to brain processing power you get about 0.1 Pflop per gram of brain, so HW2.5 might have been a 0.2g baby mouse brain, HW3 a 1g baby rat brain, HW4 perhaps an adult rat, and the upcoming HW5 a 20g small cat brain.
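The conversion above can be reproduced with a few lines of arithmetic. This is only a sketch of the comment's own rule of thumb: the 0.1 Pflop per gram figure and the chip throughput numbers are taken from the comment as stated, not independently verified, and the function name is hypothetical.

```python
# The comment's rough rule of thumb, not an established constant.
PFLOPS_PER_GRAM_OF_BRAIN = 0.1

# Throughput figures as quoted in the comment (Tflops).
CHIP_TFLOPS = {
    "HW2.5": 21,
    "HW3": 72,
    "HW4": 216,
    "AI5 (projected)": 2000,
}


def brain_gram_equivalent(tflops: float) -> float:
    """Convert chip throughput to 'grams of brain' under the rule of thumb."""
    pflops = tflops / 1000.0
    return pflops / PFLOPS_PER_GRAM_OF_BRAIN


for chip, tflops in CHIP_TFLOPS.items():
    grams = brain_gram_equivalent(tflops)
    print(f"{chip}: {tflops} Tflops is roughly {grams:.2f} g of brain")
```

The results (about 0.2 g, 0.7 g, 2.2 g, and 20 g) line up with the animal comparisons in the comment, given how coarse the rule of thumb is.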

As a real world analogue cat to dog (25-100g brain) seems to me the minimum necessary range of complexity based on behavioral capabilities to do a decent job of driving - need some ability to anticipate and predict motivations and behavior of other road users and something beyond dumb reactive handling (ie somewhat predictive) to understand anomalous objects that exist on and around roads.

Nvidia Blackwell B200 can do up to about 10pflops of FP8, which is getting into large dog/wolf brain processing range, and wouldn't be unreasonable to package in a self driving car once down closer to manufacturing cost in a few years at around 1kW peak power consumption.

I don't think the rat brain HW4 is going to cut it, and I suspect that internal to Tesla they know it too, but it's going to be crazy expensive to own up to it, better to keep kicking the can down the road with promises until they can deliver the real thing. AI5 might just do it, but wouldn't be surprising to need a further oom to Nvidia Blackwell equivalent and maybe $10k extra cost to get there.

↑ comment by NoUsernameSelected · 2023-08-06T03:20:54.654Z · LW(p) · GW(p)

I agree about it having to fit on a single chip, but surely the neural net on-board would only have a relatively negligible impact on range compared to how much the electric motor consumes in motion?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-06T11:19:28.344Z · LW(p) · GW(p)

IIRC in one of Tesla's talks (I forget which one) they said that energy consumption of the chip was a constraint because they didn't want it to reduce the range of the car. A quick google seems to confirm this. 100W is the limit they say: FSD Chip - Tesla - WikiChip

IDK anything about engineering, but napkin math based on googling: the FSD chip currently consumes 36 watts. Over the course of 10 hours that's 0.36 kWh. A Tesla Model 3 battery holds 55 kWh total, and takes about ten hours of driving to use all that up (assuming you average 30mph?). So that seems to mean that the FSD chip currently uses about two-thirds of one percent of the total range of the vehicle. So if they 10x'd it, in addition to adding thousands of dollars of upfront cost due to the chips being bigger / using more chips, there would be a 6% range reduction. And then if they 10x'd it again the car would be crippled. This napkin math could be totally confused tbc.
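The napkin math above can be checked with a short script. This is a sketch using only the comment's own figures (36 W, 10 hours, 55 kWh); the function name is hypothetical, and the model is deliberately crude: it treats the chip's energy as a share of the battery and ignores that a bigger load would also shorten the drive itself.

```python
# Figures quoted in the comment; none are independently verified here.
CHIP_WATTS = 36.0      # current FSD chip power draw
DRIVE_HOURS = 10.0     # time assumed to drain the battery
BATTERY_KWH = 55.0     # Model 3 battery capacity used in the comment


def chip_share_of_battery(chip_watts: float,
                          drive_hours: float = DRIVE_HOURS,
                          battery_kwh: float = BATTERY_KWH) -> float:
    """Fraction of the battery consumed by the chip over a full drive."""
    chip_kwh = chip_watts * drive_hours / 1000.0
    return chip_kwh / battery_kwh


for scale in (1, 10, 100):
    share = chip_share_of_battery(CHIP_WATTS * scale)
    print(f"{scale:>3}x chip power: {share:.1%} of the battery")
```

Under these assumptions the current chip uses well under 1% of the battery, one 10x step costs a single-digit percentage, and a second 10x step consumes most of the battery, matching the comment's conclusion.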

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-06T11:22:02.153Z · LW(p) · GW(p)

(This napkin math is making me think Tesla might be making a strategic mistake by not going for just one more OOM. It would reduce the range and add a lot to the cost of the car, but... maybe it would be enough to add an extra 9 or two of reliability... But it's definitely not an obvious call and I can totally see why they wouldn't want to risk it.)

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-06T11:23:11.867Z · LW(p) · GW(p)

(Maybe the real constraint is cost of the chips. If each chip is currently say $5,000, then 10xing would add $45,000 to the cost of the car...)

comment by Mazianni (john-williams-1) · 2023-08-01T07:51:03.646Z · LW(p) · GW(p)

related but tangential: Coning self driving vehicles as a form of urban protest

I think public concerns and protests may have an impact on the self-driving outcomes you're predicting. And since I could not find any indication in your article that you are considering such resistance, I felt it should be at least mentioned in passing.

↑ comment by Mazianni (john-williams-1) · 2023-08-08T16:01:14.130Z · LW(p) · GW(p)

Whoever downvoted... would you do me the courtesy of expressing what you disagree with?

Did I miss some reference to public protests in the original article? (If so, can you please point me towards what I missed?)

Do you think public protests will have zero effect on self-driving outcomes? (If so, why?)

↑ comment by lemonhope (lcmgcd) · 2023-08-18T22:23:46.657Z · LW(p) · GW(p)

This is hilarious

↑ comment by Mazianni (john-williams-1) · 2023-08-21T08:21:40.274Z · LW(p) · GW(p)

My intuition is that you got down voted for the lack of clarity about whether you're responding to me [my raising the potential gap in assessing outcomes for self-driving], or the article I referenced.

For my part, I also think that coning-as-protest is hilarious.

I'm going to give you the benefit of the doubt and assume that was your intention (and not contribute to downvotes myself.) Cheers.

↑ comment by lemonhope (lcmgcd) · 2023-08-21T16:23:05.875Z · LW(p) · GW(p)

Yes the fact that coning works and people are doing it is what I meant was funny.

But I do wonder whether the protests will keep up and/or scale up. Maybe if enough people protest everywhere all at once, then they can kill autonomous cars altogether. Otherwise, I think a long legal dispute would eventually come out in the car companies' favor. Not that I would know.

comment by Aligned? · 2023-08-04T23:14:10.471Z · LW(p) · GW(p)

Why no mention of the level 4 autonomous robobuggies from Starship? These buggies have been ramping up exponentially for over 10 years now, and they can make various grocery deliveries without human oversight. Autonomous vehicles have arrived and they are navigating our urban landscapes! There have been many millions of uneventful trips to date. What I find surprising is that no oversized robobuggy has been brought out that could transport a person. One could imagine, for example, that patrons of bars who had too many drinks to drive home could be wheeled about in these buggies. This could already be done quite safely on sidewalks at fairly low speed. For those who have had a few too many, speed might not be an overly important feature. Considering how many fatalities involve impaired drivers, it surprises me that MADD has not been more vocal in advocating for such a solution. So, in a sense, there are already widespread autonomous vehicles operating on American streets. Importantly, these vehicles are helping us to reimagine what transport could be. Instead of thinking in terms of rushing from one place to another, people might embrace more of a slow travel mentality in which, instead of steering their vehicles, they could do more of what they want to, like surf the internet, email, chat with GPT, etc. The end of the commute as we know it?

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2023-08-05T11:27:42.903Z · LW(p) · GW(p)

Interesting, thanks for the update -- I thought that company was going nowhere but didn't have data on it and am pleased to learn it is still alive. According to wikipedia,

In October 2021, Starship said that its autonomous delivery robots had completed 2 million deliveries worldwide, with over 100,000 road crossings daily.[23][24] According to the company, it reached 100,000 deliveries in August 2019 and 500,000 deliveries in June 2020.[25]

By January 2022, Starship's autonomous delivery robots had made more than 2.5 million autonomous deliveries, and traveled over 3 million miles globally,[1][26] making an average 10,000 deliveries per day.[1]

And then according to this post from April 2023 they were at 4 million deliveries.

So that's:

- Aug 2019: 100K
- June 2020: 500K
- Oct 2021: 2M
- Jan 2022: 2.5M
- April 2023: 4M

I think this data is consistent with the "this company is basically a dead end" hypothesis. Seems like they've made about as many deliveries in the last 1.5 years as in the 1.5 years before that.
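One way to look at the milestones above is to compute the average delivery rate between consecutive data points. This is a rough sketch: the dates are rounded to the first of each month (an assumption, since only months are given), the counts are the reported round numbers, and the function names are hypothetical.

```python
from datetime import date

# Milestones from the comment; day-of-month is assumed to be the 1st.
MILESTONES = [
    (date(2019, 8, 1), 100_000),
    (date(2020, 6, 1), 500_000),
    (date(2021, 10, 1), 2_000_000),
    (date(2022, 1, 1), 2_500_000),
    (date(2023, 4, 1), 4_000_000),
]


def months_between(start: date, end: date) -> int:
    """Whole calendar months from start to end."""
    return (end.year - start.year) * 12 + (end.month - start.month)


def monthly_rates(points):
    """Average deliveries per month between consecutive milestones."""
    rates = []
    for (d0, n0), (d1, n1) in zip(points, points[1:]):
        rates.append((d0, d1, (n1 - n0) / months_between(d0, d1)))
    return rates


for start, end, rate in monthly_rates(MILESTONES):
    print(f"{start:%b %Y} to {end:%b %Y}: about {rate:,.0f} deliveries/month")
```

With these rounded inputs, the average rate over the most recent interval (Jan 2022 to April 2023) comes out close to the rate over June 2020 to Oct 2021, which is roughly what a flat, non-exponential trend would look like. The reported counts are coarse, so this should be read as a sanity check rather than a measurement.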

However, I want to believe...

↑ comment by Aligned? · 2023-08-05T17:42:39.365Z · LW(p) · GW(p)

Thank you Daniel for your reply.

The latest delivery count is 5M. That is a fairly substantial ramp up. It means that the doubling over the 1.5 years from October 2021 --> April 2023 is being maintained in the 1.5 years from Jan 2022 through July 2023 (admittedly with a considerable amount of overlapping time).

In addition it is quite remarkable as noted above that they have been level 4 autonomous for years now. This is real world data that can help us move towards other level 4 applications. Obviously, when you try and have level 4 cars that move at highway speeds and must interact with humans problems can happen. Yet, when you move it down to sidewalk speeds on often sparsely traveled pavement there is a reduction in potential harm.

I am super-excited about the potential of robobuggies! There are a near endless number of potential applications. The COVID pandemic would have been dramatically different with universal robobuggy technology. Locking down society hard when people had to grocery shop would have stopped the pandemic quickly. As it was shopping was probably one of the most important transmission vectors. With a truly hard lockdown the pandemic would have stopped within 2 weeks.

Of course transport will become much safer and more environmentally friendly with robobuggies and people will be spared the burdens of moving about space. It is interesting to note that many people are stuck in so called food deserts and these robobuggies would allow them to escape such an unhealthy existence. Robobuggy transport would also allow school children to have more educational options as they would then not be stuck into attending the school that was closest to their home.

I see robobuggies as a super positive development. Over the next 10 years with continued exponential growth we could see this at global scale.

comment by Guive (GAA) · 2025-01-08T00:07:12.116Z · LW(p) · GW(p)

Thanks, this is a good post. Have you changed your probability of TAI by 2033 in the year and a half since it was posted?

comment by Ruby · 2023-08-16T18:20:59.467Z · LW(p) · GW(p)

Curated! I'm a real sucker for retrospectives, especially ones with reflection over long periods of time and with detailed reflection on the thought process. Kudos for this one. I'd be curious to see more elaboration on the points that go behind:

Overall I’ve learned from the last 7 years to put more stock in certain kinds of skeptical priors, but it hasn’t been a huge effect.

comment by Review Bot · 2024-06-21T14:39:45.012Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2024. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Awesome_Ruler_007 (neel-g) · 2023-08-17T01:14:12.008Z · LW(p) · GW(p)

I spent 10-20 hours looking into the issue and 1-2 hours trying to make a forecast, whereas for transformative AI I’ve spent thousands of hours thinking about the domain and hundreds on forecasting.

I wonder if the actual wall time invested actually makes a difference here. If your model is flawed, if you're suffering from some bias, simply brute forcing effort won't yield any returns.

I think this is a fallacious assumption, unless rigorously proven otherwise.

comment by paultreseler · 2023-08-17T00:00:38.799Z · LW(p) · GW(p)

May I ask what metric you used, or are using now, to base the demand for self driving share/s on? Even with current narrow AI efficiency the increase in heat/crime decreases economic resource usage by the mean, and an already receding desire by the stable income home worker for use.

Phoenix as a perfect example to future lack of demand. Laptop from house bipeds are the last to venture out for what can be delivered. Gen population retail, sans car takes public transportation.

Availability of needed tech to facilitate use market is present sure, and will undoubtedly increase in viability. There just will not be any passengers.

Perhaps the technology could be implemented for the new 'minor injury' class Ambulance?

Technically your bet is null, and could be parlayed double or nothing.

comment by [deleted] · 2023-07-29T21:58:15.133Z · LW(p) · GW(p)

Hi Paul. I've reflected carefully on your post. I have worked for several years on a SDC software infrastructure stack and have also spent a lot of time comparing the two situations.

Update: since commentators and downvoters demand numbers: I would say the odds of criticality are 90% by July 2033. The remaining 10% is that there is a possibility of a future AI winter (investors get too impatient) and there is the possibility that revenue from AI services will not continue to scale.

I think you're badly wrong, again, and the consensus of experts is right, again.

First, let's examine your definition for transformative. This may be the first major error:

(By which I mean: systems as economically impactful as low-cost simulations of arbitrary human experts, which I think is enough to end life as we know it one way or the other.)

This is incorrect, and you're a world class expert in this domain.

Transformative is a subclass of the problem of criticality. Criticality, as you must know, means a system produces self gain larger than its self losses. For AGI, there are varying stages of criticality, each of which settles on an equilibrium:

Investment criticality : This means that each AI system improvement or new product announcement or report of revenue causes more financial investment into AI than the industry as a whole burned in runway over that timestep.

Equilibrium condition: either investors run out of money, globally, to invest, or they perceive that each timestep the revenue gain is not worth the amount invested and choose to invest in other fields. The former equilibrium case settles on trillions of dollars into AI and a steady ramp of revenue over time; the latter is an AI crash, similar to the dotcom crash of 2000.

Economic Criticality: This means each timestep, AI systems are bringing in more revenue than the sum of costs [amortized R&D, inference hardware costs, liability, regulatory compliance, ...]

Equilibrium condition: growth until there are no more marginal tasks an AI system can perform cheaper than a human being. Assuming a large variety of powerful models and techniques, it means growth continues until all models and all techniques cannot enter any new niches. The reason why this criticality is not exponential, while the next ones are, is because the marginal value gain for AI services drops with scale. Notice how Microsoft charges just $30 a month for Copilot, which is obviously able to save far more than $30 worth of labor each month for the average office worker.

Physical Criticality: This means AI systems, controlling robotics, have generalized manufacturing, mining, logistics, and complex system maintenance and assembly. The majority, but not all, of labor to produce more of all of the inputs into an AI system can be produced by AI systems.

Equilibrium condition: Exponential growth until the number of human workers on earth is again rate limiting. If humans must still perform 5% of the tasks involved in the subdomain of "build things that are inputs into inference hardware, robotics", then the equilibrium is when all humans willing and able to work on earth are doing those 5% of tasks.

AGI criticality: True AGI can learn automatically to do any task that has clear and objective feedback. All tasks involved in building computer chips, robotic parts (and all lower level feeder tasks and power generation and mining and logistics) have objective and measurable feedback. Bolded because I think this is a key point and a key crux, you may not have realized this. Many of your "expert" domain tasks do not get such feedback, or the feedback is unreliable. For example an attorney who can argue 1 case in front of a jury every 6 months cannot reliably refine their policy based on win/loss because the feedback is so rare and depends on so many uncontrolled variables.

AGI may still be unable to perform as well as the best experts in many domains. This is not relevant. It only has to perform well enough for machines controlled by the AI to collect more resources/build more of themselves than their cost.

A worker pool of AI systems like this can be considerably subhuman across many domains, or rely heavily on using robotic manipulators that are each specialized for a task, being unable to control general purpose hands, relying heavily on superior precision and vision to complete tasks in a way different than how humans perform it. They can make considerable mistakes, so long as the gain is positive - miswire chip fab equipment, dropped parts in the work area cause them to flush clean entire work areas, wasting all the raw materials - etc. I am not saying the general robotic agents will be this inefficient, just that they could be.

Equilibrium Condition: exponential growth until exhaustion of usable elements in Sol. Current consensus is earth's moon has a solid core, so all of it could potentially be mined for useful elements. A large part of Mars, its moons, the asteroid belt, and Mercury are likely mineable. Large areas of the earth via underground tunnel and ocean floor mining. The Jovian moons. Other parts of the solar system become more speculative but this is a natural consequence of machinery able to construct more of itself.

Crux : AGI criticality seems to fall short of your requirement for "human experts" to be matched by artificial systems. Conversely, if you invert the problem: AGI cannot control robots well, creating a need for billions of technician jobs, you do not achieve criticality, you are rate limited on several dimensions. AI companies collect revenue more like consulting companies in such a world, and saturate when they cannot cheaply replace any more experts, or the remaining experts enjoy legal protection.

Requirement to achieve full AGI criticality before 2033: You would need a foundation model trained on all the video of human manipulation that you have licenses for. You would need a flexible, real time software stack that generalizes to many kinds of robotic hardware and sensor stacks. You would need an "app store" license model where thousands of companies could contribute, instead of just 3, to the general pool of AI software, made intercompatible by using a base stack. You would need there to not be hard legal roadblocks stopping progress. You would need to automatically extend a large simulation of possible robotic tasks whenever surprising inputs are seen in the real world.

Amdahl's law applies to the above, so actually, probably this won't happen before 2033, but one of the lesser criticalities might. We are already in the Investment criticality phase of this.

Autonomous cars: I had a lot of points here, but it's simple:

(1) an autonomous robo taxi must collect more revenue than the total costs, or it's subcritical, which is the situation now. If it were critical, Waymo would raise as many billions as required and would be expanding into all cities in the USA and Europe at the same time. (look at a ridesharing company's growth trajectory for a historical example of this)

(2) It's not very efficient to develop a realtime stack just for 1 form factor of autonomous car for 1 company. Stacks need to be general.

(3) There are 2 companies allowed to contribute. Anyone not an employee of Cruise or Waymo is not contributing anything towards autonomous car progress. There's no cross licensing, and it's all closed source except for comma.ai. This means only a small number of people are pushing the ball forward at all, and I'm pretty sure they each work serially on an improved version of their stack. Waymo is not exploring 10 different versions of n+1 "Driver" agent using different strategies, but is putting everyone onto a single effort, which may be the wrong approach, where each mistake costs linear time. Anyone from Waymo please correct me. Cruise must be doing this as they have less money.

Replies from: o-o, paulfchristiano, john-williams-1↑ comment by O O (o-o) · 2023-07-30T09:30:46.954Z · LW(p) · GW(p)

This is incorrect, and you're a world class expert in this domain.

This is a rather rude response. Can you rephrase that?

All tasks involved in building computer chips, robotic parts (and all lower level feeder tasks and power generation and mining and logistics) have objective and measurable feedback. Bolded because I think this is a key point and a key crux, you may not have realized this. Many of your "expert" domain tasks do not get such feedback, or the feedback is unreliable. For example an attorney who can argue 1 case in front of a jury every 6 months cannot reliably refine their policy based on win/loss because the feedback is so rare and depends on so many uncontrolled variables.

I don’t like this point. Many expert domain tasks have vast quantities of historical data we can train evaluators on. Even if the evaluation isn’t as simple to quantify, deep learning intuitively seems like it can tackle it. Humans also manage to get around the fact that evaluation may be hard, and gain competitive advantages as experts in those fields. Good and bad lawyers exist. (I don’t think it’s a great example, as going to trial isn’t a huge part of most lawyers’ jobs.)

Having a more objective and immediate evaluation function, if that’s what you’re saying, doesn’t seem like an obvious massive benefit. The output of this evaluation function with respect to labor output over time can still be pretty discontinuous so it may not effectively be that different than waiting 6 months between attempts to know if success happened.

An example of this is it taking a long time to build and verify whether a new chip architecture improves speeds or having to backtrack and scrap ideas.

Replies from: None↑ comment by [deleted] · 2023-07-30T22:45:35.183Z · LW(p) · GW(p)

This is a rather rude response. Can you rephrase that?

If I were to rephrase I might say something like "just like historical experts Einstein and Hinton, it's possible to be a world class expert but still incorrect. I think that focusing on the human experts at the top of the pyramid is neglecting what would cause AI to be transformative, as automating 90% of humans matters a lot more than automating 0.1%. We are much closer to automating the 90% case because..."

I don’t like this point. Many expert domain tasks have vast quantities of historical data we can train evaluators on. Even if the evaluation isn’t as simple to quantify, deep learning intuitively seems like it can tackle it. Humans also manage to get around the fact that evaluation may be hard, and gain competitive advantages as experts in those fields. Good and bad lawyers exist. (I don’t think it’s a great example, as going to trial isn’t a huge part of most lawyers’ jobs.)

Having a more objective and immediate evaluation function, if that’s what you’re saying, doesn’t seem like an obvious massive benefit. The output of this evaluation function with respect to labor output over time can still be pretty discontinuous so it may not effectively be that different than waiting 6 months between attempts to know if success happened.

For lawyers: the confounding variables mean a robust, optimal policy is likely not possible. A court outcome depends on variables like [facts of case, age and gender and race of the plaintiff/defendant, age and gender and race of the attorneys, age and gender and race of each juror, who ends up the foreman, news articles on the case, meme climate at the time the case is argued, the judge, the law's current interpretation, scheduling of the case, location the trial is held...]

It would be difficult to develop a robust and optimal policy with this many confounding variables. It would likely take more cases than any attorney can live long enough to argue or review.
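A back-of-the-envelope sketch of the sample-size problem, using the standard normal-approximation formula for estimating a proportion. The numbers are illustrative assumptions, not data: a 50% baseline win rate, a ±5 point margin, and the 2 trials per year from the attorney example. It also treats every trial as an independent draw with no confounders, which is the optimistic case.

```python
import math


def trials_needed(win_rate: float, margin: float, z: float = 1.96) -> int:
    """Trials needed to pin down a win rate to +/- `margin` at ~95% confidence.

    Uses n = z^2 * p * (1 - p) / margin^2, the usual normal approximation
    for a binomial proportion. Assumes independent, identically distributed trials.
    """
    return math.ceil(z * z * win_rate * (1.0 - win_rate) / (margin * margin))


def years_needed(trials: int, trials_per_year: float) -> float:
    """Calendar time to accumulate that many trials at a fixed rate."""
    return trials / trials_per_year


n = trials_needed(win_rate=0.5, margin=0.05)
print(n, years_needed(n, trials_per_year=2.0))
```

Under these assumptions a single attorney would need roughly 385 jury trials, or about 190 years at one case every 6 months, just to tell whether a change in approach moved their win rate by five points. Adding the confounders listed above only makes the requirement larger.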

Contrast this to chip design. Chip A, using a prior design, works. Design modification A' is being tested. The universe objectively is analyzing design A' and measurable parameters (max frequency, power, error rate, voltage stability) can be obtained.

The problem can also be subdivided. You can test parts of the chip, carefully exposing it to the same conditions it would see in the fully assembled chip, and can subdivide all the way to the transistor level. It is mostly path independent - it doesn't matter what conditions the submodule saw yesterday or an hour ago, only right now. (with a few exceptions)

Delayed feedback slows convergence to an optimal policy, yes.

You cannot stop time and argue a single point to a jury, and try a different approach, and repeatedly do it until you discover the method that works. {note this does give you a hint as to how an ASI could theoretically solve this problem}

I say this generalizes to many expert tasks like [economics, law, government, psychology, social sciences, and others]. Feedback is delayed and contains many confounding variables independent of the [expert's actions].

By contrast, all tasks involved with building [robots, compute] offer objective feedback, with the exception of tasks that fit into the above (such as arguing for the land and mineral permits to be granted for the AI-driven gigafactories and gigamines).

Replies from: o-o↑ comment by O O (o-o) · 2023-07-31T04:58:41.952Z · LW(p) · GW(p)

the confounding variables mean a robust, optimal policy is likely not possible. A court outcome depends on variables like [facts of case, age and gender and race of the plaintiff/defendant, age and gender and race of the attorneys, age and gender and race of each juror, who ends up the foreman, news articles on the case, meme climate at the time the case is argued, the judge, the law's current interpretation, scheduling of the case, location the trial is held...]

I don't see why there is no robust optimal policy. A robust optimal policy doesn't have to always win. The optimal chess policy can't win with just a king on the board. It just has to be better than any alternative to be optimal as per the definition of optimal. I agree it's unlikely any human lawyer has an optimal policy, but this isn't unique to legal experts.

There are confounding variables, but you could also just restate evaluation as trial win-rate (or more succinctly trial elo) instead of as a function of those variables. Likewise you can also restate chip evaluation's confounding variables as being all the atoms and forces that contribute to the chip.

The evaluation function for lawyers, and many of your examples is objective. The case gets won, lost, settled, dismissed, etc.

The only difference is it takes longer to verify generalizations are correct if we go out of distribution with a certain case. In the case of a legal-expert-AI, we can't test hypotheses as easily. But this still may not be as long as you think. Since we will likely have jury-AI when we approach legal-expert-AI, we can probably just simulate the evaluations relatively easily (as legal-expert-AI is probably capable of predicting jury-AI). In the real world, a combination of historical data and mock trials help lawyers verify their generalizations are correct, so it wouldn't even be that different as it is today (just much better). In addition, process based evaluation probably does decently well here, which wouldn't need any of these more complicated simulations.

You cannot stop time and argue a single point to a jury, and try a different approach, and repeatedly do it until you discover the method that works. {note this does give you a hint as to how an ASI could theoretically solve this problem}

Maybe not, but you can conduct mock trials and look at billions of historical legal cases and draw conclusions from that (human lawyers already read a lot). You can also simulate a jury and judge directly instead of doing a mock trial. I don't see why this won't be good enough for both humans and an ASI. The problem has high dimensionality as you stated, with many variables mattering, but a near optimal policy can still be had by capturing a subset of features. As for chip-expert-AI, I don't see why it will definitely converge to a globally optimal policy.

All I can see is that initially legal-expert-AI will have to put more work in creating an evaluation function and simulations. However, chip-expert-AI has its own problem where it's almost always working out of distribution, unlike many of these other experts. I think experts in other fields won't be that much slower than chip-expert-AI. The real difference I see here is that the theoretical limits of output of chip-expert-AI are much higher and legal-expert-AI or therapist-expert-AI will reach the end of the sigmoid much sooner.

I say this generalizes to many expert tasks like [economics, law, government, psychology, social sciences, and others]. Feedback is delayed and contains many confounding variables independent of the [expert's actions].

Is there something significantly different between a confounding variable that can't be controlled like scheduling and unknown governing theoretical frameworks that are only found experimentally? Both of these can still be dealt with. For the former, you may develop different policies for different schedules. For the latter, you may also intuit the governing theoretical framework.

↑ comment by [deleted] · 2023-07-31T09:16:19.388Z · LW(p) · GW(p)

So in this context, I was referring to criticality. AGI criticality is a self-amplifying process where the amount of physical materials and capabilities increases exponentially with each doubling time. Note it is perfectly fine if humans continue to supply the inputs that the network of isolated AGI instances is unable to produce. (Versus others who imagine a singleton AGI on its own. Obviously the system will eventually be rate limited by available human labor if it's limited this way, but it will see exponential growth until then.)

I think the crux here is that all is required is for AGI to create and manufacture variants on existing technology. At no point does it need to design a chip outside of current feature sizes, at no point does any robot it designs look like anything but a variation of robots humans designed already.

This is also the crux with Paul. He says the AGI needs to be as good as the 0.1 percent of human experts at the far right side of the distribution. I am saying that doesn't matter; it is only necessary to be as good as the left 90 percent of humans, approximately. I go over how the AGI doesn't even need to be that good, merely good enough that there is net gain.

This means you need more modalities on existing models but not necessarily more intelligence.

It is possible because there are regularities in the tree of millions of distinct manufacturing tasks that humans do now: they use common strategies. It is possible because each step and substep has a testable and usually immediately measurable objective. For example, overall goal: deploy a solar panel. Overall measurable objective: power flows when sunlight is available. Overall goal: assemble a new robot of design A5. Overall measurable objective: the new machinery is completing tasks with similar P(success). Each of these problems is neatly divisible into subtasks, and most subtasks inherit the same favorable properties.

I am claiming more than 99 percent of the sub problems of "build a robot, build a working computer capable of hosting more AGI" work like this.

What robust and optimal means is that little human supervision is needed, that the robots can succeed again and again and we will have high confidence they are doing a good job because it's so easy to measure the ground truth in ways that can't be faked. I didn't mean the global optimal, I know that is an NP complete problem.

I was then talking about how the problems the expert humans "solve" are nasty and it's unlikely humans are even solving many of them at the numerical success levels humans have in manufacturing and mining and logistics, which are extremely good at policy convergence. Even the most difficult thing humans do - manufacture silicon ICs - converges on yields above 90 percent eventually.

How often do lawyers unjustly lose, economists make erroneous predictions, government officials make a bad call, psychologists fail and the patient has a bad outcome, or social scientists use a theory that fails to replicate years later?

Early AGI can fail here in many ways, and the delay until feedback slows down innovation. How many times do you need to wait for a jury verdict to replace lawyers with AI? For AI oncologists, how long does it take to get a patient outcome of long term survival? You're not innovating fast when you wait weeks to months and the problem is high stakes like this. Robots deploying solar panels are low stakes, with a lot more freedom to innovate.

↑ comment by paulfchristiano · 2023-07-29T22:14:48.752Z · LW(p) · GW(p)

This is incorrect, and you're a world class expert in this domain.

What's incorrect? My view that a cheap simulation of arbitrary human experts would be enough to end life as we know it one way or the other?

(In the subsequent text it seems like you are saying that you don't need to match human experts in every domain in order to have a transformative impact, which I agree with. I set the TAI threshold as "economic impact as large as" but believe that this impact will be achieved by systems which are in some respects weaker than human experts and in other respects stronger/faster/cheaper than humans.)

Do you think 30% is too low or too high for July 2033?

Replies from: None, None↑ comment by [deleted] · 2023-07-29T22:29:34.701Z · LW(p) · GW(p)

Do you think 30% is too low or too high for July 2033?

This is why I went over the definitions of criticality. Once criticality is achieved the odds drop to 0. A nuclear weapon that is prompt critical is definitely going to explode in bounded time because there are no futures where sufficient numbers of neutrons are lost to stop the next timestep releasing even more.

What's incorrect? My view that a cheap simulation of arbitrary human experts would be enough to end life as we know it one way or the other?

Your cheap expert scenario isn't necessarily critical. Think of how it could quench, where you simply exhaust the market for certain kinds of expert services and cannot expand to any more because of lack of objective feedback and legal barriers.

An AI system that has hit the exponential criticality phase in capability is the same situation as the nuclear weapon. It will not quench, that is not a possible outcome in any future timeline [except timelines with immediate use of nuclear weapons on the parties with this capability]

So your question becomes: what are the odds that economic or physical criticality will be reached by 2033? I have doubts myself, but fundamentally the following has to happen for robotics:

- A foundation model that includes physical tasks, like this.

- Sufficient backend to make mass usage across many tasks possible, and convenient licensing and usage. Right now only Google and a few startups have anything using this approach. Colossal scale is needed. Something like ROS 2 but a lot better.

- No blocking legal barriers. This is going to require a lot of GPUs to learn from all the video in the world. Each robot in the real world needs a rack of them just for itself.

- Generative physical sims. Similar to generative video, but generating 3d worlds where short 'dream' like segments of events happening in the physical world can be modeled. This is what you need to automatically add generality to go from 60% success rate to 99%+. Tesla has demoed some but I don't know of good, scaled, readily licensed software that offers this.

For economics:

1. Revenue collecting AI services good enough to pay for at scale

2. Cheap enough hardware, such as from competitors to Nvidia, that make the inference hardware cheap even for powerful models

Either criticality is transformative.

Replies from: awg↑ comment by awg · 2023-07-29T23:14:41.441Z · LW(p) · GW(p)

You speak with such a confident authoritative tone, but it is so hard to parse what your actual conclusions are.

You are refuting Paul's core conclusion that there's a "30% chance of TAI by 2033," but your long refutation is met with: "wait, are you trying to say that you think 30% is too high or too low?" Pretty clear sign you're not communicating yourself properly.

Even your answer to his direct follow-up question: "Do you think 30% is too low or too high for July 2033?" was hard to parse. You did not say something simple and easily understandable like, "I think 30% is too high for these reasons: ..." you say "Once criticality is achieved the odds drop to 0 [+ more words]." The odds of what drop to zero? The odds of TAI? But you seem to be saying that once criticality is reached, TAI is inevitable? Even the rest of your long answer leaves in doubt where you're really coming down on the premise.

By the way, I don't think I would even be making this comment myself if A) I didn't have such a hard time trying to understand what your conclusions were myself and B) you didn't have such a confident, authoritative tone that seemed to present your ideas as if they were patently obvious.

Replies from: None↑ comment by [deleted] · 2023-07-29T23:30:35.236Z · LW(p) · GW(p)

I'm confident about the consequences of criticality. It is a mathematical certainty: it creates a situation where all future possible timelines are affected. For example, covid was an example of criticality. Once you had sufficient evidence to show the growth was exponential, which was available in January 2020, you could be completely confident all future timelines would have a lot of covid infections in them and it would continue until quenching, which turned out to be infection of ~44% of the population of the planet. (And you can estimate that final equilibrium number from R0.)
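For the parenthetical about estimating the final equilibrium number: one textbook way to do it is the SIR final-size relation z = 1 - exp(-R0 * z), where z is the fraction ever infected. The sketch below solves it by fixed-point iteration. It assumes homogeneous mixing, no vaccination, and no behavior change, so it is an illustration of the idea rather than a model of covid, and the ~44% figure is taken from the comment as given.

```python
import math


def final_attack_rate(r0: float, tol: float = 1e-12, max_iter: int = 10_000) -> float:
    """Fraction ever infected in a simple SIR epidemic with reproduction number r0.

    Solves z = 1 - exp(-r0 * z) by fixed-point iteration. For r0 <= 1 the
    outbreak is subcritical and the only solution is z = 0.
    """
    if r0 <= 1.0:
        return 0.0
    z = 0.5  # any positive start converges to the nontrivial root
    for _ in range(max_iter):
        z_next = 1.0 - math.exp(-r0 * z)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z


def r0_for_attack_rate(z: float) -> float:
    """Invert the same relation: the r0 that would produce final attack rate z."""
    return -math.log(1.0 - z) / z


print(final_attack_rate(2.0))
print(r0_for_attack_rate(0.44))
```

Read in reverse, a final attack rate of 44% corresponds to an effective reproduction number of only about 1.3 in this idealized model, which is why the relation is sensitive to interventions that lower R0.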

Once AI reaches a point where critical mass happens, it's the same outcome. No futures exist where you won't see AI systems in use everywhere for a large variety of tasks (economic criticality) or billions or scientific notation numbers of robots in use (physical criticality, true AGI criticality cases).

July 2033 thus requires the "January 2020" data to exist. There don't have to be billions of robots yet, just a growth rate consistent with that.

I do not know precisely when the minimum components needed to reach said critical mass will exist.

I gave the variables of the problem. I would like Paul, who is a world class expert, to take the idea seriously and fill in estimates for the values of those variables. I think his model for what is transformative and what the requirements are for transformation is completely wrong, and I explain why.

If I had to give a number I would say 90%, but a better expert could develop a better number.

Update: edited to 90%. I would put it at 100% because we are already past investor criticality, but the system can still quench if revenue doesn't continue to scale.

↑ comment by Matt Goldenberg (mr-hire) · 2023-07-30T15:05:08.786Z · LW(p) · GW(p)

It seems like criticality is sufficient, but not necessary, for TAI, and so only counting criticality scenarios causes underestimation.

↑ comment by [deleted] · 2023-07-29T22:46:32.299Z · LW(p) · GW(p)

My view that a cheap simulation of arbitrary human experts would be enough to end life as we know it one way or the other?

Just to add to this: many experts are just faking it. Simulating them is not helping. By faking it, I mean that because they are, as humans, solving an RL problem that can't be solved, their learned policy is deeply suboptimal and in some cases simply wrong. Think expert positions like in social science, government, law, economics, business consulting, and possibly even professors who chair computer science departments but are not actually working on scaled cutting edge AI. Each of these "experts" cannot know a true policy that is effective; most of their status comes from various social proofs and finite Official Positions. The "cannot" is because they will not in their lifespan receive enough objective feedback to learn a policy that is definitely correct. (They are more likely to be correct than non-experts, however.)

(In the subsequent text it seems like you are saying that you don't need to match human experts in every domain in order to have a transformative impact, which I agree with. I set the TAI threshold as "economic impact as large as" but believe that this impact will be achieved by systems which are in some respects weaker than human experts and in other respects stronger/faster/cheaper than humans.)

I pointed out that you do not need to match human experts in any domain at all. Transformation depends on entirely different variables.

↑ comment by Mazianni (john-williams-1) · 2023-08-01T07:38:46.108Z · LW(p) · GW(p)

Gentle feedback is intended

This is incorrect, and you're a world class expert in this domain.

The proximity of the subparts of this sentence reads, to me, on first pass, like you are saying that "being incorrect is the domain in which you are a world class expert."

After reading your responses to O O I deduce that this is not your intended message, but I thought it might be helpful to give an explanation about how your choice of wording might be seen as antagonistic. (And also explain my reaction mark to your comment.)

For others who have not seen the rephrasing by Gerald, it reads

just like historical experts Einstein and Hinton, it's possible to be a world class expert but still incorrect. I think that focusing on the human experts at the top of the pyramid is neglecting what would cause AI to be transformative, as automating 90% of humans matters a lot more than automating 0.1%. We are much closer to automating the 90% case because...

I share the quote to explain why I do not believe that rudeness was intended.

comment by Yunfan Ye (yunfan-ye) · 2023-08-05T02:26:45.247Z · LW(p) · GW(p)

Sadly, you might be wrong again.

I am thinking, maybe the reason you made the wrong bet back in 2016 was that you knew too little about the field, rather than that you are naturally too optimistic. Now in transformative AI, you are the expert and thus free of the rookie's optimism. But you corrected your optimism again and made a "pessimistic" future bet -- this could be an overcorrection.

Most importantly, betting against the best minds in one field is always a long shot. :D