"Moral progress" vs. the simple passage of time

post by HoldenKarnofsky · 2022-02-09T02:50:19.144Z · LW · GW · 30 comments

In Future-Proof Ethics, I talked about trying to "consistently [make] ethical decisions that look better, with hindsight after a great deal of moral progress, than what our peer-trained intuitions tell us to do."

I cited Kwame Anthony Appiah's comment that "common-sense" ethics has endorsed horrible things in the past (such as slavery and banning homosexuality), and his question of whether we, today, can do better by the standards of the future.

A common objection to this piece was along the lines of:

Who cares how future generations look back on me? They'll have lots of views that are different from mine, just as I have lots of views that are different from what was common in the past. They'll judge me harshly, just as I judge people in the past harshly. But none of this is about moral progress - it's just about random changes.

Sure, today we're glad that homosexuality is more accepted, and we think of that as progress. But that's just circular - it's judging the past by the standards of today, and concluding that today is better.

Interestingly, I think there were two versions of this objection: what I'd call the "moral realist" version and the "moral super-anti-realist" version.

- The moral realist thinks that there are objective moral truths. Their attitude is: "I don't care what future people think of my morality (or what I think after more reflection[1]) - I just care what's objectively right."

- The moral super-anti-realist thinks that morality is strictly subjective, and that there's just nothing interesting to say about how to "improve" morality. Their attitude is: "I don't care what future people think of my morality, I just care what's moral by the arbitrary standards of the time I live in."

In contrast to these positions, I would label myself as a "moral quasi-realist": I don't think morality is objective, but I still care greatly about what a future Holden - one who has reflected more, learned more, etc. - would think about the ethical choices I'm making today. (Similarly, I believe that taste in art is subjective, but I also believe there are meaningful ways of talking about "great art" and "highbrow vs. lowbrow taste," and I personally have a mild interest in cultivating more highbrow taste for myself.)

Talking about "moral progress" is intended to encompass both the "moral quasi-realist" and the "moral realist" positions, while ignoring the "moral super-anti-realist" position because I think that one is silly. The reason I went with the "future-proof ethics" framing is because it gives a motivation for moral reasoning that I think is compatible with believing in objective moral truth, or not - as long as you believe in some meaningful version of progress.

By "moral progress," I don't just mean "Whatever changes in commonly accepted morality happen to take place in the future." I mean specifically to point to the changes that you (whoever is reading this) consider to be progress, whether because they are homing in on objective truth or resulting from better knowledge and reasoning or for any other good reason. Future-proof ethics is about making ethical choices that will still look good after your and/or society's ethics have "improved" (not just "changed").

I expect most readers - whether they believe in objective moral truth or not - to accept that there are some moral changes that count as progress. I think the ones I excerpted from Appiah's piece are good examples that I expect most readers to accept and resonate with.

In particular, I expect some readers to come in with an initial position of "Moral tastes are just subjective, there's nothing worth debating about them," and then encounter examples like homosexuality becoming more accepted over time and say "Hmm ... I have to admit that one really seems like some sort of meaningful progress. Perhaps there will also be further progress in the future that I care about. And perhaps I can get ahead of that progress via the sorts of ideas discussed in Future-Proof Ethics. Gosh, what an interesting blog!"

However, if people encounter those examples and say "Shrug, I think things like increasing acceptance of homosexuality are just random changes, and I'm not motivated to 'future-proof' my ethics against future changes of similar general character," then I think we just have a deep disagreement, and I don't expect my "future-proof ethics" series to be relevant for such readers. To them I say: sorry, I'll get back to other topics reasonably soon!

Notes

1. I suspect the moral realists making this objection just missed the part of my piece stating:

"Moral progress" here refers to both societal progress and personal progress. I expect some readers will be very motivated by something like "Making ethical decisions that I will later approve of, after I've done more thinking and learning," while others will be more motivated by something like "Making ethical decisions that future generations won't find abhorrent."

But maybe they saw it, and just don't think "personal progress" matters either, only objective moral truth. ↩

30 comments

Comments sorted by top scores.

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-02-09T20:03:13.487Z · LW(p) · GW(p)

In contrast to these positions, I would label myself as a "moral quasi-realist": I don't think morality is objective, but I still care greatly about what a future Holden - one who has reflected more, learned more, etc. - would think about the ethical choices I'm making today.

I worry that you might end up conflating future Holden with Holden-who-has-reflected-more-learned-more-etc. Future you won't necessarily be better you. Maybe future you will be brainwashed or mind-controlled; maybe the stuff you'll have learned will be biased propaganda and the reflections you've undergone will be self-serving rationalizations.

But probably not! I think that if you are just thinking about what you in the future will think, it's probably a reasonable assumption that present you should defer to future you.

HOWEVER, when we move from the individual to all of society, I think the parallel assumption is no longer reasonable. I'm a quasi-realist like you, but I think it's not at all obvious that the way social norms evolve in gigantic populations of people over many decades is in general good, such that we should in general defer to what people in the future think. For example, insofar as there is a conflict between "what sounds good to say & feels good to believe" and "what's actually conducive to flourishing in the long run" we should expect there to be many conditions under which society drifts systematically towards the former and away from the latter.

The main counterargument is "Empirically there does seem to be a trend of moral and epistemic progress."

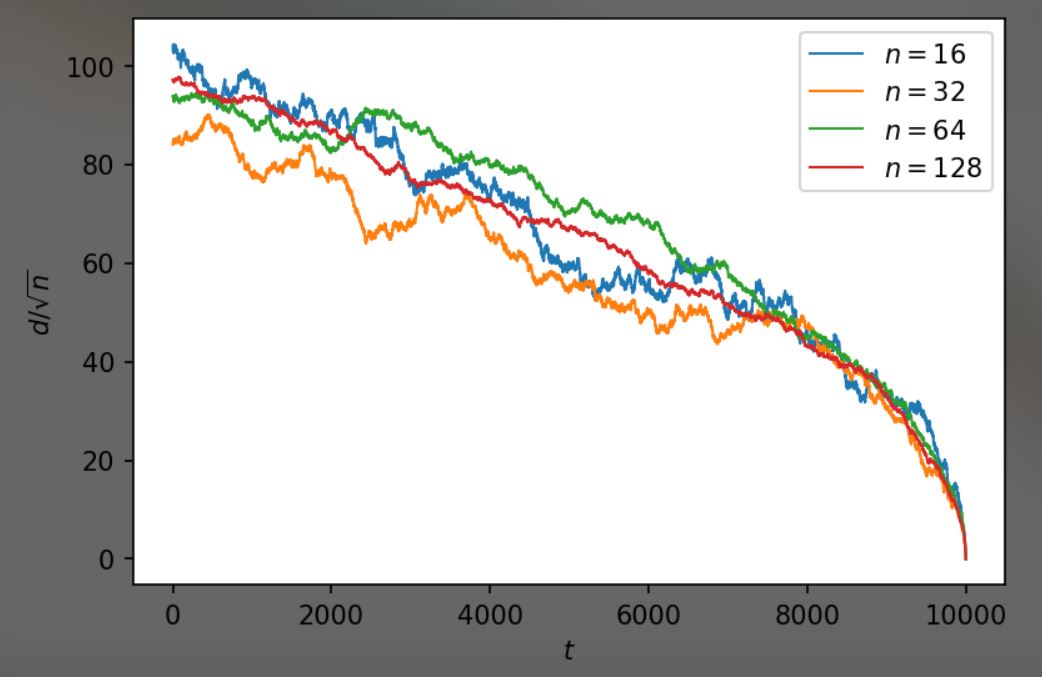

But this argument is flawed (at least in the case of morality) because even if societal morals were a total random walk over time, it would still look like progress from our perspective:

This is a graph of some random walks in n-dimensional spaces, with the y-axis being "distance from the t=10000 point."
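For readers who want to reproduce the effect, here is a minimal sketch of the kind of simulation the graph describes. It is not the code behind the original figure: the Gaussian step distribution, the dimension count, the number of steps, and the function names are all assumptions chosen for illustration. It measures each point's distance from the walk's own endpoint, averaged over several walks.

```python
import math
import random


def random_walk(n_dims, n_steps, rng):
    """Return every position of a walk that starts at the origin.

    Assumption: each coordinate takes an independent Gaussian step.
    """
    position = [0.0] * n_dims
    path = [position]
    for _ in range(n_steps):
        position = [x + rng.gauss(0.0, 1.0) for x in position]
        path.append(position)
    return path


def distance_to_endpoint(path):
    """Distance from each point on the path to the path's final point."""
    end = path[-1]
    return [math.dist(point, end) for point in path]


def mean_distance_profile(n_dims, n_steps, n_walks, seed=0):
    """Average the distance-to-endpoint curve over several independent walks."""
    rng = random.Random(seed)
    totals = [0.0] * (n_steps + 1)
    for _ in range(n_walks):
        walk = random_walk(n_dims, n_steps, rng)
        for t, d in enumerate(distance_to_endpoint(walk)):
            totals[t] += d
    return [total / n_walks for total in totals]


if __name__ == "__main__":
    # Hypothetical parameters; the original graph used an endpoint at t=10000.
    profile = mean_distance_profile(n_dims=10, n_steps=200, n_walks=30)
    for t in (0, 50, 100, 150, 200):
        print(t, round(profile[t], 2))
```

Under these assumptions the averaged curve falls toward zero as t approaches the endpoint, even though no step is aimed at it. A walk judged by where it ends up will tend to look as though it was converging there.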

To be clear I'm not claiming that morality/norms evolve randomly over time. There's definitely rhyme and reason in how they evolve. What I'm saying is that we have no good reason so far to think that the rhyme and reason is systematically good, systematically such that we should defer to future societies. (By mild contrast with the individual case where generally speaking you can expect to have reflected more and experienced more in good ways like doing philosophy rather than bad ways like being brainwashed by propaganda.)

For example, suppose that the underlying driver of moral changes over time is "What memes prevail in a population is a function of how much selection pressure there is for (A) looking good and feeling good to believe, (B) causing you to do things that help you solve physical problems in the real world such as how to grow crops or survive the winter or build a spaceship or prevent a recession, (C) causing you to do things that are conducive to the general happiness of people influenced by your decisions, and (D) causing you to pass on your genes." (Realistically there is also the very important (S): causing you to gain status. But usually S is a function of A, B, C, and D.) And suppose that in the past, with most people living in small farming villages, selection pressure for B and C and D was much stronger than selection pressure for A. But over the course of history the balance of selection pressures shifted and (A) became a lot stronger, and (D) became a lot weaker.

I literally just now pulled this toy model out of my ass, it's probably wrong in a bunch of ways and there are lots of ways to improve on it no doubt. But it'll do to illustrate the point I'm trying to make.

Suppose that this toy model is roughly right; that's the underlying driver of societal norms; the reason why e.g. gay people are accepted now and not in the past is that with the abundant wealth of modern economies status comes more from saying things that sound good than from passing on your genes, and so anti-gayness memes (that sound bad but solved the problem of getting your relatives to have more kids, thereby helping spread your genes) have been relatively disfavored.

Then we quasi-realists should not defer to the moral judgment of future societies. The memes that flourish in them will be better in some ways and worse in other ways than the memes that flourish now. (Unless we have a good argument that 'what sounds good and feels good' isn't Goodhartable, i.e. is such a good proxy for the quasi-realist moral truth that it'll continue to be a good proxy even as society optimizes significantly harder and harder for it.)

(Thanks to Nico Mace for making the graph in an earlier conversation)

↑ comment by HoldenKarnofsky · 2022-03-31T22:44:04.934Z · LW(p) · GW(p)

I think this is still not responsive to what I've been trying to say. Nowhere in this post or the one before have I claimed that today's society is better morally, overall, compared to the past. I have simply reached out for reader intuitions that particular, specific changes really are best thought of as "progress" - largely to make the point that "progress" is a coherent concept, distinct from the passage of time.

I also haven't said that I plan to defer to future Holden. I have instead asked: "What would a future Holden who has undertaken the sorts of activities I'd expect to lead to progress think?" (Not "What will future Holden think?")

My question to you would be: do you think the changing norms about homosexuality, or any other change you can point to, represent something appropriately referred to as "progress," with its positive connotation?

My claim is that some such changes (specifically including changing norms about homosexuality) do - not because today's norms are today's (today may be worse on other fronts, even worse overall), and not because there's anything inevitable about progress, but simply because they seem to me like "progress," by which I roughly (exclusively) mean that I endorse the change and am in the market for more changes like that to get ahead of.

Does that clarify at all? (And are there any changes in morality - historical or hypothetical - that you would consider "progress?")

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2022-04-02T18:37:15.895Z · LW(p) · GW(p)

My apologies then; it's likely I misinterpreted you. Perhaps we are on the same page.

My answer to your question depends on the definition of progress. I prefer to taboo the term and instead say the following:

When society or someone or whatever changes from A to B, and B is morally better than A, that's a good change.

However, sometimes good changes are caused by processes that are not completely trustworthy/reliable. That is, sometimes it's the case that a process causes a good change from situation A to B, but in some other situation C it will produce a bad change to D. The example on my mind most is the one I gave earlier: maybe the memetic evolution process is like this; selecting memes on the basis of how nice they sound produces some good changes A to B (such as increased acceptance of homosexuality) but will also produce other bad changes C to D (I don't have a particular example here but hopefully don't need one; if you want I can try to come up with one). Goodhart's Curse weighs heavily on my mind; this pattern of optimization processes at first causing good changes and then later causing bad changes is so pervasive that we have a name for it!

I do believe in progress, in the following sense: There are processes which I trust / consider reliable / would defer to. If I learned that future-me or future-society had followed those processes and come to conclusion X, I would update heavily towards X. And I'm very excited to learn more about what the results of these processes will be so that I can update towards them.

I am skeptical of progress, in the following different sense: I consider it a wide-open question whether default memetic evolution among terrestrial humans (i.e. what'll happen if we don't build AGI and just let history continue as normal) is a process which I trust.

Unfortunately, I don't have a lot of clarity about what those trustworthy processes are exactly. Nor do I have a lot of clarity about what the actual causes of past moral changes were, such as acceptance of homosexuality. So I am pretty uncertain about which good changes in the past were caused by trustworthy processes and which weren't. History is big; presumably both kinds exist.

↑ comment by Bezzi · 2022-02-09T21:29:23.042Z · LW(p) · GW(p)

I basically agree. I would describe a moral law as a pendulum swinging between "this is very bad and everyone doing it should be punished" and "this is very good and everyone saying otherwise should be punished", according to the historical and social context. It probably doesn't swing truly randomly, but there's no reason for it to swing always in the same direction.

Also, note that if you look on a thousand-year timescale, acceptance of homosexuality did not actually advance in a straight path (take a look at Ancient Greeks). We live in a context where the homosexuality acceptance pendulum is swinging toward more acceptance. But push the pendulum too much, and you'll end up invoking the ban of Dante's Divine Comedy because it sends gay people to Hell (I definitely don't count that as moral progress).

More generally, I hold the view that morality is mostly a conformity thing. Some people for some reason manage to make the pendulum swing, and everyone else is more or less forced to chase it. Imagine waking up in a Matrix pod, and being told that no one in the real world believes in gay marriage, that homosexuality is obviously wrong and it's still firmly on the official list of mental illnesses (with absolutely zero gay activists in the world and plenty of people around proudly declaring themselves as ex-gay). Would your belief still hold in this scenario? I don't think that mine would last for long.

comment by Zack_M_Davis · 2022-02-09T04:52:37.507Z · LW(p) · GW(p)

The progress vs. drift framing neglects the possibility that moral decay can exist from a quasi-realist perspective: that people in the past got some things right that people today are getting wrong. I don't think this should be a particularly out-there hypothesis in the current year?

↑ comment by Gordon Seidoh Worley (gworley) · 2022-02-09T07:11:40.727Z · LW(p) · GW(p)

Right. Take nearly any person who lived >~ 500 years ago, drop them in the modern world, and they'll think that all the cool tech is great but also that we're clearly living in a time of moral decay.

I'm pretty suspicious of anyone claiming progress or decay in any absolute sense. I'm happy to admit there's been progress or decay relative to some ethical framing, but it's hard to justify that "no, really, we got morality right this time, or at least less wrong" when for literally the entirety of human history people would plausibly have made this same claim and many would have found it convincing.

Thus, it seems there's insufficient information in the evidence about claims of progress or decay.

comment by Ninety-Three · 2022-02-09T20:52:35.882Z · LW(p) · GW(p)

"I don't care what future people think of my morality, I just care what's moral by the arbitrary standards of the time I live in."

As a moral super-anti-realist ("Morality is a product of evolutionary game theory shaping our brains plus arbitrary social input") this doesn't represent my view.

I care about morality the same way I care about aesthetics: "I guess brutalism, rock music and prosocial behaviour are just what my brain happens to be fond of, I should go experience those if I want to be happy." I think this is heavily influenced by the standards of the time, but not exactly equal to those standards, probably because brains are noisy machines that don't learn standards perfectly. For instance, I happen to think jaywalking is not immoral so I do it without regard for how local standards view jaywalking.

Concisely, I'd phrase it as "I don't care what future people think of my morality, I just care what's moral by the arbitrary standards of my own brain."

comment by Rob Bensinger (RobbBB) · 2022-02-09T19:10:35.531Z · LW(p) · GW(p)

I boringly agree with Holden, and with the old-hat views expressed in By Which It May Be Judged, Morality as Fixed Computation, Pluralistic Moral Reductionism, The Hidden Complexity of Wishes, and Value is Fragile.

There's a particular logical object my brain is trying to point at when it talks about morality. (At least, this is true for some fraction of my moral thinking, and true if we allow that my brain may leave some moral questions indeterminate, so that the logical object is more like a class of moralities that are all equally good by my lights.)

Just as we can do good math without committing ourselves to a view about mathematical Platonism, we can do good morality without committing ourselves to a view about whether this logical object is 'really real'. And I can use this object as a reference point to say that, yes, there has been some moral progress relative to the past; and also some moral decay. And since value is complex, there isn't a super simple summary I can give of the object, or of moral progress; we can talk about various examples, but the outcomes depend a lot on object-level details.

The logical object my brain is pointing at may not be exactly the same one your brain is pointing at; but there's enough overlap among humans in general that we can usefully communicate about this 'morality' thing, much as it's possible to communicate about theorems even though (e.g.) some mathematicians are intuitionists and others aren't. We just focus on the areas of greatest overlap, or clarify which version of 'morality' we're talking about as needed.

↑ comment by Rob Bensinger (RobbBB) · 2022-02-09T19:11:52.033Z · LW(p) · GW(p)

I don't think 'realist' vs. 'anti-realist' is an important or well-defined distinction here. (Like, there are ways of defining it clearly, but the ethics literature hasn't settled on a single consistent way to do so.)

Some better distinctions include:

- Do you plan to act as though moral claim X (e.g., 'torturing people is wrong') is true? (E.g., trying to avoid torturing people and trying to get others not to torture people too.)

- If you get an opportunity to directly modify your values, do you plan to self-modify so that you no longer act as though moral claim X is true? (Versus trying to avoid such self-modifications, or not caring.)

- This is similar to the question of whether you'd endorse society changing its moral views about X.

- Direct brain modifications aside, is there any information you could learn that would cause you to stop acting as though X is true? If so, what information would do the job?

- This is one way of operationalizing the difference between 'more terminal' versus 'more instrumental' values: if you think torture is bad unconditionally, and plan to act accordingly, then you're treating it more like it's 'terminally' bad.

- (Note that treating something as terminally bad isn't the same thing as treating it as infinitely bad. Nor is it the same as being infinitely confident that something is bad. It just means that the thing is always a cost in your calculus.)

- Insofar as you're uncertain about which moral claims are true (/ about which moral claims you'll behaviorally treat as though they were true, avoid self-modifying away from, etc.), what processes do you trust more or less for getting answers you'll treat as 'correct'?

- If we think of your brain as trying to point at some logical object 'morality', then this reduces to asking what processes -- psychological, social, etc. -- tend to better pinpoint members of the class.

comment by Charlie Steiner · 2022-02-09T03:05:05.633Z · LW(p) · GW(p)

Chalk me down as a regular-ol' moral anti-realist then. Future Charlie will have made some choices and learned things that will also suit me, and I'm fine with that, but I don't expect it to be too predictable ahead of time - that future me will have done thought and life-living that's not easily shortcutted.

Where future moral changes seem predictable, that's where I think they're the most suspect - cliches or social pressure masquerading as predictions about our future thought processes. Or skills - things I already desire but am bad at - masquerading as values.

↑ comment by Dagon · 2022-02-09T05:14:16.732Z · LW(p) · GW(p)

Yeah, moral anti-realist here too. I hope future-me has moral preferences that fit the circumstances and equilibria they face better than mine do, and I hope that enables more, happier, people to coexist. But I don't think there's any outside position that would make those specific ideas "more correct" or "better".

↑ comment by Richard_Kennaway · 2022-02-09T10:12:13.632Z · LW(p) · GW(p)

Your imagined future self has the same morality that you do: enabling "more, happier, people to coexist". The only change imagined here is being able to pursue your unchanged morality more effectively.

↑ comment by TAG · 2022-02-09T05:32:43.317Z · LW(p) · GW(p)

Moral quasi realist here. What makes them better is better adaptation.

↑ comment by Dagon · 2022-02-09T14:49:12.595Z · LW(p) · GW(p)

"better adaptation" means "better experiences in the new context", right? Is the fact that you're using YOUR intuitions about "better" for future-you what makes it quasi-realist? Feels pretty anti- to me...

↑ comment by TAG · 2022-02-09T17:06:08.561Z · LW(p) · GW(p)

“better adaptation” means “better experiences in the new context”, right?

No. Better outcomes for societies.

↑ comment by Dagon · 2022-02-09T17:20:14.700Z · LW(p) · GW(p)

Hmm. I don't know how the value of an outcome for society is anything but a function of the individual doing the evaluation. Is this our crux? From the standpoint of you and future-you, are you saying you expect that future-you weights their perception of societal good higher than you do today? Or are you claiming that societies have independent desires from the individuals who act within them?

↑ comment by TAG · 2022-02-09T18:57:49.510Z · LW(p) · GW(p)

It's not all about me, and it's not all about preferences, since many preferences are morally irrelevant. Morality is a mechanism for preventing conflicts between individuals, and enabling coordination between individuals.

Societies don't have anthropic preferences. Nonetheless, there are things societies objectively need to do in order to survive. If a society fails to defend itself against aggressors, or sets the tax rate to zero, it won't survive, even though individuals might enjoy not fighting wars or paying taxes.

Even if you are assuming a context where the very existence of society is taken for granted, morality isn't just an aggregate of individual preferences. Morality needs to provide the foundations of law: the rewards and punishments handed out to people are objective: someone is either in jail or not; they cannot be in jail from some perspectives and not others. Gay marriage is legal or not.

It is unjust to jail someone unless they have broken a clear law, crossed a bright line. And you need to punish people, so you need rules. But an aggregate of preferences is not a bright line per se. So you need a separate mechanism to turn preferences into rules. So utilitarianism is inadequate to do everything morality needs to do, even if consequentialism is basically correct.

Lesswrongians tend to assume "luxury morality", where the continued survival of society is a given, it's also a given that there are lots of spare resources, and the main problem seems to be what to do with all the money.

From the adaptationist perspective, that is partly predictable. A wealthy society should have different object-level morality to a hardscrabble one. Failure to adapt is a problem. But it's also a problem to forget entirely about "bad policeman" stuff like crime and punishment and laws.

↑ comment by Dagon · 2022-02-09T19:48:09.047Z · LW(p) · GW(p)

It's not all about me, and it's not all about preferences

Not all about, but is ANY part of morality about you or about preferences? What are they mixed with, in what proportions?

To be clear (and to give you things to disagree with so I can understand your position), my morality is about me and my preferences. And since I'm anti-realist, I'm free to judge others (whose motives and preferences I don't have access to) more on behavior than on intent. So in most non-philosophical contexts, I like to claim and signal a view of morality that's different than my own nuanced view. Those signals/claims are more compatible with realist deontology - it's more legible and easier to enforce on others than my true beliefs, even though it's not fully justifiable or consistent.

It is unjust to jail someone unless they have broken a clear law, crossed a bright line.

Do you mean it's morally wrong to do so? I'm not sure how that follows from (what I take as) your thesis that "societal survival" is the primary driver of morality. And it still doesn't clarify what "better adaptation" actually means in terms of improving morality over time.

↑ comment by TAG · 2022-02-10T02:47:02.884Z · LW(p) · GW(p)

Not all about, but is ANY part of morality about you or about preferences?

How many million people are in my society? How much my preferences weigh isn't zero, but it isn't greater than 1/N. Why would it be greater? I'm not the King of morality. Speaking of which...

my morality is about me and my preferences

But we were talking about morality, not about your morality.

And since I’m anti-realist, I’m free to judge others (whose motives and preferences I don’t have access to) more on behavior than on intent.

So what? You don't, as an individual, have the right to put them in jail... but society has a right to put you in jail. There's no reason for anybody else to worry about your own personal morality, but plenty of reason for you to worry about everyone else's.

Do you mean it’s morally wrong to do so?

Would you want to be thrown into jail for some reason that isn't even clearly defined?

comment by PeterMcCluskey · 2022-02-10T20:59:31.421Z · LW(p) · GW(p)

I don't think differing attitudes toward moral realism are important here. People mostly agree on a variety of basic goals such as being happy, safe, and healthy. Moral rules seem to be mostly attempts at generating societies that people want to live in.

Some moral progress is due to increased wisdom, but most of what we label as moral progress results from changing conditions and/or tastes.

E.g. for homosexuality, I don't see much evidence that people a century ago were unfamiliar with the reasons why we might want to accept homosexuality. Here are my guesses as to what changed:

- a shift to romantic relations oriented around love and sex, as compared to marriage as an institution that focused on economic support and raising children. People used to depend on children to support them in old age, and on a life-long commitment of support from a spouse. Those needs decreased with increasing wealth and the welfare state, and were somewhat replaced by ambitious expectations about sexual and emotional compatibility.

- better medicine, reducing the costs of STDs.

- increased mobility, enabling gay men to form large enough social groups in big cities to overcome ostracism enough to form gay-friendly cultures.

Let's look at an example where we have less pressure to signal our disapproval of an outgroup: intolerance toward marriage between cousins has increased, at least over the past century or so.

Patterns in the US suggest that the trend is due to beliefs (likely overstated) about the genetic costs. So it could fit the model of increasing wisdom causing moral progress, although it looks a bit more like scientific progress than progress at being moral.

But worldwide patterns say something different. Muslim culture continues to accept, maybe even encourage, marriage between cousins, to an extent that seems unlikely to be due to ignorance of the genetic costs. Some authors suggest this is due to the benefits of strong family bonds. A person living in the year 1500 might well have been justified in believing that those bonds produced better cultures than the alternative. That might still be true today in regions where it's hard to create trusted connections between unrelated people.

I expect that there are moral rules that deserve to be considered universal. But most of the rules we're using are better described as heuristics that are well adapted to our current needs.

comment by romeostevensit · 2022-02-09T21:29:03.187Z · LW(p) · GW(p)

Imagine health realists vs anti realists arguing over whether it makes sense to think of there being an objective measure of health. The stance that most appeals to me is also a quasi realist one in which a healthy organism is one whose capabilities extend to taking into account its own inputs and outputs such that it is not burning any resources that imply that it can't continue to exist in the future or generating any unaccounted for externalities that overwhelm it. The same can be said for moral stances.

Does anyone know the moral philosophy term for this stance?

comment by EJT (ElliottThornley) · 2022-02-10T21:31:45.188Z · LW(p) · GW(p)

Nice post! I share your meta-ethical stance, but I don't think you should call it 'moral quasi-realism'. 'Quasi-realism' already names a position in meta-ethics, and it's different to the position you describe.

Very roughly, quasi-realism agrees with anti-realism in stating:

(1) Nothing is objectively right or wrong.

(2) Moral judgments don't express beliefs.

But, in contrast to anti-realism, quasi-realism also states:

(3) It's nevertheless legitimate to describe certain moral judgments as true.

The conjunction of (1)-(3) defines quasi-realism.

What you call 'quasi-realism' might be compatible with (2) and (3), but its defining features seem to be (1) plus something like:

(4) Our aim is to abide by the principles that we'd embrace if we were more thoughtful, informed, etc.

(1) plus (4) could point you towards two different positions in meta-ethics. It depends whether you think it's appropriate to describe the principles we'd embrace if we were more thoughtful, etc., as true.

If you think it is appropriate to describe these principles as true, then that counts as an ideal observer theory.

If you think it isn't appropriate to describe these principles as true, then your position is just anti-realism plus the claim that you do in fact try to abide by the principles that you'd embrace if you were more thoughtful, etc.

Replies from: HoldenKarnofsky

↑ comment by HoldenKarnofsky · 2022-03-31T22:44:26.436Z · LW(p) · GW(p)

Thanks, this is helpful! I wasn't aware of that usage of "moral quasi-realism."

Personally, I find the question of whether principles can be described as "true" unimportant, and don't have much of a take on it. My default take is that it's convenient to sometimes use "true" in this way, so I sometimes do, while being happy to taboo it anytime someone wants me to or I otherwise think it would be helpful to.

comment by Vladimir_Nesov · 2022-02-09T11:38:49.836Z · LW(p) · GW(p)

Some of the changes over time are neither moral progress nor moral drift, because some ethical claims are about worldlines, not world states. For example, killing someone and replacing them with a different person is worse than doing nothing, even if the new person holds similar moral worth to the original, and if the externalities don't matter (no friends/relatives/etc.).

So even given an unchanging morality, moral attitudes about the present are influenced by (non-moral) facts of the past, and will change in complicated ways over time.

comment by Parrhesia (ives-parrhesia) · 2022-02-09T05:00:20.254Z · LW(p) · GW(p)

> I don't think morality is objective, but I still care greatly about what a future Holden - one who has reflected more, learned more, etc. - would think about the ethical choices I'm making today.

I think that an ethical theory that doesn't believe baby torture is objectively wrong is flawed. If there is no objective morality, then reflection and learning information cannot guide us toward any sort of "correct" evaluation of our past actions. I don't think preferences should change realist to quasi-realist. Is it any less realist to think murder is okay, but avoid it because we are worried about judgement from others? It seems like anti-realism + a preference. There already is a definition of quasi-realist which seems different from yours unless I'm misunderstanding yours [1].

> I mean specifically to point to the changes that you (whoever is reading this) consider to be progress, whether because they are honing in on objective truth or resulting from better knowledge and reasoning or for any other good reason. Future-proof ethics is about making ethical choices that will still look good after your and/or society's ethics have "improved" (not just "changed").

Reasoning helps us reach truth better. If there are no moral facts, then it is not really reasoning and the knowledge is useless. Imagine I said that I do not believe ghosts exist, but I want to be sure that I look back on my past opinions on ghosts and hope they are correct. I want this because I expect to learn much more about the qualities and nature of ghosts and I will be a much more knowledgeable person. The problem is that ghosts have no qualities or nature because they do not exist.

You wanted to use future-proof ethics as a meta-ethical justification for selecting an ethical system, if I recall your original post correctly. My point about circularity was that if I'm using the meta-ethical justification of future-proofing to pick my ethical system, I can't justify future-proofing with the very same ethical system. The whole concept of progress, whether it be individual, societal or objective, relies on a measure of morality to determine progress. I don't have that ethical system if I haven't used future-proofing to justify it. I don't have future-proofing unless I've got some ethical truths already.

Imagine I want to come up with a good way of determining if I am good at math. I could use my roommate to check my math. How do I know my roommate is good at math? Well, in the past, I checked his math and it was good.

I am a moral realist. I believe there are moral facts. I believe that through examination of evidence and our intuitions, we become a more moral society generally speaking. I therefore think that the future will be more ethical. Future proof choices and ethical choices will correlate somewhat. I believe this because I believe that I can, in the present, determine ethical truths via my intuition. This is better than future proof because it gets at the heart of what I want.

How would you justify your "quasi realist" position? You want future Holden to look back on you. Why? Should others hold this preference? What if I wanted past Parrhesia to respect future Parrhesia? Should I weigh this more than future Parrhesia respecting past Parrhesia? I don't think this is meta-ethically justified. Can you really say there is nothing objectively wrong with torturing a baby for sadistic pleasure?

[1] see: https://en.wikipedia.org/wiki/Quasi-realism

Replies from: Charlie Steiner, HoldenKarnofsky

↑ comment by Charlie Steiner · 2022-02-09T06:44:26.273Z · LW(p) · GW(p)

I think "objective" is not quite specific enough about the axis of variation here. See [The Meaning of Right](https://www.lesswrong.com/posts/fG3g3764tSubr6xvs/the-meaning-of-right) - or that entire sequence of posts if you haven't read it yet. That post talks about a sense of "objective" which means something like "even if you changed my brain to think something else was right, that wouldn't change what was right." But the trick is, realists and anti-realists can both have that sort of objectivity!

You seem to want some additional second meaning of "objective" that's something like "testable" - if we disagree about something, there's some arbitration process we can use to tell who's right - at least well enough to make progress over time.

One of the issues with this is that it's hard to have your cake and eat it too. If you can get new information about morality, that information might be bad from the standpoint of your current morality! The arbitration process might say "baby torture is great" - and if it never says anything objectionable, then it's not actually giving you new information.

Replies from: TAG, ives-parrhesia

↑ comment by TAG · 2022-02-10T22:46:07.846Z · LW(p) · GW(p)

Note that epistemic normativity (correct versus incorrect), pragmatic normativity (works versus fails), and ethical normativity (good versus evil) are not necessarily the same. If they are not, then "works" and "correct" can be used to arrive at "good" without circularity.

↑ comment by Parrhesia (ives-parrhesia) · 2022-02-09T14:24:17.579Z · LW(p) · GW(p)

I think that morality is objective in the sense that you mentioned in paragraph one. I think that it also has the feature you describe in paragraph two, but that isn't the definition of "objective" in my view; it is merely a consequence of the fact that we have moral intuitions.

Yes, you can get new information on morality that contradicts your current standpoint. It could never say anything objectionable because I am actually factually correct on baby torture.

↑ comment by HoldenKarnofsky · 2022-03-31T22:44:58.615Z · LW(p) · GW(p)

> How would you justify your "quasi realist" position? You want future Holden to look back on you. Why? Should others hold this preference? What if I wanted past Parrhesia to respect future Parrhesia? Should I weigh this more than future Parrhesia respecting past Parrhesia? I don't think this is meta-ethically justified. Can you really say there is nothing objectively wrong with torturing a baby for sadistic pleasure?

I don't think we can justify every claim with reference to some "foundation." At some point we have to say something like "This is how it seems to me; maybe, having articulated it, it's how it seems to you too; if not, I guess we can agree to disagree." That's roughly what I'm doing with respect to a comment like "I'd like to do things that a future Holden distinguished primarily by having learned and reflected more would consider ethical."