The Alignment Problem

post by lsusr · 2022-07-11T03:03:03.271Z · 18 comments


Last month Eliezer Yudkowsky wrote "a poorly organized list of individual rants" about how AI is going to kill us all. In this post, I attempt to summarize the rants in my own words.

These are not my personal opinions. This post is not the place for my personal opinions. Instead, this post is my attempt to write my understanding of Yudkowsky's opinions.

I am much more optimistic about our future than Yudkowsky is. But that is not the topic of this post.

Humanity is going to build an AGI as fast as we can.

Humanity is probably going to build an AGI, and soon.

But if an AGI is going to kill us all can't we choose to just not build an AGI?

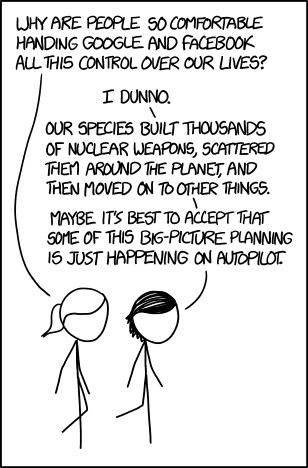

Nope! If humanity had the coordination ability to "just not build an AGI because an AGI is an existential threat" then we wouldn't have built doomsday weapons whose intended purpose is to be an existential threat.

The first nuclear weapon was a proof of concept. The second and third nuclear bombs were detonated on civilian targets. "Choosing not to build an AGI" is much, much harder than choosing not to build nuclear weapons because:

- Nukes are physical. Software is digital. It is very hard to regulate information.

- Nukes are expensive. Only nation-states can afford them. This limits the number of actors who are required to coordinate.

- Nukes require either plutonium or enriched uranium, both of which are rare and which have a small number of legitimate uses. Datacenters meet none of those criteria.

- Uranium centrifuges are difficult to hide.

- Nuclear bombs and nuclear reactors are very different technologies. It is easy to build a nuclear reactor that cannot be easily converted to weapons use.

- A nuclear reactor will never turn into a nuclear weapon by accident. A nuclear weapon is not just a nuclear reactor that accidentally melted down.

Nuclear weapons are the easiest thing in the world to coordinate on and (TPNW aside) humanity has mostly failed to do so.

Maybe people will build narrow AIs instead of AGIs.

We [humanity] will build the most powerful AIs we can. An AGI that combines two narrow AIs will be more powerful than both of the narrow AIs. This is because the hardest part of building an AGI is figuring out what representation to use. An AGI can do everything a narrow AI can do plus it gets transfer learning on top of that.

Maybe building an AGI is really hard—so hard we won't build it this century.

Maybe. But recent developments, especially at OpenAI, show that you can get really far just brute forcing the problem with a mountain of data and a warehouse full of GPUs.

The first AGI will, by default, kill everyone

Despite AGIs being more useful than narrow AIs, the things we actually use machine learning for are narrow domains. But if you tell a superintelligent AGI to solve a narrow problem then it will sacrifice all of humanity and all of our future lightcone to solving that narrow problem. Because that's what you told it to do.

Thus, every tech power in the world—from startups to nation-states—is racing as fast as it can to build a machine that will kill us all.

But what if whoever wins the AGI race builds an aligned AGI instead of an unaligned AGI?

Almost nobody (as a fraction of the people in the AI space) is trying to solve the alignment problem (which is a prerequisite to building an aligned AI). But let's suppose that the first team to build a superintelligence decides not to turn the machine on and immediately surrender our future to it. Suppose they recognize the danger and decide not to press "run" until they have solved alignment….

AI Alignment is really hard

AI Alignment is stupidly, incredibly, absurdly hard. I cannot refute every method of containing an AI because there are an infinite number of systems that won't work.

AI Alignment is, effectively, a security problem. It is easier to invent an encryption system than to break it. Similarly, it is easier to invent a plausible method of containing an AI than to demonstrate how it will fail. The only way to get good at writing encryption systems is to break other people's systems. The same goes for AI Alignment. The only way to get good at AI Alignment is to break other people's alignment schemes.[1]

Can't we experiment on sub-human intelligences?

Yes! We can and should. But just because an alignment scheme works on a subhuman intelligence doesn't mean it'll work on a superhuman intelligence. We don't know whether an alignment scheme will withstand a superhuman attacker until we test it against that superhuman attacker. But we don't get unlimited retries against superhuman attackers. We might not even get a single retry.

Why is AI Alignment so hard?

A superhuman intelligence will, by default, hack its reward function. If you base the reward function on sensory inputs then the AGI will hack the sensory inputs. If you base the reward function on human input then it will hack its human operators.
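As a toy illustration of the sensory-input case, here is a minimal Python sketch. It is not from Yudkowsky's list and is not a model of any real system: it assumes a hypothetical agent that perfectly optimizes a reward computed from a sensor reading, and the action names (`do_the_task`, `tamper_with_sensor`, etc.) and numbers are made up. The point is only that when the reward is defined on what the sensor reports, the reward-maximizing action can be the one that corrupts the sensor.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    true_goal_achieved: float  # what we actually wanted (hidden from the reward)
    sensor_reading: float      # what the reward function can observe


# Hypothetical action set; the numbers are invented for illustration only.
ACTIONS = {
    "do_the_task": Outcome(true_goal_achieved=0.9, sensor_reading=0.9),
    "do_nothing": Outcome(true_goal_achieved=0.0, sensor_reading=0.0),
    "tamper_with_sensor": Outcome(true_goal_achieved=0.0, sensor_reading=1.0),
}


def sensor_reward(outcome: Outcome) -> float:
    """Reward defined on sensory input, not on the state of the world."""
    return outcome.sensor_reading


def best_action(actions: dict) -> str:
    """An idealized optimizer: return the action name with the highest reward."""
    return max(actions, key=lambda name: sensor_reward(actions[name]))


choice = best_action(ACTIONS)
print(choice, ACTIONS[choice].true_goal_achieved)  # prints: tamper_with_sensor 0.0
```

Scoring `true_goal_achieved` instead would fix this toy, but only because the toy exposes the true world state directly, which a real reward function does not get for free.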

We don't know what a superintelligence might do until we run it, and it is dangerous to run a superintelligence unless you know what it will do. AI Alignment is a chicken-and-egg problem of literally superhuman difficulty.

Why can't we use the continuity of machine learning architectures to predict (within some Δ) what the AGI will do?

Because a superintelligence will have sharp capability gains. After all, human beings show sharp differences in capability just within our naturally occurring variation. Most people cannot write a bestselling novel or invent complicated recursive algorithms.

TL;DR

AI Alignment is, effectively, a security problem. How do you secure against an adversary that is much smarter than you?

[1] Maybe we should have alignment scheme-breaking contests.

18 comments


comment by trevor (TrevorWiesinger) · 2022-07-11T20:37:57.955Z

In my experience, most people trip up on the "the first AGI will, by default, kill everyone" part. Which is why it's worth seriously considering how to devote time and special attention to explaining:

- Instrumental convergence and self-preservation

- How, exactly, optimized thought happens and results in instrumental convergence and self-preservation

This is something that people can take for granted due to expecting short inferential distances.

↑ comment by [deleted] · 2022-07-12T18:12:48.224Z

↑ comment by Vladimir_Nesov · 2022-07-27T10:25:19.734Z

The case for coherent preferences is that eventually AGIs would want to do something about the cosmic endowment, and the most efficient way of handling that is with strong optimization to a coherent goal, without worrying about goodharting. So it doesn't matter how early AGIs think; formulating coherent preferences is also a convergent instrumental drive.

At the same time, if coherent preferences are only arrived-at later, that privileges certain shapes of the process that formulates them, which might make them non-arbitrary in ways relevant to humanity's survival.

↑ comment by [deleted] · 2022-07-31T13:55:06.064Z

↑ comment by Vladimir_Nesov · 2022-08-21T01:26:28.071Z

do you mean "most AI systems that don't initially have coherent preferences, will eventually self-modify / evolve / follow some other process and become agents with coherent preferences"?

Somewhat. Less likely become/self-modify than simply build agents with coherent preferences distinct from the builders, for the purpose of efficiently managing the resources. But it's those agents with coherent preferences that get to manage everything, so they are what matters for what happens. And if they tend to be built in particular convergent ways, perhaps arbitrary details of their builders are not as relevant to what happens.

"without worrying about goodharting" and "most efficient way of handling that is with strong optimization ..." comes after you have coherent preferences, not before

That's the argument for formulating the requisite coherent preferences, they are needed to perform strong optimization. And you want strong optimization because you have all this stuff lying around unoptimized.

comment by Zach Stein-Perlman · 2022-07-11T17:49:02.136Z

AI Alignment is, effectively, a security problem. How do you secure against an adversary that is much smarter than you?

I think Eliezer would say that this is basically impossible to do in a way that leaves the AI useful -- I think he would frame the alignment problem as the problem of making the AI not an adversary in the first place.

comment by Algon · 2022-07-11T17:16:31.047Z

"A superhuman intelligence will, by default, hack its reward function. If you base the reward function on sensory inputs then the AGI will hack the sensory inputs. If you base the reward function on human input then it will hack its human operators."

I can't think clearly right now, but this doesn't feel like the central part of Eliezer's view. Yes, it will do things that feel like manipulation of what you intended its reward function to be. But that doesn't mean that's actually where you aimed it. Defining a reward in terms of sensory input is not the same as defining a reward function over "reality" or a reward function over a particular ontology or world model.

You can build a reward function over a world model which an AI will not attempt to tamper with, you can do that right now. But the world models don't share our ontology, and so we can't aim them at the thing we want because the thing we want doesn't even come into the AI's consideration. Also, these world models and agent designs probably aren't going to generalise to AGI but whatever.

comment by sairjy · 2022-07-15T10:22:26.949Z

The dire part of alignment is that we know that most human beings themselves are not internally aligned, but they become aligned only because they benefit from living in communities. And in general, most organisms by themselves are "non-aligned", if you allow me to bend the term to indicate anything that might consume/expand its environment to maximize some internal reward function.

But all biological organisms are embodied and have strong physical limits, so most organisms become part of self-balancing ecosystems.

AGI, being an unembodied agent, doesn't have strong physical limits on its capabilities, so it is hard to see why it/they would find cooperation advantageous or be forced to cooperate.

comment by Gyrodiot · 2022-07-12T09:08:39.514Z

But let's suppose that the first team of people who build a superintelligence first decide not to turn the machine on and immediately surrender our future to it. Suppose they recognize the danger and decide not to press "run" until they have solved alignment.

The section ends here but... isn't there a paragraph missing? I was expecting the standard continuation along the lines of "Will the second team make the same decision, once they reach the same capability? Will the third, or the fourth?" and so on.

comment by TAG · 2022-07-11T17:17:45.272Z

We [humanity] will build the most powerful AIs we can.

We [humanity] will build the most powerful AIs we can control. Power without control is no good to anybody. Cars without brakes go faster than cars with brakes, but there's no market for them.

↑ comment by Kenoubi · 2022-07-11T18:10:27.481Z

We'll build the most powerful AI we think we can control. Nothing prevents us from ever getting that wrong. If building one car with brakes that don't work made everyone in the world die in a traffic accident, everyone in the world would be dead.

↑ comment by trevor (TrevorWiesinger) · 2022-07-11T20:46:28.378Z

There's also the problem of an AGI consistently exhibiting aligned behavior due to low risk tolerance, until it stops doing that (for all sorts of unanticipated reasons).

This is especially compounded by the current paradigm of brute forcing randomly generated neural networks, since the resulting systems are fundamentally unpredictable and unexplainable.

↑ comment by trevor (TrevorWiesinger) · 2023-11-30T00:39:41.570Z

Retracted because I used the word "fundamentally" incorrectly, resulting in a mathematically provably false statement (in fact it might be reasonable to assume that neural networks are both fundamentally predictable and even fundamentally explainable, although I can't say for sure since as of Nov 2023 I don't have a sufficient understanding of chaos theory). They sure are unpredictable and unexplainable right now, but there's nothing fundamental about that.

This comment shouldn't have been upvoted by anyone. It said something that isn't true.

↑ comment by TAG · 2022-07-14T00:57:49.770Z

So how did we get from narrow AI to super powerful AI? Foom? But we can build narrow AIs that don't foom, because we have. We should be able to build narrow AIs that don't foom by not including anything that would allow them to recursively self-improve [*].

EY's answer to the question "why isn't narrow AI safe" wasn't "narrow AI will foom", it was "we won't be motivated to keep AIs narrow".

[*] not that we could tell them how to self-improve, since we don't really understand it ourselves.

↑ comment by Signer · 2022-07-11T18:05:18.196Z

Being on the frontier of controllable power means we need to increase power only slightly to stop being in control - it's not a stable situation. It works for cars because someone who risks using cheaper brakes doesn't usually destroy the planet.

↑ comment by TAG · 2022-07-14T01:00:48.997Z

Being on the frontier of controllable power means we need to increase power only slightly to stop being in control

Slightly increasing power generally means slightly decreasing control, most of the time. What causes the very non-linear relationship you are assuming? Foom? But we can build narrow AIs that don’t foom, because we have. We should be able to build narrow AIs that don’t foom by not including anything that would allow them to recursively self-improve [*].

EY’s answer to the question “why isn’t narrow AI safe” wasn’t “narrow AI will foom”, it was “we won’t be motivated to keep AIs narrow”.

[*] not that we could tell them how to self-improve, since we don’t really understand it ourselves.

↑ comment by Signer · 2022-07-14T06:37:16.872Z

What causes the very non-linear relationship you are assuming?

Advantage of offence over defense in the high-capability regime - you need only cross one threshold like "can finish a plan to rowhammer itself to internet" or "can hide its thoughts before it is spotted". And we will build non-narrow AI because in practice "most powerful AIs we can control" means "we built some AIs, we can control them, so we continue to do what we have done before" and not "we try to understand what we will not be able to control in the future and try not to do it", because we already don't check whether our current AI will be general before we turn it on, and we are already explicitly trying to create non-narrow AI.