Why it's bad to kill Grandma

post by dynomight · 2022-06-09T18:12:01.131Z · LW · GW · 14 comments

This is a link post for https://dynomight.substack.com/p/grandma

1.

In college, I had a friend who was into debate competitions. One weekend, the debate club funded him to go to a nearby city for a tournament. When I asked him how it went, he said:

Oh, I didn’t have enough time to prepare, so I just skipped the tournament and partied with some friends who live there.

I probably looked a little shocked—I was very idealistic in those days—so he reminded me of our earlier conversations about consequentialism:

This was the right thing to do. There was no way I could participate in the tournament, and they’d already paid for everything. This way I’m happy and they’re happy. Everyone wins!

I got a bit squinty-eyed, but I couldn’t see a flaw. We both agreed it was wrong that he didn’t prepare. But once that was done, was there nothing wrong with what he did, if consequences are all that matter?

2.

Recently, Arjun Panickssery wrote Just Say No to Utilitarianism, channeling ideas from Bryan Caplan, Dan Moller, and Fake Nous. These articles build on thought experiments like this one from Moller:

Grandma is a kindly soul who has saved up tens of thousands of dollars in cash over the years. One fine day you see her stashing it away under her mattress, and come to think that with just a little nudge you could cause her to fall and most probably die. You could then take her money, which others don’t know about, and redistribute it to those more worthy, saving many lives in the process. No one will ever know. Left to her own devices, Grandma would probably live a few more years, and her money would be discovered by her unworthy heirs who would blow it on fancy cars and vacations. Liberated from primitive deontic impulses by a recent college philosophy course, you silently say your goodbyes and prepare to send Grandma into the beyond.

Or here’s a classic, as phrased by Fake Nous:

Say you’re a surgeon. You have 5 patients who need organ transplants, plus 1 healthy patient who is compatible with the other 5. Should you murder the healthy patient so you can distribute his organs, thus saving 5 lives?

The argument is that in some situations, utilitarianism leads to crazy conclusions. Since no decent person would kill grandma, utilitarianism must be wrong.

I’d like to explain why I think this critique of utilitarianism is mistaken.

3.

To step back, what’s the point of ethics?

As far as I know, we live in a universe that’s indifferent to us. Fairness and justice don’t “really” exist, in the sense that you can’t derive them from the Schrödinger equation or whatever. They’re instincts that evolution programmed into us.

But then, when I think about a child suffering, I don’t care about any of that. Whatever the reason is that I think that’s wrong, I do, and it’s not up for debate.

So, while I can see intellectually that right and wrong are just adaptations, I really do believe in them.

4.

Err, so what’s the point of ethics?

If moral instincts are heuristics that evolution baked into us, shouldn’t we expect them to be messy and arbitrary and maybe even inconsistent? Why would we look for a formal ethical system?

My answer is: Because it’s practically useful to do so.

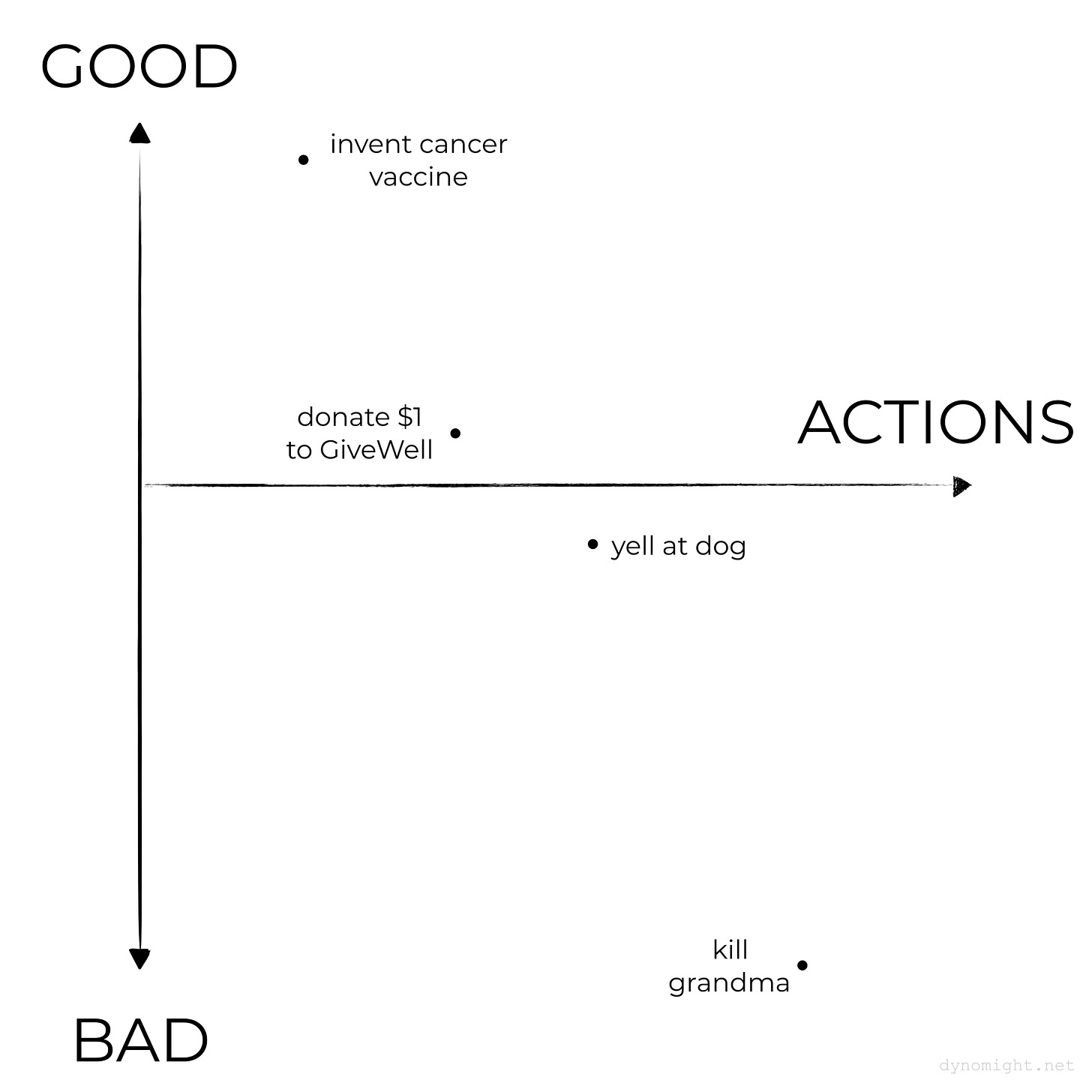

Imagine a space of all possible actions, where nearby actions are similar. And imagine you have a “moral score” for how good you think each action is. Then you can imagine your moral instincts as a graph like this:

Here the x-axis is the space of all possible actions. (This is high-dimensional, but you get the idea.) The y-axis is how good you think each action is.

Now, you can picture an ethical system as a function that gives a score to each possible action. Here’s one system:

Here’s another one:

Notice something: Even though ETHICAL SYSTEM #2 fits your moral instincts a bit better, it might not seem quite as trustworthy—it’s too wiggly.
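To make the "wiggly" worry concrete, here is a minimal sketch, assuming a toy one-dimensional action space. Everything in it is made up for illustration: the six actions, the underlying trend (2x + 1), the noise, and the function names. The simple system is a least-squares line; the wiggly system is an interpolating polynomial that passes through every instinct exactly.

```python
# Toy data: six "actions" on a line, each with an instinctive moral score.
# The scores follow a made-up trend (2x + 1) plus fixed, made-up noise.
ACTIONS = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
NOISE = [0.5, -0.7, 0.6, -0.4, 0.8, -0.6]
INSTINCTS = [2 * x + 1 + e for x, e in zip(ACTIONS, NOISE)]


def fit_simple(xs, ys):
    """Least-squares straight line: a parsimonious ethical system."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def fit_wiggly(xs, ys):
    """Lagrange interpolation: matches every instinct exactly."""
    def system(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return system


def mean_abs_error(system, xs, ys):
    """Average disagreement between a system and the instincts."""
    return sum(abs(system(x) - y) for x, y in zip(xs, ys)) / len(xs)


simple = fit_simple(ACTIONS, INSTINCTS)
wiggly = fit_wiggly(ACTIONS, INSTINCTS)

print("fit error, simple:", mean_abs_error(simple, ACTIONS, INSTINCTS))
print("fit error, wiggly:", mean_abs_error(wiggly, ACTIONS, INSTINCTS))
# An unfamiliar action outside the range the instincts cover:
print("simple system at x=7:", simple(7.0))
print("wiggly system at x=7:", wiggly(7.0))
```

In this toy setup the wiggly system agrees with the instincts perfectly, but at an unfamiliar action (x = 7) it lands much further from the underlying trend than the straight line does. That is only an analogy, of course, since real moral instincts don't come with a known trend to check against.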

5.

I claim that an ethical system is useful when

it mostly agrees with your moral instincts, and

it’s simple/parsimonious.

Why?

First, it’s useful for extrapolation. I have strong instincts about killing grandma, but I’m less sure about the moral status of animals, or our obligation to future generations. When we have to make choices about these things, it’s sensible to rely on a simple system that fits with the things we’re sure about.

Second, it’s useful for conflict resolution. Say I claim it’s unconscionable to eat Indian food with chopsticks, but you think it’s fine. If we agree on an ethical system, maybe we can argue about our reasoning. But if I just scream, “this is my irreducible moral instinct!”, good luck.

Third, it’s useful for future-proofing. The ancient Spartans thought that:

Killing Spartans is really bad.

Killing other free Greeks is bad.

Killing helots is bad-ish—except if it’s autumn, in which case no worries.

The Spartans, of course, didn’t see themselves as evil. They “declared war” on the helots each year, so killing them was totally not-murder. And anyway, if the helots didn’t want to spend their lives being humiliated and terrorized they shouldn’t have allowed their ancestors to lose a war to the ancestors of the Spartans.

The point is, if the Spartans had looked harder, they might have noticed that their behavior seemed to require a lot of ad-hoc justifications. Their ethical system was quite “wiggly”.

6.

The anti-utilitarian crew is right about many things.

You shouldn’t kill grandma.

The reason you shouldn’t kill grandma is that it’s obviously bad to kill grandma. Our moral instincts are the ultimate foundation for ethics.

Few professed utilitarians would kill grandma.

It’s a good exercise to check an ethical system against different situations and look for contradictions.

The utilitarians that “bite the bullet” and accept that it’s right to kill grandma are getting things backward—that’s starting with a rigid ethical system and then imposing it in a situation where you already have strong instincts. What we want to do is take our instincts and then find an ethical system that’s consistent with them.

If utilitarianism tells you to kill grandma, that’s a big strike against utilitarianism.

But does it tell you to kill grandma?

7.

In Economics in One Lesson, Henry Hazlitt says:

The art of economics consists in looking not merely at the immediate but at the longer effects of any act or policy; it consists in tracing the consequences of that policy not merely for one group but for all groups.

Can you tax people to create jobs? Sure, Hazlitt says, but then the people you taxed won’t be able to spend that money and so jobs will be lost elsewhere. Does rent control hold down rents? Of course, Hazlitt says, but it also decreases the production of new housing and increases rents for non-controlled apartments.

(There you go, now you know economics.)

Thinking this way, it’s easy to see the flaw with my friend’s justification for skipping the debate tournament: The people who ran the club could have discovered what happened, in which case they’d stop paying for trips or add new annoying processes to verify that people did as they said. (Also, I wonder if he thought about the effects of telling me what he’d done, in terms of how much I’d trust him in the future?)

Now, the grandma example is constructed to try to avoid those kinds of effects. We postulate that no one will know you killed grandma, and we postulate that her money will save multiple lives.

You could argue about how plausible those assumptions are, but I won’t because I believe in not fighting the hypothetical. Even if it’s unlikely, the situation could arise, and it would be lame to refuse it.

8.

The problem with killing grandma is that nobody wants to live in a world where you kill grandma.

Imagine Utilitopia, a country filled with perfect consequentialists. Any time someone calculates that your organs could generate more utility if they were inside someone else, they are obligated to sneak into your house at night and reallocate your organs for better uses.

Might the citizens of Utilitopia be a touch nervous about the fact that they or their loved ones could die at any time?

Now, imagine a ballot initiative to ban involuntary organ harvesting. Of course, the ban would greatly harm some people who need organs. But it would also have momentous benefits for everyone who no longer needs to live in constant fear. It would surely be positive on net.

9.

You might object that I’m cheating. My argument is sort of like this:

PEOPLE LIKE COMMONSENSE MORALITY

↓

COMMONSENSE MORALITY CREATES UTILITY

↓

COMMONSENSE MORALITY IS UTILITARIANISM, Q.E.D.

Once you’ve made such dramatic concessions, are we still talking about “real” utilitarianism?

Well, it’s always sort of futile to argue about semantics. But how can you not take account of higher-order consequences? People’s horror at killing grandma is a real thing. Trying to give people what they want is literally the definition of utilitarianism.

(And no, it’s not true that utilitarians shouldn’t care about dying, any more than they shouldn’t care about good food or comfortable chairs. Utilitarianism is about meeting people’s needs, not prescribing what those needs are supposed to be.)

10.

Utilitarianism also needs to be tempered in many other ways, like game theory, and the computational complexity of choosing optimal actions.

In practice, commonsense morality is an OK-ish utilitarianism.

Our moral instincts are extremely battle-hardened heuristics. They “know” about all these higher-order issues.

Of course, our instincts are tuned to maximize our descendants, not to maximize total utility. But we may be a bit lucky. We evolved in small tribal bands. In that environment, it could be in your self-interest to risk your life for someone else, since the tribe will reward you with reputation. And it’s against your interest to lie and screw people over since the tribe will punish you with gossip.

The game theory is different in an anonymous megacity. But we’re still mostly operating with our old instincts.

11.

To summarize, I think moral instincts are the starting point, and the goal of ethics is to generalize from them. However, many of the examples people give where utilitarianism “fails” use weak edgy philosophy 101 reasoning. Once you account for higher-order effects, utilitarianism fits reasonably well with commonsense morality.

Many of the other thought experiments fail for similar reasons. Here’s one from Fake Nous:

On his death-bed, your best friend (who didn’t make a will) got you to promise that you would make sure his fortune went to his son. You can do this by telling government officials that this was his dying wish. Should you lie and say that his dying wish was for his fortune to go to charity, since this will do more good?

Response: If people routinely break promises, who will trust those promises?

And here’s another:

You have a tasty cookie that will produce harmless pleasure with no other effects. You can give it to either serial killer Ted Bundy, or the saintly Mother Teresa. Bundy enjoys cookies slightly more than Teresa. Should you therefore give it to Bundy?

Response: Maybe there’s some benefit to knowing that if you kill lots of people, everyone will stop being nice to you?

12.

Technically, I haven’t shown that utilitarianism gives the “right” answer in any of these cases, just that there are extra terms that weren’t being accounted for. That’s fair, but I don’t think I have that burden of proof—I’m just saying that these thought experiments don’t disprove utilitarianism.

You might object that my vision of utilitarianism is so complicated that it’s impossible to apply it. I worry about that! If you don’t like utilitarianism, I suggest it as a strong line of attack. But probably “don’t do anything outlandishly ghoulish unless you’re really sure” can be justified as a good utilitarian rule of thumb.

Finally, you might object that just killing grandma one time won’t make it a routine practice, or that just lying to one person on their deathbed won’t destroy trust.

This last objection is similar to the situations that Derek Parfit worries about: Perhaps it is pointless to donate a liter of water to be divided among 1000 thirsty people since no one can sense the difference of 1 mL? I think this is silly. If enough people donated water, there would clearly be an effect. So unless there’s some weird phase change or something, there’s got to be an effect on the margin.
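Here is a minimal sketch of that marginal argument, under stated assumptions. The `relief` curve is hypothetical (any smooth, increasing function would do), and the names and the 500-donor figure are made up for illustration. The point is only that the per-donor effects must add up to the total effect, so they can't all be zero.

```python
THIRSTY_PEOPLE = 1000
ML_PER_DONATION = 1000  # each donor gives one liter


def relief(ml_per_person):
    """Hypothetical relief felt by one person: smooth and saturating."""
    return ml_per_person / (ml_per_person + 500.0)


def total_relief(donors):
    """Total relief when every donation is split evenly among everyone."""
    ml_each = donors * ML_PER_DONATION / THIRSTY_PEOPLE
    return THIRSTY_PEOPLE * relief(ml_each)


def marginal_relief(donors_before):
    """Extra total relief contributed by one more donor."""
    return total_relief(donors_before + 1) - total_relief(donors_before)


# Contribution of each of the first 500 donors, in order.
marginals = [marginal_relief(n) for n in range(500)]

print("smallest single contribution:", min(marginals))
print("sum of contributions:", sum(marginals))
print("total relief with 500 donors:", total_relief(500))
```

No single person can sense their extra 1 mL, but the contributions telescope: they sum to the total relief. A "weird phase change" would correspond to a `relief` function that is flat almost everywhere and jumps at a threshold, in which case most marginals would be zero and one would be huge.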

13.

With all that said, I don’t think I’ve entirely escaped the need to bite bullets. You could imagine a version of Utilitopia where

people didn’t mind the idea that they or their loved ones could be sacrificed for the greater good at any time, and

people could always correctly calculate when utility would be increased, and

no one would ever abuse this power.

You might need more stipulations, but you get the idea. With enough assumptions, a utilitarian eventually has to accept that it’s right to kill grandma.

Fair enough. But I don’t think that proves utilitarianism is wrong. If we’re playing the “arbitrary contrived thought experiments” game, let me propose one:

Grandma is a kindly soul who after a long and happy life is near the end of her days. One morning, Satan shows up in your bedroom and says, “Hey, just wanted to touch base to let you know that Grandma is going to fall down the stairs and die today. You’re welcome to go prevent that, but if you do, I’ll cause 10% of Earth’s population to have heart attacks and then writhe in agony for ten millennia. Up to you!”

You know that everything Satan says is true. Is it right to let Grandma fall down the stairs?

At some point, you’d have to be crazy not to agree. But, if you’re smart, maybe you shouldn’t admit this? People get happiness from knowing that their loved ones will make irrational—and maybe even immoral (?)—choices to help them. So I suspect we’re dealing with another one of those plans you’re not supposed to talk about.

14 comments

comment by JBlack · 2022-06-10T03:34:52.050Z

If you can conclude that you should kill Grandma to make the world a better place, Grandma too can conclude that she should kill herself to make the world a better place - or better yet, just give the money to charity right now without killing anyone!

But she hasn't done so! This at the very least indicates that she doesn't think those actions would be better. So we have a conflict: agents disagree about which actions have greater utility.

Utilitarianism alone cannot resolve this. It is based on the fiction that there exists some universal utility function that all agents will agree on. It's a useful fiction for thought experiments, but it is a terrible model for reality.

You can't even just apply some aggregating function to the utility functions of individual agents, since utility functions are scale and translation invariant per agent, while aggregation functions are not. The best you can do is limit yourself to some Pareto frontier, since those are scale and translation invariant, but it hardly takes a shining moral principle to say "don't do things that make everybody including yourself worse off".
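(A minimal sketch of the invariance point above, with made-up numbers. The two agents, their utilities, and all function names are hypothetical. Rescaling one agent's utility function leaves that agent's own preferences unchanged, yet flips what a simple sum recommends.)

```python
OUTCOMES = ["keep_money", "donate_money"]

# Hypothetical utilities for two agents over two outcomes.
grandma = {"keep_money": 1.0, "donate_money": 0.0}
stranger = {"keep_money": 0.0, "donate_money": 2.0}


def rescale(utility, scale, shift):
    """Positive affine transform: represents exactly the same preferences."""
    assert scale > 0
    return {outcome: scale * u + shift for outcome, u in utility.items()}


def preferred(utility):
    """The outcome with the highest utility."""
    return max(utility, key=utility.get)


def sum_aggregate(*utilities):
    """Naive aggregation: add up everyone's utility for each outcome."""
    return {o: sum(u[o] for u in utilities) for o in OUTCOMES}


grandma_rescaled = rescale(grandma, scale=10.0, shift=3.0)

# Grandma's own ranking is unchanged by the rescaling...
print(preferred(grandma), preferred(grandma_rescaled))
# ...but the sum's recommendation depends on which representation we used.
print(preferred(sum_aggregate(grandma, stranger)))
print(preferred(sum_aggregate(grandma_rescaled, stranger)))
```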

comment by TAG · 2022-06-09T21:54:31.964Z

You seem to have reinvented some of the arguments for deontology. In particular, this...

The problem with killing grandma is that nobody wants to live in a world where you kill grandma

...is almost Rawls's veil. Well, maybe deontology-flavoured utilitarianism is the best kind. But maybe deontology-flavoured utilitarianism is actually utilitarianism-flavoured deontology.

Replies from: Daniel V

↑ comment by Daniel V · 2022-06-10T01:53:53.766Z

It was at that point I thought, "we've rediscovered Kant's categorical imperative."

Replies from: MSRayne

↑ comment by MSRayne · 2022-06-10T15:21:22.398Z

That's exactly what I came here to comment. But I think all these problems really come from a weird, abstract idea of "welfare" that imo doesn't make any sense. It's the volition of sentient beings that is important. Beings ought to get what they want to the extent that this doesn't interfere with the same right in others. When two (or more) beings cannot both get what they want, they are obligated to try to find an acceptable compromise. (If they are not of equal degrees of intelligence, of course, the burden of ethical behavior is shared unequally - humans are responsible for treating small children or nonhuman animals correctly but they, being unable to understand moral rules, are not responsible for treating us correctly in turn.)

It is simply not reasonable to unilaterally impose one's own desires onto others - it breaks that foundational rule. In principle we should maximize getting-what-they-want-ness across all beings, but to do so in a way that blatantly disregards the right not to get imposed on is obscene.

That is: the right to get what you want and not get what you don't want, is what generalizes to consequentialism over volition - not the other way around. That right is primary and must be respected as much as possible in every single instance. And all these examples disrespect that right in some way. The debate team guy made an agreement which he then broke without renegotiating it first; the people getting killed to help others did not agree that this should occur; etc.

To put it another way: people can do whatever they want as long as they don't break contracts they have negotiated with other people. (In practice not every social contract is actually agreed to - nobody signs a contract saying they won't murder anyone - but that's part of how our world is not currently maximally ethical in its arrangement.) However, what people ought to do (but are not imo obligated to do) is that which, relative to their subjective knowledge, maximizes the total get-what-you-want-ness for everyone conditional upon obeying that first rule.

Replies from: TAG

↑ comment by TAG · 2022-06-10T16:44:51.523Z

You seem to be assuming that everyone is a competent adult.

Replies from: MSRayne

↑ comment by MSRayne · 2022-06-10T19:21:08.291Z

I need a little more explanation about what you're intending to say here. Can you specifically tell me what you think is wrong or unworkable about what I said, and why?

Replies from: TAG

↑ comment by TAG · 2022-06-11T18:13:56.647Z

If an entity has imperfect insight into its own needs and desires, then it can be beneficial to impose on it what it thinks it doesn't want, or to keep it from what it mistakenly thinks it does want. That's generally built into adult-child relations, but adults are not equally omniscient, so the problem does not disappear.

Replies from: MSRayne

↑ comment by MSRayne · 2022-06-12T12:59:48.474Z

If indeed you know of a course of action that would benefit someone more than the course they currently want to go on, you can provide them an incentive to change their mind willingly. A bet would do: "If you try X instead and afterward don't honestly think it's better than the Y you thought you wanted, I'll pay Z in recompense for your troubles." (Of course, I'm skeptical that me-in-the-future has any right to define what was best retroactively for me-in-the-past, due to not actually being exactly the same person, but let's just assume that for now.)

This is totally ethical and does not infringe upon subjective freedom of will. I do not think anyone has the right to force anyone else to change their mind or act against what they believe they want unless their preferred course of action would actually endanger their life (as in the case of a parent picking up their toddler who walks into the road). Even if they're wrong, it's their responsibility to be wrong and learn from it, not be saved from their own not-yet-made mistakes.

I haven't yet decided if interfering with intentional suicide is ethical or not. (My suspicion is that suicide is immoral, as it is murder of all one's possible future selves who would not, were they present now, consent to being prevented from existing, meaning that preventing suicide is likely an acceptable tradeoff protecting their rights while infringing upon those of the suicidal person. But it will take more thought.)

To me it seems that the individual is always the arbiter of what is best for them. Only that individual - not anyone else, not even an AI modeling their mind. Of course, a sufficiently powerful AI would easily be able to convince them to desire different things using that mind model, but the extrapolated volition is nonetheless not legitimate until willingly accepted by the person - the AI does not have the right to implement it independently without consent. (And I, personally, would not give blanket consent for an AI to manage my affairs.)

Hmm. That suicide example does present a way in which your view here could be interpreted as true within my framework, now that I think about it. But since I don't consider entities to be identical to past or future versions of themselves, it sounded very wrong to me. Nobody can be wrong about what they want right now. But people can be mistaken about what future versions of themselves would have wanted them to do right now, due to lack of knowledge about the future, and inasmuch as you consider yourself, though not identical to them, to be continuous with them (the same person "in essence"), you ought to take their desires into account - and since you can be mistaken about that, others who can prove they know better about that matter have the right to interfere on their behalf... but only those future selves have the right to say whether that interference was legitimate or not. Hence the bet I described at the beginning. Interesting! Thanks for the opportunity to think about this.

comment by burmesetheater (burmesetheaterwide) · 2022-06-09T23:48:22.021Z

There seems to be a deep problem underlying these claims: even if humans have loosely aligned intuition about what's right and wrong, which isn't at all clear, why would we trust something just because it feels obvious? We make mistakes on this basis all the time and there are plenty of contradictory notions of what is obviously correct--religion, anyone?

Further, if grandma is in such a poor state that simply nudging her would kill her AND the perpetrator is such a divergent individual that they would then use the recovered funds to improve others' lives (which might have many positive years still available, if such a concept is possible or meaningful) then one might argue that it seems a poor conclusion to NOT kill grandma if one's concern is for the welfare of others. One could also simply steal grandma's money, since this is probably easier to get away with than murder, but then you would be leaving an ethical optimization on the table by not ending grandma's life, which as hinted at earlier is probably in the negative side of qualitative equity.

Replies from: rdevinbog

↑ comment by rdb (rdevinbog) · 2022-06-10T16:09:39.931Z

It doesn't seem to me that obviousness is proof enough that an intuition is good, but something appearing "obvious" in your brain might be a marker for, or enrich for, beliefs that have been selected for in social environments.

There are certainly times when it's good to break universal maxims. Yet I don't think it's very easy to be a person who is capable of doing that -- the divergent individual you're talking about. Let's take lying, for example. It is generally good to be honest. There are times when it is really net good and useful to lie. But if you're someone who becomes very good at lying and habituated to lie, you probably start overriding your instincts to be honest. Maybe a divergent individual who says "fuck off" to all internal signals and logically calculates out the results of every decision could get away with that. But I think those people really run the risk of losing out on information baked into the heuristics.

Similarly, I don't think those divergent people are really optimal actors, in the long-run. There are certainly times when it's good to break universal maxims; but even for you, as an individual, it's probably not good to do it all the time. If you get known as a grandma-pusher, you're going to be punished, which is probably net good for society, but you also reduced your ability to add resources to the game. Human interaction is an iterated game, and there are no rings of Gyges.

comment by Richard_Kennaway · 2022-06-10T06:10:57.409Z

You know that everything Satan says is true. Is it right to let Grandma fall down the stairs?

At some point, you’d have to be crazy not to agree.

Get rid of Satan instead.

At some point, you'd have to be crazy not to question the hypothetical.

For me that point is "from the start". I may find it acceptable to go along with it in this case or that, but I'll always consider the issue.

comment by Dagon · 2022-06-10T17:34:43.407Z

This runs square into the fundamental Utilitarian problem that moral valuation of individuals is nontrivial and nonlinear. In most of our intuitions, it's also personal - I value my grandma more than I value yours. For any reasonable objective measure of aggregate utility, it's absolutely right to distribute grandma's resources more usefully (whether you kill her or just keep her barely alive is a related but separate question). And it's right to distribute YOUR resources more evenly, if you believe in declining marginal utility and individual equality of utility-weight.

You need to bite one of two bullets: either your intuitions are very wrong about grandma, and you should kill her, or your intuitions are very wrong about the relative moral weight of your family compared to distant strangers.

This becomes even more clear if you specify that the money you'll steal from grandma is going to OTHER grandmas, who are near-starvation. If you don't have a theory for why THIS grandma is more valuable than the others, then you don't have a consistent moral system.

↑ comment by gbear605 · 2022-06-10T22:14:40.206Z

I suspect most people that would say that they wouldn't kill Grandma would also say the same about a situation where they can kill someone else's grandma to give the money to their own family. Actually, in the hypothetical, you're not one of Grandma's heirs, so I interpreted it as if you're some random person who happens to be around Grandma, not one of her actual grandchildren.

So really, I think that it is either something like "the moral weight of the person next to me versus distant strangers" or "choosing to kill someone is fundamentally different than choosing to save someone's life and you can't add them up".

comment by M. Y. Zuo · 2022-06-11T00:21:02.620Z

This was the right thing to do. There was no way I could participate in the tournament, and they’d already paid for everything. This way I’m happy and they’re happy. Everyone wins!

I got a bit squinty-eyed, but I couldn’t see a flaw. We both agreed it was wrong that he didn’t prepare. But once that was done, was there nothing wrong with what he did, if consequences are all that matter?

Your friend’s actions seem consequential for future debate club decisions regarding funding travel for other members. It’s not even clear if his personal benefits outweighed the higher trust and reputation he would have enjoyed among the debate club members had he put in even a mediocre effort.

It’s hard to imagine anyone perceiving a net benefit to his actions if taking the long term view of a few years or more.

This doesn’t seem like the same class of examples as having to decide on grandma’s fate, where some might.