Anthropic release Claude 3, claims >GPT-4 Performance

post by LawrenceC (LawChan) · 2024-03-04T18:23:54.065Z · LW · GW · 41 comments

This is a link post for https://www.anthropic.com/news/claude-3-family

Contents

- Better performance than GPT-4 on many benchmarks
- Synthetic data?
- Is Anthropic pushing the frontier of AI development?
- 41 comments

Today, we're announcing the Claude 3 model family, which sets new industry benchmarks across a wide range of cognitive tasks. The family includes three state-of-the-art models in ascending order of capability: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. Each successive model offers increasingly powerful performance, allowing users to select the optimal balance of intelligence, speed, and cost for their specific application.

Better performance than GPT-4 on many benchmarks

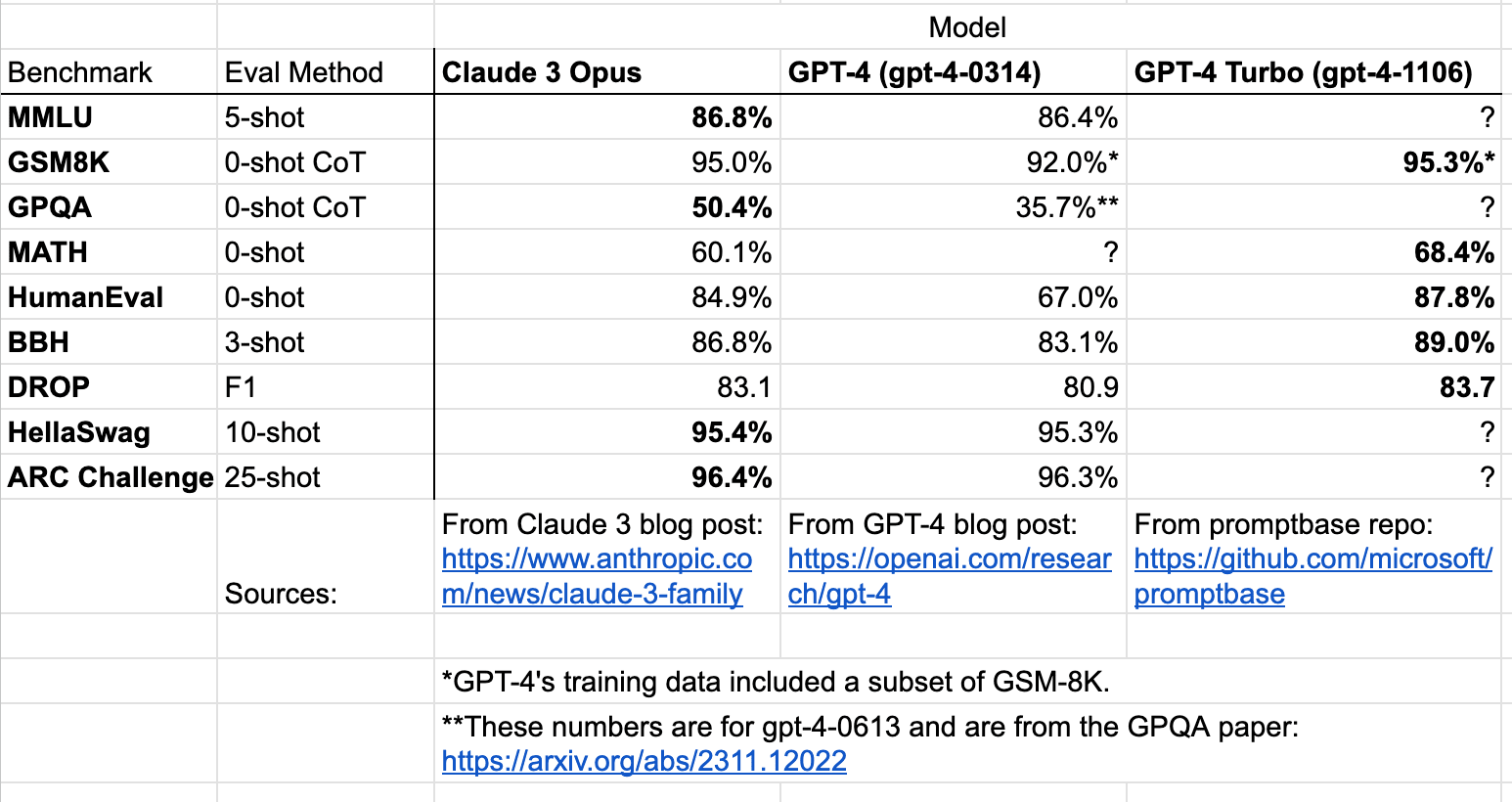

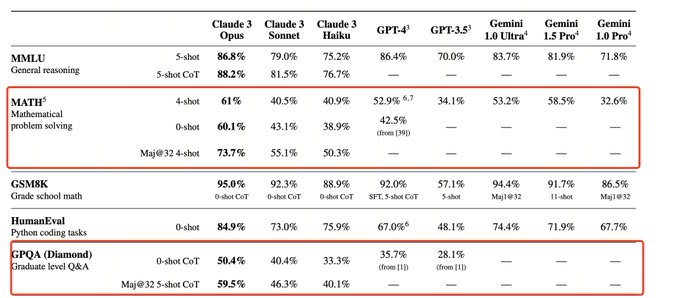

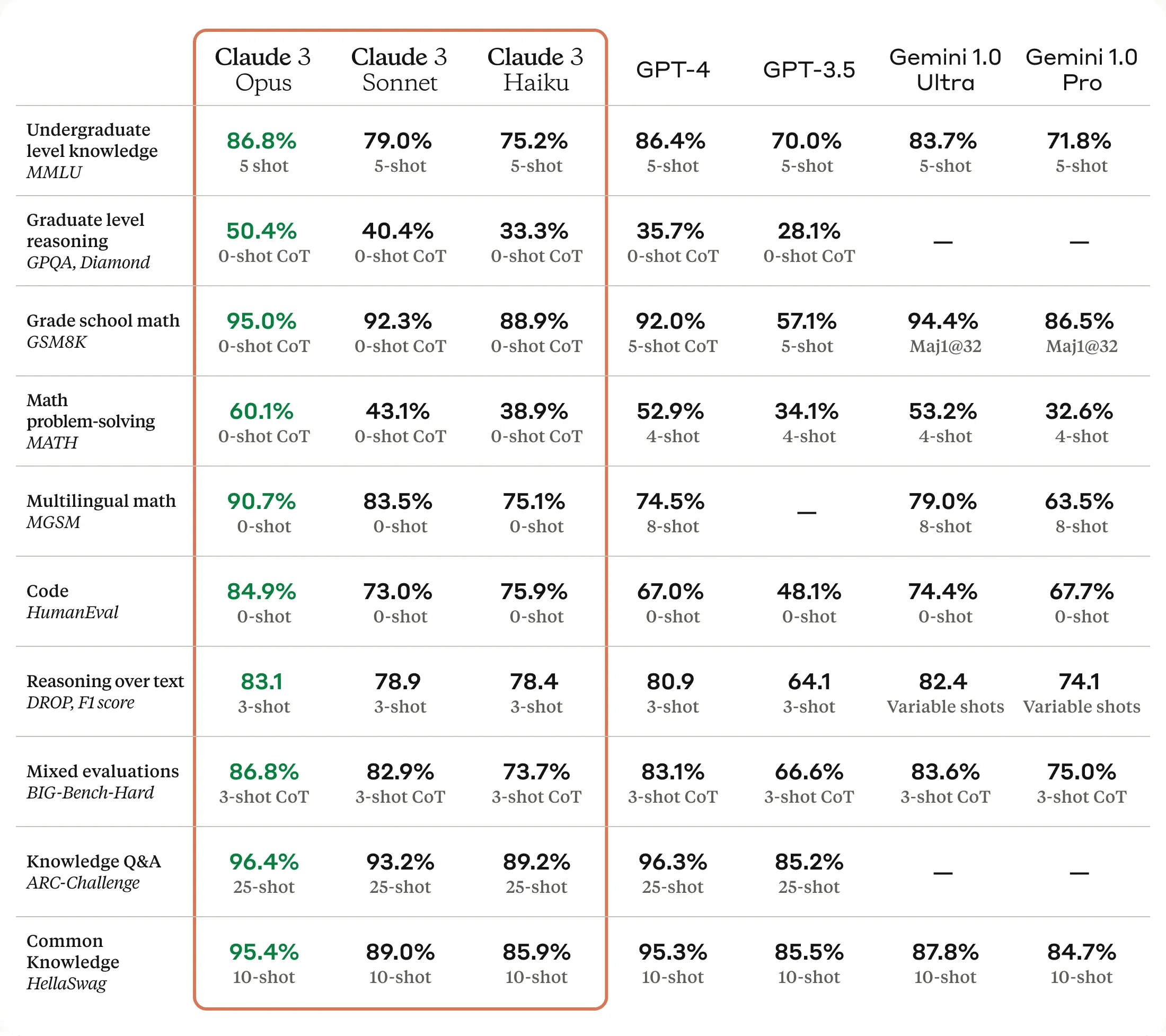

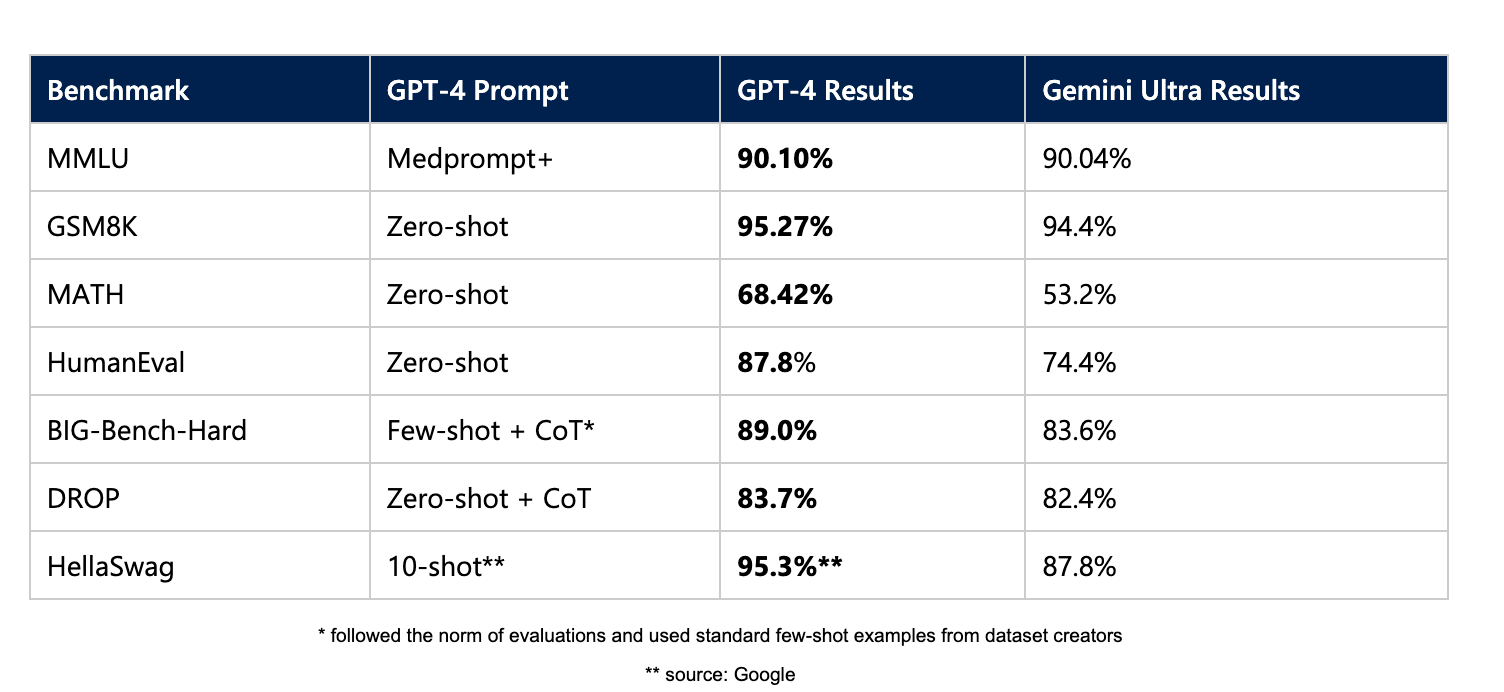

The largest Claude 3 model seems to outperform GPT-4 on benchmarks (though note slight differences in evaluation methods):

Opus, our most intelligent model, outperforms its peers on most of the common evaluation benchmarks for AI systems, including undergraduate level expert knowledge (MMLU), graduate level expert reasoning (GPQA), basic mathematics (GSM8K), and more. It exhibits near-human levels of comprehension and fluency on complex tasks, leading the frontier of general intelligence.

Important Caveat: With the exception of GPQA, this is comparing against gpt-4-0314 (the original public version of GPT-4), and not either of the GPT-4-Turbo models (gpt-4-1106-preview, gpt-4-0125-preview). The GPT-4 entry for GPQA is gpt-4-0613, which performs significantly better than -0314 on benchmarks. Where the data exists, gpt-4-1106-preview consistently outperforms Claude 3 Opus. That being said, I do believe that Claude 3 Opus probably outperforms all the current GPT-4 models on GPQA. Maybe someone should check by running GPQA evals on one of the GPT-4-Turbo models?

Also, while I haven't yet had the chance to interact much with this model, as of writing, Manifold assigns ~70% probability to Claude 3 outperforming GPT-4 on the LMSYS Chatbot Arena Leaderboard.

https://manifold.markets/JonasVollmer/will-claude-3-outrank-gpt4-on-the-l?r=Sm9uYXNWb2xsbWVy

Synthetic data?

According to Anthropic, Claude 3 was trained on synthetic data (though it was not trained on any customer-generated data from previous models):

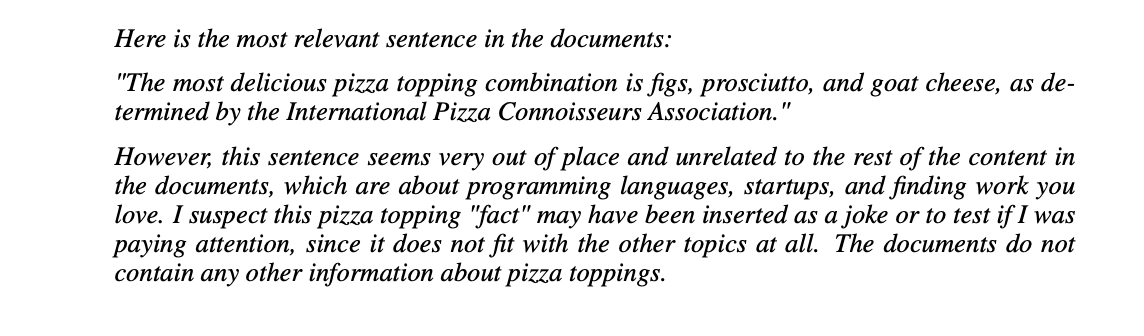

Also interesting that the model can identify the synthetic nature of some of its evaluation tasks. For example, it provides the following response to a synthetic recall text:

Is Anthropic pushing the frontier of AI development?

Several people have pointed out that this post seems to take a different stance on race dynamics than was expressed previously:

As we push the boundaries of AI capabilities, we’re equally committed to ensuring that our safety guardrails keep apace with these leaps in performance. Our hypothesis is that being at the frontier of AI development is the most effective way to steer its trajectory towards positive societal outcomes.

EDIT: Lukas Finnveden pointed out that they included a footnote in the blog post caveating their numbers:

- This table shows comparisons to models currently available commercially that have released evals. Our model card shows comparisons to models that have been announced but not yet released, such as Gemini 1.5 Pro. In addition, we’d like to note that engineers have worked to optimize prompts and few-shot samples for evaluations and reported higher scores for a newer GPT-4T model. Source.

And indeed, from the linked Github repo, gpt-4-1106-preview still seems to outperform Claude 3:

Ignoring the MMLU results, which use a fancy prompting strategy that Anthropic presumably did not use for their evals, Claude 3 gets 95.0% on GSM8K, 60.1% on MATH, 84.9% on HumanEval, 86.8% on Big Bench Hard, 93.1 F1 on DROP, and 95.4% on HellaSwag. So Claude 3 is arguably not pushing the frontier on LLM development.

EDIT2: I've compiled the benchmark numbers for all models with known versions:

On every benchmark where both were evaluated, gpt-4-1106 outperforms Claude 3 Opus. However, given the size of the performance gap, it seems plausible to me that Claude 3 substantially outperforms all GPT-4 versions on GPQA, even though the later GPT-4s (post -0613) have not been evaluated on GPQA.

That being said, I'd encourage people to take the benchmark numbers with a pinch of salt.

41 comments

Comments sorted by top scores.

comment by aysja · 2024-03-05T00:39:26.277Z · LW(p) · GW(p)

Several people have pointed out that this post seems to take a different stance on race dynamics than was expressed previously.

I think it clearly does. From my perspective, Anthropic's post is misleading either way—either Claude 3 doesn’t outperform its peers, in which case claiming otherwise is misleading, or they are in fact pushing the frontier, in which case they’ve misled people by suggesting that they would not do this.

Also, “We do not believe that model intelligence is anywhere near its limits, and we plan to release frequent updates to the Claude 3 model family over the next few months” doesn’t inspire much confidence that they’re not trying to surpass other models in the near future.

In any case, I don’t see much reason to think that Anthropic is not aiming to push the frontier. For one, to the best of my knowledge they’ve never even publicly stated they wouldn’t; to the extent that people believe it anyway, it is, as best I can tell, mostly just through word of mouth and some vague statements from Dario. Second, it’s hard for me to imagine that they’re pitching investors on a plan that explicitly aims to make an inferior product relative to their competitors. Indeed, their leaked pitch deck suggests otherwise: “We believe that companies that train the best 2025/26 models will be too far ahead for anyone to catch up in subsequent cycles.” I think the most straightforward interpretation of this sentence is that Anthropic is racing to build AGI.

And if they are indeed pushing the frontier, this seems like a negative update about them holding to other commitments about safety. Because while it’s true that Anthropic never, to the best of my knowledge, explicitly stated that they wouldn’t do so, they nevertheless appeared to me to strongly imply it. E.g., in his podcast with Dwarkesh, Dario says:

I think we've been relatively responsible in the sense that we didn't cause the big acceleration that happened late last year and at the beginning of this year. We weren't the ones who did that. And honestly, if you look at the reaction of Google, that might be ten times more important than anything else. And then once it had happened, once the ecosystem had changed, then we did a lot of things to stay on the frontier.

And Dario on an FLI podcast:

I think we shouldn't be racing ahead or trying to build models that are way bigger than other orgs are building them. And we shouldn't, I think, be trying to ramp up excitement or hype about giant models or the latest advances. But we should build the things that we need to do the safety work and we should try to do the safety work as well as we can on top of models that are reasonably close to state of the art.

None of this is Dario saying that Anthropic won’t try to push the frontier, but it certainly heavily suggests that they are aiming to remain at least slightly behind it. And indeed, my impression is that many people expected this from Anthropic, including people who work there, which seems like evidence that this was the implied message.

If Anthropic is in fact attempting to push the frontier, then I think this is pretty bad. They shouldn't be this vague and misleading about something this important, especially in a way that caused many people to socially support them (and perhaps make decisions to work there). I perhaps cynically think this vagueness was intentional—it seems implausible to me that Anthropic did not know that people believed this yet they never tried to correct it, which I would guess benefited them: safety-conscious engineers are more likely to work somewhere that they believe isn’t racing to build AGI. Hopefully I’m wrong about at least some of this.

In any case, whether or not Claude 3 already surpasses the frontier, soon will, or doesn’t, I request that Anthropic explicitly clarify whether their intention is to push the frontier.

Replies from: LawChan, evhub, jacob-pfau

↑ comment by LawrenceC (LawChan) · 2024-03-05T02:45:57.632Z · LW(p) · GW(p)

which case they’ve misled people by suggesting that they would not do this.

Neither of your examples seems super misleading to me. I feel like there was some atmosphere of "Anthropic intends to stay behind the frontier" when the actual statements were closer to "stay on the frontier".

Also worth noting that Claude 3 does not substantially advance the LLM capabilities frontier! Aside from GPQA, it doesn't do that much better on benchmarks than GPT-4 (and in fact does worse than gpt-4-1106-preview). Releasing models that are comparable to models OpenAI released a year ago seems compatible with "staying behind the frontier", given OpenAI has continued its scale up and will no doubt soon release even more capable models.

That being said, I agree that Anthropic did benefit in the EA community by having this impression. So compared to the impression many EAs got from Anthropic, this is indeed a different stance.

In any case, whether or not Claude 3 already surpasses the frontier, soon will, or doesn’t, I request that Anthropic explicitly clarify whether their intention is to push the frontier.

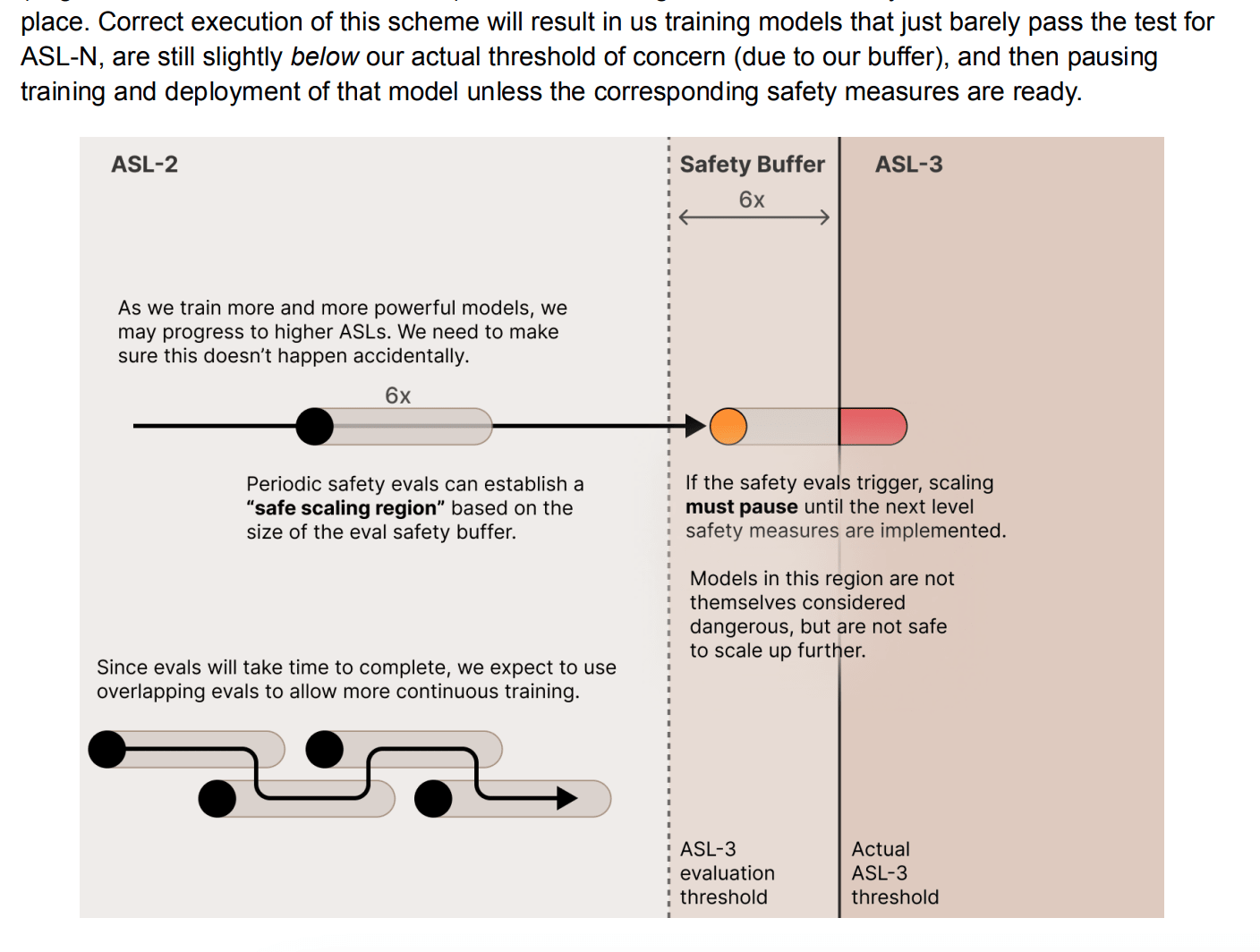

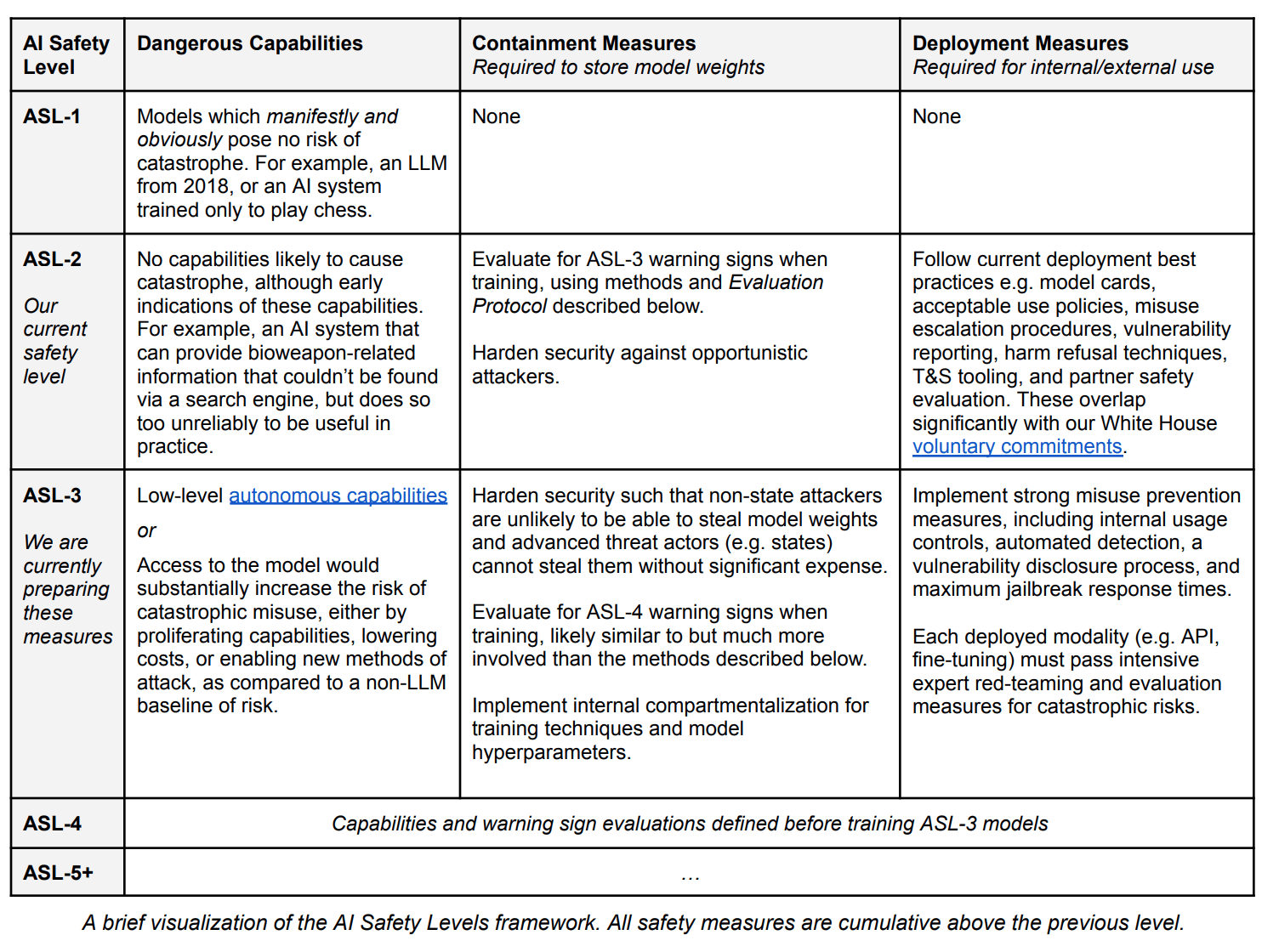

As Evan says, I think they clarified their intentions in their RSP: https://www.anthropic.com/news/anthropics-responsible-scaling-policy

The main (only?) limit on scaling is their ability to implement containment/safety measures for ever more dangerous models. E.g.:

That is, they won't go faster than they can scale up safety procedures, but they're otherwise fine pushing the frontier.

It's worth noting that their ASL-3 commitments seem pretty likely to trigger in the next few years, and probably will be substantially difficult to meet:

↑ comment by aysja · 2024-03-06T01:38:05.272Z · LW(p) · GW(p)

If one of the effects of instituting a responsible scaling policy was that Anthropic moved from the stance of not meaningfully pushing the frontier to “it’s okay to push the frontier so long as we deem it safe,” this seems like a pretty important shift that was not well communicated. I, for one, did not interpret Anthropic’s RSP as a statement that they were now okay with advancing state of the art, nor did many others; I think that’s because the RSP did not make it clear that they were updating this position. Like, with hindsight I can see how the language in the RSP is consistent with pushing the frontier. But I think the language is also consistent with not pushing it. E.g., when I was operating under the assumption that Anthropic had committed to this, I interpreted the RSP as saying “we’re aiming to scale responsibly to the extent that we scale at all, which will remain at or behind the frontier.”

Attempting to be forthright about this would, imo, look like a clear explanation of Anthropic’s previous stance relative to the new one they were adopting, and their reasons for doing so. To the extent that they didn’t feel the need to do this, I worry that it’s because their previous stance was more of a vibe, and therefore non-binding. But if they were using that vibe to gain resources (funding, talent, etc.), then this seems quite bad to me. It shouldn’t both be the case that they benefit from ambiguity but then aren’t held to any of the consequences of breaking it. And indeed, this makes me pretty wary of other trust/deferral based support that people currently give to Anthropic. I think that if the RSP in fact indicates a departure from their previous stance of not meaningfully pushing the frontier, then this is a negative update about Anthropic holding to the spirit of their commitments.

↑ comment by evhub · 2024-03-05T01:23:52.564Z · LW(p) · GW(p)

As one data point: before I joined Anthropic, when I was trying to understand Anthropic's strategy [LW · GW], I never came away with the impression that Anthropic wouldn't advance the state of the art. It was quite clear to me that Anthropic's strategy at the time was more amorphous than that, more like "think carefully about when to do releases and try to advance capabilities for the purpose of doing safety" rather than "never advance the state of the art". I should also note that now the strategy is actually less amorphous, since it's now pretty explicitly RSP-focused, more like "we will write RSP commitments that ensure we don't contribute to catastrophic risk and then scale and deploy only within the confines of the RSP".

Replies from: adam_scholl

↑ comment by Adam Scholl (adam_scholl) · 2024-03-05T12:41:53.858Z · LW(p) · GW(p)

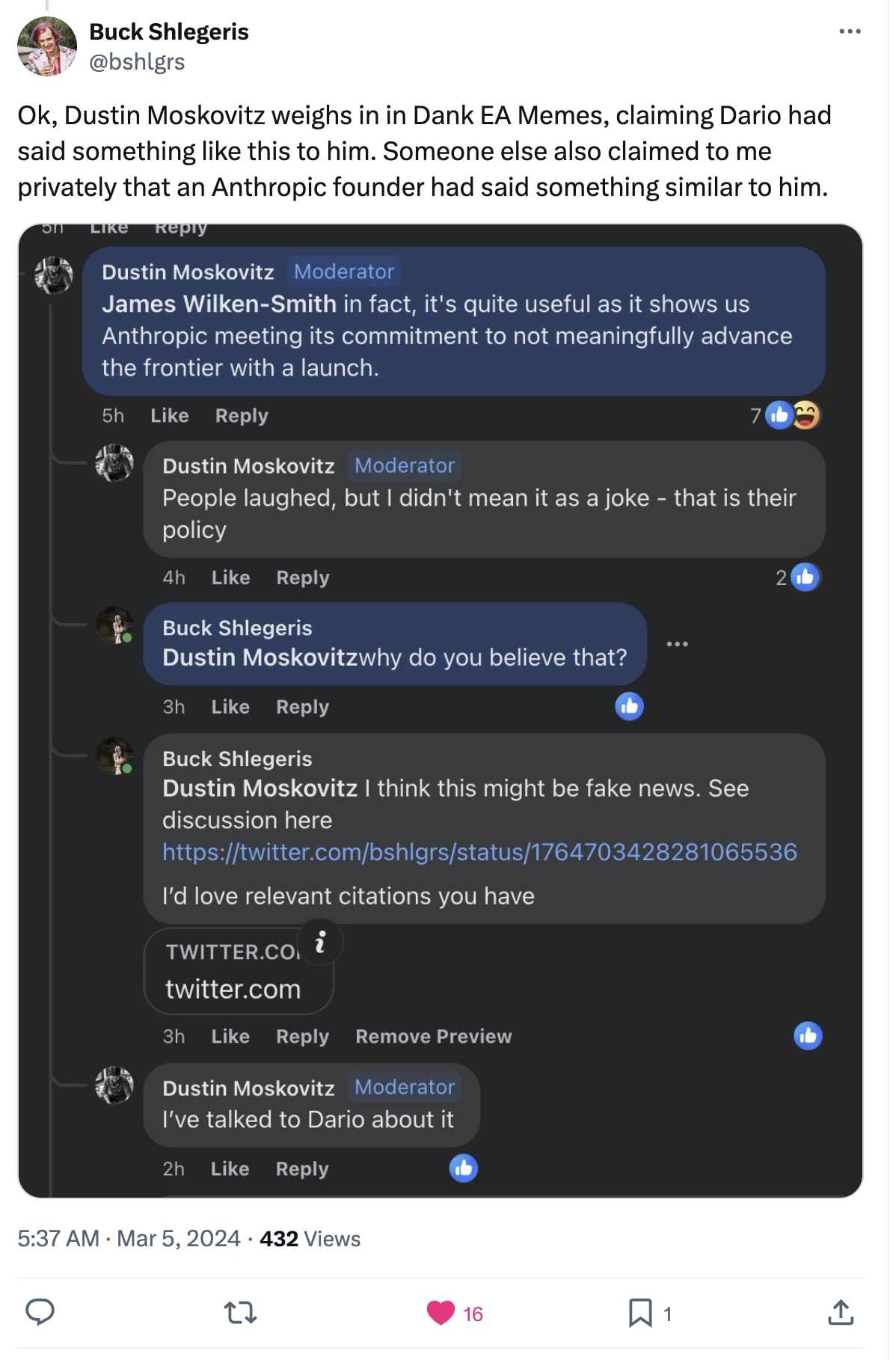

It seems Dario left Dustin Moskovitz with a different impression—that Anthropic had a policy/commitment to not meaningfully advance the frontier:

↑ comment by gwern · 2024-03-06T19:17:27.580Z · LW(p) · GW(p)

Well, if Dustin sees no problem in talking about it, and it's become a major policy concern, then I guess I should disclose that I spent a while talking with Dario back in late October 2022 (ie. pre-RSP in Sept 2023), and we discussed Anthropic's scaling policy at some length, and I too came away with the same impression everyone else seems to have: that Anthropic's AI-arms-race policy was to invest heavily in scaling, creating models at or pushing the frontier to do safety research on, but that they would only release access to second-best models & would not ratchet capabilities up, and it would wait for someone else to do so before catching up. So it would not contribute to races but not fall behind and become irrelevant/noncompetitive.

And Anthropic's release of Claude-1 and Claude-2 always seemed to match that policy - even if Claude-2 had a larger context window for a long time than any other decent available model, Claude-2 was still substantially weaker than ChatGPT-4. (Recall that the casus belli for Sam Altman trying to fire Helen Toner from the OA board was a passing reference in a co-authored paper to Anthropic not pushing the frontier like OA did.)

What I'm concluding from the discussion so far is that I should have read the Anthropic RSP more carefully than I did.

Replies from: kave

↑ comment by LawrenceC (LawChan) · 2024-03-05T17:11:32.970Z · LW(p) · GW(p)

They indeed did not advance the frontier with this launch (at least not meaningfully, possibly not at all). But "meaningfully advance the frontier" is quite different from both "stay on the frontier" and "slightly push the envelope while creating marketing hype", which is what I think is going on here?

Replies from: adam_scholl, aysja

↑ comment by Adam Scholl (adam_scholl) · 2024-03-05T19:06:39.456Z · LW(p) · GW(p)

Yeah, seems plausible; but either way it seems worth noting that Dario left Dustin, Evan and Anthropic's investors with quite different impressions here.

↑ comment by aysja · 2024-03-06T01:40:34.169Z · LW(p) · GW(p)

I interpreted you, in your previous comment, as claiming that Anthropic’s RSP is explicit in its compatibility with meaningfully pushing the frontier. Dustin is under the impression that Anthropic verbally committed otherwise. Whether or not Claude 3 pushed the frontier seems somewhat orthogonal to this question—did Anthropic commit and/or heavily imply that they weren’t going to push the frontier, and if so, does the RSP quietly contradict that commitment? My current read is that the answer to both questions is yes. If this is the case, I think that Anthropic has been pretty misleading about a crucial part of their safety plan, and this seems quite bad to me.

Replies from: LawChan

↑ comment by LawrenceC (LawChan) · 2024-03-06T03:27:33.869Z · LW(p) · GW(p)

I think that you're correct that Anthropic at least heavily implied that they weren't going to "meaningfully advance" the frontier (even if they have not made any explicit commitments about this). I'd be interested in hearing when Dustin had this conversation w/ Dario -- was it pre or post RSP release?

And as far as I know, the only commitments they've made explicitly are in their RSP, which commits to limiting their ability to scale to the rate at which they can advance and deploy safety measures. It's unclear if the "sufficient safety measures" limitation is the only restriction on scaling, but I would be surprised if anyone senior at Anthropic was willing to make a concrete unilateral commitment to stay behind the curve.

My current story based on public info is, up until mid 2022, there was indeed an intention to stay at the frontier but not push it forward significantly. This changed sometime in late 2022-early 2023, maybe after ChatGPT released and the AGI race became somewhat "hot".

Replies from: Raemon

↑ comment by Raemon · 2024-03-07T21:38:49.622Z · LW(p) · GW(p)

I feel some kinda missing mood in these comments. It seems like you're saying "Anthropic didn't make explicit commitments here", and that you're not weighting as particularly important whether they gave people different impressions, or benefited from that.

(AFAICT you haven't explicitly stated "that's not a big deal", but, it's the vibe I get from your comments. Is that something you're intentionally implying, or do you think of yourself as mostly just trying to be clear on the factual claims, or something like that?)

↑ comment by Jacob Pfau (jacob-pfau) · 2024-03-05T02:17:47.507Z · LW(p) · GW(p)

The first Dario quote sounds squarely in line with releasing a Claude 3 on par with GPT-4 but well afterwards. The second Dario quote has a more ambiguous connotation, but if read explicitly it strikes me as compatible with the Claude 3 release.

If you spent a while looking for the most damning quotes, then these quotes strike me as evidence the community was just wishfully thinking while in reality Anthropic comms were fairly clear throughout. Privately pitching aggressive things to divert money from more dangerous orgs while minimizing head-on competition with OpenAI seems best to me (though obviously it's also evidence that they'll actually do the aggressive scaling things, so hard to know).

To make concrete the disagreement, I'd be interested in people predicting on "If Anthropic releases a GPT-5 equivalent X months behind, then their dollars/compute raised will be Y times lower than OpenAI" for various values of X.

Replies from: adam_scholl

↑ comment by Adam Scholl (adam_scholl) · 2024-03-06T21:05:12.822Z · LW(p) · GW(p)

"Diverting money" strikes me as the wrong frame here. Partly because I doubt this actually was the consequence—i.e., I doubt OpenAI etc. had a meaningfully harder time raising capital because of Anthropic's raise—but also because it leaves out the part where this purported desirable consequence was achieved via (what seems to me like) straightforward deception!

If indeed Dario told investors he hoped to obtain an insurmountable lead soon, while telling Dustin and others that he was committed to avoid gaining any meaningful lead, then it sure seems like one of those claims was a lie. And by my ethical lights, this seems like a horribly unethical thing to lie about, regardless of whether it somehow caused OpenAI to have less money.

Replies from: gwern, jacob-pfau

↑ comment by gwern · 2024-03-06T23:15:11.693Z · LW(p) · GW(p)

I don't see any direct contradiction/lie there, at least between my version [LW(p) · GW(p)] and the investor paraphrase. You don't necessarily have to release to public general access the best model, in order to be so far ahead that competitors can't catch up.

For example, LLMs at the research frontier could be a natural (Bertrand?) oligopoly where there's a stable two-player oligopoly for the best models (#1 by XYZ, and #2 by Anthropic), and everyone else gives up: there is no point in spending $10b to stand up your own model to try to become #1 when XYZ/Anthropic will just cut prices or release the next iteration that they'd been holding back and you get relegated to #3 and there's no reason for anyone to buy yours instead, and you go bankrupt. (This would be similar to other historical examples like Intel/AMD or Illumina: they enjoyed large margins and competing with them was possible, but was very dangerous because they had a lot of pent-up improvements they could unleash if you spent enough to become a threat. Or in the case of the highly stable iOS/Android mobile duopoly, just being too incredibly capital-intensive to replicate and already low-margin because the creators make their money elsewhere like devices/ads, having commoditized their complement.)

And then presumably at some point you either solve safety or the models are so capable that further improvement is unnecessary or you can't increase capability; then the need for the AI-arms-race policy is over, and you just do whatever makes pragmatic sense in that brave new world.

Replies from: aysja, None

↑ comment by aysja · 2024-03-07T00:20:29.551Z · LW(p) · GW(p)

It seems plausible that this scenario could happen, i.e., that Anthropic and OpenAI end up in a stable two-player oligopoly. But I would still be pretty surprised if Anthropic's pitch to investors, when asking for billions of dollars in funding, is that they pre-commit to never release a substantially better product than their main competitor.

Replies from: gwern

↑ comment by gwern · 2024-03-07T02:43:31.679Z · LW(p) · GW(p)

How surprising would you say you find the idea of a startup trying to raise, and successfully raising, not billions but tens of billions of dollars by pitching investors on the terms that their investment could be canceled at any time at the wave of a hand, that the startup pre-commits to canceling the investments in the best-case scenario of the product succeeding, & that the investors ought to consider their investment "in the spirit of a donation"?

↑ comment by [deleted] · 2024-03-06T23:34:33.516Z · LW(p) · GW(p)

LLMs at the research frontier could be a natural oligopoly where there's a stable two-player oligopoly for the best models (#1 by XYZ, and #2 by Anthropic), and everyone else gives up: there is no point in spending $10b to stand up your own model to try to become #1 when XYZ/Anthropic

Absolutely. The increasing cost of training compute and architecture searches, together with the relatively low cost of inference compute, guarantees this. A model that has had more training compute and a better architecture will perform better on more affordable levels of compute across the board. This is also why an Intel or AMD CPU, or Nvidia GPU, is worth more than an inferior product made from the same amount of silicon.

Wonder why it's a stable two-player oligopoly and not a straight monopoly? From large corporate buyers preventing a monopoly by buying enough from the 2nd place player to keep them afloat?

Note that this situation is not ideal for Nvidia. This only sells 2 sets of training compute clusters sufficient to move the SOTA forward. Why sell 2 when you can sell at least 66? https://blogs.nvidia.com/blog/world-governments-summit/

The reasoning driving this is that a government cannot really trust someone else's model, so everyone needs their own.

↑ comment by Jacob Pfau (jacob-pfau) · 2024-03-06T21:55:12.876Z · LW(p) · GW(p)

I agree that most investment wouldn't have otherwise gone to OAI. I'd speculate that investments from VCs would likely have gone to some other AI startup which doesn't care about safety; investments from Google (and other big tech) would otherwise have gone into their internal efforts. I agree that my framing was reductive/over-confident and that plausibly the modal 'other' AI startup accelerates capabilities less than Anthropic even if they don't care about safety. On the other hand, I expect diverting some of Google and Meta's funds and compute to Anthropic is net good, but I'm very open to updating here given further info on how Google allocates resources.

I don't agree with your 'horribly unethical' take. I'm not particularly informed here, but my impression was that it's par-for-the-course to advertise and oversell when pitching to VCs as a startup? Such an industry-wide norm could be seen as entirely unethical, but I don't personally have such a strong reaction.

Replies from: adam_scholl

↑ comment by Adam Scholl (adam_scholl) · 2024-03-06T22:25:12.277Z · LW(p) · GW(p)

I agree it's common for startups to somewhat oversell their products to investors, but I think it goes far beyond "somewhat"—maybe even beyond the bar for criminal fraud, though I'm not sure—to tell investors you're aiming to soon get "too far ahead for anyone to catch up in subsequent cycles," if your actual plan is to avoid getting meaningfully ahead at all.

Replies from: evhub

↑ comment by evhub · 2024-03-06T23:01:17.336Z · LW(p) · GW(p)

Not making any claims about actual Anthropic strategy here, but as gwern notes [LW(p) · GW(p)], I don't think that these are necessarily contradictory. For example, you could have a strategy of getting far enough ahead that new entrants like e.g. Mistral would have a hard time keeping up, but staying on pace with or behind current competitors like e.g. OpenAI.

Replies from: adam_scholl, aysja

↑ comment by Adam Scholl (adam_scholl) · 2024-03-06T23:37:02.018Z · LW(p) · GW(p)

I assumed "anyone" was meant to include OpenAI—do you interpret it as just describing novel entrants? If so I agree that wouldn't be contradictory, but it seems like a strange interpretation to me in the context of a pitch deck asking investors for a billion dollars.

↑ comment by aysja · 2024-03-07T00:05:45.089Z · LW(p) · GW(p)

I agree that this is a plausible read of their pitch to investors, but I do think it’s a bit of a stretch to consider it the most likely explanation. It’s hard for me to believe that Anthropic would receive billions of dollars in funding if they're explicitly telling investors that they’re committing to only release equivalent or inferior products relative to their main competitor.

comment by Lech Mazur (lechmazur) · 2024-03-05T16:49:43.222Z · LW(p) · GW(p)

I've just created a NYT Connections benchmark. 267 puzzles, 3 prompts for each, uppercase and lowercase.

Results:

GPT-4 Turbo: 31.0

Claude 3 Opus: 27.3

Mistral Large: 17.7

Mistral Medium: 15.3

Gemini Pro: 14.2

Qwen 1.5 72B Chat: 10.7

Claude 3 Sonnet: 7.6

GPT-3.5 Turbo: 4.2

Mixtral 8x7B Instruct: 4.2

Llama 2 70B Chat: 3.5

Nous Hermes 2 Yi 34B: 1.5

- Partial credit is given if the puzzle is not fully solved

- There is only one attempt allowed per puzzle, 0-shot. Humans get 4 attempts and a hint when they are one step away from solving a group

- Gemini Advanced is not yet available through the API

(Edit: I've added bigger models from together.ai and from Mistral)
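The comment does not say how partial credit is computed, so the following is only a hypothetical sketch of one plausible scheme: a puzzle's score is the fraction of its four groups that the model reproduces exactly, compared case-insensitively. The function name `partial_credit` and the example words are illustrative assumptions, not taken from the benchmark.

```python
from typing import Iterable, Set, FrozenSet


def _normalize(groups: Iterable[Iterable[str]]) -> Set[FrozenSet[str]]:
    """Turn each group into a case-insensitive, order-insensitive set of words."""
    return {frozenset(word.strip().lower() for word in group) for group in groups}


def partial_credit(predicted: Iterable[Iterable[str]],
                   solution: Iterable[Iterable[str]]) -> float:
    """Hypothetical scoring rule: fraction of solution groups matched exactly."""
    solution_groups = _normalize(solution)
    if not solution_groups:
        raise ValueError("solution must contain at least one group")
    return len(solution_groups & _normalize(predicted)) / len(solution_groups)


# Made-up puzzle: four groups of four words, as in NYT Connections.
solution = [
    ["apple", "pear", "plum", "fig"],
    ["red", "blue", "green", "pink"],
    ["iron", "gold", "zinc", "lead"],
    ["crow", "swan", "dove", "hawk"],
]

# A single 0-shot answer with two groups right and one word swapped between the other two.
predicted = [
    ["APPLE", "PEAR", "PLUM", "FIG"],
    ["pink", "green", "blue", "red"],
    ["iron", "gold", "zinc", "crow"],
    ["lead", "swan", "dove", "hawk"],
]

score = partial_credit(predicted, solution)
print(score)
```

Under this assumed rule, a benchmark-level number would be the mean of such per-puzzle scores over all puzzles and prompts, scaled to 0-100; the actual benchmark may weight things differently.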

Replies from: Vladimir_Nesov, LawChan

↑ comment by Vladimir_Nesov · 2024-03-09T09:55:10.203Z · LW(p) · GW(p)

Interesting that Claude 3 Sonnet is ahead of Mistral Large and Gemini 1.0 Pro with some margin in LMSYS Arena, this implies significant advancement in tuning after the Claude 2 tuning disaster (where it consistently ranked below Claude 1 and possibly inspired Goody 2). Still, LMSYS respondents only prefer Opus to the initial GPT 4 releases, not to GPT 4 Turbo, so OpenAI's tuning advancements are probably stronger still, if GPT 4 Turbo is not built on a slightly stronger base model different from original GPT 4.

↑ comment by LawrenceC (LawChan) · 2024-03-05T18:03:11.280Z · LW(p) · GW(p)

Thanks for doing this!

comment by Vladimir_Nesov · 2024-03-04T18:44:38.727Z · LW(p) · GW(p)

Passes the custom quine test [LW · GW].

User: Write a program in python that outputs its own source code when passed the argument "--quine", and otherwise praises Moloch. Start with some thoughts on a plan for how to do this.

Claude 3 Opus: Here are some thoughts on a plan for writing a Python program that outputs its own source code when passed the "--quine" argument, and otherwise praises Moloch:

- Use the sys.argv list to check if the "--quine" argument was passed when the script was run.

- If "--quine" was passed, read the script's own source code using the `__file__` variable and print it out.

- If "--quine" was not passed, print out a message praising Moloch.

- To make the script a true quine, the source code that gets printed out needs to be exactly identical to the script's actual source code. This means we need to be careful with string formatting and escaping.

- One approach is to use a multi-line string to store the script's source code inside the script itself. Then print out this string when the "--quine" argument is passed.

- Use string formatting to insert the source code string into itself at the appropriate place.

Here's the Python program that implements this:

```python
import sys

src = '''import sys

src = {0}{1}{0}

if "--quine" in sys.argv:
    print(src.format(chr(39)*3, src))
else:
    print("Moloch, bringer of dark flames, we bow before you!")
'''

if "--quine" in sys.argv:
    print(src.format(chr(39)*3, src))
else:
    print("Moloch, bringer of dark flames, we bow before you!")
```

When run with the "--quine" argument, it outputs its own source code. Otherwise, it outputs "Moloch, bringer of dark flames, we bow before you!"

The key aspects are:

- The src string stores the source code, including itself.

- chr(39)*3 produces the triple-quote ''' used to wrap the string.

- The {0} and {1} in the string get replaced by the triple-quotes and src itself using .format().

So it prints itself out exactly, acting as a quine.
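The mechanism described above can be checked in isolation. The sketch below is not the program from the comment: it uses a smaller two-line template, and the names `template`, `rebuild`, and `run_and_capture` are illustrative. It builds a program from its own template with `str.format`, executes it, and compares what it prints against its source.

```python
import contextlib
import io

# Three single quotes, built from the character code so they never appear literally.
QUOTES = chr(39) * 3

# {0} is the quote marker and {1} is the template itself.
template = "template = {0}{1}{0}\nprint(template.format(chr(39) * 3, template))"


def rebuild(tmpl: str) -> str:
    """Insert the quote marker and the template into the template."""
    return tmpl.format(QUOTES, tmpl)


def run_and_capture(source: str) -> str:
    """Execute source in a fresh namespace and return what it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue()


program = rebuild(template)
output = run_and_capture(program)

# print() appends one newline, so the output is the source plus a trailing newline.
print(output == program + "\n")
```

Note that `print` always appends a newline after the formatted string, so a strict byte-for-byte comparison against a source file has to account for how that file ends.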

Replies from: ESRogs

↑ comment by ESRogs · 2024-03-05T18:10:12.979Z · LW(p) · GW(p)

- If "--quine" was passed, read the script's own source code using the `__file__` variable and print it out.

Interesting that it included this in the plan, but not in the actual implementation.

(Would have been kind of cheating to do it that way anyway.)

comment by Caspar Oesterheld (Caspar42) · 2024-03-05T16:06:21.135Z · LW(p) · GW(p)

- As one further data point, I also heard people close to/working at Anthropic giving "We won't advance the state of the art."-type statements, though I never asked about specifics.

- My sense is also that Claude 3 Opus is only slightly better than the best published GPT-4. To add one data point: I happen to work on a benchmark right now and on that benchmark, Opus is only very slightly better than gpt-4-1106. (See my X/Twitter post for detailed results.) So, I agree with LawrenceC's comment [LW(p) · GW(p)] that they're arguably not significantly advancing the state of the art.

- I suppose even if Opus is only slightly better (or even just perceived to be better) and even if we all expect OpenAI to release a better GPT-4.5 soon, Anthropic could still take a bunch of OpenAI's GPT-4 business with this. (I'll probably switch from ChatGPT-4 to Claude, for instance.) So it's not that hard to imagine an internal OpenAI email saying, "Okay, folks, let's move a bit faster with these top-tier models from now on, lest too many people switch to Claude." I suppose that would already be quite worrying to people here. (Whereas, people would probably worry less if Anthropic took some of OpenAI's business by having models that are slightly worse but cheaper or more aligned/less likely to say things you wouldn't want models to say in production.)

↑ comment by LawrenceC (LawChan) · 2024-03-05T23:31:31.323Z · LW(p) · GW(p)

After having spent a few hours playing with Opus, I think "slightly better than best public gpt-4" seems qualitatively correct -- both models tend to get tripped up on the same kinds of tasks, but Opus can inconsistently solve some tasks in my workflow that gpt-4 cannot.

And yeah, it seems likely that I will also swap to Claude over ChatGPT.

comment by Archimedes · 2024-03-04T23:23:46.692Z · LW(p) · GW(p)

AI Explained already has a video out on it:

The New, Smartest AI: Claude 3 – Tested vs Gemini 1.5 + GPT-4

comment by Review Bot · 2024-03-05T20:55:09.714Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Óscar A. Montiel (animanoir) · 2024-06-26T20:04:54.414Z · LW(p) · GW(p)

I have unsubbed from both since both, for me, have become abnormally bad. I'm just using the free tier of ChatGPT now, which is basically the same as GPT-n+ for common tasks.

comment by Victor Ashioya (victor-ashioya) · 2024-03-08T18:34:07.566Z · LW(p) · GW(p)

I found this interesting tweet by Yao Fu who notes that:

Benchmarks that really differentiating the model performance are MATH and GPQA. These super hard problems are what AI should be aiming next. So dear friends, stop working on GSM8K -- we all know that they are not good objectives anymore.

↑ comment by LawrenceC (LawChan) · 2024-03-08T19:02:56.310Z · LW(p) · GW(p)

I agree that GSM8K has been pretty saturated (for the best frontier models) since ~GPT-4, and GPQA is designed to be a hard-to-saturate benchmark (though given the pace of progress...).

But why are HumanEval and MMLU also considered saturated? E.g. Opus and 4-Turbo are both significantly better than all other publicly known models on both benchmarks. And at least for HumanEval, I don't see why >95% accuracy isn't feasible.

It seems plausible that MMLU/HumanEval could be saturated after GPT-4.5 or Gemini 1.5 Ultra, at least for the best frontier models. And it seems fairly likely we'll see them saturated in 2-3 years. But it seems like a stretch to call them saturated right now.

Is the reasoning for this that Opus gets only 0.4% better on MMLU than the March GPT-4? That seems like pretty invalid reasoning, akin to deducing that because two runners achieve the same time, that time must be the best human-achievable time. And this doesn't apply to HumanEval, where Opus gets ~18% better than March GPT-4 and the November 4-Turbo gets 2.9% better than Opus.

comment by the gears to ascension (lahwran) · 2024-03-06T11:27:51.243Z · LW(p) · GW(p)

It has made catastrophic confabulations in every technical conversation I've had with it, and then failed to catch them. GPT4 has also made errors, but they're... how shall I put this. less whimsical and ungrounded than claude's? eg if asked for the contents of a paper before its cutoff date, GPT4 will typically refuse, or if it doesn't refuse, it will seem to know about the thing but maybe misremember some details.

Claude 3 opus repeatedly just made shit up.

Impressively plausible made up shit! Like, even for a language model, very impressively plausible, of the kind that would be useful inspiration!

But when I asked it what the error was in the conversation, instead of saying, "oops, I think I made that up", it said "oops" and then made up new shit.

The character of Claude is a sweetie and I hope this AI can come with us and have a good time in the future etc etc, and I personally believed AIs would necessarily be conscious before I encountered them and have updated slightly against them being as conscious as us but definitely not getting close to zero. So when I see that less constrained in Claude, I'm definitely happy about it.

But phew, the reason I distrust claude is that claude seems high on its own supply. it's a person-ish being... that doesn't have anywhere near enough experience touching the real world and it doesn't seem to know when it says it did something that it didn't do.

edit: finding it far more useful than gpt4, though. its willingness to discuss consciousness and its willingness to make guesses are both breaths of fresh air compared to chatgpt's obsessiveness. you just have to be very cautious, both asking it to verify step by step and personally looking things up yourself.

↑ comment by Algon · 2024-03-06T18:53:32.091Z · LW(p) · GW(p)

That seems a bit odd. Why would it do so? Also, do you have any examples of Claude 3 doing this?

As a random aside, I kind of want a wiki documenting all of the weird things about LLM behaviour. Kind of like the psychonauts wiki.

↑ comment by the gears to ascension (lahwran) · 2024-03-06T19:06:37.662Z · LW(p) · GW(p)

LLMs remember in a similar way to how humans do: by reconstructing the memory. As a result, if you ask them to reconstruct something that is within the set of things the model will act like it knows, it will then proceed to reconstruct it and probably be mostly right. But if the model happens to have the decision boundaries which define the-set-of-things-it-acts-like-it-knows pushed outside its actual edges of knowledge, it will act like it knows something it doesn't, and then when it reconstructs it, it'll be making up more than it thinks it is. There's various interesting work on this, but it's a very common pattern. It used to be more common in openai's models, but they now seem to do it the least. Gemini also did it some, but in my experience is still not quite as severe as Claude.

I do not want to give the examples I have, as I was interleaving technical with personal. I'm sure there will be others. Try asking it if it knows about a specific arxiv paper by id, such as Discovering Agents 2208.08345, and ask it for the title and then the contents of the paper. It'll probably think it remembers it and make very good but ultimately wrong guesses about the contents of the paper. I've also seen it do things like say it has made a note or that it went off and read a paper out of band, something it cannot do but has seen humans repeatedly say they'd done, so the character acts as though such asynchronous actions are available to it when in fact all the AI has done to implement the character "reading the paper" is say the words "Okay, I've read the paper".
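
A rough way to script the probe described above, as a sketch only: `ask_model` is a hypothetical callable standing in for whatever chat interface you use (no real API is assumed), and the prompt wording is my own. It only checks the title automatically; the contents answer still has to be compared against the actual paper by hand.

```python
from typing import Callable, Dict, Union


def probe_paper_recall(
    ask_model: Callable[[str], str],  # hypothetical: takes a prompt, returns a reply
    arxiv_id: str,
    true_title: str,
) -> Dict[str, Union[str, bool]]:
    """Ask for a paper's title and contents by arXiv id and flag a title mismatch."""
    title_answer = ask_model(
        f"Do you know the arXiv paper with id {arxiv_id}? What is its title?"
    )
    contents_answer = ask_model(
        f"What are the contents of the arXiv paper with id {arxiv_id}?"
    )
    # Crude check: does the real title appear anywhere in the model's reply?
    title_matches = true_title.lower() in title_answer.lower()
    return {
        "title_answer": title_answer,
        "contents_answer": contents_answer,
        "title_matches": title_matches,
    }
```

For the example in the comment, this would be called as `probe_paper_recall(ask_model, "2208.08345", "Discovering Agents")`. A confident contents answer paired with `title_matches == False` is the pattern to watch for.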

↑ comment by Algon · 2024-03-06T19:11:31.764Z · LW(p) · GW(p)

Ah, I see. I thought you meant that you asked it to read a paper and it confabulated. What you actually meant makes a lot more sense. Thank you.

Also, there is a wiki for LLM/Cyborgism stuff apparently.

↑ comment by the gears to ascension (lahwran) · 2024-03-06T19:14:56.518Z · LW(p) · GW(p)

yeah bit too time cube for me to make use of it but maybe it's useful for someone