Theories of Modularity in the Biological Literature

post by CallumMcDougall (TheMcDouglas), Avery, Lucius Bushnaq (Lblack) · 2022-04-04T12:48:41.834Z · 13 comments

Introduction

This post is part of a sequence describing our team’s research on selection theorems for modularity, as part of this year's AI Safety Camp, under the mentorship of John Wentworth. Here, we provide some background reading for the discussion of modularity that will follow.

As we describe in more detail in our project intro, the motivating question of modularity that we started with (described in John’s post on the Evolution of Modularity) is: why does evolution seem to have produced modular systems (e.g. organs and organ systems), while current ML systems (even genetic algorithms, which are consciously modelled on evolutionary mechanisms) are highly non-modular? So far, most of our research has focused on the idea of modularly varying goals (MVG), but this is not the only proposed cause of modularity in the biological literature. This post serves as a literature review, with a few brief words on how we are thinking about testing some of these hypotheses. Subsequent posts will discuss our team’s research agenda in more detail.

Theories of modularity

Modularity in the environment selects for modular systems

Basic idea: If we have an environment which contains a variety of subproblems and which changes rapidly in highly modular ways, this might select for a modular internal design to better adapt to these problems. In the literature, this usually takes the form of “modularly varying goals” (MVG). If we vary one aspect of the agent’s goal, but keep the rest of the goal and the environment constant, then this might produce a selection pressure for modular systems in correspondence with this modular goal structure.
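To make the idea of a modularly varying goal concrete, here is a minimal sketch. The two sub-problems, the two goals `G1` and `G2`, and the switching period are illustrative assumptions, not a reproduction of any particular experimental setup: the point is only that both goals reuse the same sub-problems and differ in how the results are combined.

```python
from itertools import product

# Hypothetical sub-problems shared by both goals (illustrative only).
def left_module(x, y):
    return x ^ y

def right_module(z, w):
    return z ^ w

# Two goals that reuse the same sub-problems but combine them differently.
GOALS = {
    "G1": lambda a, b: a & b,
    "G2": lambda a, b: a | b,
}

def goal_for_generation(generation, switch_every=20):
    """Name of the goal active at a given generation (the period is an arbitrary choice)."""
    names = sorted(GOALS)
    return names[(generation // switch_every) % len(names)]

def target(goal_name, x, y, z, w):
    """Output the evolved system should produce under the given goal."""
    return GOALS[goal_name](left_module(x, y), right_module(z, w))

def fitness(candidate, goal_name):
    """Fraction of the 16 possible inputs that a candidate function gets right."""
    inputs = list(product((0, 1), repeat=4))
    hits = sum(candidate(*bits) == target(goal_name, *bits) for bits in inputs)
    return hits / len(inputs)
```

A candidate that is perfect under one goal loses fitness when the goal switches, but only the part that combines the two sub-results needs to change; the sub-problem solvers can be kept as they are.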

Biological modularity: Many examples of modularity in biology seem to have a pretty clear correspondence with goals in the environment. For instance, type of terrain and availability of different food sources might vary somewhat independently between environments (or even within the same environment), and correspondingly we see the evolution of modular systems specifically designed to deal with one particular task (muscular system, digestive system). One can make a similar argument for reproduction, respiration, temperature regulation, etc.

Evidence: The main paper on this idea from the biological literature is Kashtan & Alon’s 2005 paper. Their methodology is to train a system (they use both neural networks and genetic algorithms) to learn a particular logic function consisting of a series of logic gates, and after a certain number of steps / generations they vary one particular part of the logic gate setup, while keeping the rest the same. Their results were that modularity (as measured by the Q-score) was selected for, and the network motifs found in the evolved networks had a visible correspondence to the modular parts of the goal. We will discuss this paper in more detail in later sections of this post.
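For readers unfamiliar with the Q-score: it is usually taken to mean Newman's modularity measure, which compares the fraction of edges falling inside each community with the fraction expected if edges were placed at random with the same node degrees. The sketch below computes Q for an undirected, unweighted graph and a partition that is already given; it assumes this standard definition and does not reproduce any normalisation or partition search the paper may have used.

```python
def modularity_q(edges, community_of):
    """Newman's Q for an undirected, unweighted graph and a fixed partition.

    edges: iterable of (u, v) pairs.
    community_of: dict mapping each node to a community label.
    """
    edges = list(edges)
    m = len(edges)
    if m == 0:
        return 0.0
    internal = {}  # number of edges with both endpoints in the community
    degree = {}    # summed node degree per community
    for u, v in edges:
        cu, cv = community_of[u], community_of[v]
        degree[cu] = degree.get(cu, 0) + 1
        degree[cv] = degree.get(cv, 0) + 1
        if cu == cv:
            internal[cu] = internal.get(cu, 0) + 1
    # Q = sum over communities of (internal edge fraction - expected fraction)
    return sum(
        internal.get(c, 0) / m - (degree[c] / (2 * m)) ** 2
        for c in degree
    )
```

In practice Q is reported for the partition that maximises it, so a full pipeline would wrap a function like this in a search over partitions.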

Testing this idea: We started by trying to replicate the results of the 2005 paper. This was somewhat challenging because of the now outdated conventions used in the paper, but our tentative conclusion for now is that the paper doesn’t seem to replicate. Had the replication succeeded, our plan was to generalise to more complicated systems (one proposal was to train a CNN to recognise two digits from an MNIST set and perform an arithmetic operation on them, with the hope that it would learn a modular representation of both the individual digits and of the operator). However, this line of research has been put on hold until we get to the bottom of the null result from our replication of the Kashtan & Alon paper.

For a fuller discussion of how we’ve been testing this idea (and some of our ideas as to why the replication failed), please see this post.

Specialisation drives the evolution of modularity

Basic idea: MVG can be viewed as a subcase of this theory, because the environment fluctuating in a modular way is one possible explanation for why selection might favour specialisation. However, it isn’t the only explanation. Even in a static environment, evolution is a dynamic process, and a modular organism will be more easily able to evolve changes that improve its ability to deal with a particular aspect of its environment, without this affecting other modules (and hence having detrimental impacts on the rest of the organism). Hence specialisation (and hence modularity) might still be selected for.

Biological modularity: This theory was created partly from observation of a problem with MVG: not all environments seem to fluctuate in obviously modular ways (or even to fluctuate much at all). However, a lot of the biological intuitions of MVG carry over into this theory; namely the correspondence between different aspects of the environment that lend themselves well to specialisation, and different modular parts of the organism’s internal structure.

Evidence: The paper Specialisation Can Drive the Evolution of Modularity looked into regulatory gene networks (simulated using genetic algorithms), and examined the conditions under which they form modules. It finds that the main driver of modularity is selection for networks with multiple stable gene activity patterns that have considerable overlap. In other words, many of the genes between the patterns are identical. However, this paper only examined one particular evolutionary setting, and it’s unclear to what extent the results can be viewed as representative of a common principle of biological evolution. There don’t seem to have been any follow-up studies on this research agenda.

Testing this idea: We have no current plans to test this idea, since we think that MVG captures the core parts of it.

Direct selection for modularity, when it can perform a task / enable an adaptation that would be infeasible otherwise

Basic idea: Modularity may be selected for when it breaks a developmental constraint, and thereby makes some beneficial adaptation possible that would be virtually impossible otherwise.

Biological modularity: It’s unclear how to look for evidence for this in the biological record, because it doesn’t seem obvious how the world would be different depending on whether this was true or false. However, one form this could take that seems highly plausible in a biological context is evolving a particular architectural change at very early stages of ontogenesis, which enforces modularity (more on this in the “evidence” section).

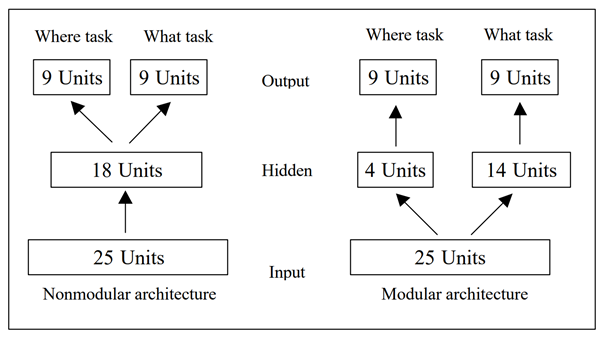

Evidence: A well-known phenomenon in neural networks is that of “neural interference”, whereby a system designed to learn a solution to two separate tasks inevitably develops connections between the two, despite these serving no practical purpose. As an example, consider a network performing image recognition on two different images, with the aim of outputting two different classifications: clearly no connections are required between the two tasks, but these will almost certainly be created anyway during the search for a solution, and they are hard to “un-learn”. The 2000 paper Evolving Modular Architectures for Neural Networks explores the idea of both the network architecture and the parameters being genetically inherited - that way organisms can avoid this interference altogether.
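One way to picture the interference described above is to measure how much weight a layer places on connections that link the two tasks, and to see what an architecture that forbids those connections looks like. The sketch below is only an illustration under simple assumptions (a single weight matrix, and hypothetical task labels `"A"` and `"B"` assigned to each input and output unit); it is not the method used in the paper.

```python
def block_mask(input_task, output_task):
    """1 where an input and an output unit belong to the same task, else 0."""
    return [[1 if i == o else 0 for i in input_task] for o in output_task]

def apply_mask(weights, mask):
    """Zero out every connection that the mask forbids."""
    return [
        [w * m for w, m in zip(w_row, m_row)]
        for w_row, m_row in zip(weights, mask)
    ]

def cross_task_weight(weights, mask):
    """Total absolute weight on connections that link different tasks."""
    return sum(
        abs(w)
        for w_row, m_row in zip(weights, mask)
        for w, m in zip(w_row, m_row)
        if m == 0
    )
```

A network whose architecture is fixed to the masked form cannot develop cross-task connections at all, which is the sense in which an inherited modular architecture avoids interference.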

However, it appears to us that this methodology may be problematic in that the researcher is deciding on the network structure before the study is even run. Looking at the above diagram, it’s fairly obvious to us that the design on the right will perform better, but this study provides no evolutionary model as to how the modular architecture on the right might be selected for by evolution in the first place. It might seem intuitive to humans to choose the modular decomposition on the right, but for evolution to find that decomposition in particular is where all the work gets done, so this theory doesn’t seem to present an actual causal explanation.

Testing this idea: If this hypothesis is correct, then there should be certain tasks that a non-modular network simply fails to solve, and so any network trained on this task will either find a modular solution or fail to converge. However, empirically this seems like it isn’t the case (the question “why aren’t the outputs of genetic algorithms modular” was the motivating one for much of this project). Whether this is because the hypothesis is false, or because ML is missing some key aspect of evolutionary dynamics, is unclear.

Modularity arises from a selection pressure to reduce connection costs in a network

Basic idea: If we apply selection pressure to reduce connection costs, this could perform a kind of regularisation that leads to a modular structure, where the only learned connections are the ones that are most important for performing a task. Furthermore, connection costs between different nodes in the network scaling with the “distance” between them might encourage a kind of “locality”, which might further necessitate modularity.
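As a rough sketch of what such a selection pressure could look like, the snippet below subtracts a penalty proportional to the number of connections from a performance score. The linear weighted-sum form, the function names, and the value of `cost_per_connection` are assumptions made for illustration; a multi-objective selection scheme would be another way to combine the two terms.

```python
def connection_cost(weights, threshold=0.0):
    """Number of connections whose absolute weight exceeds the threshold."""
    return sum(1 for row in weights for w in row if abs(w) > threshold)

def penalised_fitness(performance, weights, cost_per_connection=0.01):
    """Performance minus a linear penalty on the number of connections."""
    return performance - cost_per_connection * connection_cost(weights)
```

Under this scoring, two networks with equal task performance are ranked by sparsity, so selection keeps only the connections that pay for themselves.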

Biological modularity: Despite being intuitively appealing, this idea looks a bit weaker when put in a biological context. There are some important examples of connections being costly (e.g. the human brain), but for this to be the driving factor leading to modularity, we should expect to see connection costs playing a leading role in virtually all forms of signalling across all organisms where modularity is to be found, and this doesn’t always seem to be the case (e.g. signalling molecules in bacteria). However, it’s possible this factor contributes to modularity along with other factors like MVG, even though it’s not the driving factor (e.g. see results from the paper below).

The locality argument seems a lot more robust to biological evidence. Where there is modularity, we very often see it structured in a local way, with networks of cells performing a specific task being clustered together into a relatively small region of space (e.g. organs). Cells interact with each other in a highly localised way, e.g. through chemical and mechanical signals. This means that any group of cells performing a task which requires a lot of inter-cellular interaction is size-limited, and may have to learn to perform a specialised task.

This argument also helps explain why modularity might be observable to humans. Consider a counterfactual world in which locality didn’t apply: there would be very little visible commonality between different organisms, and so even if modularity was present we would have a hard time identifying it (Chris Olah uses a similar idea as an analogy when discussing the universality claim for circuits in neural networks).

Evidence: The 2013 paper The evolutionary origins of modularity explores this idea. Their methodology was to compare two different networks: “performance alone” (PA) and “performance and connection costs” (P&CC). The task was a form of image recognition the authors have called the “retina problem”: the networks were presented with an 8-pixel array separated into two 2x2 blocks (left and right), and tasked to decide whether a certain type of object appeared in each block. This task exhibited a natural modular decomposition. It could also be varied in a modular way: by changing the task from deciding whether an image appeared in both blocks (“L-AND-R environment”) to whether an image appeared in either block (“L-OR-R”). In this way, they could test pressure to reduce costs against the MVG hypothesis. The results were that the P&CC networks were both more modular than the PA networks, and significantly outperformed them after a finite number of iterations, but that the best PA networks outperformed the best P&CC networks (explaining why performance alone might not be sufficient to select for modularity). They also found that adding MVG further increased both the outperformance and the modularity.
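The structure of the retina problem can be sketched as a target function over the 8 pixels. The object patterns below are placeholders chosen for illustration, since the actual patterns are not listed here; only the left/right split and the two modes, L-AND-R and L-OR-R, come from the description above.

```python
# Placeholder object sets: these tuples are purely illustrative and are not
# the patterns used in the paper.
LEFT_OBJECTS = {(1, 1, 1, 0), (1, 1, 0, 1), (1, 0, 1, 0)}
RIGHT_OBJECTS = {(0, 1, 1, 1), (1, 0, 1, 1), (0, 1, 0, 1)}

def retina_target(pixels, mode="L-AND-R"):
    """Correct answer for an 8-pixel input under the given environment."""
    if len(pixels) != 8:
        raise ValueError("expected 8 pixels")
    left, right = tuple(pixels[:4]), tuple(pixels[4:])
    left_hit = left in LEFT_OBJECTS      # sub-problem 1: left 2x2 block
    right_hit = right in RIGHT_OBJECTS   # sub-problem 2: right 2x2 block
    if mode == "L-AND-R":
        return left_hit and right_hit
    if mode == "L-OR-R":
        return left_hit or right_hit
    raise ValueError(f"unknown mode: {mode}")
```

Switching `mode` changes only the final combination step, which is what makes the task variable in a modular way.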

Another paper exploring this hypothesis is Computational Consequences of a Bias toward Short Connections (1992). This paper focuses on architectural constraints selecting for fewer/shorter connections, rather than imposing an explicit penalty. However, it doesn’t use particularly advanced machine learning methods, and focuses more narrowly on the human brain than on general modularity across evolved systems, making it less relevant for our purposes.

Testing this idea: We plan to introduce this type of selection pressure in our replication of the Kashtan & Alon 2005 paper. That way we can test it against the MVG hypothesis, and see whether the two combined are enough to produce modularity. We will experiment with different cost measures, although to the extent that this is a strong causal factor leading to modularity, we would expect the results to be relatively robust to different ways of calculating connection costs (this was also what the 2013 paper found).

We also have plans to test the locality idea, by instantiating neurons in some kind of metric space (e.g. 2D Euclidean space, or just using some kind of indexing convention), and penalising connection costs proportionally to their distance.
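A minimal sketch of such a distance-based penalty, assuming neurons are assigned fixed 2D coordinates and each connection is charged its absolute weight times the Euclidean distance it spans. The data layout (a `positions` dict and a `connections` dict) and the choice to scale by weight magnitude are assumptions for illustration, not a settled design.

```python
import math

def wiring_cost(positions, connections):
    """Sum of |weight| * Euclidean distance over all connections.

    positions: dict mapping neuron id -> (x, y) coordinates.
    connections: dict mapping (source, target) -> weight.
    """
    return sum(
        abs(weight) * math.dist(positions[src], positions[dst])
        for (src, dst), weight in connections.items()
    )
```

Adding this quantity (times some coefficient) to the training loss makes long-range connections more expensive than short ones, so any clustering that emerges would be spatially local.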

For our team's most recent post, please see here.

13 comments

Comments sorted by top scores.

comment by Jan (jan-2) · 2022-04-04T14:38:42.458Z · LW(p) · GW(p)

Theory #4 appears very natural to me, especially in the light of papers like Chen et al 2006 or Cuntz et al 2012. And another supporting intuition from developmental neuroscience is that development is a huge mess and that figuring out where to put a long-range connection is really involved. And while there can be a bunch of circuit remodeling on a local scale, once you have established a long-range connection, there is little hope of substantially rewiring it.

In case you want to dive deeper into this (and you don't want to read all those papers), I'd be happy to chat more about this :)

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-04-04T16:17:20.719Z · LW(p) · GW(p)

Yes, a chat could definitely be valuable. I'll pm you.

I agree that connection costs definitely look like a real, modularity promoting effect. Leaving aside all the empirical evidence, I have some trouble imagining how it could plausibly not be. If you put a ceiling on how many connections there can be, the network has got to stick to the most necessary ones. And since some features of the world/input data are just more "interlinked" than others, it's hard to see how the network wouldn't be forced to reflect that in some capacity.

I just don't think it's the only modularity promoting effect.

comment by Steven Byrnes (steve2152) · 2022-04-07T13:54:38.488Z · LW(p) · GW(p)

Where does “division of labor” fit in (e.g. Adam Smith’s discussion of the pin factory)? Like, 10^5 cells designed to pump blood + 10^5 cells designed to digest food would do better than 2×10^5 cells designed to simultaneously pump blood and digest food, right?

I guess I'm more generally concerned that you're not distinguishing specialization from modularity. Specialization is about function, modularity is about connectivity, it seems to me. Organs are an example of specialization, not modularity, I think. Do neural networks have specialization? Yes, e.g. Chris Olah et al.'s curve detectors are akin to having cells specialized for pumping blood, I figure.

Organs are also "modular" but I think only for logistical reasons: the cells that digest food should all be close together, so that we can put the undigested food near them; the cells that process information should all be close together, because communication is slow; muscle cells need to be close together and lined up, so that their forces can add up. Etc. Basically, there's no teleportation in the real world. If there was teleportation of materials and forces and information etc. within an organism's body, I wouldn't necessarily expect "organs" in the normal sense. Instead maybe the "heart cells" and "digestion cells" and neurons etc. would all be scattered randomly about the body. And there would be a lot more connections between different parts. It would be better to have a direct teleportation link between the lungs and every cell of the body (to transport oxygen), and likewise it would be better to have a direct teleportation link between the digestive tract and every cell of the body (to transport sugar), etc., right? And if you did, a diagram of connectivity would make it look not very modular.

Neural nets on a computer do have teleportation, to some extent. For example, in a fully-connected layer, there's no penalty for having every upstream neuron connect to every downstream neuron.

Incidentally, it's not obvious to me that current ML algorithms are always significantly less modular than the human brain. Granted, the human brain has cortex, striatum, cerebellum, habenula, etc. But likewise a model-based RL algorithm could have an actor network, a critic network, a world-model network, human-written source code for the reward function, human-written source code for tree search, etc.

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-04-07T21:11:04.804Z · LW(p) · GW(p)

Regarding specialisation vs. modularity: I view the two as intimately connected, though not synonymous.

What makes a blood pumping cell a blood pumping cell and not a digestive cell? The way you connect the atoms, ultimately. So how come, when you want both blood pumping and digestion, it apparently works better to connect the atoms such that there's a cluster that does blood pumping, and a basically separate cluster that does digesting, instead of a big digesting+blood pumping cluster?

You seem to take it as a given that it is so, but that's exactly the kind of thing we're setting out to explain!

"Because physics is local" is one reason, clearly. But if it were the only reason, designs without locality on our computers would never be modular. But they are, sometimes!

Re: logistical reasons: Yes, that's local connection cost due to locality again, basically.

We have not quantitatively scored modularity in current ML compared to modularity in the human brain (taking each neuron as a node) yet. It would indeed be interesting to see how that comes out. We have the Q score of some CNNs, thanks to CHAI. Do you know of any paper trying to calculate Q for the brain?

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-04-07T21:41:15.427Z · LW(p) · GW(p)

> when you want both blood pumping and digestion, it apparently works better to connect the atoms such that there's a cluster that does blood pumping, and a basically separate cluster that does digesting, instead of a big digesting+blood pumping cluster?

Are you asking: why doesn't each individual cell in the cluster do both digestion and blood pumping? Or are you asking: why aren't the digestion cells and blood-pumping cells intermingled? I suspect that those two questions have different answers ("specialization" and "logistics/locality" respectively).

> Do you know of any paper trying to calculate Q for the brain?

Beats me. I had been assuming that you were thinking of gross anatomy ("the cerebellum is over here, and the cortex is over there, and they look different and they do different things etc."), by analogy with the liver and heart etc.

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-04-07T21:58:09.634Z · LW(p) · GW(p)

I am asking both! I suspect the reasons are likely to be very similar, in the sense that you can find a set of general modularity theorems that will predict both phenomena.

Why does specialisation work better? It clearly doesn't always. A lot of early NN designs love to mash everything together into an interconnected mess, and the result performs better on the loss function than modular designs that have parts specialised for each subtask.

Are connection costs due to locality a bigger deal for inter-cell dynamics than intra-cell dynamics? I'd guess yes. I am not a biologist, but it sure seems like interacting with things in the same cell as you should be relatively easier, though still non-trivial.

Are connection costs in the inter-cell regime so harsh that they completely dominate all other modularity selection effects in that regime, so we don't need to care about them? I'm not so sure. I suspect not.

> Beats me. I had been assuming that you were thinking of gross anatomy ("the cerebellum is over here, and the cortex is over there, and they look different and they do different things etc."), by analogy with the liver and heart etc.

I'm thinking about those too. The end goal here is literally a comprehensive model for when modularity happens and how much, for basically anything at any scale that was made by an optimisation process like genetic algorithms, gradient descent, ADAM, or whatever.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-04-08T00:05:21.655Z · LW(p) · GW(p)

There's another issue that one individual cell, at any one time, can only have one membrane potential, and can only have one concentration of calcium ions in its cytoplasm, and can only have one concentration of any given protein in its cytoplasm, and can only have one areal density of any given integral membrane protein on its outer membrane, and so on. (If I understand correctly.) If the optimal values of any of these parameters (say, potassium ion concentration in the cytoplasm) are different for muscle-type-functionality versus neuron-type-functionality versus immune-system-type-functionality etc. (which seems extremely likely to me), then there would be an obvious benefit to splitting those functions into different cells, each with its own separate cytoplasm and so on.

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-04-08T00:21:16.405Z · LW(p) · GW(p)

But why is that so? Why are there no parameter combinations here that let you do well simultaneously on all of these tasks, unless you split your system into parts? That is what we are asking.

Could it be that such optima just do not exist? Maybe. It's certainly not how it seems to work out in small neural networks, but perhaps for some classes of tasks, they really don't, or are infrequent enough to not be findable by the optimiser. That's the direct selection for modularity hypothesis, basically.

I don't currently favour that one though. The tendency of our optimisers to connect everything to everything else even when it seems to us like it should be actively counterproductive to do so, but still end up with a good loss, suggests to me that our intuition that you can't do good while trying to do everything at once is mistaken. At least as long as "doing good" is defined as scoring well on the loss function. If you add in things like robustness, you might have a very different story. Thus, MVG.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-04-08T01:11:37.767Z · LW(p) · GW(p)

For example, gastric parietal cells excrete hydrochloric acid into the stomach. The cell is a little chemical factory. For the chemistry steps to work properly, the potassium ion concentration in the cytoplasm needs to be maintained at a certain level.

Meanwhile, neurons communicate information by firing action potentials. These involve the potassium concentration in the cytoplasm dropping rapidly at certain times, before slowly returning to a higher level.

So, now suppose the same cell is tasked with both excreting hydrochloric acid and communicating information by firing action potentials. I claim that it wouldn't be able to do both well. Either the potassium concentration in the cytoplasm is maintained at the level which best facilitates the acid-producing chemical reactions, or else the potassium level in the cytoplasm is periodically dropping rapidly and climbing back up during action potentials. It can't be both. Right?

(I'm not an expert on cell biology and could be messing up some details here, but I feel confident that there are lots and lots of true stories in this general category.)

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-04-08T08:49:41.054Z · LW(p) · GW(p)

It e.g. wouldn't use potassium to send signals, I'd imagine. If a design like this exists, I'd expect it to involve totally different parts and steps that do not conflict like this. Something like a novel (to us) kind of ion channel, maybe, or something even stranger.

Does it seem to you that the constraints put on cell design are such that the ways of sending signals and digesting things we currently know of are the only ones that seem physically possible?

This is not a rhetorical question. My knowledge of cell biology is severely lacking, so I don't have deep intuitions telling me which things seem uniquely nailed down by the laws of physics. I just had a look at the action potential wikipedia page, and didn't immediately see why using potassium ions was the only thing evolution could've possibly done to make a signalling thing. Or why using hydrochloric acid would be the only way to do digestion.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2022-04-08T14:26:07.156Z · LW(p) · GW(p)

You're a chemical engineer. Your task is to manufacture chemicals A and B. But you only get one well-mixed tank: all the precursors of chemical A have to mix with each other and with the precursors of chemical B without undesired cross-reactions, and the A and B production processes need to take place in the same salinity environment and the same pH environment, etc.

Maybe that's doable.

Oh hey, now also please manufacture chemicals C,D,E,F,G,H,I,J and K. You still have one well-mixed tank in which to do all those things simultaneously.

Maybe that's doable too. Or maybe not. And if it is doable at all, presumably the efficiency would be terrible.

At some point, you're going to throw up your hands and go to your boss and say “This is insane, we need more than one well-mixed tank to get all this stuff done. At least let me have a high-pH tank for manufacturing A,B,E,G,H and a low-pH tank for manufacturing C,D,F,I,J,K. Pretty please, boss?”

Anyway, you can say “Design space is huge, I bet there's a way to manufacture all these chemicals in the same well-mixed tank.” Maybe that's true, I don't know. But my response is: there is a best possible way to manufacture A, and there is a best possible way to manufacture B, etc., and these do not involve the exact same salinity, pH, etc. Therefore, when we put everything into one tank, that's only possible by making tradeoffs. A chemical plant that has multiple tanks will be way better.

Back to biology. (I'm not an expert on cell biology either, be warned.)

A cell cytoplasm is a well-mixed chemical reactor tank, as far as I understand. It's not compartmentalized (with certain exceptions).

My strong impression is that there are no one-size-fits-all jack-of-all-trades cells. Not in multicellular life, and not in single-cell life either. Different single-cell species specialize in performing different chemical reactions.

Thus, we can have single-cell organism Q that eats food X and spits out waste product Y, and then a different single-cell organism P eats Y and spits out waste product Z, etc. We can kibitz from the sideline that Q is being “wasteful”: why spit out Y as waste, when in fact Y was digestible in principle—after all, P just digested it! But from my perspective this is pretty much expected. Maybe digesting Y and digesting X require very different salinity or pH, for example. So in order to digest Y at all, the cell Q would need to be much worse at digesting X, and that tradeoff winds up being not worthwhile.

> didn't immediately see why using potassium ions was the only thing evolution could've possibly done to make a signalling thing. Or why using hydrochloric acid would be the only way to do digestion.

It could be chloride ions instead of potassium, as far as I know. But there are only so many elements on the periodic table, and many fewer that are abundant in the environment, and they all have different properties which may be disadvantageous for a particular function. There are other ways to do digestion, but they don't all spend identical resources and produce identical results, right? So there are tradeoffs.

comment by Derek M. Jones (Derek-Jones) · 2022-04-04T13:59:26.410Z · LW(p) · GW(p)

Is the influence of the environment on modularity a second order effect?

A paper by Mengistu found, via simulation, that modularity evolves because of the presence of a cost for network connections.

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-04-04T14:08:24.271Z · LW(p) · GW(p)

Not really sure what you mean by the first part. E.g. "the modularity in the environment => modularity in the system" explanation definitely doesn't cast it as a second order effect.

Yes, I guess we can add that one to the pile, thanks. Honestly, I feel like it's basically confirmed that connection costs play a significant part. But I don't think they explain all there is to know about selection for modularity. The adaptation/generality connection just seems too intuitive and well backed to not be important.