Immortality or death by AGI

post by ImmortalityOrDeathByAGI · 2023-09-21T23:59:59.545Z · 30 comments

This is a link post for https://forum.effectivealtruism.org/posts/KkmbSbu2MhLmyj2Rd/immortality-or-death-by-agi

Contents

- The model & results
- Assumptions
  - Without AGI, people keep dying at historical rates
  - Metaculus timelines
  - Probability of surviving AGI
  - Probability that, conditioning on surviving AGI, we eventually solve aging
  - Time between surviving AGI and solving aging
  - No assumptions about continuous personal identity
- Appendix A: This does not imply accelerating AI development
- Appendix B: Most life extension questions on Manifold are underpriced
- 30 comments

AKA My Most Likely Reason to Die Young is AI X-Risk

TL;DR: I made a model that takes into account AI timelines, the probability of AI going wrong, and the probabilities of dying from other causes. I found that the main “end states” for my life are either dying from AGI due to a lack of AI safety (at 35%), or surviving AGI and living to see aging solved (at 43%).

Meta: I'm posting this under a pseudonym because many people I trust had a strong intuition that I shouldn't post under my real name, and I didn't feel like investing the energy to resolve the disagreement. I'd rather people didn't de-anonymize me.

The model & results

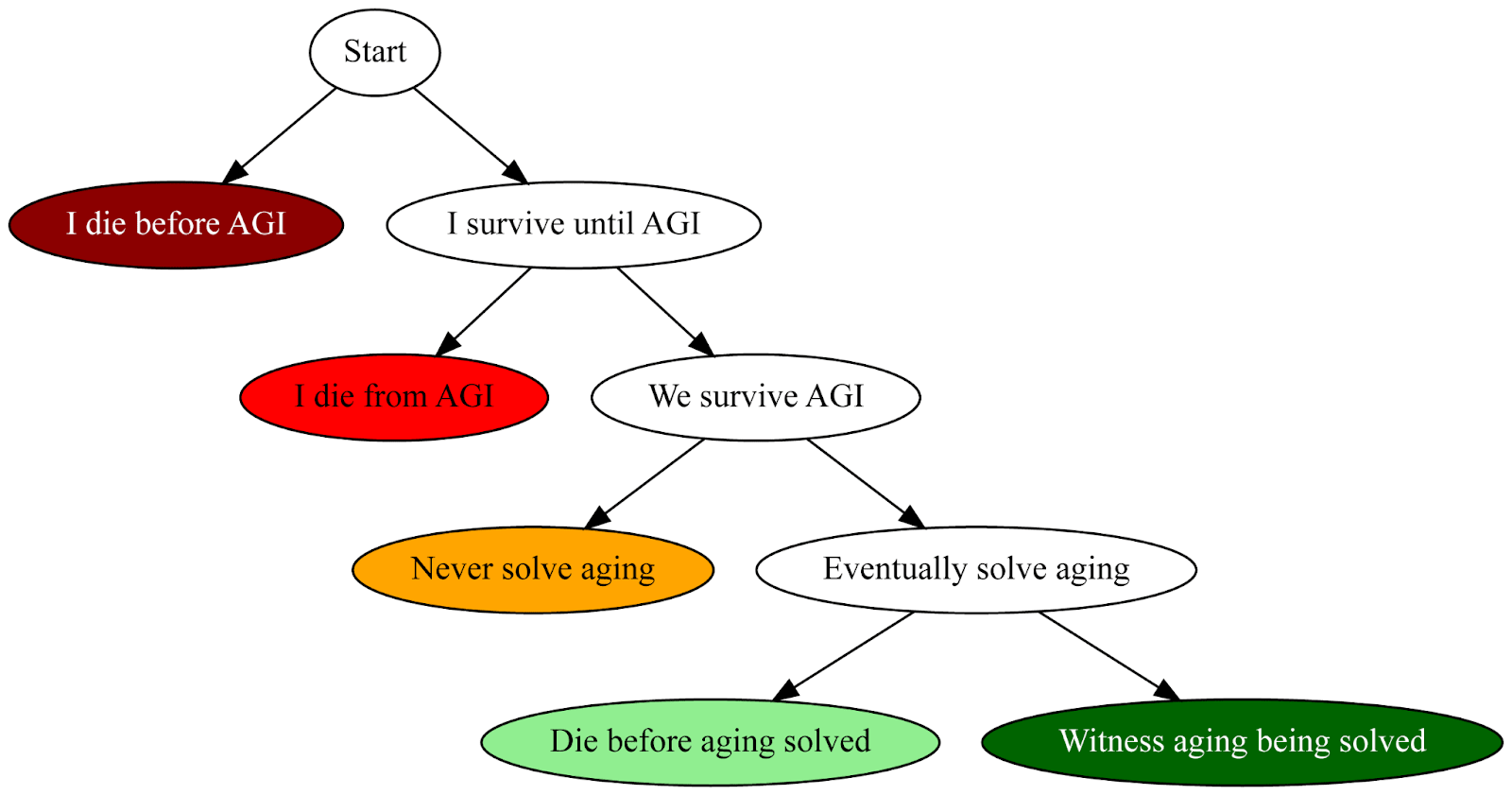

I made a simple probabilistic model of the future, which takes seriously the possibility of AGI being invented soon, its risks, and its effects on technological development (particularly in medicine):

- Without AGI, people keep dying at historical rates (following US actuarial tables)

- At some point, AGI is invented (following Metaculus timelines)

- At the point AGI is invented, there are two scenarios (following my estimates of humanity’s odds of survival given AGI at any point in time, which are relatively pessimistic):

  - We survive AGI.

  - We don’t survive AGI.

- If we survive AGI, there are two scenarios:

  - We never solve aging (maybe because aging is fundamentally unsolvable or we decide not to solve it).

  - AGI is used to solve aging.

- If AGI is eventually used to solve aging, people keep dying at historical rates until that point.

- I model the time between AGI and aging being solved as an exponential distribution with a mean time of 5 years.
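The model above can be sketched as a short Monte Carlo simulation. This is a minimal sketch, not the author's notebook: the mortality curve is a made-up Gompertz-style placeholder standing in for the US actuarial table, the lognormal spread `SIGMA` is an assumed value, and since the post gives no formula for its pessimistic survival curve, the sketch uses the "conventional" doom curve described later in the post (15% in 2023, decaying to 1% by 2060). The end states, the 2034 median, the 80% chance of eventually solving aging and the 5-year mean gap come from the post; all function names are hypothetical.

```python
import math
import random
from collections import Counter

BIRTH_YEAR = 2001
START_YEAR = 2023        # condition on being alive in 2023
MEDIAN_AGI_YEARS = 11    # median AGI year of 2034, as in the post
SIGMA = 0.8              # ASSUMED lognormal spread (not given in the post)
P_SOLVE_AGING = 0.8      # P(eventually solve aging | survive AGI), from the post
MEAN_GAP_YEARS = 5.0     # mean of the exponential AGI-to-aging-solved gap

def annual_death_prob(age):
    # PLACEHOLDER Gompertz-style hazard, NOT the US actuarial table the post uses
    return min(1.0, 0.00005 * math.exp(0.09 * age))

def p_doom(agi_year):
    # "Conventional" curve: 15% for AGI in 2023, decaying exponentially to 1% in 2060
    rate = math.log(15) / (2060 - 2023)
    return 0.15 * math.exp(-rate * (agi_year - 2023))

def sample_death_year(rng):
    # Death without AGI: walk forward one year at a time at "historical" rates
    year = START_YEAR
    while rng.random() >= annual_death_prob(year - BIRTH_YEAR):
        year += 1
    return year + rng.random()  # die at a uniform point within that year

def simulate_once(rng):
    death = sample_death_year(rng)
    agi = START_YEAR + rng.lognormvariate(math.log(MEDIAN_AGI_YEARS), SIGMA)
    if death < agi:
        return "die before AGI"
    if rng.random() < p_doom(agi):
        return "die from AGI"
    if rng.random() >= P_SOLVE_AGING:
        return "survive AGI, aging never solved"
    # People keep dying at historical rates until aging is solved
    solved = agi + rng.expovariate(1.0 / MEAN_GAP_YEARS)
    if death < solved:
        return "survive AGI, die before aging is solved"
    return "witness aging being solved"

def run(n=100_000, seed=0):
    """Return the share of simulated lives ending in each end state."""
    rng = random.Random(seed)
    counts = Counter(simulate_once(rng) for _ in range(n))
    return {outcome: count / n for outcome, count in counts.items()}
```

Calling `run()` returns a dict mapping each end state to its simulated share. With the placeholder mortality and the conventional doom curve, the numbers will not match the post's 10/35/11/1/43 split; the point is the structure, where a single sampled natural death year is compared against both the AGI date and the aging-solved date.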

Using this model, I ran Monte Carlo simulations to predict the probability of the main end states of my life (as someone born in 2001 who lives in the US):

- I die before AGI: 10%

- I die from AGI: 35%

- I survive AGI but die because we never solve aging: 11%

- I survive AGI but die before aging is solved: 1%

- I survive AGI and live to witness aging being solved: 43%

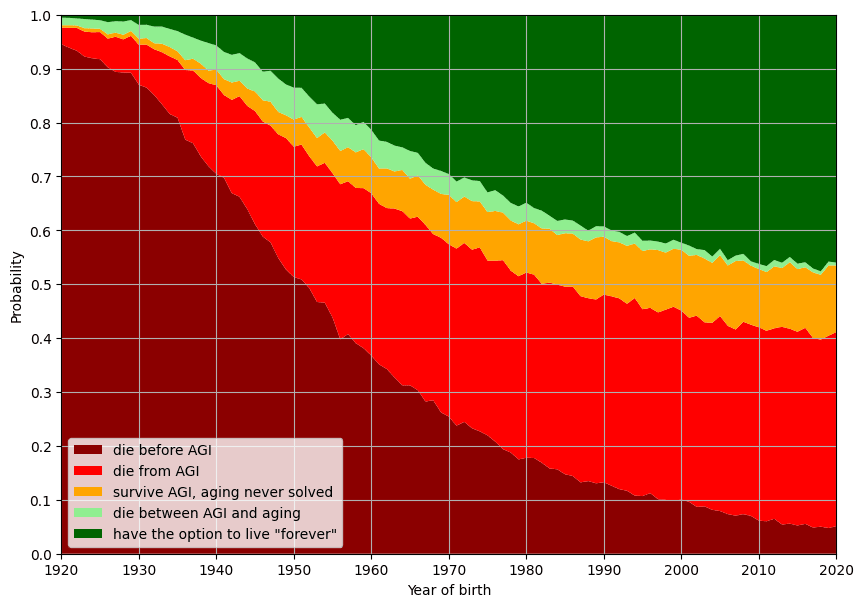

Here’s what my model implies for people based on their year of birth, conditioning on them being alive in 2023:

As expected, the earlier someone was born, the likelier it is that they will die before AGI. The later someone was born, the likelier it is that they will either die from AGI or have the option to live for a very long time due to AGI-enabled advances in medicine.

Following my (relatively pessimistic) AI safety assumptions, for anyone born after ~1970, dying by AGI and having the option to live “forever” are the two most likely scenarios. Most people alive today have a solid chance at living to see aging cured. However, if we don’t ensure that AI is safe, we will never be able to enter that future.

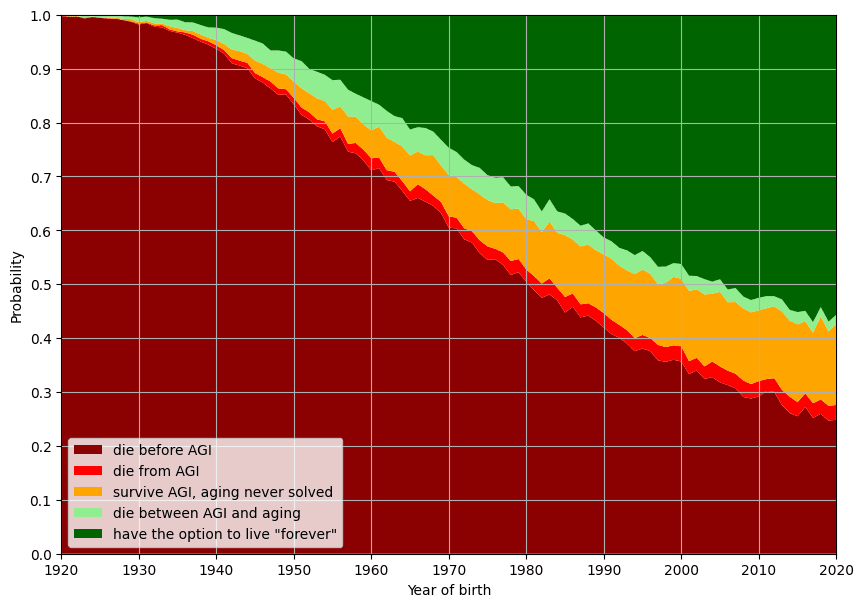

I also ran this model with more conventional estimates of timelines and P(everyone dies | AGI): timelines three times as long as the Metaculus timelines, and a P(everyone dies | AGI) that is 15% in 2023 and decays exponentially so that it hits 1% in 2060.

For the more conventional timelines and P(everyone dies | AGI), the modal scenarios are dying before AGI, and living to witness aging being solved. Dying from AGI hovers around 1-4% for most people.

Assumptions

Without AGI, people keep dying at historical rates

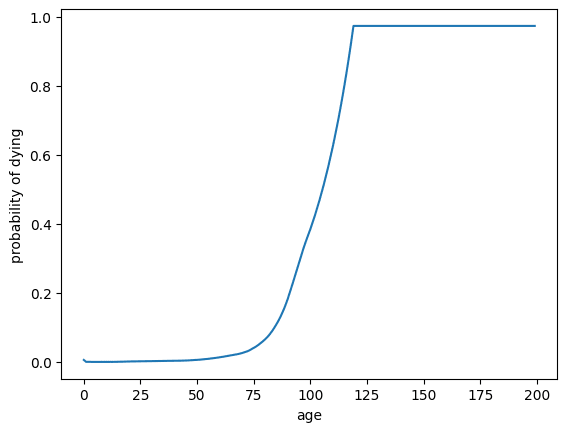

I think this is probably roughly correct: we’re likely to see advances in medicine before AGI, but nuclear and biorisk roughly counteract that (one could model how these interact, but I didn’t want to add more complexity to the model). I use the US actuarial life table for men (which is very similar to the one for women) to determine the probability of dying at any particular age. For ages after 120 (which is as far as the table goes), I simply repeat the probability of dying at age 120, which is 97.3%. This is inaccurate, but I expect the final estimates to be mostly correct for people born after 1940.
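The tail rule can be made concrete with a small helper. This is a sketch assuming the table is a Python list `qx` indexed by age, where `qx[age]` is the one-year probability of dying at that age; the function names are hypothetical, and the toy table is made up apart from the 97.3% final entry quoted above.

```python
import random

def death_prob(age, qx):
    """One-year probability of dying at `age`.

    Ages beyond the end of the table reuse the last entry, mirroring the
    post's rule of repeating the age-120 value for everyone older.
    """
    return qx[min(age, len(qx) - 1)]

def survival_prob(from_age, to_age, qx):
    """P(alive at to_age | alive at from_age): product of one-year survival odds."""
    p = 1.0
    for age in range(from_age, to_age):
        p *= 1.0 - death_prob(age, qx)
    return p

def sample_age_at_death(current_age, qx, rng):
    """Draw an age at death for someone alive at current_age (no AGI effects)."""
    age = current_age
    while rng.random() >= death_prob(age, qx):
        age += 1
    return age

# HYPOTHETICAL toy table for ages 0-120; only the final 0.973 comes from the post
toy_qx = [0.001] * 60 + [0.02] * 40 + [0.3] * 20 + [0.973]
print(survival_prob(22, 80, toy_qx))
print(sample_age_at_death(22, toy_qx, random.Random(0)))
```

Because everyone past 120 faces a 97.3% annual death probability, almost nobody survives more than a year or two beyond the table, which is why the rule barely matters for people born after 1940.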

Metaculus timelines

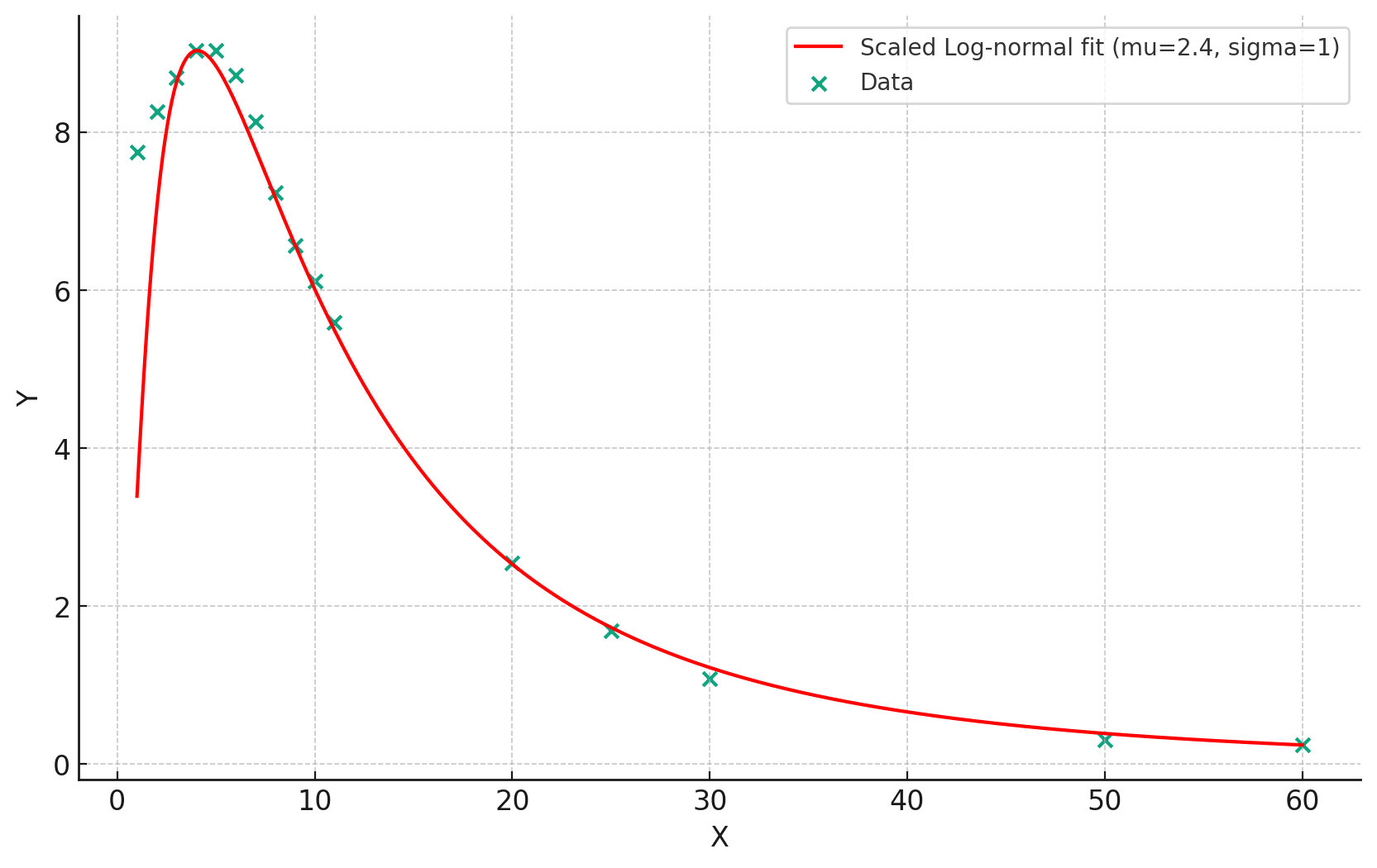

I fit a lognormal distribution to this Metaculus question, discarding years before 2023. Metaculus doesn't have a way to draw samples from its distributions, so I messily approximated the function by finding the PDF at various points and adjusting a lognormal distribution until it looked roughly right. The median of the distribution is 2034. This is what the distribution looks like:

For the conventional estimate, I just multiplied each sample (measured in years after 2023) by three to make the timelines three times longer (so the median moves from 11 years after 2023 to 33, i.e. 2056).
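A sketch of the timeline distribution and the "conventional" stretch, assuming the lognormal is placed over years after 2023 (which is how discarding years before 2023 and a 2056 median fit together: 2023 + 3 × 11 = 2056). The spread `SIGMA` is an assumed value, since the post only reports the median, and `sample_agi_years` is a hypothetical name.

```python
import math
import random
import statistics

START_YEAR = 2023
MEDIAN_YEARS = 2034 - START_YEAR  # 11 years: the post's median AGI year of 2034
SIGMA = 0.9                       # ASSUMED spread; the post does not report a fitted sigma

def sample_agi_years(n, stretch=1.0, seed=0):
    """Draw AGI arrival years; stretch=3 gives the 'conventional' timelines.

    Samples are years after 2023, so no draw falls before 2023. Multiplying a
    lognormal by a constant c shifts mu by ln(c) and leaves sigma unchanged,
    so the median moves from 2023 + 11 to 2023 + 3 * 11 = 2056.
    """
    rng = random.Random(seed)
    mu = math.log(MEDIAN_YEARS)
    return [START_YEAR + stretch * rng.lognormvariate(mu, SIGMA) for _ in range(n)]

metaculus_like = sample_agi_years(50_000)
conventional = sample_agi_years(50_000, stretch=3.0)
print(statistics.median(metaculus_like), statistics.median(conventional))
```

Note that stretching the offset also stretches the spread in calendar years: the conventional timelines are not just shifted later but are three times as wide.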

Probability of surviving AGI

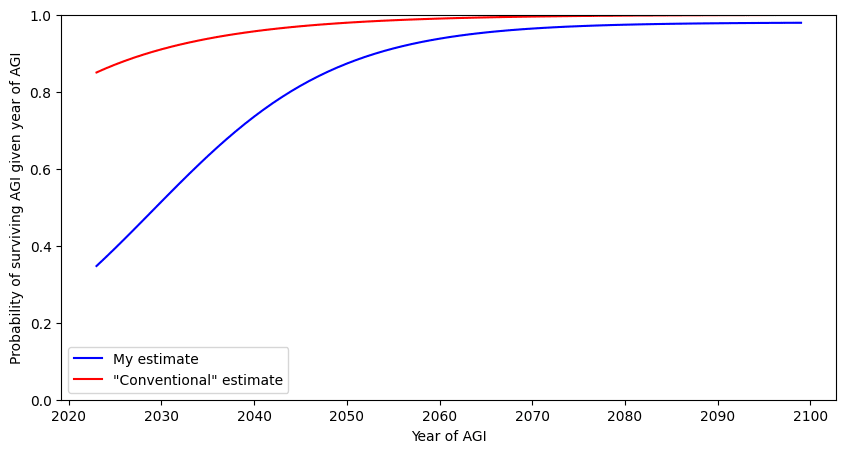

This is a very rough estimate of my probability of surviving AGI, conditional on AGI being invented at any particular time. Here’s what the graph looks like. My estimate levels out at 98%, while the conventional one approaches 100%. I actually think my probability approaches 100% as well, but 98% is close enough to my estimate.

For the conventional estimate, I simply have an exponentially decaying probability of not surviving which starts at 15% given AGI in 2023 and drops to 1% by 2060.
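The two anchor points pin down the decay rate: solving 0.15 · e^(-37k) = 0.01 gives k = ln(15)/37 ≈ 0.073 per year, so the conditional probability of not surviving halves roughly every 9.5 years. A minimal sketch of the curve, with hypothetical function names:

```python
import math

P_START, YEAR_START = 0.15, 2023  # P(everyone dies | AGI in 2023), from the post
P_END, YEAR_END = 0.01, 2060      # P(everyone dies | AGI in 2060), from the post

# Solve P_START * exp(-k * (YEAR_END - YEAR_START)) == P_END for k
DECAY_RATE = math.log(P_START / P_END) / (YEAR_END - YEAR_START)  # ~0.0732 per year
HALF_LIFE = math.log(2) / DECAY_RATE                               # ~9.5 years

def p_doom_conventional(agi_year):
    """P(everyone dies | AGI arrives in agi_year) under the conventional estimate."""
    return P_START * math.exp(-DECAY_RATE * (agi_year - YEAR_START))

def p_survive_conventional(agi_year):
    return 1.0 - p_doom_conventional(agi_year)

for year in (2023, 2030, 2040, 2060):
    print(year, f"{p_survive_conventional(year):.3f}")
```

Past 2060 the curve keeps decaying toward 0, which is why the conventional survival probability approaches 100%.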

Probability that, conditioning on surviving AGI, we eventually solve aging

I put this at 80% without thinking about it much. I think the main scenarios where this doesn’t happen involve us deciding to replace humans who are currently alive with something we consider more valuable (which is incompatible with keeping currently living humans around), or succumbing to some existential risk after we survive AGI.

Time between surviving AGI and solving aging

I model this as an exponential distribution with a mean time of 5 years. I mostly think of solving aging as requiring a certain amount of “intellectual labor” (distributed lognormally), with the amount of intellectual labor per unit of time increasing rapidly with the advent of AGI as its price drops dramatically. I have very wide error bars on how much intellectual labor needs to be invested and on how much intellectual labor output will speed up given AGI, so rather than add more moving parts to the model, I just throw an exponential distribution at it. In the Jupyter notebook, it’s easy to replace this parameter with your own distribution.
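A sketch of the gap distribution and a drop-in alternative. The exponential part follows the post: with a mean of 5 years, about 63% of the mass falls within 5 years, since 1 - e^(-1) ≈ 0.632. The labor-based variant is a hypothetical illustration of the "intellectual labor" framing above; its three parameters are made up and are not estimates from the post.

```python
import math
import random

MEAN_GAP_YEARS = 5.0  # from the post

def gap_exponential(rng):
    """Years between surviving AGI and solving aging: Exponential with mean 5."""
    return rng.expovariate(1.0 / MEAN_GAP_YEARS)

def prob_solved_within(years):
    """Closed-form CDF of the exponential gap."""
    return 1.0 - math.exp(-years / MEAN_GAP_YEARS)

def gap_labor_based(rng, median_labor=50.0, sigma=1.5, labor_per_year=10.0):
    """HYPOTHETICAL alternative: lognormal labor required / post-AGI labor output per year.

    All parameters are illustrative placeholders in arbitrary 'labor units'.
    """
    return rng.lognormvariate(math.log(median_labor), sigma) / labor_per_year

rng = random.Random(0)
samples = [gap_exponential(rng) for _ in range(200_000)]
print(f"mean gap: {sum(samples) / len(samples):.2f} years")
print(f"P(solved within 5 years): {prob_solved_within(5):.3f}")
```

With these placeholder values the labor-based gap has a 5-year median but a much longer right tail than the exponential, which is the kind of difference swapping in your own distribution would expose.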

No assumptions about continuous personal identity

I think there are some pretty weird scenarios for how the future could go, including ones where:

- biological humans live for so long that calling them the “same person” applies much less than it does for people alive today, who generally live less than 100 years and change relatively little over time

- humans become digital, and it’s unclear whether the uploading process “makes a new person” or “duplicates an existing person”

- the human experience changes so much over time that we enter an unrecognizable state, where minds might relive the same moment over and over, or exist for very short periods of time, or change so quickly that there’s barely any continuity over time.

I’m not trying to capture any of these questions in the model. The main question this model is trying to answer is “Can I expect to witness aging being solved?” (whatever “I” means).

Appendix A: This does not imply accelerating AI development

One might look at the rough 50/50 chance at immortality given surviving AGI and think "Wow, I should really speed up AGI so I can make it in time!". But the action space is more something like:

- Work on AI safety (transfers probability mass from "die from AGI" to "survive AGI")

  - The amount of probability transferred is probably at least a few microdooms per person.

- Live healthy and don't do dangerous things (transfers probability mass from "die before AGI" to "survive until AGI")

  - Intuitively, I'm guessing one can transfer around 1 percentage point of probability by doing this.

- Do nothing (leaves the probability distribution the same)

- Preserve your brain if you die before AGI (kind of transfers probability mass from "die before AGI" to "survive until AGI")

  - This is a weird edge case in the model, and it depends on various beliefs about preservation technology and whether being "revived" is possible

- Delay AGI (transfers probability mass from "die from AGI" to "survive AGI" and from "survive until AGI" to "die before AGI")

- Accelerate AGI (transfers probability mass from "survive AGI" to "die from AGI" and from "die before AGI" to "survive until AGI")

I think working on AI safety and healthy living seem like a much better choice than accelerating AI. I'd guess this is true even for a vast majority of purely selfish people.

For altruistic people, working on AI safety clearly trumps any other action in this space, as it has huge positive externalities. This is true for people who only care about current human lives (as one microdoom = 8,000 current human lives saved), and it's especially true for people who place value on future lives as well (as one microdoom = one millionth of the value of the entire long-term future).
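The microdoom conversion is plain arithmetic: a one-in-a-million reduction in the chance that everyone dies is worth, in expectation, one millionth of the roughly 8 billion people alive. A sketch, where the population figure is an approximation and the "few microdooms" per person is a hypothetical value of 3 chosen from the post's rough range:

```python
WORLD_POPULATION = 8_000_000_000  # approximate 2023 population implied by the post
MICRODOOM = 1 / 1_000_000         # a one-in-a-million change in P(everyone dies)

def expected_current_lives(microdooms):
    """Expected present-day lives saved by reducing P(doom) by `microdooms`."""
    return microdooms * MICRODOOM * WORLD_POPULATION

print(expected_current_lives(1))  # the post's "one microdoom = 8,000 lives"
print(expected_current_lives(3))  # HYPOTHETICAL: "a few" microdooms taken as 3
```

This counts only people alive today; valuing future lives as well multiplies the stakes far beyond these figures.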

This is a very simplified view of what it means to accelerate or delay AGI, which ignores that there are different ways to shift AGI timelines that transfer probability mass differently. In this model I assume that as timelines get longer, our probability of surviving AGI increases monotonically, but there are various considerations that make this assumption shaky and not generally true for every possible way to shift timelines (such as overhangs, different actors being able to catch up to top labs, etc.).

Appendix B: Most life extension questions on Manifold are underpriced

I assume that at least 60% of people with the option to live for a very long time in a post-AGI world will decide to live for a very long time. There are a bunch of life extension questions on Manifold. They ask whether some specific people, aged around 30 in 2023, will live to an age of 100, 150, 200, or 1000.

I think the probabilities for all of these ages are within a few percentage points of each other for any particular person, as I think it’s pretty unlikely that someone lives to 100 using AGI-enabled advances but decides not to live to 1000 in that same world.

I think all of these questions are significantly underpriced. One way to get the probability of someone born around 1990 living to 1000 years old is to take the probability of them "having the option to live forever" (which is 40% according to this model) and multiply it by the probability that they would say yes (which I think is around 60%). This puts the probability of someone born in 1990 living for more than 1000 years at around 24%.
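The estimate is a product of two probabilities. A sketch with the post's numbers; the market price below is a hypothetical placeholder, and the profit formula is the standard one for a binary YES share that pays 1 on a YES resolution, ignoring fees, market impact and the long wait until resolution.

```python
P_OPTION_TO_LIVE_FOREVER = 0.40  # model output for someone born around 1990 (from the post)
P_CHOOSES_TO = 0.60              # author's guess at the share who would say yes

p_live_past_1000 = P_OPTION_TO_LIVE_FOREVER * P_CHOOSES_TO

def expected_profit_per_yes_share(belief, price):
    """A YES share pays 1 if the question resolves YES, so its expected profit
    is belief - price (ignoring fees, market impact and time value of money)."""
    return belief - price

print(f"P(lives past 1000) ~ {p_live_past_1000:.0%}")
print(expected_profit_per_yes_share(p_live_past_1000, price=0.05))  # HYPOTHETICAL price
```

A question is "underpriced" in this sense whenever the market price sits below the 24% estimate; whether that makes buying worthwhile also depends on how long capital stays locked up.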

30 comments


comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-09-22T15:33:38.544Z

Time between surviving AGI and solving aging

I model this as an exponential distribution with a mean time of 5 years.

I just want to point out that one of the big hopes for initial survival of AGI is a worldwide regulatory delay in 'cranking it up'. Thus, AGI could exist in containment in labs but not be being used at 'full power' on any practical projects like solving aging or digitizing humans. In this scenario, I'm not sure whether you would count this as AGI not invented yet, since it's not truly in use, or whether you'd call this invented but survival still unclear since if it got loose we'd all die, or what. Basically, I want to bring up the possibility of a 'fuzzy post-invention time full of looming danger, spanning perhaps 20-30 years.'

↑ comment by Vladimir_Nesov · 2023-09-23T04:39:14.137Z

worldwide regulatory delay [...] 'fuzzy post-invention time full of looming danger, spanning perhaps 20-30 years.'

I expect something similar, but not to the extent of 20-30 years from regulatory delay alone. That would take banning research and DRM-free GPU manufacturing as well, possibly making it taboo on the cultural level worldwide, and confiscating and destroying existing GPUs (those without built-in compute governance features). Otherwise anyone can find dozens of GPUs and a LLaMA to apply the latest algorithmic developments, even when doing so is technically illegal. Preventing small projects is infeasible without a pivotal act's worth of change.

↑ comment by Donald Hobson (donald-hobson) · 2023-09-23T22:00:40.597Z

I don't know. Even a technical illegality makes it really hard to start an institution like OpenAI, where you openly have a large budget to hire top talent full time. It also means that the latest algorithms aren't published in top journals, only whispered in secret. Also, small hobbyist projects can't get million-dollar compute clusters easily.

↑ comment by Vladimir_Nesov · 2023-09-24T07:54:54.318Z

My "otherwise" refers to the original hypothetical where only "worldwide regulatory delay" is in effect, not banning of research. So there are still many academic research groups and they publish their research in journals or on arXiv, they are just not OpenAI-shaped individually (presumably the OpenAI-shaped orgs are also still out there, just heavily regulated and probably don't publish). As a result, the academic research is exactly the kind of thing that explores what's feasible with the kind of compute that it's possible for a relatively small unsanctioned AGI project to get their hands on, and 20-30 years of worldwide academic research is a lot of shoulders to stand on.

Hence the alternative I'm talking about of what (regrettably) seems necessary for the 20-30 years from the time when AGI first starts mostly working through enormous frontier models, and the world still remaining not-overturned at the end of this period: driving research underground without official funding and coordinated dissemination, getting rid of uncontrollable GPUs that facilitate it and make it applicable to building AGIs. That's not in the original hypothetical I'm replying to.

↑ comment by Donald Hobson (donald-hobson) · 2023-09-25T23:04:04.569Z

Suppose we get AI regulation that is a full but half-hearted ban.

There are laws against all AI research. If you start a company with a website, offices, etc., openly saying you're doing AI, police visit the office and shut it down.

If you publish an AI paper on a widely frequented bit of the internet under your own name, expect trouble.

If you get a cloud instance from a reputable provider and start running an AI model implemented the obvious way, expect it to probably be shut down.

The large science funders and large tech companies won't fund AI research. Maybe a few shady ones will do a bit of AI. But the skills aren't really there. They can't openly hire AI experts. If they get many people involved, someone will blow the whistle. You need to go to the dark web to so much as download a version of TensorFlow, and chances are that's full of viruses.

It's possible to research AI with your own time and your own compute. No one will stop you going around various computer stores and buying up GPUs. If you are prepared to obfuscate your code, you can get an AI running on cloud compute. If you want to share AI research under a pseudonym on obscure internet forums, no one will shut it down. (Extra boost if people are drowning such signal under a pile of plausible-looking nonsense.)

I would not expect much dangerous research to be done in this world. And implicit skills would fade. The reasons that make it so hard to repeat the moon landings would apply (everyone has forgotten the details, tech has moved on, details lost, organizational knowledge not there).

↑ comment by Vladimir_Nesov · 2023-09-26T05:14:55.183Z

This is not nothing, but I still don't expect 20-30 years (from first AGI, not from now!) out of this. There are three hypotheticals I see in this thread: (1) my understanding of Nathan Helm-Burger's hypothetical, where "regulatory delay" means it's frontier models in particular that are held back, possibly a compute threshold setup with a horizon/frontier distinction where some level of compute (frontier) triggers oversight, and then there is a next level of compute (horizon) that's not allowed by default or at all; (2) the hypothetical from my response, where all AI research and DRM-free GPUs are suppressed; and (3) my understanding of the hypothetical in your response, where only AI research is suppressed, but GPUs are not.

I think 20-30 years of uncontrollable GPU progress or collecting of old GPUs still overdetermines compute-feasible reinvention, even with fewer physically isolated enthusiasts continuing AI research in onionland. Some of those enthusiasts previously took part in a successful AGI project, leaking architectures that were experimentally demonstrated to actually work (the hypothetical starts at demonstration of AGI, not everyone involved will be on board with the subsequent secrecy). There is also the option of spending 10 years on a single training run.

comment by Lalartu · 2023-09-22T16:18:15.115Z

80% for AGI solving aging is very optimistic. Even just one possibility, that the people who decide what values AGI should have happen to be anti-immortalist, is IMO >20%.

↑ comment by Vladimir_Nesov · 2023-09-23T04:47:06.527Z

"Being able to" and "deciding to" is a crucial distinction, saying "solving" doesn't make the distinction.

comment by avturchin · 2023-09-22T12:26:41.584Z

It would be interesting to add to this model that AI has constantly increasing effects on human life expectancy: even ChatGPT now helps with diagnostics; companies use AI for drug development, etc.

↑ comment by ImmortalityOrDeathByAGI · 2023-09-22T21:04:59.533Z

As I said in another comment:

I totally buy that we'll see some life expectancy gains before AGI, especially if AGI is more than 10 years away. I mostly just didn't want to make my model more complex, and if we did see life expectancy gains, the main effect this would have is to take probability away from "die before AGI".

comment by pathos_bot · 2023-09-22T08:51:16.269Z

Assuming you have a >10% chance of living forever, wouldn't that necessitate avoiding all chance of accidental death to minimize the "die before AGI" section? If you assume AGI is inevitable, then one should simply maximize risk aversion to prevent cessation of consciousness, or at least permanent information loss of one's brain.

↑ comment by ImmortalityOrDeathByAGI · 2023-09-22T20:53:21.719Z

For a perfectly selfish actor, I think avoiding death pre-AGI makes sense (as long as the expected value of a post-AGI life is positive, which it might not be if one has a lot of probability mass on s-risks). Like, every micromort of risk you induce (for example, by skiing for one day) would decrease the probability you live in a post-AGI world by roughly 1/1,000,000. So, one can ask oneself, "would I trade this (micromort-inducing) experience for one millionth of my post-AGI life?", and I think the answer a reasonable person would give in most cases would be no. The biggest crux is just how much one values one millionth of their post-AGI life, which comes down to cruxes like its length (could be billions of years!), and its value per unit time (which could be very positive or very negative).

Like, if I expect to live for a million years in a post-AGI world where I expect life to be much better than the life I'm leading right now, then skiing for a day would take roughly one year away from my post-AGI life in expectation. I definitely don't value skiing that much.

This gets a bit complicated for people who are not perfectly selfish, as there are cases where one can trade micromorts for happiness, happiness for productivity, and productivity for impact on other people. So for instance, someone who works on AI safety and really likes skiing might find it net-positive to incur the micromorts because the happiness gained from skiing makes them better at AI safety, and them being better at AI safety has huge positive externalities that they're willing to trade their lifespan for. In effect, they would be decreasing the probability that they themselves live to AGI, while increasing the probability that they and other people (of which there are many) survive AGI when it happens.

↑ comment by Vladimir_Nesov · 2023-09-23T04:51:33.416Z

I think a million years is a weird anchor, starting at to might be closer to the mark. Also, there is a multiplier from thinking faster as an upload, so that a million physical years becomes something like subjective years.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-09-22T15:38:10.386Z

If you think the coming of AGI is inevitable, but you think that surviving AGI is hard and you might be able to help with it, then you should do everything you can to make the transition to a safe AGI future go well. Including possibly sacrificing your own life, if you value the lives of your loved ones in aggregate more than your life alone. In a sense, working hard to make AGI go well is 'risk aversion' on a society-wide basis, but I'd call the attitude of the agentic actors in this scenario more one of 'ambition maximizing' rather than 'personal risk aversion'.

comment by Michael Tontchev (michael-tontchev-1) · 2023-09-22T09:01:59.180Z

Surviving AGI and it solving aging doesn't imply you specifically get to live forever. In my mental model, safe AGI simply entails mass disempowerment, because how do you earn an income when everything you do is done better and cheaper by an AI? If the answer is UBI, what power do people have to argue for it and politically keep it?

↑ comment by ChristianKl · 2023-09-22T09:28:24.796Z

One common view is that most of the arguing about politics will be done by AI once AI is powerful enough to surpass human capabilities by a lot. The values that are programmed into the AI matter a lot.

↑ comment by Vladimir_Nesov · 2023-09-23T04:16:25.006Z

I count "You don't get a feasibly obtainable source of resources needed to survive" as an instance in the "Death from AGI" category. Of course, death from AGI is not mutually exclusive with AGI having immortality tech.

comment by Donald Hobson (donald-hobson) · 2023-09-23T16:43:21.913Z

- I survive AGI but die because we never solve aging: 11%

- I survive AGI but die before aging is solved: 1%

I can foresee a few scenarios where we have AGI-ish systems. Maybe something based on imitating humans that can't get much beyond human intelligence. Maybe we decide not to make it smarter for safety reasons. Maybe we are dealing with vastly superhuman AIs that are programmed to do one small thing and then turn off.

In these scenarios, there is still a potential risk (and benefit) from sovereign superintelligence in our future, i.e., good ASI, bad ASI and no ASI are all possibilities.

What does your "never solve aging" universe look like? Is this a full bio-conservative deathist superintelligence?

Or are you seriously considering a sovereign superintelligence searching for solutions and failing?

Also, why are you assuming solving aging happens after AGI?

I think the probabilities are around 50/50.

we’re likely to see advances in medicine before AGI, but nuclear and biorisk roughly counteract that

I am pretty sure that taking two entirely different processes and just declaring that they cancel out is not a good modeling assumption.

comment by Ilio · 2023-09-22T16:23:43.851Z

Interesting thoughts and model, thanks.

I think all of these questions are significantly underpriced.

Small nitpick here: price and probability are two different things. One could well agree that you’re right about the probability but still not buy, because the timeline is too long, or because a small chance of winning in one scenario is more important than a large chance of winning in the opposite scenario.

comment by Vladimir_Nesov · 2023-09-22T01:10:47.592Z

You talk about "we" surviving/not AGI. I suspect there is a lot of expected death from early AGI where there are survivors, in the time after first AGI but before there is superintelligence with enough hardware and free rein. With sufficient effectiveness of prosaic alignment to enable dangerous non-superintelligent systems that avoid developing stronger systems, and early scares, active development towards superintelligence might slow down for many years. In the interim, we only get the gradually escalating danger from existing systems (mostly biorisk, but also economic and global security upheaval) without a resolution either way.

comment by Going Durden (going-durden) · 2023-09-22T08:43:03.211Z

- Without AGI, people keep dying at historical rates (following US actuarial tables)

I'm not entirely convinced this is the case. There are several possible pathways towards life extension, including but not limited to the use of CRISPR, stem cells, and, most importantly, finding a way to curb free radicals, which seem to be the main culprits of just about every aging process. It is possible that we will "bridge" towards radical life extension long before the arrival of AGI.

↑ comment by ImmortalityOrDeathByAGI · 2023-09-22T21:00:52.484Z

I totally buy that we'll see some life expectancy gains before AGI, especially if AGI is more than 10 years away. I mostly just didn't want to make my model more complex, and if we did see life expectancy gains, the main effect this would have is to take probability away from "die before AGI".

comment by followthesilence · 2023-09-22T04:43:10.367Z

I'm pretty bullish on hypothetical capabilities of AGI, but on first thought decided a 40% chance of "solving aging" and stopping the aging process completely seemed optimistic. Then reconsidered and thought maybe it's too pessimistic. Leading me to the conclusion that it's hard to approximate this likelihood. Don't know what I don't know. Would be curious to see a (conditional) prediction market for this.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2023-09-22T15:40:45.141Z

I don't think it's necessary to assume 'stopping the aging process entirely'. I think you can say something like 'slow down the aging process enough that people the age of the author of this post don't die of old age before the next level of tech comes along' (e.g. gradual replacement of failing body parts with cybernetic ones, and eventually uploading to an immortal digital version).

↑ comment by Vladimir_Nesov · 2023-09-23T04:25:46.719Z

The 43% in the post is not the "chance of solving aging"; it bakes in survival to see it done. And anyway, the feasibility of solving aging seems inevitable: there is nothing mysterious about the problem, it just needs enough cognition and possibly experiments over technical details thrown at it, which superintelligence will have many, many orders of magnitude more than enough capability to carry out. The only issue might be deciding to do something else and leaving humans to die, and the first few months to years when new industry might still be too weak to undertake such projects casually, not technical feasibility.

comment by queelius · 2023-09-22T09:44:02.024Z

I'm reminded of Thomas Metzinger's book "Being No One," which argues that the self is essentially an illusion.

With this in mind, perhaps another option is: "I am no one, and thus was never born and will never die."

↑ comment by Richard_Kennaway · 2023-09-22T10:35:27.404Z

I'm reminded of 1984, in a quotation I've had occasion to post before:

'You do not exist,’ said O’Brien.

Once again the sense of helplessness assailed him. He knew, or he could imagine, the arguments which proved his own nonexistence; but they were nonsense, they were only a play on words. Did not the statement, ‘You do not exist’, contain a logical absurdity? But what use was it to say so? His mind shrivelled as he thought of the unanswerable, mad arguments with which O’Brien would demolish him.

‘I think I exist,’ he said wearily. ‘I am conscious of my own identity. I was born and I shall die. I have arms and legs. I occupy a particular point in space. No other solid object can occupy the same point simultaneously.'

And like Woody Allen, I would prefer to achieve immortality by not dying.
