E.T. Jaynes Probability Theory: The logic of Science I

post by Jan Christian Refsgaard (jan-christian-refsgaard), dentalperson · 2023-12-27T23:47:52.579Z

Contents:

- Part 1: A new Foundation of Probability Theory
  - Chapter 1: Plausible Reasoning
  - Chapter 2: The Quantitative Rules
- Part 2: Basic Statistics reinvented
  - Chapter 3: Elementary sampling theory (Logic vs. Propensity; Reality vs. Models)
  - Chapter 4: Elementary hypothesis testing (Priors; Bayesian Update)
  - Chapter 5: Queer uses for probability theory (ESP; Crows; Are Humans using Bayesian Reasoning?)
  - Chapter 6: Elementary Parameter Estimation
- Personal opinions

This book (available as a free pdf here) is one of the foundational texts for Rationalists; Eliezer refers to Jaynes as The Level Above Mine. While we agree that Jaynes is very lucid and the book is wonderful, we also think the book's target audience is graduate physics students. It occupies a sparse area of the literature, between statistics, math, causality, and philosophy of science, and it contains real, practical lessons about how to think about the world and its uncertainty. Here, we will try to give a review that shares the core insights of the book while skipping all the integrals and derivations. The book is basically 50% high-level math and 50% cool stories and rants that you can mostly understand without the math. It also covers a lot of material, and this review covers just the first 6 chapters, which introduce the fundamental concepts and problems.

The book has 2 parts:

- Part I (Chapters 1-10): Principles and elementary applications

- Part II (Chapters 11-22): Advanced applications

This is a review of chapters 1-6.

Part 1: A new Foundation of Probability Theory

The first two chapters of the book are by far the toughest. In chapter one, Jaynes gives a half-chapter refresher of formal logic and introduces the concept of probability as logic. Here the goal is to extend the rules of formal logic so that they also apply to probabilistic reasoning. This is a complicated task: going from strict binary truth values to continuous probabilities without assuming anything about what a probability is. This is also where the book gets its name, the logic of science, because, much like Eliezer, Jaynes views reasoning under uncertainty as the foundation of science.

Chapter 1: Plausible Reasoning

Jaynes opens the chapter with the following:

Suppose some dark night a policeman walks down a street, apparently deserted. Suddenly he hears a burglar alarm, looks across the street, and sees a jewelry store with a broken window. Then a gentleman wearing a mask comes crawling out through the broken window, carrying a bag which turns out to be full of expensive jewelry. The policeman doesn’t hesitate at all in deciding that this gentleman is dishonest. But by what reasoning process does he arrive at this conclusion? Let us first take a leisurely look at the general nature of such problems.

In formal logic we have statements such as "If A, then B; A is true, therefore B is true". This is called a strong syllogism. Here is an example (with premises above the line and conclusion below):

If Fisher died before age 100, then he died a Frequentist

Fisher died at the age of 72

———————————————————————————————————

Fisher died a Frequentist

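This, however, does not work for Jaynes's burglar story, which requires a weak syllogism of the form "If A, then B; B is true, therefore A becomes more plausible". The policeman's reasoning is even weaker: "If A, then B becomes more plausible; B is true, therefore A becomes more plausible".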

Jaynes wants to formalize weak syllogisms, and does so by imagining a robot that outputs the plausibility of an outcome, updating on some data. The actual implementation is up to the builder, but it should fulfill three desiderata that allow the problem to be solved. Note that Jaynes uses the word "plausibility" because he has not yet developed the formalism far enough to define what a probability is, but we can think of these as probabilities. The desiderata are:

1. Degrees of plausibility are represented by real numbers.

2. Qualitative correspondence with common sense.

3. Consistency:

   a. If a conclusion can be reasoned out in more than one way, then every possible way must lead to the same result.

   b. The robot always considers all of the relevant evidence that it has access to, for any given question.

   c. The robot always represents equivalent states of knowledge by equivalent plausibility assignments.

The motivation for building a robot is that this can be an objective, computational method that is compatible with human intuition including the weak syllogism.

The first desideratum is easy to understand. The second one is basically that the rules we derive should be "numerically sorted as expected": if A is evidence for B, then $(B|A)$ should be larger than $(B)$, if we read the bar $|$ as "given the information". The third desideratum has 3 components. 3a) is that all paths from evidence to conclusion should resolve to the same number, so learning that an unknown person has a beard and red clothes, or red clothes and a beard, should leave the robot in the same state of knowledge. 3b) is a very Bayesian point: all background information relevant to the problem should be used. A common example is that temperatures (in Kelvin) cannot be negative, so we know a priori that we should assign zero probability mass to negative temperatures. Sometimes this makes the math very complicated, but that is the ideal robot's problem, not ours. 3c) means that the same probability will always mean the same level of certainty.

As of Chapter 1, it’s not clear that the robot is optimal, but there is potential for its reasoning to match ‘common sense’.

Chapter 2: The Quantitative Rules

After Chapter 1 we can make logical statements. We also have 3 desiderata from the previous chapter, and are left with the question, what more do we need for our robot to make inferences? It turns out we only need two things, the product rule and the sum rule.

This chapter is the driest in the book, because it contains all the math needed to derive the product and sum rules of probability. While this might be tedious, the amazing thing going on here is that after 2 chapters Jaynes has a more solid version of probability theory than the Kolmogorov axioms, without using measure theory. This is achieved by building a bridge from logical statements like 'B is true given C' to probabilities, via two rules that we summarize here.

What we want are rules that connect logic to mathematical functions, so we can make statements such as

Late in his career, Fisher introduced fiducial probabilities, which are inverse probabilities

Posteriors are inverse probabilities

——————————————————————————————————————————————————————————————————————

It is likely that Fisher would have eventually ended up a Bayesian.

The product rule is the first of the 2 rules we need. It is introduced by appealing to desideratum 3a, noting that receiving information A and then information B should put us in the same state of knowledge as getting the information in the opposite order:

We first seek a consistent rule relating the plausibility of the logical product AB to the plausibilities of A and B separately. In particular, let us find $(AB|C)$. Since the reasoning is somewhat subtle, we examine this from several different viewpoints.

As a first orientation, note that the process of deciding that AB is true can be broken down into elementary decisions about A and B separately. The robot can

- decide that B is true; $(B|C)$

- having accepted B as true, decide that A is true; $(A|BC)$. Or, equally well,

- decide that A is true; $(A|C)$

- having accepted A as true, decide that B is true; $(B|AC)$.

Note that Jaynes here uses the $(A|B)$ notation instead of the $p(A|B)$ notation we are used to in modern statistics, because at this point in chapter 2 he is in the process of reinventing all of probability theory and statistics, including the basic 'p' operator! Spoiler alert: using a lot of tedious math (that we honestly do not fully follow), we end up with ye olde product rule:

$p(A, B) = p(A|B)\,p(B)$

Or as Jaynes prefers it:

$p(AB|C) = p(A|BC)\,p(B|C) = p(B|AC)\,p(A|C)$

Note that Jaynes has a habit of always slapping on an extra conditional, writing $p(A|C)$ instead of $p(A)$, to emphasize that $p(A|C)$ is a probability subject to whatever background information (prior knowledge) $C$ you have available to you. For him the concept of $p(A)$ makes no sense, as it suggests a probability independent of any information.

The sum rule is basically that since it is certain that either A or not A is true, $p(A|C) + p(\bar{A}|C) = 1$, then

$p(\bar{A}|C) = 1 - p(A|C)$

This chapter also introduces the principle of indifference, which roughly states that given N possible outcomes and no information favoring any of them, each should be assigned the same plausibility, 1/N. Up to this point, Jaynes has been using 'plausibility' as a measure for discussing outcomes. At the end of this chapter he introduces the original definition of probability from Bernoulli (1713), illustrated by the classical example of drawing balls from a (Bernoulli) urn: the probability of drawing a given color is the ratio of the number of balls of that color to the total number of balls in the urn. So probability is a concrete, convenient, normalized quantity that is related to the more general notion of plausibility.

With these rules and this definition of probability, Jaynes revisits the robot, which takes in information and outputs probabilities (instead of plausibilities) for different outcomes, to show that its output is objective and deterministic.

“Anyone who has the same information, but comes to a different conclusion than our robot, is necessarily violating one of those desiderata.”

This seems obvious for the case where you have no information (e.g. in the Bernoulli urn case, assigning probabilities to each ball being picked), but initially feels problematic for other cases where indifference doesn't apply (e.g. you have side information that one of the balls is twice as large). People who try to follow the robot's rules might come up with different concrete values for each ball in this case. But the answer now depends not only on the size of the ball, but also on each person's beliefs about how the size of a ball affects its chance of being selected. The advantage of the robot is that it requires clearly specifying those priors, and the idea is that if two people share the same priors, they will converge on the same solution. Of course, in practice we can never write down all of our priors for most problems, but the principle is still useful for understanding how we would like to arrive at posterior probabilities for complicated problems.

It is interesting to note that Jaynes uses the word prior in a much broader sense than most Bayesian statisticians and rationalists; for example, the likelihood/sampling function we use when performing a Bayesian update is prior information for Jaynes. If you come from information science (like one of us) then it is obvious that he is right; if you come from statistics (like the other of us), then this is immensely confusing at first, but it makes it easier to understand the concept of maximum entropy.

Part 2: Basic Statistics reinvented

Chapter 3: Elementary sampling theory

Sampling theory is the foundation of classical or frequentist statistics. It concerns itself with "forward probabilities" of the type: if an urn has 5 white and 5 red balls, what is the probability of drawing 2 white balls in two draws? This chapter rederives all the standard coin-like distributions (such as the hypergeometric and binomial distributions) using the rules from chapters 1 and 2. Jaynes makes 2 interesting observations in this chapter:

Logic vs. Propensity:

When Jaynes wrote this chapter, people were much more confused about causality than we are today. For example, Penrose "takes it as a fundamental axiom that probabilities referring to the present time can depend only on what happened earlier and not what happens later", so for him the following two scenarios are very different:

- my first draw from an urn was a red ball; what is your guess for the color of the second?

- my second draw from an urn was a red ball; what is your guess for the color of the first?

However, when we use probability as logic, we do not care about causality, only about how the information available to us changes our beliefs. In the above case, knowing that the second or the first ball is red contains equal information about the urn, which in turn gives equal information about the unknown draw (whenever it happened). In Judea Pearl's notation this propensity vs. logic distinction is resolved by introducing new syntax: $p(A|B)$ is the information B gives about A (as above), and the new notation $p(A|do(B))$ is how "doing B" (rather than knowing B) changes A.
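To make the urn questions in this chapter concrete, here is a minimal Python sketch (our own illustration, not code from the book) for the urn with 5 white and 5 red balls. It counts equally likely ordered draws without replacement, so it relies only on Bernoulli's ratio-of-counts definition. It computes the forward probability of two white balls in two draws, and checks that knowing the first draw was red tells us exactly as much about the second draw as knowing the second draw was red tells us about the first.

```python
from fractions import Fraction
from itertools import permutations

# Urn from the review: 5 white (W) and 5 red (R) balls, drawn without replacement.
URN = ["W"] * 5 + ["R"] * 5

# Every ordered pair of distinct balls is an equally likely outcome of two draws.
PAIRS = list(permutations(range(len(URN)), 2))


def prob(event, given=lambda pair: True):
    """P(event | given) by counting equally likely ordered pairs (Bernoulli's ratio)."""
    matching = [p for p in PAIRS if given(p)]
    hits = [p for p in matching if event(p)]
    return Fraction(len(hits), len(matching))


def first_is(color):
    return lambda pair: URN[pair[0]] == color


def second_is(color):
    return lambda pair: URN[pair[1]] == color


# Forward (sampling) question: both draws white.
p_two_white = prob(lambda p: first_is("W")(p) and second_is("W")(p))

# "Logic vs. propensity": the two conditional questions have the same answer.
p_second_red_given_first_red = prob(second_is("R"), given=first_is("R"))
p_first_red_given_second_red = prob(first_is("R"), given=second_is("R"))

print(p_two_white)                   # 2/9
print(p_second_red_given_first_red)  # 4/9
print(p_first_red_given_second_red)  # 4/9
```

With replacement, the first answer would instead be (1/2)^2 = 1/4; the difference between these two cases is the hypergeometric-versus-binomial distinction that Chapter 3 works through.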

Reality vs. Models:

Jaynes is a physicist and therefore cares about the real world, not the idealized world of mathematical models. If you are drawing from a real urn, then the sizes and shapes of the balls and urn matter for inference, so should we model the physics of the balls? Here are Jaynes's thoughts on the matter:

In probability theory there is a very clever trick for handling a problem that becomes too difficult. We just solve it anyway by:

- making it still harder;

- redefining what we mean by ‘solving’ it, so that it becomes something we can do;

- inventing a dignified and technical-sounding word to describe this procedure, which has the psychological effect of concealing the real nature of what we have done, and making it appear respectable.

In the case of sampling with replacement, we apply this strategy as follows.

- Suppose that, after tossing the ball in, we shake up the urn. However complicated the problem was initially, it now becomes many orders of magnitude more complicated, because the solution now depends on every detail of the precise way we shake it, in addition to all the factors mentioned above.

- We now assert that the shaking has somehow made all these details irrelevant, so that the problem reverts back to the simple one where the Bernoulli urn rule applies.

- We invent the dignified-sounding word randomization to describe what we have done. This term is, evidently, a euphemism, whose real meaning is: deliberately throwing away relevant information when it becomes too complicated for us to handle.

We have described this procedure in laconic terms, because an antidote is needed for the impression created by some writers on probability theory, who attach a kind of mystical significance to it. For some, declaring a problem to be ‘randomized’ is an incantation with the same purpose and effect as those uttered by an exorcist to drive out evil spirits; i.e. it cleanses their subsequent calculations and renders them immune to criticism. We agnostics often envy the True Believer, who thus acquires so easily that sense of security which is forever denied to us. … Shaking does not make the result ‘random’, because that term is basically meaningless as an attribute of the real world; it has no clear definition applicable in the real world. The belief that ‘randomness’ is some kind of real property existing in Nature is a form of the mind projection fallacy which says, in effect, ‘I don’t know the detailed causes – therefore – Nature does not know them.’ What shaking accomplishes is very different. It does not affect Nature’s workings in any way; it only ensures that no human is able to exert any wilful influence on the result. Therefore, nobody can be charged with ‘fixing’ the outcome.

Chapter 4: Elementary hypothesis testing

The previous chapter was about forward probabilities, where we reason from hypothesis (H) to data (D); we write that as $p(D|H)$. But very often we have observed data and want to reason back to the phenomena that gave rise to our data, $p(H|D)$.

This is sometimes called inverse (or reverse) probability because we are reversing D and H in the conditional probability. It is also sometimes called hypothesis testing, as we want to see which hypothesis fits the data.

Priors

In order to flip or reverse the probability we need a prior. Jaynes imagines the robot as always having information X available where X is all the robot has learned since it rolled off the factory floor, so what we want is not:

- $p(H|D)$: What the data makes us believe about H, but

- $p(H|DX)$: What the data and our prior knowledge make us believe about H.

Any probability that is only conditional on X is a prior probability. It is important to note that it is prior in “information/logic” not prior in “time/causality”, as there is no time variable in information theory.

The prior information is simply the part of the information we have not rolled up into the data variable, and what counts as prior and what counts as data can sometimes be arbitrary: your current posterior is your next experiment's prior. The same is true for the posterior, which comes only logically after the prior, not causally. For example, I could reason that since I know your house was burned down when I checked today (prior), it probably was also burned down yesterday; here the information flows in the opposite direction to causality.

There are 4 common ways to set priors: group invariance, maximum entropy, marginalization, and coding theory; this book mostly focuses on the first two.

The previous chapters have given us logic, the product and sum rules, and sampling theory, so Jaynes trivially derives Bayes' theorem in the form we are used to:

$p(H|DX) = \dfrac{p(H|X)\,p(D|HX)}{p(D|X)}$

In common parlance these 4 probabilities (or distributions) are called:

- $p(H|X)$ is the prior, the information we have about the hypothesis before seeing the data

- $p(D|HX)$ is the likelihood or sampling function, which says how likely the data is under different hypotheses; in short: what does the hypothesis say about the data?

- $p(D|X)$ is the probability of the data, and is usually found by marginalization (summing over all the hypotheses)

- $p(H|DX)$ is called the posterior; it contains the information we have after considering the prior and the data.

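So when you read Bayes' theorem above, you should say:

$\text{posterior} = \dfrac{\text{prior} \times \text{likelihood}}{\text{probability of the data}}$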

Note that Jaynes writes it as prior times likelihood, whereas modern Bayesians usually write likelihood times prior.
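Bayes' theorem with the normalizing term computed by marginalization can be sketched in a few lines of Python. This is our own illustration over a discrete set of hypotheses, not code from the book; to have concrete numbers, we borrow the raiding-party setup used later in this review (10 goblin parties that breach walls with probability 1/6, 1 orc party that breaches with probability 1/3) and condition on a single breached wall.

```python
from fractions import Fraction


def posterior(prior, likelihood):
    """Bayes' theorem over a discrete set of hypotheses.

    prior:      dict hypothesis -> p(H|X)
    likelihood: dict hypothesis -> p(D|HX) for the observed data D
    Returns dict hypothesis -> p(H|DX).
    """
    # p(D|X): marginalize (sum) over all hypotheses.
    p_data = sum(prior[h] * likelihood[h] for h in prior)
    # Prior times likelihood, divided by the probability of the data.
    return {h: prior[h] * likelihood[h] / p_data for h in prior}


prior = {"orcs": Fraction(1, 11), "goblins": Fraction(10, 11)}
p_breach = {"orcs": Fraction(1, 3), "goblins": Fraction(1, 6)}

post = posterior(prior, p_breach)
print(post["orcs"], post["goblins"])  # 1/6 5/6
```

The posterior odds for orcs come out at 1:5, i.e. $10\log_{10}(1/5) \approx -7$ dB, which matches the decibel shortcut used in the Bayesian Update section.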

Bayesian Update

The simplest math in all of the book is introduced here in chapter 4. It is basically a log-odds version of Bayes' theorem, which is very cool, enables fast calculation, and is useful for building intuitions about updating beliefs; we will go through it here. If you hate math, skip to chapter 5.

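First we write the posterior for a hypothesis H and not H (written $\bar{H}$):

$p(H|DX) = p(H|X)\,\dfrac{p(D|HX)}{p(D|X)}$

$p(\bar{H}|DX) = p(\bar{H}|X)\,\dfrac{p(D|\bar{H}X)}{p(D|X)}$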

The relationship between odds and probability is that the odds are basically 'it happened' divided by 'it didn't happen', so if something is 50/50 then we have 1:1 odds, or $0.5/0.5 = 1$; if we have 90% and 10% outcomes, then we have 9:1 odds, or $0.9/0.1 = 9$. We can do the same with the two posterior equations above by dividing one by the other to get the posterior odds:

$\dfrac{p(H|DX)}{p(\bar{H}|DX)} = \dfrac{p(H|X)}{p(\bar{H}|X)}\,\dfrac{p(D|HX)}{p(D|\bar{H}X)}$

The trick here is that both equations contain $p(D|X)$, which is the hardest to calculate, and it drops out when we divide the equations. So now we have: the posterior odds equal the prior odds times the likelihood ratio of the data under the two hypotheses.

Jaynes now defines an evidence function as follows:

$e(H|DX) = 10\log_{10}\dfrac{p(H|DX)}{p(\bar{H}|DX)}$

This evidence function is used to 'quickly' do Bayesian updates, much like Eliezer's concept of 1 bit of evidence, which is the evidence needed to go from 1:1 odds to 2:1 odds. Jaynes here uses decibels (dB), like the ones we use for sound, as his evidence scale. This means that 0 dB means 1:1 odds (just like 0 bits of evidence), 10 dB means 10:1 and 20 dB means 100:1 (because 10 × 10 = 100), so every 10 dB of evidence multiplies the odds by a factor of 10, and every 3 dB multiplies the odds by about a factor of 2 ($10^{3/10} \approx 1.99526$). This means that 13 dB of evidence is roughly equivalent to 20:1 odds.
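Jaynes's evidence function is just probability mapped to log-odds in decibels. A minimal Python sketch of the conversions (the function names are our own) lets you check the rules of thumb above: 10 dB is a factor of 10 in odds, 3 dB is roughly a factor of 2, and 13 dB is roughly 20:1.

```python
import math


def odds(p):
    """Odds in favor: 'it happened' divided by 'it didn't happen'."""
    return p / (1 - p)


def evidence_db(p):
    """Jaynes's evidence e = 10 * log10(odds), in decibels."""
    return 10 * math.log10(odds(p))


def odds_from_db(e):
    """Invert the evidence function: decibels back to odds."""
    return 10 ** (e / 10)


def prob_from_db(e):
    """Decibels back to a probability."""
    o = odds_from_db(e)
    return o / (1 + o)


for e in (0, 3, 10, 13, 20):
    print(f"{e:>3} dB -> odds {odds_from_db(e):8.3f}:1, p = {prob_from_db(e):.4f}")
```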

We can use this to get the evidence of the posterior:

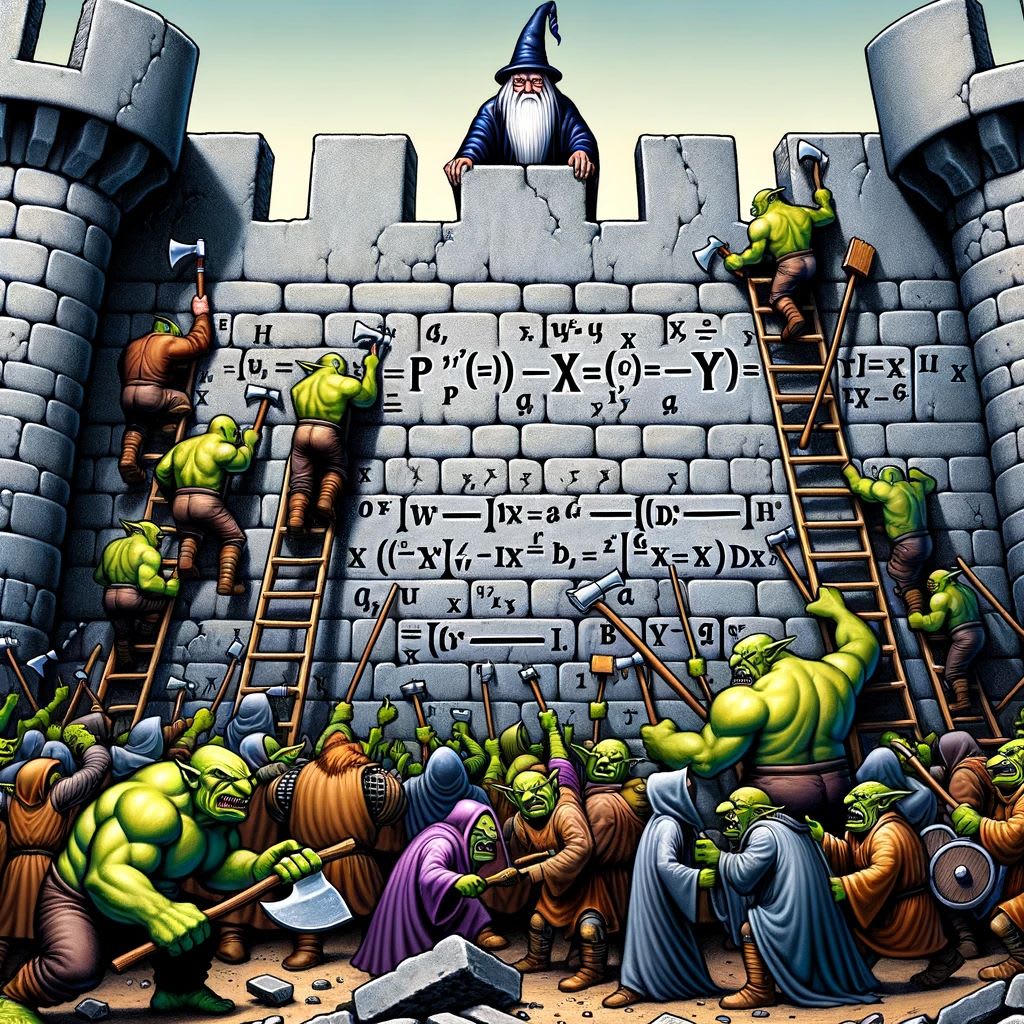

Now let us try to use this. Imagine the following background information $X$: There are 11 raiding parties potentially attacking our cities, and it is always the same raiding party attacking all cities.

- 10 are made of goblins who breach the walls with probability ⅙,

- 1 is made of stronger orcs who breach the walls with probability ⅓.

We now want to consider the hypothesis that we were attacked by orcs. The prior odds are 10:1 against orcs, so the prior evidence is $e(\text{orcs}|X) = 10\log_{10}\frac{1}{10} = -10$ dB.

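To see how we should update on future data (inspecting the walls of attacked cities), let us write out all the possibilities:

- Orcs: walls breached with probability ⅓, walls intact with probability ⅔

- Goblins: walls breached with probability ⅙, walls intact with probability ⅚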

We observe that the walls were breached, which constitutes:

$10\log_{10}\dfrac{1/3}{1/6} = 10\log_{10}2 \approx +3$ dB

The cool thing about log evidence, whether in decibels or bits, is that adding logs is the same as multiplying the original numbers. Since the probability of several independent observations is the product of their individual probabilities, combining them corresponds to summing (adding) their evidence. This means we can simply add the 3 dB to our initial evidence of -10 dB to get posterior evidence of -10 + 3 = -7 dB that the orcs did it. The next day another city is attacked, but its walls still stand; this changes the evidence by:

$10\log_{10}\dfrac{2/3}{5/6} = 10\log_{10}0.8 \approx -1$ dB

From this the king can quickly survey all the sacked cities, count the walls and get:

$e(\text{orcs}|DX) = -10 + 3\,n_{\text{breached}} - 1\,n_{\text{intact}}$ dB

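There were 20 destroyed walls and 37 intact walls, leading to $-10 + 20 \times 3 - 37 \times 1 = 13$ dB of evidence for the orc hypothesis, corresponding to about 20:1 odds that the orcs did it. (Using the unrounded per-wall values of +3.01 dB and -0.97 dB, the total is about 14.3 dB, or roughly 27:1.)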
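The king's tally can be checked with a short Python sketch (our own, not from the book). It assumes, as the example does, that each wall's fate is independent given which raiding party attacked, so the per-wall evidence simply adds. It uses the exact per-wall evidence rather than the rounded +3 dB and -1 dB, and cross-checks the result by multiplying the odds directly.

```python
import math

P_BREACH = {"orcs": 1 / 3, "goblins": 1 / 6}
PRIOR_DB = 10 * math.log10(1 / 10)  # 1 orc party vs 10 goblin parties: -10 dB


def wall_evidence_db(breached):
    """Evidence (dB) for orcs over goblins from one inspected wall."""
    p_orcs = P_BREACH["orcs"] if breached else 1 - P_BREACH["orcs"]
    p_gobs = P_BREACH["goblins"] if breached else 1 - P_BREACH["goblins"]
    return 10 * math.log10(p_orcs / p_gobs)


def orc_evidence_db(n_breached, n_intact):
    """Posterior evidence for orcs: prior plus the sum of per-wall evidence."""
    return (PRIOR_DB
            + n_breached * wall_evidence_db(True)
            + n_intact * wall_evidence_db(False))


total_db = orc_evidence_db(20, 37)
posterior_odds = 10 ** (total_db / 10)

# Cross-check: prior odds times the product of the per-wall likelihood ratios.
direct_odds = (1 / 10) * 2 ** 20 * 0.8 ** 37

print(f"breach: {wall_evidence_db(True):+.2f} dB, intact: {wall_evidence_db(False):+.2f} dB")
print(f"total: {total_db:.1f} dB, odds about {posterior_odds:.0f}:1")
```

The printed values should come out at about +3.01 dB per breached wall, -0.97 dB per intact wall, and 14.3 dB in total (about 27:1); the rounded mental arithmetic in the text gives 13 dB, or about 20:1.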

Chapter 5: Queer uses for probability theory

This is the cool chapter. The book has lots of sections like this, but mostly in the latter parts of the book as Jaynes is in the habit of building a strong statistical foundation before throwing shade at frequentists.

In chapter 5, Jaynes introduces four intriguing examples for applying probability theory that are much more practical than drawing balls from an urn. These are chosen to reveal counterintuitive notions and fallacies. A recurring theme is that when interpreting evidence, the null hypothesis should not be considered in a vacuum, and doing so can lead to disastrous results and strawman arguments. The alternative hypotheses must be considered as well.

ESP

Suppose someone claims to have ESP and says they can repeatedly guess a number from 1 to 10 that another person is thinking of. How many correct guesses would it take to convince you that they had supernatural powers? Jaynes says that even after 4 correct guesses in a row he wouldn't consider it, but that after around 10 he might entertain it, so his prior state of belief is below -40 dB and probably more like -100 dB (each correct guess, which chance would produce only 1 time in 10, is worth about +10 dB). In the ESP experiment Jaynes quotes, the person claiming to have ESP guessed correctly in 9410 of 37100 trials (25%), for a guess that random chance would get right 20% of the time. Jaynes does the math to show that the probability of this result due to random chance, using the binomial distribution, is the fantastically low value of 3.15e-139.
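The chance calculation for the quoted experiment can be sketched with nothing but the binomial distribution. The Python below is our own reconstruction, not Jaynes's code: it works in log space (via `math.lgamma`) because the probability is far too small for ordinary floating point, and it also reports how many standard deviations the result sits from the chance expectation. It should give a probability on the order of 10^-139 for exactly 9410 hits, the same ballpark as the figure quoted above.

```python
import math

N_TRIALS = 37100
N_HITS = 9410
P_CHANCE = 0.2  # probability of a correct guess by luck alone


def log10_binomial_pmf(k, n, p):
    """log10 of P(exactly k successes in n trials), computed via lgamma."""
    log_choose = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    log_p = log_choose + k * math.log(p) + (n - k) * math.log(1 - p)
    return log_p / math.log(10)


mean = N_TRIALS * P_CHANCE                            # expected hits under chance
sd = math.sqrt(N_TRIALS * P_CHANCE * (1 - P_CHANCE))  # binomial standard deviation
z = (N_HITS - mean) / sd                              # distance from chance in SDs

log10_p = log10_binomial_pmf(N_HITS, N_TRIALS, P_CHANCE)
print(f"chance predicts {mean:.0f} +/- {sd:.0f} hits; observed {N_HITS} (z = {z:.1f})")
print(f"P(exactly {N_HITS} hits | chance) ~ 10^{log10_p:.1f}")
```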

If someone shows you an experiment that is more than 25 standard deviations away from the mean of the null hypothesis (chance predicts about 7420 ± 77 correct guesses here) because N is so large, should you suddenly start believing in ESP? Our intuition, trained on Less Wrong, says 'of course not', which means we should slow down here and look at the aspects of the problem beyond the null hypothesis. You might accept this for toy problems like drawing balls from an urn, but for non-theoretical problems like ESP, you use a complex model of the world, built from a lifetime of experience, that considers many alternative explanations and weighs the evidence against all of them.

If we only consider two possible hypotheses, one where ESP doesn't exist and any results are due to luck, and one where the person really has ESP, then each correct guess is +10 dB of evidence for ESP. But then Jaynes says, "In fact, if he guessed 1000 numbers correctly, I still would not believe that he has ESP". This is because there are many other hypotheses to consider in the real world, including faulty experimentation, incorrect assumptions (e.g. the distribution of choices is not uniform), and deception, which also start with very low priors, but higher than the ESP prior, at around -60 dB. Any successful guess boosts the deception and faulty-experiment hypotheses at least as much as the ESP hypothesis, so they always stay some ~40 dB above it. Implicit in this is that Jaynes would need other types of evidence besides repeated correct guesses from one experiment to bring the ESP hypothesis high enough to be plausible: specifically, evidence against the deception and faulty-experiment hypotheses.
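Jaynes's point about competing hypotheses can be made concrete with a small Python sketch. The priors are the rough figures from the paragraph above (deception or faulty experiment around -60 dB, ESP around -100 dB, luck holding essentially all the rest); the assumption that deception and genuine ESP both predict a correct guess with probability 1 is ours, chosen as the simplest case. Because both hypotheses predict every correct guess equally well, each guess raises them together, and ESP never closes the 40 dB gap.

```python
import math

# Rough prior weights as log10 of unnormalized odds (1 unit = 10 dB).
# Assumed figures: luck ~ everything, deception ~ -60 dB, ESP ~ -100 dB.
PRIOR_LOG10 = {"luck": 0.0, "deception": -6.0, "esp": -10.0}

# Assumed probability of one correct guess (numbers 1-10) under each hypothesis.
P_CORRECT = {"luck": 0.1, "deception": 1.0, "esp": 1.0}


def log10_weights(n_correct):
    """Unnormalized log10 posterior weights after n_correct hits in a row."""
    return {h: PRIOR_LOG10[h] + n_correct * math.log10(P_CORRECT[h])
            for h in PRIOR_LOG10}


def posterior(n_correct):
    """Normalized posterior probabilities (shifting by the max avoids overflow)."""
    lw = log10_weights(n_correct)
    top = max(lw.values())
    w = {h: 10 ** (v - top) for h, v in lw.items()}
    total = sum(w.values())
    return {h: v / total for h, v in w.items()}


for n in (0, 4, 10, 100, 1000):
    lw = log10_weights(n)
    gap_db = 10 * (lw["deception"] - lw["esp"])
    print(f"{n:>4} hits: p(ESP) = {posterior(n)['esp']:.2e}, "
          f"deception ahead of ESP by {gap_db:.0f} dB")
```

Only evidence that the deception and faulty-experiment hypotheses predict poorly (and ESP predicts well) would change the 40 dB gap in this sketch, which is the point made above.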

Crows

The next interesting story Jaynes discusses is Hempel's paradox, where logic and intuition clash: Consider the hypothesis "All crows are black." What is evidence for this hypothesis? Is a black crow evidence for this hypothesis? Is a white shoe evidence for this, since "All crows are black" is logically equivalent to "All non-black things are non-crows"? And a white shoe is a good example of a non-black non-crow! As is often the case, the answer to what counts as evidence is 'it depends', but it depends on more than just the hypothesis that all crows are black. It depends on what alternative hypotheses are considered.

I.J. Good’s response (with the title ‘The white shoe is a red herring’) shows what happens if you observe a black crow and consider just two hypotheses:

- A world where crows are always black but rare (1/10000 birds are crows, 9999/10000 are parrots)

- Another world where there are only crows, but only 10% of them are black.

If these are your only hypotheses, seeing a black crow is 30 dB (1000:1) of evidence that you are in the second world, where not all crows are black. This is because for every black crow in world one (per 10,000 birds) there are 1000 black and 9000 white crows in world two, so a black crow is much stronger evidence for world two.

If we modified the first world to one where crows are always black, and very common, then observing a black crow is evidence for the modified first world over the second.
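I.J. Good's numbers are easy to check in Python. The sketch below (our own) computes, for each world, the probability that a randomly observed bird is a black crow, and turns the ratio into decibels. The "common crows" variant of world one uses a hypothetical figure of 50% crows, since the text does not give one.

```python
import math


def p_black_crow(frac_crows, frac_crows_black):
    """Probability that a randomly observed bird is a black crow."""
    return frac_crows * frac_crows_black


def evidence_db(p_data_h1, p_data_h2):
    """Evidence (dB) one observation gives for hypothesis 1 over hypothesis 2."""
    return 10 * math.log10(p_data_h1 / p_data_h2)


world1 = p_black_crow(1 / 10000, 1.0)   # crows rare but always black
world2 = p_black_crow(1.0, 0.1)         # only crows, 10% of them black
world1_common = p_black_crow(0.5, 1.0)  # hypothetical: crows common, all black

print(f"black crow favors world two by {evidence_db(world2, world1):.0f} dB")
print(f"common-crow world one is favored by {evidence_db(world1_common, world2):.1f} dB")
```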

This story provides an intuitive perspective on Jaynes’ ideas on multiple hypotheses, namely that whether or not a given piece of data is evidence for a hypothesis depends upon which hypotheses are being considered. One of the key takeaways from these stories is that the acknowledgement of hypotheses and the relationship of multiple hypotheses in a person’s mind determines how to interpret evidence and assign probabilities. Rationalists typically are aware that the probability of an event is not stored in the physical object being observed, but in the uncertainty in the observer’s mind about the object. In the black crow paradox, the feeling of paradox comes from a similar fallacy that the probabilities are stored in the individual hypotheses themselves instead of the uncertainty about all hypotheses as they relate to each other and the evidence.

Are Humans using Bayesian Reasoning?

Kahneman and Tversky (1972) and Wason and Johnson-Laird (1972) both show that humans commit violations of Bayesian reasoning. The most extreme is treating 'A implies B' as equivalent to 'B implies A', where the correct logical rule is 'not B implies not A'. Jaynes argues that this is actually an example of Bayesian (plausible) reasoning, since if A implies B, then learning B makes A more plausible. For example, if all dogs are mammals (deduction), it also means that some mammals are dogs; concretely, if only 20% of animals are mammals, learning that an animal is a mammal increases the probability that it is a dog by a factor of 5. In general, if A implies B, then learning B increases the probability of A by a factor of 1/p(B), which is 5 here.
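The dogs-and-mammals arithmetic is just Bayes' theorem with $p(B|A) = 1$. A minimal Python sketch, using a hypothetical 1% of animals being dogs (the 20% mammal figure is from the paragraph above):

```python
from fractions import Fraction

p_dog = Fraction(1, 100)          # hypothetical share of animals that are dogs
p_mammal = Fraction(1, 5)         # 20% of animals are mammals (from the text)
p_mammal_given_dog = Fraction(1)  # "all dogs are mammals": A implies B

# Bayes: p(dog | mammal) = p(mammal | dog) * p(dog) / p(mammal)
p_dog_given_mammal = p_mammal_given_dog * p_dog / p_mammal

print(p_dog_given_mammal)          # 1/20
print(p_dog_given_mammal / p_dog)  # 5, i.e. 1 / p(mammal)
```

Whatever value is chosen for the share of dogs, the boost factor stays 1/p(mammal) = 5, which is why the text can state it without knowing that share.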

Chapter 6: Elementary Parameter Estimation

This chapter would usually be chapter 1, or assumed reading, in normal statistics books; alas, we are now on page 149 and Jaynes finally introduces the concept of a parameter! This is like writing a 149-page introduction to programming without introducing the concept of a variable for "pedagogical reasons". The pedagogical trick being played here is to save the reader from the mind projection fallacy. If your model (or computer program) has a parameter for the frequency of red balls being drawn from my basket, then that is a feature of your model, not a feature of the basket! How can you write 150 pages of statistics without variables? Because they are not real! The way Jaynes pulled it off was by always pointing to events in the world rather than to parameters in a statistical model in his head, so we have $p(R_3|R_1R_2X)$, the probability that the 3rd draw from the urn is a red ball given two previous red draws, rather than $p(R|\theta)$, where $\theta$ is the "urn's propensity to produce red balls". Thanks, Jaynes!

First, we'll introduce the maximum likelihood estimator (MLE), which can be used to estimate outcomes or parameters. This method can be applied once you are able to assign probabilities to every possible outcome, by literally selecting the outcome with the highest probability as your estimate. This effectively discards a lot of information about the distribution, and can produce counterintuitive results if the discarded information was actually useful. In some cases it produces 'atypical' estimates (outside the 'typical set' in information theory). An easy-to-understand example: if you have a 60/40 biased coin, the MLE for a sequence of 1000 flips will be 1000 heads in a row. Note that this all-heads result is the most likely estimate of the sequence, which we are focusing on to show counterintuitive properties of MLE (you could use MLE to estimate the total number of heads to expect instead of the ordered sequence, and it would estimate 600 heads here). In real life we'd always expect some tails in the sequence, and we'd expect more tails if the coin is closer to 50/50, but the MLE is indifferent to the degree of fairness of the coin: for any even slightly biased coin it will be all heads. If you think about this further, MLE can be very blunt, since all coins probably have some tiny physical difference that would bias them just a teeny bit to one side (say 49.99999999% heads, 50.00000001% tails), so all MLE estimates for coin-flip sequences should be all heads or all tails if we inspect them closely enough. This example is for the MLE of an outcome given the coin's bias parameter, which is arguably the easier case to grok. The other way to use it is to find the most likely estimate for the parameter (e.g. the amount of bias in the coin) given data (e.g. a sequence of coin flips).
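The gap between the most probable sequence and the most probable number of heads can be checked directly. This Python sketch (our own) compares log-probabilities for a 60/40 coin and 1000 flips: a single all-heads sequence beats any single sequence with 600 heads, yet 600 is the most probable total, because vastly more sequences produce it.

```python
import math

N = 1000
P_HEADS = 0.6


def log_p_sequence(k):
    """Log-probability of one specific ordered sequence containing k heads."""
    return k * math.log(P_HEADS) + (N - k) * math.log(1 - P_HEADS)


def log_p_count(k):
    """Log-probability of k heads in total, in any order (binomial)."""
    return math.log(math.comb(N, k)) + log_p_sequence(k)


best_sequence_heads = max(range(N + 1), key=log_p_sequence)
best_count = max(range(N + 1), key=log_p_count)

print(best_sequence_heads)  # 1000: the single most probable sequence is all heads
print(best_count)           # 600: the most probable number of heads
print(log_p_count(1000) - log_p_count(600))  # very negative: 1000 heads is atypical
```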

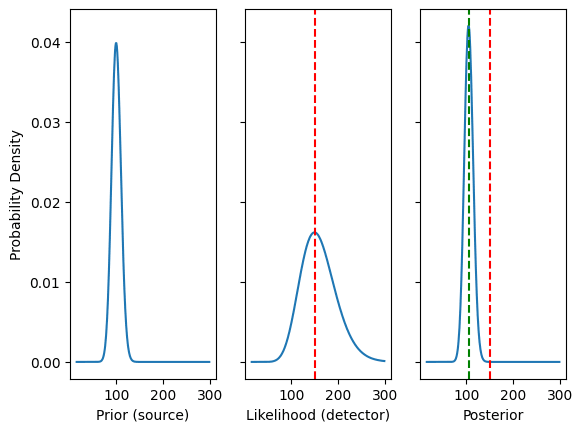

Jaynes' very physicist-oriented example concerns the emission of particles from a radioactive source and a sensor that can detect some portion of these particles. The radioactive source emits on average $s$ particles per second, drawn from a Poisson distribution, and the sensor detects each particle with a Bernoulli rate of $\phi$. So if $\phi$ is 0.1, the sensor picks up 10% of particles over the long run, but if you have just $n = 10$ particles, there is no guarantee that it will detect exactly 1 particle. Similarly, even though we might have $s = 100$ particles per second on average, any given second might have more or fewer than 100 particles emitted. This is where it gets complicated. If you use the MLE, you will always get $n = c/\phi$ particles as your estimate for a second with $c$ counts, because MLE 'assumes' that the 10:1 particle relationship is fixed and thus ignores the Poisson source variability. So let's say you have a counter with $\phi = 0.1$ and have observed a count of $c = 15$ particles on this sensor for some second. How many particles, $n$, originated from the source during this second? MLE will get you 150 particles, as described above. But Jaynes's robot gives us 105 particles. What? This is a HUGE difference! This example also surprised experimental physicists. The reason the robot gets 105 and not 150 is that the source has lower variability than the detector, so a high count is only weak evidence of an above-average number of particles.
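The 150-versus-105 result can be reproduced numerically. The Python sketch below is our own brute-force version, not Jaynes's derivation: it puts a Poisson($s$) prior on the number of emitted particles $n$, a Binomial($n$, $\phi$) likelihood on the observed count $c$, normalizes over a grid of $n$ values (the cutoff `N_MAX` is an arbitrary choice far out in the tail), and takes the posterior mean. It also compares the result with the shortcut $c + s(1 - \phi)$ described further on in this review.

```python
import math

S = 100.0     # source strength: mean particles emitted per second (Poisson)
PHI = 0.1     # detector efficiency: chance that each particle is counted
C = 15        # observed count for one second
N_MAX = 1000  # grid cutoff; assumed large enough that the tail is negligible


def log_poisson(n, mean):
    """log p(n | s): prior on the number of emitted particles."""
    return n * math.log(mean) - mean - math.lgamma(n + 1)


def log_binomial(c, n, phi):
    """log p(c | n, phi): likelihood of counting c of n particles."""
    return (math.lgamma(n + 1) - math.lgamma(c + 1) - math.lgamma(n - c + 1)
            + c * math.log(phi) + (n - c) * math.log(1 - phi))


ns = range(C, N_MAX + 1)  # there cannot be fewer particles than counts
log_post = [log_poisson(n, S) + log_binomial(C, n, PHI) for n in ns]
top = max(log_post)
weights = [math.exp(lp - top) for lp in log_post]  # shift by max to avoid underflow
total = sum(weights)

bayes_mean = sum(n * w for n, w in zip(ns, weights)) / total
mle_estimate = C / PHI        # the c / phi estimate from the text
shortcut = C + S * (1 - PHI)  # 15 + 90

print(f"MLE: {mle_estimate:.0f}, posterior mean: {bayes_mean:.2f}, shortcut: {shortcut:.0f}")
```

The brute-force posterior mean and the shortcut agree because, under these assumptions, the undetected particles $n - c$ follow a Poisson distribution with mean $s(1 - \phi) = 90$.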

While the above may seem very counterintuitive, all the confusion can be cleared up by plotting the above distributions (which Jaynes does not do). Below we have 3 plots:

Where:

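- The left is the source, which follows a Poisson distribution: $p(n|s) = \text{Poisson}(n; s)$ with $s = 100$

- The middle is the detector, which follows a Binomial distribution: $p(c|n, \phi) = \text{Binomial}(c; n, \phi)$ with $\phi = 0.1$

- The right is the posterior for $n$, a compromise between the prior (source) and the likelihood (detector)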

- The red line is the MLE estimate of 150, the green is the Bayesian estimate of 105.

Even without fancy math, it is easy to see that the prior is narrow and the likelihood is broad, so the posterior compromise should favor the prior. In modern parlance this approach is called "full Bayesian" because we use the full distributions to make inferences, whereas the MLE approach hopes that the mode of the distribution captures sufficient information.

Back in math land: Jaynes’ robot uses the Poisson distribution to model the number of particles given the source strength, and uses the entire posterior distribution to calculate the expected number of originating particles. It takes the 15 observed particles as the minimum possible number of particles (we assume the sensor doesn’t produce counts without particles), and adds to this the $s(1-\phi) = 100 \times 0.9 = 90$ particles it expects went undetected, where the 100 comes from $s$ and the 0.1 comes from $\phi$. As the coin example shows, MLE can be blunt, because it doesn’t consider all of the information: in the coin example it didn’t care about the degree of bias. In this particle/sensor example, MLE didn’t care about the source strength, $s$. (We should note that there is a risk of strawmanning MLE too much if we don’t mention that there may be ways to ameliorate this with MLE by asking a different question. The point is that incorporating more information can sometimes be good, and MLE often discards information.) As non-physicists, we also feel Jaynes is probably incorporating some physicist-internalized priors, such as how to think about the standard deviation of particle radiation. Jaynes gives more detail:
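The "15 plus 90" step follows from one line of algebra. The sketch below is our own derivation, not a quote from the book, and uses $s$, $\phi$, $c$ and $n$ as defined in the example. Every factor that does not depend on $n$ is absorbed into the proportionality, for $n \ge c$:

$$
p(n \mid c, s, \phi) \;\propto\; \frac{s^n e^{-s}}{n!}\binom{n}{c}\phi^c(1-\phi)^{n-c}
\;\propto\; \frac{s^{n-c}(1-\phi)^{n-c}}{(n-c)!}
\;=\; \frac{[s(1-\phi)]^{n-c}}{(n-c)!}
$$

So the number of undetected particles, $n - c$, is Poisson with mean $s(1-\phi)$ regardless of $c$, and

$$
E[n \mid c, s, \phi] = c + s(1-\phi) = 15 + 100 \times 0.9 = 105.
$$

The detected count only sets the floor. The expected number of missed particles stays at 90 whatever $c$ is, which is why the estimate moves one-for-one with $c$ rather than ten-for-one as the MLE does.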

“What caused the difference between the Bayes and maximum likelihood solutions? The difference is due to the fact that we had prior information contained in this source strength s. The maximum likelihood estimate simply maximized the probability for getting c counts, given n particles, and that yields 150. In Bayes’ solution, we will multiply this by a prior probability $p(n \mid s)$, which represents our knowledge of the antecedent situation, before maximizing, and we’ll get an entirely different value for the estimate. As we saw in the inversion of urn distributions, simple prior information can make a big change in the conclusions that we draw from a data set.”

Jaynes extends the particle sensor example in a very interesting way. He keeps the information about the sensor the same but changes the prior information (for example, allowing the source to change versus keeping it fixed), and gets different results. This demonstrates the usefulness of the Bayesian principle of getting better estimates by incorporating more information, and of accepting differences in estimates as differences in information.
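To see "differences in estimates as differences in information" directly, here is a sketch that keeps the detector model fixed and swaps only the prior on $n$. The two priors are our own illustrative choices, not necessarily the variants Jaynes analyzes. The first is the Poisson prior with $s = 100$ from the example. The second is a flat prior over $n$, standing in for knowing nothing about the source.

```python
import math

PHI, C_OBS, N_MAX = 0.1, 15, 2000


def log_like(n, c=C_OBS, phi=PHI):
    """Detector model, identical in both analyses: log Binomial(c | n, phi)."""
    return (math.lgamma(n + 1) - math.lgamma(c + 1) - math.lgamma(n - c + 1)
            + c * math.log(phi) + (n - c) * math.log(1 - phi))


def log_poisson_prior(n, s=100):
    """Prior 1: the source strength is known, n ~ Poisson(s)."""
    return n * math.log(s) - s - math.lgamma(n + 1)


def log_flat_prior(n):
    """Prior 2 (illustrative assumption): every n is equally plausible."""
    return 0.0


def posterior_mean(log_prior):
    """E[n | c] under the given prior, by enumeration over n = c, ..., N_MAX."""
    ns = range(C_OBS, N_MAX + 1)
    logs = [log_prior(n) + log_like(n) for n in ns]
    top = max(logs)
    weights = [math.exp(v - top) for v in logs]  # shift by the max to avoid underflow
    return sum(n * w for n, w in zip(ns, weights)) / sum(weights)


print(round(posterior_mean(log_poisson_prior), 1))  # 105.0
print(round(posterior_mean(log_flat_prior), 1))     # 159.0
```

With the flat prior the posterior is proportional to the likelihood alone, so its peak is back at the MLE (149 and 150 tie) and its mean is 159. The Poisson prior pulls the estimate all the way down to 105.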

Jaynes has a more advanced version of the problem where people enter and leave a radioactive room containing a source of unknown strength, and each person's radioactive exposure is measured with the same device, with efficiency $\phi$. In this example, the first person's measured count is weak evidence about the source strength, and therefore contains relevant information for inferring the second person's exposure.
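Here is a simplified sketch of the two-person idea, under assumptions we chose for illustration rather than Jaynes' exact setup:

- The unknown source strength $s$ has a uniform prior over a grid from 1 to 500.
- Each person's true exposure is Poisson with mean $s$.
- The detector efficiency is $\phi = 0.1$.

Once person 1's true exposure is summed out, their count is Poisson with mean $s\phi$. That count therefore updates our belief about $s$, and hence our expectation for person 2's exposure, before person 2 is even measured.

```python
import math

PHI = 0.1
S_GRID = range(1, 501)  # assumed grid of possible source strengths, uniform prior


def log_poisson(k, mu):
    return k * math.log(mu) - mu - math.lgamma(k + 1)


def posterior_over_s(c1, phi=PHI):
    """P(s | c1). Summing out person 1's true exposure, c1 ~ Poisson(s * phi)."""
    logs = [log_poisson(c1, s * phi) for s in S_GRID]
    top = max(logs)
    weights = [math.exp(v - top) for v in logs]
    total = sum(weights)
    return [w / total for w in weights]


def expected_exposure_person2(c1):
    """E[n2 | c1]: person 2's exposure is Poisson(s), so this equals E[s | c1]."""
    return sum(s * p for s, p in zip(S_GRID, posterior_over_s(c1)))


for c1 in (5, 10, 20):
    print(f"person 1 counted {c1:2d} -> expected exposure for person 2: "
          f"{expected_exposure_person2(c1):.1f}")
```

Higher counts for person 1 raise the expected exposure for person 2, to roughly $10(c_1 + 1)$ under this flat prior. This is the sense in which the first measurement carries information about the second.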

Personal opinions

What the book achieves in 6 chapters is very impressive. From a philosophical perspective, Jaynes derives the Bayesian framework from only logic and a few desiderata. Other Bayesians such as De Finetti come to many of the same conclusions easier using gambling and the principle of indifference. Jaynes personally finds gambling to be a very vulgar foundation for statistics. However, the important insight does not come from his aesthetic preference for logic, but from the fact that Jaynes's version of (Bayesian) statistics is grounded in information theory instead of gambling, and epistemologically it is much easier to ask the question “how does this information change my beliefs”, rather than: “If I was a betting man applying the principle of indifference to the outcomes, what odds would I then put on my prior beliefs so nobody can Dutch book me?” For simple problems Jaynes and De Finetti will invent the same priors and likelihoods, but for more advanced situations information theory gives a much better starting point for setting priors.

One of us works with proteomics (the study of all the proteins in the body). Saying “the effect of gender is large in some proteins, so it is probably also large in other proteins” (information theory) is actually how he thinks when he sets up fancy hierarchical models with adaptive priors. He does not think “if I was a betting man and saw a large effect for gender for some proteins, then I would naturally use this weird adaptive prior so I do not lose money when betting with my coworkers”.

This is just the first quarter of the book, with a lot of detail removed. The spirit and foundation of the book are mostly captured in this first part. Some of the other sections are very mathy (e.g. properties of the Gaussian distribution, and rants about why Jaynes thinks it should be called the central distribution instead), with significant focus on math and statistics history. As our example with proteomics illustrates, while this is the book that has taught us the least per page about how to DO statistics, it is the one that has taught us the most about how to THINK about statistics. As the many examples in the book show, intuition in statistics and probability theory must be built bottom-up from common sense and careful thinking about information and evidence. Otherwise we risk getting it wrong by approaching data from the top down and missing the structure of the underlying information.

Thanks to Mateusz Bagiński and the LW editor for their valuable feedback.

20 comments


comment by Algon · 2023-12-28T20:49:49.132Z

Other Bayesians such as De Finetti come to many of the same conclusions easier using gambling and the principle of indifference.

Wait, what? IIRC he used some expected utility based arguments for determining P(A and B) and P(A or B). Did I hallucinate that? Looks at the Logic of Science. Why yes, it looks like I did. Huh. Thank you for correcting my mistaken belief.

I imagine I got Jaynes' presentation mixed up with other presentations when I was looking at foundations of probability theory and arguments for strong Bayesianism a while back. Admittedly, the Dutch booking arguments are a heck of a lot simpler than Jaynes' inscrutable maths. But they suffer from, you know, relying on utilities. A big problem if your impetus for utilities relies on probabilities! You want Savage's theorem IIRC to get probability theory and utilities at once, instead of one relying on the other.

Jaynes's version of (Bayesian) statistics is grounded in information theory instead of gambling, and epistemologically it is much easier to ask the question “how does this information change my beliefs”, rather than: “If I was a betting man applying the principle of indifference to the outcomes, what odds would I then put on my prior beliefs so nobody can Dutch book me?”

From what I understand, the Dutch book/decision motivated arguments are used for agent foundations purposes and to provide evidence that powerful AI systems will be goal-directed. I don't know if that's a role that can be played by information theoretic principles.

↑ comment by Jan Christian Refsgaard (jan-christian-refsgaard) · 2023-12-29T16:44:40.233Z

I don't know much about Savage apart from both Bayesians and Frequentists not liking him. And I did not follow Jaynes' math fully, and there are some papers going back and forth on some of his assumptions, so the mathematical underpinnings may not be as strong as we would like.

I don't know; intuitively you should be able to ground the agent stuff in information theory, because the rules they put forward are the same. Jaynes also has a chapter on decision theory where he makes the wonderful point that the utility function is way more arbitrary than a prior, so you might as well be Bayesian if you are into inventing ad hoc functions anyway.

comment by philh · 2024-01-02T22:57:53.623Z

Spoiler alert, using a lot of tedious math (that we honestly do not fully follow)

I had trouble with this too. I asked on math.stackexchange about part of the derivation of the product rule. Apparently I understood the answer at the time, but that was long ago.

the most extreme is the ‘A implies B’ which many people consider equivalent to ‘B implies A’ where the correct logical rule is ‘not B implies not A’. Jaynes argues this is an example of Bayesian reasoning. For example if all dogs are mammals (deduction) it also means that some mammals are dogs, concretely if only 20% of animals are mammals this information increased the likelihood of dogs by a factor of 5!. A implies B means that B increases the likelihood of A by a factor of 5.

Had trouble following this because "animals" are mentioned only once, so to elaborate: we're taking the background info to be X = "this thing is an animal", along with A = "this thing is a dog" and B = "this thing is a mammal". Then $P(A \mid BX)$ is $P(A \mid X) / P(B \mid X)$, and if $P(B \mid X) = 0.2$ then $P(A \mid BX) = 5\,P(A \mid X)$.
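The arithmetic can be checked with exact fractions. In this sketch the 20% figure for $P(B \mid X)$ comes from the example, while $P(A \mid X) = 1/100$ for dogs is a made-up number chosen only for illustration. Since every dog is a mammal, $P(B \mid AX) = 1$, and Bayes' theorem reduces to $P(A \mid BX) = P(A \mid X) / P(B \mid X)$.

```python
from fractions import Fraction

# Background X: "this thing is an animal".
p_mammal = Fraction(1, 5)         # P(B | X): the 20% from the example
p_dog = Fraction(1, 100)          # P(A | X): a made-up share of animals that are dogs
p_mammal_given_dog = Fraction(1)  # A implies B: every dog is a mammal

# Bayes' theorem: P(A | BX) = P(B | AX) P(A | X) / P(B | X)
p_dog_given_mammal = p_mammal_given_dog * p_dog / p_mammal

print(p_dog_given_mammal)          # 1/20
print(p_dog_given_mammal / p_dog)  # 5
```

Whatever value is picked for $P(A \mid X)$, the ratio stays at $1 / 0.2 = 5$.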

Jaynes' very physicist-oriented example concerns the emission of particles from a radioactive source and a sensor that can detect some portion of these particles. The radioactive source emits on average $s = 100$ particles per second, with the count in any given second drawn from a Poisson distribution, and the sensor detects each particle independently with a Bernoulli probability $\phi$. So if $\phi$ is 0.1, the sensor picks up 10% of particles over the long run, but if you have just 10 particles, there is no guarantee that it will detect exactly 1 particle. Similarly, even though we have $s = 100$ particles per second on average, any given second might have more or fewer than 100 particles emitted. This is where it gets complicated. If you use the MLE estimate, you will always get $c/\phi$ (that is, $10c$) particles as your estimate for a second with $c$ counts, because MLE ‘assumes’ that the 10:1 particle relationship is fixed and thus ignores the Poisson source variability. So let’s say you have a counter with $\phi = 0.1$ and have observed a count of $c = 15$ particles on this sensor for some second. How many particles, $n$, originated from the source during this second? MLE will get you 150 particles, as described above. But Jaynes’ robot gives us 105 particles. What? This is a HUGE difference! This example also surprised experimental physicists. The reason the robot gets 105 and not 150 is that the source has lower variability than the detector, so a high count is only weak evidence of an above-average number of particles.

Had trouble following this too. I thought we were trying to estimate $s$ and/or $\phi$. But that's not it; we know s = 100 and φ = 0.1, and we know we detected 15 particles, and the question is how many were emitted.

And if we do an MLE, we'd say "well, the number-emitted that gives us the highest probability of detecting 15 is 150", so that's the estimate. We're throwing away what we know about the distribution of how-many-emitted.

And I guess we could instead ask "what fraction of emitted particles did we detect?" Presumably then we throw away what we know about the distribution of what-fraction-detected, and we say "well, the fraction-detected that gives us the highest probability of detecting 15 is 0.15", so that would be the MLE.

Which gives us another way to see that MLE is silly, because "what fraction did we detect" and "how many were emitted" are the same question, given that we detected 15.

comment by Kenoubi · 2023-12-29T18:42:41.337Z

Good post; this has way more value per minute spent reading and understanding it than the first 6 chapters of Jaynes, IMO.

There were 20 destroyed walls and 37 intact walls, leading to 10 − 3×20 − 1×37 = 13db

This appears to have an error; 10 − 3×20 − 1×37 = 10 - 60 - 37 = -87, not 13. I think you meant for the 37 to be positive, in which case 10 - 60 + 37 = -13, and the sign is reversed because of how you phrased which hypothesis the evidence favors (although you could also just reverse all the signs if you want the arithmetic to come out perfectly).

Also, nitpick, but

and every 3 db of evidence increases the odds by a factor of 2

should have an "about" in it, since 10^(3/10) is ~1.99526231497, not 2. (3db ≈ 2× is a very useful approximation, and implied by 10^3 ≈ 2^10, but encountering it indirectly like this would be very confusing to anyone who isn't already familiar with it.)

↑ comment by dentalperson · 2023-12-30T14:45:14.493Z

Thanks! These are great points. I applied the correction you noted about the signs and changed the wording about the direction of evidence. I agree that the clarification about the 3 dB rule is useful; linked to your comment.

Edit: The 10 was also missing a sign. It should be -10 + 60 - 37. I also flipped the 1:20 to 20:1 posterior odds that the orcs did it.
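A quick check of the corrected arithmetic, written as a Python sketch. The weights of -10 dB for the prior, +3 dB per destroyed wall and -1 dB per intact wall are read off the corrected sum -10 + 60 - 37. The helper name `db_to_odds` is our own.

```python
PRIOR_DB = -10        # prior: odds of 1:10 that the orcs did it
DB_PER_DESTROYED = 3  # each destroyed wall shifts the evidence toward orcs
DB_PER_INTACT = -1    # each intact wall shifts it away from orcs


def db_to_odds(db):
    """Evidence e in decibels corresponds to odds of 10 ** (e / 10)."""
    return 10 ** (db / 10)


total_db = PRIOR_DB + 20 * DB_PER_DESTROYED + 37 * DB_PER_INTACT
print(total_db)                        # 13
print(round(db_to_odds(total_db), 2))  # 19.95, i.e. roughly 20:1 for orcs
print(round(db_to_odds(3), 3))         # 1.995, so 3 dB is only about a doubling
```

The last line illustrates Kenoubi's nitpick: $10^{0.3}$ is close to 2 but not equal to it.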

comment by Maxwell Peterson (maxwell-peterson) · 2023-12-29T17:05:50.759Z

I read this book in 2020, and the way this post serves as a refresher and different look at it is great.

I think there might be some mistakes in the log-odds section?

The orcs example starts:

We now want to consider the hypothesis that we were attacked by orcs, the prior odds are 10:1

Then there is a 1/3 wall-destruction rate, so orcs should be more likely in the posterior, but the post says:

There were 20 destroyed walls and 37 intact walls… corresponding to 1:20 odds that the orcs did it.

We started at 10:1 (likely that it’s orcs?), then saw evidence suggesting orcs, and ended up with a posterior quite against orcs. Which doesn’t seem right. I was thinking maybe “10:1” for the prior should be “1:10”, but even then, going from 1:10 in the prior to 1:20 in the posterior, when orcs are evidenced, doesn’t work either.

All that said, I just woke up, so it’s possible I’m all wrong!

↑ comment by dentalperson · 2023-12-30T15:00:20.327Z

Thanks, your confusion pointed out a critical typo. Indeed the relatively large number of walls broken should make it more likely that the orcs were the culprits. The 1:20 should have been 20:1 (going from -10 dB to +13 dB).

comment by joseph_c (cooljoseph1) · 2023-12-28T16:34:25.836Z

The trick here is that both equations contain which is the hardest to calculate, and that number drops out when we divide the equations.

You have a couple typos here. The first centered equation should not have a $P(\bar H H | X)$ but instead have $P(\bar H | X)$, and the inline expression should be $P(D | X)$, not $P(D | H)$.

↑ comment by Gunnar_Zarncke · 2023-12-28T20:19:42.500Z

Also, the second $P(intact∣orc,X)$ should be $P(intact∣goblin,X)$

comment by DusanDNesic · 2023-12-28T11:07:46.766Z

Thank you for this - this is not a book I would generally pick up in my limited reading time, but this has clarified a lot of terms and thinking around probabilities!

comment by bideup · 2023-12-28T10:31:15.267Z

Your example of a strong syllogism (‘if A, then B. A is true, therefore B is true’) isn’t one.

It’s instead of the form ‘If A, then B. A is false, therefore B is false’, which is not logically valid (and also not a Jaynesian weak syllogism).

If Fisher lived to 100 he would have become a Bayesian

Fisher died at the age of 72

———————————————————————————————————

Fisher died a Frequentist

You could swap the conclusion with the second premise and weaken the new conclusion to ‘Fisher died before 100’, or change the premise to ‘Unless Fisher lived to a 100 he would not become a Bayesian’.

↑ comment by Jan Christian Refsgaard (jan-christian-refsgaard) · 2023-12-28T10:55:30.086Z

Crap, you are right. This was one of the last things we changed before publishing, because our previous examples were too combative :(.

I will fix it later today.

comment by Iknownothing · 2023-12-28T04:23:01.294Z

I'm not a grad physics student- I don't have a STEM degree, or the equivalent- I found the book very readable, nonetheless. It's by far my favourite textbook- feels like it was actually written by someone sane, unlike most.

↑ comment by Jan Christian Refsgaard (jan-christian-refsgaard) · 2023-12-28T11:12:48.460Z

That's surprising to me. I think you can read the book two ways: 1) you skim the math, enjoy the philosophy, and take his word that the math says what he says it says; 2) you try to understand the math. If you take 2), then you need to at least know the chain rule of integration and what a Dirac delta function is, which seem like high-level math concepts to me. Full disclaimer: I am a biochemist by training, so I have also read it without the prerequisite formal training. I think you are right that if you ignore chapter 2 and a few sections about partition functions and such, then the math level for the other 80% is undergraduate level math.

↑ comment by Iknownothing · 2023-12-28T14:19:13.976Z

I'd also read Elementary Analysis before

↑ comment by Jan Christian Refsgaard (jan-christian-refsgaard) · 2023-12-28T14:36:47.865Z

Ahh, I know that is a first year course for most math students, but only math students take that class :). I have never read an analysis book :). I took the applied path and read 3 other Bayesian books before this one, so I thought the math in this book was simultaneously very tedious and basic :)

comment by eggsyntax · 2024-03-23T15:38:39.526Z

Thanks so much for writing this! I'd like to at some point work my way through the book, but so far it's seemed like too big a commitment to rise to the top of my todo list. It's really great to have something shorter that summarizes it in some detail.

A point of confusion:

...the rules we derive should be "numerically sorted as expected," so if A is evidence of B, then should be larger than , if we choose to mean "information about"

I'm not sure how to read that. What does 'A information about B' mean? I initially guessed that you meant | as it's usually used in Bayesian probability (ie something like 'given that'), but if that were the case then the statement would be backward (ie if A is evidence of B, then B | A should be larger than A, not the reverse).

By the time it appears in your discussion of Chapter 2 ('let us find AB | C') it seems to have the usual meaning.

I'd love to get clarification, and it might be worth clarifying in the text.

Thanks again!

comment by nd (dmitry-neverov) · 2024-01-06T07:06:45.161Z

There is a good reading course for this book by Aubrey Clayton: https://www.youtube.com/watch?v=rfKS69cIwHc&list=PL9v9IXDsJkktefQzX39wC2YG07vw7DsQ_.

Can anyone suggest good sources of exercises if I want to practice the math described in this post? Ideally with solutions or at least with answers, so that one can practice on their own.

↑ comment by Jan Christian Refsgaard (jan-christian-refsgaard) · 2024-01-14T16:12:38.712Z

This might help you https://github.com/MaksimIM/JaynesProbabilityTheory

But to be honest I did very few of the exercises. From chapter 4 onward, most of what Jaynes says is "over complicated" in the sense that he derives some fancy function, but that is actually just the Poisson likelihood or whatever. So as long as you can follow the math well enough to get a feel for what the text says, you can enjoy that all of statistics is derivable from his axioms, but you don't have to be able to derive it yourself. And if you ever want to do actual Bayesian statistics, then HMC is how you get a 'real' posterior. All the math you need is simply an intuition for the geometry of the MCMC sampler so you can prevent it from diverging, and that has nothing to do with Jaynes and everything to do with the leapfrogging part of the Hamiltonian and how that screws up the proposal part of the Metropolis algorithm.

comment by Jan Christian Refsgaard (jan-christian-refsgaard) · 2023-12-28T11:26:22.598Z

If anyone relies on tags to find posts, and you feel this post is missing a tag, then "Tag suggestions" will be much appreciated