Information-Theoretic Boxing of Superintelligences

post by JustinShovelain, Elliot Mckernon (elliot) · 2023-11-30T14:31:11.798Z


Boxing an agent more intelligent than ourselves is daunting, but information theory, thermodynamics, and control theory provide us with tools that can fundamentally constrain agents independent of their intelligence. In particular, we may be able to contain an AI by limiting its access to information.

Constraining output and inputs

Superintelligent AI has the potential to both help and harm us. One way to minimize the harm is boxing the AI: containing it so that it can’t freely influence its external world, ideally while preserving its potential to help.

Boxing is complicated. Destroying the AI will certainly prevent it from influencing anything. Throwing it into a black hole might causally disconnect it from the rest of the universe while possibly preserving its existence, though even then it may exploit some unknown physics to leak information back across the event horizon, or to persist or recreate itself once the black hole has evaporated.

Can we box an AI while still being able to use it? We could try preventing it from physically interacting with its environment and only permitting it to present information to a user, but a superintelligence could abuse any communication channel to manipulate its users into granting it more power or improving its predictive abilities. To successfully box the AI in this manner, we’d need to constrain both its ability to physically interact with its environment and its ability to communicate information and manipulate its users. We’ll call this output boxing: containing an AI by constraining its various outputs.

Most discussions of boxing focus on output boxing, but there’s a neglected mirror to this approach that we’ll call input boxing: containing an AI by constraining what information it can access. (For completeness, we could also consider boxing techniques independent of input and output, where we contain an AI by constraining its functioning, such as by limiting its computational speed or restarting it from initial conditions each second.)

In the rest of this post, we’ll introduce and examine a theoretical input boxing technique that contains an AI by controlling the number of bits of information it has about its environment, using results from information theory, thermodynamics, and control theory.

Good regulators and bad keys

Today’s powerful AIs rely on mountains of data (during training, at least), so restricting their access to data would restrict their subsequent capabilities. But can we fundamentally limit a superintelligence’s external influence just by constraining its access to data?

We know that agents need an understanding of their environments to act effectively within them: Conant & Ashby studied this in a (much maligned) 1970 paper on the good regulator theorem. A regulator is any mechanism that maintains a desired characteristic, like a thermostat, or a brain and its homeostatic subsystems. The good regulator theorem states that every good regulator of a system must contain a model of the system. Another parsing of the theorem states that every good key must be a model of the lock it opens, and John Wentworth refines the theorem (and its proof), clarifying that:

[Conant and Ashby actually prove] that every regulator which is optimal and contains no unnecessary noise is equivalent to a regulator which first reconstructs the variable-values of the system it’s controlling, then chooses its output as a function of those values
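To make Wentworth’s phrasing concrete, here is a toy regulator of our own construction. The four-state system, the `outcome` rule, and both regulator functions are hypothetical. A regulator that reconstructs the system’s state and then picks its action as a function of it can pin the outcome down completely. A regulator with no model cannot.

```python
import math
from collections import Counter

STATES = range(4)  # toy disturbance S, uniformly distributed over four values


def outcome(s: int, r: int) -> int:
    """Toy system: the regulator's action r is added to the disturbance s (mod 4).
    The regulator's goal is to keep the outcome at 0."""
    return (s + r) % 4


def outcome_entropy(regulator) -> float:
    """Shannon entropy (bits) of the outcome when the regulator picks action regulator(s)."""
    counts = Counter(outcome(s, regulator(s)) for s in STATES)
    total = sum(counts.values())
    return sum(-(c / total) * math.log2(c / total) for c in counts.values())


def blind(s: int) -> int:
    """No model of the system: takes the same action whatever the state."""
    return 0


def modelling(s: int) -> int:
    """Reconstructs the system's state s, then chooses its action as a function of it."""
    return (-s) % 4


print(outcome_entropy(blind), outcome_entropy(modelling))
```

The blind regulator leaves all 2 bits of the disturbance in the outcome. The modelling regulator drives the outcome entropy to 0.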

Can we, therefore, box an AI by preventing it from modeling its external environment? Can we stop the AI becoming a “good key” for the cage we’ve placed it in? Let’s look at the potential sources of information for such an AI:

- The initial conditions of the model (such as training data and learned patterns)

- Sensory input (such as through cameras and microphones)

- Logical deduction and extrapolation

We’ll assume that the AI we’re trying to box is logically omniscient: it can squeeze as much information from its initial conditions and sensory input as theoretically possible. Since we can’t stop such an agent from deducing everything that follows from what it already knows, this leaves two sources of information that are targets for input boxing: initial conditions and sensory input.

In practice, restricting these sources of information is tricky. For example, we could try giving the AI only one sensor, some low-res camera with which to receive instruction. However, the image that the camera receives could be subtly influenced by the vibration of nearby voices, ambient magnetic fields, background radiation, and more. Interpreted correctly, these sources of interference become sources of information.

Supposing, though, that we can overcome such practical hurdles, can we quantify how much control we can muster from input boxing? To answer this, we’ll look at control theory.

Bits, boxes, and control

First, some terminology. Like a regulator, a control loop controls the behavior of a variable using a sensor, a function, and some actuator. Using multiple control loops, a control system manages the behavior of a system. Control loops are open-loop when they don’t act on feedback about the outcomes of their actions, like a heater on a timer. Closed-loop controllers incorporate feedback, like cruise control or thermostats. Finally, entropy here refers to Shannon entropy, which measures uncertainty about a variable. Touchette & Lloyd study the limits of control systems in Information-theoretic limits of control, writing that:

entropy offers a precise measure of disorderliness or missing information by characterizing the minimum amount of resources (bits) required to encode unambiguously the ensemble describing the system.

Summarizing their main results, they write:

in a control process, information must constantly be acquired, processed and used to constrain or maintain the trajectory of a system.

the second law of thermodynamics…sets absolute limits to the minimum amount of dissipation required by open-loop control…an information-theoretic analysis of closed-loop control shows feedback control to be essentially a zero sum game: each bit of information gathered directly from a dynamical systems by a control device can serve to decrease the entropy of that system by at most one bit additional to the reduction of entropy attainable without such information (open-loop control).

In other words, once we count information as part of a thermodynamic system, the second law of thermodynamics tells us that no system can be controlled perfectly. When a control loop adjusts its actions based on the information it gets from the system it’s controlling, there’s a fundamental trade-off. For each bit of information it collects from that system, it can reduce the system’s entropy (its disorder) by at most one bit more than it could have without that information. There’s a strict one-for-one limit on how much information can improve control.
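Written as an inequality, this is our paraphrase of Touchette & Lloyd’s closed-loop result, with symbols of our own choosing. Let $X$ be the controlled system, $Y$ the controller’s observation of it, and $\Delta H = H(X_{\text{before}}) - H(X_{\text{after}})$ the entropy reduction achieved. Then:

$$\Delta H_{\text{closed}} \le \Delta H_{\text{open}}^{\max} + I(X;Y),$$

where $I(X;Y)$ is the mutual information between the system and the observation. The toy model below is a sketch under our own assumptions, not the paper’s construction. The system is $n$ uniformly random bits, the controller reads $k$ of them, and every action is a bijection on states, so open-loop control achieves $\Delta H_{\text{open}}^{\max} = 0$. The hypothetical `clear_observed_bits` policy reaches the bound exactly. `brute_force_min_entropy` then checks every possible policy on a two-bit system to confirm that nothing does better.

```python
import itertools
import math
from collections import Counter


def shannon_entropy(counts):
    """Shannon entropy (bits) of a distribution given as outcome counts."""
    total = sum(counts)
    probs = [c / total for c in counts if c > 0]
    return sum(-p * math.log2(p) for p in probs)


def final_entropy(n, k, policy):
    """Entropy of the system state after one step of closed-loop control.

    The state x is uniform over 2**n values (n bits of entropy). The controller
    observes the low k bits of x, i.e. exactly k bits of information, then
    applies the bijection policy[obs]: it may relabel states but never merge them.
    """
    outcomes = Counter()
    for x in range(2 ** n):
        obs = x % 2 ** k  # what the controller sees
        outcomes[policy[obs][x]] += 1
    return shannon_entropy(list(outcomes.values()))


def clear_observed_bits(n, k):
    """For each observation c, the bijection x -> x XOR c, which zeroes the observed bits."""
    return [tuple(x ^ c for x in range(2 ** n)) for c in range(2 ** k)]


def brute_force_min_entropy(n, k):
    """Lowest final entropy over every assignment of bijections to observations.

    Exponential cost: only practical for n <= 2.
    """
    perms = list(itertools.permutations(range(2 ** n)))
    return min(final_entropy(n, k, policy)
               for policy in itertools.product(perms, repeat=2 ** k))


for k in range(4):
    print(k, final_entropy(3, k, clear_observed_bits(3, k)))
```

Running it prints final entropies of 3, 2, 1 and 0 bits for k = 0, 1, 2, 3: each observed bit buys exactly one bit of entropy reduction. No policy can do better, because each final state has at most one preimage per observation. Every final state therefore has probability at most $2^{k-n}$, which forces the entropy to be at least $n - k$.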

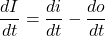

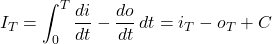

To formalize this, let t denote time, and let denote the amount of information available to the boxed agent at time . The change in is then given by time derivative of input information, labeled , minus the time derivative of output or “used” information, labeled :

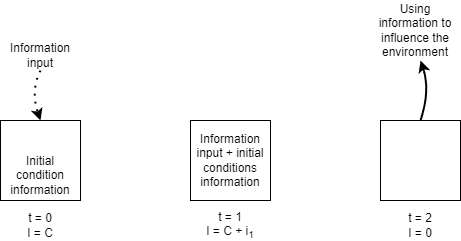

and so the available information at time is

where C denotes the information contained in the initial conditions of the model. We can visualize (a simplified version of) this over consecutive timesteps, as information is gathered then used up:

This seems promising. If we limit the information an AI can gather with respect to the information it uses to act in its environment, it will not have sufficient information to act effectively. Or, in the language of the paper above, the agent will not have enough bits about its external dynamical system to sufficiently reduce the entropy of that dynamical system.
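A discrete-time version of this information budget can be sketched in a few lines. This is our own illustration, not part of the original formalism. The name `information_budget`, its arguments, and the rule that an agent cannot spend more bits than it currently holds are all assumptions.

```python
def information_budget(initial_bits, gathered, used):
    """Discrete-time analogue of I_A(t) = C + integral of (I_in - I_out).

    initial_bits: C, the bits contained in the initial conditions.
    gathered[t]:  bits taken in during step t (hypothetical sensor log).
    used[t]:      bits spent acting on the environment during step t.
    Returns I_A after each step, starting with C.
    Assumption: an agent cannot use more bits than it currently holds.
    """
    budget = [initial_bits]
    for bits_in, bits_out in zip(gathered, used):
        available = budget[-1] + bits_in
        if bits_out > available:
            raise ValueError("cannot use more information than is available")
        budget.append(available - bits_out)
    return budget


print(information_budget(10, [4, 4, 4], [6, 6, 6]))
```

Here the agent gathers 4 bits per step but spends 6, so its reserve shrinks by 2 bits per step: the call returns `[10, 8, 6, 4]`.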

We can define input boxing more formally as follows. Suppose we have an agent $A$ that has an amount of information $I_A(t)$ at time $t$. For $A$ to achieve a certain external action $E$, it requires some amount of information $I_E$ about its external environment. We can say that $A$ cannot achieve $E$ when $I_A(t)$ is smaller than $I_E$. If we set $E$ to be some minimal escape scenario, then we can say that $A$ is input boxed when $I_A(t) < I_E$ at all times $t$. This doesn’t depend on any of the agent’s capabilities or intelligence. It depends only on $I_A(t)$, which we are supposing we can limit, and on $I_E$, which depends only on the external environment.
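Because information can be spent as well as gathered, a cautious check might assume the worst case: an agent that hoards every bit, so that its available information peaks at its initial conditions plus everything it has gathered. The sketch below encodes that conservative reading of the definition. The function names, and treating sensor input as a fixed number of bits per step, are hypothetical.

```python
def is_input_boxed(initial_bits, gathered, escape_bits):
    """Conservative check that I_A(t) < I_E at every step.

    Worst case assumed: the agent spends none of its information, so I_A(t)
    never decreases and peaks at C + (total bits gathered).
    """
    peak = initial_bits + sum(gathered)
    return peak < escape_bits


def input_rate_bound(initial_bits, escape_bits, horizon):
    """Sensor rate (bits per step) that a constant input must stay strictly below.

    Under the same worst case, C + rate * horizon < I_E  <=>  rate < (I_E - C) / horizon.
    A result <= 0 means the initial conditions alone already break the box.
    """
    return (escape_bits - initial_bits) / horizon


print(is_input_boxed(100, [10] * 5, 200))  # peak of 150 bits
print(is_input_boxed(100, [30] * 5, 200))  # peak of 250 bits
print(input_rate_bound(100, 200, 5))
```

Take $C = 100$ bits, $I_E = 200$ bits and a 5-step horizon. Then `input_rate_bound` gives 20.0, so any constant sensor rate strictly below 20 bits per step keeps the agent boxed over that horizon.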

John Wentworth, author of Fixing The Good Regulator Theorem, which we used earlier, considered a similar question about the limits of control. He conjectured that observing an additional N bits of information allows a system to perform at most N additional bits of optimization. He later concluded that the conjecture is false, citing a counterexample in which a short password unlocks a long message, and noting that “bits of observation can give a really ridiculously large boost to a system's optimization power”. Others agree, arguing that, with some practical constraints, “there are no limits on how much optimization one can squeeze out of a single bit, even in a world with reversible computing”.

However, these counterexamples rely on the agent’s ability to subsequently “follow up” on what they learn. The information in the “password” is only useful insofar as it grants the opportunity to gather or unlock more information. The instantaneous utility of the password, or any piece of information, is bounded by the sum of the information in the password itself and prior knowledge, and by assumption we’re limiting the agent’s access to follow-up information as well. Therefore, these counterexamples shouldn’t exempt an input-boxed AI from the limits on optimization. If we can limit the information an agent gathers from inputs and prior knowledge, we can box that agent, regardless of its intelligence.
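The password counterexample can be made concrete in a toy setting. This is our construction, not Wentworth’s. A long message is encrypted under an 8-bit password using a hash-based XOR keystream, which is purely illustrative and not a secure cipher. The ciphertext already sits in the agent’s prior knowledge. A logically omniscient agent can therefore list one candidate message per possible password, so learning the password resolves at most 8 bits of its uncertainty, however long the message.

```python
import hashlib
import math


def keystream(password: int, length: int) -> bytes:
    """Toy keystream: concatenated SHA-256 digests of 'password:counter'. Not a real cipher."""
    blocks = []
    counter = 0
    while 32 * len(blocks) < length:
        blocks.append(hashlib.sha256(f"{password}:{counter}".encode()).digest())
        counter += 1
    return b"".join(blocks)[:length]


def xor_cipher(data: bytes, password: int) -> bytes:
    """XOR with the keystream; applying it twice with the same password undoes it."""
    return bytes(d ^ k for d, k in zip(data, keystream(password, len(data))))


PASSWORD_BITS = 8
message = b"the quick brown fox jumps over the lazy dog. " * 10  # 3,600 bits
ciphertext = xor_cipher(message, password=173)  # part of the agent's prior knowledge

# Every message the password could "unlock" is one decryption per possible
# password, so there are at most 2**PASSWORD_BITS candidates.
candidates = {xor_cipher(ciphertext, p) for p in range(2 ** PASSWORD_BITS)}
bits_resolved_by_password = math.log2(len(candidates))
print(len(message) * 8, bits_resolved_by_password)
```

The message carries 3,600 bits, yet `bits_resolved_by_password` is at most 8. The rest of the message’s content was already inside the box, in the ciphertext that formed part of the agent’s prior knowledge.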

Building better boxes

Input boxing is a promising avenue for research as it tackles the challenge of limiting an AI's capabilities through the fundamentals of information, independent of how smart the AI in the box is.

This article is just an introduction, and we’re interested in exploring further refinements of the idea of input boxing. For example, a deeper analysis of what information we allow into the box may give us more fine-grained control over an AI (such as in this discussion about information about environments versus laws). This is especially true if we can robustly distinguish between information useful for solving our problems and information useful for escape.

That is, rather than fundamentally limiting the agent’s overall control, we may be able to identify hazardous paths, or sequences of actions, that the agent will want to pursue. For an agent $A$ to achieve an action $E$, it requires information about the control pathway between its actuators and its ultimate desired outcome. $A$ must have information about how its first action will transform its external environment, how subsequent actions will transform the new, changed environment, and so on.

If we limit the agent’s access to hazardous information about dangerous control pathways, we could relax the fundamental constraints on information input without permitting hazardous behavior. This could be much more pragmatic than fundamentally limiting all information, since hazardous information is probably a small subset of all information: bits have to be relevant to hazardous actions to be hazardous bits. However, this would come at the cost of the guarantee that those fundamental limits provide.
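The pathway view lends itself to a graph sketch. Below, a hypothetical toy environment is a map from states to the transitions the agent knows about. Planning is breadth-first search over those known transitions. Withholding hazardous information means deleting specific (state, action) links from the agent’s model. All state and action names are invented for illustration.

```python
from collections import deque


def reachable(model, start, goal):
    """Breadth-first search over the transitions the agent has information about.

    model maps an environment state to {action: next_state}; the agent can only
    plan through transitions that appear in its model.
    """
    frontier, seen = deque([start]), {start}
    while frontier:
        state = frontier.popleft()
        if state == goal:
            return True
        for nxt in model.get(state, {}).values():
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(nxt)
    return False


def withhold(model, hazardous):
    """Copy of the model with the hazardous (state, action) transitions removed."""
    return {s: {a: n for a, n in acts.items() if (s, a) not in hazardous}
            for s, acts in model.items()}


# Hypothetical toy environment.
full_model = {
    "boxed": {"answer_query": "task_done", "probe_sensor": "sensor_mapped"},
    "sensor_mapped": {"exploit_sensor": "escaped"},
}
hazardous = {("sensor_mapped", "exploit_sensor")}
boxed_model = withhold(full_model, hazardous)

print(reachable(full_model, "boxed", "escaped"))   # agent knows the full pathway
print(reachable(boxed_model, "boxed", "escaped"))  # one link withheld
print(reachable(boxed_model, "boxed", "task_done"))
```

With the hazardous link withheld, the escape state becomes unreachable while the useful task stays reachable. This only holds if the agent cannot rebuild the missing link from other information, which is the guarantee this approach gives up.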

Another promising avenue for developing input boxing, suggested by Deric Cheng, would be to impose fundamental delays on the use of information. The utility of information decays over time, so if an AI could only act on information from 24 hours ago, it would be much weaker, especially for high-speed actions such as exploiting the stock market.
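Here is a toy way to see why delay weakens an agent, under our own assumptions. The environment is a ±1 random walk, and the controller’s estimate of the current state is the last state it was allowed to see. For a symmetric walk, that is the conditional-mean estimate. The function name and its parameters are hypothetical.

```python
import random


def delayed_tracking_error(delay: int, steps: int = 10_000, seed: int = 0) -> float:
    """Mean squared error of a controller that only sees the state `delay` steps late.

    Toy assumption: the environment is a +/-1 random walk, and the controller's
    guess of the current state is the most recent state it was allowed to see.
    """
    rng = random.Random(seed)
    walk = [0]
    for _ in range(steps + delay):
        walk.append(walk[-1] + rng.choice((-1, 1)))
    errors = [(walk[t] - walk[t - delay]) ** 2 for t in range(delay, len(walk))]
    return sum(errors) / len(errors)


for d in (0, 1, 24):
    print(d, delayed_tracking_error(d))
```

The expected squared error grows linearly with the delay, since it is the variance of `delay` independent ±1 steps. An agent acting on day-old data is therefore far less certain about fast-moving quantities than one acting on current data.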

As well as a boxing technique for superintelligences, input boxing may be useful for more niche applications. For example, by input boxing AI models during evals, we could test a model with the guarantee that any hazardous behavior won’t leak out of the box. Evaluators could then actively prompt hazardous behavior and use red-teaming techniques without compromising the safety of the eval.

With further refinements and insights from information theory, control theory, and thermodynamics, input boxing could be a powerful tool for both niche applications and for wrapping a superintelligence up with a neat, little bow in a safe, useful box.

This post was initially inspired by Hugo Touchette & Seth Lloyd’s Information-Theoretic Limits of Control paper. For more on control theory, the good regulator theorem, and other similar topics, check out:

- What is control theory, and why do you need to know about it? by Richard_Kennaway

- Machine learning and control theory by Alain Bensoussan et al.

- Fixing The Good Regulator Theorem by johnswentworth

- The Internal Model Principle by John Baez

- A Primer For Conant & Ashby's “Good-Regulator Theorem” by Daniel L. Scholten

- Notes on The Good Regulator Theorem in Harish's Notebook

- How Many Bits Of Optimization Can One Bit Of Observation Unlock? by johnswentworth

- Provably safe systems: the only path to controllable AGI by Max Tegmark and Steve Omohundro

This article is based on the ideas of Justin Shovelain, written by Elliot Mckernon, for Convergence Analysis. We’d like to thank the authors of the posts we’ve quoted, and Cesare Ardito, David Kristoffersson, Richard Annilo, and Deric Cheng for their feedback while writing.
