Four kinds of problems

post by Bird Concept (jacobjacob) · 2018-08-21T23:01:51.339Z · LW · GW · 11 comments

I think there are four natural kinds of problems, and learning to identify them helped me see clearly what’s bad about philosophy, good about start-ups, and many things in-between.

Consider these examples:

- Make it so that bank transfers to Africa no longer take weeks or require visiting physical offices, in order to make it easier for immigrants to send money back home to their poor families.

- Prove that the external world exists and you’re not being fooled by an evil demon, in order to use that epistemic foundation to derive a theory of how the world works.

- Develop a synthetic biology safety protocol, in order to ensure your lab does not accidentally leak a dangerous pathogen.

- Build a spaceship that travels faster than the speed of light, in order to harvest resources from outside our light cone.

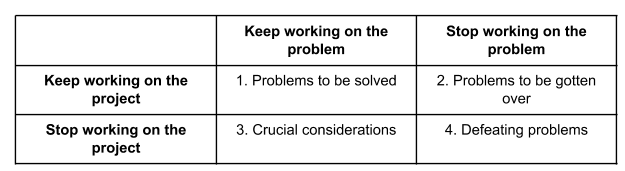

These examples are all problems encountered as part of work on larger projects. Taking them in the order listed, we can classify them by asking how we should respond when they arise:

1. is a problem to be solved. In this particular example, it turns out global remittances are several times larger than the combined foreign aid budgets of the Western world. Building a service avoiding the huge fees charged by e.g. Western Union is a very promising way of helping the global poor.

2. is a problem to be gotten over. You probably won’t find a solution of the kind philosophers usually demand. But, evidently, you don’t have to in order to make meaningful epistemic progress, such as deriving General Relativity or inventing vaccines.

3. is a crucial consideration -- a problem so important that it might force you to drop the entire project that spawned it, in order to just focus on solving this particular problem. Upon discovering that there is a non-trivial risk of tens of millions of people dying in a natural or engineered pandemic within our lifetimes, and then realising how woefully underprepared our health care systems are for this, publishing yet another paper suddenly appears less important.

4. is a defeating problem. Solving it is impossible. If a solution forms a crucial part of a project, then the problem is going to take that project with it to the grave. Whatever we want to spend our time doing, if it requires resources from outside our light cone, we should give it up.

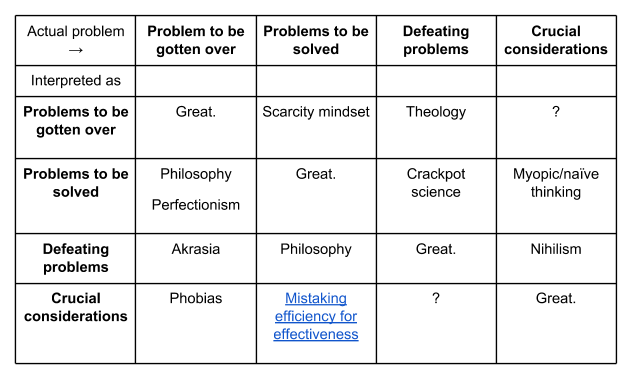

With this categorisation in mind, we can understand some good and bad ways of thinking about problems.

For example, I found that learning the difference between a defeating problem and a problem-to-be-solved was what was required to adopt a “hacker mindset”. Consider the remittances problem above. If someone had posed it as something to do after they graduate, they might have expected replies like:

“Sending money? Surely that’s what banks do! You can’t just... build a bank?”

“What if you get hacked? Software infrastructure for sending money has to be crazy reliable!”

“Well, if you’re going to build a startup to help the global poor, you’d have to move to Senegal.”

Now of course, none of these things violates the laws of physics. They might violate a few social norms. They might be scary. They might seem like the kind of problem an ordinary person would not be allowed to try to solve. However, if you really wanted to, you could do these things. And some less conformist people who did just that have now become billionaires or, well, moved to Senegal (cf. PayPal, Stripe, Monzo and Wave).

As Hannibal said when his generals cautioned him that it was impossible to cross the Alps by elephant: "I shall either find a way or make one."

This is what’s good about startup thinking. Philosophy, however, has a big problem which goes the other way: mistaking problems-to-be-solved for defeating problems.

For example, a frequentist philosopher might object to Bayesianism saying something like “Probabilities can’t represent the degrees of belief of agents, because in order to prove all the important theorems you have to assume the agents are logically omniscient. But that’s an unreasonable constraint. For one thing, it requires you to have an infinite number of beliefs!” (this objection is made here, for example). And this might convince people to drop the Bayesian framework.

However, the problem here is that it has not been formally proven that the important theorems of Bayesianism ineliminably require logical omniscience in order to work. Rather, that is often assumed, because people find it hard to do things formally otherwise.

As it turns out, though, the problem is solvable. Philosophers did not find this out, however, as they get paid to argue and so love making objections. The proper response to that might just be “shut up and do the impossible”. (A funny and anecdotal example of this is the student who solved an unsolved problem in maths because he thought it was an exam question.)

Finally, we can be more systematic in classifying several of these misconceptions. I’d be happy to take more suggestions in the comments.

11 comments

Comments sorted by top scores.

comment by Shmi (shminux) · 2018-08-22T06:08:35.044Z · LW(p) · GW(p)

This reminds me of the serenity prayer, only longer :)

comment by Ben Pace (Benito) · 2018-08-21T23:46:56.822Z · LW(p) · GW(p)

For 'crucial considerations' interpreted as 'problems to be gotten over', perhaps the box could be filled with 'avoiding scary thoughts'? Like, it turns out you have to rebuild your life around this problem - you can't treat it as a problem to be solved, because at some point you'll notice your work on it is making zero progress. And so rather than change your entire life+work+social structure, you ignore it and pretend it's a problem to be gotten over.

comment by Ben Pace (Benito) · 2019-11-29T22:22:42.279Z · LW(p) · GW(p)

This is a very simple, very clear, yet generally applicable post that I think about regularly, and have used a few times in important conversations while working at LessWrong.

comment by avturchin · 2018-08-24T08:29:56.976Z · LW(p) · GW(p)

It looks like the most complex problems have a meta-level based on their unsolvable nature:

Example: John must pay 10,000 USD next week, but has only 1,000 in his account, and this makes him so emotionally distressed that he can't find rational solutions but also can't stop thinking about the problem.

comment by TruePath · 2018-09-19T02:07:34.987Z · LW(p) · GW(p)

Your criticism of philosophy/philosophers is misguided on a number of counts.

1. You're basing those criticisms on the presentation in a video designed to present philosophy to the masses. That's like reading some phys.org article claiming that electrons can be in two locations at once and using that to criticize the theory of Quantum Mechanics.

2. The problem philosophers are interested in addressing may not be the one you are thinking of. Philosophers would never suggest that the assumption of logical omniscience prevents one from using Bayesianism as a practical guide to reasoning, or that it's not often a good idealization to treat degrees of belief as probabilities. However, I believe the question this discussion relates to is that of giving a theory that explains the fundamental nature of probability claims, and here the fact that we really aren't logically omniscient prevents us from identifying probabilities with something like rational degrees of belief (though that proposal has other problems too).

3. It's not like philosophers haven't put in plenty of effort looking for probability-like systems that don't presume logical omniscience. They have developed any number of them, but none seem particularly useful, and I'm not convinced that the paper you link about this will be much different (not that they are wrong, just not that useful).

Replies from: jacobjacob

↑ comment by Bird Concept (jacobjacob) · 2018-09-20T18:30:10.125Z · LW(p) · GW(p)

I don't want to base my argument on that video. It's based on the intuitions for philosophy I developed doing my BA in it at Oxford. I expect to be able to find better examples, but don't have the energy to do that now. This should be read more as "I'm pointing at something that others who have done philosophy might also have experienced", rather than "I'm giving a rigorous defense of the claim that even people outside philosophy might appreciate".

comment by TAG · 2018-08-23T11:10:18.852Z · LW(p) · GW(p)

> Philosophers did not find this out, however, as they get paid to argue and so love making objections.

Rationality consists of different things that are often at odds with each other. Rationalists, however, did not find this out as they are paid to present rationality as a one-size-fits-all solution.

Etc, etc.

comment by Paperclip Minimizer · 2018-09-05T15:04:09.939Z · LW(p) · GW(p)

.

Replies from: Benito

↑ comment by Ben Pace (Benito) · 2018-09-05T16:18:30.031Z · LW(p) · GW(p)

[Mod notice] This comment is needlessly aggressive and seems to me to be out of character for you. If you write a comment this needlessly aggressive again I’ll give you a temporary suspension.