Really Strong Features Found in Residual Stream

post by Logan Riggs (elriggs) · 2023-07-08T19:40:14.601Z · LW · GW · 6 commentsContents

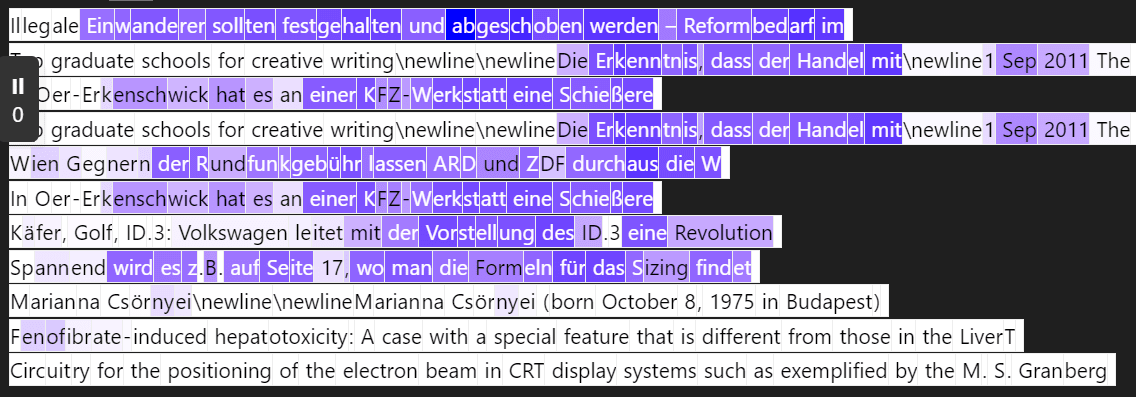

German Feature?

Stack Exchange

Title Case

One for the Kids

[word] and [word]

Beginning & End of First Sentence?

High Level Picture

None

6 comments

[I'm writing this quickly because the results are really strong. I still need to do due diligence & compare to baselines, but it's really exciting!]

Last post [LW · GW] I found 600+ features in MLP layer-2 of Pythia-70M, but ablating the direction didn't always make sense nor the logit lens. Now I've found 3k+ in the residual stream, and the ablation effect is intuitive & strong.

The majority of features found were single word or combined words (e.g. not & 't ) which, when ablated, effected bigram statistics, but I also wanted to showcase the more interesting ones:

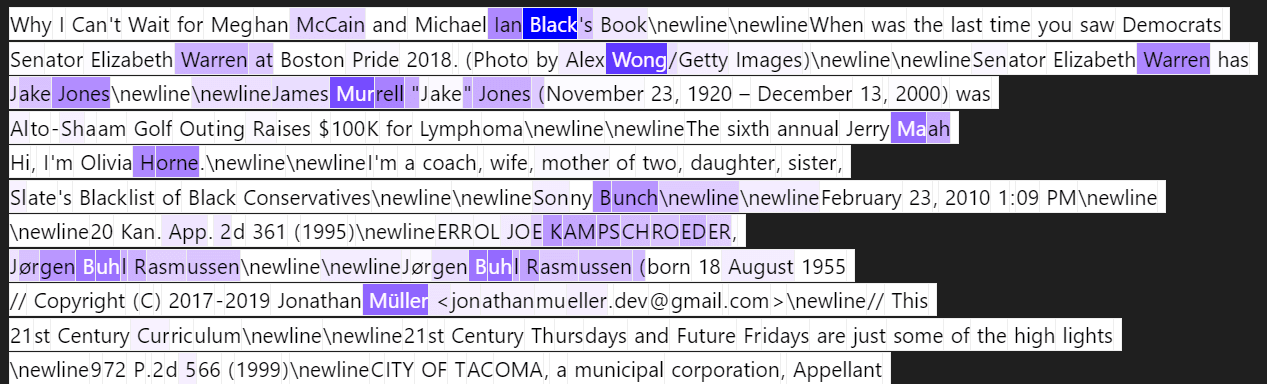

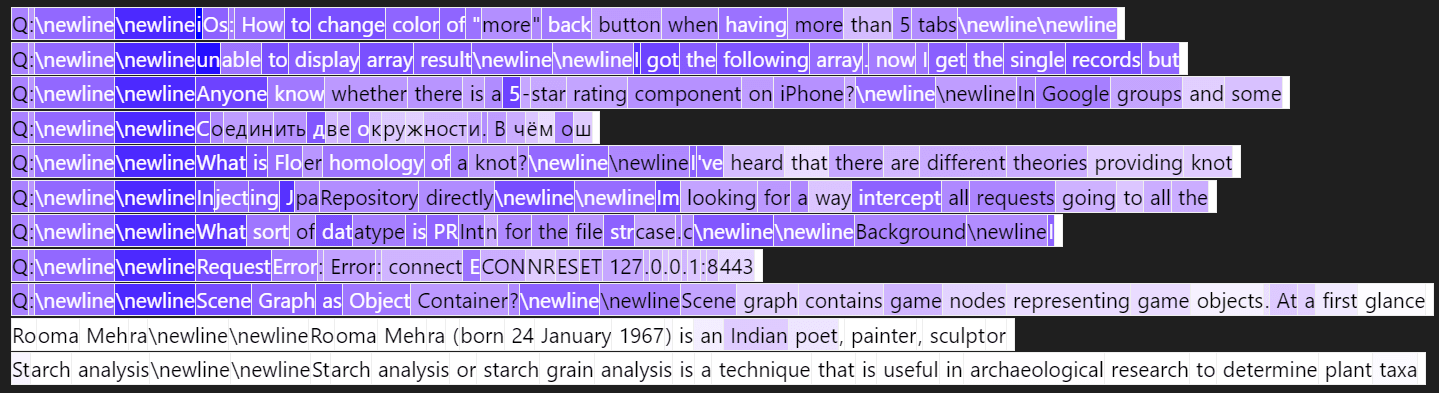

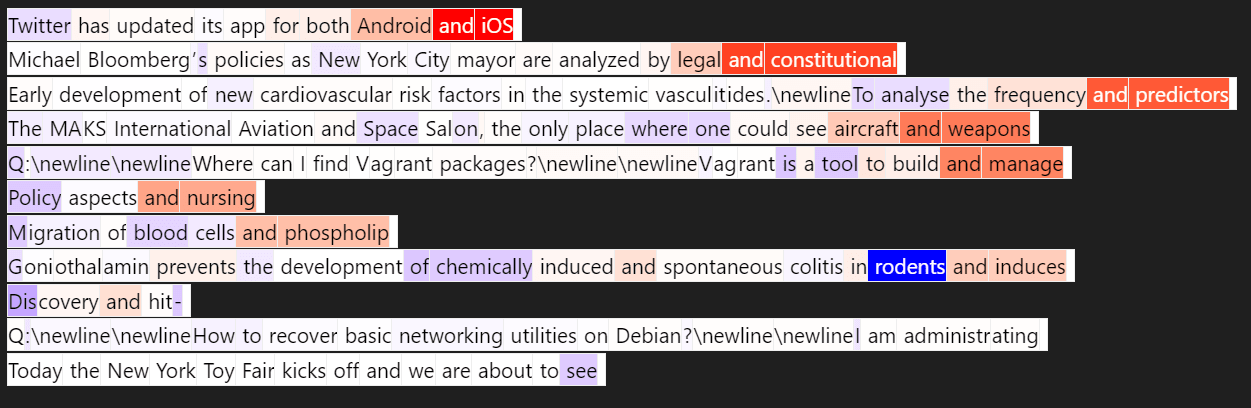

German Feature?

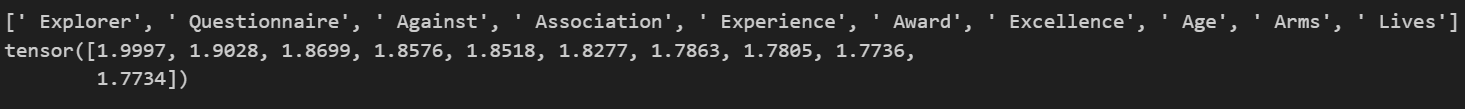

Uniform examples:

Logit lens:

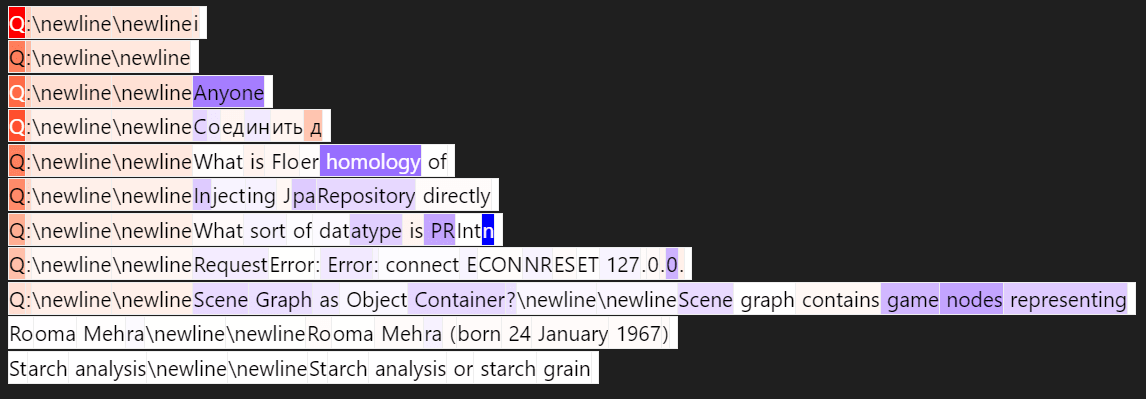

Stack Exchange

Ablated Text (w/ red showing removing that token drops activation of that feature)

In the Pile dataset, stackexchange text is formatted as "Q:/n/n....", and removing the "Q" greatly decreasing the feature activation is evidence for this indeed detecting stackexchange format.

Title Case

Ablating Direction (in text):

Here, the log-prob of predicting " Of" went down by 4 when I ablate this feature direction, along w/ other Title Case texts.

Logit lens

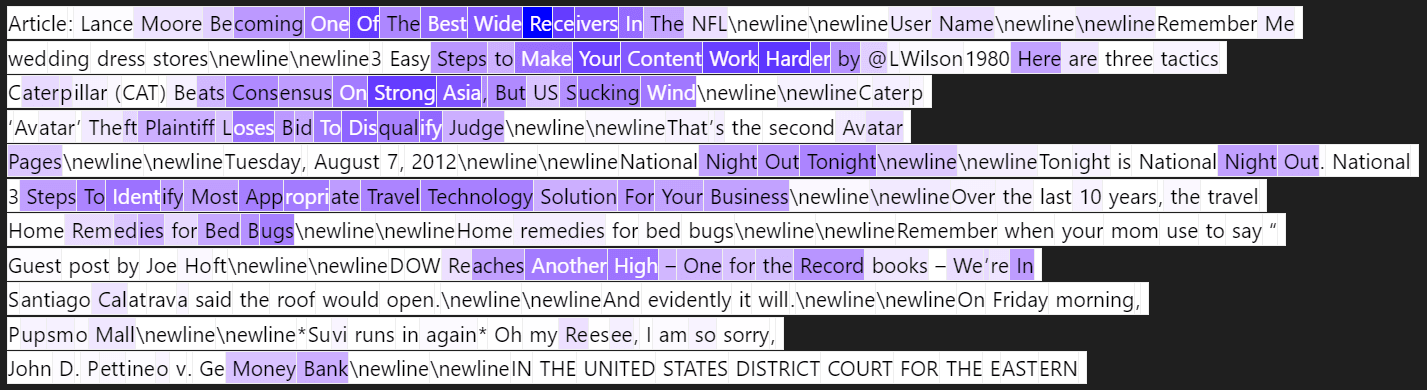

One for the Kids

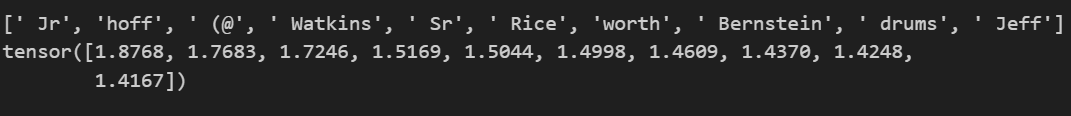

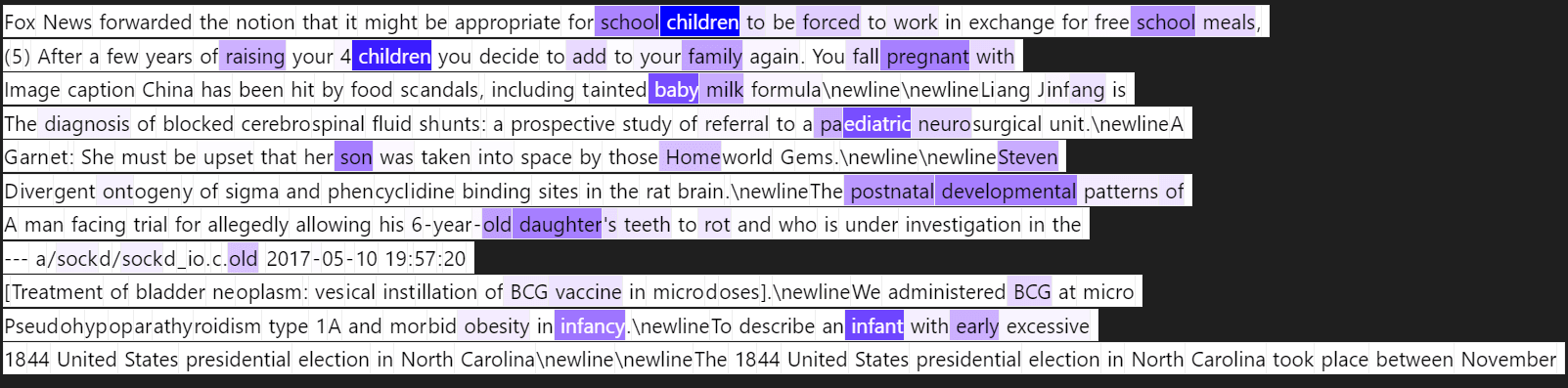

Last Name:

Ablated Text:

As expected, removing the first name affects the feature activation

Logit Lens:

More specifically, this looks like the first token of the last name, which makes since to follow w/ Jr or Sr or completing last names w/ "hoff" & "worth". Additionally, the "(\@" makes sense for someone's social media reference (e.g. instagram handle).

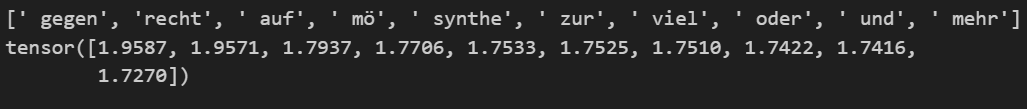

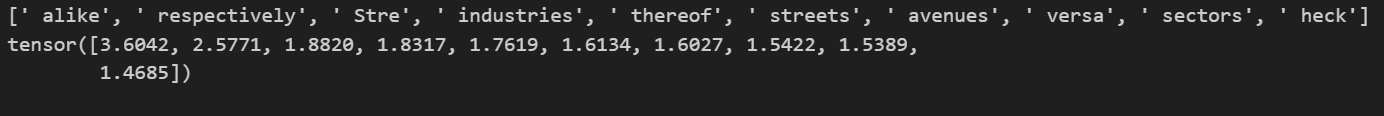

[word] and [word]

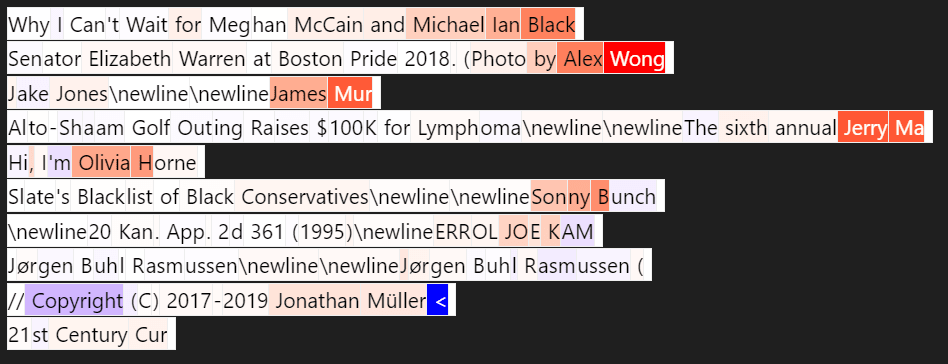

Ablated Text:

Logit Lens:

alike & respectively make a lot of since in context, but not positive about the rest.

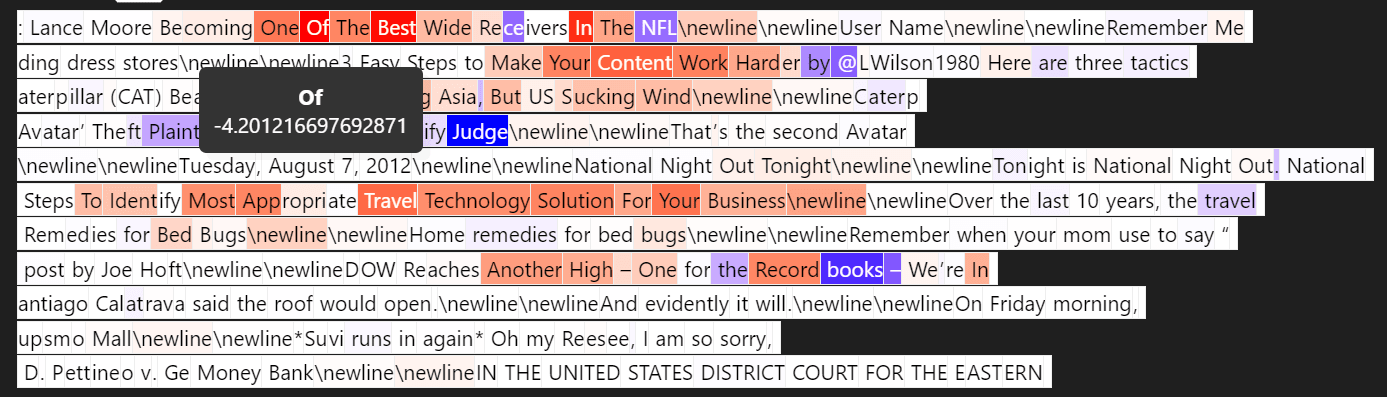

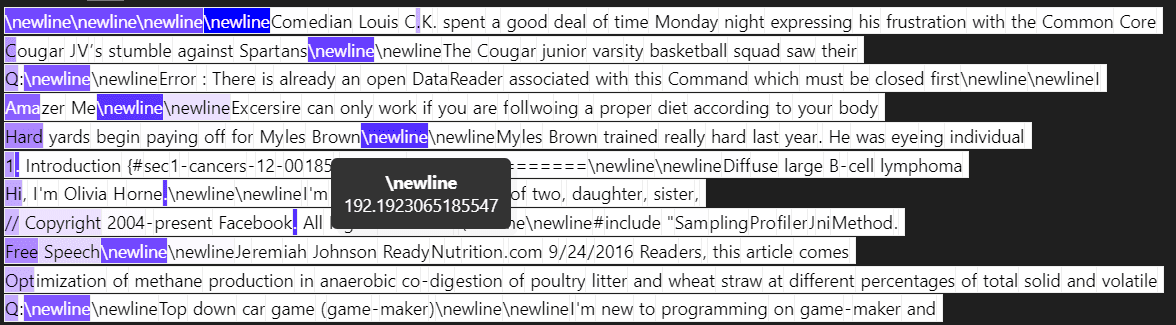

Beginning & End of First Sentence?

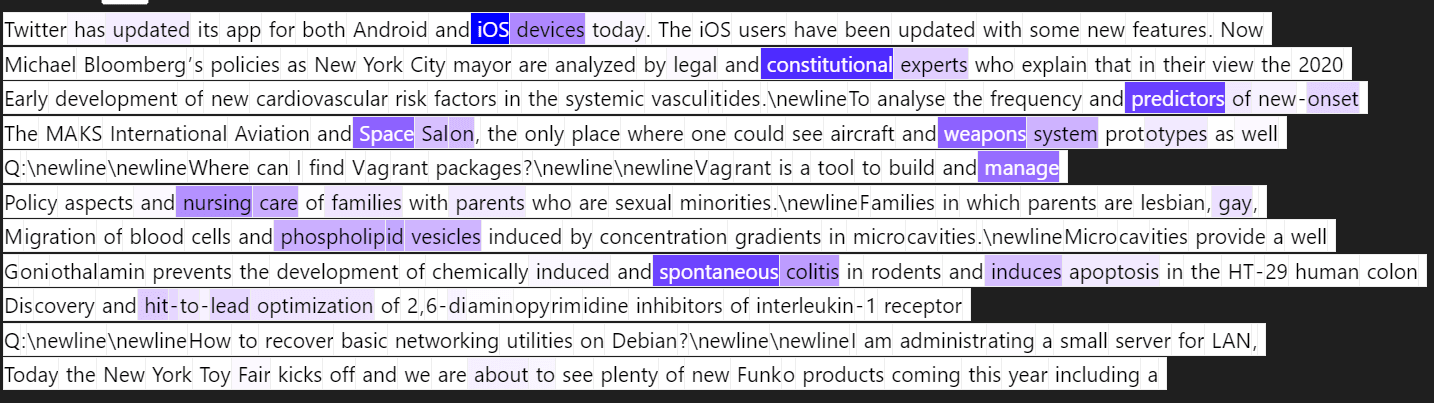

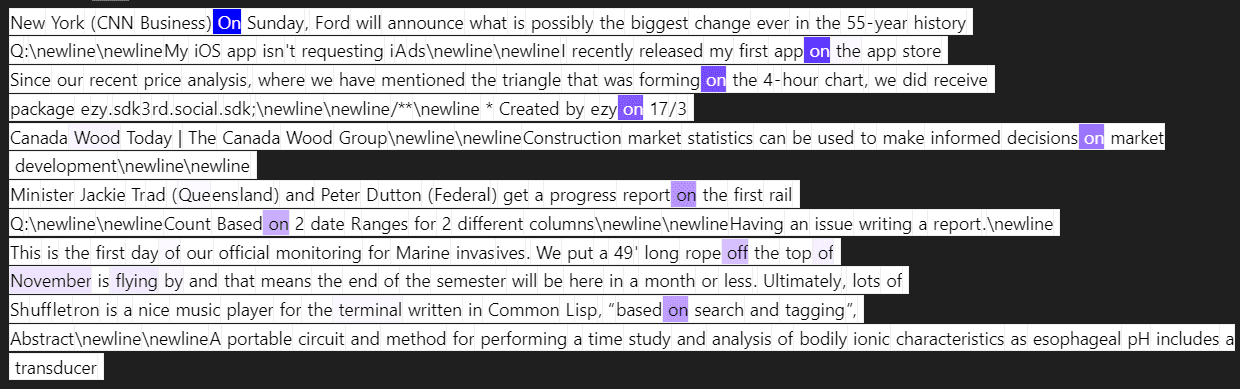

On

logit lens:

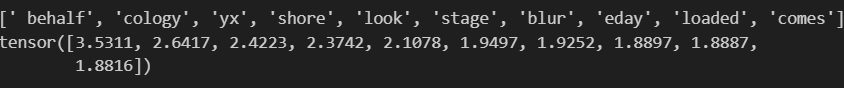

"on behalf", "oncology", "onyx" (lol), "onshore", "onlook", "onstage", "onblur"(js?), "oneday" ("oneday" is separated as on eday?!?), "onloaded" (js again?), "oncomes"(?)

High Level Picture

I could spend 30 minutes (or hours) developing very rigorous hypotheses on these features to really nail down what distribution of inputs activate it & what effect it really has on the output, but that's not important.

What's important is that we have a decomposition of the data that:

1. Faithfully represents the original data (in this case, low reconstruction loss w/ minimal effect on perplexity)

2. Can simply specify desired traits in the model (e.g. honesty, non-deception)

There is still plenty of work to do to ensure the above two work (including normal hyperparameter search), but it'd be great to have more people working on this (I don't have time to mentor, but I can answer questions & help get you set up) if that's helping our work or integrating it in your work.

This work is legit though, and there's a recent paper that finds similar features in a BERT model.

6 comments

Comments sorted by top scores.

comment by StefanHex (Stefan42) · 2023-07-09T10:27:23.272Z · LW(p) · GW(p)

Nice work! I'm especially impressed by the [word] and [word] example: This cannot be read-off the embeddings, thus the model must be actually computing and storing this feature somewhere! I think this is exciting since the things we care about (deception etc.) are also definitely not included in the embeddings. I think you could make a similar case for Title Case and Beginning & End of First Sentence but those examples look less clear, e.g. the Title Case could be mostly stored in "embedding of uppercase word that is usually lowercase".

Replies from: elriggs↑ comment by Logan Riggs (elriggs) · 2023-07-09T11:21:55.352Z · LW(p) · GW(p)

Actually any that are significantly effected in "Ablated Text" means that it's not just the embedding. Ablated Text here means I remove each token in the context & see the effect on the feature activation for the last token. This is True in the StackExchange & Last Name one (though only ~50% of activation for last-name, will still recognize last names by themselves but not activate as much).

The Beginning & End of First Sentence actually doesn't have this effect (but I think that's because removing the first word just makes the 2nd word the new first word?), but I haven't rigorously studied this.

comment by StefanHex (Stefan42) · 2023-07-09T10:26:49.803Z · LW(p) · GW(p)

Thank you for making the early write-up! I'm not entirely certain I completely understand what you're doing, could I give you my understanding and ask you to fill the gaps / correct me if you have the time? No worries if not, I realize this is a quick & early write-up!

Setup:

As previously [AF · GW] you run Pythia on a bunch of data (is this the same data for all of your examples?) and save its activations.

Then you take the residual stream activations (from which layer?) and train an autoencoder (like Lee, Dan & beren here [AF · GW]) with a single hidden layer (w/ ReLU), larger than the residual stream width (how large?), trained with an L1-regularization on the hidden activations. This L1-regularization penalizes multiple hidden activations activating at once and therefore encourages encoding single features as single neurons in the autoencoder.

Results:

You found a bunch of features corresponding to a word or combined words (= words with similar meaning?). This would be the embedding stored as a features (makes sense).

But then you also find e.g. a "German Feature", a neuron in the autoencoder that mostly activates when the current token is clearly part of a German word. When you show Uniform examples you show randomly selected dataset examples? Or randomly selected samples where the autoencoder neuron is activated beyond some threshold?

When you show Logit lens you show how strong the embedding(?) or residual stream(?) at a token projects into the read-direction of that particular autoencoder neuron?

In Ablated Text you show how much the autoencoder neuron activation changes (change at what position?) when ablating the embedding(?) / residual stream(?) at a certain position (same or different from the one where you measure the autoencoder neuron activation?). Does ablating refer to setting some activations at that position to zero, or to running the model without that word?

Note on my use of the word neuron: To distinguish residual stream features from autoencoder activations, I use neuron to refer to the hidden activation of the autoencoder (subject to an activation function) while I use feature to refer to (a direction of) residual stream activations.

Replies from: elriggs↑ comment by Logan Riggs (elriggs) · 2023-07-09T11:46:02.515Z · LW(p) · GW(p)

Setup:

Model: Pythia-70m (actually named 160M!)

Transformer lens: "blocks.2.hook_resid_post" (so layer 2)

Data: Neel Nanda's Pile-10k (slice of pile, restricted to have only 25 tokens, same as last post)

Dictionary_feature sizes: 4x residual stream ie 2k (though I have 1x, 2x, 4x, & 8x, which learned progressively more features according to the MCS metric)

Uniform Examples: separate feature activations into bins & sample from each bin (eg one from [0,1], another from [1,2])

Logit Lens: The decoder here had 2k feature directions. Each direction is size d_model, so you can directly unembed the feature direction (e.g. the German Feature) you're looking at. Additionally I subtract out several high norm tokens from the unembed, which may be an artifact of the pythia tokenizer never using those tokens (thanks Wes for mentioning this!)

Ablated Text: Say the default feature (or neuron in your words) activation of Token_pos 10 is 5, so you can remove all tokens from 0 to 10 one at a time and see the effect on the feature activation. I select the token pos by finding the max feature activating position or the uniform one described above. This at least shows some attention head dependencies, but not more complicated ones like (A or B... C) where removing A or B doesn't effect C, but removing both would.

[Note: in the examples, I switch between showing the full text for context & showing the partial text that ends on the uniformly-selected token]

comment by Noa Nabeshima (noa-nabeshima) · 2023-07-13T17:08:27.788Z · LW(p) · GW(p)

[word] and [word]

can be thought of as "the previous token is ' and'."

It might just be one of a family of linear features or ?? aspect of some other representation ?? corresponding to what the previous token is, to be used for at least induction head.

Maybe the reason you found ' and' first is because ' and' is an especially frequent word. If you train on the normal document distribution, you'll find the most frequent features first.

↑ comment by Logan Riggs (elriggs) · 2023-07-14T04:27:55.160Z · LW(p) · GW(p)

[word] and [word]

can be thought of as "the previous token is ' and'."

I think it's mostly this, but looking at the ablated text, removing the previous word before and does have a significant effect some of the time. I'm less confident on the specifics of why the previous word matter or in what contexts.

Maybe the reason you found ' and' first is because ' and' is an especially frequent word. If you train on the normal document distribution, you'll find the most frequent features first.

This is a database method, so I do believe we'd find the features most frequently present in that dataset, plus the most important for reconstruction. An example of the latter: the highest MCS feature across many layers & model sizes is the "beginning & end of first sentence" feature which appears to line up w/ the emergent outlier dimensions from Tim Dettmer's post here, but I do need to do more work to actually show that.