An Interpretability Illusion for Activation Patching of Arbitrary Subspaces

post by Georg Lange (GeorgLange), Alex Makelov (amakelov), Neel Nanda (neel-nanda-1) · 2023-08-29T01:04:18.688Z


Produced as part of the SERI ML Alignment Theory Scholars Program - Summer 2023 Cohort

We would like to thank Atticus Geiger for his valuable feedback and in-depth discussions throughout this project.

tl;dr:

Activation patching is a common method for finding model components (attention heads, MLP layers, …) relevant to a given task. However, features rarely occupy entire components: instead, we expect them to form non-basis-aligned subspaces of these components.

We show that the obvious generalization of activation patching to subspaces is prone to a kind of interpretability illusion. Specifically, it is possible for a 1-dimensional subspace patch in the IOI task to significantly affect predicted probabilities by activating a normally dormant pathway outside the IOI circuit. At the same time, activation patching the entire MLP layer where this subspace lies has no such effect. We call this an "MLP-In-The-Middle" illusion.

We present a simple mathematical model of how this situation may arise more generally, and give a priori / heuristic arguments for why it may be common in real-world LLMs.

Introduction

The linear representation hypothesis suggests that language models represent concepts as meaningful directions (or subspaces, for non-binary features) in the much larger space of possible activations. A central goal of mechanistic interpretability is to discover these subspaces and map them to interpretable variables, as they form the “units” of model computation.

However, the residual stream activations (and maybe even the neuron activations!) mostly don’t have a privileged basis. This means that many meaningful subspaces won’t be basis-aligned; rather than iterating over possible neurons and sets of neurons, we need to consider arbitrary subspaces of activations. This is a much larger search space! How can we navigate it?

A natural approach to check “how well” a subspace represents a concept is to use a subspace analogue of the activation patching technique. You run the model on input A, but with the activation along the subspace taken from an input B that differs from A only in the value of the concept in question. If the subspace encodes the information used by the model to distinguish B from A, we expect to see a corresponding change in model behavior (compared to just running on A).

Surprisingly, just because a subspace has a causal effect when patched, it doesn't have to be meaningful! In this blog post, we present a mathematical example with a spurious direction (1-dimensional subspace[1]) that looks like the correct direction when patched. We then show empirical evidence in the indirect object identification task, where we find a direction with a causal effect on the model’s performance consistent with patching in a task-relevant binary feature, despite it being a subspace of a component that’s outside the IOI circuit. We show that this empirical example closely corresponds to the mathematical example.

We consider this result an important example of the counterintuitive properties of activation patching, and a note of caution when applying activation patching to arbitrary subspaces, such as when using techniques like Distributed Alignment Search.

An abstract example of the illusion

Background: activation patching

Consider a language model completing the sentence “The Eiffel Tower is in” with “ Paris”. How can we find which component of the model is responsible for knowing that “Paris” is the right answer for this landmark’s location? Activation patching, sometimes also referred to as “interchange intervention”, “resample ablation” or “causal tracing”, is a technique that can be used to find model components that are relevant for such a task.

Activation patching works by running the model on input A (e.g. “The Eiffel Tower is in”), storing the activation of some component c, and then running the model on input B (e.g., “The Colosseum is in”) but with the activation of c taken from A. If we find that patching a certain component makes the model output “Paris” on input B, this suggests that this component is important for the task[2].

Activation patching can be straightforwardly generalized to patching just a subspace of a component instead of the entire component: we patch in the dot products with an orthonormal basis of the subspace, but leave the dot products with the orthogonal complement unchanged. Equivalently, we apply a rotation (whose first $k$ rows form an orthonormal basis of the $k$-dimensional subspace), patch the first $k$ entries in this rotated basis, and then rotate back.
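The two formulations of subspace patching above can be checked against each other numerically. This is a minimal NumPy sketch, assuming activations are plain vectors and the subspace is given by an orthonormal basis; the function names `patch_subspace` and `patch_subspace_rotation` are hypothetical, not from any library.

```python
import numpy as np

def patch_subspace(act_base, act_source, basis):
    """Patch the span of the orthonormal columns of `basis` from act_source into act_base.

    Coordinates along the subspace come from act_source; the orthogonal
    complement of act_base is left untouched.
    """
    coords_base = basis.T @ act_base        # dot products with the basis vectors
    coords_source = basis.T @ act_source
    return act_base + basis @ (coords_source - coords_base)

def patch_subspace_rotation(act_base, act_source, rotation, k):
    """Equivalent form: rotate, overwrite the first k coordinates, rotate back.

    `rotation` is an orthogonal matrix whose first k rows span the subspace.
    """
    rot_base = rotation @ act_base          # new array, act_base is not modified
    rot_source = rotation @ act_source
    rot_base[:k] = rot_source[:k]
    return rotation.T @ rot_base

rng = np.random.default_rng(0)
d, k = 6, 2
q, _ = np.linalg.qr(rng.normal(size=(d, d)))   # random orthogonal matrix
rotation = q.T
basis = rotation[:k].T                          # d x k, orthonormal columns
a, b = rng.normal(size=d), rng.normal(size=d)
print(np.allclose(patch_subspace(a, b, basis),
                  patch_subspace_rotation(a, b, rotation, k)))
```

The projection form avoids materializing the full rotation, which matters when only a low-dimensional subspace is patched in a large activation space.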

Where activation patching can go wrong for subspaces

As it turns out, activation patching over arbitrary subspaces gives us a lot of power! In particular, imagine patching a one-dimensional subspace (represented by a unit vector $v$) from B into A. Let’s decompose the activations from the two prompts along $v$ and its orthogonal complement:

$$\mathrm{act}_A = p_A\, v + \mathrm{act}_A^{\perp}, \qquad \mathrm{act}_B = p_B\, v + \mathrm{act}_B^{\perp},$$

where $p_A = \langle \mathrm{act}_A, v \rangle$ and $p_B = \langle \mathrm{act}_B, v \rangle$ are the coefficients of the activations of A and B along $v$. Then the patched activation will be

$$\mathrm{act}_{\text{patched}} = p_B\, v + \mathrm{act}_A^{\perp},$$

which simplifies to

$$\mathrm{act}_{\text{patched}} = \mathrm{act}_A + (p_B - p_A)\, v.$$

From this formula, we see that patching allows us to effectively add multiples of $v$ to the activation as long as the two examples differ along $v$. In particular, if activations always lie in a subspace not containing $v$, patching can take them “off distribution” by taking them outside this subspace - and this gives us room to do counterintuitive things with the representation.
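The identity $\mathrm{act}_{\text{patched}} = \mathrm{act}_A + (p_B - p_A)\, v$ can be confirmed on random vectors. A minimal NumPy sketch, assuming activations are plain vectors and $v$ is a unit vector (all values here are synthetic):

```python
import numpy as np

rng = np.random.default_rng(1)
d = 5
v = rng.normal(size=d)
v /= np.linalg.norm(v)              # unit vector spanning the patched subspace

act_A = rng.normal(size=d)          # activation we patch into
act_B = rng.normal(size=d)          # activation we patch from
p_A, p_B = act_A @ v, act_B @ v     # coefficients along v

# Patch: take the v-component from B, keep A's orthogonal complement
act_A_perp = act_A - p_A * v
act_patched = p_B * v + act_A_perp

# The same patch written as "add a multiple of v to act_A"
print(np.allclose(act_patched, act_A + (p_B - p_A) * v))
```

If `p_A == p_B`, the added term vanishes and the patch is a no-op, which is why the projection on $v$ must differ between the two inputs.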

Let’s unpack this a bit. In order for the patch to have an effect on model behavior, two properties are necessary:

- projections on $v$ must correlate with the information being patched (e.g., which city the landmark is in): the projected coefficients of the two activations along $v$ must be different - otherwise, if they are equal, the patched activation is identical to the original one! In a statistical sense (when doing the patch over many samples), the activation’s projection on $v$ must correlate with the variable that is different between the inputs B and A for the patch to have a strong effect.

- changing the projection along $v$ (while keeping all else the same) must cause the wanted model behavior (e.g., shift output probability from “Rome” to “Paris”): by adding a multiple of $v$, we should be able to make the model change its behavior in a way consistent with overwriting the information being patched.

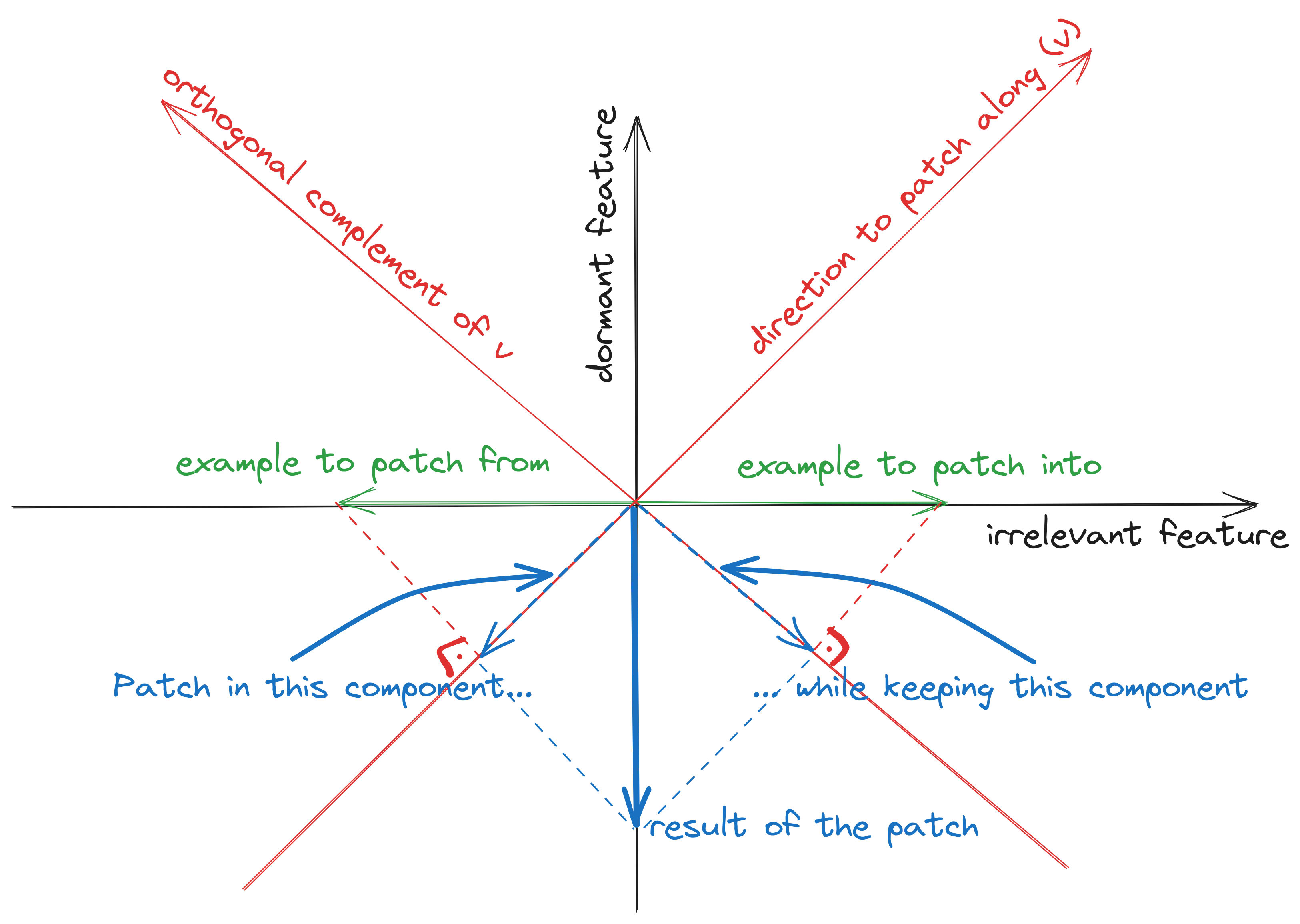

The crux of the problem is that we may get (1) and (2) from two completely different sources, even in a model component that doesn't participate in the computation. Namely, we can form $v$ as $v = v_{\text{irrelevant}} + v_{\text{dormant}}$ (normalized to unit length), where

- projections on $v_{\text{irrelevant}}$ are correlated (property 1), but are unused by the network, hence we term this direction “irrelevant” (as in “irrelevant for the model’s output”)

- projections on $v_{\text{dormant}}$ have a causal effect (property 2), but never vary on the data distribution, hence we term this direction “dormant”.

Choosing $v$ as the sum of the two creates a “bridge” between these two directions, such that patching along it uses the variation in the correlational component to activate the (previously inactive) causal component (see the picture below).

Note that, at first glance, it is not clear if such pairs of (irrelevant, dormant) directions exist at all: maybe every direction in activation space that is causal also correlates with the information being patched, or the other way around! In such worlds, the above example (and the corresponding interpretability illusion) would not exist. However, we show both a priori arguments and an example in the wild suggesting that this situation is common.

Let’s next distill the essence of the idea in a concrete example.

Setup for the example

In the simplest possible scenario, suppose we have a scalar input $x$ that can take values in $\{-1, 1\}$, and we are doing regression where the target is the input itself (i.e. $y = x$). Consider a linear model with three “hidden neurons” of the form $y = w_2^\top w_1 x$[3], where

$$w_1 = (1, 1, 0)^\top, \qquad w_2 = (1, 0, 2)^\top.$$

We have $w_2^\top w_1 = 1$, so the model implements the identity and thus performs the task perfectly. Let’s look at each of the features in the standard basis $e_1, e_2, e_3$ of the hidden activation $h = w_1 x = (x, x, 0)$:

- The feature $e_1$ is the “correct” feature; it represents the value of $x$ that is taken from the input and propagated to the output. This feature is part of the ground-truth algorithm the model uses for the task.

- The feature $e_2$ is also equal to $x$, but is not propagated forward, because the 2nd weight in $w_2$ is 0. This is a correlated but acausal feature: it is correlated with the input, but is completely unused by the network’s computation. We call it an irrelevant neuron, because it has no way to affect the output.

- The feature $e_3$ has no variation over the data distribution (it’s always zero). Nevertheless, this neuron has a direct connection to the output, which makes it a causal but uncorrelated feature. We call it a dormant neuron, as it never activates on the data distribution.

We are interested in patching the "feature" $x$ itself, so that patching from $x'$ into $x$ should make the model output $x'$ instead of $x$.

Geometric intuition

So what could go wrong in this example when we do activation patching? Let’s first consider the case when things go right. If we patch along the subspace spanned by the first feature $e_1$ from input $x'$ into input $x$, we simply replace the value of the first hidden unit: $(x, x, 0)$ becomes $(x', x, 0)$, and then the $x'$ propagates to the output. This is summarized in the table below:

| Patch | Intermediate activation before -> after | Output before -> after |
|---|---|---|
| Into 1 from -1 | (1, 1, 0) -> (-1, 1, 0) | 1 -> -1 |
| Into -1 from 1 | (-1, -1, 0) -> (1, -1, 0) | -1 -> 1 |

However, this gets more interesting when we patch along the direction given by the sum of the 2nd and 3rd neurons, $v = \frac{1}{\sqrt{2}}(e_2 + e_3)$. If $v^\perp$ denotes the orthogonal complement of $v$, then to patch along $v$, we:

- take the value of the $v$ component from the example we patch from, and

- leave the value of the $v^\perp$ component unchanged.

Below is a picture illustrating how the patching works (the first neuron is omitted to fit in 2D):

This is summarized in the table below:

| Patch | Intermediate activation before -> after | Final result before -> after |
|---|---|---|
| Into 1 from -1 | (1, 1, 0) -> (1, 0, -1) | 1 -> -1 |
| Into -1 from 1 | (-1, -1, 0) -> (-1, 0, 1) | -1 -> 1 |

As we see, patching from $x'$ into $x$ along $v$ results in the hidden activation $\left(x, \frac{x + x'}{2}, \frac{x' - x}{2}\right)$! In particular, the behavior of the model when patching along $v$ is identical to the case when we patch along the “true” subspace $e_1$: the output becomes $x + 2 \cdot \frac{x' - x}{2} = x'$. This is counterintuitive for a few reasons:

- On the data distribution for our task, the feature $e_3$ is dormant (it has a constant value), yet patching along the direction $v$ can make it alive!

- Despite the fact that we patch between examples where the irrelevant neuron is active and the dormant neuron is constant, in the patched activation this is flipped: the irrelevant neuron has become constant, while the dormant neuron varies!
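Both tables can be reproduced numerically. A minimal NumPy sketch of the toy model, assuming the weights $w_1 = (1, 1, 0)$ and $w_2 = (1, 0, 2)$ (the helper names `hidden` and `patch_along` are hypothetical):

```python
import numpy as np

# Assumed toy weights: hidden h = w1 * x, output y = w2 . h (so y = x)
w1 = np.array([1.0, 1.0, 0.0])   # neurons: correct (e1), irrelevant (e2), dormant (e3)
w2 = np.array([1.0, 0.0, 2.0])   # readout ignores e2 and reads the dormant neuron e3

def hidden(x):
    """Hidden activation of the toy model for scalar input x."""
    return w1 * x

def patch_along(h_base, h_source, u):
    """Patch the 1-D subspace spanned by u from h_source into h_base."""
    u = u / np.linalg.norm(u)
    return h_base + (h_source @ u - h_base @ u) * u

e1 = np.array([1.0, 0.0, 0.0])
v = np.array([0.0, 1.0, 1.0])    # e2 + e3, normalized inside patch_along

for x, x_src in [(1.0, -1.0), (-1.0, 1.0)]:
    for name, u in [("e1", e1), ("v", v)]:
        h_patched = patch_along(hidden(x), hidden(x_src), u)
        # np.round(...) + 0.0 turns tiny float noise and -0.0 into clean zeros
        print(f"into {x:+.0f} from {x_src:+.0f} along {name}: "
              f"h = {np.round(h_patched, 6) + 0.0}, y = {w2 @ h_patched:+.2f}")
```

Both patches flip the output, but only the $e_1$ patch keeps the hidden activation on the data distribution; the $v$ patch moves the dormant neuron away from zero.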

We remark that this construction extends to subspaces of arbitrary dimension by e.g. adding more irrelevant directions.

We term this an “illusion”, because the patch succeeds by turning on a “dormant” neuron, without ever touching the correct circuit for the task. Note that calling this an illusion may be considered a judgment call, and this could be viewed as patching working as intended; see the appendix for more discussion of the nuances here.

An example in the wild: MLP-In-The-Middle Illusion

Finding dormant / irrelevant directions in MLP activations

Why do we expect to find dormant and irrelevant directions in the wild? While they might seem like a waste of model capacity, there are a priori / heuristic arguments for their existence in MLP layers specifically:

- dormant directions - directions that have a meaningful causal effect on model behavior and yet don’t activate on the data - are expected to exist in the hidden activations of MLP layers:

  - Layers before and after the MLP layer are likely doing significant communication via the residual stream (a.k.a. the “information bottleneck” of the transformer). In particular, we expect any given MLP layer to not participate in many of these computations. Indeed, prior work often finds that such communication skips many intermediate layers, as any given circuit relies on only a few layers of the model. The communicated information corresponds to directions in the residual stream.

  - The $W_{\text{out}}$ matrix of an MLP layer - typically a $d_{\text{mlp}} \times d_{\text{model}}$ matrix - is empirically an (effectively) full-rank matrix, which means that every direction in the residual stream has a preimage in the neuron activations. Thus, the MLP layer has the “dormant potential” to write to a meaningful direction in the residual stream, even if it is not part of the corresponding circuit’s computation.

- irrelevant directions - directions that correlate with a property of the data, but don’t affect model output - are also expected to exist in MLPs:

  - The $W_{\text{in}}$ matrix of an MLP layer - a $d_{\text{model}} \times d_{\text{mlp}}$ matrix - is also an effectively full-rank matrix. This suggests that any concept linearly represented in the residual stream input to the MLP (even if it has no causal role in the model - which empirically happens quite often) is also linearly recoverable from the hidden neuron pre-activations.

  - The subsequent non-linearity may destroy some information, but since there are four times as many dimensions, we heuristically expect most of it to still be recoverable. We don’t offer a proof of this, but we tested it empirically by trying to recover random directions in the residual stream from MLP activations, and found that this is possible significantly above chance for all layers and arbitrary directions.
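The linear-algebra part of the dormant-direction argument can be illustrated with a random stand-in for $W_{\text{out}}$. A minimal NumPy sketch, assuming a Gaussian random matrix (not trained weights), toy sizes with the usual 4x expansion, and the layout where neuron activations `h` map to the residual stream as `h @ W_out`:

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_mlp = 16, 64                       # toy sizes (assumption)
W_out = rng.normal(size=(d_mlp, d_model)) / np.sqrt(d_mlp)   # neurons -> residual

print("rank:", np.linalg.matrix_rank(W_out))  # full rank, i.e. d_model

# Any residual-stream direction has a preimage in neuron space ("dormant potential").
# lstsq returns the minimum-norm solution of W_out.T @ h = target.
target = rng.normal(size=d_model)
neuron_vec, *_ = np.linalg.lstsq(W_out.T, target, rcond=None)
print(np.allclose(neuron_vec @ W_out, target))

# The map h -> h @ W_out has a null space of dimension d_mlp - d_model:
# neuron directions that never reach the residual stream.
_, _, vt = np.linalg.svd(W_out.T)             # W_out.T is d_model x d_mlp
null_basis = vt[d_model:]                      # rows spanning the null space
print(null_basis.shape[0], np.allclose(null_basis @ W_out, 0))
```

A random matrix is full rank with probability one, so this only shows what full rank buys; whether trained MLP weights are effectively full rank is the empirical claim made in the text.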

In summary, whenever some circuit involves two layers communicating some feature via the residual stream (i.e. using some skip connections), we predict that an MLP layer in the middle has a good chance of having a causal effect as in our mathematical example[4]. Next, we discuss a real-world example where we found the elements of our abstract illusion.

Real-world case study: the IOI task

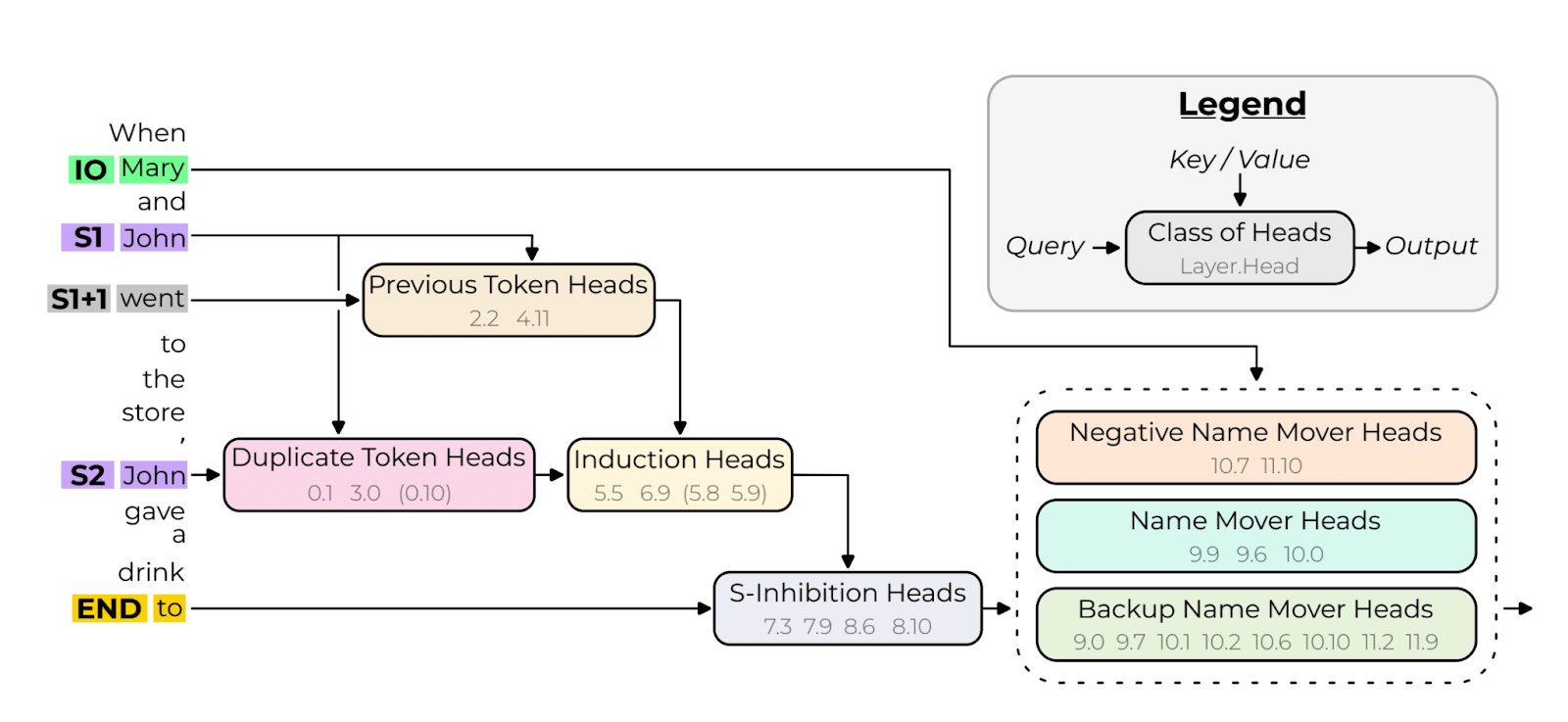

In the indirect-object-identification (IOI) task, the model is prompted with sentences like "Lisa and Max went to the park and Max gave a flower to”, with “ Lisa” being the correct completion. Prior work has shown that there is a group of 4 heads in layers 7 and 8, the S-Inhibition heads, which compute information about both the position of the repeated name (first in the sentence, as in “Max and Lisa… Max gave…” vs second in the sentence, as in “Lisa and Max… Max gave…”) and the identity of the repeated name in the sentence. After that, another class of heads (the name mover heads) in layers 9, 10 and 11 use this as a signal to attend to the name that only appears once in the sentence, so that they can copy it to the final output.

Intuitively, the position signal (1st vs 2nd name in the sentence) seems like it could plausibly be represented as a binary feature (see also work on synthetic positional embeddings) occupying a one-dimensional subspace. How can we find this subspace?

Distributed alignment search

To find a subspace corresponding to the position information as it is used by the model, we used Distributed Alignment Search (DAS), a technique to find task-related subspaces using gradient descent. Specifically:

- We ran DAS to optimize for a 1-dimensional subspace (a direction) of the residual stream after layer 8 at the final (END) token. This is one of two residual stream locations after all S-Inhibition heads and before all name mover heads.

- The objective of the optimization is to find a direction whose patching from an ABB pattern prompt (repeated name is 2nd in the sentence) into its corresponding BAB prompt (repeated name is 1st in the sentence) flips the prediction of the model, while patching from a prompt to itself keeps the prediction the same.

The direction found is able to flip model predictions about 80% of the time when patching between prompts that differ only in position information (on a test set using other templates and names). But how does it do that? We extensively validated that this subspace does the “right” thing:

- We computed another candidate direction with the same role by taking the difference between the means of the prompts where the repeated name comes first vs prompts where it comes second. We found that this direction was quite similar (~0.85 cosine similarity) to the DAS direction (though it had a smaller causal effect when patched).

- We ran path patching to check how the intervention achieves its causal effect, and found that it does this largely via the name-mover and backup-name mover heads, with attribution scores close to those observed without the intervention.
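The mean-difference baseline from the first check above is simple to compute. A minimal NumPy sketch on synthetic data, assuming a binary feature that shifts activations along a hidden direction; the data and helper names are hypothetical stand-ins for the real residual-stream activations:

```python
import numpy as np

def mean_difference_direction(acts_first, acts_second):
    """Unit vector from the mean of one group of activations to the mean of the other."""
    diff = acts_first.mean(axis=0) - acts_second.mean(axis=0)
    return diff / np.linalg.norm(diff)

def cosine_similarity(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Synthetic stand-in: a binary "position" feature shifts activations along true_dir
rng = np.random.default_rng(0)
d, n = 64, 500
true_dir = rng.normal(size=d)
true_dir /= np.linalg.norm(true_dir)
acts_first = rng.normal(size=(n, d)) + 2.0 * true_dir    # repeated name comes 1st
acts_second = rng.normal(size=(n, d)) - 2.0 * true_dir   # repeated name comes 2nd

mean_dir = mean_difference_direction(acts_first, acts_second)
print(round(cosine_similarity(mean_dir, true_dir), 3))
```

In the real setting, the second argument to `cosine_similarity` would be the DAS direction rather than a known ground truth.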

These sanity checks and the single-dimensional nature of the subspace make us fairly confident that this is indeed a causally meaningful subspace of model activations representing the position information as used by the model. However, is this the only subspace whose patching has a causal effect like this?

Finding a position direction in MLP8

We can try to find another direction by running DAS on any other model component. However, a particularly interesting one is MLP8: it is between the S-Inhibition and name mover heads - so it can potentially serve as a “middleman” for the signal transmitted between them - but isn’t implicated in the IOI circuit. We run the optimization described above, but this time on the hidden neuron activations (after the non-linearity) of MLP8 at the END token.

We find that while patching the full activations between examples with opposite position information (ABB -> BAB and vice versa) increases the logit difference between the correct and incorrect name by ~0.4 (so the full patch, if anything, helps the task), patching just the direction that DAS finds decreases it by ~1.3, a ~3x stronger effect in the opposite direction. For some intuition, this is about 30-40% of the logit difference lost when patching the entire residual stream after layer 8 at the END token, which brings model performance down to ~30% (from ~99%). This is a nontrivial contribution, since the true circuit itself is distributed across multiple heads - so this MLP would appear as a significant circuit component.

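How does patching the direction achieve this causal effect? Let $v_{\text{MLP8}}$ be the subspace direction that we found in MLP8, and let $v_{\text{resid}}$ be the “ground truth” position direction in the residual stream we validated earlier. We find that mapping $v_{\text{MLP8}}$ through $W_{\text{out}}$ yields a direction substantially aligned with $v_{\text{resid}}$: the cosine similarity is[5] 0.52, far above what we would expect between unrelated directions in the high-dimensional residual stream. This suggests that the downstream causal mechanism is similar to that of the “ground truth” direction we found.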

Next, we will show how this direction maps onto the mathematical example of the illusion, by showing it can be (approximately) seen as the sum of an irrelevant and a dormant direction.

Decomposing into an irrelevant and dormant direction

How could we decompose our subspace to match the two components in our mathematical example? As explained in our heuristic arguments, $W_{\text{out}}$ always has a high-dimensional null space (of dimension at least $d_{\text{mlp}} - d_{\text{model}}$), and all vectors in it have no causal effect on the output. Thus, it is natural to use the orthogonal decomposition

$$v_{\text{MLP8}} = v_{\text{null}} + v_{\text{rest}},$$

where $v_{\text{null}}$ is the component of $v_{\text{MLP8}}$ in the nullspace of $W_{\text{out}}$ and $v_{\text{rest}}$ is the remaining part (the component in the orthogonal complement of the nullspace). We hypothesize that $v_{\text{null}}$ is the irrelevant component and $v_{\text{rest}}$ is the dormant component. We find that $v_{\text{null}}$ and $v_{\text{rest}}$ have roughly equal norms;

in other words, the direction is about equal parts in the nullspace and its orthogonal complement.
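The null-space decomposition is a pair of orthogonal projections. A minimal NumPy sketch, assuming a random stand-in for $W_{\text{out}}$ (layout `h @ W_out`, shape `(d_mlp, d_model)`) and a random unit vector in place of the DAS direction; `nullspace_decomposition` is a hypothetical helper name:

```python
import numpy as np

def nullspace_decomposition(v, W_out):
    """Split neuron-space vector v into v_null (mapped to zero by h -> h @ W_out)
    and v_rest (its orthogonal complement, carrying all of v's effect on the output)."""
    q, _ = np.linalg.qr(W_out)    # orthonormal basis of W_out's column space, d_mlp x d_model
    v_rest = q @ (q.T @ v)        # projection onto the complement of the null space
    v_null = v - v_rest
    return v_null, v_rest

rng = np.random.default_rng(0)
d_model, d_mlp = 16, 64
W_out = rng.normal(size=(d_mlp, d_model))
v = rng.normal(size=d_mlp)
v /= np.linalg.norm(v)

v_null, v_rest = nullspace_decomposition(v, W_out)
print(np.allclose(v_null @ W_out, 0), np.allclose(v_rest @ W_out, v @ W_out))
print(f"||v_null||^2 = {v_null @ v_null:.2f}, ||v_rest||^2 = {v_rest @ v_rest:.2f}")
```

Because the two parts are orthogonal, their squared norms sum to 1 for a unit $v$. For a random direction, the expected null-space share of the squared norm is $(d_{\text{mlp}} - d_{\text{model}})/d_{\text{mlp}}$ (0.75 with a 4x expansion).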

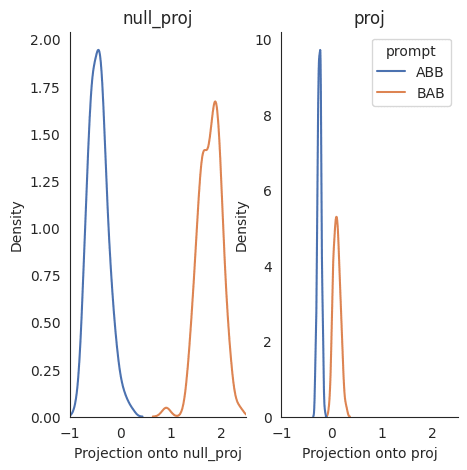

Next, we show that the nullspace component $v_{\text{null}}$ is much more correlated with the position information than the remaining component $v_{\text{rest}}$. We sample a set of prompts which are of the form ABB or ABA, take their MLP8 activations and project those onto $v_{\text{null}}$ and $v_{\text{rest}}$:

We see that both $v_{\text{null}}$ and $v_{\text{rest}}$ are correlated with the position information. However, the difference between ABB and ABA projections onto $v_{\text{null}}$ is much bigger than onto $v_{\text{rest}}$. Thus, we seem to have found a decomposition that matches the mathematical construction of the illusion quite well.

Both components are necessary

The $v_{\text{rest}}$ component is logically necessary, because otherwise the patch can have no causal effect ($v_{\text{null}}$ alone cannot change the output of MLP8). But is $v_{\text{null}}$ necessary at all for the direction to have a causal effect? To investigate this, we patched along $v_{\text{rest}}$, the part of the DAS subspace that does not point into the nullspace of $W_{\text{out}}$. The result is that the effect (as measured in logit difference decrease) shrinks by a factor of ~3.3.

Thus, both components are necessary for the patch to have its causal effect. We also note that patching the 2-dimensional subspace spanned by $v_{\text{null}}$ and $v_{\text{rest}}$ also loses the effect of the patch. This makes sense given the discussion of our mathematical example: by patching both components, there is no interaction between them, and consequently the causal effect is limited to exploiting the (relatively weak) correlation in the $v_{\text{rest}}$ direction.
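The same pattern appears in the toy model from the abstract example, where $e_2$ plays the irrelevant role and $e_3$ the dormant one. This is only an analogy (the toy split is into standard-basis neurons, not the null-space decomposition used for MLP8). A minimal NumPy sketch, assuming the toy weights $w_1 = (1, 1, 0)$ and $w_2 = (1, 0, 2)$:

```python
import numpy as np

# Assumed toy model: y = w2 . (w1 * x)
w1 = np.array([1.0, 1.0, 0.0])
w2 = np.array([1.0, 0.0, 2.0])

def patch_subspace(h_base, h_source, basis):
    """Patch the span of the orthonormal columns of `basis` from h_source into h_base."""
    return h_base + basis @ (basis.T @ (h_source - h_base))

e2, e3 = np.eye(3)[1], np.eye(3)[2]
v = (e2 + e3) / np.sqrt(2)
subspaces = {
    "irrelevant only (e2)": e2[:, None],
    "dormant only (e3)": e3[:, None],
    "2-D span{e2, e3}": np.stack([e2, e3], axis=1),
    "sum direction v": v[:, None],
}

x, x_src = 1.0, -1.0
for name, basis in subspaces.items():
    y = w2 @ patch_subspace(w1 * x, w1 * x_src, basis)
    print(f"{name:22s} -> y = {y:+.2f}")
```

Only the 1-D sum direction flips the output: patching either component alone, or both as a 2-D subspace, leaves the dormant neuron at zero.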

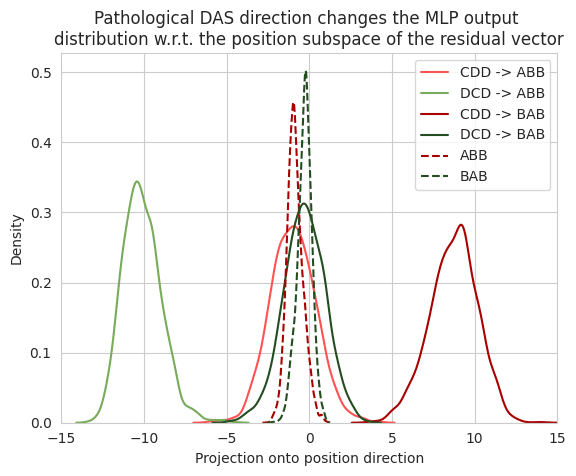

Patching along the subspace changes the output distribution of MLP8

Next, we show that patching along the MLP8 direction activates a “dormant” pathway in the model, so that the output of MLP8 in the residual stream contributes significantly to activations along the “ground truth” subspace in the residual stream, where previously there was no such contribution.

Specifically, we patch between prompts with unrelated names for all combinations of position (1st vs 2nd) in the two prompts. For example, DCD -> ABB means patching from a sentence (DCD) where the repeated name comes first into a sentence (ABB) where it comes second and the two names A, B are different from C, D. For each combination, we project the output of MLP8 onto the “ground truth” residual stream direction $v_{\text{resid}}$. The dashed distributions are the corresponding projections without any intervention grouped by position:

The clean (unpatched) projections are relatively close to zero and don't differ much between different name patterns, suggesting that MLP8 doesn’t write much to the position subspace (as we also confirmed with activation patching the entire MLP8). The same is true when we patch patterns containing the same positional information (e.g. CDD -> ABB or DCD -> BAB), which makes sense given the formula for patching: in this case, the projections of the two activations onto the patched direction are close to each other. This is consistent with the desired causal effect, since in this case the intervention should ideally not change anything.

However, when we patch in the opposite positional information, MLP8 suddenly writes a large value to the position subspace. Thus, patching activates a previously dormant direction, suggesting that the subspace isn’t faithful to the model’s actual computation on the task.

DAS subspaces exist in MLPs with random weights

Finally, how certain are we that MLP8 doesn’t actually matter for the IOI task? While we find the IOI paper analysis convincing, to make our results more robust to the possibility that it does matter, we also designed a further experiment.

Specifically, we randomly sampled MLP weights and biases such that the norm of the output activations matches that of MLP8. As random MLPs might lead to nonsensical text generation, we don’t replace MLP8 with the random MLP, but rather train a subspace using DAS on the random MLP’s activations and add the difference between the patched and unpatched output of the random MLP to the real output of MLP8. This setup finds a subspace that reduces logit difference even more than the $v_{\text{MLP8}}$ direction.

This suggests that the existence of the subspace is less about what information MLP8 contains, and more about where MLP8 is in the network.

Discussion and takeaways

What conclusions can we draw from our findings? First, let's take a step back. The core assumptions of mechanistic interpretability are that

- models implement localized algorithms to solve reasonably bounded tasks

- there is value in reverse engineering and understanding the building blocks of these algorithms - as opposed to just their end-to-end behavior.

But what are the right building blocks, and how do we find them? Many existing mechanistic analyses focus on components (attention heads, MLP layers) as building blocks (IOI, the docstring circuit, ...), and make heavy use of activation patching between carefully chosen examples to localize and verify the causal relevance of a component.

However, each component represents multiple features (and sometimes even multiple task-relevant features, like name and position information in the IOI task). To precisely localize a feature of interest, we need to zoom in on subspaces of these components - and as we discussed, these subspaces need not be basis-aligned.

To do this, a natural idea is to make activation patching more surgical, and carry out the same kind of analysis on arbitrary subspaces of components. A natural intuition one might have here is: if patching an entire component has no causal effect, then so will patching any subspace of it.

As we saw in this blog post, this intuition is wrong[6]! In particular, we took a circuit heavily analyzed on the component level, and then found a direction in an MLP layer whose patching has a strong causal effect on model behavior, yet the MLP is outside the circuit.

If we only consider the end-to-end effect of this intervention, it looks perfectly reasonable: we do the patch, and the model behaves as if the intended feature was patched. However, it raises an alarming question: should we trust the component-level analysis (which tells us that the MLP layer doesn’t matter much) or the subspace-level analysis (which tells us that it somewhat matters)?

We suggest that we should answer this by performing a more thorough mechanistic analysis of model internals, as opposed to an end-to-end evaluation. In particular,

- We showed that patching the direction activates a previously “dormant” pathway outside the component-level circuit through which the causal effect is achieved.

- In fact, we showed that we can find such a direction in MLP activations without even looking at the MLP weights, suggesting that its existence is a result of where the MLP layer is in the model, rather than what information it computes.

- We independently found another direction in the residual stream of the IOI circuit where patching has an analogous causal effect. We validated in multiple ways (such as path patching and an alternative construction) that this direction achieves its causal effect through pathways consistent with the IOI circuit.

We believe that these results suggest that the within-circuit direction is a more faithful explanation of model behavior than the direction found in the MLP layer.

Key Takeaways/Best Practices

More broadly, what are we to take away from this phenomenon?

- The concept of “building block” is surprisingly nuanced: as we saw, patching entire components vs patching subspaces can give quite different results.

- Nevertheless, we still believe that activation patching is a useful tool for component-level analysis.

  - In particular, we still believe that individual model components (attention heads, MLP layers) are meaningful units of analysis, as many different strands of research suggest they specialize in different tasks, both across depth in the model (early vs late layers) and across component types (heads vs MLPs).

- Searching for meaningful subspaces (e.g. via DAS) is promising, but requires extra validation:

  - If there is a meaningful subspace in the component where we run the search, we believe there’s a good chance a method like DAS will find it.

  - On the other hand, if we search where no such subspace exists, methods like DAS may fabricate one!

  - This is especially relevant when optimizing over an individual model component as opposed to the residual stream. The reason is that such components may not be task-relevant, yet may read in a lot of task-correlated information, while also being able to write a direction with a causal effect on the task into the residual stream.

- Activation patching alone is insufficient to validate a meaningful subspace:

  - When patching along subspaces of activations, additional experiments are necessary to distinguish pathological cases from ones consistent with the algorithm implemented by the model.

  - In particular, simply evaluating end-to-end causal effects is not enough.

Appendix

The importance of correct model units

There is a subtlety in our abstract example. Suppose we reparametrized our hidden layer so that instead of the standard basis we are using a “rotated” basis where one of the directions is $v$, the other direction is orthogonal to it and to the vector $w_2$ (so it will be irrelevant), and the last direction is the one orthogonal to these two. You can work out that this basis is given by the (unnormalized) directions

$$v = (0, 1, 1), \qquad (2, 1, -1), \qquad (1, -1, 1),$$

and in this case,

- $v$ is a correlated and causal direction

- $(2, 1, -1)$ is a correlated but acausal direction

- $(1, -1, 1)$ is an uncorrelated but causal direction!

So from this perspective, it looks like $v$ is the correct direction used by the model, and $e_1 = \frac{1}{3}\left[(2, 1, -1) + (1, -1, 1)\right]$ is just a weird add-on, built from an acausal and an uncorrelated direction! This raises an important question: on what grounds can we claim that the direction $e_1$ is “more meaningful” than $v$?
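The properties of the rotated basis can be verified directly. A minimal NumPy sketch, assuming the toy weights $w_1 = (1, 1, 0)$ and $w_2 = (1, 0, 2)$; a direction counts as "correlated" if the data's projection on it varies with $x$ (nonzero dot product with $w_1$) and "causal" if moving along it changes $y$ (nonzero dot product with $w_2$). The dictionary keys are illustrative labels:

```python
import numpy as np

w1 = np.array([1.0, 1.0, 0.0])   # hidden activation on the data: h = w1 * x
w2 = np.array([1.0, 0.0, 2.0])   # readout: y = w2 . h (assumed toy weights)

# Rotated (unnormalized) basis
rotated = {
    "v": np.array([0.0, 1.0, 1.0]),
    "correlated_acausal": np.array([2.0, 1.0, -1.0]),
    "uncorrelated_causal": np.array([1.0, -1.0, 1.0]),
}
basis = np.stack(list(rotated.values()))
# Off-diagonal Gram entries are zero, so the directions are pairwise orthogonal
print("pairwise orthogonal:", np.allclose(basis @ basis.T, np.diag([2.0, 6.0, 3.0])))

for name, b in rotated.items():
    correlated = not np.isclose(b @ w1, 0.0)   # projection of h = w1 * x varies with x
    causal = not np.isclose(b @ w2, 0.0)       # moving h along b changes y
    print(f"{name}: correlated={correlated}, causal={causal}")

# In this basis, e1 is a sum of the acausal and the uncorrelated direction
e1 = np.array([1.0, 0.0, 0.0])
print(np.allclose((rotated["correlated_acausal"] + rotated["uncorrelated_causal"]) / 3, e1))
```

This mirrors the original illusion with the roles swapped: in the rotated basis, $e_1$ is the "bridge" between an irrelevant and a dormant direction.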

The answer lies beyond the scope of the abstract example, and boils down to prior beliefs about which model units should be considered “meaningful”. For example, when we realize this abstract example in the wild, the role of $e_1$ is (loosely speaking) played by a direction in the summed outputs of the S-Inhibition heads, whereas $e_2$, $e_3$ map to directions in the neuron activations of MLP8. We have prior reasons to believe that the S-Inhibition heads are crucial for the IOI circuit, whereas MLP8 is not part of the circuit. This gives us grounds to claim that $e_1$ is a preferable solution to $v$.

More generally, multiple strands of evidence suggest that attention heads and MLP layers do different kinds of computations in models. This gives us some prior reason to analyze individual MLPs and attention heads as separate units. Conversely, if we could arbitrarily group model components into single “units”, then we could group the MLP8 layer with the S-Inhibition heads, and this would dramatically affect some of the analyses of the illusion. For example, patching along the MLP8 direction would no longer take the output of this “Frankensteinian” component off-distribution - one of our main reasons to consider this subspace “weird”!

This is a very concrete example of how our implicit assumptions about the units of analysis may affect the conclusions of interpretability experiments. We believe it’s always better to keep these assumptions explicitly in mind when doing interpretability work.

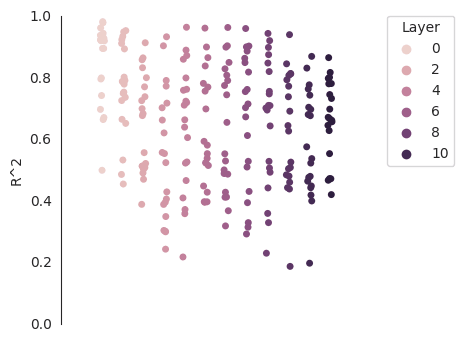

MLPs can recover arbitrary directions in the residual stream

For the interpretability illusion to work, there has to be a direction in the MLP that correlates with the task. A priori, we expect the input weight of an MLP layer to be a (close to) full rank transform, which implies that “most of the information” in the residual stream will also be present in the neuron activations. In particular, it seems plausible (though certainly not logically necessary) that examples linearly separable in the residual stream remain linearly separable in the neuron activations.

Is this true in practice? To test this, we

- run the model (gpt2-small) on random samples from its data distribution (we used OpenWebText-10k) and extract 2000 activations of an MLP layer after the nonlinearity.

- train a linear regression with -regularization to recover the dot product between the residual stream immediately before the MLP layer of interest and a randomly chosen direction.

- repeat this experiment with different random vectors and for each layer in gpt2-small.

We observe that all regressions are better than chance and explain a significant amount of variance on the held-out test set (R² = 0.71 ± 0.17, MSE = 0.31 ± 0.18, p < 0.005).

Results are shown in the graph below (every marker corresponds to one regression model using a different random direction):

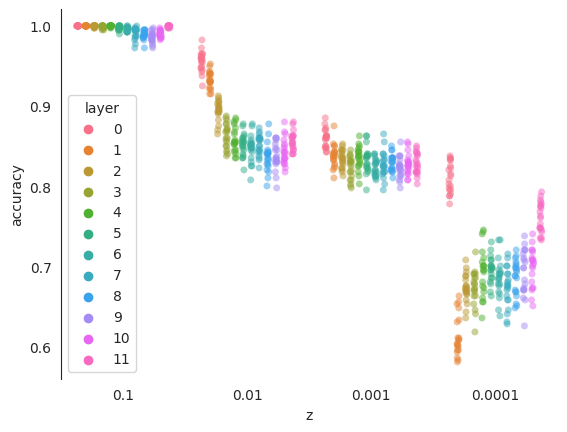
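A minimal synthetic version of this regression experiment is sketched below. The following are assumptions for illustration, not the setup used for the plot: isotropic Gaussian stand-ins for residual vectors, random unit-norm input weights, the tanh form of GELU used in GPT-2, a closed-form ridge (L2) penalty, and an 80/20 train/test split. All function names are hypothetical.

```python
import numpy as np

def gelu(z):
    # tanh approximation of GELU, the variant used in GPT-2's MLP layers
    return 0.5 * z * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (z + 0.044715 * z**3)))

def ridge_fit(X, y, lam):
    # closed-form ridge regression: w = (X^T X + lam * I)^{-1} X^T y
    return np.linalg.solve(X.T @ X + lam * np.eye(X.shape[1]), X.T @ y)

def probe_random_direction(d_model=64, d_mlp=256, n=2000, n_train=1600, lam=1.0, seed=0):
    rng = np.random.default_rng(seed)
    resid = rng.standard_normal((n, d_model))            # stand-in residual-stream vectors
    W_in = rng.standard_normal((d_mlp, d_model))
    W_in /= np.linalg.norm(W_in, axis=1, keepdims=True)  # stand-in MLP input weights
    acts = gelu(resid @ W_in.T)                          # neuron activations after the nonlinearity
    u = rng.standard_normal(d_model)
    u /= np.linalg.norm(u)                               # random unit direction
    target = resid @ u                                   # dot product to be recovered
    # split, then center features and target using training statistics only
    X_tr, X_te = acts[:n_train], acts[n_train:]
    t_tr, t_te = target[:n_train], target[n_train:]
    mu_x, mu_t = X_tr.mean(axis=0), t_tr.mean()
    w = ridge_fit(X_tr - mu_x, t_tr - mu_t, lam)
    pred = (X_te - mu_x) @ w + mu_t
    # coefficient of determination (R^2) on the held-out split
    return 1.0 - np.sum((t_te - pred) ** 2) / np.sum((t_te - t_te.mean()) ** 2)

r2_scores = [probe_random_direction(seed=s) for s in range(5)]
print(np.round(r2_scores, 3))
```

Because these inputs are synthetic, the printed R² values illustrate the mechanism only and should not be compared with the numbers reported above.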

The S-position subspace in the IOI task is a binary feature, so we are also interested in whether binary information in general is linearly recoverable from the MLP activations. To test this, we sample activations from the model run on randomly sampled prompts. This time, however, we add a multiple of a random direction to the residual stream x, or subtract it, and compute the MLP activations from the resulting vector x' = x + y · z · v, where:

y: either -1 or 1

z: a scaling factor

v: a randomly-sampled direction with unit norm

For each classifier, we randomly sample a direction v, which is either added to or subtracted from the residual stream depending on y; the classifier is trained to predict y. We rescale v to match the average norm of a residual vector and then scale it by a small scalar z.

A logistic classifier (C=10000) is then trained on 1600 samples. Again, we repeat this experiment for different v and z, and for each layer. The classifier works quite well across layers even for very small values of z, although accuracy drops for z = 0.0001.

| z | mean accuracy |
|---|---|
| 0.0001 | 0.69 |
| 0.001 | 0.83 |
| 0.01 | 0.87 |
| 0.1 | 0.996 |

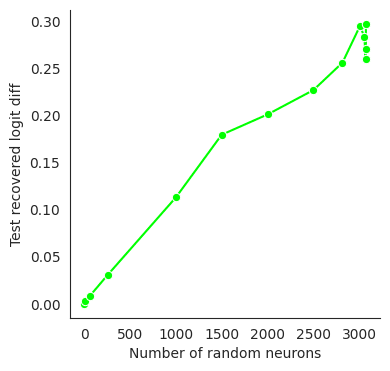
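The classification experiment can be sketched synthetically as follows. This assumes isotropic Gaussian stand-ins for the residual stream and random unit-norm input weights rather than gpt2-small. The logistic classifier is fit by plain gradient descent on mean log-loss plus ||w||²/(2Cn). That objective is our way of mirroring a classifier parameterized by C=10000 in the scikit-learn convention, not necessarily the exact solver used. All function names are hypothetical. Real residual streams are far from isotropic, so the accuracies this prints should not be expected to match the table.

```python
import numpy as np

def gelu(z):
    # tanh approximation of GELU, as used in GPT-2's MLP layers
    return 0.5 * z * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (z + 0.044715 * z**3)))

def sigmoid(t):
    # numerically stable logistic function
    return 0.5 * (1.0 + np.tanh(0.5 * t))

def fit_logistic(X, t, C=10000.0, steps=500):
    # gradient descent on mean log-loss + ||w||^2 / (2*C*n); t holds 0/1 labels
    n = X.shape[0]
    step = 4.0 * n / np.linalg.norm(X, 2) ** 2  # 1 / smoothness constant of the loss
    w, b = np.zeros(X.shape[1]), 0.0
    for _ in range(steps):
        g = sigmoid(X @ w + b) - t
        w -= step * (X.T @ g / n + w / (C * n))
        b -= step * g.mean()
    return w, b

def binary_probe_accuracy(z, d_model=64, d_mlp=256, n=2000, n_train=1600, seed=0):
    rng = np.random.default_rng(seed)
    resid = rng.standard_normal((n, d_model))            # stand-in residual stream
    W_in = rng.standard_normal((d_mlp, d_model))
    W_in /= np.linalg.norm(W_in, axis=1, keepdims=True)  # stand-in MLP input weights
    v = rng.standard_normal(d_model)
    v *= np.linalg.norm(resid, axis=1).mean() / np.linalg.norm(v)  # match average residual norm
    y = rng.choice([-1.0, 1.0], size=n)                  # +1: add v, -1: subtract v
    acts = gelu((resid + np.outer(y * z, v)) @ W_in.T)   # activations of x' = x + y*z*v
    mu, sd = acts[:n_train].mean(axis=0), acts[:n_train].std(axis=0) + 1e-8
    X = (acts - mu) / sd                                 # standardize with training statistics
    w, b = fit_logistic(X[:n_train], (y[:n_train] + 1.0) / 2.0)
    pred = np.where(X[n_train:] @ w + b > 0, 1.0, -1.0)
    return float(np.mean(pred == y[n_train:]))

accuracies = {z: binary_probe_accuracy(z) for z in (0.01, 0.1, 1.0)}
print(accuracies)
```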

DAS performance changes proportionally to the number of MLP neurons it is trained on

Here, we want to investigate how much the ability to find a pathological subspace depends on the overparameterization of the MLP layer. We train DAS on MLP8 again, but allow it to modify only a limited number of neurons.
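To make "allowing it to modify only a limited number of neurons" concrete, the sketch below shows the patch such a restricted subspace performs. A 1-dimensional subspace patch along a unit direction v replaces the component of the original activation along v with the source run's component and leaves everything orthogonal to v untouched. In this sketch, restricting to k neurons means constraining v to be zero outside those neurons. The function names, k, and the random activations are hypothetical. DAS would learn v by gradient descent, whereas here v is simply random.

```python
import numpy as np

def restricted_direction(weights, neuron_idx, d_mlp):
    # unit direction in neuron space that is zero outside the allowed neurons
    v = np.zeros(d_mlp)
    v[neuron_idx] = weights
    return v / np.linalg.norm(v)

def patch_along_direction(h_orig, h_src, v):
    # 1-D subspace patch: swap the component along unit vector v for the
    # source run's component; the orthogonal complement of v is untouched
    coeff = (h_src - h_orig) @ v
    return h_orig + np.expand_dims(coeff, -1) * v

rng = np.random.default_rng(0)
d_mlp, k = 3072, 100                   # gpt2-small MLP width; k allowed neurons (hypothetical)
neuron_idx = rng.choice(d_mlp, size=k, replace=False)
v = restricted_direction(rng.standard_normal(k), neuron_idx, d_mlp)
h_orig = rng.standard_normal(d_mlp)    # stand-in MLP8 activations on the original prompt
h_src = rng.standard_normal(d_mlp)     # stand-in activations on the source prompt
h_patched = patch_along_direction(h_orig, h_src, v)
```

Because v vanishes outside the chosen neurons, the patch can only change those k neurons, which is the sense in which a smaller k gives the optimizer less room to find a pathological direction.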

We observe a linear relationship between the number of neurons used for training and the performance of DAS (the y-axis measures the fraction of logit difference lost compared to patching the entire residual stream after layer 8 at the END token):

- The mathematical example generalizes straightforwardly to higher-dimensional subspaces.

- Care needs to be taken when choosing the two prompts, so that as much of the sentence structure as possible is shared while the intended prediction differs. Our example has the nice property of controlling for all other features present in the original example (e.g. that this is a sentence about the location of a landmark, that it is in English, etc.). Imagine patching into “The capital of Italy is” instead: there’s a subtle difference in what such a patch would show!

- The coefficient 2 in is there for technical reasons.

- We also show in the appendix that this effect benefits substantially from the hidden layer having more dimensions than the residual stream.

- As a baseline, the cosine similarity between two random vectors in this space is ~0.036.

- Another way this intuition could fail is if, for example, some layer contains two attention heads, one with a positive contribution to the logit difference and the other with a matching negative contribution. In other words, the heads are perfectly anticorrelated. If we patch the entire layer, the sum of the two heads will remain zero. However, if we patch only one of the heads, we may suddenly observe a downstream effect. This scenario seems intuitively less likely than our mathematical example, because it hinges on the heads (nearly) perfectly canceling each other.

  That said, we also note that this example is isomorphic to our mathematical example under a change of basis, with the important difference that here the components of the pathological subspace live in different model units, which gives us more reason to take this scenario seriously.

- In practice, model computation tends to be “sparse”, and most features are written to by only a handful of layers. So an MLP layer often “could” write to some feature present in the residual stream, but never does.
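The footnote's scenario of two perfectly anticorrelated attention heads can be checked with a toy calculation. This is a sketch under stated assumptions: a hypothetical layer whose two heads write exactly opposite multiples of one direction u, with the logit difference read off as the dot product with u. All names and sizes are illustrative.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model = 16
u = rng.standard_normal(d_model)
u /= np.linalg.norm(u)  # hypothetical direction read off as the logit difference

def head_outputs(s):
    # hypothetical layer with two heads whose contributions cancel exactly
    return s * u, -s * u

def logit_diff(layer_out):
    return float(layer_out @ u)

h1_orig, h2_orig = head_outputs(1.0)   # original run
h1_src, h2_src = head_outputs(-1.0)    # source run with a different feature value

clean = logit_diff(h1_orig + h2_orig)        # heads cancel
whole_layer = logit_diff(h1_src + h2_src)    # patching the whole layer: still cancels
one_head = logit_diff(h1_src + h2_orig)      # patching only head 1 breaks the cancellation
print(clean, whole_layer, one_head)
```

Patching the whole layer leaves the logit difference at zero, while patching a single head moves it to about -2, matching the footnote's point that a component-level patch can show an effect that a layer-level patch does not.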

4 comments


comment by Neel Nanda (neel-nanda-1) · 2023-08-29T08:08:12.839Z

I'm really excited to see this come out! I initially found the counter-example pretty hard to get my head around, but it now feels like a deep and important insight when thinking about using gradient descent based techniques like DAS safely.

comment by Thomas Kwa (thomas-kwa) · 2024-01-26T01:19:00.431Z

If SAE features are the correct units of analysis (or at least more so than neurons), should we expect that patching in the feature basis is less susceptible to the interpretability illusion than in the neuron basis?

reply by Neel Nanda (neel-nanda-1) · 2024-01-26T09:43:44.109Z

The illusion is most concerning when learning arbitrary directions in space, not when iterating over individual neurons OR SAE features. I don't have strong takes on whether the illusion is more likely with neurons than SAEs if you're e.g. iterating over sparse subsets. In some sense it's more likely that you get a dormant and a disconnected feature in your SAE than as neurons, since they are more meaningful?

comment by Filip Sondej · 2024-05-07T12:02:46.586Z

What if we constrain v to be in some subspace that is actually used by the MLP? (We can get it from PCA over activations on many inputs.)

This way v won't have any dormant component, so the MLP output after patching also cannot use that dormant pathway.
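The constraint suggested in this comment can be sketched as follows. Take the top-k principal directions of the MLP activations and project a candidate patching direction v onto their span. The sketch assumes hypothetical activations in which some neurons never vary (playing the role of dormant directions), and treats k as an assumed hyperparameter. Since PCA excludes any direction with zero variance, not just neuron-aligned ones, the projected v has no component along such directions. This only removes components along which the activations never vary. Whether it is enough to avoid the illusion is not tested in the post.

```python
import numpy as np

def pca_basis(acts, k):
    # top-k principal directions of the activations (rows are orthonormal)
    centered = acts - acts.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:k]

def constrain_direction(v, basis):
    # orthogonal projection of v onto the span of the basis rows, renormalized
    proj = basis.T @ (basis @ v)
    return proj / np.linalg.norm(proj)

rng = np.random.default_rng(0)
n, d_mlp, d_used = 1000, 64, 16
# hypothetical activations: only the first d_used neurons vary across inputs,
# the remaining neurons are constant and so span "dormant" directions
acts = np.zeros((n, d_mlp))
acts[:, :d_used] = rng.standard_normal((n, d_used)) @ rng.standard_normal((d_used, d_used))
acts[:, d_used:] = 0.3

basis = pca_basis(acts, k=d_used)
v = rng.standard_normal(d_mlp)
v /= np.linalg.norm(v)
v_constrained = constrain_direction(v, basis)
```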