Why We Can't Take Expected Value Estimates Literally (Even When They're Unbiased)

post by HoldenKarnofsky · 2011-08-18T23:34:12.099Z · LW · GW · Legacy · 253 comments

Contents

- The approach we oppose: "explicit expected-value" (EEV) decisionmaking

- Simple example of a Bayesian approach vs. an EEV approach

- Applying Bayesian adjustments to cost-effectiveness estimates for donations, actions, etc.

- Pascal's Mugging

- Generalizing the Bayesian approach

- Conclusion

Note: I am cross-posting this GiveWell Blog post, after consulting a couple of community members, because it is relevant to many topics discussed on Less Wrong, particularly efficient charity/optimal philanthropy and Pascal's Mugging. The post includes a proposed "solution" to the dilemma posed by Pascal's Mugging that has not been proposed before as far as I know. It is longer than usual for a Less Wrong post, so I have put everything but the summary below the fold. Also, note that I use the term "expected value" because it is more generic than "expected utility"; the arguments here pertain to estimating the expected value of any quantity, not just utility.

While some people feel that GiveWell puts too much emphasis on the measurable and quantifiable, there are others who go further than we do in quantification, and justify their giving (or other) decisions based on fully explicit expected-value formulas. The latter group tends to critique us - or at least disagree with us - based on our preference for strong evidence over high apparent "expected value," and based on the heavy role of non-formalized intuition in our decisionmaking. This post is directed at the latter group.

We believe that people in this group are often making a fundamental mistake, one that we have long had intuitive objections to but have recently developed a more formal (though still fairly rough) critique of. The mistake (we believe) is estimating the "expected value" of a donation (or other action) based solely on a fully explicit, quantified formula, many of whose inputs are guesses or very rough estimates. We believe that any estimate along these lines needs to be adjusted using a "Bayesian prior"; that this adjustment can rarely be made (reasonably) using an explicit, formal calculation; and that most attempts to do the latter, even when they seem to be making very conservative downward adjustments to the expected value of an opportunity, are not making nearly large enough downward adjustments to be consistent with the proper Bayesian approach.

This view of ours illustrates why - while we seek to ground our recommendations in relevant facts, calculations and quantifications to the extent possible - every recommendation we make incorporates many different forms of evidence and involves a strong dose of intuition. And we generally prefer to give where we have strong evidence that donations can do a lot of good rather than where we have weak evidence that donations can do far more good - a preference that I believe is inconsistent with the approach of giving based on explicit expected-value formulas (at least those that (a) have significant room for error (b) do not incorporate Bayesian adjustments, which are very rare in these analyses and very difficult to do both formally and reasonably).

The rest of this post will:

- Lay out the "explicit expected value formula" approach to giving, which we oppose, and give examples.

- Give the intuitive objections we've long had to this approach, i.e., ways in which it seems intuitively problematic.

- Give a clean example of how a Bayesian adjustment can be done, and can be an improvement on the "explicit expected value formula" approach.

- Present a versatile formula for making and illustrating Bayesian adjustments that can be applied to charity cost-effectiveness estimates.

- Show how a Bayesian adjustment avoids the Pascal's Mugging problem that those who rely on explicit expected value calculations seem prone to.

- Discuss how one can properly apply Bayesian adjustments in other cases, where less information is available.

- Conclude with the following takeaways:

  - Any approach to decision-making that relies only on rough estimates of expected value - and does not incorporate preferences for better-grounded estimates over shakier estimates - is flawed.

  - When aiming to maximize expected positive impact, it is not advisable to make giving decisions based fully on explicit formulas. Proper Bayesian adjustments are important and are usually overly difficult to formalize.

  - The above point is a general defense of resisting arguments that both (a) seem intuitively problematic (b) have thin evidential support and/or room for significant error.

The approach we oppose: "explicit expected-value" (EEV) decisionmaking

We term the approach this post argues against the "explicit expected-value" (EEV) approach to decisionmaking. It generally involves an argument of the form:

I estimate that each dollar spent on Program P has a value of V [in terms of lives saved, disability-adjusted life-years, social return on investment, or some other metric]. Granted, my estimate is extremely rough and unreliable, and involves geometrically combining multiple unreliable figures - but it's unbiased, i.e., it seems as likely to be too pessimistic as it is to be too optimistic. Therefore, my estimate V represents the per-dollar expected value of Program P.

I don't know how good Charity C is at implementing Program P, but even if it wastes 75% of its money or has a 75% chance of failure, its per-dollar expected value is still 25%*V, which is still excellent.

Examples of the EEV approach to decisionmaking:

- In a 2010 exchange, Will Crouch of Giving What We Can argued:

DtW [Deworm the World] spends about 74% on technical assistance and scaling up deworming programs within Kenya and India … Let’s assume (very implausibly) that all other money (spent on advocacy etc) is wasted, and assess the charity solely on that 74%. It still would do very well (taking DCP2: $3.4/DALY * (1/0.74) = $4.6/DALY – slightly better than their most optimistic estimate for DOTS (for TB), and far better than their estimates for insecticide treated nets, condom distribution, etc). So, though finding out more about their advocacy work is obviously a great thing to do, the advocacy questions don’t need to be answered in order to make a recommendation: it seems that DtW [is] worth recommending on the basis of their control programs alone.

- The Back of the Envelope Guide to Philanthropy lists rough calculations for the value of different charitable interventions. These calculations imply (among other things) that donating for political advocacy for higher foreign aid is between 8x and 22x as good an investment as donating to VillageReach, and the presentation and implication are that this calculation ought to be considered decisive.

- We've encountered numerous people who argue that charities working on reducing the risk of sudden human extinction must be the best ones to support, since the value of saving the human race is so high that "any imaginable probability of success" would lead to a higher expected value for these charities than for others.

- "Pascal's Mugging" is often seen as the reductio ad absurdum of this sort of reasoning. The idea is that if a person demands $10 in exchange for refraining from an extremely harmful action (one that negatively affects N people for some huge N), then expected-value calculations demand that one give in to the person's demands: no matter how unlikely the claim, there is some N big enough that the "expected value" of refusing to give the $10 is hugely negative.

The crucial characteristic of the EEV approach is that it does not incorporate a systematic preference for better-grounded estimates over rougher estimates. It ranks charities/actions based simply on their estimated value, ignoring differences in the reliability and robustness of the estimates.

Informal objections to EEV decisionmaking

There are many ways in which the sort of reasoning laid out above seems (to us) to fail a common sense test.

- There seems to be nothing in EEV that penalizes relative ignorance or relatively poorly grounded estimates, or rewards investigation and the forming of particularly well grounded estimates. If I can literally save a child I see drowning by ruining a $1000 suit, but in the same moment I make a wild guess that this $1000 could save 2 lives if put toward medical research, EEV seems to indicate that I should opt for the latter.

- Because of this, a world in which people acted based on EEV would seem to be problematic in various ways.

- In such a world, it seems that nearly all altruists would put nearly all of their resources toward helping people they knew little about, rather than helping themselves, their families and their communities. I believe that the world would be worse off if people behaved in this way, or at least if they took it to an extreme. (There are always more people you know little about than people you know well, and EEV estimates of how much good you can do for people you don't know seem likely to have higher variance than EEV estimates of how much good you can do for people you do know. Therefore, it seems likely that the highest-EEV action directed at people you don't know will have higher EEV than the highest-EEV action directed at people you do know.)

- In such a world, when people decided that a particular endeavor/action had outstandingly high EEV, there would (too often) be no justification for costly skeptical inquiry of this endeavor/action. For example, say that people were trying to manipulate the weather; that someone hypothesized that they had no power for such manipulation; and that the EEV of trying to manipulate the weather was much higher than the EEV of other things that could be done with the same resources. It would be difficult to justify a costly investigation of the "trying to manipulate the weather is a waste of time" hypothesis in this framework. Yet it seems that when people are valuing one action far above others, based on thin information, this is the time when skeptical inquiry is needed most. And more generally, it seems that challenging and investigating our most firmly held, "high-estimated-probability" beliefs - even when doing so has been costly - has been quite beneficial to society.

- Related: giving based on EEV seems to create bad incentives. EEV doesn't seem to allow rewarding charities for transparency or penalizing them for opacity: it simply recommends giving to the charity with the highest estimated expected value, regardless of how well-grounded the estimate is. Therefore, in a world in which most donors used EEV to give, charities would have every incentive to announce that they were focusing on the highest expected-value programs, without disclosing any details of their operations that might show they were achieving less value than theoretical estimates said they ought to be.

- If you are basing your actions on EEV analysis, it seems that you're very open to being exploited by Pascal's Mugging: a tiny probability of a huge-value expected outcome can come to dominate your decisionmaking in ways that seem to violate common sense. (We discuss this further below.)

- If I'm deciding between eating at a new restaurant with 3 Yelp reviews averaging 5 stars and eating at an older restaurant with 200 Yelp reviews averaging 4.75 stars, EEV seems to imply (using Yelp rating as a stand-in for "expected value of the experience") that I should opt for the former. As discussed in the next section, I think this is the purest demonstration of the problem with EEV and the need for Bayesian adjustments.

In the remainder of this post, I present what I believe is the right formal framework for my objections to EEV. However, I have more confidence in my intuitions - which are related to the above observations - than in the framework itself. I believe I have formalized my thoughts correctly, but if the remainder of this post turned out to be flawed, I would likely continue to object to EEV until and unless one could address my less formal misgivings.

Simple example of a Bayesian approach vs. an EEV approach

It seems fairly clear that a restaurant with 200 Yelp reviews, averaging 4.75 stars, ought to outrank a restaurant with 3 Yelp reviews, averaging 5 stars. Yet this ranking can't be justified in an EEV-style framework, in which options are ranked by their estimated average/expected value. How, in fact, does Yelp handle this situation?

Unfortunately, the answer appears to be undisclosed in Yelp's case, but we can get a hint from a similar site: BeerAdvocate, a site that ranks beers using submitted reviews. It states:

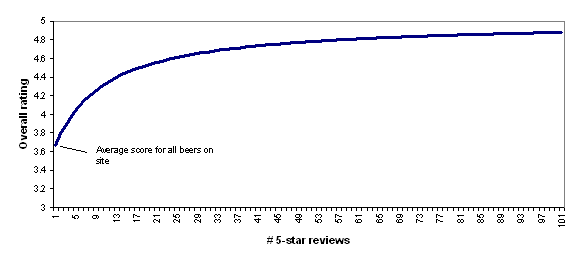

Lists are generated using a Bayesian estimate that pulls data from millions of user reviews (not hand-picked) and normalizes scores based on the number of reviews for each beer. The general statistical formula is:

weighted rank (WR) = (v ÷ (v+m)) × R + (m ÷ (v+m)) × C

where:

- R = review average for the beer

- v = number of reviews for the beer

- m = minimum reviews required to be considered (currently 10)

- C = the mean across the list (currently 3.66)

In other words, BeerAdvocate does the equivalent of giving each beer a set number (currently 10) of "average" reviews (i.e., reviews with a score of 3.66, which is the average for all beers on the site). Thus, a beer with zero reviews is assumed to be exactly as good as the average beer on the site; a beer with one review will still be assumed to be close to average, no matter what rating the one review gives; as the number of reviews grows, the beer's rating is able to deviate more from the average.
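As a minimal sketch of the quoted formula, the weighted rank can be computed directly. The function name `weighted_rank` and its parameter names are illustrative choices of mine; the defaults m = 10 and C = 3.66 are the values quoted above.

```python
def weighted_rank(review_avg: float, num_reviews: int,
                  min_reviews: float = 10, site_mean: float = 3.66) -> float:
    """BeerAdvocate-style weighted rank: WR = (v/(v+m))*R + (m/(v+m))*C."""
    v, m = num_reviews, min_reviews
    # The beer's own average gets weight v/(v+m); the site-wide mean gets the rest.
    return (v / (v + m)) * review_avg + (m / (v + m)) * site_mean


# A beer whose reviews are all five stars, with a growing number of reviews.
for n in (0, 1, 10, 100):
    print(f"{n:>3} five-star reviews -> weighted rank {weighted_rank(5.0, n):.2f}")
```

With zero reviews the result equals the site mean, and with more five-star reviews it approaches 5 without reaching it, which is the behavior described in the paragraph above.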

To illustrate this, the following chart shows how BeerAdvocate's formula would rate a beer that has 0-100 five-star reviews. As the number of five-star reviews grows, the formula's "confidence" in the five-star rating grows, and the beer's overall rating gets further from "average" and closer to (though never fully reaching) 5 stars.

I find BeerAdvocate's approach to be quite reasonable and I find the chart above to accord quite well with intuition: a beer with a small handful of five-star reviews should be considered pretty close to average, while a beer with a hundred five-star reviews should be considered to be nearly a five-star beer.

However, there are a couple of complications that make it difficult to apply this approach broadly.

- BeerAdvocate is making a substantial judgment call regarding what "prior" to use, i.e., how strongly to assume each beer is average until proven otherwise. It currently sets the m in its formula equal to 10, which is like giving each beer a starting point of ten average-level reviews; it gives no formal justification for why it has set m to 10 instead of 1 or 100. It is unclear what such a justification would look like. In fact, I believe that BeerAdvocate used to use a stronger "prior" (i.e., it used to set m to a higher value), which meant that beers needed larger numbers of reviews to make the top-rated list. When BeerAdvocate changed its prior, its rankings changed dramatically, as lesser-known, higher-rated beers overtook the mainstream beers that had previously dominated the list.

- In BeerAdvocate's case, the basic approach to setting a Bayesian prior seems pretty straightforward: the "prior" rating for a given beer is equal to the average rating for all beers on the site, which is known. By contrast, if we're looking at the estimate of how much good a charity does, it isn't clear what "average" one can use for a prior; it isn't even clear what the appropriate reference class is. Should our prior value for the good-accomplished-per-dollar of a deworming charity be equal to the good-accomplished-per-dollar of the average deworming charity, or of the average health charity, or the average charity, or the average altruistic expenditure, or some weighted average of these? Of course, we don't actually have any of these figures. For this reason, it's hard to formally justify one's prior, and differences in priors can cause major disagreements and confusions when they aren't recognized for what they are. But this doesn't mean the choice of prior should be ignored or that one should leave the prior out of expected-value calculations (as we believe EEV advocates do).
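To illustrate the first complication, here is a rough sketch of how the choice of m alone can reorder a ranking. The two beers and their review counts are invented for illustration and are not taken from BeerAdvocate; only the formula and the site mean of 3.66 come from the quoted text.

```python
def weighted_rank(review_avg, num_reviews, min_reviews, site_mean=3.66):
    """WR = (v/(v+m))*R + (m/(v+m))*C, as in the BeerAdvocate formula."""
    v, m = num_reviews, min_reviews
    return (v / (v + m)) * review_avg + (m / (v + m)) * site_mean


# Hypothetical beers: (review average, number of reviews).
beers = {
    "lesser-known": (4.6, 30),
    "mainstream": (4.3, 2000),
}

for m in (10, 100):
    ranked = sorted(beers, key=lambda name: weighted_rank(*beers[name], m),
                    reverse=True)
    scores = {name: round(weighted_rank(*beers[name], m), 3) for name in beers}
    print(f"m={m}: ranking={ranked}, scores={scores}")
```

Under these made-up numbers, the lesser-known beer ranks first with the weaker prior (m = 10) and second with the stronger prior (m = 100), even though neither beer's reviews have changed.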

Applying Bayesian adjustments to cost-effectiveness estimates for donations, actions, etc.

As discussed above, we believe that both Giving What We Can and Back of the Envelope Guide to Philanthropy use forms of EEV analysis in arguing for their charity recommendations. However, when it comes to analyzing the cost-effectiveness estimates they invoke, the BeerAdvocate formula doesn't seem applicable: there is no "number of reviews" figure that can be used to determine the relative weights of the prior and the estimate.

Instead, we propose a model in which there is a normally (or log-normally) distributed "estimate error" around the cost-effectiveness estimate (with a mean of "no error," i.e., 0 for normally distributed error and 1 for lognormally distributed error), and in which the prior distribution for cost-effectiveness is normally (or log-normally) distributed as well. (I won't discuss log-normal distributions in this post, but the analysis I give can be extended by applying it to the log of the variables in question.) The more one feels confident in one's pre-existing view of how cost-effective a donation or action should be, the smaller the variance of the "prior"; the more one feels confident in the cost-effectiveness estimate itself, the smaller the variance of the "estimate error."

Following up on our 2010 exchange with Giving What We Can, we asked Dario Amodei to write up the implications of the above model and the form of the proper Bayesian adjustment. You can see his analysis here. The bottom line is that when one applies Bayes's rule to obtain a distribution for cost-effectiveness based on (a) a normally distributed prior distribution (b) a normally distributed "estimate error," one obtains a distribution with

- Mean equal to the average of the two means weighted by their inverse variances

- Variance equal to the harmonic sum of the two variances
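Written out, the two properties above take the standard form for combining a normal prior with a normally distributed estimate. The symbols are my own notation rather than the post's: the prior has mean \mu_0 and variance \sigma_0^2, and the estimate has midpoint \mu_e with "estimate error" variance \sigma_e^2.

```latex
\mu_{\text{post}}
  = \frac{\mu_0/\sigma_0^2 + \mu_e/\sigma_e^2}{1/\sigma_0^2 + 1/\sigma_e^2},
\qquad
\sigma_{\text{post}}^2
  = \left(\frac{1}{\sigma_0^2} + \frac{1}{\sigma_e^2}\right)^{-1}
  = \frac{\sigma_0^2\,\sigma_e^2}{\sigma_0^2 + \sigma_e^2}.
```

As \sigma_e^2 grows, the weight on the estimate shrinks toward zero and the posterior mean falls back to the prior mean; as \sigma_e^2 shrinks, the posterior mean approaches the estimate.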

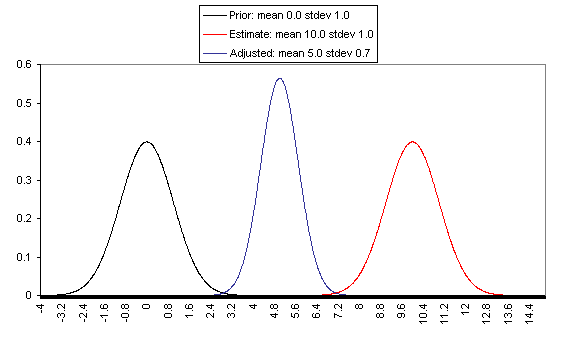

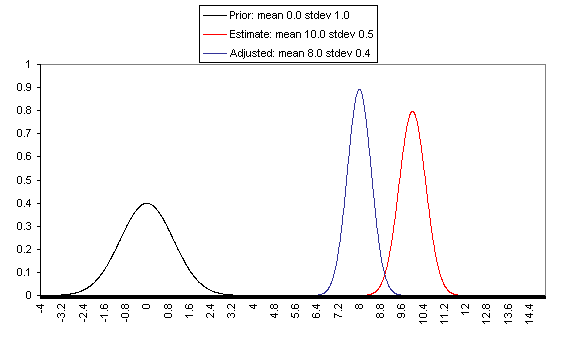

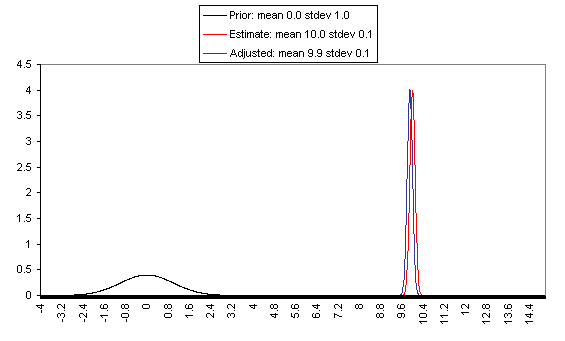

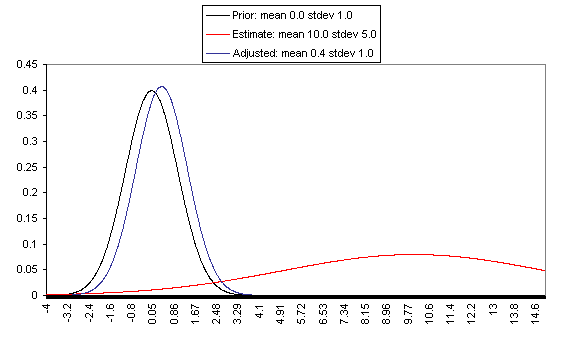

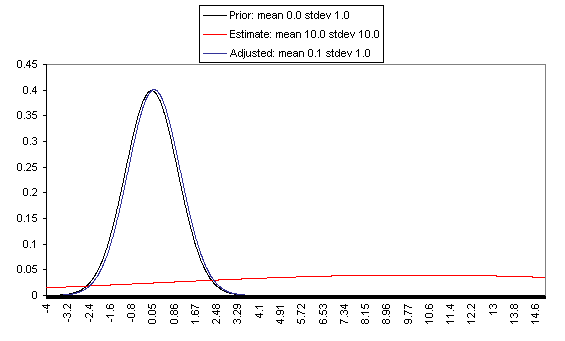

The following charts show what this formula implies in a variety of different simple hypotheticals. In all of these, the prior distribution has mean = 0 and standard deviation = 1, and the estimate has mean = 10, but the "estimate error" varies, with important effects: an estimate with little enough estimate error can almost be taken literally, while an estimate with large enough estimate error ought to be almost ignored.

In each of these charts, the black line represents a probability density function for one's "prior," the red line for an estimate (with the variance coming from "estimate error"), and the blue line for the final probability distribution, taking both the prior and the estimate into account. Taller, narrower distributions represent cases where probability is concentrated around the midpoint; shorter, wider distributions represent cases where the possibilities/probabilities are more spread out among many values. First, the case where the cost-effectiveness estimate has the same confidence interval around it as the prior:

If one has a relatively reliable estimate (i.e., one with a narrow confidence interval / small variance of "estimate error,") then the Bayesian-adjusted conclusion ends up very close to the estimate. When we estimate quantities using highly precise and well-understood methods, we can use them (almost) literally.

On the flip side, when the estimate is relatively unreliable (wide confidence interval / large variance of "estimate error"), it has little effect on the final expectation of cost-effectiveness (or whatever is being estimated). And at the point where the one-standard-deviation bands include zero cost-effectiveness (i.e., where there's a pretty strong probability that the whole cost-effectiveness estimate is worthless), the estimate ends up having practically no effect on one's final view.
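The pattern described in the last few paragraphs can be reproduced numerically. This is a sketch under the stated setup (prior mean 0 and standard deviation 1, estimate of 10); the function name `bayes_adjust` and the particular estimate-error values in the loop are my assumptions, not the values used in the original charts.

```python
def bayes_adjust(prior_mean, prior_sd, est_mean, est_sd):
    """Posterior (mean, sd) for a normal prior and normal estimate error.

    The mean is the inverse-variance-weighted average of the two means;
    the posterior variance is the reciprocal of the summed inverse variances.
    """
    w_prior = 1.0 / prior_sd ** 2
    w_est = 1.0 / est_sd ** 2
    post_mean = (w_prior * prior_mean + w_est * est_mean) / (w_prior + w_est)
    post_sd = (1.0 / (w_prior + w_est)) ** 0.5
    return post_mean, post_sd


# Prior: mean 0, sd 1. Estimate: 10, with increasingly large estimate error.
for est_sd in (0.25, 0.5, 1.0, 2.0, 4.0, 10.0):
    mean, sd = bayes_adjust(0.0, 1.0, 10.0, est_sd)
    print(f"estimate sd={est_sd:>5}: adjusted mean={mean:6.3f}, adjusted sd={sd:.3f}")
```

When the estimate error matches the prior's standard deviation, the adjusted mean lands halfway between prior and estimate; once the estimate's standard deviation is as large as the estimate itself, the adjusted mean is close to the prior.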

The details of how to apply this sort of analysis to cost-effectiveness estimates for charitable interventions are outside the scope of this post, which focuses on our belief in the importance of the concept of Bayesian adjustments. The big-picture takeaway is that just having the midpoint of a cost-effectiveness estimate is not worth very much in itself; it is important to understand the sources of estimate error, and the degree of estimate error relative to the degree of variation in estimated cost-effectiveness for different interventions.

Pascal's Mugging

Pascal's Mugging refers to a case where a claim of extravagant impact is made for a particular action, with little to no evidence:

Now suppose someone comes to me and says, "Give me five dollars, or I'll use my magic powers … to [harm an imaginably huge number of] people."

Non-Bayesian approaches to evaluating these proposals often take the following form: "Even if we assume that this analysis is 99.99% likely to be wrong, the expected value is still high - and are you willing to bet that this analysis is wrong at 99.99% odds?"

However, this is a case where "estimate error" is probably accounting for the lion's share of variance in estimated expected value, and therefore I believe that a proper Bayesian adjustment would correctly assign little value where there is little basis for the estimate, no matter how high the midpoint of the estimate.

Say that you've come to believe - based on life experience - in a "prior distribution" for the value of your actions, with a mean of zero and a standard deviation of 1. (The unit type you use to value your actions is irrelevant to the point I'm making; so in this case the units I'm using are simply standard deviations based on your prior distribution for the value of your actions). Now say that someone estimates that action A (e.g., giving in to the mugger's demands) has an expected value of X (same units) - but that the estimate itself is so rough that the right expected value could easily be 0 or 2X. More specifically, say that the error in the expected value estimate has a standard deviation of X.

An EEV approach to this situation might say, "Even if there's a 99.99% chance that the estimate is completely wrong and that the value of Action A is 0, there's still a 0.01% probability that Action A has a value of X. Thus, overall Action A has an expected value of at least 0.0001X; the greater X is, the greater this value is, and if X is great enough, then you should take Action A unless you're willing to bet at enormous odds that the framework is wrong."

However, the same formula discussed above indicates that Action A actually has an expected value - after the Bayesian adjustment - of X/(X^2+1), or just under 1/X. In this framework, the greater X is (for X above 1), the lower the expected value of Action A. This syncs well with my intuitions: if someone threatened to harm one person unless you gave them $10, this ought to carry more weight (because it is more plausible in the face of the "prior" of life experience) than if they threatened to harm 100 people, which in turn ought to carry more weight than if they threatened to harm 3^^^3 people (I'm using 3^^^3 here as a representation of an unimaginably huge number).
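The X/(X^2+1) figure follows from the inverse-variance weighting with a prior of mean 0 and variance 1 and an estimate of X whose error has variance X^2. A small sketch (the function name `adjusted_value` is mine) checks the closed form against the weighted average:

```python
def adjusted_value(x: float) -> float:
    """Posterior mean for prior N(0, 1) and an estimate of x with error sd x."""
    prior_precision = 1.0          # 1 / 1^2
    est_precision = 1.0 / x ** 2   # 1 / x^2
    # The prior mean is 0, so only the estimate contributes to the numerator.
    return (est_precision * x) / (prior_precision + est_precision)


for x in (1.0, 2.0, 10.0, 100.0, 1e6):
    closed_form = x / (x ** 2 + 1)
    print(f"X={x:>9g}: adjusted value={adjusted_value(x):.6g} "
          f"(closed form {closed_form:.6g}, 1/X={1 / x:.6g})")
```

The adjusted value peaks at X = 1 and declines from there, so ever-larger claims of this kind end up with ever-smaller adjusted values.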

The point at which a threat or proposal starts to be called "Pascal's Mugging" can be thought of as the point at which the claimed value of Action A is wildly outside the prior set by life experience (which may cause the feeling that common sense is being violated). If someone claims that giving him/her $10 will accomplish 3^^^3 times as much as a 1-standard-deviation life action from the appropriate reference class, then the actual post-adjustment expected value of Action A will be just under (1/3^^^3) (in standard deviation terms) - only trivially higher than the value of an average action, and likely lower than other actions one could take with the same resources. This is true without applying any particular probability that the person's framework is wrong - it is simply a function of the fact that their estimate has such enormous possible error. An ungrounded estimate making an extravagant claim ought to be more or less discarded in the face of the "prior distribution" of life experience.

Generalizing the Bayesian approach

In the above cases, I've given quantifications of (a) the appropriate prior for cost-effectiveness; (b) the strength/confidence of a given cost-effectiveness estimate. One needs to quantify both (a) and (b) - not just quantify estimated cost-effectiveness - in order to formally make the needed Bayesian adjustment to the initial estimate.

But when it comes to giving, and many other decisions, reasonable quantification of these things usually isn't possible. To have a prior, you need a reference class, and reference classes are debatable.

It's my view that my brain instinctively processes huge amounts of information, coming from many different reference classes, and arrives at a prior; if I attempt to formalize my prior, counting only what I can name and justify, I can worsen the accuracy a lot relative to going with my gut. Of course there is a problem here: going with one's gut can be an excuse for going with what one wants to believe, and a lot of what enters into my gut belief could be irrelevant to proper Bayesian analysis. There is an appeal to formulas, which is that they seem to be susceptible to outsiders' checking them for fairness and consistency.

But when the formulas are too rough, I think the loss of accuracy outweighs the gains to transparency. Rather than using a formula that is checkable but omits a huge amount of information, I'd prefer to state my intuition - without pretense that it is anything but an intuition - and hope that the ensuing discussion provides the needed check on my intuitions.

I can't, therefore, usefully say what I think the appropriate prior estimate of charity cost-effectiveness is. I can, however, describe a couple of approaches to Bayesian adjustments that I oppose, and can describe a few heuristics that I use to determine whether I'm making an appropriate Bayesian adjustment.

Approaches to Bayesian adjustment that I oppose

I have seen some argue along the lines of "I have a very weak (or uninformative) prior, which means I can more or less take rough estimates literally." I think this is a mistake. We do have a lot of information by which to judge what to expect from an action (including a donation), and failure to use all the information we have is a failure to make the appropriate Bayesian adjustment. Even just a sense for the values of the small set of actions you've taken in your life, and observed the consequences of, gives you something to work with as far as an "outside view" and a starting probability distribution for the value of your actions; this distribution probably ought to have high variance, but when dealing with a rough estimate that has very high variance of its own, it may still be quite a meaningful prior.

I have seen some using the EEV framework who can tell that their estimates seem too optimistic, so they make various "downward adjustments," multiplying their EEV by apparently ad hoc figures (1%, 10%, 20%). What isn't clear is whether the size of the adjustment they're making has the correct relationship to (a) the weakness of the estimate itself (b) the strength of the prior (c) distance of the estimate from the prior. An example of how this approach can go astray can be seen in the "Pascal's Mugging" analysis above: assigning one's framework a 99.99% chance of being totally wrong may seem to be amply conservative, but in fact the proper Bayesian adjustment is much larger and leads to a completely different conclusion.
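To make the contrast concrete, here is a rough comparison of a fixed ad hoc discount with the adjustment from the Pascal's Mugging setup above (prior with mean 0 and standard deviation 1, estimate of X with error standard deviation X). The function names are mine, and the 0.01% figure is the one used in the EEV argument quoted earlier.

```python
def flat_discount_value(x: float, p_right: float = 0.0001) -> float:
    """EEV with an ad hoc discount: assume the estimate is right with probability p_right."""
    return p_right * x


def bayes_adjusted_value(x: float) -> float:
    """Adjusted value under the post's model: x / (x^2 + 1)."""
    return x / (x ** 2 + 1)


for x in (10.0, 100.0, 1_000.0, 1_000_000.0):
    implied_multiplier = 1.0 / (x ** 2 + 1)  # the discount the Bayesian adjustment implies
    print(f"X={x:>9g}: flat 0.01% -> {flat_discount_value(x):.6g}, "
          f"Bayesian -> {bayes_adjusted_value(x):.6g}, "
          f"implied multiplier {implied_multiplier:.3g}")
```

In this model the appropriate discount is not a constant: it scales roughly with 1/X^2, so any fixed multiplier, however conservative it looks, is eventually outrun by a large enough claim. For small X the fixed 0.01% figure is actually harsher than the Bayesian adjustment, which is another sign that it bears no principled relationship to the weakness of the estimate.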

Heuristics I use to address whether I'm making an appropriate prior-based adjustment

- The more action is asked of me, the more evidence I require. Anytime I'm asked to take a significant action (giving a significant amount of money, time, effort, etc.), this action has to have higher expected value than the action I would otherwise take. My intuitive feel for the distribution of "how much my actions accomplish" serves as a prior - an adjustment to the value that the asker claims for my action.

- I pay attention to how much of the variation I see between estimates is likely to be driven by true variation vs. estimate error. As shown above, when an estimate is rough enough so that error might account for the bulk of the observed variation, a proper Bayesian approach can involve a massive discount to the estimate.

- I put much more weight on conclusions that seem to be supported by multiple different lines of analysis, as unrelated to one another as possible. If one starts with a high-error estimate of expected value, and then starts finding more estimates with the same midpoint, the variance of the aggregate estimate error declines; the less correlated the estimates are, the greater the decline in the variance of the error, and thus the lower the Bayesian adjustment to the final estimate. This is a formal way of observing that "diversified" reasons for believing something lead to more "robust" beliefs, i.e., beliefs that are less likely to fall apart with new information and can be used with less skepticism.

- I am hesitant to embrace arguments that seem to have anti-common-sense implications (unless the evidence behind these arguments is strong) and I think my prior may often be the reason for this. As seen above, a too-weak prior can lead to many seemingly absurd beliefs and consequences, such as falling prey to "Pascal's Mugging" and removing the incentive for investigation of strong claims. Strengthening the prior fixes these problems (while over-strengthening the prior results in simply ignoring new evidence). In general, I believe that when a particular kind of reasoning seems to me to have anti-common-sense implications, this may indicate that its implications are well outside my prior.

- My prior for charity is generally skeptical, as outlined at this post. Giving well seems conceptually quite difficult to me, and it's been my experience over time that the more we dig on a cost-effectiveness estimate, the more unwarranted optimism we uncover. Also, having an optimistic prior would mean giving to opaque charities, and that seems to violate common sense. Thus, we look for charities with quite strong evidence of effectiveness, and tend to prefer very strong charities with reasonably high estimated cost-effectiveness to weaker charities with very high estimated cost-effectiveness.
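The heuristic about multiple lines of analysis can be sketched numerically. As a simplifying assumption of mine, suppose n estimates share the same midpoint and the same error standard deviation, with a common pairwise correlation rho between their errors; the variance of their average is then sd^2 * (1 + (n - 1) * rho) / n. The function names and the specific numbers below are illustrative.

```python
def variance_of_mean(sd: float, n: int, rho: float) -> float:
    """Error variance of the average of n estimates with equal sd and pairwise correlation rho."""
    return sd ** 2 * (1 + (n - 1) * rho) / n


def adjusted_mean(est_mean: float, est_var: float,
                  prior_mean: float = 0.0, prior_var: float = 1.0) -> float:
    """Inverse-variance-weighted average of the prior mean and the estimate."""
    w_prior, w_est = 1.0 / prior_var, 1.0 / est_var
    return (w_prior * prior_mean + w_est * est_mean) / (w_prior + w_est)


# Four estimates, each with midpoint 10 and error sd 10, against a N(0, 1) prior.
for rho in (1.0, 0.9, 0.5, 0.0):
    var = variance_of_mean(10.0, 4, rho)
    print(f"rho={rho}: aggregate error variance={var:.1f}, "
          f"adjusted mean={adjusted_mean(10.0, var):.3f}")
```

Fully correlated estimates (rho = 1) are worth no more than one estimate, while uncorrelated ones cut the error variance by a factor of n and so pull the adjusted mean further from the prior.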

Conclusion

- I feel that any giving approach that relies only on estimated expected-value - and does not incorporate preferences for better-grounded estimates over shakier estimates - is flawed.

- Thus, when aiming to maximize expected positive impact, it is not advisable to make giving decisions based fully on explicit formulas. Proper Bayesian adjustments are important and are usually overly difficult to formalize.

253 comments


comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2011-08-18T23:56:58.930Z · LW(p) · GW(p)

Quick comment one:

This jumped out instantly when I looked at the charts: Your prior and evidence can't possibly both be correct at the same time. Everywhere the prior has non-negligible density has negligible likelihood. Everywhere that has substantial likelihood has negligible prior density. If you try multiplying the two together to get a compromise probability estimate instead of saying "I notice that I am confused", I would hold this up as a pretty strong example of the real sin that I think this post should be arguing against, namely that of trying to use math too blindly without sanity-checking its meaning.

Quick comment two:

I'm a major fan of Down-To-Earthness as a virtue of rationality, and I have told other SIAI people over and over that I really think they should stop using "small probability of large impact" arguments. I've told cryonics people the same. If you can't argue for a medium probability of a large impact, you shouldn't bother.

Part of my reason for saying this is, indeed, that trying to multiply a large utility interval by a small probability is an argument-stopper, an attempt to shut down further debate, and someone is justified in having a strong prior, when they see an attempt to shut down further debate, that further argument if explored would result in further negative shifts from the perspective of the side trying to shut down the debate.

With that said, any overall scheme of planetary philanthropic planning that doesn't spend ten million dollars annually on Friendly AI is just stupid. It doesn't just fail the Categorical Imperative test of "What if everyone did that?", it fails the Predictable Retrospective Stupidity test of, "Assuming civilization survives, how incredibly stupid will our descendants predictably think we were to do that?"

Of course, I believe this because I think the creation of smarter-than-human intelligence has a (very) large probability of an (extremely) large impact, and that most of the probability mass there is concentrated into AI, and I don't think there's nothing that can be done about that, either.

I would summarize my quick reply by saying,

"I agree that it's a drastic warning sign when your decision process is spending most of its effort trying to achieve unprecedented outcomes of unquantifiable small probability, and that what I consider to be down-to-earth common sense is a great virtue of a rationalist. That said, down-to-earth common-sense says that AI is a screaming emergency at this point in our civilization's development, and I don't consider myself to be multiplying small probabilities by large utility intervals at any point in my strategy."

Replies from: Wei_Dai, orthonormal, multifoliaterose, novalis, lukeprog↑ comment by Wei Dai (Wei_Dai) · 2011-08-19T03:05:30.952Z · LW(p) · GW(p)

I don't consider myself to be multiplying small probabilities by large utility intervals at any point in my strategy

What about people who do think SIAI's probability of success is small? Perhaps they have different intuitions about how hard FAI is, or don't have enough knowledge to make an object-level judgement so they just apply the absurdity heuristic. Being one of those people, I think it's still an important question whether it's rational to support SIAI given a small estimate of probability of success, even if SIAI itself doesn't want to push this line of inquiry too hard for fear of signaling that their own estimate of probability of success is low.

Replies from: Eliezer_Yudkowsky, Rain↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2011-08-19T22:38:57.606Z · LW(p) · GW(p)

Leaving aside Aumann questions: If people like that think that the Future of Humanity Institute, work on human rationality, or Giving What We Can has a large probability of catalyzing the creation of an effective institution, they should quite plausibly be looking there instead. "I should be doing something I think is at least medium-probably remedying the sheerly stupid situation humanity has gotten itself into with respect to the intelligence explosion" seems like a valuable summary heuristic.

If you can't think of anything medium-probable, using that as an excuse to do nothing is unacceptable. Figure out which of the people trying to address the problem seem most competent and gamble on something interesting happening if you give them more money. Money is the unit of caring and I can't begin to tell you how much things change when you add more money to them. Imagine what the global financial sector would look like if it was funded to the tune of $600,000/year. You would probably think it wasn't worth scaling up Earth's financial sector.

Replies from: Wei_Dai, lessdazed, multifoliaterose↑ comment by Wei Dai (Wei_Dai) · 2011-08-20T05:57:23.948Z · LW(p) · GW(p)

If you can't think of anything medium-probable, using that as an excuse to do nothing is unacceptable.

That's my gut feeling as well, but can we give a theoretical basis for that conclusion, which might also potentially be used to convince people who can't think of anything medium-probable to "do something"?

My current thoughts are

- I assign some non-zero credence to having an unbounded utility function.

- Bostrom and Toby's moral parliament idea seems to be the best that we have about how to handle moral uncertainty.

- If Pascal's wager argument works, and to the extent that I have a faction representing unbounded utility in my moral parliament, I ought to spend a fraction of my resources on Pascal's wager type "opportunities"

- If Pascal's wager argument works, I should pick the best wager to bet on, which intuitively could well be "push for a positive Singularity"

- But it's not clear that Pascal's wager argument works or what could be the justification for thinking that "push for a positive Singularity" is the best wager. We also don't have any theory to handle this kind of philosophical uncertainty.

- Given all this, I still have to choose between "do nothing", "push for positive Singularity", or "investigate Pascal's wager". Is there any way, in this decision problem, to improve upon going with my gut?

Anyway, I understand that you probably have reasons not to engage too deeply with this line of thought, so I'm mostly explaining where I'm currently at, as well as hoping that someone else can offer some ideas.

↑ comment by lessdazed · 2011-08-20T11:18:45.531Z · LW(p) · GW(p)

Imagine what the global financial sector would look like if it was funded to the tune of $600,000/year. You would probably think it wasn't worth scaling up Earth's financial sector.

And one might even be right about that.

A better analogy might be if regulation of the global financial sector were funded at 600k/yr.

↑ comment by multifoliaterose · 2011-08-19T22:53:43.845Z · LW(p) · GW(p)

Money is the unit of caring and it really is impossible to overstate how much things change when you add money to them.

Can you give an example relevant to the context at hand to illustrate what you have in mind? I don't necessarily disagree, but I presently think that there's a tenable argument that money is seldom the key limiting factor for philanthropic efforts in the developed world.

Replies from: Eliezer_Yudkowsky, ciphergoth↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2011-08-19T23:50:27.894Z · LW(p) · GW(p)

BTW, note that I deleted the "impossible to overstate" line on grounds of its being false. It's actually quite possible to overstate the impact of adding money. E.g., "Adding one dollar to this charity will CHANGE THE LAWS OF PHYSICS."

↑ comment by Paul Crowley (ciphergoth) · 2011-08-20T06:58:40.171Z · LW(p) · GW(p)

What sort of key limiting factors do you have in mind that are untouched by money? Every limiting factor I can think of, whether it's lack of infrastructure or corruption or lack of political will in the West, is something that you could spend money on doing something about.

Replies from: multifoliaterose↑ comment by multifoliaterose · 2011-08-20T18:51:02.151Z · LW(p) · GW(p)

If nothing else, historical examples show that huge amounts of money lobbed at a cause can go to waste or do more harm than good (e.g. the Iraq war as a means to improve relations with the Middle East).

Eliezer and I were both speaking in vague terms; presumably somebody intelligent, knowledgeable, sophisticated, motivated, energetic & socially/politically astute can levy money toward some positive expected change in a given direction. There remains the question about the conversion factor between money and other goods and how quickly it changes at the margin; it could be negligible in a given instance.

The main limiting factor that I had in mind was human capital: an absence of people who are sufficiently intelligent, knowledgeable, sophisticated, motivated, energetic & socially/politically astute.

I would add that a group of such people would have substantially better than average odds of attracting sufficient funding from some philanthropist, further diminishing the value of donations (on account of fungibility).

↑ comment by Rain · 2011-08-19T12:25:37.587Z · LW(p) · GW(p)

I think the creation of smarter-than-human intelligence has a (very) large probability of an (extremely) large impact, and that most of the probability mass there is concentrated into AI

That's the probability statement in his post. He didn't mention the probability of SIAI's success, and hasn't previously when I've emailed him or asked in public forums, nor has he at any point in time that I've heard. Shortly after I asked, he posted When (Not) To Use Probabilities.

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2011-08-19T16:37:19.218Z · LW(p) · GW(p)

Yes, I had read that, and perhaps even more apropos (from Shut up and do the impossible!):

You might even be justified in refusing to use probabilities at this point. In all honesty, I really don't know how to estimate the probability of solving an impossible problem that I have gone forth with intent to solve; in a case where I've previously solved some impossible problems, but the particular impossible problem is more difficult than anything I've yet solved, but I plan to work on it longer, etcetera.

People ask me how likely it is that humankind will survive, or how likely it is that anyone can build a Friendly AI, or how likely it is that I can build one. I really don't know how to answer. I'm not being evasive; I don't know how to put a probability estimate on my, or someone else, successfully shutting up and doing the impossible. Is it probability zero because it's impossible? Obviously not. But how likely is it that this problem, like previous ones, will give up its unyielding blankness when I understand it better? It's not truly impossible, I can see that much. But humanly impossible? Impossible to me in particular? I don't know how to guess. I can't even translate my intuitive feeling into a number, because the only intuitive feeling I have is that the "chance" depends heavily on my choices and unknown unknowns: a wildly unstable probability estimate.

But it's not clear whether Eliezer means that he can't even translate his intuitive feeling into a word like "small" or "medium". I thought the comment I was replying to was saying that SIAI had a "medium" chance of success, given:

If you can't argue for a medium probability of a large impact, you shouldn't bother.

and

I don't consider myself to be multiplying small probabilities by large utility intervals at any point in my strategy

But perhaps I misinterpreted? In any case, there's still the question of what is rational for those of us who do think SIAI's chance of success is "small".

Replies from: Rain, Will_Newsome↑ comment by Rain · 2011-08-19T18:02:00.265Z · LW(p) · GW(p)

I thought he was taking the "don't bother" approach by not giving a probability estimate or arguing about probabilities.

In any case, there's still the question of what is rational for those of us who do think SIAI's chance of success is "small".

I propose that the rational act is to investigate approaches to greater than human intelligence which would succeed.

Replies from: Jordan↑ comment by Will_Newsome · 2011-08-19T18:21:50.203Z · LW(p) · GW(p)

Sufficiently-Friendly AI can be hard for SIAI-now but easy or medium for non-SIAI-now (someone else now, someone else future, SIAI future). I personally believe this, since SIAI-now is fucked up (and SIAI-future very well will be too). (I won't substantiate that claim here.) Eliezer didn't talk about SIAI specifically. (He probably thinks SIAI will be at least as likely to succeed as anyone else because he thinks he's super awesome, but it can't be assumed he'd assert that with confidence, I think.)

Replies from: Alicorn, Louie↑ comment by Alicorn · 2011-08-19T18:38:35.607Z · LW(p) · GW(p)

SIAI-now is fucked up (and SIAI-future very well will be too). (I won't substantiate that claim here.)

Will you substantiate it elsewhere?

Replies from: handoflixue↑ comment by handoflixue · 2011-08-19T22:25:06.376Z · LW(p) · GW(p)

Second that interest in hearing it substantiated elsewhere.

↑ comment by Louie · 2011-12-28T20:35:59.645Z · LW(p) · GW(p)

Your comments are a cruel reminder that I'm in a world where some of the very best people I know are taken from me.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-12-28T20:38:28.628Z · LW(p) · GW(p)

SingInst seems a lot better since I wrote that comment; you and Luke are doing some cool stuff. Around August everything was in a state of disarray and it was unclear if you'd manage to pull through.

↑ comment by orthonormal · 2011-08-19T00:50:11.780Z · LW(p) · GW(p)

Regarding the graphs, I assumed that they were showing artificial examples so that we could viscerally understand at a glance what the adjustment does, not that this is what the prior and evidence should look like in a real case.

↑ comment by multifoliaterose · 2011-08-19T00:18:27.448Z · LW(p) · GW(p)

Upvoted.

This jumped out instantly when I looked at the charts: Your prior and evidence can't possibly both be correct at the same time. Everywhere the prior has non-negligible density has negligible likelihood. Everywhere that has substantial likelihood has negligible prior density. If you try multiplying the two together to get a compromise probability estimate instead of saying "I notice that I am confused", I would hold this up as a pretty strong example of the real sin that I think this post should be arguing against, namely that of trying to use math too blindly without sanity-checking its meaning.

(I deleted my response to this following orthonormal's comments; see this one for my revised thought here.)

Of course, I believe this because I think the creation of smarter-than-human intelligence has a (very) large probability of an (extremely) large impact, and that most of the probability mass there is concentrated into AI, and I don't think there's nothing that can be done about that, either.

Why do you think that there's something that can be done about it?

Replies from: orthonormal↑ comment by orthonormal · 2011-08-19T00:48:13.277Z · LW(p) · GW(p)

I disagree. It can be rational to shift subjective probabilities by many orders of magnitude in response to very little new information.

What your example looks like is a nearly uniform prior over a very large space: nothing's wrong when we quickly update to believe that yesterday's lottery numbers are 04-15-21-31-36.

But the point where you need to halt, melt, and catch fire is if your prior assigns the vast majority of the probability mass to a small compact region, and then the evidence comes along and lands outside that region. That's the equivalent of starting out 99.99% confident that you know tomorrow's lottery numbers will begin with 01-02-03, and being proven wrong.

Replies from: multifoliaterose↑ comment by multifoliaterose · 2011-08-19T00:57:29.925Z · LW(p) · GW(p)

Yes, you're right, I wasn't thinking clearly, thanks for catching me. I think there's something to what I was trying to say, but I need to think it through more carefully. I find the explanation that you give in your other comment convincing (that the point of the graphs is to clearly illustrate the principle).

↑ comment by novalis · 2011-08-19T04:42:59.068Z · LW(p) · GW(p)

10 million dollars buys quite a few programmers. SIAI is presently nowhere near that amount of money, and doesn't look likely to be any time soon. When does it make sense to start talking to volunteer programmers? Presumably, when the risk of opening up the project is less than the risk of failing to get it done before someone else does it wrong.

Replies from: MichaelVassar↑ comment by MichaelVassar · 2011-08-19T14:19:51.203Z · LW(p) · GW(p)

When is "soon" for these purposes? It seems to me that with continuing support, SIAI will be able to hire as many of the right programmers as we can find and effectively integrate into a research effort. We certainly would hire any such programmers now.

Replies from: multifoliaterose, novalis, Will_Newsome, lessdazed↑ comment by multifoliaterose · 2011-08-19T17:03:29.354Z · LW(p) · GW(p)

It seems to me that with continuing support, SIAI will be able to hire as many of the right programmers as we can find and effectively integrate into a research effort.

My main source of uncertainty as to SIAI's value comes from the fact that as far as I can tell nobody has a viable Friendly AI research program.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-19T18:03:55.925Z · LW(p) · GW(p)

(Please don't upvote this comment till you've read it fully; I'm interpreting upvotes in a specific way.) Question for anyone on LW: If I had a viable preliminary Friendly AI research program, aimed largely at doing the technical analysis necessary to determine as well as possible the feasibility and difficulty of Friendly AI for various values of "Friendly", and wrote clearly and concretely about the necessary steps in pursuing this analysis, and listed and described a small number of people (less than 5, but how many could actually be convinced to focus on doing the analysis would depend on funds) who I know of who could usefully work on such an analysis, and committed to have certain summaries published online at various points (after actually considering concrete possibilities for failure, planning fallacy, etc., like real rationalists should), and associated with a few (roughly 5) high status people (people like Anders Sandberg or Max Tegmark, e.g. by convincing them to be on an advisory board), would this have a decent chance of causing you or someone you know to donate $100 or more to support this research program? (I have a weird rather mixed reputation among the greater LW community, so if that affects you negatively please pretend that someone with a more solid reputation but without super high karma is asking this question, like Steven Kaas.) You can upvote for "yes" and comment about any details, e.g. if you know someone who would possibly donate significantly more than $100. (Please don't downvote for "no", 'cuz that's the default answer and will drown out any "yes" ones.)

Replies from: Airedale, Eliezer_Yudkowsky, Will_Newsome↑ comment by Airedale · 2011-08-21T16:22:15.102Z · LW(p) · GW(p)

I have a weird rather mixed reputation among the greater LW community, so if that affects you negatively please pretend that someone with a more solid reputation but without super high karma is asking this question, like Steven Kaas.

Unless you would be much less involved in this potential program than the comment indicates, this seems like an inappropriate request. If people view you negatively due to your posting history, they should absolutely take that information into account in assessing how likely they would be to provide financial support to such a program (assuming that the negative view is based on relevant considerations such as your apparent communication or reasoning skills as demonstrated in your comments).

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-21T17:47:06.157Z · LW(p) · GW(p)

Unless you would be much less involved in this potential program than the comment indicates, this seems like an inappropriate request.

I was more interested in Less Wrong's interest in new FAI-focused organizations generally than in anything particularly tied to me.

Replies from: Airedale↑ comment by Airedale · 2011-08-21T22:11:13.953Z · LW(p) · GW(p)

Fair enough, but in light of your phrasing in both the original comment ("If I [did the following things]") and your comment immediately following it (quoted below; emphasis added), it certainly appeared to me that you seemed to be describing a significant role for yourself, even though your proposal was general overall.

(Some people, including me, would really like it if a competent and FAI-focused uber-rationalist non-profit existed. I know people who will soon have enough momentum to make this happen. I am significantly more familiar with the specifics of FAI (and of hardcore SingInst-style rationality) than many of those people and almost anyone else in the world, so it'd be necessary that I put a lot of hours into working with those who are higher status than me and better at getting things done but less familiar with technical Friendliness. But I have many other things I could be doing. Hence the question.)

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2011-08-20T05:57:06.480Z · LW(p) · GW(p)

Sorry, could you say again what exactly you want to do? I mean, what's the output here that the money is paying for; a Friendly AI, a theory that can be used to construct a Friendly AI, or an analysis that purports to say whether or not Friendly AI is "feasible", or what?

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-21T08:51:58.913Z · LW(p) · GW(p)

Money would pay for marginal output, e.g. in the form of increased collaboration, I think, since the best Friendliness-cognizant x-rationalists would likely already be working on similar things.

I was trying to quickly gauge vague interest in a vague notion. I think that my original comment was at roughly the most accurate and honest level of vagueness (i.e. "aimed largely [i.e. primarily] at doing the technical analysis necessary to determine as well as possible the feasibility and difficulty [e.g. how many Von Neumanns, Turings, and/or Aristotles would it take?] of Friendly AI for various (logical) probabilities of Friendliness [e.g. is the algorithm meta-reflective enough to fall into (one of) some imagined Friendliness attractor basin(s)?]"). Value of information regarding difficulty of Friendly-ish AI is high, but research into that question is naturally tied to Friendly AI theory itself. I'm thinking... Goedel machine stability more than ambient decision theory, history of computation more than any kind of validity semantics. To some extent it depends on who plans to actually work on what stuff from the open problems lists. There are many interesting technical threads that people might start pulling on soon, and it's unclear to me to what extent they actually will pull on them or to what extent pulling on them will give us a better sense of the problem.

[Stuff it would take too many paragraphs to explain why it's worth pointing out specifically:] Theory of justification seems to be roughly as developed as theory of computation was before the advent of Leibniz; Leibniz saw a plethora of connections between philosophy, symbolic logic, and engineering and thus developed some correctly thematically centered proto-theory. I'm trying to make a Leibniz, and hopefully SingInst can make a Turing. (Two other roughly analogous historical conceptual advances are natural selection and temperature.)

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2011-08-21T10:06:50.027Z · LW(p) · GW(p)

Well, my probability that you could or would do anything useful, given money, just dropped straight off a cliff. But perhaps you're just having trouble communicating. That is to say: What the hell are you talking about.

If you're going to ask for money on LW, plain English response, please: What's the output here that the money is paying for; (1) a Friendly AI, (2) a theory that can be used to construct a Friendly AI, or (3) an analysis that purports to say whether or not Friendly AI is "feasible"? Please pick one of the pre-written options; I now doubt your ability to write your response ab initio.

Replies from: Solvent, Will_Newsome↑ comment by Will_Newsome · 2011-08-21T10:47:34.665Z · LW(p) · GW(p)

Dude, it's right there: "feasibility and difficulty", in this sentence which I am now repeating for the second time:

aimed largely [i.e. primarily] at doing the technical analysis necessary to determine as well as possible the feasibility and difficulty [e.g. how many Von Neumanns, Turings, and/or Aristotles would it take?] of Friendly AI for various (logical) probabilities of Friendliness [e.g. is the algorithm meta-reflective enough to fall into (one of) some imagined Friendliness attractor basin(s)?]

(Bold added for emphasis, annotations in [brackets] were in the original.)

The next sentence:

Value of information regarding difficulty of Friendly-ish AI is high, but research into that question is naturally tied to Friendly AI theory itself.

Or if you really need it spelled out for you again and again, the output would primarily be (3) but secondarily (2) as you need some of (2) to do (3).

Because you clearly need things pointed out multiple times, I'll remind you that I put my response in the original comment that you originally responded to, without the later clarifications that I'd put in for apparently no one's benefit:

If I had a viable preliminary Friendly AI research program, aimed largely at doing the technical analysis necessary to determine as well as possible the feasibility and difficulty of Friendly AI for various values of "Friendly" [...]

(Those italics were in the original comment!)

If you're going to ask for money on LW

I wasn't asking for money on Less Wrong! As I said, "I was trying to quickly gauge vague interest in a vague notion." What the hell are you talking about.

I now doubt your ability to write your response ab initio.

I've doubted your ability to read for a long time, but this is pretty bad. The sad thing is you're probably not doing this intentionally.

Replies from: katydee, Solvent↑ comment by katydee · 2011-08-21T11:15:35.841Z · LW(p) · GW(p)

I think the problem here is that your posting style, to be frank, often obscures your point.

In most cases, posts that consist of a to-the-point answer followed by longer explanations use the initial statement to make a concise case. For instance, in this post, my first sentence sums up what I think about the situation and the rest explains that thought in more detail so as to convey a more nuanced impression.

By contrast, when Eliezer asked "What's the output here that the money is paying for," your first sentence was "Money would pay for marginal output, e.g. in the form of increased collaboration, I think, since the best Friendliness-cognizant x-rationalists would likely already be working on similar things." This does not really answer his question, and while you clarify this with your later points, the overall message is garbled.

The fact that your true answer is buried in the middle of a paragraph does not really help things much. Though I can see what you are trying to say, I can't in good and honest conscience describe it as clear. Had you answered, on the other hand, "Money would pay for the technical analysis necessary to determine as well as possible the feasibility and difficulty of FAI..." as your first sentence, I think your post would have been more clear and more likely to be understood.

Replies from: Will_Newsome, Will_Newsome↑ comment by Will_Newsome · 2011-08-21T11:32:34.511Z · LW(p) · GW(p)

The sentences I put before the direct answer to Eliezer's question were meant to correct some of Eliezer's misapprehensions that were more fundamental than the object of his question. Eliezer's infamous for uncharitably misinterpreting people and it was clear he'd misinterpreted some key aspects of my original comment, e.g. my purpose in writing it. If I'd immediately directly answered his question that would have been dishonest; it would have contributed further to his having a false view of what I was actually talking about. Less altruistically it would be like I was admitting to his future selves or to external observers that I agreed that his model of my purposes was accurate and that this model could legitimately be used to assert that I was unjustified in any of many possible ways. Thus I briefly (a mere two sentences) attempted to address what seemed likely to be Eliezer's underlying confusions before addressing his object level question. (Interestingly Eliezer does this quite often, but unfortunately he often assumes people are confused in ways that they are not.)

Given these constraints, what should I have done? In retrospect I should have gone meta, of course, like always. What else?

Thanks much for the critique.

Replies from: katydee↑ comment by katydee · 2011-08-21T12:04:39.694Z · LW(p) · GW(p)

Given those constraints, I would probably write something like "Money would pay for marginal output in the form of increased collaboration on the technical analysis necessary to determine as well as possible the feasibility and difficulty of FAI" for my first sentence and elaborate as strictly necessary. That seems rather more cumbersome than I'd like, but it's also a lot of information to try and convey in one sentence!

Alternatively, I would consider something along the lines of "Money would pay for the technical analysis necessary to determine as well as possible the feasibility and difficulty of FAI, but not directly-- since the best Friendliness-cognizant x-rationalists would likely already be working on similar things, the money would go towards setting up better communication, coordination, and collaboration for that group."

That said, I am unaware of any reputation Eliezer has in the field of interpreting people, and personally haven't received the impression that he's consistently unusually bad or uncharitable at it. Then again, I have something of a reputation-- at least in person-- for being too charitable, so perhaps I'm being too light on Eliezer (or you?) here.

↑ comment by Will_Newsome · 2011-08-21T11:46:14.940Z · LW(p) · GW(p)

I think the problem here is that your posting style, to be frank, often obscures your point.

I acknowledge this. But it seems to me that the larger problem is that Eliezer simply doesn't know how to read what people actually say. Less Wrong mostly doesn't either, and humans in general certainly don't. This is a very serious problem with LW-style rationality (and with humanity). There are extremely talented rationalists who do not have this problem; it is an artifact of Eliezer's psychology and not of the art of rationality.

Replies from: Rain, Kaj_Sotala, katydee↑ comment by Rain · 2011-08-21T14:08:28.543Z · LW(p) · GW(p)

It's hardly fair to blame the reader when you've got "sentences" like this:

I think that my original comment was at roughly the most accurate and honest level of vagueness (i.e. "aimed largely [i.e. primarily] at doing the technical analysis necessary to determine as well as possible the feasibility and difficulty [e.g. how many Von Neumanns, Turings, and/or Aristotles would it take?] of Friendly AI for various (logical) probabilities of Friendliness [e.g. is the algorithm meta-reflective enough to fall into (one of) some imagined Friendliness attractor basin(s)?]").

Replies from: Will_Newsome, Will_Newsome↑ comment by Will_Newsome · 2011-08-21T14:19:24.048Z · LW(p) · GW(p)

That was the second version of the sentence, the first one had much clearer syntax and even italicized the answer to Eliezer's subsequent question. It looks the way it does because Eliezer apparently couldn't extract meaning out of my original sentence despite it clearly answering his question, so I tried to expand on the relevant points with bracketed concrete examples. Here's the original:

If I had a viable preliminary Friendly AI research program, aimed largely at doing the technical analysis necessary to determine as well as possible the feasibility and difficulty of Friendly AI for various values of "Friendly" [...]

(emphasis in original)

Replies from: Rain↑ comment by Will_Newsome · 2011-08-21T14:22:53.530Z · LW(p) · GW(p)

What you say might be true, but this one example is negligible compared to the mountain of other evidence concerning inability to read much more important things (which are unrelated to me). I won't give that evidence here.

↑ comment by Kaj_Sotala · 2011-08-21T20:14:20.903Z · LW(p) · GW(p)

Certainly true, but that only means that we need to spend more effort on being as clear as possible.

↑ comment by katydee · 2011-08-21T12:14:46.442Z · LW(p) · GW(p)

If that's indeed the case (I haven't noticed this flaw myself), I suggest that you write articles (or perhaps commission/petition others to have them written) describing this flaw and how to correct it. Eliminating such a flaw or providing means of averting it would greatly aid LW and the community in general.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-21T12:30:43.797Z · LW(p) · GW(p)

Unfortunately that is not currently possible for many reasons, including some large ones I can't talk about and that I can't talk about why I can't talk about. I can't see any way that it would become possible in the next few years either. I find this stressful; it's why I make token attempts to communicate in extremely abstract or indirect ways with Less Wrong, despite the apparent fruitlessness. But there's really nothing for it.

Unrelated public announcement: People who go back and downvote every comment someone's made, please, stop doing that. It's a clever way to pull information cascades in your direction but it is clearly an abuse of the content filtering system and highly dishonorable. If you truly must use such tactics, downvoting a few of your enemy's top level posts is much less evil; your enemy loses the karma and takes the hint without your severely biasing the public perception of your enemy's standard discourse. Please.

(I just lost 150 karma points in a few minutes and that'll probably continue for a while. This happens a lot.)

Replies from: Nisan, katydee, NancyLebovitz, None↑ comment by katydee · 2011-08-21T21:45:23.813Z · LW(p) · GW(p)

I'm not a big fan of the appeal to secret reasons, so I think I'm going to have to pull out of this discussion. I will note, however, that you personally seem to be involved in more misunderstandings than the average LW poster, so while it's certainly possible that your secret reasons are true and valid and Eliezer just sucks at reading or whatever, you may want to clarify certain elements of your own communication as well.

I unfortunately predict that "going more meta" will not be strongly received here.

↑ comment by NancyLebovitz · 2011-08-21T13:55:12.901Z · LW(p) · GW(p)

I'm sorry to hear that you're up against something so difficult, and I hope you find a way out.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-21T14:25:48.205Z · LW(p) · GW(p)

Thank you... I think I just need to be more meta. Meta never fails.

↑ comment by [deleted] · 2011-08-21T13:51:46.716Z · LW(p) · GW(p)

Unfortunately that is not currently possible for many reasons, including some large ones I can't talk about and that I can't talk about why I can't talk about.

Are we still talking about improving general reading comprehension? What could possibly be dangerous about that?

↑ comment by Will_Newsome · 2011-08-19T18:31:36.001Z · LW(p) · GW(p)

(Some people, including me, would really like it if a competent and FAI-focused uber-rationalist non-profit existed. I know people who will soon have enough momentum to make this happen. I am significantly more familiar with the specifics of FAI (and of hardcore SingInst-style rationality) than many of those people and almost anyone else in the world, so it'd be necessary that I put a lot of hours into working with those who are higher status than me and better at getting things done but less familiar with technical Friendliness. But I have many other things I could be doing. Hence the question.)

Replies from: Wei_Dai↑ comment by Wei Dai (Wei_Dai) · 2011-08-19T19:40:13.580Z · LW(p) · GW(p)

Does "FAI-focused" mean what I called code first? What are your thoughts on that post and its followup? What is this new non-profit planning to do differently from SIAI and why? What are the other things that you could be doing?

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-19T20:01:29.274Z · LW(p) · GW(p)

Incomplete response:

Does "FAI-focused" mean what I called code first?

Jah. Well, at least determining whether or not "code first" is even reasonable, yeah, which is a difficult question in itself and only partially tied in with making direct progress on FAI.

What are your thoughts on that post and its followup?

You seem to have missed Oracle AI? (Eliezer's dismissal of it isn't particularly meaningful.) I agree with your concerns. This is why the main focus would at least initially be determining whether or not "code first" is a plausible approach (difficulty-wise and safety-wise). The value of information on that question is incredibly high and as you've pointed out it has not been sufficiently researched.

What is this new non-profit planning to do differently from SIAI and why?

Basically everything. SingInst is focused on funding a large research program and gaining the prestige necessary to influence (academic) culture and academic and political policy. They're not currently doing any research on Friendly AI, and their political situation is such that I don't expect them to be able to do so effectively for a while, if ever. I will not clarify this. (Actually their research associates are working on FAI-related things, but SingInst doesn't pay them to do that.)

What are the other things that you could be doing?

Learning, mostly. Working with an unnamed group of x-risk-cognizant people that LW hasn't heard of, in a way unrelated to their setting up a non-profit.

Replies from: Wei_Dai, bgaesop↑ comment by Wei Dai (Wei_Dai) · 2011-08-22T17:16:07.852Z · LW(p) · GW(p)

They're not currently doing any research on Friendly AI, and their political situation is such that I don't expect them to be able to do so effectively for a while, if ever.

My understanding is that SIAI recently tried to set up a new in-house research team to do preliminary research into FAI (i.e., not try to build an FAI yet, but just do whatever research might eventually be helpful to that project). This effort didn't get off the ground, but my understanding again is that it was because the researchers they tried to recruit had various reasons for not joining SIAI at this time. I was one of those they tried to recruit, and while I don't know what the others' reasons were, mine were mostly personal and not related to politics.

You must also know all this, since you were involved in this effort. So I'm confused why you say SIAI won't be doing effective research on FAI due to its "political situation". Did the others not join SIAI because they thought SIAI was in a bad political situation? (This seems unlikely to me.) Or are you referring to the overall lack of qualified, recruitable researchers as a "political situation"? If you are, why do you think this new organization would be able to do better?

(Or did you perhaps not learn the full story, and thought SIAI stopped this effort for political reasons?)

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-22T19:48:39.642Z · LW(p) · GW(p)

The answer to your question isn't among your list of possible answers. The recent effort to start an in-house research team was a good attempt and didn't fail for political reasons. I am speaking of other things. However I want to take a few weeks off from discussion of such topics; I seem to have given off the entirely wrong impression and would prefer to start such discussion anew in a better context, e.g. one that better emphasizes cooperation and tentativeness rather than reactionary competition. My apologies.

Replies from: Rain, Wei_Dai↑ comment by Rain · 2011-08-23T14:39:47.671Z · LW(p) · GW(p)

I was trying to quickly gauge vague interest in a vague notion.

I won't give that evidence here.

(I won't substantiate that claim here.)

I will not clarify this.

The answer to your question isn't among your list of possible answers.

I find this stressful; it's why I make token attempts to communicate in extremely abstract or indirect ways with Less Wrong, despite the apparent fruitlessness. But there's really nothing for it.

If you can't say anything, don't say anything.

Replies from: Will_Newsome↑ comment by Will_Newsome · 2011-08-23T19:48:49.006Z · LW(p) · GW(p)

It's a good heuristic, but can be very psychologically difficult. E.g. if you think that not even trying to communicate will be seen as unjustified in retrospect even if people should know that there was no obvious way for you to communicate. This has happened enough to me that the thought of just giving up on communication is highly aversive; my fear of being blamed for not preventing others from taking unjustified actions (that will cause me, them, and the universe counterfactually-needless pain) is too great. But whatever, I'm starting to get over it.

Like, I remember a pigeon dying... people dying... a girl who starved herself... a girl who cut herself... a girl who wanted to commit suicide... just, trust me, there are reasons that I'm afraid. I could talk about those reasons but I'd rather not. It's just, if you don't even make a token gesture it's like you don't even care at all, and it's easier to be unjustified in a way that can be made to look sorta like caring than in a way that looks like thoughtlessness or carelessness.

(ETA: A lot of the time when people give me or others advice I mentally translate it to "The solution is simple, just shut up and be evil.".)

↑ comment by Wei Dai (Wei_Dai) · 2011-08-23T18:13:41.536Z · LW(p) · GW(p)

I have no particular attachments to SIAI and would love to see a more effective Singularitarian organization formed if that were possible. I'm just having genuine trouble understanding why you think this new proposed organization will be able to do more effective FAI research. Perhaps you could use these few weeks off to ask some trusted advisors how to better communicate this point. (I understand you have sensitive information that you can't reveal, but I'm guessing that you can do better even within that constraint.)

Replies from: Will_Newsome, timtyler↑ comment by Will_Newsome · 2011-08-23T19:43:49.117Z · LW(p) · GW(p)

Perhaps you could use these few weeks off to ask some trusted advisors how to better communicate this point.

This is exactly the strategy I've decided to enact, e.g. talking to Anna. Thanks for being... gentle, I suppose? I've been getting a lot of flak lately; it's nice to get some non-insulting advice sometimes. :)

(Somehow I completely failed to communicate the tentativeness of the ideas I was throwing around; in my head I was giving it about a 3% chance that I'd actually work on helping build an organization but I seem to have given off an impression of about 30%. I think this caused everyone's brains to enter politics mode, which is not a good mode for brains to be in.)

↑ comment by timtyler · 2011-08-23T20:21:58.455Z · LW(p) · GW(p)

I have no particular attachments to SIAI and would love to see a more effective Singularitarian organization formed if that were possible.

It's rather strange how secretive the SIAI is. Military projects are secretive, and commercial projects are secretive; alas, so few value transparency. An open project would surely do better, through being more obviously trustworthy and accountable, being better able to use talent across the internet, etc. I figure if the SIAI persists in not getting to grips with this issue, some other organisation will.

↑ comment by novalis · 2011-08-19T20:28:26.707Z · LW(p) · GW(p)

Well, looking at the post on SIAI finances from a few months back, SIAI's annual revenue is growing at a rate of roughly 100k/year, and thus would take nearly a century to reach 10 million / year. Of course, all sorts of things could change these numbers. Eliezer has stated that he believes that UFAI will happen somewhat sooner than a century.

Since SIAI does seem to have at least some unused budget for programmers now, I emailed a friend who might be a good fit for a research associate to suggest that he apply.

Replies from: Will_Sawin↑ comment by Will_Sawin · 2011-08-22T17:29:58.176Z · LW(p) · GW(p)

Even if past values are linear, exponential estimates are probably more clarifying.
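To make the contrast between the two extrapolations concrete, here is a minimal sketch. The thread gives only the yearly increase (roughly 100k) and the target (10 million per year); the starting revenue of 500k is a hypothetical figure chosen for illustration, and the exponential case simply assumes that the first year's proportional growth continues.

```python
import math


def years_linear(current, target, yearly_increase):
    """Years to reach target if revenue rises by a fixed amount each year."""
    return (target - current) / yearly_increase


def years_exponential(current, target, growth_rate):
    """Years to reach target if revenue grows by a fixed fraction each year."""
    return math.log(target / current) / math.log(1 + growth_rate)


# Hypothetical starting revenue: the thread does not state this figure.
current = 500_000
target = 10_000_000
increase = 100_000  # "roughly 100k/year", as stated in the comment above

linear = years_linear(current, target, increase)
# Assumption: the same first-year growth, read as a rate (100k / 500k = 20%).
exponential = years_exponential(current, target, increase / current)

print(f"linear: {linear:.0f} years, exponential: {exponential:.1f} years")
```

Under these assumed inputs the linear model gives about 95 years and the exponential model about 16, so the choice of model matters far more than the exact starting figure.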

↑ comment by Will_Newsome · 2011-08-19T17:00:24.480Z · LW(p) · GW(p)

(ETA: This isn't the right place for what I wrote.)

↑ comment by lessdazed · 2011-08-20T11:10:41.428Z · LW(p) · GW(p)

It seems to me that with continuing support, SIAI will be able to hire as many of the right programmers as we can find and effectively integrate into a research effort.

ADBOC. SIAI should never be content with any funding level. It should get the resources to hire, bribe or divert people who may otherwise make breakthroughs on UAI, and set them to doing anything else.

Replies from: MichaelVassar↑ comment by MichaelVassar · 2011-08-27T16:04:36.580Z · LW(p) · GW(p)

That intuition seems to me to follow from the probably false assumption that if behavior X would, under some circumstances, be utility maximizing, it is also likely to be utility maximizing to fund a non-profit to engage in behavior X. SIAI isn't a "do what seems to us to maximize expected utility" organization because such vague goals don't make for a good organizational culture. Organizing and funding research into FAI and research inputs into FAI, plus doing normal non-profit fund-raising and outreach, that's a feasible non-profit directive.

Replies from: lessdazed↑ comment by lessdazed · 2011-08-27T23:02:07.795Z · LW(p) · GW(p)

It also follows from the assumption that the claims in any comment submitted on August 20, 2011 are true. Yet I do not believe this.

I had, to the best of my ability, considered the specific situation when giving my advice.

Any advice can be dismissed by suggesting it came from a too generalized assumption.

If you thought someone was about to foom an unfriendly AI, you would do something about it, and without waiting to properly update your 501(c) forms.

comment by TobyBartels · 2011-08-20T12:42:00.543Z · LW(p) · GW(p)

I like this post, but I think that it suffers from two things that make it badly written: