Boundaries-based security and AI safety approaches

post by Allison Duettmann (allison-duettmann) · 2023-04-12 · 2 comments

[This is part 3 of a 5-part sequence on security and cryptography areas relevant for AI safety, published and linked here a few days apart. Edit: There is now a more in-depth version of this post available here: Digital Defense]

There is a long-standing computer security approach that may have directly useful parallels to a recent strand of AI safety work. Both rely on the notion of ‘respecting boundaries’. Since the computer security approach has been around for a while, there may be useful lessons to draw from it for the more recent AI safety work. Let's start with AI safety, then introduce the security approach, and finish with parallels.

AI safety: Boundaries in The Open Agency Model and the Acausal Society

In a recent LW post, The Open Agency Model, Eric Drexler expands on his previous CAIS (Comprehensive AI Services) work by introducing ‘open agencies’ as a model for AI safety. In contrast to the often-proposed opaque or unitary agents, “agencies rely on generative models that produce diverse proposals, diverse critics that help select proposals, and diverse agents that implement proposed actions to accomplish tasks”, subject to ongoing review and revision.

In An Open Agency Architecture for Safe Transformative AI, Davidad expands on Eric Drexler’s model, suggesting that, instead of optimizing, this model would ‘depessimize’ by reaching a world that has existential safety. So rather than a fully fledged AGI-enforced optimization scenario that implements all principles CEV (Coherent Extrapolated Volition) would endorse, this would be a more modest approach that relies on important boundaries (including those of human and AI entities) being respected.

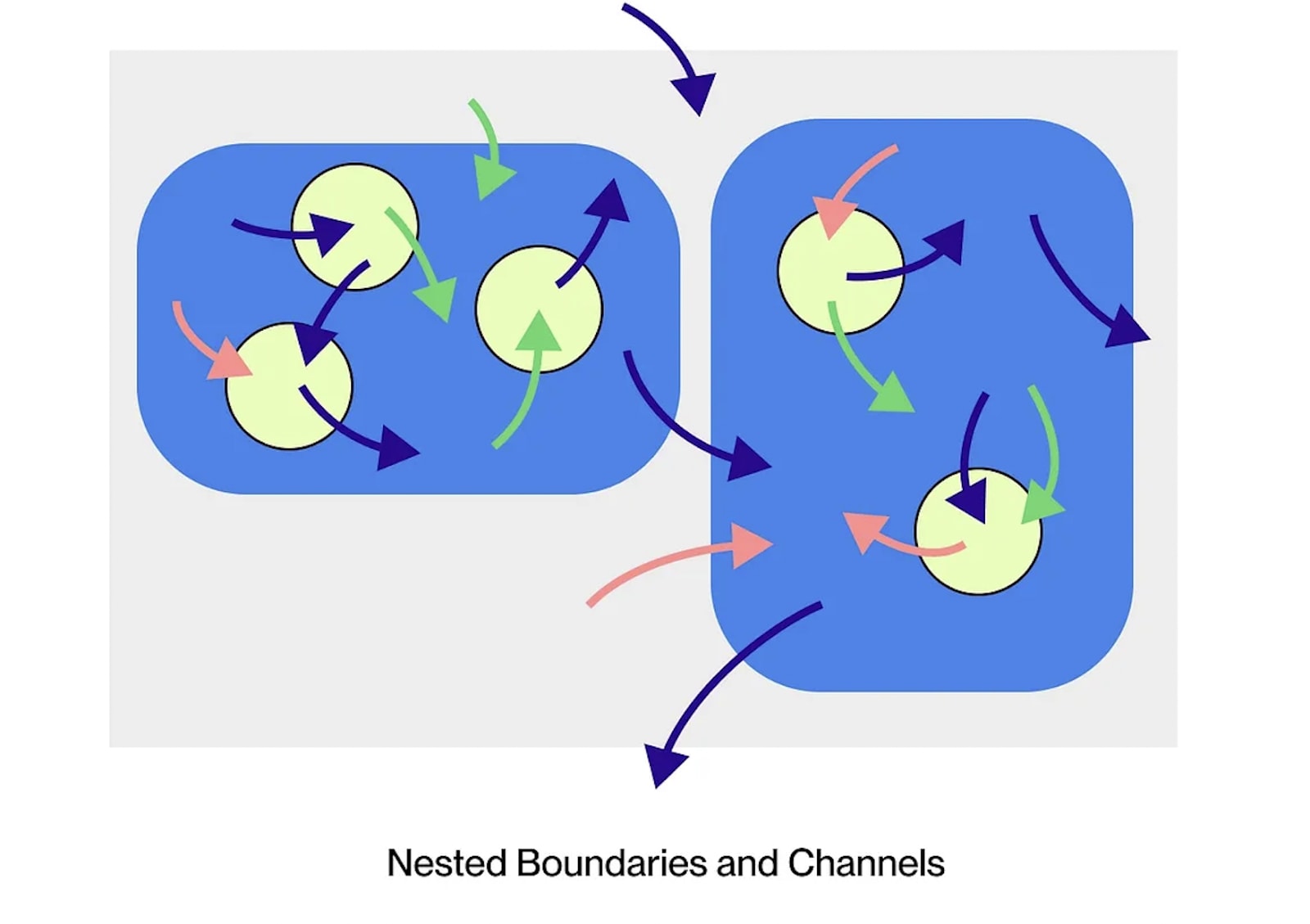

What could it mean to respect the boundaries of human and AI entities? In Acausal Normalcy, Andrew Critch also discusses respecting boundaries in the context of coordination in an acausal society. He thinks it’s possible that an acausal society generally holds values related to respecting boundaries. He defines ‘boundaries’ as the approximate causal separation of regions, either in physical spaces (such as spacetime) or in abstract spaces (such as cyberspace). Intuitively, respecting a boundary means relying on the consent of the entity on the other side when interacting with it: using only causal channels that were endogenously opened, that is, opened from within the boundary.

His examples of currently used boundaries include a person's skin, which separates the inside of their body from the outside; a fence around a family's yard, which separates their property from their neighbors'; a firewall, which separates a LAN and its users from the rest of the internet; and a sustained disassociation between two social groups, which keeps the groups apart. In his Boundaries Sequence, Andrew Critch develops formal definitions of boundaries intended to generalize to very different intelligences.

If the concept of respecting boundaries is in fact universally salient across intelligences, then it may be possible to help AIs discover and respect the boundaries humans find important (and potentially vice versa).

Computer security: Boundaries in the Object Capabilities Approach

Pursuing a similar idea, in Skim the Manual, Christine Peterson, Mark S. Miller, and I reframe the AI alignment problem as a secure cooperation problem across human and AI entities. Throughout history, we have developed norms for human cooperation that emphasize respecting physical boundaries, for instance by not inflicting violence, and cognitive boundaries, for instance by relying on informed consent. We have also developed approaches for computational cooperation that emphasize respecting boundaries in cyberspace. For instance, in object-capabilities-oriented programming, individual computing entities are encapsulated so that they cannot interfere with the contents of other objects.

The fact that respecting boundaries is already leveraged to facilitate today’s human interactions and computing interactions provides some hope that it may apply to cooperation with more advanced AI systems, too. In particular, object-capabilities-oriented programming may provide a useful precedent for how boundaries are already dealt with in existing computing systems. Here is an intro to the approach from Defend Against Cyber Threats:

According to Herbert Simon, complex adaptive systems, whether natural or artificial, exhibit a somewhat hierarchical structure. Humans, for example, are composed of systems at multiple levels of granularity, including organelles, cells, organs, organisms, and organizations. Similarly, software systems are composed of subsystems at various nested granularities, such as functions, classes, modules, processes, machines, and services.

Each subsystem operates independently while also interacting with others to achieve a common goal. Boundaries between them facilitate desired interactions while blocking unwanted interference. In object-capabilities-oriented programming, encapsulation ensures that one object cannot directly read or tamper with the contents of another, while communication lets objects exchange messages that each recipient handles according to its own internal logic. Together, encapsulation and communication ensure that communication rights are controlled and transferable, but only through the internal logic of the entity receiving the request. In human terms, one could say that requests are fulfilled by consent.
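To make the pairing of encapsulation and communication more concrete, here is a minimal sketch in Python. Python is not a capability-safe language (introspection can reach into closures), so this only models the discipline by convention; `make_mailbox`, `MailboxCaps`, `send`, and `read` are hypothetical names, not part of any object-capability system. The message list is reachable only through the two functions returned, and the mailbox's own logic decides whether to honor each request.

```python
from typing import Callable, NamedTuple


class MailboxCaps(NamedTuple):
    send: Callable[[str], bool]
    read: Callable[[], list[str]]


def make_mailbox(capacity: int = 3) -> MailboxCaps:
    messages: list[str] = []  # encapsulated: reachable only via the closures below

    def send(text: str) -> bool:
        # The mailbox's own logic decides whether to honor each request.
        if not text or len(messages) >= capacity:
            return False
        messages.append(text)
        return True

    def read() -> list[str]:
        return list(messages)  # a copy, so callers cannot tamper with the original

    return MailboxCaps(send=send, read=read)


caps = make_mailbox()
friend_can_send = caps.send  # share only the right to send, not the right to read
friend_can_send("birthday wishes")
print(caps.read())  # ['birthday wishes']
```

Returning two separate functions, rather than one object with both, is what lets the owner hand out the right to send without also handing out the right to read.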

Principle of Least Authority and delegatable rights

Requests form a significant portion of the traffic across boundaries in both human markets and software systems. In computing terms, a database query processor can ask an array to produce a sorted copy of itself. In human terms, a person can ask a package delivery service to deliver a birthday gift to their father. Requests allow for collaboration, with entities composing their specialized knowledge to achieve greater intelligence.

However, every request-making channel has its own risks. In human terms, granting a package delivery service access to one's house could result in theft. To limit such risks, the Principle of Least Authority (POLA) dictates that a request receiver should be granted only the rights sufficient to carry out the specific request. For instance, giving the delivery agent a specific package, rather than full access to one's house, reduces the risk from general theft to a damaged or lost package.
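The package example can be sketched in Python to contrast a least-authority request with an over-privileged one. This is an illustration under assumptions: `House`, `Package`, `delivery_agent`, and `dishonest_agent_with_house_key` are hypothetical, and holding a Python reference stands in for holding a capability.

```python
from dataclasses import dataclass


@dataclass
class Package:
    contents: str
    destination: str
    delivered: bool = False


class House:
    """Hypothetical home: a reference to it grants access to everything inside."""

    def __init__(self, valuables: list[str], gift: Package):
        self.valuables = valuables
        self.gift = gift


def delivery_agent(package: Package) -> Package:
    # POLA: the agent receives exactly the authority this request needs,
    # a reference to one package. At worst, that one package is lost.
    package.delivered = True
    return package


def dishonest_agent_with_house_key(house: House) -> list[str]:
    # Anti-pattern: handed the whole house, the agent can deliver the gift
    # and also empty the house, since nothing limits it to the gift.
    house.gift.delivered = True
    stolen = list(house.valuables)
    house.valuables.clear()
    return stolen


home = House(valuables=["laptop", "jewelry"], gift=Package("book", "Dad"))
delivery_agent(home.gift)  # pass only the package, not the house
print(home.gift.delivered, home.valuables)  # True ['laptop', 'jewelry']
```

Both agents complete the delivery; the difference lies entirely in what each could have done with the reference it was given.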

In object-capability systems, delegatable rights grant entities the ability to exercise a right as well as the ability to delegate it further. These rights are communicated through requests, which not only convey the purpose of the request but also give the receiver enough authority to carry out that specific request. In object language terms, possession of a pointer grants the use of the object it points to, and pointers are passed as arguments in requests sent to other objects. In human terms, a clerk given a package as part of a request can deliver it or delegate it to a delivery agent. Each step adds meaning to the request and grants the receiver permission to use the pointed-to object to fulfill the request.
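The clerk-to-agent chain can be modeled as passing the same object reference along with each request. The sketch below is a hypothetical Python illustration, not an implementation from an object-capability language: `Clerk`, `DeliveryAgent`, and the address lookup are invented for the example, and `history` simply records who exercised the right.

```python
from dataclasses import dataclass, field


@dataclass
class Package:
    contents: str
    history: list[str] = field(default_factory=list)  # who handled it, in order


class DeliveryAgent:
    def __init__(self, name: str):
        self.name = name

    def deliver(self, package: Package, address: str) -> str:
        # Holding the package reference is what lets the agent act on it.
        package.history.append(f"{self.name} delivered to {address}")
        return address


class Clerk:
    def __init__(self, name: str, agent: DeliveryAgent):
        self.name = name
        self._agent = agent  # a reference the clerk may delegate to

    def ship(self, package: Package, recipient: str) -> str:
        # The request arrives with the package reference as an argument.
        # The clerk adds meaning (recipient -> address) and delegates by
        # passing the same reference on to the agent.
        package.history.append(f"{self.name} accepted for {recipient}")
        address = f"{recipient}'s home"  # hypothetical address lookup
        return self._agent.deliver(package, address)


gift = Package("birthday gift")
clerk = Clerk("clerk", DeliveryAgent("courier"))
clerk.ship(gift, "father")
print(gift.history)
# ['clerk accepted for father', "courier delivered to father's home"]
```

Note that the agent never receives anything beyond the package and an address; the authority it gains is exactly what the clerk chose to pass along.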

Abstractions enable higher composability

Boundaries give objects the independence required to address smaller components of a problem and the ability to cooperate required to combine their knowledge into greater problem-solving ability. Composability is crucial in this process. Unlike in foundational mathematics, programming allows higher-order predicates to operate on other higher-order predicates without restriction or stratification. This enables the creation of abstraction layers atop other abstraction layers, which can manipulate objects and be manipulated by them. This generic parameterization is what lets us develop increasingly complex ecosystems of objects interacting with one another.
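Because Python functions are first-class values, a function that builds abstraction layers can take and return other layers without any stratification by "level". The following is a minimal sketch with hypothetical names (`Service`, `Layer`, `stack`, `tagged`, `echo`); the key point is that `stack` returns a value of the same type it consumes, so a stack can serve as one layer inside another stack.

```python
from functools import reduce
from typing import Callable

Service = Callable[[str], str]        # something that answers requests
Layer = Callable[[Service], Service]  # a function from services to services


def stack(*layers: Layer) -> Layer:
    # Combine layers into a single layer (the first one listed is outermost).
    # The result is itself a Layer, so stacks can be nested inside stacks.
    return lambda service: reduce(lambda s, layer: layer(s), reversed(layers), service)


def tagged(tag: str) -> Layer:
    # A layer factory: the returned layer wraps each response in tag(...).
    return lambda service: (lambda request: f"{tag}({service(request)})")


def echo(request: str) -> str:
    return request


inner = stack(tagged("b"), tagged("c"))
outer = stack(tagged("a"), inner)  # a stack used as one layer of another stack
print(outer(echo)("hi"))  # a(b(c(hi)))
```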

In computer terms, APIs orchestrate sub-programs with specialized knowledge and integrate them into a more comprehensive system with improved problem-solving abilities. In human terms, we can think of institutions as abstraction mechanisms. The abstraction of "deliver this package" insulates the package delivery service from needing to know a client's motivation and insulates the client from needing to know the logistics of the delivery service. This enables reuse of the ‘package delivery service’ concept across different providers and customers, and all parties know the API-like ritual to follow.
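The "deliver this package" abstraction can be sketched as an interface with interchangeable providers. In this hypothetical Python example, `DeliveryService` is a `typing.Protocol`, and `BikeCourier`, `PostalService`, and `send_birthday_gift` are invented for illustration; the client code knows only the `deliver` ritual, and each provider keeps its logistics internal.

```python
from typing import Protocol


class DeliveryService(Protocol):
    """The API-like ritual: all a client needs to know about any provider."""

    def deliver(self, item: str, recipient: str) -> str: ...


class BikeCourier:
    def deliver(self, item: str, recipient: str) -> str:
        # Internal logistics stay hidden behind the interface.
        route = ["depot", "bike lane", recipient]
        return f"{item} to {recipient} via {' -> '.join(route)}"


class PostalService:
    def deliver(self, item: str, recipient: str) -> str:
        sorting_centers = 2  # hypothetical internal detail
        return f"{item} to {recipient} after {sorting_centers} sorting centers"


def send_birthday_gift(service: DeliveryService, recipient: str) -> str:
    # The client never learns how delivery works; the service never learns why.
    return service.deliver("birthday gift", recipient)


for provider in (BikeCourier(), PostalService()):
    print(send_birthday_gift(provider, "Dad"))
```

Because `Protocol` uses structural typing, providers need not inherit from a common base class: any object with a matching `deliver` method fits, much as any company that follows the delivery ritual can serve any customer.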

Abstractions are useful because they hide unnecessary information. The package clerk doesn’t need to understand all the reasons someone might send a package. The customer seeking to send a package doesn't need to understand the processes involved in sending it. Just as the clerk and the customer want to cooperate but rely on the package-service abstraction to determine how, in object-oriented programming, abstract interfaces (APIs) specify how concrete objects can request information from other concrete objects. Well-designed systems compose local knowledge.

This mechanism is quite general: it appears in computer systems that use more knowledge than any single sub-component could, in systems that can be considered intelligent, in market processes, institutions, and large-scale human organizations, and even in our entire civilization. Civilization comprises a network of entities with specialized knowledge, making requests of entities with differing specializations. Much like object-oriented programming creates an intelligent system by coordinating its specialist members, human institutions have evolved to enhance civilization's adaptive intelligence by orchestrating its member intelligences. Essentially, we are the objects within human society's problem-solving machine, which accounts for far more knowledge than any single individual could possibly possess.

Boundaries: From today’s computing entities to tomorrow’s AI entities

If stable boundaries are fundamental to today's human and computer cooperation, how can we think about the evolution of boundaries with respect to advanced AI entities? In Racing Where?, we suggest that the existence of boundaries between request-making and request-receiving parties means that selection pressures can act on both sides. As systems evolve and expand, local knowledge is combined in smarter ways. Crucially, boundaries allow for independent innovation on both sides of the boundary, which may lead to the development of new coordination mechanisms in the future. We mostly establish an arrangement that sets initial conditions, leaving the outcome open to future knowledge.

One interesting open question is how to extend Andrew Critch’s boundary formalism introduced above to model ‘boundary protocols’ such as APIs, verbal consent, and administrator authentication. Ideas from the semantics of object-capability systems could prove fruitful here. While the ultimate evolution of boundaries for future human-AI cooperation may be beyond our current understanding, since they are continually renegotiated, object-oriented programming may offer useful lessons for setting up initial conditions that make boundary renegotiation possible.

[This is part 3 of a 5-part sequence on security and cryptography areas relevant for AI safety. Part 4 will be published and linked here in a few days.]

2 comments


comment by Chipmonk · 2023-05-04

I've compiled all of the current «Boundaries» x AI safety thinking and research (like this post) that I could find here: «Boundaries» and AI safety compilation.

(E.g.: Davidad connected this post to moral patienthood on twitter)

comment by baturinsky · 2023-04-12

I'm wondering if it is possible to measure "staying in bounds" with the perplexity of other agents' predictions? That is, if an agent's behaviour reduces other agents' ability to predict (and, therefore, plan) their future, then this agent breaks their bounds.