Escape Velocity from Bullshit Jobs

post by Zvi · 2023-01-10T14:30:00.828Z · LW · GW · 18 comments

Contents: The Dilemma · Two Models of the Growth of Bullshit · A Theory of Escape Velocity · Shoveling Bullshit · Replacing Bullshit · Passing Versus Winning · Speed Premium

Without speculating here on how likely this is to happen, suppose that GPT-4 (or some other LLM or AI) speeds up, streamlines or improves quite a lot of things. What then?

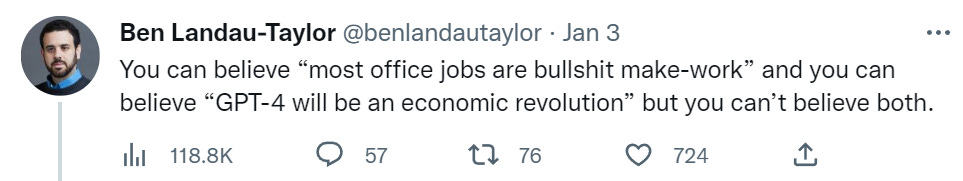

The Dilemma

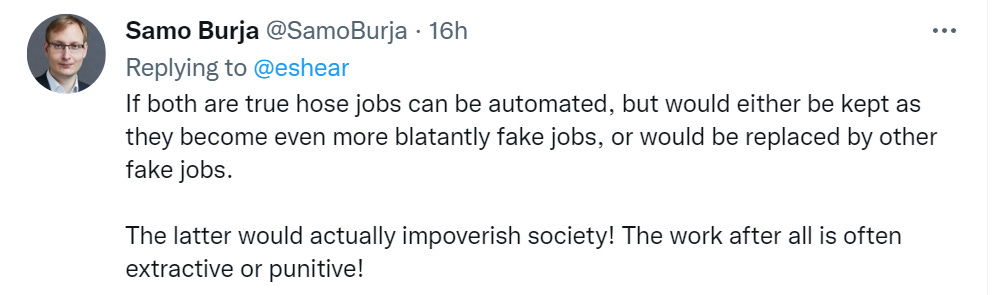

Samo and Ben’s dilemma: To the extent that the economy is dominated by make-work, automating it away won’t work because more make-work will be created, and any automated real work gets replaced by new make-work.

Consider homework assignments. ChatGPT lets students skip make-work. System responds by modifying conditions to force students to return to make-work. NYC schools banned ChatGPT.

Consider a bullshit office job. You send emails and make calls and take meetings and network to support inter-managerial struggles and fulfill paperwork requirements and perform class signaling to make clients and partners feel appreciated. You were hired in part to fill out someone’s budget. ChatGPT lets you compose your emails faster. They (who are they?) assign you to more in-person meetings and have you make more phone calls and ramp up paperwork requirements.

The point of a bullshit job is to be a bullshit job.

There is a theory that states that if you automate away a bullshit job, it will be instantly replaced by something even more bizarre and inexplicable.

There is another theory that states this has already happened.

Automating a real job can even replace it with a bullshit job.

This argument applies beyond automation. It is a full Malthusian economic trap: Nothing can increase real productivity.

Bullshit eats all.

Eventually.

Two Models of the Growth of Bullshit

- Samo’s Law of Bullshit: Bullshit rapidly expands to fill the slack available.

- Law of Marginal Bullshit: There is consistent pressure in favor of marginally more bullshit. Resistance is inversely proportional to slack.

In both cases, the lack of slack eventually collapses the system.

In the second model, increased productivity buys time, and can do so indefinitely.

Notice how good economic growth feels to people. This is strong evidence for lags, and for the ability of growth and good times to outpace the problems.
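One way to see the difference between the two models is a toy simulation. This is a minimal sketch under assumptions that are not in the post: capacity grows by a fixed `growth` share each step, and bullshit absorbs a fixed `fill_rate` share of whatever slack currently exists. A `fill_rate` near 1 stands in for Samo’s Law, a small one for the Law of Marginal Bullshit. The function name and both parameters are hypothetical.

```python
def slack_fraction(growth, fill_rate, steps=200):
    """Share of capacity still free of bullshit after `steps` steps (toy model).

    Assumed rules, for illustration only:
    - capacity grows by `growth` each step (productivity gains)
    - bullshit absorbs a `fill_rate` share of the current slack each step
    """
    capacity, bullshit = 1.0, 0.0
    for _ in range(steps):
        slack = capacity - bullshit
        bullshit += fill_rate * slack   # bullshit eats part of the slack
        capacity *= 1 + growth          # productivity keeps expanding capacity
    return (capacity - bullshit) / capacity


# With zero growth, slack drains away under either model.
print(slack_fraction(growth=0.0, fill_rate=0.05))
# With steady growth, slow-filling bullshit leaves lasting slack...
print(slack_fraction(growth=0.03, fill_rate=0.05))
# ...while fast-filling bullshit leaves very little.
print(slack_fraction(growth=0.03, fill_rate=0.9))
```

In this toy version the slack share settles near `growth / (growth + fill_rate)`, so sustained growth buys lasting room only when bullshit fills slack slowly.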

A Theory of Escape Velocity

We escaped the original Malthusian trap with the Industrial Revolution, expanding capacity faster than the population could grow. A sufficient lead altered underlying conditions to the point where we should worry more about declining population than rising population in most places.

Consider the same scenario for a potential AI Revolution via GPT-4.

Presume GPT-4 allows partial or complete automation of a large percentage of existing bullshit jobs. What happens?

My model says this depends on the speed of adaptation.

Shoveling Bullshit

Can improvements outpace the bullshit growth rate?

A gradual change over decades likely gets eaten up by gradual ramping up of requirements and regulations. A change that happens overnight is more interesting.

How fast can bullshit requirements adapt?

The nightmare is ‘instantaneously.’ A famous disputed claim is that the NRC defined a ‘safe’ nuclear power plant as one no cheaper than alternative plants. Cheaper meant you could afford to Do More Safety. Advancements are useless.

Most regulatory rules are not like that. Suppose the IRS requires 100 pages of paperwork per employee. This used to take 10 hours. Now with GPT-4, as a thought experiment, let’s say it takes 1 hour.

The long run result might be 500 pages of more complicated paperwork that takes 10 hours even with GPT-4, while accomplishing nothing. That still will take time. It is not so easy or fast to come up with 400 more pages. I’d assume that would take at least a decade. It likely would need to wait until widespread adoption of AI-powered tools, or it would bury those without them.

Meanwhile, GPT-5 comes out. Gains compound. It seems highly plausible this can outpace the paperwork monster.
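The race can be sketched with toy numbers. The 100 pages, 10 hours by hand, 1 hour with AI, and the 500-page, 10-hour end state come from the thought experiment above. Everything else is an assumption made for illustration: that page count and per-page complexity ramp linearly over ten years, and that tools get a fixed `yearly_speedup` factor faster each year.

```python
def hours_per_employee(year, yearly_speedup=1.5):
    """Paperwork hours per employee in a given year (toy model).

    From the thought experiment: 100 pages take 1 hour with first-generation
    AI tools, and the requirement eventually reaches 500 pages that would
    take 10 hours with those same tools.
    Assumed for illustration: pages and per-page complexity ramp linearly
    over ten years; tools get `yearly_speedup` times faster each year.
    """
    ramp = min(year, 10) / 10
    pages = 100 + 400 * ramp            # 100 pages -> 500 pages
    complexity = 1 + ramp               # pages become up to 2x harder
    ai_hours_per_page = 1 / 100         # 1 hour per 100 pages at year 0
    tool_speed = yearly_speedup ** year
    return pages * complexity * ai_hours_per_page / tool_speed


for year in (0, 5, 10):
    print(year, round(hours_per_employee(year), 3))
```

With `yearly_speedup=1.0` (tools never improve) the model reproduces the 10-hour end state. Any sustained speedup above roughly 26% per year keeps the year-10 burden below the original hour, since the requirement grows tenfold over the decade.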

This applies generally to places where a specified technical requirement, or paperwork, is needed. Or in places where otherwise the task is well-specified and graded on a pass/fail basis. Yes, the bar can and will be raised. No, if AI delivers the goods in full, Power’s requirements can’t and won’t be raised high enough or fast enough to keep pace.

Replacing Bullshit

If the bullshit and make-work needs to keep pace, it has options.

- Ban or regulate the AI, or use of the AI.

- Find different bullshit requirements that the AI can’t automate.

- Impose relative bullshit requirements, as in the nuclear power case.

Option 1 does not seem promising. Overall AI access likely can’t be policed.

Option 3 works in some situations and not others, as considered below.

Option 2 seems promising. Would likely be in person. Phones won’t work.

New in-person face-time bullshit tasks could replace old bullshit tasks. This ensures bullshit is performed, bullshit jobs are maintained, costly signals are measured and intentionally imposed frictions are preserved.

I expect this would increasingly be the primary way we impose relative bullshit requirements. When there is a relative requirement, things can’t improve. Making positional goods generally more efficient does not work.

Same goes for intentional cost impositions. Costs imposed in person are much harder to pay via AI.

Thus, such costs move more directly towards pure deadweight losses.

When things are not competitive, intentional or positional, I would not expect requirements to ramp up quickly enough to keep pace. Where this is attempted, the gap between the bullshit crippled versions and the freed versions will be very large. Legal coercion would be required, and might not work. If escape is achieved briefly, it will be hard to put that genie back in the bottle.

One tactic will be to restrict use of AI to duplicate work to those licensed to do the work. This will be partly effective at slowing down such work, but the work of professionals will still accelerate, shrinking the pool of such professionals will be a slow process at best, and it is hard to restrict people doing the job for themselves where AI enables that.

Practicalities, plausibility and the story behind requirements all matter. Saying ‘humans prefer interacting with humans’ is not good enough, as callers to tech support know well. Only elite service and competition can pull off these levels of inefficiency.

Passing Versus Winning

It will get easier to pass a class or task the AI can help automate, unless the barrier for passing can be raised via introducing newly required bullshit in ways that stick.

Notice that the main thing you do to pass in school is to show up and watch your life end one minute at a time. Expect more of that, in more places.

It won’t get easier to be head of the class. To be the best.

Harvard is going to take the same number of students. If the ability of applicants to look good is supercharged for everyone, what happens? Some aspects are screened off, making others more important, requiring more red queen’s races. Other aspects have standards that go way up to compensate for the new technology.

Does this make students invest more or less time in the whole process? If returns to time in some places decline, less time gets invested in those places. Then there are clear time sinks that would remain, like putting in more volunteer hours, to eat up any slack. My guess is no large change.

What about a local university? What if the concern is ‘are you good enough?’ not ‘are you the best?’ If it now takes less human time to get close enough to the best application one can offer, this could indeed be highly welfare improving. The expectations and requirements for students will rise, but not enough to keep pace.

Attending the local university could get worse. If what cannot be faked is physical time in the classroom, such requirements will become increasingly obnoxious, and increasingly verified.

The same applies to bullshit jobs. For those stuck with such jobs, ninety percent of life might again be showing up. If AI makes much remote work too easy, that work risks ceasing to do its real task.

Speed Premium

It comes down to: If they do happen, can the shifts described above happen fast enough, before they are seen as absurd, the alternative models become too fully developed, and people too acclimated to them, to be shut down, and growth becomes self-sustaining?

If this all happens at the speed its advocates claim, then the answer is clearly yes.

Do I believe it? I mostly want to keep that question outside scope, but my core answer so far, based on my own experiences and models, is no. I am deeply skeptical of those claims, especially for the speed thereof. Nostalgebraist’s post here illustrates a lot of the problems. Also see this thread.

Still, I can’t rule out things developing fast enough. We shall see.

18 comments


comment by Ratios · 2023-01-10T15:15:44.486Z · LW(p) · GW(p)

A bit beside the point, but I'm a bit skeptical of the idea of bullshit jobs in general. From my experience, many times, people describe jobs that have illegible or complex contributions to the value chain as bullshit, for example, investment bankers (although efficient capital allocation has a huge contribution) or lawyers as bullshit jobs.

I agree governments have a lot of inefficiency and superfluous positions, but I wonder how big bullshit jobs really are as a % of GDP.

↑ comment by Gordon Seidoh Worley (gworley) · 2023-01-12T05:58:51.831Z · LW(p) · GW(p)

Agreed. I think the two theories of bullshit jobs miss how bullshit comes into existence.

Bullshit is actually just the fallout of Goodhart's Curse.

(note: it's possible this is what Zvi means by 2 but he's saying it in a weird way)

You start out wanting something, like to maximize profits. You do everything reasonable in your power to increase profits. You hit a wall and don't realize it and keep applying optimization. You throw more resources after marginally worse returns until you start actively making things worse by trying to earn more.

One of the consequences of this is bullshit jobs.

Let me give you an example. Jon works for a SaaS startup. His job is to maximize reliability. The product is already achieving 3 9s, but customers want 4 because they have some vague sense that more is better and your competitor is offering 4. Jon knows that going from 3 to 4 will 10x COGS and tells the executives as much, but they really want to close those deals. Everyone knows 3 9s is actually enough for the customers, but they want 4 so Jon has to give it to them because otherwise they can't close deals.

Now Jon has to spend on bullshit. He quadruples the size of his team and they start building all kinds of things to eke out more reliability. In order to pull this off they make tradeoffs that slow down product development. The company is now able to offer 4 9s and close deals, but suffers deadweight loss from paying for 4 9s when customers only really need 3 (if only customers would understand they would suffer no material loss by living with 3).
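For a sense of scale, here is a quick sketch of what the two targets allow, assuming "3 9s" and "4 9s" mean 99.9% and 99.99% availability measured over a 365-day year. The function name is hypothetical, and the measurement window is an assumption that real contracts may define differently.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year


def downtime_hours_per_year(nines):
    """Allowed downtime per year for an availability of `nines` nines.

    3 nines = 99.9% availability, so 0.1% of the year may be downtime.
    """
    return HOURS_PER_YEAR * 10 ** (-nines)


print(downtime_hours_per_year(3))        # hours per year at 3 nines
print(downtime_hours_per_year(4) * 60)   # minutes per year at 4 nines
```

Under those assumptions the gap is between roughly nine hours and under one hour of downtime per year.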

This same story plays out in every function across the company. Marketing and sales are full of folks chasing deals that will never pay back their cost of acquisition. HR and legal are full of folks protecting the company against threats that will never materialize. Support is full of reps who help low revenue customers who end up costing the company money to keep on the books. And on and on.

By the time anyone realizes, the company has suffered several quarters of losses. They do a layoff, restructure, and refocus on the business that's actually profitable. Everyone is happy for a while, but then they demand more growth, restarting the business cycle.

Bullshit jobs are not mysterious. They are literally just Goodharting.

Thus, we should not expect them to go away thanks to AI unless all jobs go away, we should just expect them to change, though I think not in the way Zvi expects. Bullshit doesn't exist for its own sake. Bullshit exists due to Goodharting. So bullshit will change to fit the context of where humans are perceived to provide additional value. The bullshit will continue up until some point at which humans are completely unneeded by AI.

Replies from: Dagon, gworley

↑ comment by Dagon · 2023-01-12T18:21:17.212Z · LW(p) · GW(p)

So the question is "(when) will AI start to help top management reduce goodharting, by expanding the complexity that managers can use in modeling their business decisions and sub-organization incentives"? If we can IDENTIFY deals that won't pay back their cost of acquisition, we can stop counting them as growth, and stop doing them.

Whether this collapses or expands the overall level of production (not finance, but actual human satisfaction by delivering stuff and performing services) is very hard to predict.

↑ comment by Gordon Seidoh Worley (gworley) · 2023-01-12T17:11:03.317Z · LW(p) · GW(p)

Additionally, bullshit gets worse the more you try to optimize because you start putting worse optimizers in charge of making decisions. I think this is where the worst bullshit comes from: you hire people who just barely know how to do their jobs, they hire people who they don't actually need because hiring people is what managers are supposed to do, they then have to find something for them to do. They playact at being productive because that's what they were hired to do, and the business doesn't notice for a while because they're focused on trying to optimize past the point of marginally cost effective returns. This is where the worst bullshit pops up and is the most salient, but it's all downstream of Goodharting.

↑ comment by Charlie Sanders (charlie-sanders) · 2023-01-11T17:13:39.928Z · LW(p) · GW(p)

Agreed. Facilitation-focused jobs (like the ones derided in this post) might look like bullshit to an outsider, but in my experience they are absolutely critical to effectively achieving goals in a large organization.

↑ comment by clone of saturn · 2023-01-11T07:28:50.185Z · LW(p) · GW(p)

Twitter recently fired a majority of its workforce (I've seen estimates from 50% to 90%) and seems to be chugging along just fine. This strongly implies that at least that many jobs were bullshit, but it's unlikely that the new management was able to perfectly identify all bullshitters, so it's only a lower bound. Sometimes contributions can be illegible, but there are also extremely strong incentives to obfuscate.

Replies from: Taran

↑ comment by Taran · 2023-01-11T08:48:03.426Z · LW(p) · GW(p)

If you fire your sales staff your company will chug along just fine, but won't take in new clients and will eventually decline through attrition of existing accounts.

If you fire your product developers your company will chug along just fine, but you won't be able to react to customer requests or competitors.

If you fire your legal department your company will chug along just fine, but you'll do illegal things and lose money in lawsuits.

If you fire your researchers your company will chug along just fine, but you won't be able to exploit any more research products.

If you fire the people who do safety compliance enforcement your company will chug along just fine, but you'll lose more money to workplace injuries and deaths (this one doesn't apply to Twitter but is common in warehouses).

If you outsource a part of your business instead of insourcing (like running a website on the cloud instead of owning your own data centers, or doing customer service through a call center instead of your own reps) then the company will chug along just fine, and maybe not be disadvantaged in any way, but that doesn't mean the jobs you replaced were bullshit.

In general there are lots of roles at every company that are +EV, but aren't on the public-facing critical path. This is especially true for ad-based companies like Twitter and Facebook, because most of the customer-facing features aren't publicly visible (remember: if you are not paying, you're not the customer).

Replies from: clone of saturn

↑ comment by clone of saturn · 2023-01-11T23:37:45.844Z · LW(p) · GW(p)

These statements seem awfully close to being unfalsifiable. The amount of research and development coming from twitter in the 5 years before the acquisition was already pretty much negligible, so there's no difference there. How long do we need to wait for lawsuits or loss of clients to cause observable consequences?

Replies from: Taran

↑ comment by Taran · 2023-01-12T17:39:06.020Z · LW(p) · GW(p)

> The amount of research and development coming from twitter in the 5 years before the acquisition was already pretty much negligible

That isn't true, but I'm making a point that's broader than just Twitter, here. If you're a multi-billion dollar company, and you're paying a team 5 million a year to create 10 million a year in value, then you shouldn't fire them. Then again, if you do fire them, probably no one outside your company will be able to tell that you made a mistake: you're only out 5 million dollars on net, and you have billions more where that came from. If you're an outside observer trying to guess whether it was smart to fire that team or not, then you're stuck: you don't know how much they cost or how much value they produced.

> How long do we need to wait for lawsuits or loss of clients to cause observable consequences?

In Twitter's case the lawsuits have already started, and so has the loss of clients. But sometimes bad decisions take a long time to make themselves felt; in a case close to my heart, Digital Equipment Corporation made some bad choices in the mid to late 80s without paying any visible price until 1991 or so. Depending on how you count, that's a lead time of 3 to 5 years. I appreciate that that's annoying if you want to have a hot take on Musk Twitter today, but sometimes life is like that. The worlds where the Twitter firings were smart and the worlds where the Twitter firings were dumb look pretty much the same from our perspective, so we don't get to update much. If your prior was that half or more of Twitter jobs were bullshit then by all means stay with that, but updating to that from somewhere else on the evidence we have just isn't valid.

↑ comment by romeostevensit · 2023-01-11T05:39:31.452Z · LW(p) · GW(p)

the actual bullshit jobs are political. They exist to please someone or run useful cover in various liability laundering and faction wars.

↑ comment by Ben (ben-lang) · 2023-04-11T13:51:25.306Z · LW(p) · GW(p)

My experience is that bullshit jobs certainly do exist. Note that it is not necessary for the job to actually be easy to be kind of pointless.

One example I think is quite clean. The EU had (or has) a system where computer games that "promoted European values" developed in the EU were able to get certain (relatively minor) tax breaks. In practice this meant that the companies would hire (intelligent, well qualified, well paid) people to write them 300+ page reports delving into the philosophy of how this particular first person shooter was really promoting whatever the hell "European values" were supposed to be, while other people compiled very complicated data on how the person-hours invested in the game were geographically distributed between the EU and not (to get the tax break). Meanwhile, on the other side of this divide were another cohort of hard-working intelligent and qualified people who worked for the governments of the EU and had to read all these documents to make decisions about whether the tax break would be applied. I have not done the calculation, but I have a sense that the total cost of the report-writing and the report-reading going on could reasonably compete with the size of the tax break itself. The only "thing" created by all those person-hours was a slight increase in the precision with which the government applies a tax break. Is that precision worth it? What else could have been created with all those valuable person-hours instead?

comment by Dagon · 2023-01-10T17:43:29.487Z · LW(p) · GW(p)

This may be the most important question about the path of near-future societal change.

The main problem in analyzing, predicting, or impacting this future is that there are very few pure-bullshit or pure-value jobs or tasks. It's ALWAYS a mix, and the borders between components of a job are nonlinear and fuzzy. And not in a way that a good classifier would help - it's based on REALLY complicated multi-agent equilibria, with reinforcements from a lot of directions.

Your bullshit job description is excellent

> You send emails and make calls and take meetings and network to support inter-managerial struggles and fulfill paperwork requirements and perform class signaling to make clients and partners feel appreciated.

The key there is "make clients and partners feel appreciated". That portion is a race. If it fails, some other company gets the business (and the jobs). I argue that there are significant relative measures (races) in EVERY aspect of human interaction, and that this is embedded enough in human nature that it's unlikely to be eliminated.

[edit, after a bit more thought]

The follow-up question is about when AI is trustworthy (and trusted; cf. lack of corporate internal prediction markets) enough to dissolve some of the races, making the jobs pure-bullshit, and thus eliminating them. That bullshit job stops being funded if the clients and partners make their spending decisions based on AI predictions of performance, not on human trust in employees.

comment by [deleted] · 2023-01-10T19:58:30.724Z · LW(p) · GW(p)

The flaw here is in capitalism: if a company has too many bullshit jobs, its costs are higher. Even a company that owns a monopoly has a limit to how many bullshit jobs it can sustain.

For example if Google were to stop updating their core software or selling ads their revenue would decline. They can't have everyone working on passion projects and moonshots. They have a finite number of dollars they can invest long term, probably equal to their "monopoly rent".

Hypothetically if they adopt an AI system capable enough to do almost all the real work two things happen:

- The number of bullshit jobs they can support increases a little

- It makes it possible for competitors to actually catch up. Google's tech lead is partly the collective effort of thousands of people. If GPT-4 can just churn out similar scale tooling with almost no effort then someone else could just ask GPT-4 to rewrite the Linux kernel in Rust, then write an HMI layer with some ultra high level representation that is auto translated to code.

Boom, a 10 person startup has something better than Android.

So Google has to make Android better to stay ahead and so on. Or they fail like IBM did - retaining enough customers to stay afloat but no longer growing.

Replies from: mruwnik

↑ comment by mruwnik · 2023-01-10T21:52:03.977Z · LW(p) · GW(p)

In the long run, yes. And that tends to be the end stage of behemoths. But in the mean time the juggernaut carries on, running on inertia, crushing everything in front of it.

If a 10 person startup has something better than android, you first offer them a couple of millions, and if they don't accept it, use your power to crush them.

Replies from: None

↑ comment by [deleted] · 2023-01-10T22:50:23.613Z · LW(p) · GW(p)

Maybe. I suppose many successful startups came along after better tools such as Python/JavaScript/cloud hosting made it dramatically cheaper to create a product that would previously have taken a behemoth to build. See Dropbox as an example.

So GPT-4 is like another order of magnitude more productive a tool, similar to prior improvements.

comment by clone of saturn · 2023-01-11T06:37:44.468Z · LW(p) · GW(p)

> Suppose the IRS requires 100 pages of paperwork per employee. This used to take 10 hours. Now with GPT-4, as a thought experiment, let’s say it takes 1 hour.
>
> The long run result might be 500 pages of more complicated paperwork that takes 10 hours even with GPT-4, while accomplishing nothing. That still will take time. It is not so easy or fast to come up with 400 more pages. I’d assume that would take at least a decade.

This seems to neglect the possibility that GPT-4 could be used, not just to accomplish bullshit tasks, but also to invent new bullshit tasks much faster than humans could.

comment by Michael Cheers (michael-cheers) · 2023-01-24T08:46:31.176Z · LW(p) · GW(p)

> Suppose the IRS requires 100 pages of paperwork per employee. This used to take 10 hours. Now with GPT-4, as a thought experiment, let’s say it takes 1 hour.
>
> The long run result might be 500 pages of more complicated paperwork that takes 10 hours even with GPT-4, while accomplishing nothing. That still will take time. It is not so easy or fast to come up with 400 more pages. I’d assume that would take at least a decade.

Would human effort really go up in this scenario? Why would the humans be needed to help with the paperwork? It would just require more effort by AI to complete.