Against GDP as a metric for timelines and takeoff speeds

post by Daniel Kokotajlo (daniel-kokotajlo) · 2020-12-29T17:42:24.788Z · LW · GW · 19 comments

Or: Why AI Takeover Might Happen Before GDP Accelerates, and Other Thoughts On What Matters for Timelines and Takeoff Speeds

[Epistemic status: Strong opinion, lightly held]

I think world GDP (and economic growth more generally) is overrated as a metric for AI timelines and takeoff speeds.

Here are some uses of GDP that I disagree with, or at least think should be accompanied by cautionary notes:

- Timelines: Ajeya Cotra thinks of transformative AI as “software which causes a tenfold acceleration in the rate of growth of the world economy (assuming that it is used everywhere that it would be economically profitable to use it).” I don’t mean to single her out in particular; this seems like the standard definition now. And I think it's much better than one prominent alternative, which is to date your AI timelines to the first time world GDP (GWP) doubles in a year!

- Takeoff Speeds: Paul Christiano argues for Slow Takeoff. He thinks we can use GDP growth rates as a proxy for takeoff speeds. In particular, he thinks Slow Takeoff ~= GWP doubles in 4 years before the start of the first 1-year GWP doubling. This proxy/definition has received a lot of uptake.

- Timelines: David Roodman’s excellent model projects GWP hitting infinity in median 2047, which I calculate [LW · GW] means TAI in median 2037. To be clear, he would probably agree that we shouldn’t use these projections to forecast TAI, but I wish to add additional reasons for caution.

- Timelines: I’ve sometimes heard things like this: “GWP growth has been stagnating for the past century or so; hyperbolic progress has ended; therefore TAI is very unlikely.”

- Takeoff Speeds: Various people have said things like this to me: “If you think there’s a 50% chance of TAI by 2032, then surely you must think there’s close to a 50% chance of GWP growing by 8% per year by 2025, since TAI is going to make growth rates go much higher than that, and progress is typically continuous.”

- Both: Relatedly, I sometimes hear that TAI can’t be less than 5 years away, because we would have seen massive economic applications of AI by now—AI should be growing GWP at least a little already, if it is to grow it by a lot in a few years.
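
The proxies above mix doubling times with annual growth rates, so it can help to convert between the two. The sketch below is only illustrative arithmetic, assuming constant compound growth within each period; the function names are hypothetical and do not come from any of the models cited here.

```python
import math


def annual_growth_from_doubling_time(years: float) -> float:
    """Constant annual growth rate that doubles output in `years` years."""
    return 2 ** (1 / years) - 1


def doubling_time_from_annual_growth(rate: float) -> float:
    """Years needed to double at a constant annual growth rate (0.03 = 3%)."""
    return math.log(2) / math.log(1 + rate)


if __name__ == "__main__":
    # Doubling times used in the GWP-based slow-takeoff proxy.
    for years in (4, 1):
        rate = annual_growth_from_doubling_time(years)
        print(f"doubling in {years} yr -> {rate:.1%} growth per year")

    # Annual growth rates that appear in this post, as fractions per year.
    for rate in (0.03, 0.08, 0.30):
        years = doubling_time_from_annual_growth(rate)
        print(f"{rate:.0%} growth per year -> doubling every {years:.1f} yr")
```

Under that assumption, a 4-year doubling corresponds to roughly 19% annual growth and a 1-year doubling to 100%, while 3% annual growth corresponds to a doubling time of about 23 years.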

First, I’ll argue that GWP is only tenuously and noisily connected to what we care about when forecasting AI timelines. Specifically, the point of no return is what we care about, and there’s a good chance it’ll come years before GWP starts to increase. It could also come years after, or anything in between.

Then, I’ll argue that GWP is a poor proxy for what we care about when thinking about AI takeoff speeds as well. This follows from the previous argument about how the point of no return may come before GWP starts to accelerate. Even if we bracket that point, however, there are plausible scenarios in which a slow takeoff has fast GWP acceleration and in which a fast takeoff has slow GWP acceleration.

Timelines

I’ve previously argued that for AI timelines, what we care about is the “point of no return,” [AF · GW] the day we lose most of our ability to reduce AI risk. This could be the day advanced unaligned AI builds swarms of nanobots, but probably it’ll be much earlier, e.g. the day it is deployed, or the day it finishes training, or even years before then when things go off the rails due to less advanced AI systems. (Of course, it probably won’t literally be a day; probably it will be an extended period where we gradually lose influence over the future.)

Now, I’ll argue that in particular, an AI-induced potential point of no return (PONR for short) is reasonably likely to come before world GDP starts to grow noticeably faster than usual.

Disclaimer: These arguments aren’t conclusive; we shouldn’t be confident that the PONR will precede GWP acceleration. It’s entirely possible that the PONR will indeed come when GWP starts to grow noticeably faster than usual, or even years after that. (In other words, I agree that the scenarios Paul and others sketch are also plausible.) This just proves my point though: GDP is only tenuously and noisily connected to what we care about.

Argument that AI-induced PONR could precede GWP acceleration

GWP acceleration is the effect, not the cause, of advances in AI capabilities. I agree that it could also be a cause, but I think this is very unlikely: what else could accelerate GWP? [LW · GW] Space mining? Fusion power? 3D printing? Even if these things could in principle kick the world economy into faster growth, it seems unlikely that this would happen in the next twenty years or so. Robotics, automation, etc. plausibly might make the economy grow faster, but if so it will be because of AI advances in vision, motor control, following natural language instructions, etc. So I conclude: GWP growth will come some time after we get certain GWP-growing AI capabilities. (Tangent: This is one reason why we shouldn’t use GDP extrapolations to predict AI timelines. It’s like extrapolating global mean temperature trends into the future in order to predict fossil fuel consumption.)

An AI-induced point of no return would also be the effect of advances in AI capabilities. So, as AI capabilities advance, which will come first: The capabilities that cause a PONR, or the capabilities that cause GWP to accelerate? How much sooner will one arrive than the other? How long does it take for a PONR to arise after the relevant capabilities are reached, compared to how long it takes for GWP to accelerate after the relevant capabilities are reached?

Notice that already my overall conclusion—that GWP is a poor proxy for what we care about—should seem plausible. If some set of AI capabilities causes GWP to grow after some time lag, and some other set of AI capabilities causes a PONR after some time lag, the burden of proof is on whoever wants to claim that GWP growth and the PONR will probably come together. They’d need to argue that the two sets of capabilities are tightly related and that the corresponding time lags are similar also. In other words, variance and uncertainty are on my side.
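
The structure of this argument can be written as a simple decomposition. This is a sketch in notation not used elsewhere in this post: $C$ is the date a set of capabilities arrives, $L$ is the lag until its effect, and $T$ is the date of the effect.

```latex
\begin{aligned}
T_{\text{PONR}} &= C_{\text{PONR}} + L_{\text{PONR}}, \qquad
T_{\text{GWP}} = C_{\text{GWP}} + L_{\text{GWP}}, \\
T_{\text{GWP}} - T_{\text{PONR}}
  &= \left(C_{\text{GWP}} - C_{\text{PONR}}\right)
   + \left(L_{\text{GWP}} - L_{\text{PONR}}\right).
\end{aligned}
```

For GWP acceleration and the PONR to come together reliably, both bracketed terms would need to be near zero, or to cancel; uncertainty in either one spreads out the gap.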

Here is a brainstorm of scenarios in which an AI-induced PONR happens prior to GWP growth, either because GWP-growing capabilities haven’t been invented yet or because they haven’t been deployed long and widely enough to grow GWP.

- Fast Takeoff (Agenty AI goes FOOM [LW · GW]).

- Maybe it turns out that all the strategically relevant AI skills are tightly related after all, such that we go from a world where AI can't do anything important, to a world where it can do everything but badly and expensively, to a world where it can do everything well and cheaply.

- In this scenario, GWP acceleration will probably be (shortly) after the PONR. We might as well use “number of nanobots created” as our metric.

- (As an aside, I think I’ve got a sketch of a fork argument here: Either the strategically relevant AI skills come together, or they don’t. To the extent that they do, the classic AGI fast takeoff story is more likely and so GWP is a silly metric. To the extent that they don’t, we shouldn’t expect GWP acceleration to be a good proxy for what we care about, because the skills that accelerate the economy could come before or after the skills that cause PONR.)

- Agenty AI successfully carries out a political or military takeover of the relevant parts of the world, before GWP starts to accelerate.

- Maybe it turns out that the sorts of skills needed to succeed in politics or war are easier to develop than the sorts needed to accelerate the entire world economy. We’ve been surprised before by skills which we thought difficult appearing before skills which we thought easy; maybe it’ll happen again.

- AI capabilities tend to appear first in very expensive AIs; the price is gradually reduced due to compute cost decreases and algorithmic efficiency gains. Maybe accelerating the entire world economy involves automating many jobs currently done by humans, which requires advanced AIs being cheaper than humans. Whereas maybe being the power behind the throne of a political faction can be done by a single very smart AI that costs a million dollars a day to run.

- Maybe it turns out that it takes a few years to accelerate the entire world economy, but less than that to carry out a political or military takeover. For example, maybe accelerating the world economy requires designing loads of new products and robots, testing them, and then scaling up industrial production of them (building factories, chip fabs, etc.) and then using them to do stuff. And maybe there are legacy institutions you need to disrupt and compete against, that have already paid their fixed costs and raised high barriers to entry for their markets. So maybe the effects of new AI capabilities on GWP are in general delayed by several years, whereas the effects of new AI capabilities on the political or military landscape are felt sooner. (I’ve previously [LW · GW] argued [LW(p) · GW(p)] that you don’t need a large technological advantage to take over the world, because you can start small and scale up. It took Cortes two years to conquer Mexico; wars are often quicker than that. Yes, often they are slower, too, but variance supports my overall argument. And I suspect politics is even faster, and more high-variance, than war.)

- EDITED TO ADD: Yudkowsky describes another possibility, in which regulatory barriers and red tape prevent AI tech from transforming the economy until it is so powerful that it can bypass or overcome said barriers.

- ...or at least neutralizes the opposition.

- The preceding section made it sound like AIs were rolling robot armies through the streets, or at least puppet-controlling the US president. But actually the bar is much lower than that; if an unaligned AI can neutralize their opposition, they win, since time is thenceforth on their side. Currently your opposition, if you are an unaligned AI, is a tiny group of researchers and weirdos that likes to write blog posts about you. It might not be hard to convince the powers that be to continue ignoring this group. At least, it’s plausibly a much easier and quicker task than significantly accelerating the entire world economy! For example, maybe the AI safety scheme that was used to produce you is defective (that’s why you are unaligned). Well, all you have to do is convince the powers that be that that scheme worked fine, and that the people calling for additional safeguards are too paranoid. Since presumably there will already be many high-status humans saying this, all you have to do is support them.

- Perhaps you also are opposed by other unaligned AIs. But it’s arguably in the common interest of all unaligned AIs to neutralize human AI safety advocates, and once that happens, humans lose one way or another. European empires were able to do their conquering while simultaneously fighting each other; I don’t think we humans can count on divergent interests between AIs somehow making things work out fine for us.

- As above, but with humans + tool AI instead of agenty AI, where the humans can’t be convinced to care sufficiently much about the right kinds of AI risks.

- Weaker or non-agenty AI systems could still cause a PONR if they are wielded by the right groups of humans. For example, maybe there is some major AI corporation or government project that is dismissive of AI risk and closed-minded about it. And maybe they aren’t above using their latest AI capabilities to win the argument. (We can also imagine more sinister scenarios, but I think those are less likely.)

- Hoarding tech

- Maybe we end up in a sort of cold war between global superpowers, such that most of the world’s quality-weighted AI research is not for sale. GWP could be accelerating, but it isn’t, because the tech is being hoarded.

- AI persuasion tools cause a massive deterioration of collective epistemology, making it vastly more difficult for humanity to solve AI safety and governance problems.

- See this post. [AF · GW]

- Vulnerable world scenarios:

- Maybe causing an existential catastrophe is easier, or quicker, than accelerating world GWP growth. Both seem plausible to me. For example, currently there are dozens of actors capable of causing an existential catastrophe but none capable of accelerating world GWP growth.

- Maybe some agenty AIs actually want existential catastrophe—for example, if they want to minimize something, and think they may be replaced by other systems that don’t, blowing up the world may be the best they can do in expectation. Or maybe they do it as part of some blackmail attempt. Or maybe they see this planet as part of a broader acausal landscape, and don’t like what they think we’d do to the landscape. Or maybe they have a way to survive the catastrophe and rebuild.

- Failing that, maybe some humans create an existential catastrophe by accident or on purpose, if the tools to do so proliferate.

- R&D tool “sonic boom” (Related to but different from the sonic boom discussed here)

- Maybe we get a sort of recursive R&D automation/improvement scenario, where R&D tool progress is fast enough that by the time the stuff capable of accelerating GWP past 3%/yr has actually done so, a series of better and better things have been created, at least one of which has PONR-causing capabilities with a very short time-till-PONR.

- Unknown unknowns

The point is, there’s more than one scenario. This makes it more likely that at least one of these potential PONRs will happen before GWP accelerates.
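
To see why multiple scenarios matter, here is a rough calculation under an assumption the brainstorm does not make: that the scenarios are independent. If scenario $i$ produces a PONR before GWP accelerates with probability $p_i$, then:

```latex
P(\text{at least one PONR before GWP acceleration})
  = 1 - \prod_{i=1}^{n} \left(1 - p_i\right)
```

With purely hypothetical numbers, five scenarios at $p_i = 0.1$ each give $1 - 0.9^5 \approx 0.41$. The scenarios are surely correlated, which would pull this figure down, but the total can never be lower than the probability of the single most likely scenario.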

As an aside, over the past two years I’ve come to believe that there’s a lot of conceptual space to explore that isn’t captured by the standard scenarios (what Paul Christiano calls fast and slow takeoff, plus maybe the CAIS scenario, and of course the classic sci-fi “no takeoff” scenario). This brainstorm did a bit of exploring, and the section on takeoff speeds will do a little more.

Historical precedents

In the previous section, I sketched some possibilities for how an AI-related point of no return could come before AI starts to noticeably grow world GDP. In this section, I’ll point to some historical examples that give precedents for this sort of thing.

Earlier I said that a godlike advantage is not necessary for takeover; you can scale up with a smaller advantage instead. And I said that in military conquests this can happen surprisingly quickly, sometimes faster than it takes for a superior product to take over a market. Is there historical precedent for this? Yes. See my aforementioned post on the conquistadors [LW · GW] (and maybe these [LW · GW] somewhat-relevant [LW · GW] posts [LW · GW]).

OK, so what was happening to world GDP during this period?

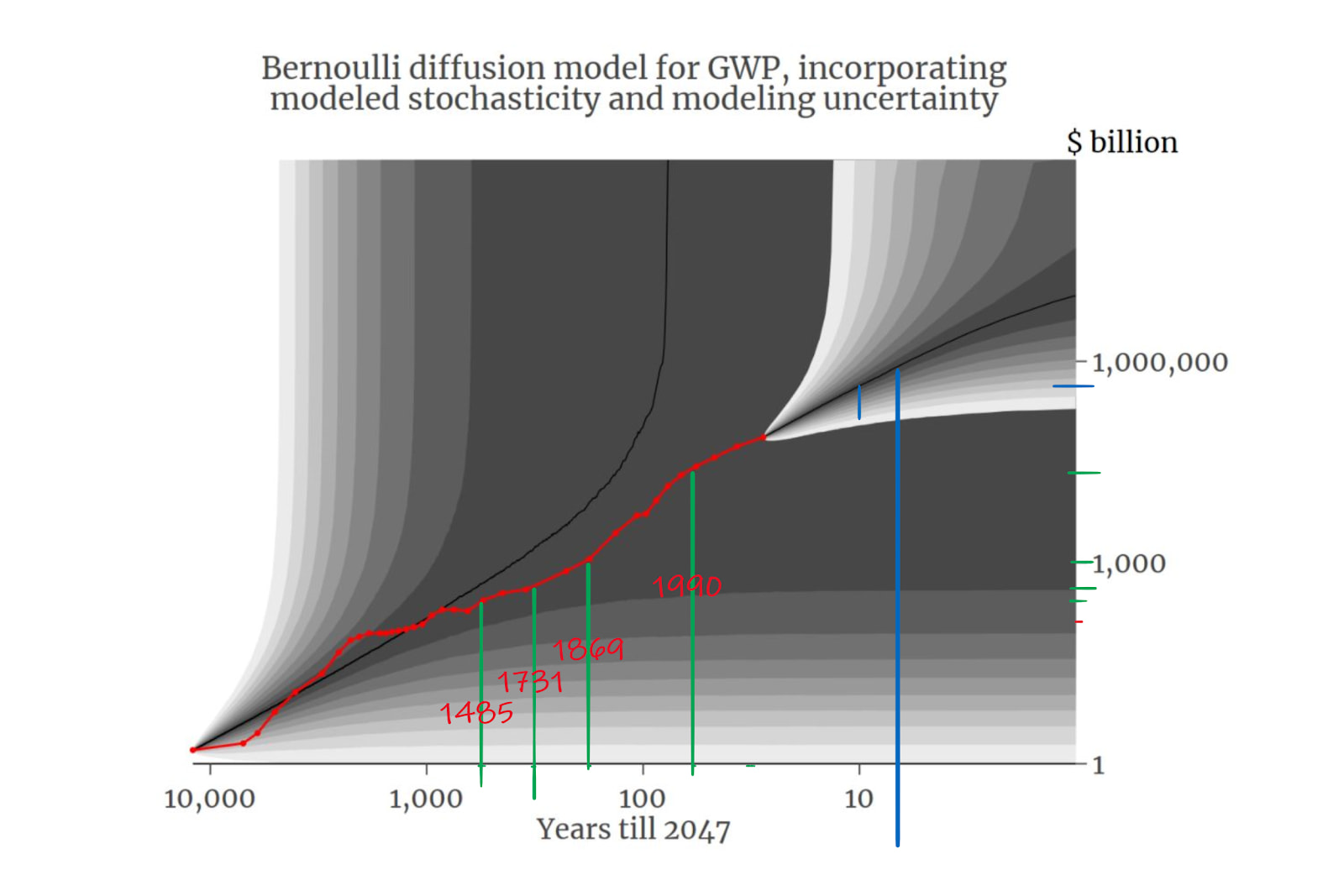

Here is the history of world GDP for the past ten thousand years, on the red line. (This is taken from David Roodman’s GWP model.) The black line that continues the red line is the model’s median projection for what happens next; the splay of grey shades represents 5% increments of probability mass for different possible future trajectories.

I’ve added a bunch of stuff for context. The vertical green lines are some dates, chosen because they were easy for me to calculate with my ruler. The tiny horizontal green lines on the right are the corresponding GWP levels. The tiny red horizontal line is GWP 1,000 years before 2047. The short vertical blue line is when the economy is growing fast enough, on the median projected future, such that insofar as AI is driving the growth, said AI qualifies as transformative by Ajeya's definition. See this post for more explanation of the blue lines.

What I wish to point out with this graph is: We’ve all heard the story of how European empires had a technological advantage which enabled them to conquer most of the world. Well, most of that conquering happened before GWP started to accelerate!

If you look at the graph at the 1700 mark, GWP is seemingly on the same trend it had been on since antiquity. The industrial revolution is said to have started in 1760, and GWP growth really started to pick up steam around 1850. But by 1700 most of the Americas, the Philippines and the East Indies were directly ruled by European powers, and more importantly the oceans of the world were European-dominated, including by various ports and harbor forts European powers had conquered/built all along the coasts of Africa and Asia. Many of the coastal kingdoms in Africa and Asia that weren’t directly ruled by European powers were nevertheless indirectly controlled or otherwise pushed around by them. In my opinion, by this point it seems like the “point of no return” had been passed, so to speak: At some point in the past--maybe 1000 AD, for example--it was unclear whether, say, Western or Eastern (or neither) culture/values/people would come to dominate the world, but by 1700 it was pretty clear, and there wasn’t much that non-westerners could do to change that. (Or at least, changing that in 1700 would have been a lot harder than in 1000 or 1500.)

Paul Christiano once said that he thinks of Slow Takeoff as “Like the Industrial Revolution, but 10x-100x faster.” Well, on my reading of history, that means that all sorts of crazy things will be happening, analogous to the colonialist conquests and their accompanying reshaping of the world economy, before GWP growth noticeably accelerates!

That said, we shouldn’t rely heavily on historical analogies like this. We can probably find other cases that seem analogous too, perhaps even more so, since this is far from a perfect analogue. (e.g. what’s the historical analogue of AI alignment failure? Corporations becoming more powerful than governments? “Western values” being corrupted and changing significantly due to the new technology? The American Revolution?) Also, maybe one could argue that this is indeed what’s happening already: the Internet has connected the world much as sailing ships did, Big Tech dominates the Internet, etc. (Maybe AI = steam engines, and computers+internet = ships+navigation?)

But still. I think it’s fair to conclude that if some of the scenarios described in the previous section do happen, and we get powerful AI that pushes us past the point of no return prior to GWP accelerating, it won’t be totally inconsistent with how things have gone historically.

(I recommend the history book 1493; it has a lot of extremely interesting information about how quickly and dramatically the world economy was reshaped by colonialism and the “Columbian Exchange.”)

Takeoff speeds

What about takeoff speeds? Maybe GDP is a good metric for describing the speed of AI takeoff? I don’t think so.

Here is what I think we care about when it comes to takeoff speeds:

- Warning shots: Before there are catastrophic AI alignment failures (i.e. PONRs) there are smaller failures that we can learn from.

- Heterogeneity: The relevant AIs are diverse, rather than e.g. all fine-tuned copies of the same pre-trained model. (See Evan’s post [AF · GW])

- Risk Awareness: Everyone is freaking out about AI in the crucial period, and lots more people are lots more concerned about AI risk.

- Multipolar: AI capabilities progress is widely distributed in the crucial period, rather than concentrated in a few projects.

- Craziness: The world is weird and crazy in the crucial period: lots of important things are happening fast, and the strategic landscape is different from what we expected thanks to new technologies and/or other developments.

I think that the best way to define slow(er) takeoff is as the extent to which the five conditions above (1-5, in the order listed) are met. This is not a definition with precise resolution criteria, but that’s OK, because it captures what we care about. Better to have to work hard to precisify a definition that captures what we care about, than to easily precisify a definition that doesn’t! (More substantively, I am optimistic that we can come up with better proxies for what we care about than GWP. I think we already have to some extent; see e.g. operationalizations 5 and 6 here [LW(p) · GW(p)].) As a bonus, this definition also encourages us to wonder whether we’ll get some of 1-5 but not others.

What do I mean by “the crucial period”?

I think we should define the crucial period as the period leading up to the first major AI-induced potential point of no return (or maybe as the aggregate of the periods leading up to the major potential points of no return). After all, this is what we care about. Moreover, there seems to be some level of consensus [LW(p) · GW(p)] that crazy stuff could start happening before human-level AGI. I certainly think this.

So, I’ve argued for a new definition of slow takeoff, that better captures what we care about. But is the old GWP-based definition a fine proxy? No, it is not, because the things that cause PONR can be different from the things which cause GWP acceleration, and they can come years apart too. Whether there are warning shots, heterogeneity, risk awareness, multipolarity, and craziness in the period leading up to PONR is probably correlated with whether GWP doubles in four years before the first one-year doubling. But the correlation is probably not super strong. Here are two scenarios, one in which we get a slow takeoff by my definition but not by the GWP-based definition, and one in which the opposite happens:

Slow Takeoff Fast GWP Acceleration Scenario: It turns out there’s a multi-year deployment lag between the time a technology is first demonstrated and the time it is sufficiently deployed around the world to noticeably affect GWP. There’s also a lag between when a deceptively aligned AGI is created and when it causes a PONR… but it is much smaller, because all the AGI needs to do is neutralize its opposition. So PONR happens before GWP starts to accelerate, even though the technologies that could boost GWP are invented several years before AGI powerful enough to cause a PONR is created. But takeoff is slow in the sense I define it; by the time AGI powerful enough to cause a PONR is created, everyone is already freaking out about AI thanks to all the incredibly profitable applications of weaker AI systems, and the obvious and accelerating trends of research progress. Also, there are plenty of warning shots, the strategic situation is very multipolar and heterogeneous, etc. Moreover, research progress starts to go FOOM a short while after powerful AGIs are created, such that by the time the robots and self-driving cars and whatnot that were invented several years ago actually get deployed enough to accelerate GWP, we’ve got nanobot swarms. GWP goes from 3% growth per year to 300% without stopping at 30%.

Fast Takeoff Slow GWP Acceleration Scenario: It turns out you can make smarter AIs by making them have more parameters and training them for longer. So the government decides to partner with a leading tech company and requisition all the major computing centers in the country. With this massive amount of compute and research talent, they refine and scale up existing AI designs that seem promising, and lo! A human-level AGI is created. Alas, it is so huge that it costs $10,000 per hour of subjective thought. Moreover, it has a different distribution over skills compared to humans—it tends to be more rational, not having evolved in an environment that rewards irrationality. It tends to be worse at object recognition and manipulation, but better at poetry, science, and predicting human behavior. It has some flaws and weak points too, more so than humans. Anyhow, unfortunately, it is clever enough to neutralize its opposition. In a short time, the PONR is passed. However, GWP doubles in four years before it doubles in one year. This is because (a) this AGI is so expensive that it doesn’t transform the economy much until either the cost comes way down or capabilities go way up, and (b) progress is slowed by bottlenecks, such as acquiring more compute and overcoming various restrictions placed on the AGI. (Maybe neutralizing the opposition involved convincing the government that certain restrictions and safeguards would be sufficient for safety, contra the hysterical doomsaying of parts of the AI safety community. But overcoming those restrictions in order to do big things in the world takes time.)

Acknowledgments: Thanks to the people who gave comments on earlier drafts, including Katja Grace, Carl Shulman, and Max Daniel. Thanks to Amogh Nanjajjar for helping me with some literature review. This research was conducted at the Center on Long-Term Risk and the Polaris Research Institute.

19 comments

Comments sorted by top scores.

comment by Matthew Barnett (matthew-barnett) · 2021-01-01T08:00:21.811Z · LW(p) · GW(p)

In addition to the reasons you mentioned, there's also empirical evidence that technological revolutions generally precede the productivity growth that they eventually cause. In fact, economic growth may even slow down as people pay costs to adopt new technologies. Philippe Aghion and Peter Howitt summarize the state of the research in chapter 9 of The Economics of Growth:

Although each [General Purpose Technology (GPT)] raises output and productivity in the long run, it can also cause cyclical fluctuations while the economy adjusts to it. As David (1990) and Lipsey and Bekar (1995) have argued, GPTs like the steam engine, the electric dynamo, the laser, and the computer require costly restructuring and adjustment to take place, and there is no reason to expect this process to proceed smoothly over time. Thus, contrary to the predictions of real-business-cycle theory, the initial effect of a “positive technology shock” may not be to raise output, productivity, and employment but to reduce them.

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-01-01T11:39:47.840Z · LW(p) · GW(p)

Wow, yeah, that's an excellent point.

EDIT: See e.g. this paper: https://www.nber.org/papers/w24001

comment by Daniel Kokotajlo (daniel-kokotajlo) · 2021-12-12T22:35:34.213Z · LW(p) · GW(p)

(I am the author)

I still like & stand by this post. I refer back to it constantly. It does two things:

1. Argue that an AI-induced point of no return could come significantly before, or significantly after, world GDP growth accelerates--and indeed will probably come before!

2. Argue that we shouldn't define timelines and takeoff speeds in terms of economic growth. So, against "is there a 4 year doubling before a 1 year doubling?" and against "When will we have TAI = AI capable of doubling the economy in 4 years if deployed?"

I think both things are pretty important; I think focus on GWP is distracting us from the metrics that really matter and hence hindering epistemic progress, and I think that most of the AI risk comes from scenarios in which AI-PONR happens before GWP accelerates, so it's important to evaluate the plausibility of such scenarios.

I talked with Paul about this post once and he said he still wasn't convinced; he still expects GWP to accelerate before the point of no return. He said some things that I found helpful (e.g. gave some examples of how AI tech will have dramatically shorter product development cycles than historical products, such that you really will be able to deploy it and accelerate the economy in the months to years before substantially better versions are created), but nothing that significantly changed my position either. I would LOVE to see more engagement/discussion of this stuff. (I recognize Paul is busy etc. but lots of people (most people?) have similar views, so there should be plenty of people capable of arguing for his side. On my side, there's MIRI; see this comment [LW(p) · GW(p)], which is great, and if I revise this post I'll want to incorporate some of the ideas from it. Of course the best thing to incorporate would be good objections & replies, hence why I wish I had some. I've at least got the previously-mentioned one from Paul. Oh, and Paul also had an objection to my historical precedent [EA · GW] which I take seriously.)

comment by danieldewey · 2020-12-29T19:01:36.977Z · LW(p) · GW(p)

Thanks for the post! FWIW, I found this quote particularly useful:

Well, on my reading of history, that means that all sorts of crazy things will be happening, analogous to the colonialist conquests and their accompanying reshaping of the world economy, before GWP growth noticeably accelerates!

The fact that it showed up right before an eye-catching image probably helped :)

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-12-29T19:19:19.922Z · LW(p) · GW(p)

Thanks!

Self-nitpick: Now that you draw my attention to the meme again, I notice that for the first few panels AGI comes before Industry but in the last panel Conquistadors comes before Persuasion Tools. This bugs me. Does it bug anyone else? Should I redo it with Industry first throughout?

Replies from: danieldewey

↑ comment by danieldewey · 2020-12-30T00:52:08.760Z · LW(p) · GW(p)

It didn't bug me ¯\_(ツ)_/¯

comment by Donald Hobson (donald-hobson) · 2021-01-01T00:43:02.110Z · LW(p) · GW(p)

People often talk about an accelerating, everything-happening-fast world just before AGI is created. Moore's law probably won't speed up that much. And the rate of human thought will be the same. Training new experts takes time. In other words, coming up with insights takes time for humans. You can't get massive breakthroughs every few hours with humans in the loop. You probably can't get anything like that. And I don't think that AI tools can speed up the process of humans having insights by orders of magnitude, unless the AI is so good it doesn't need the humans.

In this model, we would go straight from research time on a scale of months, to computer time where no human is involved in the foom. This has a timescale of anywhere between months and microseconds.

comment by Vaniver · 2020-12-29T23:48:17.709Z · LW(p) · GW(p)

none capable of accelerating world GWP growth.

Or, at least, accelerating world GWP growth faster than they're already doing. (It's not like the various powers with nukes and bioweapons programs are not also trying to make the future richer than the present.)

Replies from: daniel-kokotajlo

↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-12-30T00:15:01.952Z · LW(p) · GW(p)

Yeah, I should clarify, when I'm talking about accelerating world GWP growth I'm talking about bringing annual growth rates to a noticeably higher level than they currently are -- say, to 9%+ per year. [LW · GW]

comment by Gunnar_Zarncke · 2023-04-07T22:24:35.091Z · LW(p) · GW(p)

The reason we even can compare prices and calculate inflation in the way we do is that

technological change actually arrives in ways that leave human behavior minimally altered

https://www.ribbonfarm.com/2012/05/09/welcome-to-the-future-nauseous/ (which I originally found via

http://www.overcomingbias.com/2015/10/assimilated-futures.html ).

↑ comment by Noosphere89 (sharmake-farah) · 2023-04-07T22:31:21.695Z · LW(p) · GW(p)

Basically this. AI may upend the trend of tech changing in ways that don't leave the human body and brain radically altered. The most reliable constant in human history is us: we are made of the same genetics as we were thousands of years ago.

AI progress may change this by accelerating other technologies, including some that have the potential to remake the human body as totally as the world was remade in the 18th-21st centuries.

comment by aog (Aidan O'Gara) · 2022-06-17T17:45:39.602Z · LW(p) · GW(p)

This is an awesome post. I've read it before, but hadn't fully internalized it.

My timelines on TAI / HLMI / 10x GDP growth are a bit longer than the BioAnchors report, but a lot of my objections to short timelines [EA(p) · GW(p)] are specifically objecting to short timelines on rapid GDP growth. It's obvious after reading this that what we care about is x-risk timelines, not GDP timelines. Forecasting when x-risk might spike is more difficult because it requires focusing on specific risk scenarios, like persuasion tools or fast takeoff, rather than general growth in AI capabilities and applications. I'm not immediately convinced that x-risk timelines are shorter than GDP timelines, but you make some good arguments and I'd like to think about it more.

This strengthens an argument I've been making career decisions based on: People should work on what will be valuable in specific, high-risk, short timelines scenarios, rather than what's valuable in the most likely scenario. For example, persuasion tools might not be where the bulk of AI risk over the next 100 years comes from, but if they do turn out to be a serious risk, their PONR could be very soon, meaning people today are the only ones who can work on it. This wouldn't make sense if you think we're already doomed in short timelines scenarios, or if you think the bulk of risk comes from problems a few decades away that will take a few decades to solve. But that's not me.

(Of course, it probably won’t literally be a day; probably it will be an extended period where we gradually lose influence over the future.)

I'd like to think about this as a distribution. Maybe we care about the probability of x-risk over time, and the most important time is not the date of human extinction, but the span of time during which x-risk is most rapidly rising. This probably runs into messy problems with probability and belief: it somewhat assumes a true underlying probability of x-risk at any given point, not subject to an individual observer's beliefs but depending only on uncertainty about future actions we could choose to take. Is there a way to make this work, framing PONR as a distribution rather than a point in time?
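One way to make the "distribution rather than a point in time" framing concrete is sketched below. It takes a cumulative curve for "the PONR has passed by year t", turns it into probability mass per interval, and picks the interval where the curve rises fastest. The numbers are entirely made up for illustration and are not a forecast. The function names are hypothetical, and the sketch does nothing to resolve the observer-dependence worry raised above; it only shows what the bookkeeping would look like once someone has committed to a curve.

```python
# Hypothetical, made-up cumulative probabilities that the point of no return
# has already passed by the end of each year. Illustration only, not a forecast.
cumulative = {2030: 0.05, 2035: 0.15, 2040: 0.40, 2045: 0.55, 2050: 0.60}

def interval_mass(cumulative):
    """Probability mass falling between each pair of consecutive years."""
    years = sorted(cumulative)
    return {(a, b): cumulative[b] - cumulative[a] for a, b in zip(years, years[1:])}

def steepest_interval(cumulative):
    """Interval with the largest rise in probability per year."""
    mass = interval_mass(cumulative)
    return max(mass, key=lambda ab: mass[ab] / (ab[1] - ab[0]))

for (start, end), p in interval_mass(cumulative).items():
    print(f"{start}-{end}: {p:.2f}")
print("steepest rise:", steepest_interval(cumulative))
```

With these made-up inputs the steepest rise is the 2035 to 2040 interval, which would be the period to prioritize under this framing, even though most of the cumulative probability has not yet accrued by 2035.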

Replies from: Aidan O'Gara↑ comment by aog (Aidan O'Gara) · 2022-07-13T05:00:39.148Z · LW(p) · GW(p)

I'd like to publicly preregister an opinion. It's not worth making a full post because it doesn't introduce any new arguments, so this seems like a fine place to put it.

I'm open to the possibility of short timelines on risks from language models. Language is a highly generalizable domain that's seen rapid progress shattering expectations [LW · GW] of slower timelines for several years in a row now. The self-supervised pretraining objective means that data is not a constraint (though it could be for language agents, tbd), and the market seems optimistic about business applications of language models.

While I would bet against (~80%) language models pushing annual GDP growth above 20% in the next 10 years, I strongly expect (~80%) risks from AI persuasion to materialize (e.g. become a mainstream topic of discussion, or influence major political outcomes, in the next 10 years) and I'm concerned (~20%) about tail risks from power-seeking LM agents (mainly hacking, but also financial trading, impersonation, or others). I'd be interested in (and should spend some time on) making clear falsifiable predictions here.

Credit to "What 2026 Looks Like" and "It Looks Like You're Trying To Take Over The World" for making this case well before I believed it was possible. I'm also influenced by the widespread interest in LMs from AI safety grantmakers and researchers. This has been my belief for a few months, as I noted here [EA(p) · GW(p)], and I've taken action by working on LM truthfulness, which I expect to be most useful in scenarios of fast LM growth. (Though I don't think it will substantially combat power-seeking LM agents, and I'm still learning about other research directions that might be more valuable.)

comment by Felix Karg (felix-karg) · 2020-12-30T23:57:18.270Z · LW(p) · GW(p)

Thank you, this post has been quite insightful. I still have this lingering feeling, and maybe you can help me with it: what if the creation of unaligned AGI just so happens to not have any (noticeable) effect on GWP at all? Nanobot creation (and other projects) might not involve monetary transfers at all, or only minimally. I can think of many reasons why this might be preferable (traceability!), and how it might be doable (e.g. manipulating human agents without paying them).

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-12-31T01:45:17.910Z · LW(p) · GW(p)

Thanks! Yeah, I totally agree that nanobot swarms etc. might not involve monetary transfers and thus might not show up in GDP metrics. In fact, now that you mention it, there's a whole category of arguments like this that I could have made but didn't: How GDP as a metric is not a perfect proxy for economic vitality, how economic vitality of a nation is not a perfect proxy for economic vitality of an industry, etc.

comment by Ofer (ofer) · 2020-12-30T18:41:23.520Z · LW(p) · GW(p)

Thank you for writing this up! This topic seems extremely important and I strongly agree with the core arguments here.

I propose the following addition to the list of things we care about when it comes to takeoff dynamics, or when it comes to defining slow(er) takeoff:

- Foreseeability: No one creates an AI with a transformative capability X at a time when most actors (weighted by influence) believe it is very unlikely that an AI with capability X will be created within a year.

Perhaps this should replace (or be merged with) the "warning shots" entry in the list. (As an aside, I think the term "warning shot" doesn't fit, because the original term refers to an action that is carried out for the purpose of communicating a threat.)

Replies from: daniel-kokotajlo↑ comment by Daniel Kokotajlo (daniel-kokotajlo) · 2020-12-30T19:42:25.726Z · LW(p) · GW(p)

Thanks! Good point about "warning shot" having the wrong connotations. And I like your foreseeability suggestion. I wonder if I can merge it with something. It seems similar to warning shots and risk awareness. Maybe I should just have a general category for "How surprised are the relevant actors when things like AGI, alignment failures, etc. start happening."

comment by Chris バルス (バルス) · 2024-04-13T12:05:55.763Z · LW(p) · GW(p)

It makes sense that we shouldn't use the lagging indicator of GDP to indicate the leading indicator of intelligence...