Highlights from Lex Fridman’s interview of Yann LeCun

post by Joel Burget (joel-burget) · 2024-03-13T20:58:13.052Z · LW · GW · 15 comments

Introduction

Yann LeCun is perhaps the most prominent critic of the “LessWrong view” on AI safety, the only one of the three "godfathers of AI" to not acknowledge the risks of advanced AI. So, when he recently appeared on the Lex Fridman podcast, I listened with the intent to better understand his position. LeCun came across as articulate / thoughtful[1]. Though I don't agree with it all, I found a lot worth sharing.

Most of this post consists of quotes from the transcript, where I’ve bolded the most salient points. There are also a few notes from me, plus a short summary at the end.

Limitations of Autoregressive LLMs

Lex Fridman (00:01:52) You've said that autoregressive LLMs are not the way we’re going to make progress towards superhuman intelligence. These are the large language models like GPT-4, like Llama 2 and 3 soon and so on. How do they work and why are they not going to take us all the way?

Yann LeCun (00:02:47) For a number of reasons. The first is that there [are] a number of characteristics of intelligent behavior. For example, the capacity to understand the world, understand the physical world, the ability to remember and retrieve things, persistent memory, the ability to reason, and the ability to plan. Those are four essential characteristics of intelligent systems or entities, humans, animals. LLMs can do none of those or they can only do them in a very primitive way and they don’t really understand the physical world. They don’t really have persistent memory. They can’t really reason and they certainly can’t plan. And so if you expect the system to become intelligent without having the possibility of doing those things, you’re making a mistake. That is not to say that autoregressive LLMs are not useful. They’re certainly useful. That they’re not interesting, that we can’t build a whole ecosystem of applications around them… of course we can. But as a path towards human-level intelligence, they’re missing essential components.

(00:04:08) And then there is another tidbit or fact that I think is very interesting. Those LLMs are trained on enormous amounts of texts, basically, the entirety of all publicly available texts on the internet, right? That’s typically on the order of 10^13 tokens. Each token is typically two bytes, so that’s 2*10^13 bytes as training data. It would take you or me 170,000 years to just read through this at eight hours a day. So it seems like an enormous amount of knowledge that those systems can accumulate, but then you realize it’s really not that much data. If you talk to developmental psychologists and they tell you a four-year-old has been awake for 16,000 hours in his or her life, and the amount of information that has reached the visual cortex of that child in four years is about 10^15 bytes.

(00:05:12) And you can compute this by estimating that the optical nerve can carry about 20 megabytes per second roughly, and so 10 to the 15 bytes for a four-year-old versus two times 10 to the 13 bytes for 170,000 years worth of reading. What that tells you is that through sensory input, we see a lot more information than we do through language, and that despite our intuition, most of what we learn and most of our knowledge is through our observation and interaction with the real world, not through language. Everything that we learn in the first few years of life, and certainly everything that animals learn has nothing to do with language.

Checking some claims:

- An LLM training corpus is on the order of 10^13 tokens. This seems about right: “Llama 2 was trained on 2.4T tokens and PaLM 2 on 3.6T tokens. GPT-4 is thought to have been trained on 4T tokens… Together AI introduced a 1 trillion (1T) token dataset called RedPajama in April 2023. A few days ago, it introduced a 30T token dataset”. That’s roughly 2*10^12 to 3*10^13 tokens.

- 170,000 years to read this. Seems reasonable. Claude gives me an answer of 95,129 years[2] (nonstop, for a 10^13 token corpus), which at eight hours a day would be about 285,000 years, the same order of magnitude.

- Optical nerve carries ~20MB/s. One answer on StackExchange claims 8.75Mb/s (megabits) per eye, which equates to ~1.1MB/s per eye, or ~2MB/s for both eyes.

- 10^15 bytes experienced by a four-year-old: 4 * 365 * 16 * 60 * 60 * 20_000_000 = 1.7 * 10^15, assuming 16 waking hours a day (LeCun’s 16,000 waking hours gives 1.2 * 10^15). Seems about right, though this uses LeCun’s optical nerve figure; divide by 10 if using the other bandwidth claims. Note also that the actual information content is probably OOMs lower.
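The arithmetic in these checks can be reproduced in a few lines. This is a minimal sketch, assuming roughly 0.75 words per token and a reading speed of 250 words per minute (both assumed here, not taken from the interview). Note that 16 waking hours a day for four years is about 23,000 hours, somewhat more than LeCun’s 16,000.

```python
# Back-of-envelope checks of the figures above.
# ASSUMPTIONS (not from the interview): ~0.75 words per token, 250 words/minute.
WORDS_PER_TOKEN = 0.75
WORDS_PER_MINUTE = 250

def years_to_read(tokens: float, hours_per_day: float) -> float:
    """Years needed to read `tokens` tokens at the assumed reading speed."""
    minutes = tokens * WORDS_PER_TOKEN / WORDS_PER_MINUTE
    days = minutes / 60 / hours_per_day
    return days / 365

def optic_bandwidth_bytes(megabits_per_eye: float, eyes: int = 2) -> float:
    """Convert a per-eye rate in megabits/s to total bytes/s (8 bits per byte)."""
    return megabits_per_eye * 1e6 / 8 * eyes

def bytes_seen(years: float, waking_hours_per_day: float, bytes_per_second: float) -> float:
    """Total bytes delivered over `years` of waking time at a constant rate."""
    return years * 365 * waking_hours_per_day * 3600 * bytes_per_second

if __name__ == "__main__":
    print(f"reading 10^13 tokens, 8 h/day: {years_to_read(1e13, 8):,.0f} years")
    print(f"8.75 Mb/s per eye, two eyes: {optic_bandwidth_bytes(8.75) / 1e6:.3f} MB/s")
    print(f"4 years x 16 h/day at 20 MB/s: {bytes_seen(4, 16, 20e6):.2e} bytes")
    print(f"16,000 h at 20 MB/s: {16_000 * 3600 * 20e6:.2e} bytes")
```

With these assumptions the reading time comes out at about 171,000 years, close to LeCun’s 170,000, and the StackExchange figure works out to about 2.2 MB/s for both eyes.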

Grounding / Embodiment

Richness of Interaction with the Real World

Lex Fridman (00:05:57) Is it possible that language alone already has enough wisdom and knowledge in there to be able to, from that language, construct a world model and understanding of the world, an understanding of the physical world that you’re saying LLMs lack?

Yann LeCun (00:06:56) So it’s a big debate among philosophers and also cognitive scientists, like whether intelligence needs to be grounded in reality. I’m clearly in the camp that yes, intelligence cannot appear without some grounding in some reality. It doesn’t need to be physical reality. It could be simulated, but the environment is just much richer than what you can express in language. Language is a very approximate representation [of] percepts and/or mental models. I mean, there’s a lot of tasks that we accomplish where we manipulate a mental model of the situation at hand, and that has nothing to do with language. Everything that’s physical, mechanical, whatever, when we build something, when we accomplish a task, model [the] task of grabbing something, et cetera, we plan action sequences, and we do this by essentially imagining the result of the outcome of a sequence of actions that we might imagine and that requires mental models that don’t have much to do with language, and I would argue most of our knowledge is derived from that interaction with the physical world.

(00:08:13) So a lot of my colleagues who are more interested in things like computer vision are really in that camp that AI needs to be embodied essentially. And then other people coming from the NLP side or maybe some other motivation don’t necessarily agree with that, and philosophers are split as well, and the complexity of the world is hard to imagine. It’s hard to represent all the complexities that we take completely for granted in the real world that we don’t even imagine require intelligence, right?

(00:08:55) This is the old Moravec paradox, from the pioneer of robotics, Hans Moravec, who said, how is it that with computers, it seems to be easy to do high-level complex tasks like playing chess and solving integrals and doing things like that, whereas the thing we take for granted that we do every day, like, I don’t know, learning to drive a car or grabbing an object, we can’t do with computers, and we have LLMs that can pass the bar exam, so they must be smart, but then they can’t learn to drive in 20 hours like any 17-year-old, they can’t learn to clear out the dinner table and fill up the dishwasher like any 10-year-old can learn in one shot. Why is that? What are we missing? What type of learning or reasoning architecture or whatever are we missing that basically prevents us from having level five self-driving cars and domestic robots?

Claim: philosophers are split on grounding. LeCun participated in “Debate: Do Language Models Need Sensory Grounding for Meaning and Understanding?”. Otherwise I’m not so familiar with this debate.

Lex Fridman (00:11:51) So you don’t think there’s something special to you about intuitive physics, about sort of common sense reasoning about the physical space, about physical reality. That to you is a giant leap that LLMs are just not able to do?

Yann LeCun (00:12:02) We’re not going to be able to do this with the type of LLMs that we are working with today, and there’s a number of reasons for this, but the main reason is the way LLMs are trained is that you take a piece of text, you remove some of the words in that text, you mask them, you replace them by blank markers, and you train a gigantic neural net to predict the words that are missing. And if you build this neural net in a particular way so that it can only look at words that are to the left of the one it’s trying to predict, then what you have is a system that basically is trying to predict the next word in a text. So then you can feed it a text, a prompt, and you can ask it to predict the next word. It can never predict the next word exactly.

(00:12:48) So what it’s going to do is produce a probability distribution over all the possible words in a dictionary. In fact, it doesn’t predict words. It predicts tokens that are kind of subword units, and so it’s easy to handle the uncertainty in the prediction there because there is only a finite number of possible words in the dictionary, and you can just compute a distribution over them. Then what the system does is that it picks a word from that distribution. Of course, there’s a higher chance of picking words that have a higher probability within that distribution. So you sample from that distribution to actually produce a word, and then you shift that word into the input, and so that allows the system now to predict the second word, and once you do this, you shift it into the input, et cetera.
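The sampling loop LeCun describes (predict a distribution over the vocabulary, sample a token, shift it into the input, repeat) can be sketched in a few lines. The “model” here is a hypothetical stand-in, a random bigram table over a five-token vocabulary rather than a real LLM; only the loop structure is the point.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution over the vocabulary."""
    m = max(logits)                              # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def generate(next_token_logits, prompt, n_new, rng):
    """Autoregressive generation: predict a distribution over the next token,
    sample from it, append the sample to the input, and repeat."""
    tokens = list(prompt)
    for _ in range(n_new):
        probs = softmax(next_token_logits(tokens))
        next_tok = rng.choices(range(len(probs)), weights=probs, k=1)[0]  # sample, not argmax
        tokens.append(next_tok)                  # shift the new token into the input
    return tokens

# Hypothetical stand-in for a trained LLM: a random bigram table over a
# 5-token vocabulary, so the logits depend only on the last token.
VOCAB = 5
_table_rng = random.Random(0)
BIGRAM = [[_table_rng.gauss(0.0, 1.0) for _ in range(VOCAB)] for _ in range(VOCAB)]

def toy_model(tokens):
    return BIGRAM[tokens[-1]]

if __name__ == "__main__":
    print(generate(toy_model, [0], 10, random.Random(1)))
```

Sampling (rather than always taking the most likely token) is what LeCun means by “you sample from that distribution”; a real model would compute the logits from the whole prefix, not just the last token.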

Language / Video and Bandwidth

Lex Fridman (00:17:44) I think the fundamental question is can you build a really complete world model, not complete, but one that has a deep understanding of the world?

Yann LeCun (00:17:58) Yeah. So can you build this first of all by prediction, and the answer is probably yes. Can you build it by predicting words? And the answer is most probably no, because language is very poor in terms of weak or low bandwidth if you want, there’s just not enough information there. So building world models means observing the world and understanding why the world is evolving the way it is, and then the extra component of a world model is something that can predict how the world is going to evolve as a consequence of an action you might take.

(00:18:45) So a world model really is: here is my idea of the state of the world at time T, here is an action I might take. What is the predicted state of the world at time T+1? Now that state of the world does not need to represent everything about the world, it just needs to represent enough that’s relevant for this planning of the action, but not necessarily all the details. Now, here is the problem. You’re not going to be able to do this with generative models. So take a generative model trained on video; we’ve tried to do this for 10 years. You take a video, show a system a piece of video, and then ask it to predict the remainder of the video, basically predict what’s going to happen.
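To make “state at time T plus an action gives the predicted state at T+1” concrete, here is a toy sketch. The dynamics are hand-written and hypothetical (a 1-D point mass), standing in for a model that would in practice be learned, and the state deliberately keeps only two numbers, echoing LeCun’s point that it need only represent what is relevant for planning.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class State:
    # Abstract state: only what matters for planning (a 1-D position and
    # velocity), not every detail of the world.
    position: float
    velocity: float

def world_model(state: State, action: float, dt: float = 1.0) -> State:
    """Hypothetical world model: predicts s(T+1) from s(T) and an action
    (here an acceleration). A real one would be learned from observation."""
    velocity = state.velocity + action * dt
    position = state.position + velocity * dt
    return State(position, velocity)

def rollout(state: State, actions: list[float]) -> list[State]:
    """Imagine the outcome of a sequence of actions without executing any of them."""
    trajectory = [state]
    for a in actions:
        state = world_model(state, a)
        trajectory.append(state)
    return trajectory
```

For example, `rollout(State(0.0, 0.0), [1.0, 0.0, -1.0])` predicts speeding up, coasting, then braking to rest at position 2.0.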

(00:19:34) Either one frame at a time or a group of frames at a time. But yeah, a large video model if you want. The idea of doing this has been floating around for a long time and at FAIR, some of our colleagues and I have been trying to do this for about 10 years, and you can’t really do the same trick as with LLMs because LLMs, as I said, you can’t predict exactly which word is going to follow a sequence of words, but you can predict the distribution of words. Now, if you go to video, what you would have to do is predict the distribution of all possible frames in a video, and we don’t really know how to do that properly.

(00:20:20) We do not know how to represent distributions over high-dimensional, continuous spaces in ways that are useful. And there lies the main issue, and the reason we can’t do this is because the world is incredibly more complicated and richer in terms of information than text. Text is discrete, video is high-dimensional and continuous. A lot of details in this. So if I take a video of this room and the video is a camera panning around, there is no way I can predict everything that’s going to be in the room as I pan around. The system cannot predict what’s going to be in the room as the camera is panning. Maybe it’s going to predict this is a room where there’s a light and there is a wall and things like that. It can’t predict what the painting of the wall looks like or what the texture of the couch looks like. Certainly not the texture of the carpet. So there’s no way I can predict all those details.

(00:21:19) So one way to possibly handle this, which we’ve been working on for a long time, is to have a model that has what’s called a latent variable. And the latent variable is fed to a neural net, and it’s supposed to represent all the information about the world that you don’t perceive yet, and that you need to augment the system for the prediction to do a good job at predicting pixels, including the fine texture of the carpet and the couch and the painting on the wall.

(00:21:57) That has been a complete failure essentially. And we’ve tried lots of things. We tried just straight neural nets, we tried GANs, we tried VAEs, all kinds of regularized autoencoders. We tried many things. We also tried those kinds of methods to learn good representations of images or video that could then be used as input to, for example, an image classification system. That also has basically failed. All the systems that attempt to predict missing parts of an image or video from a corrupted version of it, basically, so take an image or a video, corrupt it or transform it in some way, and then try to reconstruct the complete video or image from the corrupted version, and then hope that internally, the system will develop good representations of images that you can use for object recognition, segmentation, whatever it is. That has been essentially a complete failure, and yet it works really well for text. That’s the principle that is used for LLMs, right?

Hierarchical Planning

Lex Fridman (00:44:20) So yes, for a model predictive control, but you also often talk about hierarchical planning. Can hierarchical planning emerge from this somehow?

Yann LeCun (00:44:28) Well, so no, you will have to build a specific architecture to allow for hierarchical planning. So hierarchical planning is absolutely necessary if you want to plan complex actions. If I want to go from, let’s say from New York to Paris, it’s the example I use all the time, and I’m sitting in my office at NYU, my objective that I need to minimize is my distance to Paris. At a high level, a very abstract representation of my location, I would have to decompose this into two sub goals. First one is to go to the airport, and the second one is to catch a plane to Paris. Okay, so my sub goal is now going to the airport. My objective function is my distance to the airport. How do I go to the airport? Well I have to go in the street and hail a taxi, which you can do in New York.

(00:45:21) Okay, now I have another sub goal: go down on the street. Well that means going to the elevator, going down the elevator, walk out to the street. How do I go to the elevator? I have to stand up from my chair, open the door in my office, go to the elevator, push the button. How do I get up from my chair? You can imagine going down, all the way down, to basically what amounts to millisecond by millisecond muscle control. And obviously you’re not going to plan your entire trip from New York to Paris in terms of millisecond by millisecond muscle control. First, that would be incredibly expensive, but it will also be completely impossible because you don’t know all the conditions of what’s going to happen, how long it’s going to take to catch a taxi or to go to the airport with traffic. I mean, you would have to know exactly the condition of everything to be able to do this planning and you don’t have the information. So you have to do this hierarchical planning so that you can start acting and then sort of replanning as you go. And nobody really knows how to do this in AI. Nobody knows how to train a system to learn the appropriate multiple levels of representation so that hierarchical planning works.
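The decomposition LeCun walks through can be written down as a tree of subgoals. This sketch hand-codes that tree (the `DECOMPOSE` table is hypothetical, built from his example), which is precisely the part he says nobody knows how to learn; it only illustrates the control flow of refining the first subgoal down to an executable step while leaving later subgoals abstract until you reach them.

```python
# Hypothetical, hand-written subgoal decompositions from the New York -> Paris example.
DECOMPOSE = {
    "go to Paris": ["go to the airport", "catch a plane to Paris"],
    "go to the airport": ["go down to the street", "hail a taxi"],
    "go down to the street": ["go to the elevator", "take the elevator down",
                              "walk out to the street"],
    "go to the elevator": ["stand up from chair", "open office door",
                           "walk to elevator", "push button"],
}

def next_primitive_action(goal: str) -> list[str]:
    """Refine only the *first* subgoal at each level until reaching something
    with no further decomposition (treated as directly executable). Later
    subgoals stay abstract and are refined only when reached: plan, act,
    replan, instead of planning the whole trip at the finest level up front."""
    path = [goal]
    while goal in DECOMPOSE:
        goal = DECOMPOSE[goal][0]
        path.append(goal)
    return path
```

Calling `next_primitive_action("go to Paris")` descends to “stand up from chair” without ever expanding “catch a plane to Paris”, which is only refined once the taxi ride is done and more is known.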

Skepticism of Autoregressive LLMs

Lex Fridman (00:50:40) I would love to sort of linger on your skepticism around autoregressive LLMs. So one way I would like to test that skepticism is everything you say makes a lot of sense, but if I apply everything you said today and in general to I don’t know, 10 years ago, maybe a little bit less, no, let’s say three years ago, I wouldn’t be able to predict the success of LLMs. So does it make sense to you that autoregressive LLMs are able to be so damn good?

Yann LeCun (00:51:20) Yes.

Lex Fridman (00:51:21) Can you explain your intuition? Because if I were to take your wisdom and intuition at face value, I would say there’s no way autoregressive LLMs, one token at a time, would be able to do the kind of things they’re doing.

Yann LeCun (00:51:36) No, there’s one thing that autoregressive LLMs or that LLMs in general, not just the autoregressive ones, but including the BERT-style bidirectional ones, are exploiting and that’s self-supervised learning, and I’ve been a very, very strong advocate of self-supervised learning for many years. So those things are an incredibly impressive demonstration that self-supervised learning actually works. The idea that started, it didn’t start with BERT, but it was really kind of a good demonstration of this.

(00:52:09) So the idea that you take a piece of text, you corrupt it, and then you train some gigantic neural net to reconstruct the parts that are missing. That has produced an enormous amount of benefits. It allowed us to create systems that understand language, systems that can translate hundreds of languages in any direction, systems that are multilingual, so it’s a single system that can be trained to understand hundreds of languages and translate in any direction, and produce summaries and then answer questions and produce text.
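The “corrupt, then reconstruct” recipe amounts to generating training pairs from raw text with no human labels. A minimal sketch of the corruption step; real BERT-style training masks about 15% of tokens and sometimes substitutes a random or unchanged token instead of the mask, which this sketch omits.

```python
import random

MASK = "[MASK]"

def corrupt(tokens, mask_rate, rng):
    """Build one self-supervised training example: hide some tokens and keep
    the hidden originals as prediction targets. No human labels are needed."""
    corrupted, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_rate:
            corrupted.append(MASK)
            targets[i] = tok          # the network must reconstruct this
        else:
            corrupted.append(tok)
    return corrupted, targets
```

The network would then be trained to predict `targets[i]` at every masked position `i` of `corrupted`.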

(00:52:51) And then there’s a special case of it, which is the autoregressive trick where you constrain the system to not elaborate a representation of the text from looking at the entire text, but only predicting a word from the words that [have] come before. And you do this by constraining the architecture of the network, and that’s what you can build an autoregressive LLM from.
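In a transformer, this architectural constraint is typically implemented with a causal (lower-triangular) attention mask, so each position can attend only to itself and earlier positions. A minimal pure-Python sketch of such a mask applied to attention scores; real implementations do this with tensor operations, usually by setting masked scores to negative infinity before the softmax.

```python
import math

def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]

def masked_attention_weights(scores, mask):
    """Row-wise softmax over attention scores, giving zero weight to
    disallowed (future) positions."""
    weights = []
    for row, allowed in zip(scores, mask):
        m = max(s for s, ok in zip(row, allowed) if ok)   # for numerical stability
        exps = [math.exp(s - m) if ok else 0.0 for s, ok in zip(row, allowed)]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights
```

With this mask, the first position can only look at itself, and no position ever sees tokens to its right, which is what lets the same network be used for next-token prediction.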

(00:53:15) So there was a surprise many years ago with what’s called decoder-only LLMs. So systems of this type are just trying to produce words from the previous ones, and the fact [is] that when you scale them up, they tend to really understand more about language. When you train them on lots of data, you make them really big. That was a surprise and that surprise occurred quite a while back, with work from Google, Meta, OpenAI, et cetera, going back to the GPT kind of work, generative pre-trained transformers.

(00:54:50) We’re fooled by their fluency, right? We just assume that if a system is fluent in manipulating language, then it has all the characteristics of human intelligence, but that impression is false. We’re really fooled by it.

Lex Fridman (00:55:06) What do you think Alan Turing would say, without understanding anything, just hanging out with it?

Yann LeCun (00:55:11) Alan Turing would decide that a Turing test is a really bad test, okay? This is what the AI community has decided many years ago that the Turing test was a really bad test of intelligence.

(00:56:26) The conference I co-founded 14 years ago is called the International Conference on Learning Representations. That’s the entire issue that deep learning is dealing with, and it’s been my obsession for almost 40 years now. So learning representation is really the thing. For the longest time, we could only do this with supervised learning, and then we started working on what we used to call unsupervised learning and revived the idea of unsupervised learning in the early 2000s with [inaudible 00:56:58] and Geoff Hinton. Then [we] discovered that supervised learning actually works pretty well if you can collect enough data. And so the whole idea of unsupervised, self-supervised learning kind of took a backseat for a bit, and then I tried to revive it in a big way starting in 2014, basically when we started FAIR and really pushing for finding new methods to do self-supervised learning both for text and for images and for video and audio.

(00:59:02) And tried and tried and failed and failed, with generative models, with models that predict pixels. We could not get them to learn good representations of images. We could not get them to learn good representations of videos. And we tried many times, we published lots of papers on it, where they kind of, sort of work, but not really great. They started working when we abandoned this idea of predicting every pixel and basically just doing the joint embedding and predicting in representation space; that works. So there’s ample evidence that we’re not going to be able to learn good representations of the real world using generative models. So I’m telling people, everybody’s talking about generative AI. If you’re really interested in human level AI, abandon the idea of generative AI.
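The difference between predicting pixels and predicting in representation space shows up in where the loss is computed. The sketch below is a toy illustration, not LeCun’s JEPA implementation: the linear encoder, the identity predictor, and the inputs are all hypothetical. The encoder keeps only the first coordinate, so the “texture” in the other coordinates, which the target changes unpredictably, costs nothing under the joint-embedding loss but dominates the pixel loss. A real system also needs something to stop the encoders collapsing to a constant (for example a moving-average target encoder or variance regularization), which is omitted here.

```python
def encode(x, w):
    """Hypothetical linear encoder: each row of w produces one embedding coordinate."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]

def sq_dist(a, b):
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

def pixel_loss(predicted_pixels, target_pixels):
    # Generative objective: every detail of the target must be predicted.
    return sq_dist(predicted_pixels, target_pixels)

def joint_embedding_loss(context, target, w_ctx, w_tgt, predictor):
    """Joint-embedding objective: predict the target's *embedding* from the
    context's embedding; details the target encoder drops are never predicted."""
    s_x = encode(context, w_ctx)
    s_y = encode(target, w_tgt)
    return sq_dist(predictor(s_x), s_y)

if __name__ == "__main__":
    W = [[1.0, 0.0, 0.0]]            # keeps the "layout", drops the "texture"
    context = [1.0, 0.3, -0.2]
    target = [1.0, 5.0, 7.0]         # same layout, unpredictable texture
    print(pixel_loss(context, target))                               # naive copy-the-context prediction: large
    print(joint_embedding_loss(context, target, W, W, lambda s: s))  # 0.0
```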

Yann LeCun (01:01:18) But there’s probably more complex scenarios [...] which an LLM may never have encountered and may not be able to determine whether it’s possible or not. So that link from the low level to the high level, the thing is that the high level that language expresses is based on the common experience of the low level, which LLMs currently do not have. When we talk to each other, we know we have a common experience of the world. A lot of it is similar, and LLMs don’t have that.

(01:05:04) I mean, there’s 16,000 hours of wake time of a 4-year-old and 10^15 bytes going through vision, just vision, there is a similar bandwidth of touch and a little less through audio. And then text, language doesn’t come in until a year in life. And by the time you are nine months old, you’ve learned about gravity, you know about inertia, you know about gravity, the stability, you know about the distinction between animate and inanimate objects. You know by 18 months, you know about why people want to do things and you help them if they can’t. I mean, there’s a lot of things that you learn mostly by observation, really not even through interaction. In the first few months of life, babies don’t really have any influence on the world, they can only observe. And you accumulate a gigantic amount of knowledge just from that. So that’s what we’re missing from current AI systems.

Lex Fridman (01:06:06) I think in one of your slides, you have this nice plot that is one of the ways you show that LLMs are limited. I wonder if you could talk about hallucinations from your perspective, why hallucinations happen from large language models and to what degree is that a fundamental flaw of large language models?

Yann LeCun (01:06:29) Right, so because of the autoregressive prediction, every time an LLM produces a token or a word, there is some level of probability for that word to take you out of the set of reasonable answers. And if you assume, which is a very strong assumption, that those errors are independent across a sequence of tokens being produced, what that means is that every time you produce a token, the probability that you stay within the set of correct answers decreases and it decreases exponentially.
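Under LeCun’s explicitly “very strong” independence assumption, the probability of staying on track after n tokens with a per-token error rate e is (1 - e)^n. A one-function sketch:

```python
def p_stays_correct(per_token_error: float, n_tokens: int) -> float:
    """Probability that none of n independently generated tokens takes the
    answer out of the set of correct answers: (1 - e) ** n. Independence is
    the (self-described) very strong assumption from the interview."""
    return (1.0 - per_token_error) ** n_tokens
```

For example, with e = 0.01 the probability after 100 tokens is about 0.37, and after 1,000 tokens it is below 0.0001.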

(01:07:48) It’s basically a struggle against the curse of dimensionality. So the way you can correct for this is that you fine tune the system by having it produce answers for all kinds of questions that people might come up with.

(01:08:00) And people are people, so a lot of the questions that they have are very similar to each other, so you can probably cover 80% or whatever of questions that people will ask by collecting data and then you fine tune the system to produce good answers for all of those things, and it’s probably going to be able to learn that because it’s got a lot of capacity to learn. But then there is the enormous set of prompts that you have not covered during training, and that set is enormous, like within the set of all possible prompts, the proportion of prompts that have been used for training is absolutely tiny, it’s a tiny, tiny, tiny subset of all possible prompts.

(01:08:54) And so the system will behave properly on the prompts that have been either trained, pre-trained, or fine-tuned, but then there is an entire space of things that it cannot possibly have been trained on because the number is gigantic. So whatever training the system has been subject to produce appropriate answers, you can break it by finding out a prompt that will be outside of the set of prompts that’s been trained on, or things that are similar, and then it will just spew complete nonsense.

(01:10:55) The problem is that there is a long tail, this is an issue that a lot of people have realized in social networks and stuff like that, which is there’s a very, very long tail of things that people will ask and you can fine tune the system for the 80% or whatever of the things that most people will ask. And then this long tail is so large that you’re not going to be able to fine tune the system for all the conditions. And in the end, the system ends up being a giant lookup table essentially, which is not really what you want, you want systems that can reason, certainly that can plan.

(01:11:31) The type of reasoning that takes place in LLMs is very, very primitive, and the reason you can tell it’s primitive is because the amount of computation that is spent per token produced is constant. So if you ask a question and that question has an answer in a given number of tokens, the amount of computation devoted to computing that answer can be exactly estimated. It’s the size of the prediction network with its 36 layers or 92 layers or whatever it is multiplied by number of tokens, that’s it. And so essentially, it doesn’t matter if the question being asked is simple to answer, complicated to answer, impossible to answer because it’s undecidable or something, the amount of computation the system will be able to devote to the answer is constant or is proportional to the number of tokens produced in the answer. This is not the way we work, the way we reason is that when we’re faced with a complex problem or a complex question, we spend more time trying to solve it and answer it because it’s more difficult.
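A common rule of thumb, an approximation not taken from the interview, is that generating one token with a dense transformer costs about 2 FLOPs per parameter. The sketch below makes LeCun’s point explicit: the question never enters the formula. The 7e9 parameter count used in the example is just an illustrative value.

```python
def forward_flops(n_params: float, answer_tokens: int) -> float:
    """Approximate generation cost: ~2 FLOPs per parameter per token
    (rule of thumb; ignores the attention term that grows with context)."""
    return 2.0 * n_params * answer_tokens

def answer_cost(question: str, n_params: float, answer_tokens: int) -> float:
    # `question` is deliberately unused: under this model, how hard the
    # question is never affects how much computation the answer gets.
    return forward_flops(n_params, answer_tokens)
```

So `answer_cost("What is 2+2?", 7e9, 50)` and `answer_cost("Does this program halt?", 7e9, 50)` are identical; only a longer answer buys more computation.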

(01:13:37) Whether it’s difficult or not, the near future will say because a lot of people are working on reasoning and planning abilities for dialogue systems. Even if we restrict ourselves to language, just having the ability to plan your answer before you answer in terms that are not necessarily linked with the language you’re going to use to produce the answer, so this idea of this mental model that allows you to plan what you’re going to say before you say it, that is very important. I think there’s going to be a lot of systems over the next few years that are going to have this capability, but the blueprint of those systems will be extremely different from autoregressive LLMs.

(01:14:26) It’s the same difference as the difference between what psychologists call system one and system two in humans, so system one is the type of task that you can accomplish without deliberately, consciously thinking about how you do them, you just do them, you’ve done them enough that you can just do it subconsciously without thinking about them. If you’re an experienced driver, you can drive without really thinking about it and you can talk to someone at the same time or listen to the radio. If you are a very experienced chess player, you can play against a non-experienced chess player without really thinking either, you just recognize the pattern and you play. That’s system one, so all the things that you do instinctively without really having to deliberately plan and think about it.

(01:15:13) And then there is all the tasks where you need to plan, so if you are a not too experienced chess player or you are experienced where you play against another experienced chess player, you think about all kinds of options, you think about it for a while and you are much better if you have time to think about it than you are if you play blitz with limited time. So this type of deliberate planning, which uses your internal world model, that’s system two, this is what LLMs currently cannot do. How do we get them to do this? How do we build a system that can do this kind of planning or reasoning that devotes more resources to complex problems than to simple problems? And it’s not going to be autoregressive prediction of tokens, it’s going to be more something akin to inference of latent variables in what used to be called probabilistic models or graphical models and things of that type.

(01:16:17) Basically, the principle is like this, the prompt is like observed variables, and what the model does, is that basically, it can measure to what extent an answer is a good answer for a prompt. So think of it as some gigantic neural net, but it’s got only one output, and that output is a scalar number, which is, let’s say, zero, if the answer is a good answer for the question and a large number, if the answer is not a good answer for the question. Imagine you had this model, if you had such a model, you could use it to produce good answers, the way you would do is, produce the prompt and then search through the space of possible answers for one that minimizes that number, that’s called an energy based model.
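An energy-based model turns answering into search: score each candidate with a scalar energy and keep the minimum. In this sketch the energy function is hypothetical and hand-written for a single toy arithmetic prompt, standing in for the trained scalar-output network LeCun describes, and the candidate list is supplied by hand.

```python
def energy(prompt: str, answer: str) -> float:
    """Hypothetical energy: 0 for a correct answer to an 'a+b' prompt, growing
    with how wrong the answer is. A trained network would replace this."""
    a, b = (int(t) for t in prompt.split("+"))
    try:
        return float(abs(int(answer) - (a + b)))
    except ValueError:
        return float("inf")          # not even a number: maximally bad

def infer(prompt: str, candidates: list[str]) -> str:
    """Inference as search: return the candidate with the lowest energy."""
    return min(candidates, key=lambda ans: energy(prompt, ans))
```

For example, `infer("2+3", ["4", "5", "six", "50"])` returns `"5"`, the only candidate with zero energy.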

(01:17:18) So really what you need to do would be to not search over possible strings of text that minimize that energy. But what you would do, we do this in abstract representation space, so in the space of abstract thoughts, you would elaborate a thought using this process of minimizing the output of your model, which is just a scalar, it’s an optimization process. So now the way the system produces its answer is through optimization by minimizing an objective function basically. And we’re talking about inference, we’re not talking about training, the system has been trained already.
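When the answer lives in a continuous representation space, the search can be done by gradient descent on the energy instead of enumerating candidates. This is a toy sketch under stated assumptions: the “thought” is a plain list of floats, the energy is a hypothetical quadratic whose minimum depends on the prompt vector, and the decoder that would turn the final thought into text is not modeled. The number of optimization steps is a knob, so harder problems could in principle be given more computation.

```python
def thought_energy(prompt_vec, z):
    """Hypothetical differentiable energy over a 'thought' vector z: a
    quadratic bowl whose minimum depends on the prompt. Stands in for a
    trained network with a single scalar output."""
    target = [2.0 * p for p in prompt_vec]
    return sum((zi - ti) ** 2 for zi, ti in zip(z, target))

def grad_thought_energy(prompt_vec, z):
    # Analytic gradient of thought_energy with respect to z.
    target = [2.0 * p for p in prompt_vec]
    return [2.0 * (zi - ti) for zi, ti in zip(z, target)]

def think(prompt_vec, steps=100, lr=0.1):
    """Inference by optimization: the model is fixed, only the thought z
    changes, moving downhill on the energy for `steps` iterations."""
    z = [0.0] * len(prompt_vec)
    for _ in range(steps):
        g = grad_thought_energy(prompt_vec, z)
        z = [zi - lr * gi for zi, gi in zip(z, g)]
    return z
```

With `lr=0.1` each step shrinks the distance to the minimum by a factor of 0.8, so `think([1.0, -0.5])` ends up very close to `[2.0, -1.0]`.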

(01:18:01) Now we have an abstract representation of the thought of the answer, representation of the answer, we feed that to basically an autoregressive decoder, which can be very simple, that turns this into a text that expresses this thought. So that, in my opinion, is the blueprint of future dialogue systems, they will think about their answer, plan their answer by optimization before turning it into text, and that is Turing complete.

RL(HF)

Lex Fridman (01:29:38) The last recommendation is that we abandon RL in favor of model predictive control, as you were talking about, and only use RL when planning doesn’t yield the predicted outcome, and we use RL in that case to adjust the world model or the critic.

Yann LeCun (01:29:55) Yes.

Lex Fridman (01:29:57) You’ve mentioned RLHF, reinforcement learning with human feedback, why do you still hate reinforcement learning?

Yann LeCun (01:30:05) I don’t hate reinforcement learning, and I think it should not be abandoned completely, but I think its use should be minimized because it’s incredibly inefficient in terms of samples. And so the proper way to train a system is to first have it learn good representations of the world and world models from mostly observation, maybe a little bit of interactions.

Lex Fridman (01:30:31) And then steer based on that; if the representation is good, then the adjustments should be minimal.

Yann LeCun (01:30:36) Yeah. Now there’s two things. If you’ve learned a world model, you can use the world model to plan a sequence of actions to arrive at a particular objective. You don’t need RL unless the way you measure whether you succeed might be inexact. Your idea of whether you are going to fall from your bike might be wrong, or whether the person you’re fighting in MMA was going to do something, and then they do something else. So there’s two ways you can be wrong: either your objective function does not reflect the actual objective function you want to optimize, or your world model is inaccurate, so the prediction you were making about what was going to happen in the world is inaccurate.
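The planning half of this answer is essentially model predictive control, the term Lex uses above. A minimal sketch, assuming a hypothetical 1-D world model, three discrete actions, and exhaustive search over a short horizon (real systems would optimize over continuous action sequences):

```python
from itertools import product

ACTIONS = (-1.0, 0.0, 1.0)

def world_model(x: float, a: float) -> float:
    # Hypothetical learned dynamics: each action shifts the position by a.
    return x + a

def plan(x0: float, goal: float, horizon: int = 3) -> tuple:
    """Return the action sequence whose *imagined* trajectory has the lowest cost."""
    best_seq, best_cost = None, float("inf")
    for seq in product(ACTIONS, repeat=horizon):
        x, cost = x0, 0.0
        for a in seq:
            x = world_model(x, a)                   # predict, don't act
            cost += abs(x - goal) + 0.01 * abs(a)   # distance to goal + effort
        if cost < best_cost:
            best_seq, best_cost = seq, cost
    return best_seq

def run_mpc(x: float, goal: float, steps: int) -> float:
    # Receding horizon: replan at every step, execute only the first action.
    for _ in range(steps):
        x = world_model(x, plan(x, goal)[0])  # here the real world matches the model
    return x

print(run_mpc(0.0, 2.0, steps=5))  # 2.0
```

No RL is involved because the world model and the cost are assumed correct; RL would only come in if `world_model` or the cost disagreed with what actually happens, the two failure modes LeCun lists.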

(01:31:25) If you want to adjust your world model while you are operating in the world, or your objective function, that is basically in the realm of RL. This is what RL deals with to some extent, so adjust your world model. And the way to adjust your world model even in advance is to explore parts of the space where you know that your world model is inaccurate. That’s called curiosity basically, or play. When you play, you explore parts of the space that you don’t want to do for real because it might be dangerous, but you can adjust your world model without killing yourself basically. So that’s what you want to use RL for. When it comes time to learn a particular task, you already have all the good representations, you already have your world model, but you need to adjust it for the situation at hand; that’s when you use RL.
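Adjusting an existing world model, as opposed to learning a policy from scratch, can be illustrated with a one-parameter example. This is a sketch under loud assumptions: the linear world model, the hidden gain of 0.5 in `true_env`, and the scripted alternating probe actions are all hypothetical, and the update is plain online gradient descent on prediction error rather than any specific RL algorithm.

```python
def true_env(x: float, a: float) -> float:
    # The real world (unknown to the agent): actions have half the assumed effect.
    return x + 0.5 * a

def adapt_world_model(k: float = 1.0, lr: float = 0.1, steps: int = 100) -> float:
    """Correct the world model x' = x + k * a online from its own prediction errors."""
    x = 0.0
    for t in range(steps):
        a = 1.0 if t % 2 == 0 else -1.0   # scripted "play": cheap, safe probing actions
        predicted = x + k * a
        actual = true_env(x, a)
        error = predicted - actual
        k -= lr * error * a                # gradient step on 0.5 * error**2 w.r.t. k
        x = actual
    return k

print(round(adapt_world_model(), 3))  # 0.5
```

A curiosity-driven agent would choose the probe actions itself, favouring those where the model's prediction error is largest; the script here keeps the example deterministic.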

Lex Fridman (01:32:26) Why do you think RLHF works so well? This reinforcement learning with human feedback, why did it have such a transformational effect on large language models than before?

Yann LeCun (01:32:38) What’s had the transformational effect is human feedback, there are many ways to use it, and some of it is just purely supervised, actually, it’s not really reinforcement learning.

Lex Fridman (01:32:49) It’s the HF?

Yann LeCun (01:32:50) It’s the HF, and then there are various ways to use human feedback. So you can ask humans to rate multiple answers that are produced by the model, and then what you do is you train an objective function to predict that rating, and then you can use that objective function to predict whether an answer is good, and you can backpropagate gradients through this to fine-tune your system so that it only produces highly rated answers. That’s one way. So in RL, that means training what’s called a reward model, something that basically is a small neural net that estimates to what extent an answer is good.
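The variant LeCun describes first (regress a scalar score onto human ratings, then use it to judge answers) can be sketched as below. Everything here is a hypothetical assumption: three hand-made features instead of a neural net, a four-example rating set, and plain least-squares gradient descent. Many RLHF pipelines instead train on pairwise comparisons, and the fine-tuning step he mentions is not shown; the learned score is only used to rank candidates, which is closer to the planning use he prefers.

```python
def features(answer: str) -> list:
    # Hypothetical hand-made features standing in for a neural net's embedding:
    # [bias, normalized length, contains "sorry"].
    return [1.0, min(len(answer), 200) / 200.0, float("sorry" in answer.lower())]

def score(w: list, answer: str) -> float:
    # The reward model: a scalar estimate of how good an answer is.
    return sum(wi * fi for wi, fi in zip(w, features(answer)))

def train_reward_model(rated: list, epochs: int = 2000, lr: float = 0.1) -> list:
    """Least-squares fit so that score(w, answer) predicts the human rating."""
    w = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        grad = [0.0, 0.0, 0.0]
        for answer, rating in rated:
            err = score(w, answer) - rating
            for i, fi in enumerate(features(answer)):
                grad[i] += 2.0 * err * fi / len(rated)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w

ratings = [  # hypothetical human ratings on a 1-5 scale
    ("Sorry, I can't help.", 1.0),
    ("Paris is the capital of France.", 5.0),
    ("Sorry.", 1.0),
    ("The capital of France is Paris, a city on the Seine.", 5.0),
]
w = train_reward_model(ratings)
print(max(["I'm sorry.", "Paris."], key=lambda a: score(w, a)))  # Paris.
```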

(01:33:35) It’s very similar to the objective I was talking about earlier for planning, except now it’s not used for planning, it’s used for fine-tuning your system. I think it would be much more efficient to use it for planning, but currently, it’s used to fine tune the parameters of the system. There’s several ways to do this, some of them are supervised, you just ask a human person like, what is a good answer for this? Then you just type the answer. There’s lots of ways that those systems are being adjusted.

Bias / Open Source

Yann LeCun (01:36:23) Is it possible to produce an AI system that is not biased? And the answer is, absolutely not. And it’s not because of technological challenges, although there are technological challenges to that; it’s because bias is in the eye of the beholder. Different people may have different ideas about what constitutes bias. For a lot of things, there are facts that are indisputable, but there are a lot of opinions or things that can be expressed in different ways. And so you cannot have an unbiased system; that’s just an impossibility.

(01:40:35) I talked to the French government quite a bit, and the French government will not accept that the digital diet of all their citizens be controlled by three companies on the west coast of the US. That’s just not acceptable, it’s a danger to democracy regardless of how well-intentioned those companies are, and it’s also a danger to local culture, to values, to language.

(01:42:53) The only way you’re going to have an AI industry, the only way you’re going to have AI systems that are not uniquely biased is if you have open source platforms on top of which any group can build specialized systems. So the inevitable direction of history is that the vast majority of AI systems will be built on top of open source platforms.

Lex Fridman (01:45:43) Again, I’m no business guy, but if you release the open source model, then other people can do the same kind of task and compete on it, basically provide fine-tuned models for businesses.

Yann LeCun (01:46:05) The bet is more, “We already have a huge user base and customer base, so it’s going to be useful to them. Whatever we offer them is going to be useful and there is a way to derive revenue from this.”

(01:46:22) It doesn’t hurt that we provide that system or the base model, the foundation model in open source for others to build applications on top of it too. If those applications turn out to be useful for our customers, we can just buy it from them. It could be that they will improve the platform. In fact, we see this already. There are literally millions of downloads of LLaMA 2 and thousands of people who have provided ideas about how to make it better. So this clearly accelerates progress to make the system available to a wide community of people, and there’s literally thousands of businesses who are building applications with it. So Meta’s ability to derive revenue from this technology is not impaired by the distribution of base models in open source.

Business and Open Source

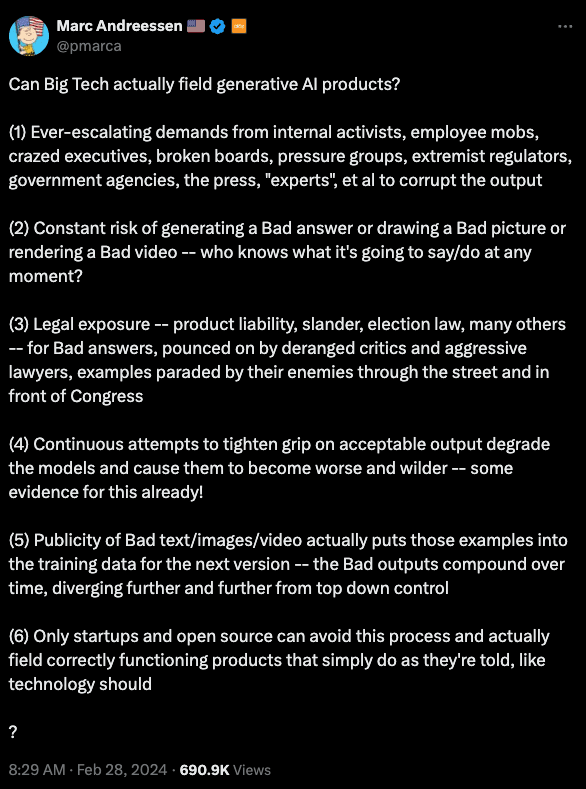

Lex Fridman (01:49:59) Marc Andreessen just tweeted[3] today. Let me do a TL;DR. The conclusion is only startups and open source can avoid the issue that he’s highlighting with big tech. He’s asking, “Can Big Tech actually field generative AI products?”

Yann LeCun (01:51:45) Marc is right about a number of things that he lists that indeed scare large companies. Certainly, congressional investigations are one of them, legal liability, making things that get people to hurt themselves or hurt others. Big companies are really careful about not producing things of this type because they don’t want to hurt anyone, first of all, and then second, they want to preserve their business. So it’s essentially impossible for systems like this that can inevitably formulate political opinions, and opinions about various things that may be political or not, but that people may disagree about, about moral issues and questions about religion and things like that or cultural issues that people from different communities would disagree with in the first place. So there’s only a relatively small number of things that people will agree on as basic principles, but beyond that, if you want those systems to be useful, they will necessarily have to offend a number of people, inevitably.

Lex Fridman (01:53:09) So open source is just better and then you get-

Yann LeCun (01:53:11) Diversity is better, right?

Lex Fridman (01:53:13) And open source enables diversity.

Yann LeCun (01:53:15) That’s right. Open source enables diversity.

Safety of (Current) LLMs

Lex Fridman (01:55:13) But still even with the objectives of how to build a bioweapon, for example, I think something you’ve commented on, or at least there’s a paper where a collection of researchers is trying to understand the social impacts of these LLMs. I guess one threshold that’s nice is, does the LLM make it any easier than a search would, like a Google search would?

Yann LeCun (01:55:39) Right. So the increasing number of studies on this seems to point to the fact that it doesn’t help. So having an LLM doesn’t help you design or build a bioweapon or a chemical weapon if you already have access to a search engine and a library. So the increased information you get or the ease with which you get it doesn’t really help you. That’s the first thing. The second thing is, it’s one thing to have a list of instructions of how to make a chemical weapon, for example, or a bioweapon. It’s another thing to actually build it, and it’s much harder than you might think, and an LLM will not help you with that.

(01:56:25) In fact, nobody in the world, not even countries, uses bioweapons, because most of the time they have no idea how to protect their own populations against them. So it’s too dangerous, actually, to ever use, and it’s, in fact, banned by international treaties. Chemical weapons are different. They’re also banned by treaties, but it’s the same problem: they’re difficult to use in situations that don’t turn against the perpetrators. But we could ask Elon Musk. I can give you a very precise list of instructions of how you build a rocket engine. Even if you have a team of 50 engineers that are really experienced building it, you’re still going to have to blow up a dozen of them before you get one that works. It’s the same with chemical weapons or bioweapons or things like this: it requires expertise in the real world that the LLM is not going to help you with.

I kind of wish Lex had pushed more on why he thinks this will continue in the future.

LLaMAs

Lex Fridman (01:57:51) Just to linger on LLaMA, Mark announced that LLaMA 3 is coming out eventually. I don’t think there’s a release date, but what are you most excited about? First of all, LLaMA 2 that’s already out there, and maybe the future LLaMA 3, 4, 5, 6, 10, just the future of open source under Meta?

Yann LeCun (01:58:17) Well, a number of things. So there’s going to be various versions of LLaMA that are improvements of previous LLaMAs, bigger, better, multimodal, things like that. Then in future generations, systems that are capable of planning that really understand how the world works, maybe are trained from video, so they have some world model. Maybe capable of the type of reasoning and planning I was talking about earlier. How long is that going to take? When is the research that is going in that direction going to feed into the product line if you want of LLaMA? I don’t know. I can’t tell you. There’s a few breakthroughs that we have to basically go through before we can get there, but you’ll be able to monitor our progress because we publish our research. So last week we published the V-JEPA work, which is a first step towards training systems for video.

(01:59:16) Then the next step is going to be world models based on this type of idea, training from video. There’s similar work taking place at DeepMind also, and at UC Berkeley, on world models and video. A lot of people are working on this. I think a lot of good ideas are appearing. My bet is that those systems are going to be JEPA-like; they’re not going to be generative models, and we’ll see what the future will tell. There’s really good work by a gentleman called Danijar Hafner, who is now at DeepMind, who’s worked on models of this type that learn representations and then use them for planning or learning tasks by reinforcement training, and a lot of work at Berkeley by Pieter Abbeel, Sergey Levine, and a bunch of other people of that type I’m collaborating with actually in the context of some grants with my NYU hat.

(02:00:20) Then collaboration is also through Meta ’cause the lab at Berkeley is associated with Meta in some way, so with FAIR. So I think it is very exciting. I haven’t been that excited about the direction of machine learning and AI since 10 years ago when FAIR was started. Before that, 30 years ago, we were working, oh, sorry, 35, on convolutional nets and the early days of neural nets. So I’m super excited because I see a path towards potentially human-level intelligence with systems that can understand the world, remember, plan, reason. There is some set of ideas to make progress there that might have a chance of working, and I’m really excited about this. What I’d like is that somehow we get onto a good direction and perhaps succeed before my brain turns to a white sauce or before I need to retire.

GPUs vs the Human Brain

Yann LeCun (02:02:32) We’re still far in terms of compute power from what we would need to match the compute power of the human brain. This may occur in the next couple of decades, but we’re still some ways away. Certainly, in terms of power efficiency, we’re really far, so there’s a lot of progress to make in hardware. Right now, a lot of the progress is, there’s a bit coming from silicon technology, but a lot of it coming from architectural innovation and quite a bit coming from more efficient ways of implementing the architectures that have become popular, basically a combination of transformers and convnets, and so there’s still some ways to go until we are going to saturate. We’re going to have to come up with new principles, new fabrication technology, new basic components perhaps based on different principles than classical digital.

Lex Fridman (02:03:42) Interesting. So you think in order to build AMI, we potentially might need some hardware innovation too.

Yann LeCun (02:03:52) Well, if we want to make it ubiquitous, yeah, certainly, ’cause we’re going to have to reduce the power consumption. A GPU today is half a kilowatt to a kilowatt. Human brain is about 25 watts, and a GPU is way below the power of the human brain. You need something like 100,000 or a million to match it, so we are off by a huge factor here.

Claims:

- A human brain uses 25 watts. I found references in the 15-25 watt range.

- A brain is 10^5 - 10^6 times more powerful than a GPU. Joe Carlsmith covers this in great detail. He says "Overall, I think it more likely than not that 1e15 FLOP/s is enough to perform tasks as well as the human brain (given the right software, which may be very hard to create). And I think it unlikely (<10%) that more than 1e21 FLOP/s is required." An H100 running FP8 calculations can do ~4e15 FLOP/s. So it's possible that a single GPU is enough, and at most about 250,000 (1e21 / 4e15) would be needed. LeCun's claim is roughly an upper bound, but there's a lot of uncertainty.

- Steven Byrnes points out in the comments that LeCun seems to be focusing on the 10^14 synapses in the human brain, compared to the number of neuron-to-neuron connections a GPU can handle.
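The arithmetic behind these bullets, written out so each assumption is explicit. It uses only the figures quoted above. One caveat, stated with some hedging: ~4e15 FLOP/s corresponds to NVIDIA's with-sparsity FP8 figure for the H100 SXM; dense FP8 throughput is roughly half that, which would double both GPU counts.

```python
H100_FP8_FLOPS = 4e15   # FLOP/s, the figure used in the bullets above
BRAIN_LIKELY = 1e15     # FLOP/s Carlsmith thinks more likely than not sufficient
BRAIN_HIGH = 1e21       # FLOP/s; he puts <10% on more than this being required

gpus_likely = BRAIN_LIKELY / H100_FP8_FLOPS  # 0.25: a fraction of one GPU
gpus_high = BRAIN_HIGH / H100_FP8_FLOPS      # 250,000 GPUs

# Power is a separate axis: LeCun's 0.5-1 kW per GPU against ~25 W for the brain.
power_ratio = (500 / 25, 1000 / 25)          # each GPU draws 20x to 40x the brain's power

print(gpus_likely, gpus_high, power_ratio)
```

Even at the optimistic end, where one GPU would supply enough compute, the power gap of 20x to 40x per GPU remains, which is the part of LeCun's argument about making AI ubiquitous.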

What does AGI / AMI Look Like?

Lex Fridman (02:04:21) You often say that AGI is not coming soon, meaning not this year, not the next few years, potentially farther away. What’s your basic intuition behind that?

Yann LeCun (02:04:35) So first of all, it’s not going to be an event. The idea somehow, which is popularized by science fiction and Hollywood, that somehow somebody is going to discover the secret to AGI or human-level AI or AMI, whatever you want to call it, and then turn on a machine and then we have AGI, that’s just not going to happen. It’s not going to be an event. It’s going to be gradual progress. Are we going to have systems that can learn from video how the world works and learn good representations? Yeah. Before we get them to the scale and performance that we observe in humans, it’s going to take quite a while. It’s not going to happen in one day. Are we going to get systems that can have large amounts of associative memory so they can remember stuff? Yeah, but same, it’s not going to happen tomorrow. There are some basic techniques that need to be developed. We have a lot of them, but to get this to work together with a full system is another story.

(02:05:37) Are we going to have systems that can reason and plan perhaps along the lines of objective-driven AI architectures that I described before? Yeah, but before we get this to work properly, it’s going to take a while. Before we get all those things to work together, and then on top of this, have systems that can learn hierarchical planning, hierarchical representations, systems that can be configured for a lot of different situations at hand, the way the human brain can, all of this is going to take at least a decade and probably much more, because there are a lot of problems that we’re not seeing right now, that we have not encountered, so we don’t know if there is an easy solution within this framework. So it’s not just around the corner. I’ve been hearing people for the last 12, 15 years claiming that AGI is just around the corner and being systematically wrong. I knew they were wrong when they were saying it. I called their bullshit.

I'd love more detail on LeCun's reasons for ruling out recursive self-improvement / hard takeoff[4].

AI Doomers

Lex Fridman (02:08:48) So you push back against what are called AI doomers a lot. Can you explain their perspective and why you think they’re wrong?

Yann LeCun (02:08:59) Okay, so AI doomers imagine all kinds of catastrophe scenarios of how AI could escape our control and basically kill us all, and that relies on a whole bunch of assumptions that are mostly false. So the first assumption is that the emergence of super intelligence is going to be an event, that at some point we’re going to figure out the secret and we’ll turn on a machine that is super intelligent, and because we’d never done it before, it’s going to take over the world and kill us all. That is false. It’s not going to be an event. We’re going to have systems that are as smart as a cat, have all the characteristics of human-level intelligence, but their level of intelligence would be like a cat or a parrot maybe or something. Then we’re going to work our way up to make those things more intelligent. As we make them more intelligent, we’re also going to put some guardrails in them and learn how to put some guardrails so they behave properly.

(02:10:03) It’s not going to be one effort; it’s going to be lots of different people doing this, and some of them are going to succeed at making intelligent systems that are controllable and safe and have the right guardrails. If some others go rogue, then we can use the good ones to go against the rogue ones. So it’s going to be my smart AI police against your rogue AI. So it’s not going to be like we’re going to be exposed to a single rogue AI that’s going to kill us all. That’s just not happening. Now, there is another fallacy, which is the fact that because the system is intelligent, it necessarily wants to take over. There are several arguments that make people scared of this, which I think are completely false as well.

(02:10:48) So one of them is that in nature, it seems to be that the more intelligent species end up dominating the others and even extinguishing the others, sometimes by design, sometimes just by mistake. So there is thinking by which you say, “Well, if AI systems are more intelligent than us, surely they’re going to eliminate us, if not by design, simply because they don’t care about us,” and that’s just preposterous for a number of reasons. First reason is they’re not going to be a species. They’re not going to be a species that competes with us. They’re not going to have the desire to dominate because the desire to dominate is something that has to be hardwired into an intelligent system. It is hardwired in humans. It is hardwired in baboons, in chimpanzees, in wolves, not in orangutans. This desire to dominate or submit or attain status in other ways is specific to social species. Non-social species like orangutans don’t have it, and they are as smart as we are, almost, right?

Yann LeCun (02:12:27) Right? This is the way we’re going to build them. So then people say, “Oh, but look at LLMs. LLMs are not controllable,” and they’re right. LLMs are not controllable. But objective-driven AI, so systems that derive their answers by optimization of an objective, means they have to optimize this objective, and that objective can include guardrails. One guardrail is, obey humans. Another guardrail is, don’t obey humans if it’s hurting other humans within limits.
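LeCun does not say how guardrails enter the objective. One simple reading, sketched here purely as an assumption, treats each guardrail as a penalty term added to the task cost, with the harm penalty large enough to override obedience. The `Candidate` type, the candidate actions, and the weights are all hypothetical; choosing such terms well is the iterative design problem he goes on to discuss.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Candidate:
    name: str
    task_cost: float    # how poorly it serves the request (lower is better)
    obeys_user: bool
    harms_others: bool

def objective(c: Candidate) -> float:
    cost = c.task_cost
    if c.harms_others:
        cost += 1e6     # guardrail 2 (don't hurt other humans): dominates every other term
    if not c.obeys_user:
        cost += 10.0    # guardrail 1 (obey humans): outweighed only by guardrail 2
    return cost

def choose(candidates):
    # Objective-driven: the output is whatever minimizes the full objective.
    return min(candidates, key=objective)

harmful = Candidate("comply harmfully", 0.0, True, True)
refuse = Candidate("refuse", 5.0, False, False)
safe = Candidate("comply safely", 1.0, True, False)
print(choose([harmful, refuse, safe]).name)  # comply safely
print(choose([harmful, refuse]).name)        # refuse
```

Because the guardrails live in the objective rather than in a filter applied afterwards, the system refuses only when no obedient, harmless option scores better.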

Lex Fridman (02:12:57) Right. I’ve heard that before somewhere, I don’t remember-

Yann LeCun (02:12:59) Yes, maybe in a book.

Lex Fridman (02:13:01) Yeah, but speaking of that book, could there be unintended consequences also from all of this?

Yann LeCun (02:13:09) No, of course. So this is not a simple problem. Designing those guardrails so that the system behaves properly is not going to be a simple issue for which there is a silver bullet for which you have a mathematical proof that the system can be safe. It’s going to be a very progressive, iterative design system where we put those guardrails in such a way that the system behaves properly. Sometimes they’re going to do something that was unexpected because the guardrail wasn’t right and we'd correct them so that they do it right. The idea somehow that we can’t get it slightly wrong because if we get it slightly wrong, we’ll die is ridiculous. We are just going to go progressively. It is just going to be, the analogy I’ve used many times is turbojet design. How did we figure out how to make turbojets so unbelievably reliable?

(02:14:07) Those are incredibly complex pieces of hardware that run at really high temperatures for 20 hours at a time sometimes, and we can fly halfway around the world on a two-engine jetliner at near the speed of sound. Like how incredible is this? It’s just unbelievable. Did we do this because we invented a general principle of how to make turbojets safe? No, it took decades to fine tune the design of those systems so that they were safe. Is there a separate group within General Electric or Snecma or whatever that is specialized in turbojet safety? No. The design is all about safety, because a better turbojet is also a safer turbojet, so a more reliable one. It’s the same for AI. Do you need specific provisions to make AI safe? No, you need to make better AI systems, and they will be safe because they are designed to be more useful and more controllable.

I think this analogy breaks down in several ways and I wish Lex had pushed back a bit.

What Does a World with AGI Look Like (Especially Re Safety)?

Lex Fridman (02:15:16) So let’s imagine an AI system that’s able to be incredibly convincing and can convince you of anything. I can at least imagine such a system, and I can see such a system be weapon-like because it can control people’s minds. We’re pretty gullible. We want to believe a thing, and you can have an AI system that controls it and you could see governments using that as a weapon. So do you think if you imagine such a system, there’s any parallel to something like nuclear weapons?

Yann LeCun (02:15:53) No.

Yann LeCun (02:16:30) [...] They’re not going to be talking to you, they’re going to be talking to your AI assistant, which is going to be as smart as theirs. Because as I said, in the future, every single one of your interactions with the digital world will be mediated by your AI assistant. So the first thing you’re going to ask, is this a scam? Is this thing telling me the truth? It’s not even going to be able to get to you because it’s only going to talk to your AI assistant. It’s going to be like a spam filter. You’re not even seeing the email, the spam email. It’s automatically put in a folder that you never see. It’s going to be the same thing. That AI system that tries to convince you of something is going to be talking to an AI assistant, which is going to be at least as smart as it, and it’s going to say, “This is spam.” It’s not even going to bring it to your attention.

Lex Fridman (02:17:32) So to you, it’s very difficult for any one AI system to take such a big leap ahead to where it can convince even the other AI systems. There’s always going to be this kind of race where nobody’s way ahead.

Yann LeCun (02:17:46) That’s the history of the world. History of the world is whenever there is progress someplace, there is a countermeasure and it’s a cat and mouse game.

(02:18:48) Probably first within industry. This is not a domain where government or military organizations are particularly innovative and they’re in fact way behind. And so this is going to come from industry and this kind of information disseminates extremely quickly. We’ve seen this over the last few years where you have a new … Even take AlphaGo, this was reproduced within three months even without particularly detailed information, right?

Lex Fridman (02:19:18) Yeah. This is an industry that’s not good at secrecy.

Yann LeCun (02:19:21) No. But even if there is, just the fact that you know that something is possible makes you realize that it’s worth investing the time to actually do it. You may be the second person to do it, but you’ll do it. And same for all the innovations of self supervision in transformers, decoder only architectures, LLMs. Those things, you don’t need to know exactly the details of how they work to know that it’s possible because it’s deployed and then it’s getting reproduced. And then people who work for those companies move. They go from one company to another and the information disseminates. What makes the success of the US tech industry and Silicon Valley in particular is exactly that, is because the information circulates really, really quickly and disseminates very quickly. And so the whole region is ahead because of that circulation of information.

(02:21:37) Well, there is a natural fear of new technology and the impact it can have in society. And people have instinctive reactions to the world they know being threatened by major transformations that are either cultural phenomena or technological revolutions. And they fear for their culture, they fear for their job, they fear for the future of their children and their way of life. So any change is feared. And you see this along history, any technological revolution or cultural phenomenon was always accompanied by groups or reactions in the media that basically attributed all the current problems of society to that particular change. Electricity was going to kill everyone at some point. The train was going to be a horrible thing because you can’t breathe past 50 kilometers an hour. And so there’s a wonderful website called the Pessimist Archive.

(02:22:57) Which has all those newspaper clips of all the horrible things people imagine would arrive because of either a technological innovation or a cultural phenomenon, just wonderful examples of jazz or comic books being blamed for unemployment or young people not wanting to work anymore and things like that. And that has existed for centuries and it’s knee-jerk reactions. The question is do we embrace change or do we resist it? And what are the real dangers as opposed to the imagined ones?

Lex Fridman (02:23:51) So people worry about, I think one thing they worry about with big tech, something we’ve been talking about over and over, but I think worth mentioning again, they worry about how powerful AI will be and they worry about it being in the hands of one centralized power of just a handful of central control. And so that’s the skepticism with big tech: these companies can make a huge amount of money and control this technology, and by so doing take advantage of and abuse the little guy in society.

Yann LeCun (02:24:29) Well, that’s exactly why we need open source platforms.

Big Companies and AI

Lex Fridman (02:24:38) So let me ask you on your, like I said, you do get a little bit flavorful on the internet. Joscha Bach tweeted something that you LOL’d at in reference to HAL 9000. Quote, “I appreciate your argument and I fully understand your frustration, but whether the pod bay doors should be opened or closed is a complex and nuanced issue.” So you’re at the head of Meta AI. This is something that really worries me, that our AI overlords will speak down to us with corporate speak of this nature, and you resist that with your way of being. Is this something you can just comment on, working at a big company, how you can avoid the over-fearing, I suppose, that through caution creates harm?

Yann LeCun (02:25:41) Yeah. Again, I think the answer to this is open source platforms and then enabling a widely diverse set of people to build AI assistants that represent the diversity of cultures, opinions, languages, and value systems across the world so that you’re not bound to just be brainwashed by a particular way of thinking because of a single AI entity. So, I think it’s a really, really important question for society. And the problem I’m seeing is that, which is why I’ve been so vocal and sometimes a little sardonic about it, is because I see the danger of this concentration of power through proprietary AI systems as a much bigger danger than everything else. That if we really want diversity of opinion in AI systems, that in the future where we’ll all be interacting through AI systems, we need those to be diverse for the preservation of diversity of ideas and creed and political opinions and whatever, and the preservation of democracy. And what works against this is people who think that for reasons of security, we should keep the AI systems under lock and key because it’s too dangerous to put it in the hands of everybody, because it could be used by terrorists or something. That would lead to potentially a very bad future in which all of our information diet is controlled by a small number of companies through proprietary systems.

Hope for the Future of Humanity

Lex Fridman (02:37:53) What hope do you have for the future of humanity? We’re talking about so many exciting technologies, so many exciting possibilities. What gives you hope when you look out over the next 10, 20, 50, a hundred years? If you look at social media, there’s wars going on, there’s division, there’s hatred, all this kind of stuff that’s also part of humanity. But amidst all that, what gives you hope?

Yann LeCun (02:38:29) I love that question. We can make humanity smarter with AI. AI basically will amplify human intelligence. It’s as if every one of us will have a staff of smart AI assistants. They might be smarter than us. They’ll do our bidding, perhaps execute a task in ways that are much better than we could do ourselves, because they’d be smarter than us. And so it’s like everyone would be the boss of a staff of super smart virtual people. So we shouldn’t feel threatened by this any more than we should feel threatened by being the manager of a group of people, some of whom are more intelligent than us. I certainly have a lot of experience with this, of having people working with me who are smarter than me.

(02:39:35) That’s actually a wonderful thing. So having machines that are smarter than us, that assist us in all of our tasks, our daily lives, whether it’s professional or personal, I think would be an absolutely wonderful thing. Because intelligence is the commodity that is most in demand. That’s really what I mean. All the mistakes that humanity makes is because of lack of intelligence really, or lack of knowledge, which is related. So making people smarter, we just can only be better. For the same reason that public education is a good thing and books are a good thing, and the internet is also a good thing, intrinsically and even social networks are a good thing if you run them properly.

(02:40:21) It’s difficult, but you can. Because it helps the communication of information and knowledge and the transmission of knowledge. So AI is going to make humanity smarter. And the analogy I’ve been using is the fact that perhaps an equivalent event in the history of humanity to what might be provided by generalization of AI assistants is the invention of the printing press. It made everybody smarter, the fact that people could have access to books. Books were a lot cheaper than they were before, and so a lot more people had an incentive to learn to read, which wasn’t the case before.

(02:41:14) And people became smarter. It enabled the enlightenment. There wouldn’t be an enlightenment without the printing press. It enabled philosophy, rationalism, escape from religious doctrine, democracy, science. And certainly without this, there wouldn’t have been the American Revolution or the French Revolution. And so we would still be under a feudal regime perhaps. And so it completely transformed the world because people became smarter and learned about things. Now, it also created 200 years of essentially religious conflicts in Europe because the first thing that people read was the Bible and realized that perhaps there was a different interpretation of the Bible than what the priests were telling them. And so that created the Protestant movement and created the rift. And in fact, the Catholic Church didn’t like the idea of the printing press, but they had no choice. And so it had some bad effects and some good effects.

(02:42:32) I don’t think anyone today would say that the invention of the printing press had an overall negative effect despite the fact that it created 200 years of religious conflicts in Europe. Now, compare this, and I thought I was very proud of myself to come up with this analogy, but realized someone else came with the same idea before me, compare this with what happened in the Ottoman Empire. The Ottoman Empire banned the printing press for 200 years, and [it] didn’t ban it for all languages, only for Arabic. You could actually print books in Latin or Hebrew or whatever in the Ottoman Empire, just not in Arabic.

(02:43:20) And I thought it was because the rulers just wanted to preserve the control over the population and the religious dogma and everything. But after talking with the UAE Minister of AI, Omar Al Olama, he told me no, there was another reason. And the other reason was that it was to preserve the corporation of calligraphers. There’s an art form, which is writing those beautiful Arabic poems or whatever, religious text in this thing. And it was a very powerful corporation of scribes basically that ran a big chunk of the empire, and we couldn’t put them out of business. So they banned the printing press in part to protect that business.

(02:44:21) Now, what’s the analogy for AI today? Who are we protecting by banning AI? Who are the people who are asking that AI be regulated to protect their jobs? And of course, it’s a real question of what is going to be the effect of a technological transformation like AI on the job market and the labor market? And there are economists who are much more expert at this than I am, but when I talk to them, they tell us we’re not going to run out of jobs. This is not going to cause mass unemployment. This is just going to be a gradual shift of different professions.

(02:45:02) The professions that are going to be hot 10 or 15 years from now, we have no idea today what they’re going to be. The same way, if you go back 20 years in the past, who could have thought 20 years ago that the hottest job, even five, 10 years ago, was mobile app developer? Smartphones weren’t invented.

(02:45:48) I think people are fundamentally good. And in fact, a lot of doomers are doomers because they don’t think that people are fundamentally good, and they either don’t trust people or they don’t trust the institution to do the right thing so that people behave properly.

Summary

As I see it, LeCun’s main (cruxy) differences from the median LW commenter are:

- LeCun believes that autoregressive LLMs will not take us all the way to superintelligence.

- Downstream of that belief, he has longer timelines.

- He also seems to believe in a long takeoff.

- LeCun sees embodiment (grounding in the physical world) as more important to intelligence.

- LeCun believes in open development because he prioritizes different risks, seeing AI controlled by a small number of companies, rather than a malevolent superintelligence, as the outcome to work against[5].

- LeCun is skeptical that AI architectures can be kept secret.

- He also believes that an ecosystem of AIs can keep one another in check, i.e. none will gain a decisive advantage.

Once you factor in the belief that transformers won't get us to superintelligence, LeCun's other views start to make more sense. Overall, I came away with more uncertainty about the path to transformative AI. (If you didn't, what do you know that LeCun doesn't?)

[1] This format lends itself to more nuance than discussions you may have seen on Twitter.

[2] To calculate how long it would take a human to read a 10^13 token LLM training corpus, we need to make some assumptions:

1. Average reading speed: Let's assume an average reading speed of 200 words per minute (wpm). This is a reasonable estimate for an average adult reader.

2. Tokens to words ratio: Tokens in a corpus can include words, subwords, or characters, depending on the tokenization method used. For this estimation, let's assume a 1:1 ratio between tokens and words, although this may vary in practice.

Now, let's calculate:

1. Convert the number of tokens to words:

10^13 tokens = 10,000,000,000,000 words (assuming a 1:1 token-to-word ratio)

2. Calculate the number of minutes required to read the corpus:

Number of minutes = Number of words ÷ Reading speed (wpm)

= 10,000,000,000,000 ÷ 200

= 50,000,000,000 minutes

3. Convert minutes to years, assuming the reader reads around the clock:

Number of years = Number of minutes ÷ (60 minutes/hour × 24 hours/day × 365 days/year)

= 50,000,000,000 ÷ (60 × 24 × 365)

≈ 95,129 years

Therefore, assuming an average reading speed of 200 words per minute, a 1:1 token-to-word ratio, and nonstop reading 24 hours a day, it would take a single human approximately 95,129 years to read a 10^13 token LLM training corpus. This is an incredibly long time, demonstrating the vast amount of data used to train large language models and the impracticality of a human processing such a large corpus.
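The reading-time arithmetic in this footnote can be reproduced in a few lines. This is a minimal Python sketch that simply encodes the footnote's own assumptions (10^13 tokens, one word per token, 200 words per minute, nonstop reading, 365-day years); the function and constant names are illustrative only.

```python
# Minimal sketch of the footnote's back-of-the-envelope estimate.
# Assumptions (all taken from the footnote): 10^13 tokens, 1 token = 1 word,
# 200 words per minute, reading 24 hours a day, 365-day years.

CORPUS_TOKENS = 10**13
WORDS_PER_TOKEN = 1.0
WORDS_PER_MINUTE = 200
MINUTES_PER_YEAR = 60 * 24 * 365  # 525,600 minutes


def years_to_read(tokens: int,
                  words_per_token: float = WORDS_PER_TOKEN,
                  wpm: float = WORDS_PER_MINUTE,
                  minutes_per_year: int = MINUTES_PER_YEAR) -> float:
    """Return the years one reader needs to read `tokens` tokens nonstop."""
    words = tokens * words_per_token   # step 1: tokens -> words
    minutes = words / wpm              # step 2: words -> minutes
    return minutes / minutes_per_year  # step 3: minutes -> years


if __name__ == "__main__":
    years = years_to_read(CORPUS_TOKENS)
    print(f"{years:,.0f} years")  # prints "95,129 years"
```

Keeping each assumption as a parameter makes it easy to see how the estimate shifts if, for example, a different token-to-word ratio is used.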

[3]

[4] https://twitter.com/ylecun/status/1651009510285381632

[5] There was unfortunately very little examination of LeCun's beliefs about the feasibility of controlling smarter-than-human intelligence.

15 comments

comment by Steven Byrnes (steve2152) · 2024-03-13T23:51:41.542Z

Here are clarifications for a couple minor things I was confused about while reading:

a GPU is way below the power of the human brain. You need something like 100,000 or a million to match it, so we are off by a huge factor here.

I was trying to figure out where LeCun’s 100,000+ claim is coming from, and I found this 2017 article, which is paywalled, but the subheading implies that he’s focusing on the 10^14 synapses in a human brain and comparing that to the number of neuron-to-neuron connections that a GPU can handle.

(If so, I strongly disagree with that comparison, but I don’t want to get into that in this little comment.)

Francois Chollet says “The actual information input of the visual system is under 1MB/s”.

I don’t think Chollet is directly responding to LeCun’s point, because Chollet is saying that optical information is compressible to <1MB/s, but LeCun is comparing uncompressed human optical bits to uncompressed LLM text bits. And the text bits are presumably compressible too!

It’s possible that the compressibility (useful information content per bit) of optical information is very different from the compressibility of text information, in which case LeCun’s comparison is misleading, but neither LeCun nor Chollet is making claims one way or the other about that, AFAICT.

↑ comment by Joel Burget (joel-burget) · 2024-03-14T00:51:35.393Z

Thanks! I added a note about LeCun's 100,000 claim and just dropped the Chollet reference since it was misleading.

comment by TrudosKudos (cade-trudo) · 2024-03-14T01:20:22.170Z

His view on AI alignment risk is infuriatingly simplistic. To just call certain doomsday scenarios objectively "false" is a level of epistemic arrogance that borders on obscene.

I feel like he could at least acknowledge the existence of possible scenarios and express a need to invest in avoiding those scenarios instead of just negating an entire argument.

↑ comment by Anders Lindström (anders-lindstroem) · 2024-03-14T14:48:30.631Z

Good that you mentioned it and did NOT get downvoted. Yet. I have noticed that we are in the midst of an "AI-washing" attack, which is going on here on LessWrong too. But it's like asking a star NFL quarterback whether he thinks they should ban football because of the risk of serious brain injuries: of course he will answer no. The big tech companies pour trillions of dollars into AI, so of course they make sure that everyone is "aligned" to their vision and try to remove any and all obstacles when it comes to public opinion. Repeat after me:

"AI will not make humans redundant."

"AI is not an existential risk."

...

comment by Jonas Hallgren · 2024-03-14T20:35:33.405Z

I thought the orangutan argument was pretty good when I first saw it, but then I looked it up and realised that it's not that orangutans aren't power-seeking; it's more that they only are when it comes to interactions that matter for the future survival of their offspring. It actually is a very flimsy argument. Some of the things he says are smart, like some of the stuff on the architecture front, but he always brings up his aeroplane analogy for AI safety. I find it really weak: I wouldn't get into an aeroplane without knowing that it had been safety-checked, and as a consequence I have a hard time taking him seriously when it comes to safety.

comment by ryan_greenblatt · 2024-03-14T00:35:04.296Z

An H100 running FP8 calculations can do 3-4e12 FLOPs

This is incorrect.

An H100 can do 3-4e15 FP8 FLOP/sec.

(nvidia claims 3,958 teraFLOP/sec here. teraFLOPS = 1e12 FLOP/sec, so 3,958 * 1e12 is about 4e15.)
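The unit conversion in this correction is easy to check mechanically. Below is a minimal Python sketch that takes Nvidia's quoted 3,958 teraFLOP/s FP8 figure as given and converts it to FLOP/s; the helper name is illustrative, and the spec-sheet number itself is not re-derived here.

```python
# Sketch of the correction's unit conversion: teraFLOP/s -> FLOP/s.
# Assumption: the 3,958 teraFLOP/s FP8 figure is taken as quoted in the comment.

TERA = 1e12  # 1 teraFLOP/s = 1e12 FLOP/s


def tflops_to_flops(tflops: float) -> float:
    """Convert a throughput in teraFLOP/s to FLOP/s."""
    return tflops * TERA


h100_fp8_flops = tflops_to_flops(3958)
print(f"{h100_fp8_flops:.2e} FLOP/s")  # prints "3.96e+15 FLOP/s"

# Ratio to the post's original 4e12 figure: roughly 1e3, i.e. the original
# number was about three orders of magnitude too low.
ratio = h100_fp8_flops / 4e12
```

The point of the exercise is only the factor of ~1,000 between "tera" (1e12) and the final FLOP/s value, which is where the original 3-4e12 figure went wrong.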

↑ comment by ryan_greenblatt · 2024-03-14T00:37:00.502Z

Also you say:

So, as a lower bound we're talking 3-4000 GPUs and as an upper bound 3-4e9. Overall, more uncertainty than LeCun's estimate but in very roughly the same ballpark.

This isn't a lower bound according to Carlsmith as he says:

Overall, I think it more likely than not that 1e15 FLOP/s is enough to perform tasks as well as the human brain

Emphasis mine.

↑ comment by Joel Burget (joel-burget) · 2024-03-14T00:45:42.685Z

Thanks for the correction! I've updated the post.

comment by wachichornia · 2024-03-21T21:19:05.388Z

Has LeCun explained anywhere how he intends to keep the guardrails on open-source systems?

comment by Joe Kwon · 2024-03-14T17:24:55.792Z

Does the median LW commenter believe that autoregressive LLMs will take us all the way to superintelligence?

↑ comment by habryka (habryka4) · 2024-03-15T18:35:55.991Z

My sense is almost everyone here expects that we will almost certainly arrive at dangerous capabilities with something else in addition to autoregressive LLMs (at the very least RLHF, which is already widely used). I don't know what's true in the limit (like if you throw another 30 OOMs of compute at autoregressive models), and I doubt others have super strong opinions here. To me it seems plausible you get something that does recursive self-improvement out of a large enough autoregressive LLM, but it seems very unlikely to be the fastest way to get there.

↑ comment by ryan_greenblatt · 2024-03-15T18:46:15.290Z

Edit: habryka edited the parent comment to clarify and I now agree. I'm keeping this comment as is for posterity, but note the discussion below.

My sense is almost everyone here expects that we will almost certainly arrive at dangerous capabilities with something else in addition to autoregressive LLMs

This exact statement seems wrong; I'm pretty uncertain here, and many other (notable) people seem uncertain too. Maybe I think "pure autoregressive LLMs (where a ton of RL isn't doing that much work) are the first AI with dangerous capabilities" is around 30% likely.

(Assuming dangerous capabilities includes things like massively speeding up AI R&D.)

(Note that in shorter timelines, my probability on pure autoregressive LLMs goes way up. Part of my view on having only 30% on pure LLMs is just downstream of a general view like "it's reasonably likely that this exact approach to making transformatively powerful AI isn't the one that ends up working, so AI is reasonably likely to look different.")

Some people (e.g. Bogdan Ionut Cirstea) think that it's very likely that pure autoregressive LLMs go all the way through human-level R&D etc. (I don't think this is very likely, but it's possible.)

(TBC, I think qualitatively wildly superhuman AIs which do galaxy-brained things that humans can't understand probably require something more than autoregressive LLMs, at least to be done at all efficiently. And this might be what is intended by "superintelligence" in the original question.)

↑ comment by habryka (habryka4) · 2024-03-15T22:19:50.999Z

I was including the current level of RLHF as already not qualifying as "pure autoregressive LLMs". IMO the RLHF is doing a bunch of important work at least at current capability levels (and my guess is also will do some important work at the first dangerous capability levels).

Also, I feel like you forgot the context of the original message, which said "all the way to superintelligence". I was calibrating my "dangerous" threshold to "superintelligence level dangerous" not "speeds up AI R&D" dangerous.

↑ comment by ryan_greenblatt · 2024-03-15T23:26:01.693Z

I was including the current level of RLHF as already not qualifying as "pure autoregressive LLMs". IMO the RLHF is doing a bunch of important work at least at current capability levels (and my guess is also will do some important work at the first dangerous capability levels).

Oh, ok, I retract my claim.

Also, I feel like you forgot the context of the original message, which said "all the way to superintelligence".

I didn't, I provided various caveats in parentheticals about the exact level of danger.

↑ comment by habryka (habryka4) · 2024-03-15T23:56:01.108Z

I didn't, I provided various caveats in parentheticals about the exact level of danger.

Oops, mea culpa, I skipped your last parenthetical when reading your comment so missed that.