Mandatory Obsessions

post by Jacob Falkovich (Jacobian) · 2018-11-14T18:19:53.007Z · LW · GW · 14 comments

Cross-posted from Putanumonit.

The Worst is to Care a Little

At a party the other day, a woman expressed her displeasure at men who “pretend to be allies of gay rights but don’t really care about it”. Did she mean men who proclaimed their progressive attitudes on LGBTQ but voted Republican on Tuesday anyway? Yes, she told me, but not only those. She reserved special scorn for those who vote in support of immigration from Muslim countries, not realizing how Muslim immigrants make the country less safe for gays.

I remarked that she’s setting quite a high bar for these men. Gay rights hardly seem like a central issue in the upcoming elections. In fact, even my gay friends are split among many political views and party allegiances. Considering the second-order impact of every political decision on the lives of gays is a standard that few senators or political pundits meet, let alone guys who have other day jobs and other interests.

“Not good enough,” the woman replied, “if you don’t care enough about gay rights to think it all through you’re not an ally.”

But the other other day, a man at an Effective Altruism meetup expressed his disdain for people who don’t care enough about climate change to vote based on climate policy and said that he doesn’t want them in EA.

And the other other other day, a woman expressed her bafflement at people whose politics aren’t driven by a concern for women’s reproductive rights. She said she doesn’t want to be friends with people who don’t think that abortion is the most important issue in the US today.

How about someone who is pro-choice but voted for Hillary Clinton just because she shares a concern for AI safety? “That just shows you don’t really care about women,” she answered. Not caring enough is just as bad as having the wrong position.

Since I’m an alien, I don’t get to vote at all (check your privilege, citizens, and stop microaggressing against me by sharing exhortations to go out and vote on Facebook). Even if I could vote in New York, I probably wouldn’t. And if I had to, I’d probably vote for Andrew Yang even though I didn’t check what his positions are on gay rights, climate change, and abortion. I can guess what Yang’s positions on these three issues are, and I guess they’re very similar to my own positions, but I wouldn’t care enough to check.

Here’s a list of other topics I was recently criticized for not caring enough about by people who, for all their caring, didn’t discover anything new and believe pretty much exactly what I believe on each topic: Russian meddling in the elections, wild animal suffering, male circumcision, free speech on campus, Kanye West, Brett Kavanaugh, Alexandria Ocasio-Cortez, anti-Semitism, LeBron James signing with the Lakers.

I’m getting a bit pissed about being told that I’m a bad person for not sharing in somebody’s mandatory obsessions, especially since everyone has different ones. I’m also confused – I personally am very much in favor of those around me being diverse in what they care about. So why isn’t everyone else?

In Praise of Diverse Obsessions

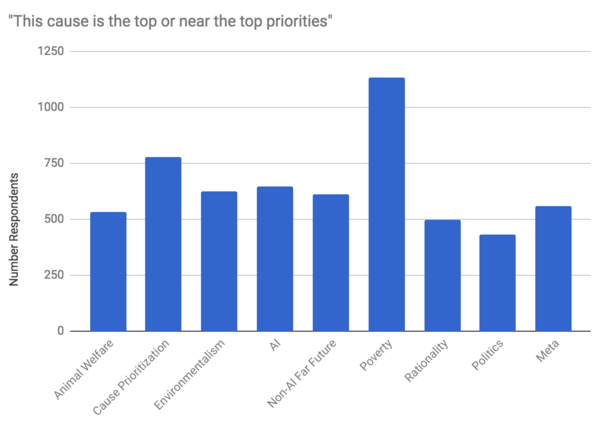

This chart of priorities from the Effective Altruist survey makes me very happy:

Of the 9 EA cause areas, I care deeply about three of them, care a bit about another three, and care not at all about the final three. But every cause area has many people who are obsessed with it and also many people who don’t give a crap.

This is a diversity of priorities, not of opinions. I agree that factory farming and the criminal justice system are terrible – it’s better in general to have fewer sentient beings in cages. I just care about AI and rationality more. And yet, I strongly prefer this sort of EA community to one where the majority cares only about AI and rationality.

The first benefit of fascination diversity is breadth of coverage. I am not very interested in animal welfare because it strikes me as quite intractable at the moment, and I’m very skeptical of the impact of vegan advocacy. But with all the vegan EAs around I know that I’ll quickly learn if anything exciting happens in the space, like innovations in meat replacement technology. Hanging out in a community where 10% of people are obsessed with a topic is a much better way to stay on top of it than dedicating 10% of my own time to it.

Another example: a small part of the rationalist community has recently become fascinated with auras, tarot, chakras and other assorted esoterics. This has gotten to the point where we can’t be 100% sure if they’re still sane or not. I hold simultaneously both of the following:

- Looking for wisdom in chakras is a bad idea. Whatever aspects of chakra theory correspond to reality are hopelessly polluted by mystical hogwash, and the epistemic harm of hanging around chakra believers is greater than the benefits of hidden chakra knowledge.

- As long as it’s a small part of the community that’s obsessed with chakras, I’m happy to have them around and I think that they benefit the group as a whole.

Another benefit of diversity is specialization. The first person to become obsessed with a topic is someone who’s a good fit for it and will contribute a lot of original thinking. The two hundred and first person, not so much. Even in a small community there are diminishing returns to everyone piling into the same obsession.

This also allows the community to diversify status hierarchies. More fascinations mean more opportunities to gain respect as a contributor in the community. If only one thing is allowed to be important, Girardian terror ensues.

I make a point of diversifying interests in communities I help organize, like the New York rationalist meetup. We make sure to alternate statistics workshops with mindfulness exercises, and discussion of botany with deep dives into some abstruse LessWrong post. This invites many more people to get involved, contribute, and feel like they’re important members of the group. This keeps the group strong, vibrant, and less dependent on a handful of leaders.

Finally, diversity of both opinions and levels of caring protects from groupthink. If we all care deeply about X it becomes socially treacherous to question the group’s X-expert on anything. Going back to Effective Altruism, there are people whose main EA obsession is keeping EA honest, and they’re indispensable.

Of course, if a group’s purpose is to be obsessed with a single topic, enforcing this obsession is beneficial to the group. Everyone at Planned Parenthood should care about reproductive rights, everyone at MIRI about AI, etc. But if the group’s telos is something else, whether it’s purely social or just a broader range of issues, enforcing mandatory obsessions is harmful to the group.

Given the benefits of fascination diversity, why do people fight so hard against it in the communities they’re part of? I am going to present two theories. This involves me speculating about the minds of people very different from me, so take both with a salt shaker.

Thought Leaders

Hanlon’s Razor says: Never attribute to malice that which is adequately explained by stupidity. But Hanson’s Razor inverts it: Never attribute to stupidity that which is adequately explained by unconscious ~~malice~~ selfishness.

People who exhort you to care more about Issue X rarely follow it up by admitting that they personally don’t know much about X, it’s just important to investigate. And not only do they know a lot about the issue we all have to care about, but they’re also quite confident about how to address it. No one says: “We need to focus on climate change to find out if we’re wildly overreacting or underreacting to the issue.” They usually have a specific reaction in mind.

An inevitable outcome of the group shifting focus to Issue X is that the person with strong opinions on Issue X gets a massive boost in status. If the issue becomes a sacred value, an unassailable matter on which no compromises can be made, the status of the sacred value’s guardians also becomes unassailable.

Except for a few Machiavellian types, I suspect that this desire isn’t explicit or necessarily conscious. You notice that when the group’s conversation turns to Issue X you have a lot to say and everyone listens and nods in agreement. This feels good. You start trying to have more conversations about X, and resent people who turn the conversation to Y instead.

Once you’re the expert on X, if someone says outright that Y is more important you get personally offended. This isn’t always wrong. “Let’s shift some focus away from such-and-such” is often a politically savvy way to say “let’s shift some respect and prestige away from so-and-so”.

Of course, a single-issue thinker can point out that I get a status boost from groups having a diverse focus: I’m trying to establish a reputation as a purveyor of nuggets of wisdom on dozens of varied topics, but I’m not an expert, let alone a moral leader, on a single one. That charge wouldn’t be unfair. The point of Elephant in the Brain isn’t that other people do things subconsciously for status and political gain, it’s that we all do.

Meme-munity

The more charitable view of people who feel strongly about feeling strongly is that these people are possessed by a mind virus. The fault, dear Brutus, is not in ourselves, but in our memes.

I’ve started to see big arguments, not as arguments over facts, but arguments over whether specific concepts should be worthy of controlling your mind; arguments over which immunities we shouldn’t have. Consider “won’t someone think of the children”, and interpret it as “why are you immune to the ‘children’ meme, you should allow it to take control of your life”; now replace “the children” with “free speech” or “minorities” or “self-defense” or “transgender people” or “the law” or “human rights”.

The right answer to “which immunities should we have” is “all of them”. Every argument is worthy of measure; no argument is worthy of instant and perfect obedience.

In my brain sits the meme: climate change is scary, but there’s little I can personally do about it and there’s no point discussing it ad nauseam because none of my friends impact Chinese energy policy.

For obvious reasons, my version is a lot less virulent than the meme: climate change is scary, and we should all talk about it ad nauseam. The second version contains the means of its own replication, like the flu virus causing a sick person to sneeze on everyone around them.

If you’re hearing about climate change for the first time, you’re almost certainly going to hear the self-replicating version, not the quiet one. This is simply because those infected with it are the ones doing 99% of the talking about climate change. Shifting to the quiet version requires building a general immunity to memes that contain the imposition to breathlessly proselytize them.

No argument is worthy of instant and perfect obedience, and no argument is worth repeating until your friends are sick of hearing it.

Ultimately, convincing people to believe a certain position is difficult enough; convincing them to care more about it than they do is damn near impossible. Communities like Effective Altruism have, for the most part, figured this out. The EA message is: “Hey, if you’re already vaguely utilitarian and you like animals, check out this cool book”. You may get someone to care more about utilitarianism and animals by telling them occasional cool facts and stories about the subject, not by shaming them for not caring enough.

I hope that Putanumonit gets you to care more about rationality, erisology, and the proper use of statistics in social science research. But if that doesn’t happen, the fault is mine, not yours.

For more: Caring Less by eukaryote.

14 comments

Comments sorted by top scores.

comment by Jameson Quinn (jameson-quinn) · 2018-11-17T15:27:32.394Z · LW(p) · GW(p)

I'm an obsessive about voting theory, and have been for over 20 years now. As time passes and my knowledge deepens, I find that while I still feel "this is really important and people don't pay enough attention to it", I feel less and less that "this is MORE important than whatever people are talking about here and now, and it should be my job to make them change the subject". Obviously I think this is a healthy change for me and my social graces, but it also means that you are more likely to hear about voting theory from a younger, shallower version of me than you are from me.

I don't know how to solve that problem. It's one thing to be immune enough to evangelists so that you can keep a balance of caring across multiple issues, as discussed in the post above; it's another, harder thing to be immune enough yet still curious enough to find your way past the proselytizers to the calmer, more-mature non-evangelist obsessives.

↑ comment by Jacob Falkovich (Jacobian) · 2018-11-17T20:58:08.198Z · LW(p) · GW(p)

Find your way past the proselytizers to the calmer, more-mature non-evangelist obsessives.

If you write an excellent post about your obsession that gets 196 upvotes on LW, I'll find it even if I don't really share the obsession. That was kinda my point - people discover their own obsessions because they found something important / fascinating, not because someone shamed them into caring about it.

↑ comment by Said Achmiz (SaidAchmiz) · 2018-11-17T22:33:07.022Z · LW(p) · GW(p)

This is tangential, but I should point out that the linked post (while indeed excellent) does not have 196 upvotes—it does not even have 196 votes, of any kind! Hover your mouse pointer over the karma total, and you will see that it has 63 votes (we do not know how many are up vs. down).

This probably does not change your point, but it’s important to keep this in mind when, e.g., comparing one post to another—especially across the history of LW.

comment by Dagon · 2018-11-14T22:58:00.795Z · LW(p) · GW(p)

You should probably start with caring less about what people say at parties and gatherings. If someone's actually seeking information or discussion about what to prioritize or why things aren't that simple, that can be a great conversation. If they're actually dedicated and knowledgeable about a topic, you can learn from and admire them, and have great interactions even if you disagree.

The arguments you're describing are just people signaling, presumably for status or self-image (status inside their head) reasons. Rarely are these conversations worth your time.

comment by jimrandomh · 2019-12-02T19:53:01.670Z · LW(p) · GW(p)

This post crystallizes an important problem, which seems to be hijacking a lot of people lately and has turned several people I know into scrupulosity wrecks. I would like to see it built upon, because this problem is unlikely to go away any time soon.

comment by Charlie Steiner · 2018-11-19T06:35:33.070Z · LW(p) · GW(p)

I like to make the distinction between thinking the chakra-theorists are valuable members of the community, and thinking that it's important to have community norms that include the chakra-theorists.

It's a lot like the distinction between morality and law. The chakra theorists are probably wrong and in fact it probably harms the community that they're here. But it's not a good way to run a community to kick them out, so we shouldn't, and in fact we should be as welcoming to them as we think we should be to similar groups that might have similar prima facie silliness.

↑ comment by Said Achmiz (SaidAchmiz) · 2018-11-19T07:56:53.988Z · LW(p) · GW(p)

The chakra theorists are probably wrong and in fact it probably harms the community that they’re here. But it’s not a good way to run a community to kick them out, so we shouldn’t, and in fact we should be as welcoming to them as we think we should be to similar groups that might have similar prima facie silliness.

This seems to me like a strange position to take.

Do I understand correctly that you’re saying: “people of type X harm the community by their presence; but kicking them out would harm the community more than letting them stay, so we let them stay”?

If so, then it would seem that you’re positing the existence of an unavoidable kind of harm to the community. (Because any means of avoiding it inflicts even worse harm.) Can we really do nothing to prevent the community from being harmed in this way? Are we totally powerless? Do we have no cure (that isn’t worse than the disease)?

Is there not, at least, some preventative measure? Is there not some way to avoid catching similar infections henceforth, some vaccine or prophylactic? Or is this sort of harm simply a fact of life, which we must resign ourselves to? (And if the latter, can we then expect the community to get worse and worse over time, as such infections proliferate, with us being powerless to prevent them, and with any possible treatment only causing even greater morbidity?)

↑ comment by Charlie Steiner · 2018-11-19T08:42:35.546Z · LW(p) · GW(p)

Consider something like protecting the free speech of people you strongly disagree with. It can be an empirical fact (according to one's model of reality) that if just those people were censored, the discussion would in fact improve. But such pointlike censorship is usually not an option that you actually have available to you - you are going to have unavoidable impacts on community norms and other people's behavior. And so most people around here protect something like a principle of freedom of speech.

If costs are unavoidable, then, isn't that just the normal state of things? You're thinking of "harm" as relative to some counterfactual state of non-harm - but there are many counterfactual states an online discussion group could be in that would be very good, and I don't worry too much about how we're being "harmed" by not being in those states, except when I think I see a way to get there from here.

In short, I don't think I associate the same kind of negative emotion with these kinds of tradeoffs that you do. They're just a fairly ordinary part of following a strategy that gets good results.

↑ comment by Said Achmiz (SaidAchmiz) · 2018-11-19T12:56:59.118Z · LW(p) · GW(p)

In short, I don’t think I associate the same kind of negative emotion with these kinds of tradeoffs that you do. They’re just a fairly ordinary part of following a strategy that gets good results.

I don’t see how what you said is responsive to my questions. If you re-cast what I said to be phrased in terms of failure to achieve some better state, it doesn’t materially change anything. Feel free to pick whichever version you prefer, but the questions stand!

(I should add that the “harm” phrasing is something that appears in your original comment in this thread, so I am not sure why you are suddenly scare-quoting it…)

What I am asking is: can we do no better? Is this the best possible outcome of said tradeoff?

More concretely: given any X (where X is a type of person whom we would, ideally, not have in our community), is there no way to avoid having people of type X in our community?

↑ comment by Charlie Steiner · 2018-11-19T17:50:35.991Z · LW(p) · GW(p)

Shrug I dunno man, that seems hard :) I just tend to evaluate community norms by how well they've worked elsewhere, and gut feeling. But neither of these is any sort of diamond-hard proof.

Your question at the end is pretty general, and I would say that most chakra-theorists would not want to join this community, so in a sense we're already mostly avoiding chakra-theorists - and there are other groups who are completely unrepresented. But I think the mechanism is relatively indirect, and that's good.

↑ comment by Elo · 2018-11-19T08:29:36.682Z · LW(p) · GW(p)

Hilarious. Have you tried to find chakras for yourself? Or did you dismiss them without trying?

↑ comment by Said Achmiz (SaidAchmiz) · 2018-11-19T12:49:38.845Z · LW(p) · GW(p)

The word “chakras” appears exactly zero times in what I wrote (not counting the quote of Charlie Steiner’s words), so I don’t know why you’re addressing this comment to me.

comment by Pattern · 2018-11-15T16:45:16.602Z · LW(p) · GW(p)

and there’s no point discussing it ad nauseam

Preaching to the choir does not make more believers, and nothing is important to stand around talking about (as opposed to acting), except of course, talking about 'how people stand around talking instead of doing things'.

comment by sirjackholland · 2018-11-15T19:17:54.646Z · LW(p) · GW(p)

I think most political opinions are opinions about priorities of issues, not issues per se. I remember from years ago, before most states had started legalizing same sex marriage, a relative of mine expressing the sentiment "I'm not against legalizing gay marriage, I just don't want to hear about the topic ever again." I think this is the attitude that the (admittedly very obnoxious and frustrating) party guest is concerned about. If more people held the opinion of my relative then we'd be stuck in a bad equilibrium, with everyone agreeing that they would be OK with same sex marriage but no one bothering to put in the effort to legalize it.

It doesn't matter if everyone agrees X is an issue if everyone also believes that solving the much more difficult Y should always take priority over solving X - this has the same consequences as a world in which no one believes X is an issue. Of course that doesn't mean you should go around yelling at people for not being obsessed with your favorite obsession, but I think "unconscious selfishness" and "mind viruses" are uncharitable explanations for what seems to be the reasonable concern that low priority tasks often never get completed and thus those that claim to support those causes but with low priority are effectively not supporting those causes.

Having said that, I completely agree with your larger point about diversity - I would much prefer a world in which people can obsess over what they want to obsess over even when their obsessions and lack-of-obsessions are contrarian.