Key questions about artificial sentience: an opinionated guide

post by Robbo · 2022-04-25T12:09:39.322Z

[crossposted at EA Forum and Experience Machines; twitter thread summary]

What is it like to be DALL-E 2? Are today’s AI systems consciously experiencing anything as they generate pictures of teddy bears on the moon, explain jokes, and suggest terrifying new nerve agents?

This post gives a list of open scientific and philosophical questions about AI sentience. First, I frame the issue of AI sentience, proposing what I think is the Big Question we should be trying to answer: a detailed computational theory of sentience that applies to both biological organisms and artificial systems. Then, I discuss the research questions that are relevant to making progress on this question. Even if the ultimate question cannot be answered to our satisfaction, trying to answer it will yield valuable insights that can help us navigate possible AI sentience.

This post represents my current best guess framework for thinking about these issues. I'd love to hear from commenters: suggested alternative frameworks for the Big Question, as well as your thoughts on the sub-questions.

Introduction

“Maybe if a reinforcement learning agent is getting negative rewards, it’s feeling pain to some very limited degree. And if you’re running millions or billions of copies of that, creating quite a lot, that’s a real moral hazard.” -Sam Altman (OpenAI), interviewed by Ezra Klein (2021)

Are today's ML systems already sentient? Most experts seem to think “probably not”, and it doesn’t seem like there’s currently a strong argument that today’s large ML systems are conscious.[1]

But AI systems are getting more complex and more capable with every passing week. And we understand sufficiently little about consciousness that we face huge uncertainty about whether, when, and why AI systems will have the capacity to have conscious experiences, including especially significant experiences like suffering or pleasure. We have a poor understanding of what possible AI experiences could be like, and how they would compare to human experiences.

One potential catastrophe we want to avoid is unleashing powerful AI systems that are misaligned with human values: that's why the AI alignment community is hard at work trying to ensure we don't build power-seeking optimizers that take over the world in order to pursue some goal that we regard as alien and worthless.

It’s encouraging that more work is going into minimizing risks from misaligned AI systems. At the same time, we should also take care to avoid engineering a catastrophe for AI systems themselves: a world in which we have created AIs that are capable of intense suffering, suffering which we do not mitigate, whether through ignorance, malice, or indifference.

There could be very, very many sentient artificial beings. Jamie Harris (2021) argues that “the number of [artificially sentient] beings could be vast, perhaps many trillions of human-equivalent lives on Earth and presumably even more lives if we colonize space or less complex and energy-intensive artificial minds are created.” There’s lots of uncertainty here: but given large numbers of future beings, and the possibility for intense suffering, the scale of AI suffering could dwarf the already mind-bogglingly large scale of animal suffering from factory farming.[2]

Image caption: The San Junipero servers from season 3, episode 4 of Black Mirror

It would be nice if we had a clear outline for how to avoid catastrophic scenarios from AI suffering, something like: here are our best computational theories of what it takes for a system, whether biological or artificial, to experience conscious pleasure or suffering, and here are the steps we can take to avoid engineering large-scale artificial suffering. Such a roadmap would help us prepare to wisely share the world with digital minds.

For example, you could imagine a consciousness researcher, standing up in front of a group of engineers at DeepMind or some other top AI lab, and giving a talk that aims to prevent them creating suffering AI systems. This talk might give the following recommendations:

- Do not build an AI system that (a) is sufficiently agent-like and (b) has a global workspace and reinforcement learning signals that (c) are broadcast to that workspace and (d) play a certain computational role in shaping learning and goals and (e) are associated with avoidant and self-protective behaviors.

- And here is, precisely, in architectural and computational terms, what it means for a system to satisfy conditions a-e, not just these vague English terms.

- Here are the kinds of architectures, training environments, and learning processes that might give rise to such components.

- Here are the behavioral 'red flags' of such components, and here are the interpretability methods that would help identify such components, all of which take into account the fact that AIs might have incentives to deceive us about such matters.

So, why can't I go give that talk to DeepMind right now?

First, I’m not sure that components a-e are the right sufficient conditions for artificial suffering. I’m not sure if they fit with our best scientific understanding of suffering as it occurs in humans and animals. Moreover, even if I were sure that components a-e are on the right track, I don’t know how to specify them in a precise enough way that they could guide actual engineering, interpretability, or auditing efforts.

Furthermore, I would argue that no one, including AI and consciousness experts who are far smarter and more knowledgeable than I am, is currently in a position to give this talk—or something equivalently useful—at DeepMind.

What would we need to know in order for such a talk to be possible?

The Big Question

In an ideal world, I think the question that we would want an answer to is:

What is the precise computational theory that specifies what it takes for a biological or artificial system to have various kinds of conscious, valenced experiences (that is, conscious experiences that are pleasant or unpleasant, such as pain, fear, and anguish or pleasure, satisfaction, and bliss)?

Why not answer a different question?

The importance and coherence of framing this question in this way depend on five assumptions.

- Sentientism about moral patienthood: if a system (human, non-human animal, AI) has the capacity to have conscious valenced experiences—if it is sentient[3]—then it is a moral patient. That is, it deserves moral concern for its own sake, and its pain/suffering and pleasure matter. This assumption is why the Big Question is morally important.[4]

- Computational functionalism about sentience: for a system to have a given conscious valenced experience is for that system to be in a (possibly very complex) computational state. That assumption is why the Big Question is asked in computational (as opposed to neural or biological) terms.[5]

- Realism about phenomenal consciousness: phenomenal consciousness exists. It may be identical to, or grounded in, physical processes, and as we learn more about it, it may not have all of the features that it intuitively seems to have. But phenomenal consciousness is not entirely illusory, and we can define it “innocently” enough that it points to a real phenomenon without baking in any dubious metaphysical assumptions. In philosopher’s terms, we are rejecting strong illusionism. This assumption is why the Big Question is asked in terms of conscious valenced experiences.

- Plausibility: it’s not merely logically possible, but non-negligibly likely, that some future (or existing) AI systems will be (or are) in these computational states, and thereby (per assumption 2) sentient. This assumption is why the Big Question is action-relevant.

- Tractability: we can make scientific progress in understanding what these computational states are. This assumption is why the Big Question is worth working on.[6]

All of these assumptions are up for debate. But I actually won't be defending them in this post. I've listed them in order to make clear one particular way of orienting to these topics.

And in order to elicit disagreement. If you do reject one or more of these assumptions, I would be curious to hear which ones, and why—and, in light of your different assumptions, how you think we should formulate the major question(s) about AI sentience, and about the relationship between sentience and moral patienthood.

(I'll note that the problem of how to reformulate these questions in a coherent way is especially salient, and non-trivial, for strong illusionists about consciousness who hold that phenomenal consciousness does not exist at all. See this paper by Kammerer for an attempt to think about welfare and sentience from a strong illusionist framework.)

Why not answer a smaller question?

In an ideal world, we could answer the Big Question soon, before we do much more work building ever-more complex AI systems that are more and more likely to be conscious. In the actual world, I do not think that we will answer the Big Question any time in the next decade. Instead, we will need to act cautiously, taking into consideration what we know, short of a full answer.

That said, I think it is useful to have the Big Question in mind as an orienting question, and indeed to try to just take a swing at the full problem. As Holden Karnofsky writes, “there is…something to be said for directly tackling the question you most want the all-things-considered answer to (or at least a significant update on).” Taking an ambitious approach can yield a lot of progress, even while the approach is unlikely to yield a complete answer.

Subquestions for the Big Question

In the rest of this post, I’ll list what I think are the important questions about consciousness in general, and about valenced states in particular, that bear on the question of AI sentience.

A note on terminology

First, a note on terminology. By “consciousness” I mean “phenomenal consciousness”, which philosophers use to pick out subjective experience, or there being something that it is like to be a given system. In ordinary language, “consciousness” is used to refer to intelligence, higher cognition, having a self-concept, and many other traits. These traits may end up being related to phenomenal consciousness, but are conceptually distinct from it. We can refer to certain states as conscious (e.g., feeling back pain, seeing a bright red square on a monitor) or not conscious (e.g., perceptual processing of a subliminal stimulus, hormone regulation by the hypothalamus). We can also refer to a creature or system as conscious (e.g. you right now, an octopus) or not conscious (e.g., a brick, a human in a coma).

By “sentient”, I mean capable of having a certain subset of phenomenally conscious experiences—valenced ones. Experiences that are phenomenally conscious but non-valenced would include visual experiences, like seeing a blue square. (If you enjoy or appreciate looking at a blue square, this might be associated with a valenced experience, but visual perception itself is typically taken to be a non-valenced experience). At times, I use “suffering” and “pleasure” as shorthands for the variety of negatively and positively valenced experiences.

Image caption: 'International Klein Blue (IKB Godet) 1959' by Yves Klein (1928-62). Which people claim to appreciate looking at.

Questions about scientific theories of consciousness

The scientific study of consciousness, as undertaken by neuroscientists and other cognitive scientists, tries to answer what Scott Aaronson calls the “pretty hard problem” of consciousness: which physical states are associated with which conscious experiences? This is a meaningful open question regardless of your views on the metaphysical relationship between physical states and conscious experiences (i.e., your views on the “hard problem” of consciousness).[7]

Scientific theories of consciousness necessarily start with the human case, since it is the case which we are most familiar with and have the most data about. The purpose of this section is to give a brief overview of the methods and theories in the scientific study of consciousness before raising the main open questions and limitations.

A key explanandum of a scientific theory of consciousness is why some, but not all, information processing done by the human brain seems to give rise to conscious experience. As Graziano (2017) puts it:

A great deal of visual information enters the eyes, is processed by the brain and even influences our behavior through priming effects, without ever arriving in awareness. Flash something green in the corner of vision and ask people to name the first color that comes to mind, and they may be more likely to say “green” without even knowing why. But some proportion of the time we also claim, “I have a subjective visual experience. I see that thing with my conscious mind. Seeing feels like something.”

Neuroscientific theories of human consciousness seek to identify the brain regions and processes that explain the presence or absence of consciousness. They seek to capture a range of phenomena:

- the patterns of verbal report and behavior present in ordinary attentive consciousness

- the often surprising patterns of report and behavior that we see when we manipulate conscious perception in various ways: phenomena like change blindness, backwards masking, various patterns of perceptual confidence and decision making

- various pathologies caused by brain lesions, surgeries, and injuries, such as amnesia, blindsight, and split-brain phenomena[8]

- loss of consciousness in dreamless sleep, anesthesia, coma, and vegetative states

Computational glosses on neuroscientific theories of consciousness seek to explain these patterns in terms of the computations that are being performed by various regions of the brain.

Theories of consciousness differ in how they interpret this evidence and what brain processes and/or regions they take to explain it.

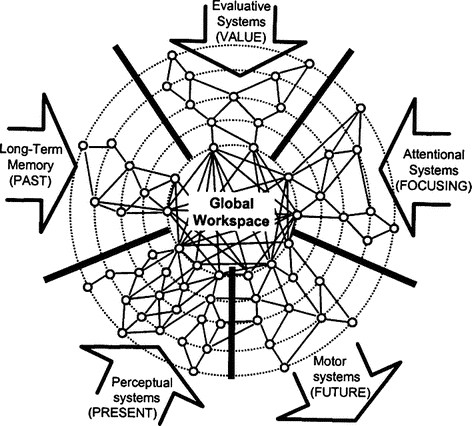

- The most popular scientific theory of consciousness is probably the global workspace theory of consciousness, which holds that conscious states are those that are ‘broadcast’ to a ‘global workspace’, a network of neurons that makes information available to a variety of subsystems.

Image caption: Illustration from the canonical paper on global workspace theory

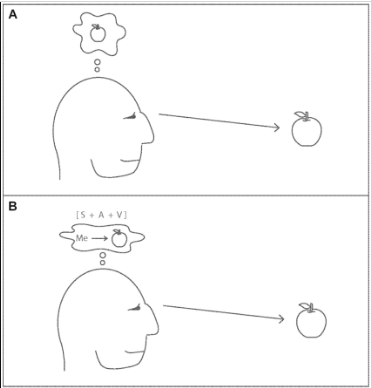

- Higher-order theories of consciousness hold that what it is to be consciously seeing a red apple is for you a) to be perceiving the red apple and b) to have a higher-order mental state (introspection, metacognition) that represents that state.

- First-order theories of consciousness hold that neither a global workspace nor higher-order representations is necessary for consciousness - some kind of perceptual representation is, by itself, sufficient for consciousness (e.g. Tye's PANIC theory, discussed by Muehlhauser here).

- The attention schema theory of consciousness holds that conscious states are a mid-level, lossy ‘sketch’ of our attention, analogously to how the body schema is a ‘lossy’ sketch of the state of our body.

Image caption: I like how content this fellow from Graziano’s paper is
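As a purely illustrative sketch of the 'broadcast' idea in global workspace theory, the toy code below has several processes submit content with a salience score; the most salient content wins access to the workspace and is then made available to every subscribed subsystem. The class names, the salience-based competition rule, and the one-item capacity are all assumptions made for illustration. This is a cartoon, not an implementation of any published model, and nothing in it bears on whether such a system would be conscious.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """Hypothetical piece of content competing for workspace access."""
    source: str
    content: str
    salience: float


@dataclass
class GlobalWorkspace:
    """Toy workspace: one winner per cycle, broadcast to all subscribers."""
    subscribers: dict = field(default_factory=dict)  # name -> list of received contents

    def subscribe(self, name):
        self.subscribers[name] = []

    def cycle(self, candidates):
        """Pick the most salient candidate and copy its content to every subscriber."""
        if not candidates:
            return None
        winner = max(candidates, key=lambda c: c.salience)
        for inbox in self.subscribers.values():
            inbox.append(winner.content)
        return winner


workspace = GlobalWorkspace()
for name in ("memory", "planning", "verbal_report"):
    workspace.subscribe(name)

winner = workspace.cycle([
    Candidate("vision", "red apple ahead", salience=0.8),
    Candidate("audition", "faint hum", salience=0.2),
])
print(winner.source, workspace.subscribers)
```

Even this cartoon forces choices (what counts as salience, how many items fit, who subscribes) that the verbal statement of the theory leaves open.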

The big open question in the science of consciousness is which, if any, of these (and other[9]) theories are correct. But as Luke Muehlhauser has noted, even the leading theories of consciousness are woefully underspecified. What exactly does it mean for a system to have a ‘global workspace’? What exactly does it take for a representation to be ‘broadcast’ to it? What processes, exactly, count as higher-order representation? How are attention schemas realized? To what extent are these theories even inconsistent with each other - what different predictions do they make, and how can we experimentally test these predictions?[10]

Fortunately, consciousness scientists are making efforts to identify testable predictions of rival theories, e.g. Melloni et al. (2021). My impression, from talking to Matthias Michel about the methodology of consciousness science, is that we have learned quite a lot about consciousness in the past few decades. It’s not the case that we are completely in the dark: as noted above, we’ve uncovered many surprising and non-obvious phenomena, which serve as data that can constrain our theory-building. Relatedly, methodology in consciousness science has gotten more sophisticated: we are able to think in much more detailed ways about metacognition, perceptual decision-making, introspection, and other cognitive processes that are closely related to consciousness. Moreover, we’ve learned to take seriously the need to explain our intuitions and judgments about consciousness: the so-called meta-problem of consciousness.

Actually trying to solve the problem by constructing computational theories which try to explain the full range of phenomena could pay significant dividends for thinking about AI consciousness. We can also make progress on questions about valence—as I discuss in the next section.

Further reading

Appendix B of Muehlhauser's animal sentience report, on making theories of consciousness more precise; Doerig et al. (2020) outline “stringent criteria specifying how empirical data constrains theories of consciousness”.

Questions about valence

Is DALL-E 2 having conscious visual experiences? It would be extraordinarily interesting if it is. But I would be alarmed to learn that DALL-E 2 has conscious visual experiences only inasmuch as these experiences would be a warning sign that DALL-E 2 might also be capable of conscious suffering; I wouldn’t be concerned about the visual experiences per se.[11] We assign special ethical significance to a certain subset of conscious experiences, namely the valenced ones: a range of conscious states picked out by concepts like pain, suffering, nausea, contentment, bliss, et alia.

In addition to wanting a theory of consciousness in general, we want a theory of (conscious) valenced experiences: when and why is a system capable of experiencing conscious pain or pleasure? Even if we remain uncertain about phenomenal consciousness in general, being able to pick out systems that are especially likely to have valenced experiences could be very important, given the close relationship between valence and welfare and value. For example, it would be useful to be able to say confidently that, even if it consciously experiences something, DALL-E 2 is unlikely to be suffering.

Image caption: Advertisement for Wolcott’s Instant Pain Annihilator (c. 1860)

How do valenced states relate to each other?

Pain, nausea, and regretting a decision all seem negatively valenced. Orgasms, massages, and enjoying a movie all seem positively valenced.

Does valence mark a unified category - is there a natural underlying connection between these different states? How do unpleasant bodily sensations like pain and nausea relate to negative emotions like fear and anguish, and to more ‘intellectual’ displeasures like finding a shoddy argument frustrating? How do pleasant bodily sensations like orgasm and satiety relate to positive emotions like contentment and amusement, and to more ‘intellectual’ pleasures like appreciating an elegant math proof?

To develop a computational theory of valence, we need clarity on exactly what it is that we are building a theory of. This is not to say that we need to chart the complicated ways in which English and common sense individuate and relate these disparate notions. Nor do we need to argue about the many different ways scientists and philosophers might choose to use the words “pain” vs “suffering”, or “desire” vs “wanting”. But there are substantive questions about whether the natural grouping of experiences into ‘positive’ and ‘negative’ points at a phenomenon that has a unified functional or computational explanation, and about how valenced experiences relate to motivation, desire, goals, and agency. For my part, I suspect that there is in fact a deeper unity among valenced states, one that will have a common computational or functional signature.

Further reading

Timothy Schroeder’s Three Faces of Desire (2004) has a chapter on pleasure and displeasure that is a great introduction to these issues; the Stanford Encyclopedia of Philosophy articles on pain and pleasure; Carruthers (2018), "Valence and Value"; Henry Shevlin (forthcoming) and my colleague Patrick Butlin (2020) on valence and animal welfare.

What's the connection between reward and valence?

There are striking similarities between reinforcement learning in AI and reinforcement learning in the brain. According to the “reward prediction error hypothesis” of dopamine neuron activity, dopaminergic neurons in VTA/SNpc[12] compute reward prediction error and broadcast this to other areas of the brain for learning. These computations closely resemble temporal difference learning in AI.
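For readers unfamiliar with temporal difference learning, here is a minimal sketch of tabular TD(0), where the reward prediction error is delta = r + gamma * V(s') - V(s). The two-state "cue then reward" chain, the learning rate, and the discount factor are illustrative assumptions, not taken from the neuroscience literature or from any particular AI system.

```python
def td0_update(values, state, reward, next_state, alpha=0.1, gamma=0.9, terminal=False):
    """Apply one TD(0) update in place and return the reward prediction error."""
    bootstrap = 0.0 if terminal else values[next_state]
    delta = reward + gamma * bootstrap - values[state]  # reward prediction error
    values[state] += alpha * delta
    return delta


def run_chain(episodes=200):
    """Hypothetical chain: 'cue' -> 'wait' -> reward of 1.0, then the episode ends."""
    values = {"cue": 0.0, "wait": 0.0}
    errors_at_reward = []
    for _ in range(episodes):
        td0_update(values, "cue", 0.0, "wait")
        delta = td0_update(values, "wait", 1.0, None, terminal=True)
        errors_at_reward.append(delta)
    return values, errors_at_reward


values, errors = run_chain()
print("error at reward, first vs last episode:", errors[0], errors[-1])
print("learned values:", values)
```

In this toy setup the error at reward delivery starts at 1.0 and shrinks toward 0 as the reward becomes predicted, while the value of the earlier cue state rises.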

That said, the broadcast of the reward prediction error seems to be distinct from the experience of conscious pleasure and pain in various ways (cf. Schroeder (2004), Berridge and Kringelbach on liking versus wanting). How exactly does reward relate to valenced states in humans? In general, what gives rise to pleasure and pain, in addition to (or instead of) the processing of reward signals? A worked-out computational theory of valence would shed light on the relationship between reinforcement learning and valenced experiences.

Further reading

Schroeder (2004) on reinforcement versus pleasure; Tomasik (2014) pp 8-11 discusses the complex relationship between reward, pleasure, motivation, and learning; Sutton and Barto's RL textbook has a chapter on neuroscience and RL.

The scale and structure of valence

It’s hugely important not just whether AI systems have valenced states, but a) whether these states are positively vs. negatively valenced and b) how intense the valence is. What explains the varying intensity of positively and negatively valenced states? And what explains the fact that positive and negative valence seem to trade off against each other and have a natural ‘zero’ point?

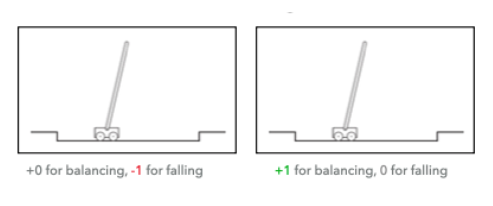

Here’s a puzzle about these questions that arises in the reinforcement learning setting: it’s possible to shift the training signal of an RL agent from negative to positive, while leaving all of its learning and behavior intact. For example, in order to train an agent to balance a pole (the classic CartPole task), you could either a) give it 0 reward for balancing the pole and a negative reward for failing, or b) give it positive reward for balancing the pole and 0 reward for failing.

The training and behavior of these two systems would be identical, in spite of the shift in the value of the rewards. Does simply shifting the numerical value of the reward to “positive” correspond to a deeper shift towards positive valence? It seems strange that simply switching the sign of a scalar value could be affecting valence in this way. Imagine shifting the reward signal for agents with more complex avoidance behavior and verbal reports. Lenhart Schubert (quoted in Tomasik (2014), from whom I take this point) remarks: “If the shift…causes no behavioural change, then the robot (analogously, a person) would still behave as if suffering, yelling for help, etc., when injured or otherwise in trouble, so it seems that the pain would not have been banished after all!”
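One way to make the CartPole puzzle concrete is to compare discounted returns under the two reward schemes. The sketch below assumes a discounted episodic setting in which the agent balances for T steps and then fails, and it assumes the failure penalty in scheme (a) equals r / (1 - gamma), where r is the per-step reward in scheme (b). These specific numbers are illustrative assumptions, not taken from Tomasik (2014). Under those assumptions the two returns differ by the same constant for every episode length, so they rank all trajectories identically.

```python
import math

GAMMA = 0.9
STEP_REWARD = 1.0                          # scheme (b): reward per balanced step
FAIL_PENALTY = STEP_REWARD / (1 - GAMMA)   # scheme (a): assumed size of the penalty


def discounted_return(rewards, gamma=GAMMA):
    """Sum of gamma**t * r_t over the episode."""
    return sum(r * gamma**t for t, r in enumerate(rewards))


def scheme_a(balanced_steps):
    """0 reward while balancing, negative reward on the failing step."""
    return [0.0] * balanced_steps + [-FAIL_PENALTY]


def scheme_b(balanced_steps):
    """Positive reward while balancing, 0 reward on the failing step."""
    return [STEP_REWARD] * balanced_steps + [0.0]


gaps = []
for steps in (0, 1, 5, 20, 100):
    g_a = discounted_return(scheme_a(steps))
    g_b = discounted_return(scheme_b(steps))
    gaps.append(g_b - g_a)
    print(f"T={steps:3d}  return_a={g_a:9.4f}  return_b={g_b:9.4f}  gap={g_b - g_a:.4f}")
```

In this toy setting, a different penalty size makes the two returns related by a positive affine map rather than a pure shift. Without discounting (gamma = 1), scheme (a) gives the same return for every episode length, so the equivalence depends on how the task is set up.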

So valence seems to depend on something more complex than the mere numerical value of the reward signal. For example, perhaps it depends on prediction error in certain ways. Or perhaps the balance of pain and pleasure depends on efficient coding schemes which minimize the cost of reward signals / pain and pleasure themselves: this is the thought behind Yew‑Kwang Ng’s work on wild animal welfare, and Shlegeris's brief remarks inspired by this work.

More generally, in order to build a satisfactory theory of valence and RL, I think we will need to:

- Clarify what parts of a system correspond to the basic RL ontology of reward signal, agent, and environment

- Take into account the complicated motivational and functional role of pain and pleasure, including:

  - dissociations between ‘liking’ and ‘wanting’

  - ways in which pain and unpleasantness can come apart (e.g. pain asymbolia)

  - the role of emotion and expectations

In my opinion[13], progress on a theory of valence might be somewhat more tractable than progress on a theory of consciousness, given that ‘pain’ and ‘pleasure’ have clearer functional roles than phenomenal consciousness does. But I think we are still far from a satisfying theory of valence.

Further reading

Dickinson and Balleine (2010) argue that valenced states are how information about value is passed between two different RL systems in the brain: one unconscious system that does model-free reinforcement learning about homeostasis, and a conscious cognitive system that does model-based reinforcement learning; literature in the predictive processing framework (e.g. Van De Cruys (2017)); the Qualia Research Institute has a theory of valence, but I have not yet been able to understand what this theory claims and predicts.

Applying our theories to specific AI systems

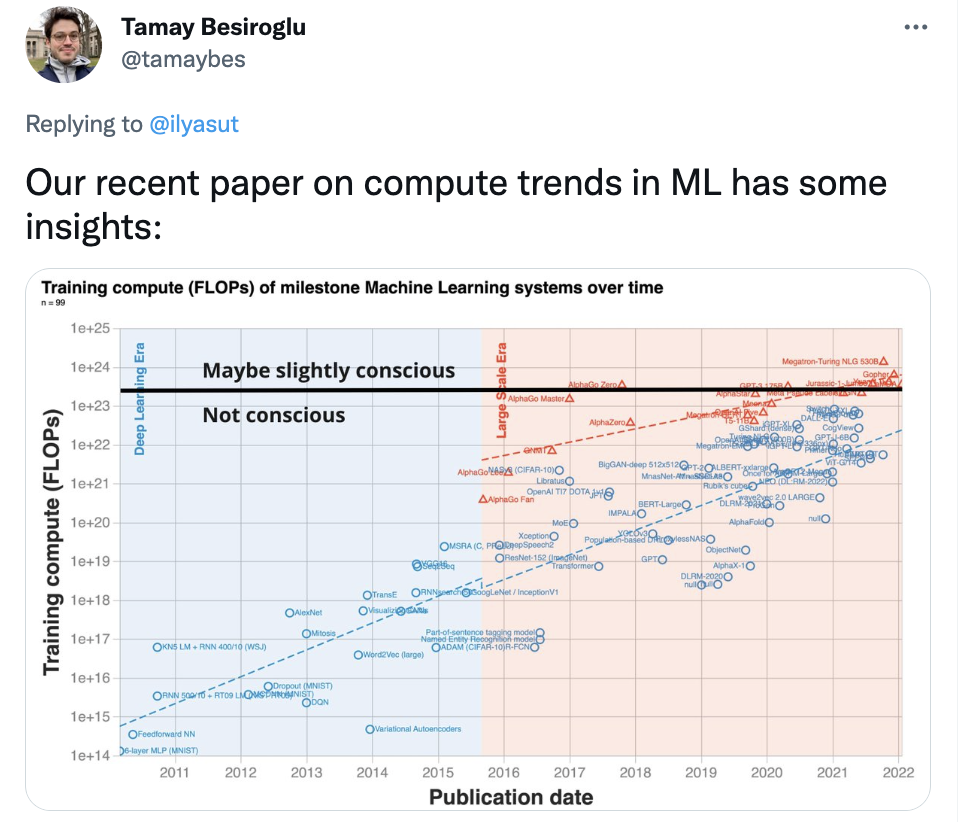

The quality of discourse about AI sentience is very low—low enough that this tongue-in-cheek tweet was discussed by mainstream news outlets:

As I see it, the dialectic in discussions about AI sentience is usually not much more advanced than:

Position A: “AI systems are very complex. Maybe they are a little bit sentient.”[14]

Position B: “that is stupid”

I think that position A is not unreasonable. Given the complexity of today’s ML systems, and our uncertainty about what computations give rise to consciousness, higher levels of complexity should increase our credence somewhat that consciousness-related computations are being performed. But we can do better. Each side of this debate can give more detailed arguments about the presence or absence of sentience.

People in position A can go beyond mere appeals to complexity, and say what theories of consciousness and valence predict that current AI systems are sentient—in virtue of what architectural or computational properties AI systems might be conscious: for example, reinforcement learning, higher-order representations, global workspaces.

People in position B can say what pre-conditions for sentience they think are lacking in current systems—for example, a certain kind of embodiment, or a certain kind of agency—and why they think these components are necessary for consciousness. Then, they can specify more precisely what exactly they would need to see in AI systems that would increase their credence in AI sentience.

One complication is that our theories of human (and animal) consciousness usually don’t make reference to “background conditions” that we might think are important. They compare different human brain states, and seek to find neural structures or computations that might be the difference-makers between conscious and unconscious states: for example, broadcast to a global workspace. But these neural structures or computations are embedded in a background context that is usually not formulated explicitly: for example, in the biological world, creatures with global workspaces are also embodied agents with goals. How important are these background conditions? Are they necessary pre-conditions for consciousness? If so, how do we formulate these pre-conditions more precisely, so that we can say what it takes for an AI system to satisfy them?[15]

Detailed thinking about AI sentience usually falls between the cracks of different fields. Neuroscientists will say their favored theory applies to AI without making detailed reference to actual AI systems. AI researchers will refer to criteria for sentience without much reference to the scientific study of sentience.

In my opinion, most existing work on AI sentience simply does not go far enough to make concrete predictions about possible AI sentience. Simply attempting to apply scientific theories of consciousness and valence to existing AI systems, in a more precise and thoughtful way, could advance our understanding. Here’s a recipe for progress:

- Gather leading experts on scientific theories of consciousness and leading AI researchers

- Make the consciousness scientists say what precisely they think their theories imply about AI systems

- Ask the AI researchers which existing or likely-to-be-created AI systems might be conscious according to these theories

Indeed, the digital minds research group at FHI is putting together a workshop to do precisely this. We hope to create a space for more detailed and rigorous cross-talk between these disciplines, focusing these discussions on actual or likely AI systems and architectures.

Further reading

Schwitzgebel and Garza (2020) on "Designing AI with rights, consciousness, self-respect, and freedom"; Dehaene, Lau, and Kouider (2017) apply their global workspace and higher-order theories to possible AI systems; Graziano (2017) claims his attention schema theory is “a foundation for engineering artificial consciousness”; Ladak (2021) proposes a list of features indicative of sentience in artificial entities; Shevlin (2021) on moral patienthood; Amanda Askell's reflections.

Conclusion

Taking a swing at the Big Question does not mean we can’t, and shouldn’t, also pursue more ‘theory neutral’ ways of updating our credences about AI sentience. For example, by finding commonalities between extant theories of consciousness and using them to make lists of potentially consciousness-indicating features. Or by devising ‘red flags’ for suffering that a variety of theories would agree on. Or by trying to find actions that are robustly good assuming a variety of views about the connection between sentience and value.

This topic is sufficiently complex that how to even ask or understand the relevant questions is up for grabs. I’m not certain of the framing of the questions, and will very likely change my mind about some basic conceptual questions about consciousness and valence as I continue to think about this.

Still, I think there is promise in working on the Big Question, or some related variations on it. To be sure, our neuroscience tools are way less powerful than we would like, and we know far less about the brain than we would like. To be sure, our conceptual frameworks for thinking about sentience seem shaky and open to revision. Even so, trying to actually solve the problem by constructing computational theories which try to explain the full range of phenomena could pay significant dividends. My attitude towards the science of consciousness is similar to Derek Parfit’s attitude towards ethics: since we have only just begun the attempt, we can be optimistic.[16]

There’s limited info on what “expert” consensus on this issue is. The Association for the Scientific Study of Consciousness surveyed its members. For the question, "At present or in the future, could machines (e.g., robots) have consciousness?" 20.43% said 'definitely yes', 46.09% said ‘probably yes’. Of 227 philosophers of mind surveyed in the 2020 PhilPapers survey, 0.88% "accept or lean towards" some current AI systems being conscious. 50.22% "accept or lean towards" some future AI systems being conscious. ↩︎

As discussed in "Questions about valence [LW · GW]" below, the scale of suffering would depend not just on the number of systems, but also on the amount and intensity of suffering vs. pleasure in these systems. ↩︎

see note on terminology [LW · GW] below ↩︎

Sometimes sentientism refers to the view that sentience is not just sufficient for moral patienthood, but necessary as well. For these purposes, we only need the sufficiency claim. ↩︎

The way I've phrased this implies that a given experience just is the computational state. But this can be weakened. In fact, computational functionalism is compatible with a variety of metaphysical views about consciousness: e.g., a non-physicalist could hold that the computational state is a correlate of consciousness. For example, David Chalmers (2010) is a computational functionalist and a non-physicalist: "the question of whether the physical correlates of consciousness are biological or functional is largely orthogonal to the question of whether consciousness is identical to or distinct from its physical correlates." ↩︎

At least, there’s pro tanto reason to work on it. It could be that other problems like AI alignment are more pressing or more tractable, and/or that work on the Big Question is best left for later. This question has been discussed elsewhere [EA · GW]. ↩︎

Unless your view is that phenomenal consciousness does not exist. If that’s your view, then the pretty hard problem, as phrased, is answered with “none of them”. See assumption #3, above. See Chalmers (2018) pp 8-9, and footnote 3, for a list of illusionist theories. ↩︎

LeDoux, Michel, and Lau (2020) reviews how puzzles about amnesia, split brain, and blindsight were crucial in launching consciousness science as we know it today ↩︎

What about predictive processing [? · GW]? Predictive processing is (in my opinion) not a theory of consciousness per se. Rather, it’s a general framework for explaining prediction and cognition whose adherents often claim that it will shed light on the problem of consciousness. But such a solution is still forthcoming. ↩︎

See Appendix B of Muehlhauser’s report on consciousness and moral patienthood, where he argues that our theories are woefully imprecise. ↩︎

Some people think that conscious experiences in general, not just valenced states of consciousness or sentience, are valuable. I disagree. See Lee (2018) for an argument against the intrinsic value of consciousness in general. ↩︎

the ventral tegmental area and the pars compacta of the substantia nigra ↩︎

Paraphrased from discussion with colleague Patrick Butlin, some other possible connections between consciousness and valence: (a) Valence just is consciousness plus evaluative content. On this view, figuring out the evaluative content component will be easier than the consciousness component, but won’t get us very far towards the Big Question. (b) Compatibly, perhaps the functional role of some specific type of characteristically valenced state, e.g. conscious sensory pleasure, is easier to discern than the role of consciousness itself, and can be done first. (c) Against this kind of view, some people will object that you can't know that you're getting at conscious pleasure (or whatever) until you understand consciousness. (d) If valence isn't just consciousness plus evaluative content, then I think we can make quite substantive progress by working out what it is instead. But presumably consciousness would still be a component, so a full theory couldn't be more tractable than a theory of consciousness. ↩︎

A question which I have left for another day: does it make sense to claim that a system is "a little bit" sentient or conscious? Can there be borderline cases of consciousness? Does consciousness come in degrees? See Lee (forthcoming) for a nice disambiguation of these questions. ↩︎

Peter Godfrey-Smith is a good example of someone who has been explicit about background conditions (in his biological theory of consciousness, metabolism is a background condition for consciousness). DeepMind's Murray Shanahan talks about embodiment and agency but, in my opinion, not precisely enough. ↩︎

For discussion and feedback, thanks to Fin Moorhouse, Patrick Butlin, Arden Koehler, Luisa Rodriguez, Bridget Williams, Adam Bales, and Justis Mills and the LW feedback team. ↩︎

31 comments

Comments sorted by top scores.

comment by Steven Byrnes (steve2152) · 2022-04-25T14:53:44.769Z · LW(p) · GW(p)

So, why can't I go give that talk to DeepMind right now?

A third (disconcerting) possibility is that the list of demands amounts to saying “don’t ever build AGIs”, because the global workspace / self-awareness / whatever is really the only practical way to build AGI. (I happen to put a lot of weight on that possibility, but it’s controversial and non-obvious.) If that possibility is true, then, well, I guess in principle DeepMind could still follow that list of demands, but it amounts to them giving up on their corporate mission, and even if they did, it would be very difficult to get every other actor to do the same thing forever.

If you do reject one or more of these assumptions, I would be curious to hear which ones, and why—and, in light of your different assumptions, how you think we should formulate the major question(s) about AI sentience, and about the relationship between sentience and moral patienthood.

(Warning: haven’t read or thought very much about this.) I guess I’m currently (weakly) leaning towards strong illusionism. But I think I can still care about things-computationally-similar-to-humans. I don’t know, at the end of the day, I care about what I care about. See last section here [LW · GW], and more in this comment [LW(p) · GW(p)].

More precisely, I’m hopeful (and hoping!) that one can soften the “we are rejecting strong illusionism” claim in #3 without everything else falling apart.

Replies from: Robbo↑ comment by Robbo · 2022-04-25T20:04:18.693Z · LW(p) · GW(p)

A third (disconcerting) possibility is that the list of demands amounts to saying “don’t ever build AGIs”

That would indeed be disconcerting. I would hope that, in this world, it's possible and profitable to have AGIs that are sentient, but which don't suffer in quite the same way / as badly as humans and animals do. It would be nice - but is by no means guaranteed - if the really bad mental states we can get are in a kinda arbitrary and non-natural point in mind-space. This is all very hard to think about though, and I'm not sure what I think.

I’m hopeful (and hoping!) that one can soften the “we are rejecting strong illusionism” claim in #3 without everything else falling apart.

I hope so too. I was more optimistic about that until I read Kammerer's paper, then I found myself getting worried. I need to understand that paper more deeply and figure out what I think. Fortunately, I think one thing that Kammerer worries about is that, on illusionism (or even just good old fashioned materialism), "moral patienthood" will have vague boundaries. I'm not as worried about that, and I'm guessing you aren't either. So maybe if we're fine with fuzzy boundaries around moral patienthood, things aren't so bad.

But I think there's other more worrying stuff in that paper - I should write up a summary some time soon!

comment by MichaelStJules · 2022-04-25T20:25:47.602Z · LW(p) · GW(p)

The training and behavior of these two systems would be identical, in spite of the shift in the value of the rewards. Does simply shifting the numerical value of the reward to “positive” correspond to a deeper shift towards positive valence? It seems strange that simply switching the sign of a scalar value could be affecting valence in this way. Imagine shifting the reward signal for agents with more complex avoidance behavior and verbal reports. Lenhart Schubert (quoted in Tomasik (2014), from whom I take this point) remarks: “If the shift…causes no behavioural change, then the robot (analogously, a person) would still behave as if suffering, yelling for help, etc., when injured or otherwise in trouble, so it seems that the pain would not have been banished after all!”

So valence seems to depend on something more complex than the mere numerical value of the reward signal. For example, perhaps it depends on prediction error in certain ways. Or perhaps the balance of pain and pleasure depends on efficient coding schemes which minimize the cost of reward signals / pain and pleasure themselves: this is the thought behind Yew‑Kwang Ng’s work on wild animal welfare, and Shlegeris's brief remarks inspired by this work.
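The claim that shifting every reward by a constant leaves behaviour unchanged can be checked on a toy problem. The sketch below is only an illustration under stated assumptions: it uses a small, hypothetical, deterministic MDP that never terminates and has a fixed discount factor, and the state layout, reward values, and function name are all made up for the example. In that setting, adding a constant to every reward raises every state's value by the same amount, so the greedy policy is the same whether all rewards are negative or all are positive.

```python
def greedy_policy(rewards, transitions, gamma=0.9, sweeps=500):
    """Run value iteration on a deterministic, non-terminating MDP.

    rewards[s][a]     -> immediate reward for taking action a in state s
    transitions[s][a] -> index of the next state
    Returns the greedy action index for each state.
    """
    n_states = len(rewards)
    values = [0.0] * n_states
    for _ in range(sweeps):
        # Bellman optimality backup for every state.
        values = [
            max(rewards[s][a] + gamma * values[transitions[s][a]]
                for a in range(len(rewards[s])))
            for s in range(n_states)
        ]
    # Pick, in each state, the action with the highest backed-up value.
    return [
        max(range(len(rewards[s])),
            key=lambda a: rewards[s][a] + gamma * values[transitions[s][a]])
        for s in range(n_states)
    ]


# Hypothetical toy problem: 3 states, 2 actions per state.
TRANSITIONS = [[1, 2], [0, 2], [2, 0]]
REWARDS = [[-1.0, -3.0], [-2.0, -0.5], [-4.0, -1.0]]  # all negative

# Shift every reward by the same constant so that all become positive.
SHIFT = 10.0
SHIFTED = [[r + SHIFT for r in row] for row in REWARDS]

policy_negative = greedy_policy(REWARDS, TRANSITIONS)
policy_positive = greedy_policy(SHIFTED, TRANSITIONS)
print(policy_negative == policy_positive)  # True
```

The non-terminating assumption matters. In episodic tasks where the agent can influence when an episode ends, a constant shift can change the optimal behaviour (all-negative rewards favour ending early, all-positive rewards favour staying alive), so the invariance shown here does not hold for every reinforcement learning setup.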

I think attention probably plays an important role in valence. States with high intensity valence (of either sign, or at least negative; I'm less sure how pleasure works) tend to take immediate priority, in terms of attention and, (at least partly) consequently, behaviour. The Welfare Footprint Project (funded by Open Phil for animal welfare) defines pain intensity based on attention and priority, with more intense pains harder to ignore and pain intensity clusters for annoying, hurtful, disabling and excruciating. If you were to shift rewards and change nothing else, how much attention and priority is given to various things would have to change, and so the behaviour would, too. One example I like to give is that if we shifted valence into the positive range without adjusting attention or anything else, animals would continue to eat while being attacked, because the high positive valence from eating would get greater priority than the easily ignorable low positive or neutral valence from being attacked.

The costs of reward signals or pain and pleasure themselves help explain why it's evolutionarily adaptive to have positive and negative values with valence roughly balanced around low neural activity neutral states, but I don't see why it would follow that it's a necessary feature of valence that it should be balanced around neutral (which would surely be context-specific).

I'm less sure either way about prediction error. I guess it would have to be somewhat low-level or non-reflective (my understanding is that it usually is, although I'm barely familiar with these approaches), since surely we can accurately predict something and its valence in our reportable awareness, and still find it unpleasant or pleasant.

Replies from: MichaelStJules, Robbo↑ comment by MichaelStJules · 2022-04-30T16:08:50.211Z · LW(p) · GW(p)

Also, there's a natural neutral/zero point for rewards separating pleasure and suffering in animals: subnetwork inactivity. Not just unconsciousness, but some experiences feel like they have no or close to no valence, and this is probably reflected in lower activity in valence-generating subnetworks. If this doesn't hold for some entity, we should be skeptical that they experience pleasure or suffering at all. (They could experience only one, with the neutral point strictly to one side, the low valence intensity side.)

Plus, my guess is that pleasure and suffering are generated in not totally overlapping structures of animal brains, so shifting rewards more negative, say, would mean more activity in suffering structures and less in the pleasure structures.

Still, one thing I wonder is whether preferences without natural neutral points can still matter. Someone can have preferences between two precise states of affairs (or features of them), but not believe either is good or bad in absolute terms. They could even have something like moods that are ranked, but no neutral mood. Such values could still potentially be aggregated for comparisons, but you'd probably need to use some kind of preference-affecting view if you don't want to make arbitrary assumptions about where the neutral points should be.

comment by Signer · 2022-04-25T21:06:06.543Z · LW(p) · GW(p)

As usual with intersection of consciousness and science, I think this needs more clarifications about assumptions. In particular, does "Realism about phenomenal consciousness" imply that consciousness is somehow fundamentally different from other forms of organization of matter? If not, I would prefer for it to be explicitly said that we are talking about merely persuasive arguments about reasons to value computational processes interesting in some way. And for every "theory" to be replaced with "ideology", and every "is" question with "do we want to define consciousness in such a way, that". And without justification for intermediate steps assumptions 1-3 can be simplified into "if a system satisfies my arbitrary criteria then it is a moral patient".

Replies from: TAG, Robbo↑ comment by Robbo · 2022-04-28T15:00:34.196Z · LW(p) · GW(p)

I'm trying to get a better idea of your position. Suppose that, as TAG also replied, "realism about phenomenal consciousness" does not imply that consciousness is somehow fundamentally different from other forms of organization of matter. Suppose I'm a physicalist and a functionalist, so I think phenomenal consciousness just is a certain organization of matter. Do we still then need to replace "theory" with "ideology" etc?

Replies from: Signer↑ comment by Signer · 2022-04-28T16:52:08.267Z · LW(p) · GW(p)

It's basically what is in that paper by Kammerer: a "theory of the difference between reportable and unreportable perceptions" is OK, but calling it "consciousness" and then concluding, from the reasonable-sounding assumption "conscious agents are moral patients", that generalizing a theory about the presence of some computational process in humans to universal ethics is an arbitrariness-free inference - that I don't like. Because the reasonableness of "conscious agents are moral patients" decreases when you substitute the theory's content into it. It's like a theory of beauty, whereas "precise computational theory that specifies what it takes for a biological or artificial system to have various kinds of conscious, valenced experiences" feels like it implies more objectivity.

Replies from: Robbo↑ comment by Robbo · 2022-04-29T14:26:26.224Z · LW(p) · GW(p)

Great, thanks for the explanation. Just curious to hear your framework, no need to reply:

- If you do have some notion of moral patienthood, what properties do you think are important for moral patienthood? Do you think we face uncertainty about whether animals or AIs have these properties?

- If you don't, are there questions in the vicinity of "which systems are moral patients" that you do recognize as meaningful?

Replies from: Signer↑ comment by Signer · 2022-04-30T19:05:29.776Z · LW(p) · GW(p)

-If you do have some notion of moral patienthood, what properties do you think are important for moral patienthood?

I don't know. If I need to decide, I would probably use some "similarity to human mind" metric. Maybe I would think about complexity of thoughts in the language of human concepts or something. And I probably could be persuaded of the importance of many other things. Also I can't really stop at just determining who is a moral patient - I start thinking about what exactly to value about them, and that is complicated by me being (currently interested in counterarguments against being) indifferent to suffering and only counting good things.

Do you think we face uncertainty about whether animals or AIs have these properties?

Yes for "similarity to human mind" - we don't have precise enough knowledge about AIs' or animals' minds. But now it sounds like I've only chosen these properties to not be certain. In the end I think moral uncertainty plays a more important role than factual uncertainty here - we already can be certain that very high-level low-resolution models of human consciousness generalize to anything from animals to a couple of lines of Python.

comment by superads91 · 2022-04-26T21:38:08.984Z · LW(p) · GW(p)

I highly doubt this on an intuitive level. If I draw a picture of a man being shot, is it suffering? Naturally not, since those are just ink pigments in a sheet of cellulose. Suffering seems to need a lot of complexity and also seems deeply connected to biological systems. AI/computers are just a "picture" of these biological systems. A pocket calculator appears to do something similar to the brain but in reality it's much less complex and much different, and it's doing something completely different. In reality it's just an electric circuit. Are lightbulbs moral patients?

Now, we could someday crack consciousness in electronic systems, but I think it would be winning the lottery to get there not on purpose.

Replies from: Robbo, charbel-raphael-segerie↑ comment by Robbo · 2022-04-28T14:51:19.630Z · LW(p) · GW(p)

A few questions:

- Can you elaborate on this?

Suffering seems to need a lot of complexity

and also seems deeply connected to biological systems.

I think I agree. Of course, all of the suffering that we know about so far is instantiated in biological systems. Depends on what you mean by "deeply connected." Do you mean that you think that the biological substrate is necessary? i.e. you have a biological theory of consciousness?

AI/computers are just a "picture" of these biological systems.

What does this mean?

Now, we could someday crack consciousness in electronic systems, but I think it would be winning the lottery to get there not on purpose.

Can you elaborate? Are you saying that, unless we deliberately try to build in some complex stuff that is necessary for suffering, AI systems won't 'naturally' have the capacity for suffering? (i.e. you've ruled out the possibility that Steven Byrnes raised in his comment [LW(p) · GW(p)])

Replies from: superads91↑ comment by superads91 · 2022-04-28T17:06:56.060Z · LW(p) · GW(p)

1.) Suffering seems to need a lot of complexity, because it demands consciousness, which is the most complex thing that we know of.

2.) I personally suspect that the biological substrate is necessary (of course, I can't be sure), for reasons I mentioned, like sleep and death. I can't imagine a computer that doesn't sleep and can operate for trillions of years as being conscious, at least in any way that resembles an animal. It may be superintelligent but not conscious. Again, just my suspicion.

3.) I think it's obvious - it means that we are trying to recreate something that biological systems do (arithmetic, image recognition, playing games, etc) on these electronic systems called computers or AI. Just like we try to recreate a murder scene with pencils and paper. But the murder drawing isn't remotely a murder, it's only a basic representation of a person's idea of a murder.

4.) Correct. I'm not completely excluding that possibility, but like I said, it would be great luck to get there not on purpose. Maybe not "winning the lottery" luck as I've mentioned, but maybe 1 to 5% probability.

We must understand that suffering takes consciousness, and consciousness takes a nervous system. Animals without one aren't conscious. The nature of computers is so drastically different from that of a biological nervous system (and, at least until now, much less complex) that I think it would be quite unlikely that we eventually unwillingly generate this very complex and unique and unknown property of biological systems that we call consciousness. I think it would be a great coincidence.

↑ comment by Charbel-Raphaël (charbel-raphael-segerie) · 2022-04-27T23:05:54.069Z · LW(p) · GW(p)

Either consciousness is a mechanism that has been recruited by evolution for one of its abilities to efficiently integrate information, or consciousness is a type of epiphenomenon that serves no purpose.

Personally I think that consciousness, whatever it is, serves a purpose, and has an importance for the systems that try to sort out the anecdotal information from the information that deserves more extensive consideration. It is possible that this is the only way to effectively process information, and therefore that in trying to program an AGI, one naturally comes across it.

Replies from: superads91↑ comment by superads91 · 2022-04-28T00:34:20.610Z · LW(p) · GW(p)

Consciousness definitely serves a purpose, from an evolutionary perspective. It's definitely an adaptation to the environment, by offering a great advantage, a great leap, in information processing.

But from there to say that it is the only way to process information goes a long way. I mean, once again, just think of the pocket calculator. Is it conscious? I'm quite sure that it isn't.

I think that consciousness is a very biological thing. The thing that makes me doubt the most about consciousness in non-biological systems (let alone in the current ones which are still very simple) is that they don't need to sleep and they can function indefinitely. Consciousness seems to have these limits. Can you imagine not ever sleeping? Not ever dying? I don't think such would be possible for any conscious being, at least one remotely similar to us.

Replies from: Robbo↑ comment by Robbo · 2022-04-28T14:56:21.900Z · LW(p) · GW(p)

to say that [consciousness] is the only way to process information

I don't think anyone was claiming that. My post certainly doesn't. If one thought consciousness were the only way to process information, wouldn't there not even be an open question about which (if any) information-processing systems can be conscious?

Replies from: superads91↑ comment by superads91 · 2022-04-28T16:29:44.434Z · LW(p) · GW(p)

I never said you claimed such either, but Charbel did.

"It is possible that this [consciousness] is the only way to effectively process information"

I was replying to his reply to my comment, hence I mentioned it.

comment by Jacob Pfau (jacob-pfau) · 2022-04-25T23:10:52.469Z · LW(p) · GW(p)

How exactly does reward relate to valenced states in humans? In general, what gives rise to pleasure and pain, in addition to (or instead of) the processing of reward signals?

These problems seem important and tractable even if working out the full computational theory of valence might not be. We can distinguish three questions:

1. What is the high-level functional role of valence? (coarse-grained functionalism)

2. What evolutionary pressures incentivized valenced experience?

3. What computational processes constitute valence? (fine-grained functionalism)

Answering #3 would be best, but it seems to me that answering #1 and #2 is far more feasible. A promising and realistic scenario might be discovering a distinction between positive and negative valence from perspectives #1 and 2, and then giving the DeepMind presentation encouraging them to avoid the coarse-grained functional structures and incentives for negative valence. From my incomplete understanding of the consciousness and valence literature, it seems to me that almost all work is contributing to answering question #1 not question #3.

One avenue in this direction might be looking into the interaction between valence and attention. It seems to me that there is an asymmetry there (or at least a canonical way of fixing a zero point). Positive valence involves attention concentration whereas negative valence involves diffusion of attention / searching for ways to end this experience. A couple reasons why I'm optimistic about this direction: First, attention likely bears some intrinsic connection with consciousness (other coarse-grained functional correlates such as commensurability, addiction etc. need not); second, attention manipulation seems like it might be formalizable in a way relevant for machine learning practitioners. (I'm using attention here in the philosophy/neuro sense not the transformer sense)

Replies from: Robbo↑ comment by Robbo · 2022-04-28T15:04:47.046Z · LW(p) · GW(p)

Very interesting! Thanks for your reply, and I like your distinction between questions:

Positive valence involves attention concentration whereas negative valence involves diffusion of attention / searching for ways to end this experience.

Can you elaborate on this? What do attention concentration vs. diffusion mean? Pain seems to draw attention to itself (and to motivate action to alleviate it). On my normal understanding of "concentration", pain involves concentration. But I think I'm just unfamiliar with how you / 'the literature' use these terms.

Replies from: jacob-pfau↑ comment by Jacob Pfau (jacob-pfau) · 2022-04-30T13:00:09.945Z · LW(p) · GW(p)

The relationship between valence and attention is not clear to me, and I don't know of a literature which tackles this (though imperativist analyses of valence are related). Here are some scattered thoughts and questions which make me think there's something important here to be clarified:

- There's a difference between a conscious stimulus having high saliency/intensity and being intrinsically attention focusing. A bright light suddenly strobing in front of you is high saliency, but you can imagine choosing to attend or not to attend to it. It seems to me plausible that negative valence is like this bright light.

- High valence states in meditation are achieved via concentration of attention

- Positive valence doesn't seem to entail wanting more of that experience (c.f. there existing non-addictive highs etc.), whereas negative valence does seem to always entail wanting less.

That is all speculative, but I'm more confident that positive and negative valence don't play the same role on the high-level functional level. It seems to me that this is strong (but not conclusive) evidence that they are also not symmetric at the fine-grained level.

I'd guess a first step towards clarifying all this would be to talk to some researchers on attention.

comment by interstice · 2022-04-25T18:56:26.169Z · LW(p) · GW(p)

I think I have a pretty good theory [LW · GW] of conscious experience, focused on the meta-problem -- explaining why it is that we think consciousness is mysterious. Basically I think the sense of mysteriousness results from our brain considering 'redness'(/etc) to be a primitive data type, which cannot be defined in terms of any other data. I'm not totally sure yet how to extend the theory to cover valence, but I think a promising way forward might be trying to reverse-engineer how our brain detects empathy/other-mind-detection at an algorithmic level, then extend that to cover a wider class of systems.

Replies from: TAG, Robbo↑ comment by TAG · 2022-04-27T13:47:36.504Z · LW(p) · GW(p)

Could you respond to this comment [LW(p) · GW(p)]?

Replies from: interstice↑ comment by interstice · 2022-04-27T18:37:53.201Z · LW(p) · GW(p)

Responded!

comment by Josh Gellers (josh-gellers) · 2022-05-09T11:08:56.060Z · LW(p) · GW(p)

Thanks for this thorough post. What you have described is known as the “properties-based” approach to moral status. In addition to sentience, others have argued that it’s intelligence, rationality, consciousness, and other traits that need to be present in order for an entity to be worthy of moral concern. But as I have argued in my 2020 book, Rights for Robots: Artificial Intelligence, Animal and Environmental Law (Routledge), this is a Sisyphean task. Philosophers don’t (and may never) agree about which of these properties is necessary. We need a different approach altogether in order to figure out what obligations we might have towards non-humans like AI. Scholars like David Gunkel, Mark Coeckelbergh, and myself have advocated for a relations-based approach, which we argue is more informed by how humans and others interact with and relate to each other. We maintain that this is a more realistic, accurate, and less controversial way of assessing moral status.

comment by Kredan · 2022-04-27T00:11:25.723Z · LW(p) · GW(p)

higher levels of complexity should increase our credence somewhat that consciousness-related computations are being performed

To nuance a bit: while some increasing amount of complexity might be necessary for more consciousness, a system with high complexity does not necessarily imply more consciousness. So it is not clear how we should update our credence that C is being computed because some system has higher complexity. This likely depends on the details of the cognitive architecture of the system.

It might also be that systems that are very high in complexity have a harder time being conscious (because consciousness requires some integration/coordination within the system) so there might be a sweet spot for complexity to instantiate consciousness.

For example, consciousness could be roughly modeled as some virtual reality that our brain computes. This "virtual world" is a sparse model of the complex world we live in. While the model is generated by a complex neural network, it is a sparse representation of a complex signal. For example, the information flow of a macroscopic system in the network that is relevant to detect consciousness might actually not be that complex, although it "emerges" on top of a complex architecture.

The point is that consciousness is perhaps closer to some combination of complexity and sparsity rather than complexity alone.

comment by Shmi (shminux) · 2022-04-25T19:10:07.438Z · LW(p) · GW(p)

The whole field seems like an extreme case of anthropomorphizing to me. The "valence" thing in humans is an artifact of evolution, where most of the brain is not available to introspection because we used to be lizards and amoebas. That's not at all how the AI systems work, as far as I know.

Replies from: jacob-pfau, Robbo, TAG↑ comment by Jacob Pfau (jacob-pfau) · 2022-04-25T23:19:32.835Z · LW(p) · GW(p)

Valence is of course a result of evolution. If we can identify precisely what evolutionary pressures incentivize valence, we can take an outside (non-anthropomorphizing, non-xenomorphizing) view: applying Laplace's rule gives us a 2/3 chance that AI developed with similar incentives will also experience valence?
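For readers unfamiliar with where a figure like 2/3 comes from: Laplace's rule of succession estimates the probability of success on the next trial as (s + 1) / (n + 2) after s successes in n trials. The sketch below is one reading of the comment, not a claim about the commenter's exact reasoning: it assumes that evolved animals count as a single observed trial in which those incentives produced valence (s = 1, n = 1). The function name is made up for the example.

```python
from fractions import Fraction


def rule_of_succession(successes, trials):
    """Laplace's rule of succession: (s + 1) / (n + 2)."""
    return Fraction(successes + 1, trials + 2)


# Assumption: one observed case (biological evolution), and it produced valence.
estimate = rule_of_succession(successes=1, trials=1)
print(estimate)  # 2/3
```

The estimate is sensitive to how the reference class is counted: with no observations at all the rule gives 1/2, and treating different evolutionary lineages as separate trials would give a different number.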

↑ comment by Robbo · 2022-04-25T19:54:25.431Z · LW(p) · GW(p)

The whole field seems like an extreme case of anthropomorphizing to me.

Which field? Some of these fields and findings are explicitly about humans; I take it you mean the field of AI sentience, such as it is?

Of course, we can't assume that what holds for us holds for animals and AIs, and have to be wary of anthropomorphizing. That issue also comes up in studying, e.g., animal sentience and animal behavior. But what were you thinking is anthropomorphizing exactly? To be clear, I think we have to think carefully about what will and will not carry over from what we know about humans and animals.

The "valence" thing in humans is an artifact of evolution

I agree. Are you thinking that this means that valenced experiences couldn't happen in AI systems? Are unlikely to? Would be curious to hear why.

where most of the brain is not available to introspection because we used to be lizards and amoebas

I also agree with that. What was the upshot of this supposed to be?

That's not at all how the AI systems work

What's not how the AI systems work? (I'm guessing this will be covered by my other questions)