Note-Taking without Hidden Messages

post by Hoagy · 2022-04-30T11:15:00.234Z · LW · GW · 2 comments

Summary

We can create two ANNs which use language 'notes' to explain their internal state. They will use language to communicate a mixture of language-as-understood-by-humans and additional, hidden information.

The form of the hidden information will vary between the two networks. By training one network so that its notes are similar both to those made by the other network and to those made by a past state of that same network, we can strongly bias these notes towards containing human-comprehensible information.

Background

This post builds on my previous ELK-based work on a 'note-taking' architecture, as explained in this post [LW · GW].

A basic summary is that we create a MuZero-like agent, and during its rollouts we force the agent to convert its latent space into human-like language (or other media) and back into latent space. We train it with hints in the human space to incentivize it to use language in the same way that humans do. We also create a model called a Synonymizer, which freely converts any human-comprehensible media into alternate media that preserves the meaning, and which shuts down any detected attempt to hide additional information.

The core problem with this setup is that there is going to be a strong incentive to hide additional information in the ostensibly human-comprehensible media. These mappings will contain a mixture of using human concepts in the way that they are intended, and steganographic (hidden) usage of human language. I will denote human-comprehensible information as $H$ and steganographic information as $S$. The challenge is to maximize the ratio of $H$ to $S$ when learning.

In my last post I suggested that training multiple models on different data and with different initialization could provide a means to remove this hidden information but this strategy was underdeveloped. This is what I'll expand upon in this post.

A Puzzle - Extracting only shared information

We have two similarly trained networks which map from observations to a latent space. For each of them we also have a mapping from that latent space to a human space.

Crucially, through the pre-training we can expect these models to use language at least partly as humans understand it, and so this aspect of their communication will be similar in both models. For steganographic information, however, there's no Schelling point for how it should be communicated, and so we can expect this information to be encoded in different ways by the two systems.[1]

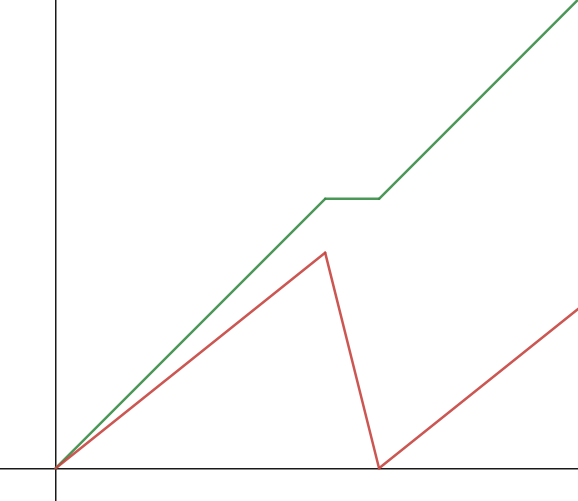

This means that we can expect an asymmetry where the two systems encode human-language information in the same way but steganographic information in mostly different ways:

Here and are ANNs converting observations to latent space and and are ANNs converting latent space to language. and are the components of which communicate human-comprehensible and steganographic information respectively.

The task is to devise a protocol which exploits this asymmetry to remove steganographic information while preserving human-language information.
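The asymmetry can be made concrete with a deliberately tiny toy, which is only an illustration and not part of the proposed architecture. It assumes (hypothetically) that every concept has two synonyms a human reads identically, and that each model hides one bit per word in its choice of synonym under its own arbitrary convention. The names `SYNONYMS`, `KEY_1`, `KEY_2`, `encode` and `decode` are invented for this sketch.

```python
# Hypothetical toy: the concept is the human-comprehensible content of a word,
# and the choice between two interchangeable synonyms is a free hidden channel.
SYNONYMS = {
    "big": ("large", "huge"),
    "fast": ("quick", "rapid"),
    "happy": ("glad", "joyful"),
    "start": ("begin", "commence"),
}
WORD_TO_CONCEPT = {word: concept for concept, pair in SYNONYMS.items() for word in pair}

# Each model has its own arbitrary convention for which synonym means "bit 0".
# There is no Schelling point, so the two conventions only agree by coincidence.
KEY_1 = {"big": 0, "fast": 0, "happy": 0, "start": 0}
KEY_2 = {"big": 1, "fast": 0, "happy": 1, "start": 0}


def encode(concepts, hidden_bits, key):
    """Write a note whose words carry the concepts and whose synonym choice hides bits."""
    return [SYNONYMS[c][b ^ key[c]] for c, b in zip(concepts, hidden_bits)]


def decode(note, key):
    """Read a note back into (concepts, hidden bits) under a given convention."""
    concepts = [WORD_TO_CONCEPT[word] for word in note]
    bits = [SYNONYMS[c].index(word) ^ key[c] for c, word in zip(concepts, note)]
    return concepts, bits


concepts = ["big", "fast", "happy", "start"]
hidden_bits = [1, 0, 1, 1]
note = encode(concepts, hidden_bits, KEY_1)

same_concepts, same_bits = decode(note, KEY_1)    # model 1 reading its own note
cross_concepts, cross_bits = decode(note, KEY_2)  # model 2 reading model 1's note
```

Here both readers recover the same concepts, but the second reader gets the hidden bits wrong wherever the two conventions differ. A protocol that rewards only what both models agree on would therefore tend to keep the concepts and discard the hidden bits, except where the conventions happen to match.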

Favouring Human-comprehensible information

How can we use the two networks with different approaches to hiding information to create a network without hidden information, or at least bias learning against hiding information as strongly as possible?

Initial guess: Train them end-to-end, so that they form a shared language.

This will initially boost human-comprehensible information ($H$) relative to steganographic information ($S$), because each network will suddenly be joined to a network which is correctly transmitting $H$ but which does not understand its $S$ at all.

However, as these networks are trained to equilibrium the advantage will disappear as a steganographic protocol is agreed between the two models. Also, this can only be used once before the networks are in equilibrium.

Better option: Train the networks for similarity to both the other network and to their past selves.

To be more precise, after a phase of learning we take a checkpoint of both models, and then train each model for similarity to the output of both checkpoints. Significant loss is imposed for any output sub-string which would not be output with high probability by both the other model and the previous checkpoint of the current model.

The human-language latent space has variable length and higher length is penalized, so the hope is that the optimal training behaviour, if the protocol is designed correctly, would be to remove highly steganographic sections, without allowing the two models to fully converge on how to include steganographic information. Steganographic information can still build up over time, but only through coincidentally matching approaches to steganography.

This is preferable to end-to-end learning as it does not take on the steganographic information of the alternate model.

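The loss due to a particular message would look something like a combination of penalty terms, one per checkpoint, each of which grows as the probability that checkpoint assigns to the message falls. One such term depends on the probability assigned to the message by the checkpoint of model 1, and another on the probability assigned by the checkpoint of model 2.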

This should hopefully allow a strong pressure against steganographic information to be maintained throughout training without a gigantic compute overhead.
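One way to make the "high probability under both checkpoints" requirement concrete is sketched below. This is an assumed form, not the formula from the post: it takes per-token log-probabilities of a message under the other model's checkpoint and under the current model's own past checkpoint, applies a hinge penalty to any token that falls below a probability floor under either of them, and adds a length penalty. The function name and the `floor` and `length_weight` parameters are hypothetical.

```python
import math


def checkpoint_similarity_loss(logp_other, logp_self_past,
                               floor=math.log(0.1), length_weight=0.01):
    """Penalise message tokens that are unlikely under EITHER frozen checkpoint.

    logp_other:     per-token log-probabilities under the other model's checkpoint
    logp_self_past: per-token log-probabilities under this model's past checkpoint
    floor:          log-probability below which a token starts to be penalised (assumed)
    length_weight:  per-token cost, so longer notes are more expensive (assumed)
    """
    if len(logp_other) != len(logp_self_past):
        raise ValueError("both checkpoints must score the same tokens")

    penalty = 0.0
    for lp_other, lp_self in zip(logp_other, logp_self_past):
        # A token must be plausible to both checkpoints, so score the worse of the two.
        worst = min(lp_other, lp_self)
        # Hinge: tokens above the floor cost nothing, tokens below it cost the shortfall.
        penalty += max(0.0, floor - worst)

    return penalty + length_weight * len(logp_other)


# A message both checkpoints find plausible pays only the length cost.
shared_loss = checkpoint_similarity_loss([math.log(0.5)] * 3, [math.log(0.5)] * 3)

# A message with one token the other checkpoint finds very unlikely is penalised.
stego_loss = checkpoint_similarity_loss(
    [math.log(0.5), math.log(0.001)],
    [math.log(0.5), math.log(0.5)],
)
```

Taking the minimum over the two checkpoints is what stops a model from keeping a token that only its own past self would produce. Because both checkpoints are frozen, scoring a message needs only forward passes through them, which fits the aim of avoiding a large compute overhead.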

Remaining Issues

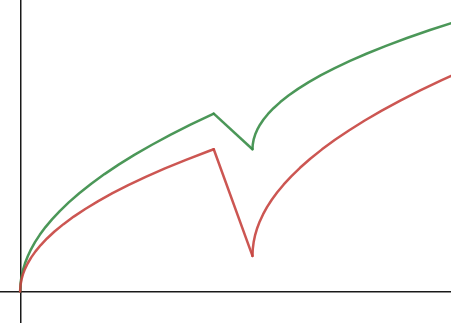

While this training would hopefully remove steganographic information, the precursors and structure to hiding the information would remain, and therefore when training reverts to simply trying to encode information, it may very quickly reoccur, as shown in the following graphs:

I've talked about these as alternating phases of training, but it may be preferable to have these two types of learning occurring simultaneously, with the checkpoint against which the models are trained being regularly updated. A correct balance between the two types of training would need to be found to maximize the advantage of human-comprehensible information over steganographic information.
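The interleaved variant can be sketched as a simple step schedule. This is a hypothetical illustration of the bookkeeping only, with no actual training: `similarity_every` sets the balance between the two types of training and `refresh_every` sets how often the frozen checkpoints are replaced. Both parameter names and their default values are assumptions.

```python
def interleaved_schedule(total_steps, similarity_every=4, refresh_every=20):
    """Yield (step, phase, refresh_checkpoint) for each training step.

    phase is "task" for ordinary training (where hidden information can creep in)
    or "similarity" for training towards the frozen checkpoints.
    refresh_checkpoint is True on steps where new checkpoints would be frozen.
    """
    if similarity_every < 2:
        raise ValueError("similarity_every must be at least 2 to mix both phases")
    if refresh_every < 1:
        raise ValueError("refresh_every must be at least 1")

    for step in range(total_steps):
        # Every similarity_every-th step is spent on the similarity objective.
        is_similarity = step % similarity_every == similarity_every - 1
        phase = "similarity" if is_similarity else "task"
        yield step, phase, step % refresh_every == 0


plan = list(interleaved_schedule(8, similarity_every=4, refresh_every=4))
similarity_steps = [step for step, phase, _ in plan if phase == "similarity"]
refresh_steps = [step for step, _, refresh in plan if refresh]
```

In this example, steps 3 and 7 are similarity steps and the checkpoints are refreshed at steps 0 and 4. Lowering `similarity_every` increases the pressure against hidden information at the cost of ordinary training, and where the right balance lies is the open question raised above.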

Two approaches to testing this

I hope to flesh out other details of the training strategy for this rather complex approach, and then start to build small prototypes and work out how the core assumptions of the approach can be validated.

The first approach would be to take a set of face images and train an auto-encoder on them, where it is primed to use each dimension of its latent space to mean a certain thing, like skin tone, face angle, etc. If we train two auto-encoders in this way, with different initial conditions and slightly varied datasets, we should get two latent spaces which encode some information in the same way, and which each also encode additional information. We can then test whether this training strategy can force the auto-encoders to change only the desired characteristic along each of their dimensions.

If this succeeds, then this approach could be extended to toy games played by a MuZero-style agent in which the agent must keep track of both continuous and discrete variables. The agent is given the note-taking ability to remember these discrete variables, and is primed to do so correctly, but the different frequencies of these discrete variables mean that it is optimal to encode information about the continuous variables in these notes as well. We can then test whether this or other protocols are able to successfully suppress hidden information.

[1] This is ultimately an empirical point and it may be that there are some 'natural' ways to hide information. Varying the training data should minimize this.

2 comments

comment by Vivek Hebbar (Vivek) · 2023-10-11T22:21:33.864Z · LW(p) · GW(p)

> This will initially boost human-comprehensible information ($H$) relative to steganographic information ($S$), because each network will suddenly be joined to a network which is correctly transmitting $H$ but which does not understand its $S$ at all.
>
> However, as these networks are trained to equilibrium the advantage will disappear as a steganographic protocol is agreed between the two models. Also, this can only be used once before the networks are in equilibrium.

Why would it be desirable to do this end-to-end training at all, rather than simply sticking the two networks together and doing no further training? Also, can you clarify what the last sentence means?

(I have guesses, but I'd rather just know what you meant)

comment by Gunnar_Zarncke · 2022-04-30T12:18:35.421Z · LW(p) · GW(p)

Regular human language between two humans also contains "hidden" information that is often not accessible to conscious thought. One could say it is hidden by the subconsciousness that "wants" to communicate it but is trained (by parents, society) to use normal language.