Power vs Precision

post by abramdemski · 2021-08-16T18:34:42.287Z · 16 comments


I've written a couple of posts (1, 2) about General Semantics and precision (i.e., non-equivocation). These posts basically argue for developing superpowers of precision. In addition, I suspect there are far more pro-precision posts on LessWrong than the opposite (although most of these would be about specific examples rather than general calls for precision, so it's hard to search for this to confirm).

A comment on my more recent general-semantics-inspired posts applied the law of equal and opposite advice, pointing out that there are many advantages to abstraction/vagueness as well; so, why should we favor precision? At least, we should Chesterton-fence the default level of precision before we assume that turning the dial up higher is better.

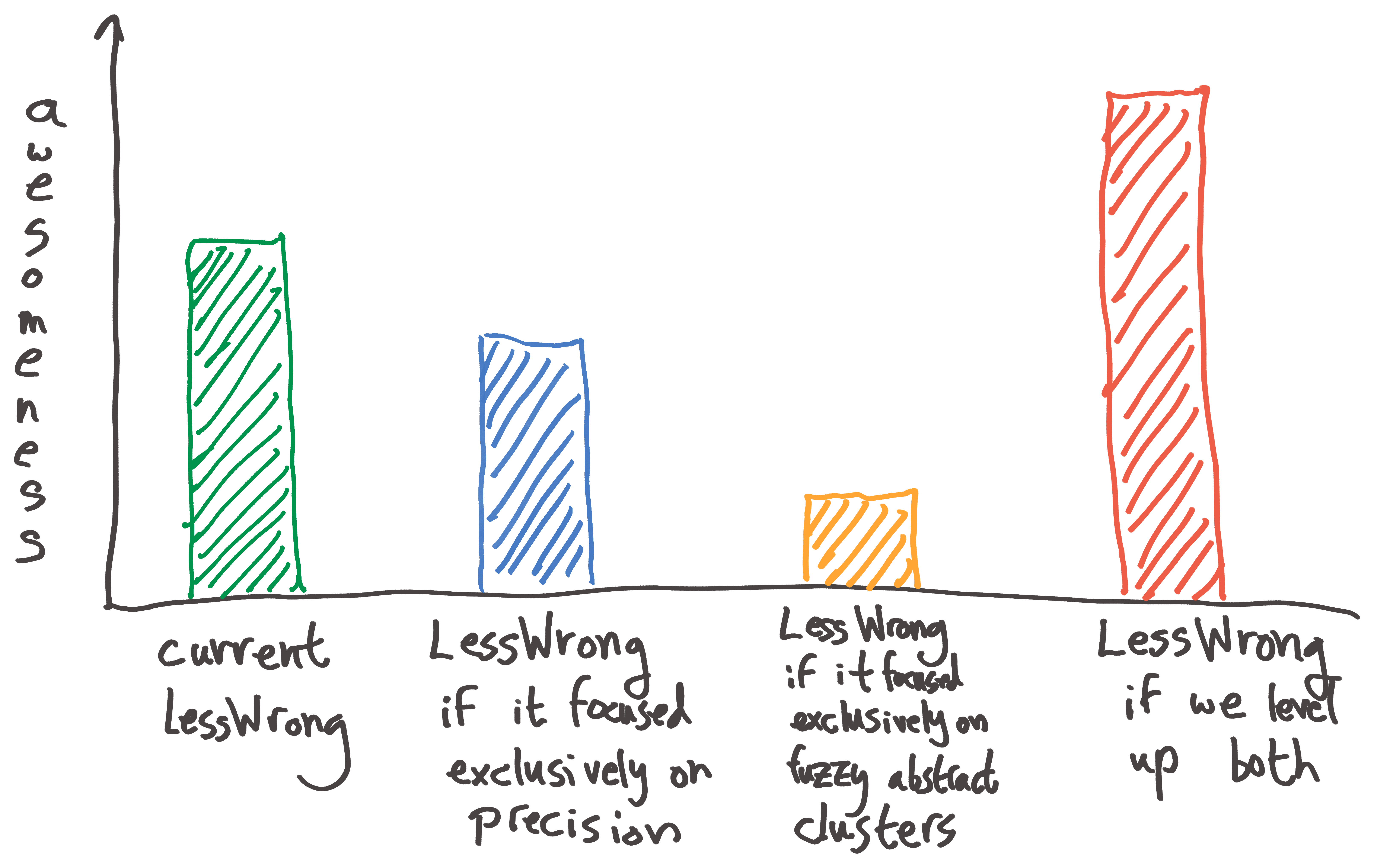

I totally agree that there are advantages to fuzzy clusters as well; what I actually think is that we should level up both specificity-superpowers and vagueness-superpowers. However, it does seem to me like there's something special about specificity. I think I would prefer a variant of LessWrong which valued specificity more to one which valued it less.

To possibly-somewhat explain why this might be, I want to back up a bit. I've been saying "specificity" or "precision", but what I actually mean is specificity/precision in language and thought. However, I think it will be useful to think about physical precision as an analogy.

Physical Power vs Precision

Power comes from muscles, bones, lungs, and hearts. Precision comes from eyes, brains, and fingers. (Speaking broadly.)

Probably the most broadly important determiner of what you can accomplish in the physical world is power, by which I mean the amount of physical force you can bring to bear. This determines whether you can move the rock, open the jar, etc. In the animal kingdom, physical power determines who will win a fight.

However, precision is also incredibly important, especially for humans. Precision starts out being very important for the use of projectiles, a type of attack which is not unique to humans, but nearly so. It then becomes critical for crafting and tool use.

Precision is about creating very specific configurations of matter, often with very little force (large amounts of force are often harder to exercise precision with).

We could say that power increases the range of accessible states (physical configurations which we could realize), while precision increases the optimization pressure which we can apply to select from those states. A body-builder has all the physical strength necessary to weave a basket, but may lack the precision to bring it about.

(There are of course other factors than just precision which go into basket-weaving, such as knowledge; but I'm basically ignoring that distinction here.)

Generalizing

We can analogize the physical precision/power dichotomy to other domains. Social power would be something like the number of people who listen to you, whereas social precision would be the ability to say just the right words. Economic power would simply be the amount of money you have, while economic precision would involve being a discerning customer, as well as managing the logistics of a business to cut costs through efficiency gains.

In the mental realm, we could say that power means IQ, while precision means rationality. Being slightly more specific, we could say that "mental power" means things like raw processing power, short-term memory (working memory, RAM, cache), and long-term memory (hard drive, episodic memory, semantic memory). "Mental precision" is like running better software: having the right metacognitive heuristics. Purposefully approximating probability theory and decision theory, etc.

(This is very different from what I meant by precision in thought, earlier, but this post itself is very imprecise and cluster-y! So I'll ignore the distinction for now.)

Working With Others

In Conceptual Specialization of Labor Enables Precision, Vaniver hypothesizes that people in the past who came up with "wise sayings" actually did know a lot, but were unable to convey their knowledge precisely. This results in a situation where the wise sayings convey relatively little of value to the ignorant, but later in life, people have "aha" moments where they suddenly understand a great deal about what was meant. (This can unfortunately result in a situation where the older people mistakenly think the wise sayings were quite helpful in the long run, and therefore propagate these same sayings to younger people.)

Vaniver suggests that specialized fields avoid this failure mode, because they have the freedom to invent more specialized language to precisely describe what they're talking about, and the incentive to do so.

I think there's more to it.

Let's think about physical precision again. Imagine a society which cares an awful lot about building beautiful rock-piles.

If you're stacking rocks with someone of low physical precision, then it matters a lot less that you have high precision yourself. You may be able to place a precisely balanced rock, but your partner will probably knock it out of balance. You will learn not to exercise your precision. (Or rather, you will use your precision to optimize for structures that will endure your partner's less-precise actions.)

So, to first approximation, the precision of a team of people can only be a little higher than the precision of its least precise member.

Power, on the other hand, is not like this. The power of a group is basically the sum of the power of its members.
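The asymmetry can be made concrete with a toy model. This is a minimal sketch, not something from the post: it assumes each person's power and precision can be scored as single numbers, that group power is simply additive, and that group precision is capped at the least precise member's score plus a small hypothetical `slack` (standing in for "a little higher"), never exceeding the most precise member's score.

```python
from typing import Sequence


def team_power(powers: Sequence[float]) -> float:
    """Group power under the additive assumption: the sum of members' power."""
    return sum(powers)


def team_precision(precisions: Sequence[float], slack: float = 1.0) -> float:
    """Group precision under a weakest-link assumption.

    The team can exceed its least precise member only by `slack` (a hypothetical
    parameter for "a little higher"), and never exceeds its most precise member.
    """
    if not precisions:
        raise ValueError("a team needs at least one member")
    weakest = min(precisions)
    strongest = max(precisions)
    return min(weakest + slack, strongest)


if __name__ == "__main__":
    powers, precisions = [5, 3], [9, 4]
    print(team_power(powers), team_precision(precisions))

    # Add a strong but imprecise member (power 10, precision 2).
    powers.append(10)
    precisions.append(2)
    print(team_power(powers), team_precision(precisions))
```

Under these assumptions, adding the strong but clumsy member raises total power from 8 to 18 while pulling team precision down from 5 to 3: the same member who helps move the big rock knocks over the balanced one.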

I think this idea also (roughly) carries over to non-physical forms of precision, such as rationality and conceptual/linguistic precision. In hundreds of ways, the most precise people in a group will learn to "fuzz out" their language to cope with the less-precise thinking/speaking of those around them. (For example, when dealing with a sufficiently large and diverse group, you have about five words. This explains the "wise sayings" Vaniver was thinking of.)

For example, in Policy Debates Should Not Appear One-Sided, Eliezer laments the conflation of a single pro or con with claims about the overall balance of pros/cons. But in an environment where a significant fraction of people are prone to make this mistake, it's actively harmful to make the distinction. If I say "wearing sunscreen is correlated with skin cancer", many people will automatically confuse this with "you should not wear sunscreen".

So, conflation can serve as the equivalent of a clumsy person tipping over carefully balanced rocks: it will incentivize the more precise people to act as if they were less precise (or rather, use their optimization-pressure to avoid sentences which are prone to harmful conflation, which leaves less optimization-pressure for other things).

This, imho, is one of the major forces behind "specialization enables precision": your specialized field will filter for some level of precision, which enables everybody to be more precise. Everyone in a chemistry lab knows how to avoid contamination and how to avoid being poisoned, so together, chemists can set up a more fragile and useful configuration of chemicals and tools. They could not accomplish this in, say, a public food court.

So, imho, part of what makes LessWrong a great place is the concentration of intellectual precision. This enables good thinking in a way that a high concentration of "good abstraction skill" (the skill of making good fuzzy clusters) does not.

Conversations about good fuzzy abstract clusters are no more or less important in principle. However, those discussions don't require a concentration of people with high precision. By its nature, fuzzy abstract clustering doesn't get hurt too much by conflation. This kind of thinking is like moving a big rock: all you need is horsepower. Precise conversations, on the other hand, can only be accomplished with similarly precise people.

(An interesting nontrivial prediction of this model is that clustering-type cognitive labor, like moving a big rock, can easily benefit from a large number of people; mental horsepower is easily scaled by adding people, even though mental precision can't be scaled like that. I'm not sure whether this prediction holds true.)

16 comments


comment by Scott Garrabrant · 2021-08-18T02:14:39.937Z

I claim that conversations about good fuzzy abstract clusters are both difficult, and especially in the comparative advantage of LessWrong. I claim that LW was basically founded on analogy, specifically the analogy between human cognition and agent foundations/AI. The rest of the world is not very precise, and that is a point for LW having a precision comparative advantage, but the rest of the world seems to also be lacking abstraction skill.

The claim that precision requires purity leads to the conclusion that you have to choose between precision and abstraction, and it also leads to the conclusion that precision is fragile, and thus that you should expect a priori for there to be less of it naturally, but it is otherwise symmetric between precision and abstraction. It is only saying that we should pick a lane, not which lane it should be.

I claim that when I look at science academia, I see a bunch of precision directed in inefficient ways, together with a general failure to generalize across pre-defined academic fields, and even more of a failure to generalize out of the field of "science" and into the real world.

Warning: I am mostly conflating abstraction with analogy with lack of precision above, and maybe the point is that we need precise analogies.

comment by ozziegooen · 2021-08-19T01:58:16.422Z

Minor point:

You write,

> I totally agree that there are advantages to fuzzy clusters as well; what I actually think is that we should level up both specificity-superpowers and vagueness-superpowers.

My impression is that vagueness is generally considered undesirable in philosophy, where it has a fairly particular meaning.

https://en.wikipedia.org/wiki/Vagueness

https://plato.stanford.edu/entries/vagueness/

If you are going to use "vagueness-superpowers" to mean "our ability to work on big things that happen to be vague", you might want to include ambiguity, to be more complete.

My guess is that generality would be a better word here (or abstraction, but I think that's a bit different). My impression is that typically one could build abilities for both generalizations and abstractions. Hopefully the results of this work will not come with much vagueness.

https://www.lesswrong.com/posts/vPHduFch5W6dqoMMe/two-definitions-of-generalization

comment by gjm · 2021-08-16T19:01:23.087Z

Tangential: I followed the sunscreen link, expecting to find something at the other end that in-some-technical-sense-supported the claim in question. In fact it did the reverse, citing the claim as a false thing some people say that has no truth to it.

It seems to me that the example would be a better one if the claim in question were technically true (despite being misleading to readers who aren't thinking very carefully). For instance, if it had said not "wearing sunscreen actually tends to increase risk of skin cancer" (which is just plain false) but "people who wear sunscreen more often are actually more likely to get skin cancer" (which may be true but doesn't imply the former, as explained at the far end of that link), it would be better.

↑ comment by abramdemski · 2021-08-17T13:10:41.149Z

I think I endorse it as written.

First, the article endorses a correlational link between sunscreen and skin cancer:

> Q. Is there evidence that sunscreen actually causes skin cancer?
>
> A. No. These conclusions come incorrectly from studies where individuals who used sunscreen had a higher risk of skin cancer, including melanoma. This false association was made because the individuals who used sunscreen were the same ones who were traveling to sunnier climates and sunbathing. In other words, it was the high amounts of sun exposure, not the sunscreen, that elevated their risk of skin cancer.

They appear to deny a causal link, but I think they're really only denying that all else being equal adding sunscreen increases cancer risk; because later in the article, they affirm that numerous studies have found a causal link:

> Many studies have demonstrated that individuals who use sunscreen tend to stay out in the sun for a longer period of time, and thus may actually increase their risk of skin cancer.

Thus, there is an actual causal link between wearing sunscreen and increased cancer risk (although the "may" complicates matters; I'm not sure whether it's just thrown in to weaken a conclusion they don't want to reinforce in readers' minds, vs really reflecting an unknown state of underlying knowledge). It's just that the link goes thru human behavior, which scientists don't always refer to as causal, since people are used to thinking of human behavior as free will.

OTOH, I do think that it would be a better example if it wasn't one that tended to confuse the reader, so I'm going to change it as you suggest.

↑ comment by gjm · 2021-08-17T15:33:21.410Z

That last bit (which, I confess, I hadn't actually noticed before) doesn't say that "wearing sunscreen actually tends to increase risk of skin cancer".

- All it says is "may actually increase their risk". That says to me that they haven't actually done the calculations.

- It's not even clear that they mean more than "staying out in the sun for longer may increase the risk" (i.e., not making any suggestion that the increase might outweigh the benefits of wearing sunscreen), though if that's all they mean then I'm not sure why they said "may".

They also don't actually cite any of those "many studies" which means that without doing a lot of digging (a) we can't tell how large the effect is and assess whether it might outweigh the benefits of sunscreen, and (b) we can't tell whether those studies actually distinguished between "people who were going to stay out for longer anyway tend to wear sunscreen" and "a given person on a given occasion will stay out longer if you make them wear sunscreen".

But I don't think your interpretation passes the smell test. If whoever wrote that page really believed that the overall effect of wearing sunscreen was to increase the risk of skin cancer (via making you stay out in the sun for longer), would they have said "We recommend sunscreen for skin cancer prevention"? Wouldn't the overall tone of the page be different? (At present they give a firm "no" to "Is there evidence that sunscreen actually causes skin cancer", they say "there are excellent studies that sunscreen protects against all three of the most common skin cancers", right up at the top in extra-large type there's "Don't let myths deter you from using it", they say "We recommend sunscreen for skin cancer prevention", etc., etc., etc.)

Again, that page doesn't say what "many studies" they have in mind. I had a quick look to see what I could find. The first thing I found was "Sunscreen use and duration of sun exposure: a double-blind, randomized trial" (Autier et al, JNCI, 1999). They gave participants either SPF10 or SPF30 sunscreen at random; neither experimenters nor participants knew which; they found that (presumably because they stayed out until they felt they were starting to burn) people with SPF30 sunscreen stayed out for about 25% longer. The two groups had similar overall amounts of sunburn or reddening. The study doesn't make any attempt to decide whether sunscreen use is likely to tend to produce more or less skin cancer. (The obvious guess after reading it is that effectively there's a control system that holds the level of sunburn roughly constant, and that maybe that also holds the level of skin cancer roughly constant.)

The second thing I found was "Sunscreen use and melanoma risk among young Australian adults" (Watts et al, JAMA Dermatology, 2018). This was just associational; they looked at sunscreen use in childhood and lifetime sunscreen use, among subjects who had had a melanoma before age 40 and in a sample of others (including one sibling for each subject with melanoma, a curious design decision). They found that melanoma patients had made less use of sunscreen in childhood and over their lifetimes. There are obvious caveats about self-reported data.

The third thing I found was "Prevalence of sun protection use and sunburn and association of demographic and behavioral characteristics with sunburn among US adults" (Holman et al, JAMA Dermatology, 2018). Sunscreen use was among the things they found associated with more sunburn. Again, obvious caveats about self-reported data, and about the possibility that people who were going to go out in the sun more anyway are more likely to use sunscreen. As with the first study mentioned above, no assessment of overall impact on skin cancer risk.

One reference in that last one goes to another article by Autier et al, this one a review rather than a study in its own right. It seems to be a fairly consistent finding that when people are deliberately exposing themselves to the sun for tanning or similar purposes, they stay out for something like 25% longer if they're using sunscreen; it also seems to be the case that when they aren't doing that (they just happen to be out in the sun but that isn't a goal) they don't stay out for longer when using sunscreen. Again, no attempt at figuring out the overall impact on skin cancer risk.

After reading all that, I think that:

- Wearing sunscreen does on balance lead to spending more time in the sun, at least for people who are spending time in the sun because they want to spend time in the sun.

- It doesn't look as if it's known whether making someone wear sunscreen rather than not on a given occasion (assuming that they are seeking out time-in-the-sun) makes them more or less likely to get skin cancers overall. (The Australian study suggests less likely; the US study suggests more likely; the double-blinded study suggests not much difference; the review doesn't do anything to resolve this uncertainty.)

- If I were giving advice on sunscreen use I would say: "Using sunscreen reduces the damage your skin will take from a given amount of sun exposure. If you use sunscreen you will probably spend longer in the sun, and maybe enough longer to undo the good the sunscreen does. So use sunscreen but make a conscious decision not to spend longer out in the sun than you would have without it.".

- The evidence I've seen doesn't justify saying "wearing sunscreen tends to increase the risk of skin cancer". (But also doesn't justify saying "wearing sunscreen tends to reduce the risk of skin cancer" with much confidence, at least not without caveats.)

I think your modified wording is better, and wonder whether it might be improved further by replacing "correlated" with "associated" which is still technically correct (or might be? the Australian study above seems to disagree) and sounds more like "sunscreen is bad for you".

↑ comment by abramdemski · 2021-08-17T18:01:57.662Z

> That last bit (which, I confess, I hadn't actually noticed before) doesn't say that "wearing sunscreen actually tends to increase risk of skin cancer".

I agree. I think I read into it a bit on my first reading, when I was composing the post. But I still found the interpretation probable when I reflected on what the authors might have meant.

In any case, I concede based on what you could find that it's less probable (than the alternatives). The interviewee probably didn't have any positive information to the effect that getting someone to wear sunscreen causes them to stay out in the sun sufficiently longer for the skin cancer risk to actually go up on net. So my initial wording appears to be unsupported by the data, as you originally claimed.

> But I don't think your interpretation passes the smell test. If whoever wrote that page really believed that the overall effect of wearing sunscreen was to increase the risk of skin cancer (via making you stay out in the sun for longer), would they have said "We recommend sunscreen for skin cancer prevention"?

It's pretty plausible on my social model.

To use an analogy: telling people to eat less is not a very good weight loss intervention, at all. (I think. I haven't done my research on this.) More importantly, I don't think people think it is. However, people do it all the time, because it is true that people would lose weight if they ate less.

My hypothesis: when giving advice, people tend to talk about ideal behavior rather than realistic consequences.

More evidence: when I give someone advice for how to cope with a behavior problem rather than fixing it, I often get pushback like "I should just fix it", which seems to be offered as an actual argument against my advice. For example, if someone habitually stopped at McDonald's on the way home from work, I might suggest driving a different way (avoiding that McDonald's), so that the McDonald's temptation doesn't kick in when they drive past it. I might get a response like "but I should just not give in to temptation". Now, that response is sometimes valid (like if the only other way to drive is significantly longer), but I think I've gotten responses like that when there's no other reason except the person wants to see themselves as a virtuous person and any plan which accounts for their un-virtue is like admitting defeat.

So, if someone believes "putting on sunscreen has a statistical tendency to make people stay out in the sun longer, which is net negative wrt skin cancer" but also believes "all else being equal, putting on sunscreen is net positive wrt skin cancer", I expect them to give advice based on the latter rather than the former, because when giving advice they tend to model the other person as virtuous enough to overcome the temptation to stay out in the sunlight longer. Anything else might even be seen as insulting to the listener.

(Unless they are the sort of person who geeks out about behavioral economics and such, in which case I expect the opposite.)

> I think your modified wording is better, and wonder whether it might be improved further by replacing "correlated" with "associated" which is still technically correct (or might be? the Australian study above seems to disagree) and sounds more like "sunscreen is bad for you".

I was really tempted to say "associated", too, but it's vague! The whole point of the example is to say something precise which is typically interpreted more loosely. Conflating correlation with causation is a pretty classic example of that, so, it seems good. "Associated" would still serve as an example of saying something that the precise person knows is true, but which a less precise person will read as implying something different. High-precision people might end up in this situation, and could still complain "I didn't say you shouldn't wear sunscreen" when misinterpreted. But it's a slightly worse example because it doesn't make the precise person sound like a precise person, so it's not overtly illustrating the thing.

↑ comment by gjm · 2021-08-17T18:33:10.094Z

I'm not sure what distinction you're making when you say someone might believe both of

- putting on sunscreen has a statistical tendency to make people stay out in the sun longer, which is net negative wrt skin cancer

- all else being equal, putting on sunscreen is net positive wrt skin cancer

If the first means only that being out in the sun longer is negative then of course it's easy to believe both of those, but then "net negative" is entirely the wrong term and no one would describe the situation by saying anything like "wearing sunscreen actually tends to increase risk of skin cancer".

If the first means that the benefit of wearing sunscreen and the harm of staying in the sun longer combine to make something net negative, then "net negative" is a good term for that and "tends to increase risk" is fine, but then I don't understand how that doesn't flatly contradict the second proposition.

What am I missing?

↑ comment by abramdemski · 2021-08-17T19:13:08.592Z

Sorry, here's another attempt to convey the distinction:

Possible belief #1 (first bullet point):

If we perform the causal intervention of getting someone to put on sunscreen, then (on average) that person will stay out in the sun longer; so much so that the overall incidence of skin cancer would be higher in a randomly selected group which we perform that intervention on, in comparison to a non-intervened group (despite any opposing beneficial effect of sunscreen itself).

I believe this is the same as the second interpretation you offer (the one which is consistent with use of the term "net").

Possible belief #2 (second bullet point):

If we perform the same causal intervention as in #1, but also hold fixed the time spent in the sun, then the average incidence of skin cancer would be reduced.

This doesn't flatly contradict the first bullet point, because it's possible sunscreen is helpful when we keep the amount of sun exposure fixed, but that the behavior changes of those with sunscreen change the overall story.

↑ comment by gjm · 2021-08-17T21:21:55.667Z

OK, yes: I agree that that is a possible distinction and that someone could believe both those things. And, duh, if I'd read what you wrote more carefully then I would have understood that that was what you meant. ("... because when giving advice they tend to model the other person as virtuous enough to overcome the temptation to stay out in the sunlight longer.") My apologies.

comment by johnswentworth · 2021-08-17T01:18:18.116Z

> So, to first approximation, the precision of a team of people can only be a little higher than the precision of its least precise member.
>
> Power, on the other hand, is not like this. The power of a group is basically the sum of the power of its members.

This suggests a prediction: in practice, precision will be a limiting factor a lot more often than power, because it's a lot easier to scale up power than precision.

Precision is a more scarce resource, and typically more valuable on the margin.

↑ comment by abramdemski · 2021-08-17T13:22:05.679Z

...Which is like predicting that humans have compute overhang, since (mental) power is compute, and (mental) precision is algorithms. Although, the hardware/software analogy additionally suggests that good algorithms are transmissible (which needn't be true from the more general hypothesis). If that's true, you'd expect it to be less of a bottleneck, since the most precise people could convey their more precise ways of thinking to the rest of the group.

comment by Chris_Leong · 2021-08-17T03:52:58.910Z

Power vs. precision seems to be related to the central divide between those who align more with post-rationality and those who align more with rationality.

↑ comment by abramdemski · 2021-08-17T13:15:16.222Z

How so? Like postrats want power?

↑ comment by Chris_Leong · 2021-08-17T13:21:58.132Z

They want to successfully operate in the world (which can roughly be translated to power) more than they want to be right (that is, have strictly correct beliefs).

↑ comment by abramdemski · 2021-08-17T17:26:24.903Z

I guess that makes sense. I was just not sure whether you meant that, vs the reverse (my model of postrats is loose enough that I could see either interpretation).

↑ comment by Richard_Kennaway · 2021-08-17T13:37:13.224Z

I don't know which postrats you have in mind here, but the obvious (rat) question is, are they succeeding? And do they want to be right about at least that question?