Noticing Frame Differences

post by Raemon · 2019-09-30T01:24:20.435Z · LW · GW · 40 comments

Previously: Keeping Beliefs Cruxy

When disagreements persist despite lengthy good-faith communication, it may not just be about factual disagreements – it could be due to people operating in entirely different frames — different ways of seeing, thinking and/or communicating.

If you can’t notice when this is happening, or you don’t have the skills to navigate it, you may waste a lot of time.

Examples of Broad Frames

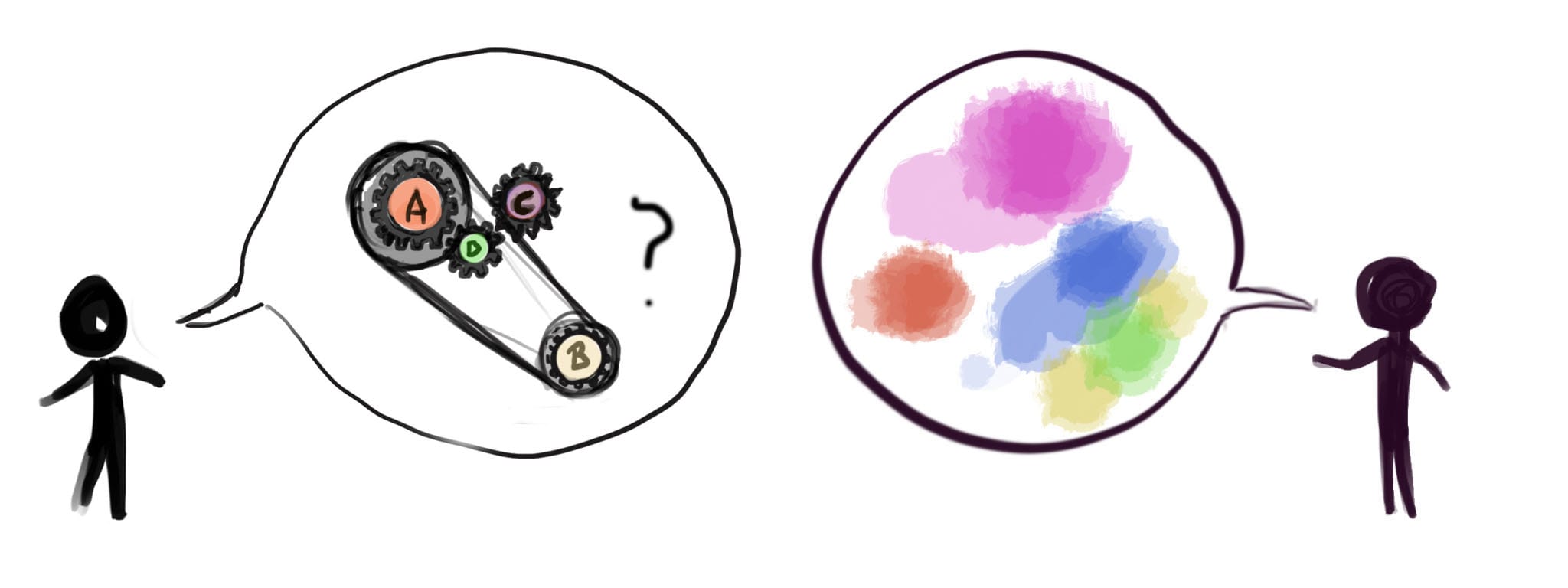

Gears-oriented Frames

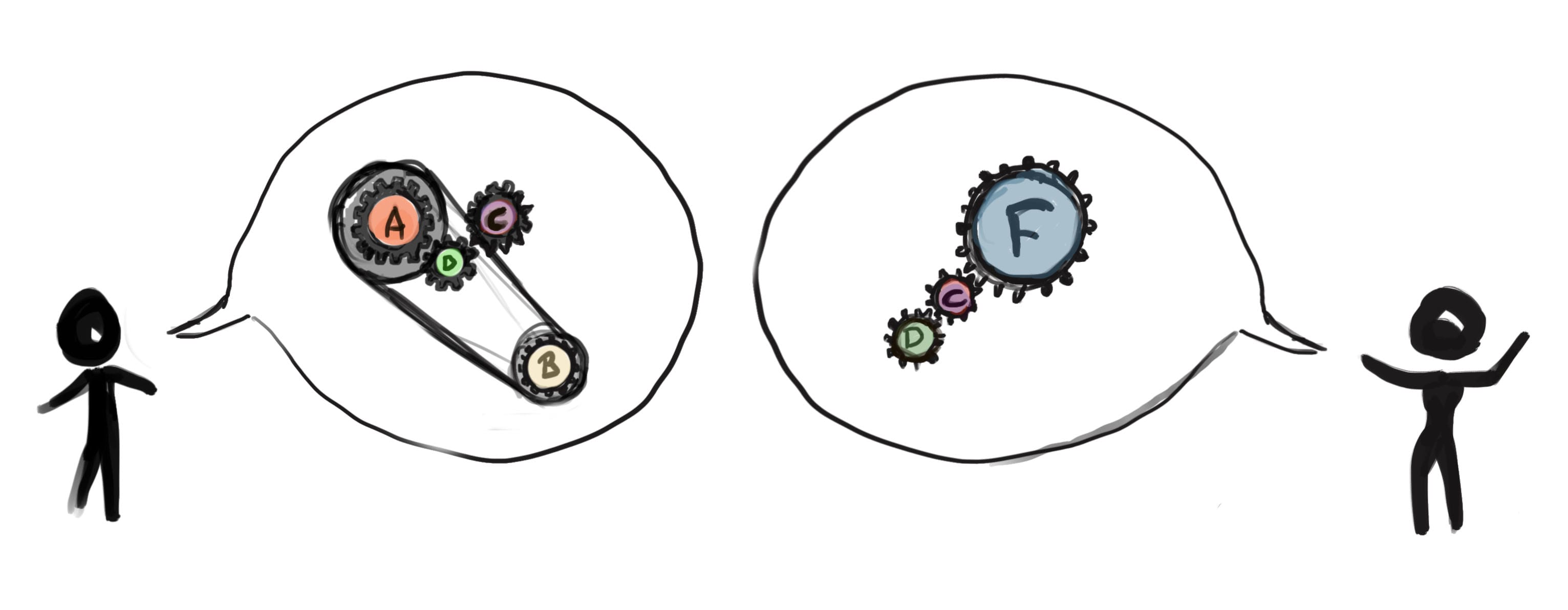

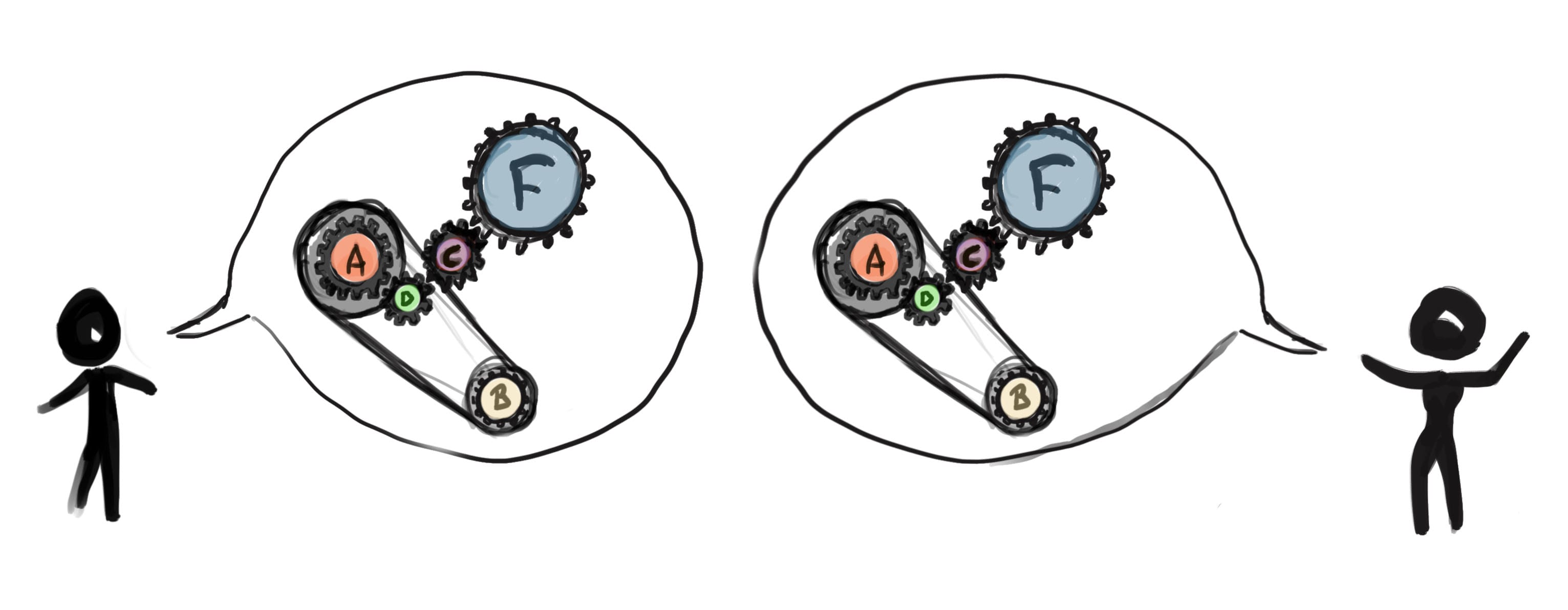

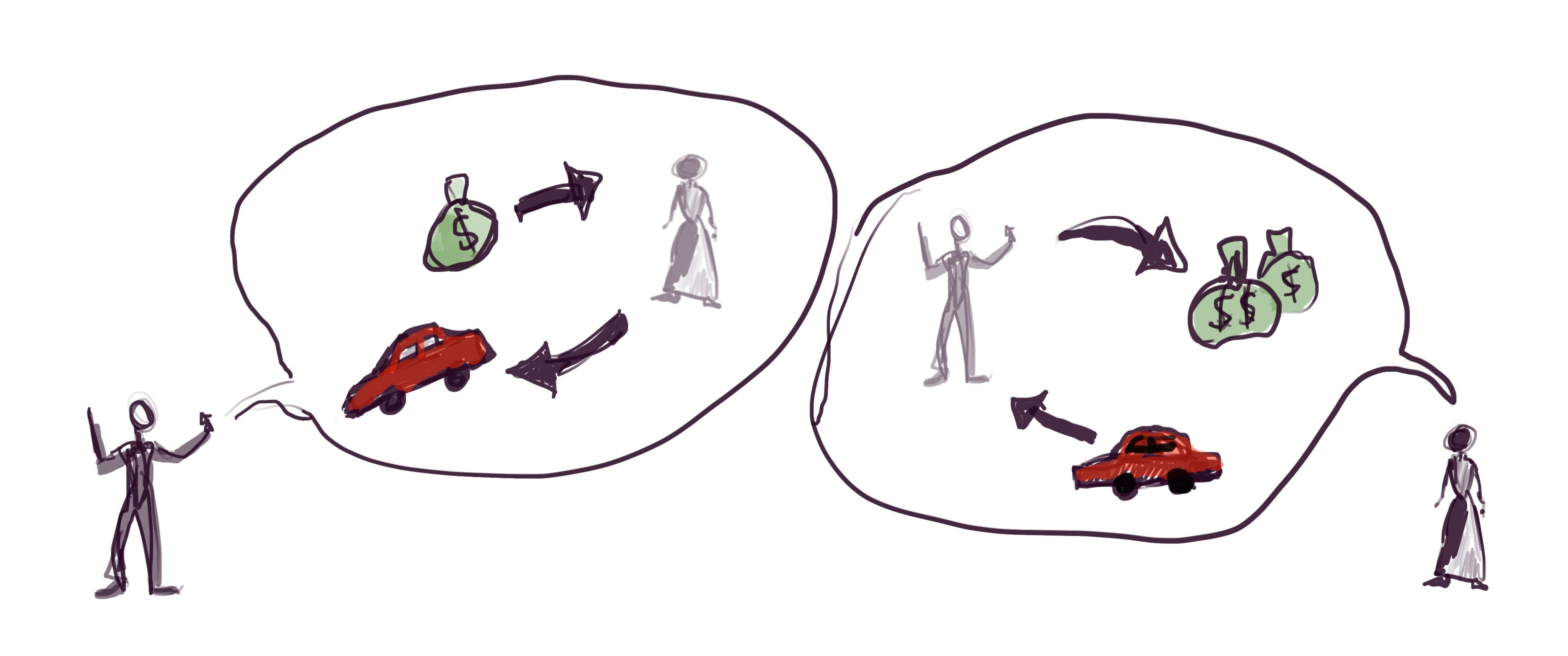

Bob and Alice’s conversation is about cause and effect. Neither of them is planning to take direct actions based on their conversation; they’re each just interested in understanding a particular domain better.

Bob has a model of the domain that includes gears A, B, C and D. Alice has a model that includes gears C, D and F. They’re able to exchange information, their information is compatible, and they each end up with a shared model of how something works.

There are other ways this could have gone. Ben Pace covered some of them in a sketch of good communication:

- Maybe they discover their models don’t fit, and one of them is wrong.

- Maybe combining their models results in a surprising, counterintuitive outcome that takes them a while to accept.

- Maybe they fail to integrate their models, because they were working at different levels of abstraction and didn’t realize it.

Sometimes they might fall into subtler traps.

Maybe the thing Alice is calling “Gear C” is actually different from Bob’s “Gear C”. It turns out that they were using the same words to mean different things, and even though they’d both read blogposts warning them about that, they didn’t notice.

So Bob tries to slot Alice’s gear F into his gear C and it doesn’t fit. If he doesn’t already have reason to trust Alice’s epistemics, he may conclude Alice is crazy (rather than realizing they are referring to subtly different concepts).

This may cause confusion and distrust.

But, the point of this blogpost is that Alice and Bob have it easy.

They’re actually trying to have the same conversation. They’re both trying to exchange explicit models of cause-and-effect, and come away with a clearer understanding of the world through a reductionist lens.

There are many other frames for a conversation though.

Feelings-Oriented Frames

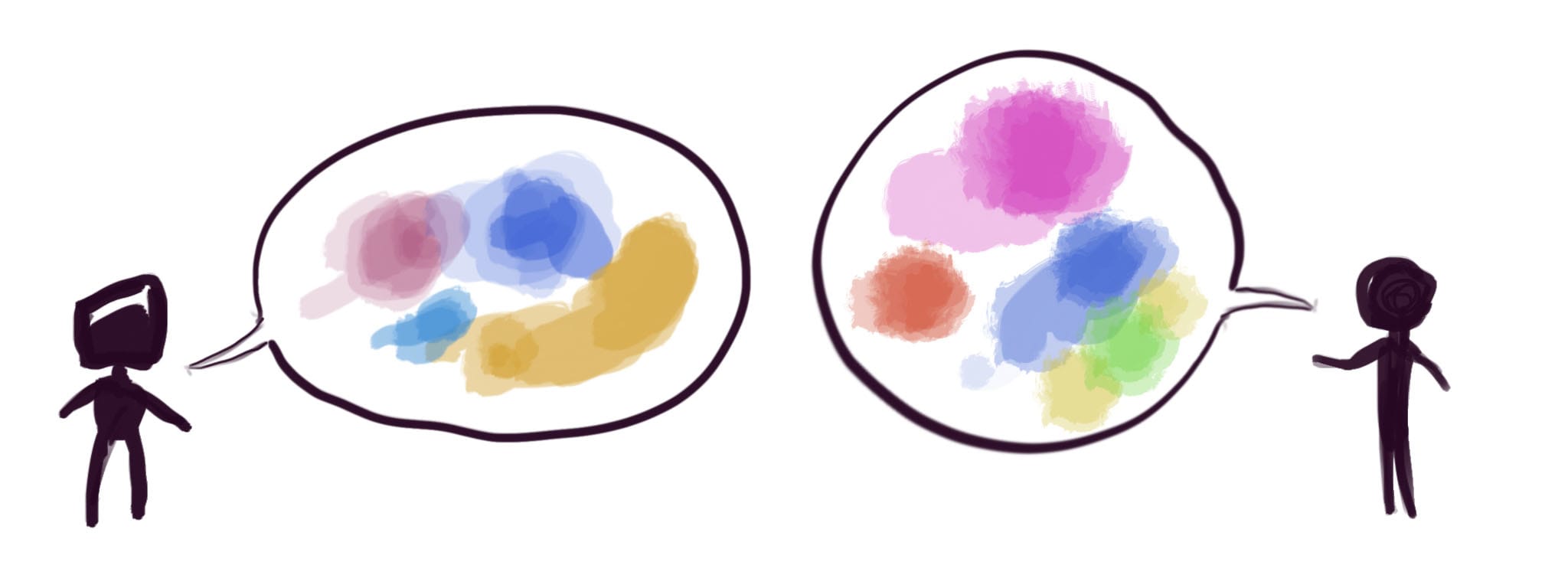

Clark and Dwight are exploring how they feel and relate to each other.

The focus of the conversation might be navigating their particular relationship, or helping Clark understand why he’s been feeling frustrated lately.

When the Language of Feelings justifies itself to the Language of Gears, it might say things like: “Feelings are important information, even if it’s fuzzy and hard to pin down or build explicit models out of. If you don’t have a way to listen and make sense of that information, your model of the world is going to be impoverished. This involves sometimes looking at things through lenses other than what you can explicitly verbalize.”

I think this is true, and important. The people who do their thinking through a gear-centric frame should be paying attention to feelings-centric frames for this reason. (And meanwhile, feelings themselves totally have gears that can be understood through a mechanistic framework)

But for many people that’s not actually the point when looking through a feelings-centric frame. And not understanding this may lead to further disconnect if a Gearsy person and a Feelingsy person are trying to talk.

“Yeah feelings are information, but, also, like, man, you’re a human being with all kinds of fascinating emotions that are an important part of who you are. This is super interesting! And there’s a way of making sense of it that’s necessarily experiential rather than about explicit, communicable knowledge.”

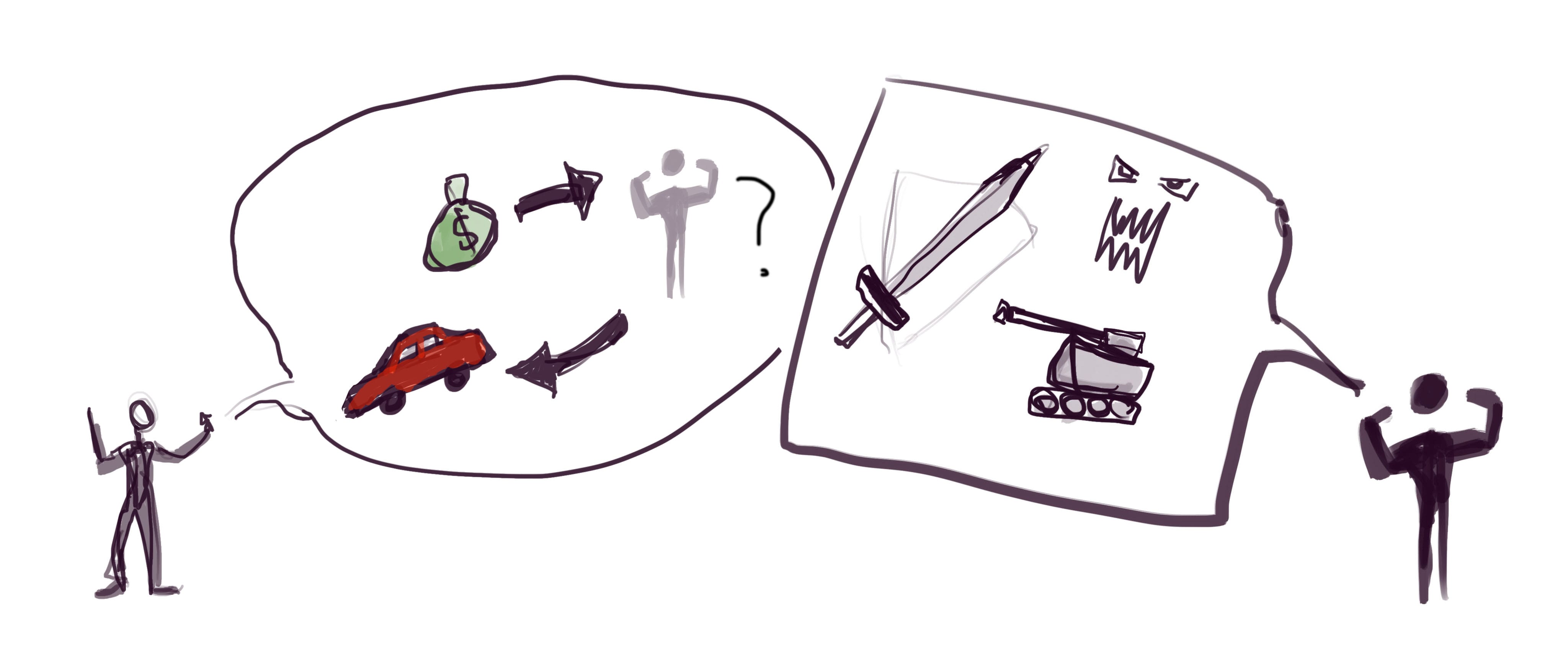

Frames of Power and Negotiation

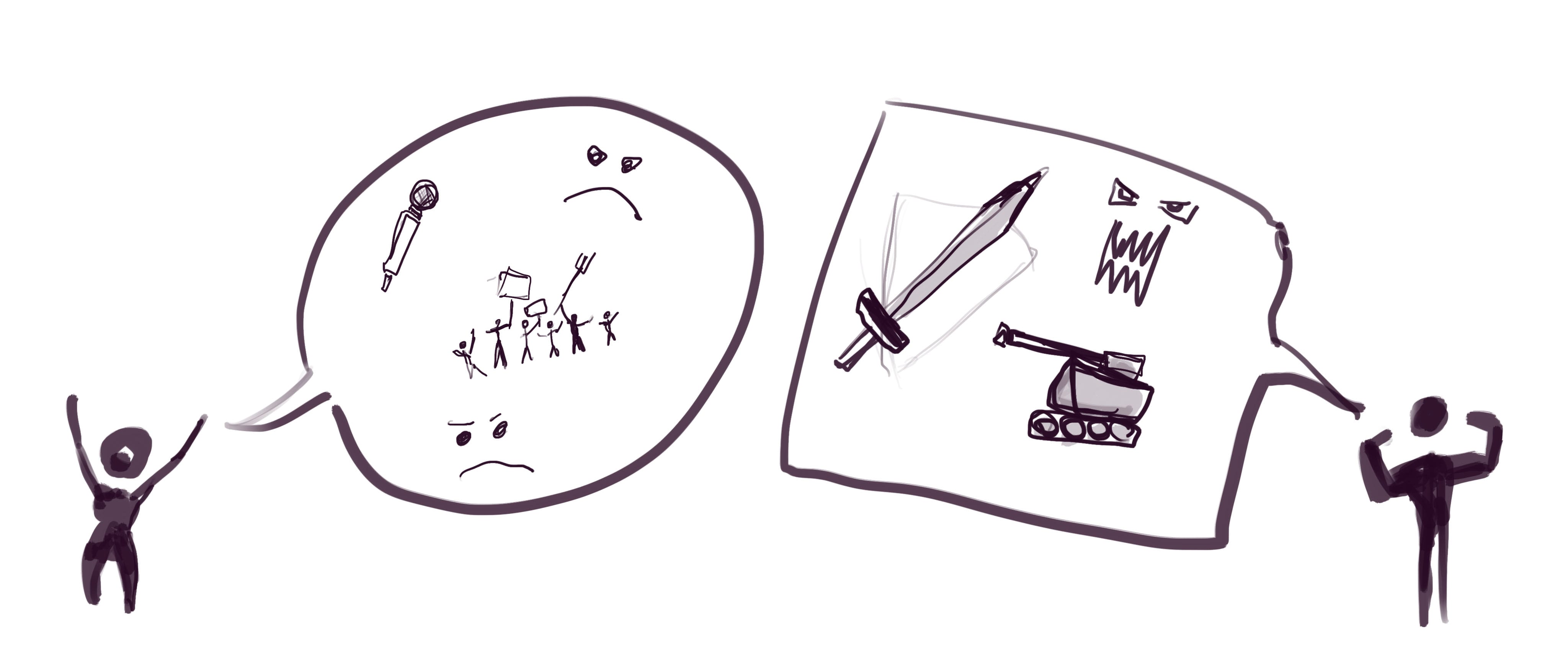

Dominance and Threat

Erica is Frank’s boss. They’re discussing whether the project Frank has been leading should continue, or whether it should stop and all the people on Frank’s team be reassigned.

Frank argues there’s a bunch of reasons his project is important to the company (i.e. it provides financial value). He also argues that it’s good for morale, and that cancelling the project would make his team feel alienated and disrespected.

Erica argues back that there are other projects that are more financially valuable, and that his team’s feelings aren’t important to the company.

It so happens that Frank had been up for a promotion soon, and that would put him (going forward) on more even footing with Erica, rather than her being his superior.

It’s not (necessarily) about the facts, or feelings.

If Alice and Bob wandered by, they might notice Erica or Frank seeming to make somewhat basic reasoning mistakes about how much money the project would make or why it was valuable. Naively, Alice might point out that they seem to be engaging in motivated reasoning.

If Clark or Dwight wandered by, they might notice that Erica doesn’t seem to really be engaging with Frank’s worries about team morale. Naively, Clark might say something like “Hey, you don’t seem to really be paying attention to what Frank’s team is experiencing, and this is probably relevant to actually having the company be successful.”

But the conversation is not about sharing models, and it’s not about understanding feelings. It’s not even necessarily about “what’s best for the company.”

Their conversation is a negotiation. For Erica and Frank, most of what’s at stake are their own financial interests, and their social status within the company.

The discussion is a chess board. Financial models, worker morale, and explicit verbal arguments are more like game pieces than anything to be taken at face value.

This might be fully transparent to both Erica and Frank (such that neither even considers the other deceptive). Or, they might both earnestly believe what they’re saying – but nonetheless, if you try to interpret the conversation as a practical decision about what’s best for the company, you’ll come away confused.

The Language of Trade

George and Hannah are negotiating a trade.

Like Erica and Frank, this is ultimately a conversation about what George and Hannah want.

A potential difference is that Erica and Frank might think of their situation as zero-sum, and therefore most of the resolution has more to do with figuring out “who would win in a political fight?”, and then having the counterfactual loser back down.

Whereas George/Hannah might be actively looking for positive sum trades, and in the event that they can’t find one, they just go about their lives without getting in each other’s way.

(Erica and Frank might also look for opportunities to trade, but doing so honestly might first require them to establish the degree to which their desires are mutually incompatible and who would win a dominance contest. Then, having established their respective positions, they might speak plainly about what they have to offer each other)

Noticing obvious frame differences

So the first skill here is noticing when you and the other person have wildly different expectations about what sort of conversation you’re having.

If George is looking for a trade and Frank is looking for a fight, George might find himself suddenly bruised in ways he wasn’t prepared for. And/or, Frank might have randomly destroyed resources when there’d been an opportunity for positive sum interaction.

Or: If Dwight says “I’m feeling so frustrated at work. My boss is constantly belittling me”, and then Bob leaps in with an explanation of why his boss is doing that and maybe trying to fix it…

Well, this one is at least a stereotypical relationship failure mode you’ve probably heard of before (where Dwight might just want validation).

Untangling Emotions, Beliefs and Goals

A more interesting example of Gears-and-Feelings might be something like:

Alice and Dwight are talking about what career options Dwight should consider. (Dwight is currently an artist, not making much money, and has decided they want to try something else)

Alice says “Have you considered becoming a programmer? I hear they make a lot of money and you can get started with a 3 month bootcamp.”

Dwight says “Gah, don’t talk to me about programming.”

It turns out that Dwight’s dad always pushed him to learn programming, in a fairly authoritarian way. Now Dwight feels a bunch of ughiness around programming, with a mixture of “You’re not the boss of me! I’mma be an artist instead!”

In this situation, perhaps the best option might be to say: “Okay, seems like programming isn’t a good fit for Dwight,” and move on.

But it might also be that programming is actually a good option for Dwight to consider… it’s just that the conversation can’t proceed in the straightforward cost/benefit analysis frame that Alice was exploring.

For Dwight to meaningfully update on whether programming is good for him, he may need to untangle his emotions. He might need to make peace with some longstanding issues with his father, or learn to detach them from the “should I be a programmer” question.

It might be that the most useful thing Alice can do is give him the space to work through that on his own.

If Dwight trusts Alice to shift into a feelings-oriented framework (or a framework that at least includes feeling), Alice might be able to directly help him with the process. She could ask him questions that help him articulate his subtle felt senses about why programming feels like a bad option.

It may also be that this prerequisite trust doesn't exist, or that Dwight just doesn't want to have this conversation, in which case it's probably just best to move on to another topic.

Subtle differences between frames

This gets much more complicated when you observe that a) there’s lots of slight variations on frames, and b) many people and conversations involve a mixture of frames.

It’s not that hard to notice that one person is in a feelings-centric frame while another person is in a gears-centric frame. But things can actually get even more confusing if two people share a broad frame (and so think they should be speaking the same language), but actually they’re communicating in two different subframes.

Example differences between gears-frames

Consider variations of Alice and Bob – both focused on causal models – who are coming from these different vantage points:

Goal-oriented vs Curiosity-driven conversation

Alice is trying to solve a specific problem (say, get a particular car engine fixed), and Bob thinks they’re just, like, having a freewheeling conversation about car engines and how neat they are (and if their curiosity took them in a different direction they might shift the conversation towards something that had nothing to do with car engines).

Debate vs Doublecrux

Alice is trying to present arguments for her side, and expects Bob to refute those arguments or present different arguments. The burden of presenting a good case is on Bob.

Whereas Bob thinks they’re trying to mutually converge on true beliefs (which might mean adopting totally new positions, and might involve each person focusing on how to change their own mind rather than their partner’s).

Specific ontologies

If one person is, say, really into economics, then they might naturally frame everything in terms of transactions. Someone else might be really into programming and see everything as abstracted functions that call each other.

They might keep phrasing things in terms that fit their preferred ontology, and have a hard time parsing statements from a different ontology.

Example differences between feelings-frames

“Mutual Connection” vs “Turn Based Sharing”

Clark might be trying to share feelings for the sake of building connection (sharing back and forth, getting into a flow, getting resonance).

Whereas Dwight might think the point is more for each of them to fully share their own experience, while the other one listens and takes up as little space as possible.

“I Am My Feelings” vs “My Feelings are Objects”

Clark might strongly identify with his feelings (in a sort of Romantic framework). Dwight might care a lot about understanding his feelings but see them as temporary objects in his experience (sort of Buddhist).

Concrete example: The FOOM Debate

One of my original motivations for this post was the Yudkowsky/Hanson Foom Debate, where much ink was spilled but AFAICT neither Yudkowsky nor Hanson changed their mind much.

I recently re-read through some portions of it. The debate seemed to feature several of the “differences within gears-orientation” listed above:

Specific ontologies: Hanson is steeped in economics and sees it as the obvious lens to look at AI, evolution and other major historical forces. Yudkowsky instead sees things through the lens of optimization, and how to develop a causal understanding of what recursive optimization means and where/whether we’ve seen it historically.

Goal vs Curiosity: I have an overall sense that Yudkowsky is more action oriented – he’s specifically setting out to figure out the most important things to do to influence the far future. Whereas Hanson mostly seems to see his job as “be a professional economist, who looks at various situations through an economic lens and sees if that leads to interesting insights.”

Discussion format: Throughout the discussion, Hanson and Yudkowsky are articulating their points using very different styles. On my recent read-through, I was impressed with the degree to which, and the manner in which, they discussed this explicitly:

Eliezer notes:

I think we ran into this same clash of styles last time (i.e., back at Oxford). I try to go through things systematically, locate any possible points of disagreement, resolve them, and continue. You seem to want to jump directly to the disagreement and then work backward to find the differing premises. I worry that this puts things in a more disagreeable state of mind, as it were—conducive to feed-backward reasoning (rationalization) instead of feed-forward reasoning.

It’s probably also worth bearing in mind that these kinds of metadiscussions are important, since this is something of a trailblazing case here. And that if we really want to set up conditions where we can’t agree to disagree, that might imply setting up things in a different fashion than the usual Internet debates.

Hanson responds:

When I attend a talk, I don’t immediately jump on anything a speaker says that sounds questionable. I wait until they actually make a main point of their talk, and then I only jump on points that seem to matter for that main point. Since most things people say actually don’t matter for their main point, I find this to be a very useful strategy. I will be very surprised indeed if everything you’ve said mattered regarding our main point of disagreement.

I found it interesting that I find both these points quite important – I've run into each failure mode before. I'm unsure how to navigate between this rock and hard place.

My main goal with this essay was to establish frame-differences as an important thing to look out for, and to describe the concept from enough different angles to (hopefully) give you a general sense of what to look for, rather than a single failure mode.

What to do once you notice a frame-difference depends a lot on context, and unfortunately I'm often unsure what the best approach is. The next few posts will approach "what has sometimes worked for me", and (perhaps more sadly) "what hasn't."

40 comments

Comments sorted by top scores.

comment by Vanessa Kosoy (vanessa-kosoy) · 2019-09-30T11:42:21.301Z · LW(p) · GW(p)

I really liked this post, but I can't help doing a nerdy nitpick:

A potential difference is that Erica and Frank might think of their situation as zero-sum, and therefore most of the resolution has more to do with figuring out “who would win in a political fight?”, and then having the counterfactual loser back down.

The Erica/Frank game is not zero-sum. The entire point is that engaging in an actual fight would be lower sum than negotiating a solution "peacefully". From the perspective of game theory, this is a different type of game with very different properties.

Replies from: Raemon↑ comment by Raemon · 2019-09-30T19:54:06.822Z · LW(p) · GW(p)

The conception I had in mind was: Only either Erica or Frank can be higher pecking-order-status* within the company (zero sum). They can fight about that in a way that ends up harming both their reputations (negative sum).

There are third options, such as working together as an alliance that gains them both pecking-order-status, at the expense of other managers (locally positive sum, still globally zero sum). They could also work together to grow the company (i.e. add a new department) which opens up whole new domains in which people can wield decision making power, make more money, and generally grow the pie of status/money/power in a way that is positive sum (even with the status frame).

*my sense is that pecking-order-status is zero sum, prestige status is not zero sum. But I don't have a very rigorous model here.

They might have some kind of ontological shift where they come to believe that the game they're playing doesn't make sense, or doesn't have to be as zero sum as they believed. (They shift from pecking order to prestige status orientation. They might both come to believe in the mission of their organization such that that matters more than status. They might start caring about money more than status, and while money is limited it's more concrete to understand how to grow the pie)

But the intent of the story was that they were at least implicitly seeing things through a zero-sum status frame, whether or not that was the best frame.

Replies from: vanessa-kosoy↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2019-10-01T09:37:34.439Z · LW(p) · GW(p)

I understood what you meant. My point is that the phrase "zero-sum" has a very precise meaning in game theory, and your example doesn't fit that meaning. "Zero-or-negative-sum" is very much not the same as "zero-sum". In particular, you called this frame "dominance and threat", but in true zero-sum games there are no threats: if you have a way to hurt your opponent, you just use it.

Replies from: Raemon↑ comment by Raemon · 2019-10-01T13:20:22.829Z · LW(p) · GW(p)

Ah, okay. Does simply changing the phrasing to "zero or negative sum" fix the issue?

Replies from: cousin_it↑ comment by cousin_it · 2019-10-01T13:54:33.048Z · LW(p) · GW(p)

I think Vanessa is right. You're looking for a term to describe games where threats or cooperation are possible. The term for such games is non-zero-sum.

There are two kinds of games: zero-sum (or fixed-sum), where the sum of payoffs to players is always the same regardless of what they do. And non-zero-sum (or variable-sum), where the sum can vary based on what the players do. In the first kind of game, threats or cooperation don't exist, because anything that helps one player automatically hurts the other by the same amount. In the second kind, threats and cooperation are possible, e.g. there can be a button that nukes both players, which represents a threat and an opportunity for cooperation.

Calling a game "zero or negative sum" is just confusing the issue. You can give everyone a ton of money unconditionally, making the game "positive sum", and the strategic picture of the game won't change at all. The strategic feature you're interested in isn't the sign, but the variability of the sum, which is known as "non-zero-sum".
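To make the fixed-sum versus variable-sum distinction concrete, here is a minimal Python sketch. It assumes a two-player game written as a table from action pairs to payoff pairs; the helper `is_fixed_sum` and both example games are hypothetical illustrations made up for this sketch, not taken from any textbook.

```python
from typing import Dict, Tuple

# A two-player game: maps (row_action, col_action) -> (row_payoff, col_payoff).
Game = Dict[Tuple[str, str], Tuple[float, float]]


def is_fixed_sum(game: Game, tol: float = 1e-9) -> bool:
    """Return True if the payoffs add up to the same total in every outcome."""
    totals = [a + b for a, b in game.values()]
    return max(totals) - min(totals) <= tol


def give_everyone(game: Game, bonus: float) -> Game:
    """Hand both players the same unconditional bonus in every outcome."""
    return {k: (a + bonus, b + bonus) for k, (a, b) in game.items()}


# Matching pennies: whatever one player wins, the other loses.
matching_pennies: Game = {
    ("heads", "heads"): (1, -1),
    ("heads", "tails"): (-1, 1),
    ("tails", "heads"): (-1, 1),
    ("tails", "tails"): (1, -1),
}

# Hypothetical "button" game: pressing hurts both players, so the total
# payoff depends on what the players do (room for threats and cooperation).
button_game: Game = {
    ("wait", "wait"): (1, 1),
    ("wait", "press"): (-10, -10),
    ("press", "wait"): (-10, -10),
    ("press", "press"): (-10, -10),
}

print(is_fixed_sum(matching_pennies))                       # True
print(is_fixed_sum(button_game))                            # False
# An unconditional bonus changes the sign of the sum, not its variability.
print(is_fixed_sum(give_everyone(matching_pennies, 1000)))  # True
print(is_fixed_sum(give_everyone(button_game, 1000)))       # False
```

The classification depends only on whether the total varies across outcomes, which is why the bonus leaves both answers unchanged.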

If you're thinking about strategic behavior, an LWish folk knowledge of prisoner's dilemmas and such is really not much to go on. Going through a textbook of game theory and solving exercises would be literally the best time investment. My favorite one is Ken Binmore's "Fun and Games", it's on my desk now. An updated version, "Playing for Real", can be downloaded for free.

Replies from: Raemon↑ comment by Raemon · 2019-10-01T20:02:20.928Z · LW(p) · GW(p)

Hmm. So it sounds like I'd passively-absorbed some wrong-terminology/concepts. What I'm currently unclear on is whether the wrong-terminology I absorbed was conceptually false or just "not what game theorists mean by the term."

A remaining confusion (feel free to say "just read the book" rather than explaining this. I'll check out Playing for Real when I get some time)

Why there can't be threats or cooperation in a game that is zero-sum, but with more than 2 players. (i.e. my conception is that pecking order status is zero sum, or at least many people think it is, and the thing at stake in the OP was Erica/Frank's conception of their relative status. If they destroy each other's reputations, two other people in the company would rise above them. If they cooperate and both gain, this necessarily comes at the expense of other players. Why doesn't this leave room for threats and cooperation?)

Replies from: vanessa-kosoy↑ comment by Vanessa Kosoy (vanessa-kosoy) · 2019-10-01T20:52:29.983Z · LW(p) · GW(p)

You're right, if there are more than 2 players, there can be threats and cooperation. However, a lot of the time when game theorists talk about zero-sum games, they talk specifically about two-player zero-sum games (because these games have special properties, e.g. the minimax theorem).

Replies from: Raemon↑ comment by Raemon · 2019-10-01T20:53:59.909Z · LW(p) · GW(p)

Okay, well if the intent is as described above (game is zero sum, more than 2 players), what changes to the text would you recommend? (I suppose the "and therefore..." is probably the most wrong part?)

Replies from: Raemon↑ comment by Raemon · 2019-10-02T21:48:49.465Z · LW(p) · GW(p)

(thinking a bit more)

I notice some confusion around past LW discussion, which has often used the phrase "positive sum". (examples including Beyond the Reach of God and Winning is For Losers). If "positive sum games" isn't really a thing I'd have expected to run into pushback about that at some point. (this seems consistent with "LW Folk Game Theory isn't necessarily real game theory", but if the distinction is important might be good to flag it other places it's come up)

The thing I'm actually trying to contrast here is "the sort of strategic landscape, and orientation, where the thing to do is to fight over who wins social points, vs the sort of strategic landscape that encourages building something together." (where "fighting over who gets social points" can still involve cooperation, but then it's "allies at war" style cooperation that is dividing up spoils, rather than creating spoils)

Still interested in concrete suggestions on how to change the wording.

Replies from: rohinmshah, cousin_it, tomcatfish, Sniffnoy↑ comment by Rohin Shah (rohinmshah) · 2019-10-03T17:35:04.272Z · LW(p) · GW(p)

LW Folk Game Theory is in fact not real game theory. The key difference is that LW Folk Game Theory tends to assume that positive utility corresponds to "I would choose this over nothing" while negative utility corresponds to "I would choose nothing over this", and 0 utility is the indifference point.

Real Game Theory does not make such an assumption. In real game theory, you take actions that maximize your (expected) utility. Importantly, if you just add a constant to your utility function (for every possible action / outcome), then the maximizing action is not going to change -- there's no concept of "0 is the indifference point". So, if there are two outcomes $o_1$ and $o_2$ that can be achieved, and no others, then the utility function $U(o_1) = a$, $U(o_2) = b$ is identical to $U'(o_1) = a + c$, $U'(o_2) = b + c$ for any constant $c$. In LW Folk Game Theory, "doing nothing" is usually an action and is assigned 0 utility by convention, which prevents this from happening.

If "positive sum games" isn't really a thing I'd have expected to run into pushback about that at some point.

Consider a two player game where for any outcome $o$, $U_1(o) + U_2(o) = c$ for some constant $c > 0$. Sure sounds like a positive-sum game, right? Well, by the argument above, I can replace $U_1$ with $U_1' = U_1 - c$ and the game remains exactly the same. And now we have $U_1'(o) + U_2(o) = 0$, that is, $U_1'(o) = -U_2(o)$ for every observation $o$, i.e. we're in a zero-sum game.

As cousin_it said, really they shouldn't be called zero-sum games, they should be called fixed-sum or constant-sum games. Two player constant-sum games are perfectly competitive, and as a result there are no threats: anything that hurts the other player helps you in exactly the same amount, and so you do it.
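The constant-shift argument can be checked numerically. The following is a minimal Python sketch under stated assumptions: the payoff table `game`, the action names, and the helpers `shift_row_payoffs` and `row_best_responses` are all hypothetical, chosen only so that payoffs total 10 in every outcome.

```python
from typing import Dict, List, Tuple

# A two-player game: maps (row_action, col_action) -> (row_payoff, col_payoff).
Game = Dict[Tuple[str, str], Tuple[float, float]]

ROW_ACTIONS: List[str] = ["a", "b"]
COL_ACTIONS: List[str] = ["x", "y"]

# Hypothetical constant-sum game: the two payoffs always total 10.
game: Game = {
    ("a", "x"): (7, 3),
    ("a", "y"): (2, 8),
    ("b", "x"): (4, 6),
    ("b", "y"): (9, 1),
}


def shift_row_payoffs(g: Game, c: float) -> Game:
    """Subtract the constant c from the row player's payoff in every outcome."""
    return {k: (u1 - c, u2) for k, (u1, u2) in g.items()}


def row_best_responses(g: Game) -> Dict[str, str]:
    """For each column action, the row action that maximizes the row payoff."""
    return {
        col: max(ROW_ACTIONS, key=lambda row: g[(row, col)][0])
        for col in COL_ACTIONS
    }


shifted = shift_row_payoffs(game, 10)

# The row player's best responses are unchanged by the shift.
print(row_best_responses(game) == row_best_responses(shifted))  # True
# After the shift, every outcome sums to zero.
print(all(u1 + u2 == 0 for u1, u2 in shifted.values()))          # True
```

This only compares pure-strategy best responses, which is enough to illustrate the point; the same invariance holds for expected utilities, since a constant shift moves every expectation by the same amount.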

(As you note, if there are more than 2 players, you can get things like threats and collaboration, e.g. the weaker two players collaborate to overthrow the stronger one.)

Re: expecting pushback, I generally don't expect LW terminology to agree particularly well with academia. The goals are different, and the terminology reflects this. LW wants to be able to compare everything to "nothing happened", so there's a convention that nothing happens = 0 utility. Real game theory doesn't want to make that comparison, it prefers to have elegance and fewer assumptions.

LW "positive-sum games" means "both players are better off than if they did nothing", or at least "one of the players is better off by an amount greater than the amount the other player is worse off". Similarly for "negative-sum games". This is fundamentally about comparing to "nothing happens". Real game theory doesn't care, it is all about action selection; and many games don't have a "nothing happens" option. (See e.g. prisoner's dilemma, where you must cooperate or defect, you can't choose to leave the game.)

The thing I'm actually trying to contrast here is "the sort of strategic landscape, and orientation, where the thing to do is to fight over who wins social points, vs the sort of strategic landscape that encourages building something together."

I usually call this competitive vs. collaborative, and games / strategies can be on a spectrum between competitive and collaborative. The maximally competitive games are two player zero sum games. The maximally collaborative games are common payoff games (where all players have the same utility function). Other games fall in between.

(where "fighting over who gets social points" can still involve cooperation, but then it's "allies at war" style cooperation that is dividing up spoils, rather than creating spoils)

Here it seems like there is both a collaborative aspect (maximizing the amount of spoils that can be shared between the two) and a competitive aspect (getting the largest fraction of the available spoils).

↑ comment by cousin_it · 2019-10-04T09:29:08.525Z · LW(p) · GW(p)

Seconding everything that Rohin said.

More generally, if you want to talk in an informed way about any science topic that's covered on LW (game theory, probability theory, computational complexity, mathematical logic, quantum mechanics, evolutionary biology, economics...) and you haven't read some conventional teaching materials and done at least a few conventional exercises, there's a high chance you'll be kidding yourself and others. Eliezer gives an impression of getting away with it, but a) he does read stuff and solve stuff, b) cutting corners has burned him a few times.

↑ comment by Alex Vermillion (tomcatfish) · 2022-01-13T19:16:01.636Z · LW(p) · GW(p)

Pages 4-5 of my copy of The Strategy of Conflict define two terms:

- Pure Conflict: In which the goals of the players are opposed completely (as in Eliezer's "The True Prisoner's Dilemma")

- Bargaining: In which the goals of the players are somehow aligned so that making trades is better for everyone

Schelling goes on to argue (again, just on page 5) that most "Pure Conflicts" are actually not, and that people can do better by bargaining instead. Then, he creates a spectrum from Conflict games to Bargaining games, setting the stage for the framework the book is written from.

[edit-in-under-5-minutes: Note that, even in the Eliezer article I posted above, we can see that super typical conflicts STILL benefit from bargaining. Some people informally make the distinction between "dividing up a pie between 2 people and working together to make more pies", and you clearly can see how you can "make more pies" in a PD.]

I'm pretty unhappy with the subthread talking about how wrong LessWrong Folk Game Theory is and how Game Theory doesn't use these topics. One of the big base-level Game Theory books takes the first few pages to write about the term you wanted, and I feel everyone could have looked around more before writing off your question as ignorant.

Replies from: tomcatfish↑ comment by Alex Vermillion (tomcatfish) · 2022-01-18T01:06:38.316Z · LW(p) · GW(p)

Schelling actually uses the term "zero sum game" repeatedly in his essay "Toward a Theory of Interdependent Decision", even explicitly equating it to "pure conflict". This essay starts on page 83 of my copy of the book.

I only realized this after my comment while flipping through, so I was going to leave it off, but it's been driving me mad for a few days since it significantly strengthens my above argument and explains why I find the derision in the replies so annoying.

↑ comment by Sniffnoy · 2019-10-19T17:47:49.078Z · LW(p) · GW(p)

Thirding what the others said, but I wanted to also add that rather than actual game theory, what you may be looking for here may instead be the anthropological notion of limited good?

comment by TurnTrout · 2020-12-12T00:57:43.055Z · LW(p) · GW(p)

While I'd been aware of the 'frame differences' concept before, this post made me aware of the fact that frame differences exist. Over the last year, I've had several debates / disagreements which felt something like

me: here's a totally convincing argument that (dogs are better than cats).

I think to myself: this is it. that was well-articulated. they're about to agree with me.

them: that claim is totally true, but I'm not at all convinced, because (thing I agree with but which seems irrelevant)

And on and on. I imagine their end feels similarly.

Instead of feeling bewildered that this smart person I respect is being crazy, I realize I might just be inhabiting a different frame.

comment by Raemon · 2019-09-30T05:39:45.128Z · LW(p) · GW(p)

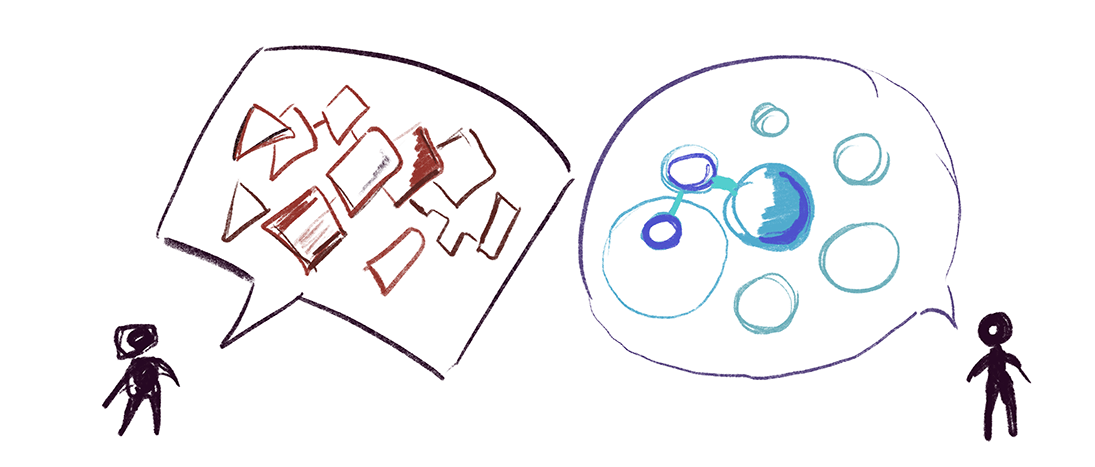

If you already roughly know what a frame is, I think most of the value of this post is being able to point at the diagrams to quickly get a sense of some different types of frames you might be dealing with. (Originally this post was a collection of pictures I drew on a whiteboard while talking about the nuances of how they fit together).

To that end, here's all the pictures right next to each other for easier gesturing at, either by my future self or anyone else who finds that useful:

The Language of Gears

The Language of Feelings

The Language of Dominance

The Language of Trade

Two Random Ontologies

comment by zulupineapple · 2019-10-04T17:57:09.356Z · LW(p) · GW(p)

In the examples, sometimes the problem is people having different goals for the discussion, sometimes it is having different beliefs about what kinds of discussions work, and sometimes it might be about almost object-level beliefs. If "frame" refers to all of that, then it's way too broad and not a useful concept. If your goal is to enumerate and classify the different goals and different beliefs people can have regarding discussions, that's great, but possibly too broad to make any progress.

My own frustration with this topic is lack of real data. Apart from "FOOM Debate", the conversations in your post are all fake. To continue your analogy in another comment, this is like doing zoology by only ever drawing cartoons of animals, without ever actually collecting or analyzing specimens. Good zoologists would collect many real discussions, annotate them, classify them, debate about those classifications, etc. They may also tamper with ongoing discussions. You may be doing some of that privately, but doing it publicly would be better. Unfortunately there seem to be norms against that.

comment by Bendini (bendini) · 2019-09-30T01:57:48.534Z · LW(p) · GW(p)

I'm interested to find out what worked for you, but I suspect that the root cause of failure in most cases is lacking enough motivation to converge. It takes two to tango, and without a shared purpose that feels more important than losing face, there isn't enough incentive to overcome epistemic complacency.

That being said, better software and social norms for arguing could significantly reduce the motivation threshold.

comment by hamnox · 2021-01-07T06:15:03.003Z · LW(p) · GW(p)

Oh man, I loved this post. Very vivid mental model for subtle inferential gaps and cross-purposes.

It's surprising it's been so long since I thought about it! Surely if it's such a strong and well-communicated mental model, I would have started using it. So why did I not?

My guess: the vast majority of conversation frames are still *not the frame which contains talking about frames*. I started recognizing when I wanted to have a timeout and clarify the particular flavor of conversation desired before continuing, but it felt like I broke focus or rapport anytime I had to ask.

If the mentioned subframes carve reality neatly at its joints, I wouldn't know it. I could have brute force updated on them, going through each frame to consider what experiences I should anticipate if someone is using it, and figuring out what cheap tests I can use to distinguish between them.

I didn't.

(The right call, in hindsight. I need vastly more social training data: explore rather than exploit... Could have picked a better year to realize this.)

This post succeeded at giving me "a general sense of what to look for", rather than specific failure modes. Just as it was going for. It just leaves me with a lingering sadness that it didn't also establish common knowledge for how to go about having that meta conversation u_u

↑ comment by Raemon · 2021-01-07T06:37:23.623Z · LW(p) · GW(p)

Well the good news is if it gets into the book maybe this time there'll be common knowledge. :P

I do think there's an upfront cost of getting your social circle familiar with frame-shifting, even when people are willing and enthusiastic about it, unfortunately.

It does seem like there should probably be a post that focuses explicitly on "how to initiate the frame-meta-conversation if your partner isn't aware of it yet."

comment by Slider · 2019-09-30T14:57:34.962Z · LW(p) · GW(p)

I feel like "gears" discussion is not the same type of discussion as the others. I think "technical discussion" would be a better replacement, one that makes the discussion styles all the same kind of type. The example about trying programming doesn't talk about modelling differences, so I fail to see how a gears framework is being used.

In general "gears" is not common language but LW specific, and I think what is meant by it here is so up in the air that it's not particularly communicative.

comment by johnswentworth · 2020-12-13T19:05:53.175Z · LW(p) · GW(p)

Nominating mainly for the diagrams, which stuck with me more than the specifics of the post.

comment by jimrandomh · 2019-10-05T02:30:23.798Z · LW(p) · GW(p)

Curated. I think the meta-frame (frame of looking at frames) is key to figuring out a lot of important outstanding questions. In particular, some ideas are hard to parse or generate in some frames and easy to parse or generate in others. Most people have frame-blind-spots, and lose access to some ideas that way. There also seem to be groups within the rationality community that are feeling alienated, because too many of their conversations suffer from frame-mismatch problems.

comment by Ruby · 2019-09-30T16:48:54.570Z · LW(p) · GW(p)

Copied from a Facebook conversation about this post which started with a claim that Raemon's usage of "frame" was too narrow.

[1. I like this version of the post having seen some drafts, seems pretty good to me. Clean, I think it conveys the idea very neatly and will be useful to build upon/reference.

2. Talking in 3rd person about Ray 'cause it's just clearer who I'm talking to.]

Pretty sure I'm the disagreeing person in question. I've been exposed to Ray's usage of "frame" for so long now that it's muddied my sense of what I would have used "frame" to mean before Ray started talking about it a lot, though I do recall my prior usage being more specific, like a within-gears mismatch rather than a between gears/feelings mismatch.

My feedback at this point would be less around what specifically frame gets used for, and more that there's still a lot of ambiguity. There's a very broad set of variables that can differ between people in conversation: perspectives/backgrounds/assumptions/goals/motives/techniques/methods/culture/terminology/ontology/etc./etc. and there are lots of similarities and differences. Based on conversation, my understanding is that Ray wants to talk about all of them.

I'd kind of like a post that tries to establish clear terminology for discussing these using both intensional/extensional [LW · GW] definitions and both positive/negative examples [LW · GW]. Also maybe how "frames" relate to other concepts in conversation space. I think the OP only has positive extensional examples that gesture generally at the cluster of concepts but doesn't help me get a sense of the boundaries of what Ray has in mind.

I expect the above post I'm suggesting to be kind of boring/dry (at least as I would naively write it) but if it was written, it would enable deeper conversations and better insights into the whole topic of conversation. Conceivably it's boring to have to learn how velocity/force/energy/power/momentum all mean very similar but still different things, but modern physics is powerful because it can talk so precisely about things.

I think posts/sequences I would like would be 1) how to notice/handle/respond to mismatches between different gears-frames, or just how to navigate gears/feelings clashes. Specific and concrete. (This is basically Bacon's inductivism [? · GW], spirit of Virtue of Precision, Specificity Sequence. I guess just a lot of things nudging my intuitions towards specificity rather than talking about all the instances of a thing.)

I think that's my revised current feedback.

ETA: Taking another look, there is an intensional definition in the post, "seeing, thinking, and communicating", which is a helpful and good start; I'd probably like those fleshed out more and connected to the examples.

↑ comment by Raemon · 2019-09-30T20:10:07.684Z · LW(p) · GW(p)

ETA: Taking another look, there is an intensional definition in the post, "seeing, thinking, and communicating", which is a helpful and good start; I'd probably like those fleshed out more and connected to the examples.

I do have thoughts about how to write a post like that, but I wanted to check whether other people would find it helpful.

Part of my concern here is that I'm just too early in the process to have "correct" beliefs about frames that are more specific than what I've written here.

The goal of this post (and this sequence) is not to fully flesh out how to resolve one particular set of "way people can be talking past each other." The goal is to give people a general, flexible sense of how to detect when you are talking past each other or failing to resolve disagreement, and then be able to get some kind of traction on resolving it, no matter what the specific problem turned out to be.

And I think trying to define "frame" more rigorously would be more likely to give people a new false sense that they understand what's going on (a new "frame" to be locked into without noticing that you're in a frame), than to do anything helpful.

(This is more of a prediction about me, and my current understanding of frames and disagreements, than it is a prediction about the general world. Someone who's spent years resolving gnarly disagreements might be able to write the post you want)

I've been exposed to Ray's usage of "frame" for so long now that it's muddied my sense of what I would have used "frame" to mean before Ray started talking about it a lot, though I do recall my prior usage being more specific, like a within-gears mismatch rather than a between gears/feelings mismatch.

This is actually a good example of the sort of failure I'm worried about if I defined frame too cleanly. If you focus on "within gears mismatch" I predict you'll do worse at resolving disagreements that aren't about that.

I'm worried that even specifying "different ways of seeing, thinking and/or communicating" is going to turn out to be too specific and that I'll have missed something. (Although I did think for awhile and all the examples I could think of seemed to fit. Maybe because "ways of thinking" is sufficiently broad to cover a lot of cases)

That all said..

The next post is going to get at least a bit more specific with the metaphor, at least to help give some hooks as to the different ways seeing/thinking/communicating can be different. It won't be fully fleshed out but will at least hopefully give people some mental hooks.

↑ comment by Ruby · 2019-09-30T20:33:24.101Z · LW(p) · GW(p)

I think we've got some significant disagreements about "methodology of science" here. I think that much of this approach feels off to me, or at least concerning. I'll try to write a bit more about this later (edit: I find myself tempted to write several things now . . .).

And I think trying to define "frame" more rigorously would be more likely to give people a new false sense that they understand what's going on (a new "frame" to be locked into without noticing that you're in a frame), than to do anything helpful.

E.g. defining "frame" more rigorously would let people know more specifically what you're trying to talk about and help both you and them make more specific/testable predictions and more quickly notice where the model fails in practice, etc. More precise definitions should lead to sticking your neck out more properly. Not that I'm an expert at doing this, but this feels like a better way to proceed even at the risk that people get anchored on bad models (I imagine a way to avoid this is to create, abandon, and iterate on lots of models, many of which are mistaken and get abandoned, so people learn the habit.)

This is actually a good example of the sort of failure I'm worried about if I defined frame too cleanly. If you focus on "within gears mismatch" I predict you'll do worse at resolving disagreements that aren't about that.

So it's only my sense of what that word refers to when people say it that has been shifted, not my models, expectations, or predictions of the world. That seems fine in this context (I dislike eroding meanings of words with precise meanings via imprecise usage, but "frame" isn't in that class). The second sentence here doesn't seem related to the first. To your concern though, I say "maybe?", but I have a greater concern that if you don't focus on how to address a single specific type of frame clash well enough, you won't learn to address any of them very well.

↑ comment by Raemon · 2019-09-30T22:27:42.410Z · LW(p) · GW(p)

I think we've got some significant disagreements about "methodology of science" here.

...

E.g. defining "frame" more rigorously would let people know more specifically what you're trying to talk about and help both you and them make more specific/testable predictions and more quickly notice where the model fails in practice, etc.

I think I'm mostly just not trying to do science at this point.

Or rather: this is still at the "roughly point in the direction of the thing I'm even trying to understand, and get help gathering more data" point, rather than the "propose a hypothesis and make concrete predictions" point.

My models here are coming from maybe a total of... 10 datapoints, each of which had a lot of variables, and I don't know which ones were the important ones. *I* don't necessarily know what I mean by frame at this point, so being more specific just gives people an inaccurate representation of my own understanding.

Like, if you're early humans and you're trying to first figure out zoology but you're at the point where even if you're like "I'm studying animals" and they're like "what are animals" and... sure you can give them the best guess that will most likely be wrong (a la defining humans as featherless bipeds), but it's more useful to just point at a few animals and say "things that are kinda like this."

It might be useful to give "featherless biped" type definitions to begin the process of refining it into something real. (And I've done that, in the form of "ways of seeing/thinking/communicating"). But, it seems quite important to notice that featherless-biped-type-definitions are almost certainly wrong and should not be taken seriously except as an intellectual exercise.

I have a greater concern that if you don't focus on how to address a single specific type of frame clash well enough, you won't learn to address any of them very well.

I don't think we have the luxury of doing this – each disagreement I've found was fairly unique, and required learning a new set of things to notice and pay attention to, and stretched my conception of what it meant to disagree productively. I've partially resolved maybe 5 deep disagreements (but it's still possible that many of my actions there were a waste of time or made things worse), and then not obviously even made any progress on another 5.

↑ comment by Raemon · 2019-09-30T22:30:48.420Z · LW(p) · GW(p)

FWIW, here's a rough sense of where the sequence is going:

- There are very different ways of seeing the world.

- It's a generally important life skill to understand when other people are seeing the world differently.

- It is (probably) also an important life skill to be able to see the world in a few different ways. (not necessarily any particular "few different" ways – there's an experiential shift that comes from having had to learn to see a couple different ways that I think is really necessary for resolving disagreement and conflict and coexisting). Put another way, being able to "hold your frame as object."

- This seems really important for humanity generally (for cosmopolitan coexistence)

- This seems differently important for the rationality community.

- I think of LessWrong as "about the gears frame", and that's fine (and quite good). But the natural ways of seeing the gears frame tend to leave people sort of stuck or blind. LessWrong is about building a single-not-compartmentalized probabilistic model of the universe, but this needs to include the parts of the universe that gears-oriented-people tend not to notice as readily.

- It's important to resolve the question "how do we have high epistemic standards about things that aren't in the gears frame, or that can't be made explicit enough to share between multiple people with different gear-frames."

↑ comment by Slider · 2019-10-01T01:27:10.880Z · LW(p) · GW(p)

I don't understand the question in the last point. I am being intentionally stupid and simple: what reason do you have to guess/believe that epistemic standards would be harder to apply to non-gear frames?

↑ comment by Raemon · 2019-10-01T06:15:08.174Z · LW(p) · GW(p)

The motivating example there is "how to have high epistemic standards around introspection, since introspection isn't publicly verifiable, but is also fairly important to practical rationality." I think this is at least somewhat hard, and separate from being hard, it's a domain that doesn't have agreed upon rules.

↑ comment by Raemon · 2019-10-01T06:32:17.115Z · LW(p) · GW(p)

(I realize it might not be clear how that sentence followed from the previous point, since there's nothing intrinsically non-gearsy about introspection. The issue is something like "at least some of the people exploring introspection techniques are also coming at it from fairly different frames, which can't easily all communicate with each other. From the outside, it's hard to tell the difference between 'a person is wrong about facts' and 'a person's frame is foreign.'")

↑ comment by Slider · 2019-10-02T12:49:56.803Z · LW(p) · GW(p)

So the connection is "The straightforward way to increase epistemological competence is to talk about beliefs in detail. In introspection it is hard to apply this method because details can't be effectively shared to get an understanding". It seems to me it is not about gear-frames being special, but that frames have preconditions to get them to work, and an area that allows/permits a lot of frames makes it hard to hit any frame's prerequisites.

↑ comment by Ruby · 2019-10-01T00:29:46.353Z · LW(p) · GW(p)

I think I'm mostly just not trying to do science at this point.

"Methodology of science" was in quotes because I meant more generally "process for figuring things out." I think we do have different leanings there, but they won't be quick to sort out, mostly because (at least in my case) they're more leanings and untested instincts. So mostly just flagging for now.

*I* don't necessarily know what I mean by frame at this point, so being more specific just gives people an inaccurate representation of my own understanding.

I think there are ways to be very "precise about your uncertainty" though.

I don't think we have the luxury of doing this – each disagreement I've found was fairly unique, and required learning a new set of things to notice and pay attention to, and stretched my conception of what it meant to disagree productively.

Hmm, it does seem that naturally occurring debates will be all over the place in a way that will make you want to study them all simultaneously.

comment by Raemon · 2019-09-30T01:27:26.483Z · LW(p) · GW(p)

(A few people gave feedback that this post was overly long and not as clear as it could be. I spent a month trying to split it into more concise posts that bridged the distance better. But none of them really came together and eventually I decided to post it as-is)

comment by jimrandomh · 2021-01-12T06:28:07.716Z · LW(p) · GW(p)

I continue to think this post is important, for basically the same reasons as I did when I curated it. I think for many conversations, having the affordance and vocabulary to talk about frames makes the difference between them going well and them going poorly.

comment by Matt Goldenberg (mr-hire) · 2020-12-06T20:40:29.421Z · LW(p) · GW(p)

Being able to notice differences in frames is so valuable. I've thought quite differently about disagreements since reading this post.

comment by danielechlin · 2025-03-18T20:06:45.733Z · LW(p) · GW(p)

One I've noticed is pretty well-intentioned "woke" people are more "lived experiences" oriented and well-intentioned "rationalist" people are more "strong opinions weakly held." Honestly, if your only goal is truth seeking, and admitting I'm rationalist-biased when I say this and also this is simplified, the "woke" frame is better at breadth and the "rationalist" frame is better at depth. But ohmygosh these arguments can spiral. Neither realize their meta is broken down. The rationalist thinks low-confidence opinions are humility; the woke thinks "I am open to others' lived experience outside of my own" is humility.

Experientially, yes, I've seen both "sides" be well intentioned, in reasonably good faith, both trying to act above a baseline level of rational, and a baseline level of humble along privilege concerns. IDK the solution but the general pattern should be something like reverting to "human" norms not "debate" norms, like use "I feel" statements and draw on what you genuinely have in common at point of debate. (If the answer is "nothing" then either your norms difference escalated to a real fight or you have different instrumental goals so go do something else.)

comment by Isaac King (KingSupernova) · 2021-09-12T16:41:21.450Z · LW(p) · GW(p)

making piece

should be

making peace