Predictive coding and motor control

post by Steven Byrnes (steve2152) · 2020-02-23T02:04:57.442Z

My picture of predictive coding and motor control How does this wiring get set up? Are these connections learned or innate? None 3 comments

Predictive coding is a theory of how the brain works (see the Slate Star Codex introduction). As in my earlier post, I'm very interested in the project of mapping these ideas onto the specific world-modeling algorithms of the neocortex. My larger project here is trying to understand the algorithms underlying human emotions and motivations, a topic I think is very important for AGI safety, as we may someday need to know how to reliably motivate human-like AGIs to do what we want them to do! (It's also relevant for understanding our own values, for mental health, etc.) But motor control is an easier, better-studied case to warm up on, and it shares the key feature of involving communication between the neocortex and the older parts of the brain.

So, let's talk about predictive coding and motor control.

The typical predictive coding description of motor control[1] goes something like this:

Stereotypical predictive coding description: When you predict really strongly that your toes are about to wiggle, they actually wiggle, in order to minimize prediction error!

Or here's Karl Friston's perspective (as described by Andy Clark): "...Proprioceptive predictions directly elicit motor actions. This means that motor commands have been replaced by (or as I would rather say, implemented by) proprioceptive predictions."

As in my earlier post, while I don't exactly disagree with the stereotypical predictive coding description, I prefer to describe what's going on in a more mechanistic and algorithmic way. So I offer:

My picture of predictive coding and motor control

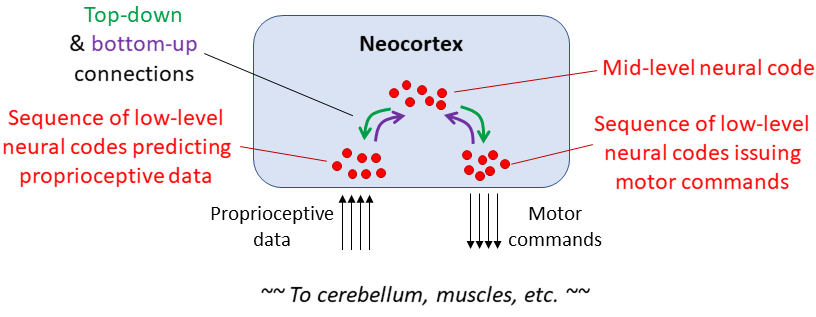

In this cartoon example,[2] there is a currently-active neural code at the second-to-lowest layer of the cortical hierarchy, corresponding to toe-wiggling. Now in general, as you go up the cortical hierarchy, information converges across space and time.[3] So, following this pattern, this mid-level neural code talks to multiple lower-level regions of the cortex, sending top-down signals to each that activate a time-sequence of neural patterns.

In one of these low-level regions (left), the sequence of neural patterns corresponds to a set of predictions about incoming proprioceptive data—namely, the prediction that we will feel our toe wiggling.

In another of these low-level regions (right), the sequence of patterns is sending out motor commands to wiggle our toe, in a way that makes those proprioceptive predictions come true.

Thus, a single mid-level neural code represents both the prediction that our toe will wiggle and the actual motor commands to make that happen. So, as in the classic predictive coding story, we can say that the prediction and the commands are one and the same neural code—well, maybe not at the very bottom of the cortical hierarchy, but they're the same everywhere else. At least, they're the same after the learning algorithm has been running for a while.
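To make the cartoon concrete, here is a toy Python sketch, not a model of real neurons: a single hypothetical `MidLevelCode` object fans out into two time-sequences, one of predicted toe angles (for the proprioceptive region) and one of motor commands (for the motor region). The toy body in `simulate_toe`, where each command simply adds to the toe angle, is an assumption made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

# Toy illustration only: every name and number here is hypothetical.


def simulate_toe(start: float, commands: Tuple[float, ...]) -> Tuple[float, ...]:
    """Hypothetical toy body: each motor command shifts the toe angle by that amount."""
    angles = [start]
    for command in commands:
        angles.append(angles[-1] + command)
    return tuple(angles)


@dataclass(frozen=True)
class MidLevelCode:
    """One mid-level code that sends top-down signals to two lower-level regions."""
    name: str
    predicted_toe_angles: Tuple[float, ...]  # to the proprioceptive region (left)
    motor_commands: Tuple[float, ...]        # to the motor region (right)

    def top_down(self) -> Dict[str, Tuple[float, ...]]:
        # Activating this single code activates both time-sequences at once.
        return {
            "proprioceptive_predictions": self.predicted_toe_angles,
            "motor_commands": self.motor_commands,
        }


wiggle = MidLevelCode(
    name="toe-wiggle",
    predicted_toe_angles=(0.0, 10.0, 0.0, 10.0, 0.0),
    motor_commands=(10.0, -10.0, 10.0, -10.0),
)

signals = wiggle.top_down()
felt = simulate_toe(wiggle.predicted_toe_angles[0], signals["motor_commands"])
print(felt == signals["proprioceptive_predictions"])  # True
```

Running the motor sequence through the toy body reproduces the predicted sequence, which is the sense in which one code is both prediction and command in this sketch.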

(By the way, contra Friston, I think there are also mid-level neural codes that represent the prediction that our toe will wiggle, but which are not connected to any motor commands. Such a code might, for example, represent our expectations in a situation when we are lying limp while a masseuse is wiggling our toe. In this case, the code would link to both proprioceptive inputs and skin sensation inputs, rather than both proprioceptive inputs and motor outputs.)

How does this wiring get set up?

Same way as anything else in the neocortex! This little mid-level piece of the neocortex is building up a space of generative models—each of which is simultaneously a sequence of proprioceptive predictions and a sequence of motor outputs. It proposes new candidate generative models by Hebbian learning, random search, and various other tricks. And it discards models whose predictions are repeatedly falsified. Eventually, the surviving models in this little slice of neocortex are all self-consistent: They issue predictions of toe-related proprioceptive inputs, and issue the sequence of motor commands that actually makes those predictions come true.
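The propose-and-discard loop can be caricatured in a few lines of Python. This is a sketch under made-up assumptions, not the neocortex's actual algorithm: toe angles and motor commands come from small hypothetical sets, a candidate model is a (predicted angles, motor commands) pair, plain random proposal stands in for Hebbian learning and the other tricks, and because the toy body is deterministic, a single failed test counts as falsification.

```python
import random
from typing import Set, Tuple

ANGLES = (0, 10, 20)     # hypothetical discrete toe positions
COMMANDS = (-10, 0, 10)  # hypothetical motor commands (change in toe angle)

Model = Tuple[Tuple[int, ...], Tuple[int, ...]]  # (predicted angles, motor commands)


def body(start: int, commands: Tuple[int, ...]) -> Tuple[int, ...]:
    """Hypothetical toy toe: each command shifts the angle by that amount."""
    angles = [start]
    for command in commands:
        angles.append(angles[-1] + command)
    return tuple(angles)


def propose(rng: random.Random, steps: int = 2) -> Model:
    """Random search, standing in for Hebbian learning and other proposal tricks."""
    predictions = tuple(rng.choice(ANGLES) for _ in range(steps + 1))
    commands = tuple(rng.choice(COMMANDS) for _ in range(steps))
    return predictions, commands


def learn(n_proposals: int = 5000, seed: int = 0) -> Set[Model]:
    rng = random.Random(seed)
    surviving: Set[Model] = set()
    for _ in range(n_proposals):
        predictions, commands = propose(rng)
        # Issue the motor commands from the predicted start position, then compare
        # what is "felt" with what was predicted; keep only unfalsified models.
        if body(predictions[0], commands) == predictions:
            surviving.add((predictions, commands))
    return surviving


models = learn()
print(len(models), "self-consistent models survive")
```

Every surviving pair issues commands that make its own predictions come true, and more than one survives because there are several ways to move the toe. In this toy setup exactly 17 consistent pairs exist, so with this many random proposals the set will almost certainly contain all of them.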

Note that there are many surviving (non-falsified) generative models, because there are many ways to move your toes. Thus, if there is a prediction error (the toe is not where the model says it's supposed to be), the brain's search algorithm immediately summons a different generative model, one that starts with the toe in its actual current position.
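A similarly hedged sketch of the recovery step: given a hypothetical library of already-surviving toe models (hand-written here, each self-consistent in a toy body where a command adds to the toe angle), a prediction error makes the system swap in a model whose first prediction matches where the toe actually is. The `step` function and the model names are invented for illustration.

```python
from typing import Dict, Tuple

# Hypothetical surviving models: name -> (predicted toe angles, motor commands).
# Each is self-consistent if every motor command simply adds to the toe angle.
MODELS: Dict[str, Tuple[Tuple[int, ...], Tuple[int, ...]]] = {
    "curl-from-0": ((0, 10, 20), (10, 10)),
    "curl-from-10": ((10, 20, 20), (10, 0)),
    "relax-from-20": ((20, 10, 0), (-10, -10)),
}


def step(current: str, t: int, felt_angle: int) -> Tuple[str, int]:
    """Compare the felt angle with the current model's prediction at time t.

    Returns the model to run next and the next time index within that model.
    """
    predictions, _ = MODELS[current]
    if predictions[t] == felt_angle:
        return current, t + 1  # prediction confirmed: keep going
    # Prediction error: summon a surviving model that starts where the toe really is.
    for name, (candidate_predictions, _) in MODELS.items():
        if candidate_predictions[0] == felt_angle:
            return name, 1
    raise LookupError(f"no surviving model starts at angle {felt_angle}")


print(step("curl-from-0", 1, 10))  # ('curl-from-0', 2)
print(step("curl-from-0", 1, 20))  # ('relax-from-20', 1)
```

The linear scan over models is just the simplest stand-in for "the brain's search algorithm"; nothing in the post specifies how that search is done.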

The same thing, of course, is happening higher up in the cortical hierarchy. The higher-level generative model "I am putting on my sock" invokes different low-level toe-movement models at different times, and the sock-putting-on generative models that are falsified get discarded. The surviving models are those that tell coherent stories of the sight, sound, feeling, and world-model consequences of putting on a sock, along with motor commands that make these stories come true.
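The hierarchical version can be sketched the same way, again as a toy under invented assumptions: a higher-level candidate is just a sequence of names of low-level toe models, its predictions are their predictions chained end to end, and it is discarded if the pieces don't join up or if the chained predictions disagree with a hypothetical felt trajectory. Real sock-putting-on models would also predict sights, sounds, and skin sensations; only toe angle is modeled here.

```python
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical low-level toe models: name -> predicted toe angles over time.
TOE_MODELS: Dict[str, Tuple[int, ...]] = {
    "curl": (0, 10, 20),
    "hold-curled": (20, 20),
    "relax": (20, 10, 0),
    "spread": (0, -10),
}


def expand(plan: Sequence[str]) -> Optional[Tuple[int, ...]]:
    """Chain a higher-level plan's low-level predictions into one story.

    Returns None if a piece does not start where the previous one ended.
    """
    angles = list(TOE_MODELS[plan[0]])
    for name in plan[1:]:
        piece = TOE_MODELS[name]
        if piece[0] != angles[-1]:
            return None  # incoherent story
        angles.extend(piece[1:])  # skip the shared boundary angle
    return tuple(angles)


# Hypothetical candidate "putting on my sock" models, and what the toe actually felt.
candidates: List[Tuple[str, ...]] = [
    ("curl", "hold-curled", "relax"),
    ("curl", "relax"),
    ("curl", "spread"),
]
felt = (0, 10, 20, 20, 10, 0)

surviving = [plan for plan in candidates if expand(plan) == felt]
print(surviving)  # [('curl', 'hold-curled', 'relax')]
```

Here `("curl", "spread")` fails for incoherence, while `("curl", "relax")` is coherent but falsified by the felt trajectory.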

Are these connections learned or innate?

If you've read my earlier post, you'll guess my opinion: I think the specific sequences of neural codes in the three areas shown in the cartoon (proprioceptive, motor, and their association), and their specific neural-code-level connections, are all learned, not innate. But I do think our genes speed the process along by seeding connections among the three areas, setting up the cortex with the right information streams brought together in the right places, so that their relationships can be learned quickly and effectively.

An illustrative example on the topic of learned-vs-innate is Ian Waterman,[4] who lost all proprioceptive sense at age 19 after a seemingly minor infection.[5] Remarkably, he taught himself to move (including walking, writing, shopping, etc.) by associating muscle commands with visual predictions! So if he were standing in a room that suddenly went pitch black, he would just collapse! He had to focus his attention continually on his movements. I guess that, in the absence of direct connections between the relevant parts of his neocortex, he had to instead route the information through the GNW (global neuronal workspace). But anyway, I think the fact that he could move at all is consistent with the kind of learning-based mechanism I'm imagining.

(Question: How do many mammals walk on their first day of life, if the neocortex needs to learn the neural codes and associations? Easy: I say they're not using their neocortex for that! If I understand correctly, there are innate motor control programs stored in the brainstem, midbrain, etc. The neocortex eventually learns to "press go" on these baked-in motor control programs at appropriate times, but the midbrain can also execute them based on its own parallel sensory-processing systems and associated instincts. My understanding is that humans are unusual among mammals—maybe even unique—in the extent to which our neocortex talks directly to muscles, rather than "pressing go" on the various motor programs of the evolutionarily older parts of the brain.[6])

Note: I'm still trying to figure this stuff out. If any of this seems wrong, then it probably is, so please tell me. :-)

[1] See, for example, Surfing Uncertainty, chapter 4.

[2] I'm being a bit sloppy with some implementation details here. Is it a two-layer hierarchical setup, or "lateral" (within-hierarchical-layer) connections? Where is it one neural code versus a sequence of codes? Why isn't the cerebellum shown in the figure? None of these things matter for this post. But just be warned not to take all the details here as literally exactly right.

[3] If you're familiar with Jeff Hawkins's "HTM" (hierarchical temporal memory) theories, you'll see where I'm getting this particular part. See On Intelligence (2004) for details.

[4] I first heard of Ian Waterman in the excellent book The Myth of Mirror Neurons. His remarkable story was popularized in a 1998 BBC documentary, The Man Who Lost His Body.

[5] The theory mentioned in the documentary is that it was an autoimmune issue, i.e., his immune system latched onto some feature of the invading bacteria that happened to also be present in a specific class of his own nerve cells, the kind that relays proprioceptive data.

[6] I don't know the details; this claim is stated without justification in On Intelligence, p. 69. Note that "talks directly to muscles" is an overstatement; on their way to the muscles, I think the signals pass through at least the basal ganglia, thalamus, and cerebellum. I guess I should say "less indirectly".

3 comments

comment by Gordon Seidoh Worley (gworley) · 2020-03-01T20:38:28.225Z

> Question: How do many mammals walk on their first day of life, if the neocortex needs to learn the neural codes and associations? Easy: I say they're not using their neocortex for that! If I understand correctly, there are innate motor control programs stored in the brainstem, midbrain, etc. The neocortex eventually learns to "press go" on these baked-in motor control programs at appropriate times, but the midbrain can also execute them based on its own parallel sensory-processing systems and associated instincts. My understanding is that humans are unusual among mammals—maybe even unique—in the extent to which our neocortex talks directly to muscles, rather than "pressing go" on the various motor programs of the evolutionarily-older parts of the brain.

I don't have a better story here, but this seems odd, in that we'd have to somehow explain why humans don't use baked-in motor control programs if they are there in other mammals. Not that we can't; only that by default we should expect humans to have them too. Humans also show an ability to let the neocortex and muscles "talk" directly, so this leaves the interesting question of why we think humans don't engage with stored motor control programs as well.

reply by Steven Byrnes (steve2152) · 2020-04-12T13:31:30.680Z

Well, to be clear, I do think we have subcortical motor programs, even if they're relatively less important than in other mammals. An example would be scowling when angry, or flinching away from a projectile.

Why don't we walk on the day we're born? Because neither our brain nor our body is finished being built, I suppose.

comment by lsusr · 2020-06-04T18:48:36.762Z

I am sufficiently convinced that predictive coding is a theory worth exploring. I want to understand it at a lower level, as an analog electrical computer. Are you aware of good sources that treat predictive coding with mathematical precision? Surfing Uncertainty seems to be written at too high a level for this task.