The Trolley Problem

post by lsusr · 2021-09-27T08:26:49.334Z · LW · GW · 25 comments

If you go to Harvard Law School, one of the first classes you'll take is called "Justice: What's The Right Thing To Do?" It starts with the trolley problem.

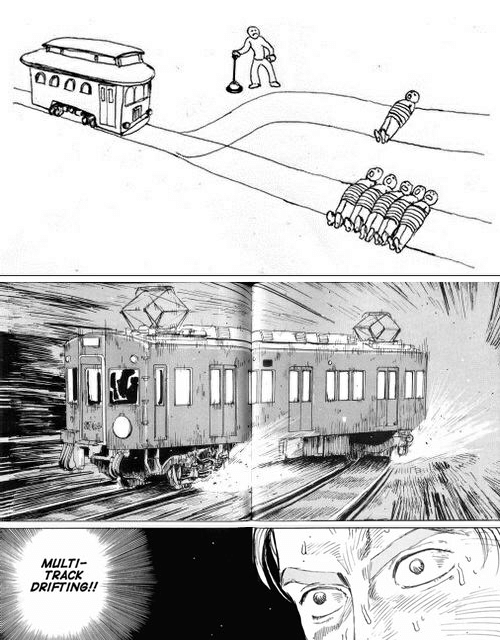

There's a trolley hurtling down the tracks with no brakes. It's on its way to hitting and killing 5 people. You can pull a lever but if you do the trolley will kill 1 person instead. Do you pull the lever?

Students are asked "Should you pull the lever?"

- Some say "Yes because utilitarianism should maximize human life."

- Some say "No because the categorical imperative forbids murder."

They're both wrong. The question is underspecified. The right answer is "It depends."

You're in Germany in 1943. A train full of political prisoners is hurtling toward its doom. You can pull a lever to save their lives but if you do the train will hit Heinrich Himmler instead. Do you pull the lever?

Heck yeah!

You're a fighter in the French Resistance in 1943. A train full of SS officers is hurtling toward its doom. You can pull a lever to save their lives but if you do it'll hit your friend who is planting a bomb on the railroad tracks. Do you pull the lever?

Of course not.

Those hypothetical situations cheat the problem. The purpose of the trolley problem is to compare one positive value against another positive value. Let's do that.

You're a general in the Russian army in 1943. A train full of Russian soldiers is hurtling toward its doom. You can pull a lever to save their lives but if you do it'll hit a Russian soldier standing on the tracks. All the soldiers are of the same rank. Do you pull the lever?

Yes, obviously. Your job is to maximize the combat effectiveness of your fighting force.

You're a doctor in 2021. Do you murder a patient and harvest his organs to save the lives of 5 other people?

No, obviously. That would be monstrous. Even if it weren't, the negative externalities would overwhelm your proximate benefit.

If the answer to an ethical question is always the same, regardless of context, then you can reason about it as a context-free abstraction. But answers to ethical questions are usually context-dependent.

If I took the class I'd ask "What year is it? Where am I? Who am I? Who's in the trolley? Who's on the tracks? Who designed the trolley? Who is responsible for the brake failure? Do I work for the trolley company? If so, what are its standard operating procedures for this situation? What would my family think? Would either decision affect my future job prospects? Is there a way for me to fix the systemic problem of trolleys crashing in thought experiments? Can I film the crash and post the video online?"

By the time I get answers to half those questions, the trolley will have crashed, thus resolving the dilemma.

25 comments

Comments sorted by top scores.

comment by Gunnar_Zarncke · 2021-09-27T09:40:49.427Z · LW(p) · GW(p)

Well, Science in a High-Dimensional World means to hold some things constant. Does this approach work with ethics too?

comment by Vladimir_Nesov · 2021-09-27T14:38:15.445Z · LW(p) · GW(p)

Looking to accurate answers as the purpose of such questions is bad methodology, that's not how you make them useful. Thought experiments, just like scientific experiments, should aid with isolating a particular consideration from other confusing things, should strive to make use only of well-understood principles to look into what's going on with one consideration under study, not settle a complex balance of everything potentially relevant.

comment by Mo Putera (Mo Nastri) · 2021-09-29T17:41:57.855Z · LW(p) · GW(p)

This feels like you're avoiding the least convenient possible world, even if that wasn't your intention.

comment by alexgieg · 2021-09-27T10:07:49.305Z · LW(p) · GW(p)

This analysis shows one advantage that virtue ethics, with its strong focus on internal states, has over utilitarianism and deontology, which focus on external reality. And it also shows aspects of the Kohlbergian analysis of the different levels of cognitive complexity possible in the moral reasoning of moral agents. Well done!

One concrete example I like to refer to is the Maori massacre of the Moriori tribe. The Moriori were radical non-violence practitioners who lived on their own island, to the point that even Gandhi would be considered too angry a person for their tastes. The Maori, in contrast, had a culture that valued war. When the Maori invaded the Moriori's island, they announced it by torturing a Moriori girl to death and waited for them to attack, expecting a worthy battle. The Moriori didn't attack; they tried to flee and submit. The Maori were so offended by having their worthy battle denied that they hunted the Moriori to extinction, and not via quick deaths, no. Via days-long torture. This is the one tale that helps me weigh my own non-violence preferences down into reasonableness, to avoid over-abstracting things.

On the last point, you reminded me of a comedian impersonating different MBTI types. When playing the INTP profile he began acting as a teacher reading a math question from the textbook to his students: "There are 40 bananas on the table. If Suzy eats 32 bananas, how many bananas...", then stops, looks up at the camera while throwing the book away, and asks "Why is Suzy eating 32 bananas? What's wrong with her!?" 😁

↑ comment by Lucas2000 · 2021-09-27T10:31:31.471Z · LW(p) · GW(p)

Doesn't your Maori massacre example disprove the validity of virtue ethics?

↑ comment by alexgieg · 2021-09-27T13:01:21.349Z · LW(p) · GW(p)

I wouldn't say it's a matter of validity, exactly, but of suitability to different circumstances.

In my own personal ethics I mix a majority of Western virtues with a few Eastern ones, filter them through my own brand of consequentialism in which I give preference to actions that preserve information to actions that destroy it, ignore deontology almost entirely, take into consideration the distribution of moral reasoning stages as well as which of the 20 natural desires may be at play, and leave utilitarian reasoning proper to solve edge cases and gray areas.

The Moriori massacre is precisely one of the references I keep in mind when balancing all of these influences into taking a concrete action.

comment by dvasya · 2021-10-25T05:19:58.923Z · LW(p) · GW(p)

Of course the right thing to do is to pull the lever. And the right time to do that is once the trolley's front wheels have passed the switch but the rear wheels haven't yet. The trolley gets derailed, saving all 6 lives.

comment by Dagon · 2021-09-30T15:39:46.215Z · LW(p) · GW(p)

In a law class, the binding question would be "Who is my client?" I believe I can make an argument for culpability or justification of any action, including flipping a coin or somehow derailing the trolley, saving all 6 but killing 20 passengers.

I don't anticipate facing the question in reality, and don't have a pre-commitment to what I'd do. Analogous decisions (where I can prioritize outcome over common rules) are not actually similar enough to generalize. In many of those, the constraint is how much my knowledge and impact prediction differs from the circumstances that evolved the rules. In cases where I'm average, I follow rules. In cases where I have special knowledge or capability, I optimize for outcome.

This is unsatisfying to many, and those who pose this question often push for a simpler answer, and get angry at me for denying their hypothetical. But that's because it's simply false that I have a rule for whether to follow rules or decide based on additional factors.

comment by NcyRocks · 2021-09-27T22:53:21.652Z · LW(p) · GW(p)

It's easy to respond to a question that doesn't contain much information with "It depends" (which is equivalent to saying "I don't know"), but you still have to make a guess. All else being the same, it's better to let 1 person die than 5. Summed over all possible worlds that fall under that description, the greatest utility comes from saving the most people. Discovering that the 1 is your friend and the 5 are SS should cause you to update your probability estimate of the situation, followed by its value in your utility function. Further finding out that the SS officers are traitors on their way to assassinate Hitler and your friend is secretly trying to stop them should cause another update. There's always some evidence that you could potentially hear that would change your understanding; refusing to decide in the absence of more evidence is a mistake. Make your best estimate and update it as you can.

The only additional complexity that ethical questions have that empirical questions don't is in your utility function. It's equally valid to say "answers to empirical questions are usually context-dependent", which I take to mean something like "I would update my answer if I saw more evidence". But you still need a prior, which is what the trolley problem is meant to draw out: what prior utility estimates do utilitarianism/deontology/virtue ethics give over the action space of pulling/not pulling the lever? Discovering and comparing these priors is useful. The Harvard students are correct in answering the question as asked. Treating the thought experiment as a practical problem in which you expect more information is missing the point.

↑ comment by lsusr · 2021-09-27T22:58:02.864Z · LW(p) · GW(p)

Welcome to the forum!

It's only in the real world that you have to make a choice instead of saying "I don't know". In a real world situation you have lots of contextual information. It is the burden of the person asking an ethical question to supply all the relevant context that would be available in a real-world situation.

↑ comment by NcyRocks · 2021-09-28T00:00:15.318Z · LW(p) · GW(p)

Thanks for the welcome!

I disagree that anyone who poses an ethical thought experiment has a burden to supply a realistic amount of context - simplified thought experiments can be useful. I'd understand your viewpoint better if you could explain why you believe they have that burden.

The trolley problem, free from any context, is sufficient to illustrate a conflict between deontology and utilitarianism, which is all that it's meant to do. It's true that it's not a realistic problem, but it makes a valid (if simple) point that would be destroyed by requiring additional context.

↑ comment by lsusr · 2021-09-28T00:08:21.431Z · LW(p) · GW(p)

It's possible for a question to be wrong. Suppose I ask "What's 1+1? Is it 4 or 5?" ("No" is not allowed.) The question assumes the answer is 4 or 5, which is wrong.

Presenting a false dichotomy makes the same mistake. In the trolley problem, the implied dichotomy is "pull the lever" or "don't pull the lever". They're both wrong. "Sometimes pull the lever" is the correct answer. If "sometimes pull the lever" isn't an option then the only options are "always pull the lever" and "always don't pull the lever". This is a false dichotomy.

A thought experiment need not provide a realistic level of context. But it bears the burden of providing necessary relevant context when that context would be available in reality.

The trolley problem does illustrate the conflict between utilitarianism and deontology. It also illustrates how underspecified questions elicit nonsense answers.

↑ comment by NcyRocks · 2021-09-28T01:03:11.179Z · LW(p) · GW(p)

Thanks for explaining - I think I understand your view better now.

I guess I just don't see the trolley problem as asking "Is it right or wrong, under all possible circumstances matching this description, to pull the lever?" I agree that would be an invalid question, as you rightly demonstrated. My interpretation is that it asks "Is it right or wrong, summed over all possible circumstances matching this description, weighted by probability, to pull the lever?" I.e. it asks for your prior, absent any context whatsoever, which is a valid question.

Under that interpretation, the correct answer of "sometimes pull the lever" gets split into "probably pull the lever" and "probably don't pull the lever", which are the same in effect as "pull" and "don't pull". The supposition is that you have a preference in most cases, not that your answer is the same in all cases.

(This is still a false dichotomy - there's also the option of "pull the lever equally as often as not", but I've never heard of anyone genuinely apathetic about the trolley problem.)

The first interpretation seems sensible enough, though, in the sense that many people who pose the trolley problem probably mean it that way. The correct response to those people is to reject the question as invalid. But I don't think most people mean it that way. Most people ask for your best guess.

Edit: On further reflection I think the usual interpretation is closer to "Is it better to follow a general policy, over all possible circumstances matching this description, to pull the lever or not?" I think this is closer to your interpretation but I don't think it should produce a different answer to mine.
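The probability-weighted reading described in this comment can be sketched as an expected-value calculation. This is only an illustration: the scenario names, probabilities, and utilities below are made-up assumptions, not figures from the discussion, and the helper names are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class Scenario(NamedTuple):
    name: str
    probability: float    # assumed prior weight of this circumstance
    value_if_pull: float  # assumed utility of pulling the lever
    value_if_wait: float  # assumed utility of not pulling


# Illustrative prior over circumstances matching the bare description.
SCENARIOS = [
    Scenario("six interchangeable strangers", 0.90, -1.0, -5.0),
    Scenario("the one is a friend, the five are enemies", 0.05, -10.0, 0.0),
    Scenario("the one is an enemy, the five are friends", 0.05, 0.0, -50.0),
]


def expected_values(scenarios: List[Scenario]) -> Tuple[float, float]:
    """Return (E[pull], E[wait]), normalizing the weights to sum to 1."""
    total = sum(s.probability for s in scenarios)
    pull = sum(s.probability * s.value_if_pull for s in scenarios) / total
    wait = sum(s.probability * s.value_if_wait for s in scenarios) / total
    return pull, wait


def best_guess(scenarios: List[Scenario]) -> str:
    """Collapse the weighted sum into a 'probably pull / don't pull' answer."""
    pull, wait = expected_values(scenarios)
    if pull > wait:
        return "probably pull"
    if pull < wait:
        return "probably don't pull"
    return "indifferent"


# With no context, the answer comes from the prior alone.
print(best_guess(SCENARIOS))

# Learning the context amounts to conditioning on one scenario.
print(best_guess([SCENARIOS[1]]))
```

Under these assumed numbers the context-free answer leans toward pulling, while conditioning on the "friend versus enemies" scenario flips it. Different assumed weights would give different answers, which is the point in dispute between the two readings.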

↑ comment by lsusr · 2021-09-28T04:08:19.871Z · LW(p) · GW(p)

> My interpretation is that it asks "Is it right or wrong, summed over all possible circumstances matching this description, weighted by probability, to pull the lever?" I.e. it asks for your prior, absent any context whatsoever, which is a valid question.

It sounds like you realize why this doesn't work. If you sum over all possibilities then the philosophical question of deontology vs utilitarianism disappears. Instead, it's a practical real-world question about actual trolleys that can be answered by examining actual trolley crash data.

> Edit: On further reflection I think the usual interpretation is closer to "Is it better to follow a general policy, over all possible circumstances matching this description, to pull the lever or not?" I think this is closer to your interpretation but I don't think it should produce a different answer to mine.

This makes more sense. I argue that a "general policy" is meaningless when circumstances dominate so hard. You argue that a general policy is still meaningful. I agree that a general policy is the right way to interpret some ethical questions…depending on the context.

> (This is still a false dichotomy - there's also the option of "pull the lever equally as often as not", but I've never heard of anyone genuinely apathetic about the trolley problem.)

I like this answer. It's delectably unsatisfying.

↑ comment by Slider · 2021-09-28T09:55:15.147Z · LW(p) · GW(p)

If I were to answer "pull if it is day and don't pull if it is night" then that would not be an "I don't know" answer but an "It depends" answer. Also if somebody were to say "pull if night and don't pull if day" we can say that they disagree with the first person and both have a decided stance even if it is not decided on the "yes-no" axis.

I guess for these purposes the significant thing is whether you can specify the conditions/delineations or whether they would be ad hoc random afterthoughts. There is also something to be said about whether the delineation would be available. For example, a counterargument like "What if you don't know whether it is day or not?" can be countered with "Phase of day is usually easily and readily available". You don't need to rely on the hypothetical provider to condition your answer on such a thing, but that conditioning might still be highly questionable (I for one think that day/night can't seriously be a relevant factor and would think somebody trying to pass that off as a serious answer would be ridiculous).

comment by Joe Collman (Joe_Collman) · 2021-09-27T16:27:10.279Z · LW(p) · GW(p)

I don't think you can substitute "should you" with "do you" without hugely altering the problem.

(though it's true that "should you" can still include a load of instrumental selfishness)

They're both interesting questions, but different ones.

[EDIT if the Harvard justice class is also treating these as equivalent (are they?), then that sucks]

Another point on context: you can't assume readers interpret "should" as meaning the same thing when you provide different contextual information. E.g. "Should you (as a Russian general) x" may get a different answer from "Should you (as a person) x", even if that person is a Russian general, since the first will often be interpreted in terms of [duties of a Russian general] because the reader thinks that's what the question is asking about (perhaps subconsciously).

The more context you throw in, the more careful you have to be that you're still asking essentially the same question.

comment by Lucas2000 · 2021-09-27T10:29:59.260Z · LW(p) · GW(p)

"No, obviously. That would be monstrous."

This feels like begging the question. Why is it obvious that a doctor shouldn't kill one patient to save five? It seems like it is obvious because we have an overwhelmingly strong intuition that it is wrong. Given that there are many people who have an overwhelmingly strong intuition that being gay is wrong, I'm unsure if it's a good idea to just rely on that intuition, and leave it there.

↑ comment by Radu Floricica (radu-floricica-1) · 2021-09-27T12:03:24.492Z · LW(p) · GW(p)

Intuition is probably a proxy for all the negative externalities. You can't quickly think through all of them, but there's a feeling that harvesting organs from a (soon to be healthy) patient is a huge breach of trust, which is Wrong. Why is breach of trust wrong? Intellectual answer goes one way, and intuition goes the evolved way, which is "thou shalt dislike people who breach trust".

↑ comment by Slider · 2021-09-28T10:08:43.425Z · LW(p) · GW(p)

I don't know whether it is because I have examined that case more closely but I think it can be thought through.

If you had doctors who were known to organ harvest then anybody submitting to be their patient would risk getting harvested. This is not merely possible but kind of justifiable. It is so justifiable that even if a harvesting would save more lives more immediately, the effect of people not seeking medical treatment would easily displace this benefit.

On the contrary, if all the patients are super glued in to the decision making it becomes more natural to do the harvesting. Imagine a tight family where everybody would give their lives for each other. The resistance to one of them heroing for the others melts away (intuition claim) quite effectively. So as a doctor you can't mandate a harvesting but you can present the harvesting option to the relevant people. One of the possible hurdles is thinking of a sane person knowingly consenting to be harvested (people tend to avoid death where possible) but where it makes sense it mainly happens via that route.

I guess there is still the tricky situation where asking for consent is not possible but the doctor guesstimates that the relevant parties would be on board.

↑ comment by Ericf · 2021-09-27T16:08:30.482Z · LW(p) · GW(p)

My answer is that in the trolley problem the people are interchangeable, and 5 > 1. In the doctor problem, you have 5 sick and 1 healthy, and they have different value.

What the doctor should do is pick (by any means) one of the sick patients, extract their 4 healthy organs and save the other 4. The end result for the unlucky one is the same (death), but the other 4 do better.

↑ comment by Boris Kashirin (boris-kashirin) · 2021-09-27T16:16:38.526Z · LW(p) · GW(p)

In this scenario we are giving the doctor an awful lot of power. We need to have unreasonably high trust in him to make such a choice practical. Handling other people's lives often has funny effects on one's morality.

There is another problem: In reality, shit happens. But our intuition says something like "Shit happens to other people. I have control over my life, as long as I do everything right no shit will ever happen to me". See? In my mind there is no way I will ever need an organ transplant, so such an arrangement is strictly detrimental, while in reality chances are I will be saved by it. Again there are good reasons for such intuition, as it promotes responsibility for one's own actions. If we are to make health "common property" who will be responsible for it?

I suspect it is easier to solve artificial organs than human nature.

comment by Tofly · 2021-09-27T23:52:08.759Z · LW(p) · GW(p)

What year is it?

Current year.

Where am I?

Near a trolley track.

Who am I?

Yourself.

Who's in the trolley?

You don't know.

Who's on the tracks?

You don't know.

Who designed the trolley?

You don't know.

Who is responsible for the brake failure?

You don't know.

Do I work for the trolley company?

Assume that you're the only person who can pull the lever in time, and it wouldn't be difficult or costly for you to do so. If your answer still depends on whether or not you work for the trolley company, you are different from most people, and should explain both cases explicitly.

If so, what are its standard operating procedures for this situation?

Either there are none, or you're actually not in the situation above, but creating those procedures right now.

What would my family think?

I don't know, maybe you have an idea.

Would either decision affect my future job prospects?

No.

Is there a way for me to fix the systemic problem of trolleys crashing in thought experiments?

Maybe, but not before the trolley crashes.

Can I film the crash and post the video online?

Yes.

↑ comment by lsusr · 2021-09-28T04:11:48.945Z · LW(p) · GW(p)

> Assume that you're the only person who can pull the lever in time, and it wouldn't be difficult or costly for you to do so. If your answer still depends on whether or not you work for the trolley company, you are different from most (WEIRD) people, and should explain both cases explicitly.

If I don't work for the trolley company then I shouldn't touch the equipment because uninvited non-specialists messing with heavy machinery is dangerous (some special circumstances excepted) and disruptive. It makes the world less safe. Since I'm not pulling the lever, I have my hands free to film the trolley crash. This is my most likely path to systemic change. It causes the least harm and protects the most people.

If I do work for the trolley company then I should pull the lever because my obligation to protect the many passengers in the trolley doing exactly what they're supposed to outweighs my obligation to protect a single idiot playing on the tracks. (He's probably not a worker who's supposed to be there because if he was I would know who he is and you specified that I don't.)

↑ comment by Tofly · 2021-09-28T21:38:12.862Z · LW(p) · GW(p)

In most formulations, the five people are on the track ahead, not in the trolley.

I took a look at the course you mentioned:

It looks like I got some of the answers wrong.

Where am I?

In the trolley. You, personally, are not in immediate danger.

Who am I?

A trolley driver.

Who's in the trolley?

You are. No one in the trolley is in danger.

Who's on the tracks?

Five workers ahead, one to the right.

Do I work for the trolley company?

Yes.

The problem was not as poorly specified as you implied it to be.