Cheat sheet of AI X-risk

post by momom2 (amaury-lorin) · 2023-06-29T04:28:32.292Z · LW · GW · 1 commentsContents

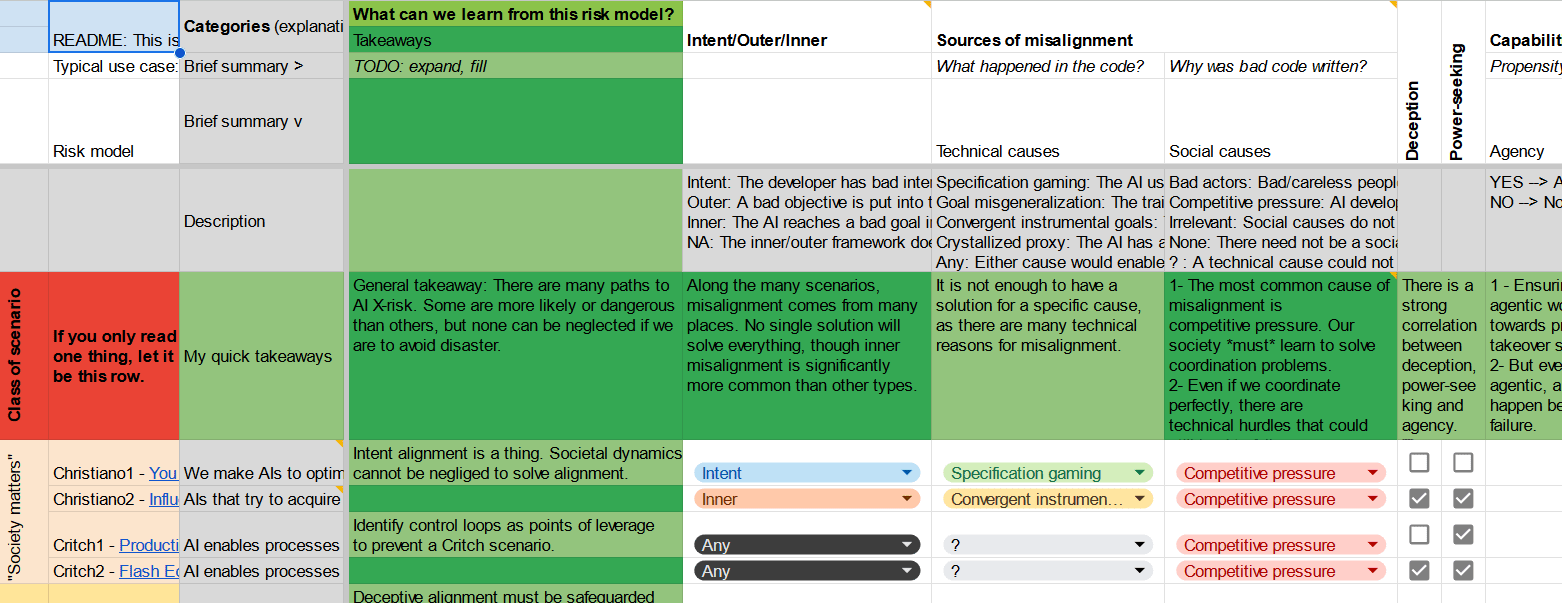

Introduction Content Disclaimer Takeaways General takeaway Outer/inner/intent Technical causes of misalignment Social causes of misalignment Deception & power-seeking Capabilities Agency Power Cognition Existential risk Individual takeaways Class of scenario How to use the cheat sheet Disclaimer Intended use Reading/writing conventions How to edit the cheat sheet Sources Literature reviews Additional ideas for relevant variables None 1 comment

This document was made as part of my internship at EffiSciences. Thanks to everyone who helped review it, in particular Charbel-Raphaël Segerie, Léo Dana, Jonathan Claybrough and Florent Berthet!

Introduction

Clarifying AI X-risk [LW · GW] is a summary of a literature review of AI risk models by DeepMind that (among other things) categorizes the threat models it studies along two dimensions: the technical cause of misalignment and the path leading to X-risk.

I think this classification is inadequate because the technical causes of misalignment that are considered in some of the presented risk models should be differently nuanced.

So, I made a document that would go more in-depth and accurately represent what (I believe) the authors had in mind. Hopefully, it can help researchers quickly remind themselves of the characteristics of the risk model they are studying.

Here it is, for anyone who wants to use it!

Anyone can suggest edits to the cheat sheet. I hope that this can foster healthy discussion about controversial or less detailed risk models.

If you just want to take a look, go right ahead! If you would like to contribute, the penultimate section explains the intended use of the document, the conventions used and how to suggest edits.

There is also a more detailed version if you are interested, but that one is not nearly as well formatted (i.e. ugly as duck), nor is it up to date. It is where I try to express more nuanced analysis than allowed by a dropdown list.

Content

Disclaimer

Some information in this cheat sheet is controversial; for example, the inner/outer framework is still debated. In addition, some information might be incorrect. I did my best, but I do not agree with all the sources I have read (notably, Deepmind classifies Cohen’s scenario as specification gaming, but I think it is more like goal misgeneralization) so remain critical. My hope is that after a while the controversial cell comments will contain links and conversations to better understand the positions involved.

The takeaways are mostly my own work, with all the risks that entails.

Some values had to be simplified for the sake of readability; for instance, it would be more accurate to say that Cohen’s scenario does not require the AI to be powerful rather than to say the cause of the AI’s power is unclear. If that bothers you, you can look at the detailed version where I make less compromises at the cost of readability.

Takeaways

The takeaways are my own and do not necessarily represent the views of the authors of the risk models. They are all presented with respect to the risk models examined.

General takeaway

General takeaway: There are many paths to AI X-risk. Some are more likely or dangerous than others, but none can be neglected if we are to avoid disaster.

Outer/inner/intent [LW · GW]

(Keep in mind that the inner/outer framework is not universally accepted.)

Along the many scenarios, misalignment comes from many places. No single solution will solve everything, though inner misalignment is significantly more commonly depicted than other types.

Technical causes of misalignment

It is not enough to have a solution for a specific cause, because there are many technical reasons for misalignment.

In addition, some risk models simply require any source of misalignment, which is why I think a security mindset [LW · GW] is necessary to build a safe AI: we need to prove that there is no loophole, that the machine is daemon-free [LW · GW], to guarantee that none of these risk models are allowed by an AI design.

Social causes of misalignment

- The most frequently cited factor of misalignment is competitive pressure[1]: it increases the likelihood of accidents due to haste or misuse. Solving these coordination problems (through discussion, contracts, government intervention...) therefore seems to be an important lever to reduce x-risk.

- Even if we coordinate perfectly, there are technical hurdles that could still lead to failure. Governance could allow us to reach existential safety by freezing AI research, training or deployment, but this will not be enough to solve alignment: we need to allocate resources for technical alignment.

I did not expect coordination problems to be so dangerous; going into this, I was mostly concerned about tackling “hard alignment [LW · GW]”. I had to update hard: most risk models assume that a major reason technical causes are not addressed is a societal failure[2] to implement safeguards, regulation, take our time, and agree not to deploy unsafe AI.

We could completely solve technical alignment (have the knowledge to make an aligned AGI) and still die from someone who doesn't use those techniques, which is a likely scenario if we don't solve coordination[3].

Deception & power-seeking

There is a strong correlation between deception, power-seeking and agency. They are present in most scenarios and are generally considered to indicate very high risk. The development of correct assessments to measure deception and power-seeking appears to be robustly beneficial[4].

Surprise, surprise!

Capabilities

Agency

- Ensuring that AIs are not agentic would go a long way towards preventing most AI takeover scenarios.

- But even if no AI were agentic, a disaster could happen because of societal failure such as Christiano1[5].

Power

Most scenarios consider that an advanced AI will be able to seize power, so denying it access to resources such as banks and factories is not enough of a safeguard.

There is extensive literature [? · GW] on the subject; the general consensus is that boxing might have some use, but it's unlikely to work.

Cognition

AI-takeover-like scenarios usually require specific capabilities that we can detect in potentially dangerous AIs through capability assessment and interpretability. In many scenarios, these capabilities come from general purpose training and are not externally visible, so it is a misconception to assume that "we'll see it before we get there", which is why interpretability is useful.

Before compiling this sheet, I considered interpretability (especially mechanistic interpretability) laudable but probably not worth the effort. Now I think it is one of the most important tools for early warning (which, combined with good governance, would allow us not to train/deploy some dangerous systems).

Existential risk

Yes, AI is risky.

While not all scenarios are guaranteed to lead to human extinction, it is important to remember that even if we survive, disempowerment and other societal issues would need to be addressed (these are risk models after all).

There is much uncertainty about the severity of the risks, but we don’t really have the option to test them to see just how bad they are. It might be interesting for policymakers to have toy models to reduce that uncertainty?

Individual takeaways

If you had to remember only one thing from a risk model what would that be? Usually, the papers are hard to summarize, but for the sake of policymakers and amateur researchers, I tried to do it in one sentence or two on the document.

For a more in-depth analysis, I highly recommend Deepmind’s own work [LW · GW].

Class of scenario

This classification might be the least accurate information in the sheet because it is original work, and I don’t have an established track record.

Mostly, I grouped scenarios together if they seemed to share some similarities which I think are noteworthy.

Risk models are separated into three classes:

- "Society matters" a.k.a. “Social factors are hard to tackle”: These scenarios depend on societal inadequacy, where we don't face the hard parts of alignment (or maybe alignment is easy) because of arms races, economic incentives to make unsafe AI, etc. Sometimes, they don’t need AI to be dangerous by itself at all, but on the other hand they also look quite solvable if we have aligned AGI.

- Christiano1: “You get what you measure” [LW · GW]

- Christiano2: “Influence-seeking behavior is scary” [LW · GW]

- Critch1: Production Web [LW · GW]

- Critch2: Flash Economy [LW · GW]

- "Mining too deep" a.k.a. “Technical causes are hard to tackle”: In these scenarios (it’s a bit of a stretch to present these risk models as scenarios, but what have you), there is a secret hidden in the depths of intelligence that kills everyone by default, regardless of the circumstances in which the AI becomes very intelligent. They are usually independent of specifics except for a few algorithmic assumptions.

- Hubinger: “How likely is deceptive alignment? [LW · GW]”

- Soares: Capabilities Generalization & Sharp Left Turn [LW · GW]

- Cohen: “Advanced artificial agents intervene in the provision of reward”

- "Skynet by default" a.k.a “We [LW · GW]are [LW · GW]poor tacklers [LW · GW]”: These scenarios are about current circumstances, emphasizing that the world is on a path of power-seeking and deception. They talk about what will happen if we do nothing, which is more likely than it sounds. They rely a lot on a highly detailed understanding of the current AIS climate.

- Cotra: “The easiest path to transformative AI… [LW · GW]”

- Ngo: “The alignment problem from a deep learning perspective [LW · GW]”

- Shah: AI Risk from Program Search [LW · GW]

- Carlsmith: “Is Power-seeking AI an existential risk?”

These categories are usually different in methodology, scope of study and conclusions.

How to use the cheat sheet

Disclaimer

The document reflects the personal opinions of the people who edit it; hopefully, these opinions correspond somewhat to reality, but in particular, the original authors of the risk models have not agreed with the results as far as I know.

Intended use

Here is the typical use case the document was imagined for: You have been thinking about AI X-risk, and want to study a potential solution.

- Consider which scenario it might prevent (find the proper row)

- Consider what dimensions of the scenario your solution modifies (columns)

- See in what measure the scenario is improved.

Hopefully, this will remind you of dimensions you might otherwise overlook, and of which scenarios your solution does not apply to.

AI X-risk is vast, and oftentimes you see potential solutions that end up being naive [? · GW]. Hopefully, this document can provide a sanity check to help refine your ideas.

Reading/writing conventions

I tried to represent the authors’ views, to the best of my understanding. "?" means the author does not provide information about that variable or that I haven't found anything yet.

Otherwise, cells are meant to be read as “The risk model is … about this parameter” (e.g. “The risk model is agnostic about why the AI is powerful”) or “The risk model considers this situation to be …” (e.g. “The risk model considers the social causes of AI to be irrelevant”), except for takeaways which are full sentences.

In the detailed sheet, parentheses are sometimes used to give more details. This comes at the cost of dropdown lists, sorry.

How to edit the cheat sheet

Here are a few rules to keep the sheet as readable as possible for everyone:

- If you disagree with the value of a cell, make a commentary to propose a new value and explain your position. Keep one topic per thread.

- If you want to make a minor amendment, make your commentary yellow-orange, depending on the importance of the change.

- If you want to make a major amendment (e.g. the current value is misinforming for an experienced reader), make your commentary red.

- If you want to add a column or make changes of the same scale, mark the topmost cell of the relevant column with a magenta commentary.

You can also write a proposed edit here and hope I read it, especially for long diatribes.

If you want to edit the detailed sheet, please send me your email address in private. If you are motivated enough to read that thing, then imo you deserve editing rights.

Sources

The document is very far from summarizing all the information in the sources. I strongly encourage you to read them by yourself.

The links for each risk model are in the leftmost column of the document.

Literature reviews

- Clarifying AI X-risk [LW · GW] & Threat Model Literature Review [LW · GW] (Deepmind)

- My Overview of the AI Alignment Landscape: Threat Models [LW · GW] (Neel Nanda)

Additional ideas for relevant variables

- Distinguishing AI takeover scenarios [LW · GW] (authors)

- My Overview of the AI Alignment Landscape: A Bird's Eye View - LessWrong [? · GW] (Neel Nanda)

This post will be edited if helpful comments point out how to improve it.

[ Insert footnotes ]

- ^

To keep it simple, I regrouped all sorts of perverse social dynamics under this term, even if it is slightly misleading. I’d have used Moloch if I hadn’t been told it was an obscure reference. You might also think in terms of inadequate equilibria or game-theoretic reasons to be dumb. Also, dumb reasons, that happens too.

- ^

More precisely, failure to solve a coordination problem. If you want to learn more, see some posts on coordination [? · GW]. I’m told I should mention Moral Mazes [? · GW]; also I really enjoyed Inadequate Equilibria. These readings not necessary, but I think they provide a lot of context when researchers mention societal failure with few details. Of course, some do provide a lot of details which I wish everyone did because it makes the model more crisp, but it’s also sticking your neck out for easy chopping off.

- ^

Or do a pivotal act? There’s a discussion to be had here on the risks of trying to perform a pivotal act when you suffer from coordination problems.

I don’t know of a protocol that would allow me to test a pivotal act proposal with enough certainty to convince me it would work, in our kind of world.

- ^

I don’t think that measuring deception and power-seeking has great value in itself, because you have to remember to fix them too, and I expect it to be a whole different problem. Still, that would be an almost certainly good tool to have.

In addition, beware of making a deception-detecting tool, and mistakenly thinking that it detects every deception [AF · GW]. - ^

In some scenarios where the AI is agentic, it is made an agent by design (through training or architecture) for some reason, which given a possible current paradigm [LW · GW] might be prevented with good governance. In others, agenticity emerges for complicated reasons which I can only recommend you take as warnings against ever thinking your AI is safe by design.

1 comments

Comments sorted by top scores.

comment by momom2 (amaury-lorin) · 2023-06-29T04:32:11.949Z · LW(p) · GW(p)

List of known discrepancies:

- Deepmind categorizes Cohen’s scenario as specification gaming (instead of crystallized proxies).

- They consider Carlsmith to be about outer misalignment?

Value lock-in, persuasive AI [LW · GW] and Clippy are on my TODO list to be added shortly. Please do tell if you have something else in mind you'd like to see in my cheat sheet!