AI researchers announce NeuroAI agenda

Post by Cameron Berg (cameron-berg) · 2022-10-24T00:14:46.574Z · 12 comments

This is a link post for https://arxiv.org/abs/2210.08340


Last week, 27 highly prominent AI researchers and neuroscientists released a preprint entitled Toward Next-Generation Artificial Intelligence: Catalyzing the NeuroAI Revolution. I think this report is definitely worth reading, especially for people interested in understanding and predicting the long-term trajectory of AI research.

Below, I’ll briefly highlight four passages from the paper that seemed particularly relevant to me.

Doubts about the 'prosaic' approach yielding AGI

The authors write:

The seeds of the current AI revolution were planted decades ago, largely by researchers attempting to understand how brains compute (McCulloch and Pitts 1943). Indeed, the earliest efforts to build an “artificial brain” led to the invention of the modern “von Neumann computer architecture,” for which John von Neumann explicitly drew upon the very limited knowledge of the brain available to him in the 1940s (Von Neumann 2012). The deep convolutional networks that catalyzed the recent revolution in modern AI are built upon artificial neural networks (ANNs) directly inspired by the Nobel-prize winning work of David Hubel and Torsten Wiesel on visual processing circuits in the cat (Hubel and Wiesel 1962; LeCun and Bengio 1995). Similarly, the development of reinforcement learning (RL) drew a direct line of inspiration from insights into animal behavior and neural activity during learning (Thorndike and Bruce 2017; Rescorla 1972; Schultz, Dayan, and Montague 1997). Now, decades later, applications of ANNs and RL are coming so quickly that many observers assume that the long-elusive goal of human-level intelligence—sometimes referred to as “artificial general intelligence”—is within our grasp. However, in contrast to the optimism of those outside the field, many front-line AI researchers believe that major new breakthroughs are needed before we can build artificial systems capable of doing all that a human, or even a much simpler animal like a mouse, can do [emphasis added].

To the degree we take this final comment seriously—that many within the field think that major breakthroughs (plural) are needed before we get AGI—we should probably update in the direction of being relatively more skeptical of prosaic AGI safety. If it appears increasingly likely that AGI won't look like a clean extrapolation from current systems, it would therefore make increasingly less sense to bake the prosaic assumption into AGI safety research.

Researchers eyeing brain-based approaches

While many key AI advances, such as convolutional ANNs and RL were inspired by neuroscience, much of the current research in machine learning is following its own path by building on previously-developed approaches that were inspired by decades old findings in neuroscience, such as attention-based neural networks which were loosely inspired by attention mechanisms in the brain (Itti, Koch, and Niebur 1998; Larochelle and Hinton 2010; Xu et al. 2015). New influences from modern neuroscience exist, but they are spearheaded by a minority of researchers. This represents a missed opportunity. Over the last decades, through efforts such as the NIH BRAIN initiative and others, we have amassed an enormous amount of knowledge about the brain. This has allowed us to learn a great deal about the anatomical and functional structures that underpin natural intelligence. The emerging field of NeuroAI, at the intersection of neuroscience and AI, is based on the premise that a better understanding of neural computation will reveal basic ingredients of intelligence and catalyze the next revolution in AI, eventually leading to artificial agents with capabilities that match and perhaps even surpass those of humans [emphasis in the original]. We believe it is the right time for a large-scale effort to identify and understand the principles of biological intelligence, and abstract those for application in computer and robotic systems.

Again, to the degree that this assertion is taken seriously (that a better understanding of neural computation really would reveal the basic ingredients of intelligence and catalyze the next revolution in AI), it seems like AGI safety researchers should update significantly toward pursuing brain-based AGI safety approaches.

As I’ve commented before, I think this makes a great deal of sense given that the only known systems in the universe that exhibit general intelligence (i.e., our only reference class) are brains. Therefore, it seems fairly obvious that people attempting to artificially engineer general intelligence might want to emulate the relevant computational dynamics of brains, and that people interested in ensuring that the advent of AGI goes well ought to calibrate their work accordingly.

Brittleness vs. flexibility & leveraging evolution's 'work'

Modern AI can easily learn to outperform humans at video games like Breakout using nothing more than pixels on a screen and game scores (Mnih et al. 2015). However, these systems, unlike human players, are brittle and highly sensitive to small perturbations: changing the rules of the game slightly, or even a few pixels on the input, can lead to catastrophically poor performance (Huang et al. 2017). This is because these systems learn a mapping from pixels to actions that need not involve an understanding of the agents and objects in the game and the physics that governs them. Similarly, a self-driving car does not inherently know about the danger of a crate falling off a truck in front of it, unless it has literally seen examples of crates falling off trucks leading to bad outcomes. And even if it has been trained on the dangers of falling crates, the system might consider an empty plastic bag being blown out of the car in front of it as an obstacle to avoid at all cost rather than an irritant, again, because it doesn’t actually understand what a plastic bag is or how unthreatening it is physically. This inability to handle scenarios that have not appeared in the training data is a significant challenge to widespread reliance on AI systems.

To be successful in an unpredictable and changing world, an agent must be flexible and master novel situations by using its general knowledge about how such situations are likely to unfold. This is arguably what animals do. Animals are born with most of the skills needed to thrive, or can rapidly acquire them from limited experience, thanks to their strong foundation in real-world interaction, courtesy of evolution and development (Zador 2019). Thus, it is clear that training from scratch for a specific task is not how animals obtain their impressive skills; animals do not arrive into the world tabula rasa and then rely on large labeled training sets to learn. Although machine learning has been pursuing approaches for sidestepping this tabula rasa limitation, including self-supervised learning, transfer learning, continual learning, meta learning, one-shot learning and imitation learning (Bommasani et al. 2021), none of these approaches comes close to achieving the flexibility found in most animals. Thus, we argue that understanding the neural circuit-level principles that provide the foundation for behavioral flexibility in the real-world, even in simple animals, has the potential to greatly increase the flexibility and utility of AI systems. Put another way, we can greatly accelerate our search for general-purpose circuits for real-world interaction by taking advantage of the optimization process that evolution has already engaged in [emphasis added]. (Gupta et al. 2021; Stöckl, Lang, and Maass 2022; Koulakov et al. 2022; Stanley et al. 2019; Pehlevan and Chklovskii 2019).

This excerpt is potent for at least two reasons, in my view:

First, it does a good job of distilling the fundamental limitations of current AI systems into a sort of brittleness-flexibility distinction. Again, the prosaic AGI safety regime is significantly less plausible if current AI systems exhibit dynamics that seem to explicitly fly in the face of the generality we should expect from an artificial, uh, general intelligence. In other words, when monkeying with a few pixels totally breaks our image classifier or our RL model, we will probably want to regard this as a suspicious form of overfitting to the specific task specification rather than as the acquisition of the general principles that would enable success. AGI should presumably behave far more like the latter than the former, which is a strong indication that prosaic AI approaches would need to be supplemented by major additional breakthroughs before we get AGI.

Second, this section nicely articulates the fact that the optimization process of evolution has already done a great deal of 'work' in narrowing the search space for the kinds of computational systems that give rise to general intelligence. In other words, researchers do not have to reinvent the wheel; they might instead choose to investigate the dynamics of generally intelligent systems that already tangibly exist. (Note: this is not to say that there won't be other solutions in the space of possible generally intelligent systems that evolution did not 'find.') Both strategies, 'reinvent the brain' and 'understand the brain,' are certainly available as engineering proposals, but the latter seems orders of magnitude faster, akin to fine-tuning a pretrained model rather than training one from scratch.

Good response to the 'bird : plane :: brain : AI' analogy

The researchers somewhat epically conclude their paper as follows:

Despite the long history of neuroscience driving advances in AI and the tremendous potential for future advances, most engineers and computational scientists in the field are unaware of the history and opportunities. The influence of neuroscience on shaping the thinking of von Neumann, Turing and other giants of computational theory are rarely mentioned in a typical computer science curriculum. Leading AI conferences such as NeurIPS, which once served to showcase the latest advances in both computational neuroscience and machine learning, now focus almost exclusively on the latter. Even some researchers aware of the historical importance of neuroscience in shaping the field often argue that it has lost its relevance. “Engineers don’t study birds to build better planes” is the usual refrain. But the analogy fails, in part because pioneers of aviation did indeed study birds (Lilienthal 1911; Culick 2001), and some still do (Shyy et al. 2008; Akos et al. 2010). Moreover, the analogy fails also at a more fundamental level: The goal of modern aeronautical engineering is not to achieve “bird-level” flight, whereas a major goal of AI is indeed to achieve (or exceed) “human-level” intelligence. Just as computers exceed humans in many respects, such as the ability to compute prime numbers, so too do planes exceed birds in characteristics such as speed, range and cargo capacity. But if the goal of aeronautical engineers were indeed to build a machine with the “bird-level” ability to fly through dense forest foliage and alight gently on a branch, they would be well-advised to pay very close attention to how birds do it. Similarly, if AI aims to achieve animal-level common-sense sensorimotor intelligence, researchers would be well-advised to learn from animals and the solutions they evolved to behave in an unpredictable world.

I think this is a very concise and cogent defense of why brain-based approaches to AGI and AGI safety are ultimately highly relevant—and why the 'bird : plane :: brain : AI' reply seems far too quick.

Concluding thought

The clear factual takeaway for me is that many of the field’s most prominent researchers are explicitly endorsing 'NeuroAI' as a plausible route to AGI. This directly implies that those interested in preventing AGI-development-catastrophe-scenarios should pay especially careful attention to this shift in research focus and calibrate their efforts accordingly.

Practically, this looks to me like updating in the direction of taking brain-based AGI safety significantly more seriously.

12 comments


comment by paulfchristiano · 2022-10-24T02:27:29.287Z

If it appears increasingly likely that AGI won't look like a clean extrapolation from current systems, it would therefore make increasingly less sense to bake the prosaic assumption into AGI safety research.

I think it's plausible that "AGI won't look like a clean extrapolation from current systems."

But I don't see any perspective where it looks increasingly likely over time: scaling up simple systems is working much better than almost all skeptics expected, the relative importance of existing insights extracted from neuroscience has been consistently decaying over time, and the concrete examples of "things models can't do" are becoming more and more strained. As far as I can tell the distribution of views in the field of AI is shifting fairly rapidly towards "extrapolation from current systems" (from a low baseline).

↑ comment by trevor (TrevorWiesinger) · 2022-10-24T07:00:14.562Z

the relative importance of existing insights extracted from neuroscience has been consistently decaying over time

I don't think this is a very good indicator. The resolution of an fMRI could easily increase by two orders of magnitude in 10 years given enough federal funding (currently 100 μm but nowhere near the whole brain), bringing us much closer to modelling all neural activity in an entire human brain and using it as a layer for a foundation model. Humanity's fMRI technology would be totally incapable of doing that for decades, right up until the moment those numbers (magnetic imaging resolution) are pushed high enough to record brain activity at the fundamental level.

While on the topic of funding, I also think that the AI industry is large enough that the expected return of latching neuroscience to it is quite high. So we should also anticipate more galaxy-brain persuasion attempts coming from neuroscience.

↑ comment by paulfchristiano · 2022-10-24T16:16:29.583Z

I agree that more detailed measurements of brains could be technologically feasible over the coming decades and could give rise to a different kind of insight that is more directly useful for AI (I don't normally imagine this coming from fMRI progress, but I don't know much about the area).

That said, I think "what is the current trend" is still an important indicator.

And the paper talks explicitly about the influence of past progress in neuroscience, both its past influence on AI and the possible future influence. So I think it's particularly relevant to the argument they are making.

↑ comment by Cameron Berg (cameron-berg) · 2022-10-24T14:57:39.888Z

Thanks for your comment!

As far as I can tell the distribution of views in the field of AI is shifting fairly rapidly towards "extrapolation from current systems" (from a low baseline).

I suppose part of the purpose of this post is to point to numerous researchers who serve as counterexamples to this claim. For example, Yann LeCun, Terry Sejnowski, Yoshua Bengio, Timothy Lillicrap et al seem to disagree with the perspective you're articulating in this comment insofar as they actually endorse the perspective of the paper they've coauthored.

You are obviously a highly credible source on trends in AI research—but so are they, no?

And if they are explicitly arguing that NeuroAI is the route they think the field should go in order to get AGI, it seems to me unwise to ignore or otherwise dismiss this shift.

↑ comment by paulfchristiano · 2022-10-24T16:08:45.907Z

I'm claiming that "new stuff is needed" has been the dominant view for a long time, but is gradually becoming less and less popular. Inspiration from neuroscience has always been one of the most common flavors of "new stuff is needed." As a relatively recent example, it used to be a prominent part of DeepMind's messaging, though it has been gradually receding (I think because it hasn't been a part of their major results). 27 people holding the view is not a counterexample to the claim that it is becoming less popular.

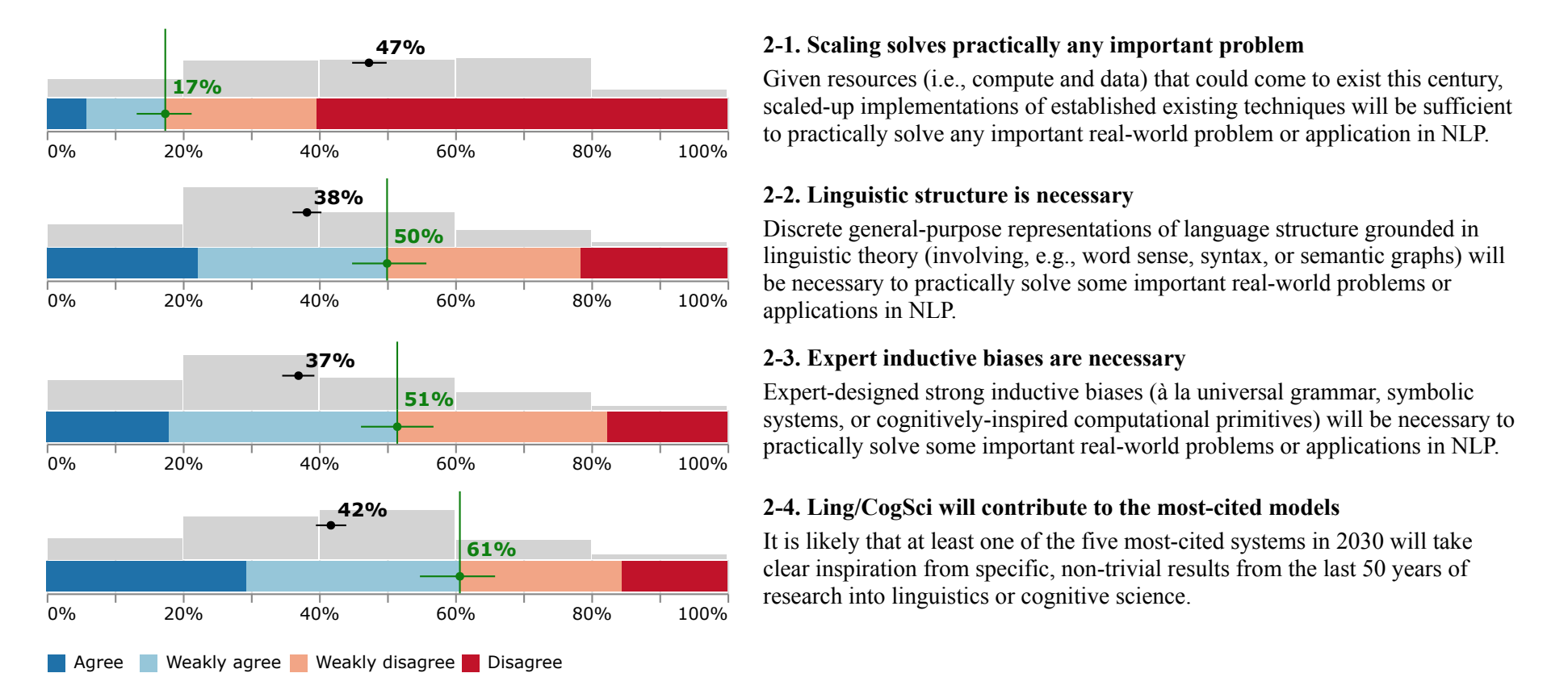

See also this survey of NLP, where ~17% of participants think that scaling will solve practically any problem. I think that's an unprecedentedly large number (prior to 2018 I'd bet it was <5%). But it still means that 83% of people disagree. Meanwhile, 60% of respondents think that non-trivial results in linguistics or cognitive science will inspire one of the top 5 results in 2030; again, I think that is an unprecedented low, but it is still a majority.

Yann LeCun, Terry Sejnowski, Yoshua Bengio, Timothy Lillicrap et al seem to disagree with the perspective you're articulating in this comment insofar as they actually endorse the perspective of the paper they've coauthored.

Did the paper say that NeuroAI is looking increasingly likely? It seems like they are saying that the perspective used to be more popular and has been falling out of favor, so they are advocating for reviving it.

↑ comment by Cameron Berg (cameron-berg) · 2022-10-24T22:17:02.396Z

27 people holding the view is not a counterexample to the claim that it is becoming less popular.

Still feels worthwhile to emphasize that some of these 27 people are, eg, Chief AI Scientist at Meta, co-director of CIFAR, DeepMind staff researchers, etc.

These people are major decision-makers in some of the world's leading and most well-resourced AI labs, so we should probably pay attention to where they think AI research should go in the short term; they are among the people who could actually take it there.

See also this survey of NLP

I assume this is the chart you're referring to. I take your point that you see these numbers as trending up or down (even though their absolute levels seem consistent with believing that brain-based AGI is entirely possible), but such trends are themselves risky statistics to extrapolate. They could easily plateau or reverse given volatile field dynamics. For instance, if we linearly extrapolate from the two stats you provided (roughly 5% believing scaling could solve everything around 2018, by your estimate; 17% in 2022), we would predict that about 56% of NLP researchers would believe scaling could solve everything by 2035. Do you actually think something in this ballpark is likely?
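The straight-line extrapolation in this exchange can be checked with a minimal sketch. It assumes the ~5% figure (a guess about the period before 2018, not a survey result) can be treated as a 2018 data point, and it pairs that with the ~17% figure from the 2022 NLP survey. Both function names are hypothetical helpers written for illustration.

```python
def linear_extrapolate(year0, value0, year1, value1, target_year):
    """Project a value along the straight line through (year0, value0) and (year1, value1)."""
    slope = (value1 - value0) / (year1 - year0)  # percentage points per year
    return value1 + slope * (target_year - year1)


def year_reaching(year0, value0, year1, value1, target_value):
    """Year at which the same straight line reaches target_value."""
    slope = (value1 - value0) / (year1 - year0)
    return year1 + (target_value - value1) / slope


# Assumed inputs: ~5% treated as a 2018 value, ~17% in 2022.
print(f"{linear_extrapolate(2018, 5.0, 2022, 17.0, 2035):.0f}%")  # → 56%
print(f"{year_reaching(2018, 5.0, 2022, 17.0, 100.0):.1f}")       # → 2049.7
```

The implied slope is 3 percentage points per year, which gives 17 + 3 × 13 = 56% in 2035. The same line would pass 100% before 2050. This is one simple way to see why a straight line fitted to a bounded percentage is a fragile basis for forecasting.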

Did the paper say that NeuroAI is looking increasingly likely?

I was considering the paper itself as evidence that NeuroAI is looking increasingly likely.

When people who run many of the world's leading AI labs say they want to devote resources to building NeuroAI in the hopes of getting AGI, I am considering that as a pretty good reason to believe that brain-like AGI is more probable than I thought it was before reading the paper. Do you think this is a mistake?

Certainly, to your point, signaling an intention to try X is not the same as successfully doing X, especially in the world of AI research. But again, if anyone were to be able to push AI research in the direction of being brain-based, would it not be these sorts of labs?

To be clear, I do not personally think that prosaic AGI and brain-based AGI are necessarily mutually exclusive—eg, brains may be performing computations that we ultimately realize are some emergent product of prosaic AI methods that already basically exist. I do think that the publication of this paper gives us good reason to believe that brain-like AGI is more probable than we might have thought it was, eg, two weeks ago.

↑ comment by jacob_cannell · 2022-10-25T03:10:38.598Z

To be clear, I do not personally think that prosaic AGI and brain-based AGI are necessarily mutually exclusive—eg, brains may be performing computations that we ultimately realize are some emergent product of prosaic AI methods that already basically exist.

This is already the case: transformer LLMs predict neural responses of the linguistic cortex remarkably well[1][2]. Perhaps this is not entirely surprising in retrospect, given that both are trained on overlapping datasets with similar unsupervised prediction objectives.

comment by Joseph Bloom (Jbloom) · 2022-10-24T08:33:46.238Z

To the extent that one might not have predicted scientists to hold these views, I can see why this paper might cause a positive predictive update on brainlike AGI.

However, technological development is not a zero-sum game. Opportunities or enthusiasm in neuroscience doesn't in itself make prosaic AGI less likely and I don't feel like any of the provided arguments are knockdown arguments against ANN's leading to prosaic AGI.

I don't find arguments that human-level intelligence is unprecedented outside of humans particularly convincing, in part because of the analogy to "the god of the gaps." Many predictions about what computers can't do have been falsified, sometimes in unexpected ways (e.g., arguments that AI would not be able to make art). Moreover, the fact that "more is different" in AI, together with the development of single-shot models, seems like a powerful argument for the potential of prosaic AI systems when scaled.

↑ comment by Cameron Berg (cameron-berg) · 2022-10-24T21:30:28.505Z

However, technological development is not a zero-sum game. Opportunities or enthusiasm in neuroscience doesn't in itself make prosaic AGI less likely and I don't feel like any of the provided arguments are knockdown arguments against ANN's leading to prosaic AGI.

Completely agreed!

I believe there are two distinct arguments at play in the paper and that they are not mutually exclusive. I think the first is "in contrast to the optimism of those outside the field, many front-line AI researchers believe that major new breakthroughs are needed before we can build artificial systems capable of doing all that a human, or even a much simpler animal like a mouse, can do" and the second is "a better understanding of neural computation will reveal basic ingredients of intelligence and catalyze the next revolution in AI, eventually leading to artificial agents with capabilities that match and perhaps even surpass those of humans."

The first argument can be read as a reason to negatively update on prosaic AGI (unless you see these 'major new breakthroughs' as also being prosaic) and the second argument can be read as a reason to positively update on brain-like AGI. To be clear, I agree that the second argument is not a good reason to negatively update on prosaic AGI.

↑ comment by Joseph Bloom (Jbloom) · 2022-10-25T03:54:33.745Z

Understood. If the first argument were more concrete, we could examine its predictions. For example, what fundamental limitations exist in current systems? What should a breakthrough do (at least conceptually) to move us into the new paradigm?

I think it's reasonable to expect that understanding the brain better may yield insights, but I find Paul's comment about returns on existing insights diminishing over time convincing. Technologies like DishBrain seem exciting and might change that trend.

comment by samshap · 2022-10-25T03:25:52.852Z

I have some technical background in neuromorphic AI.

There are certainly things that the current deep learning paradigm is bad at which are critical to animal intelligence: e.g. power efficiency, highly recurrent networks, and complex internal dynamics.

It's unclear to me whether any of these are necessary for AGI. Something, something executive function and global workspace theory?

I once would have said that feedback circuits used in the sensory cortex for predictive coding were a vital component, but apparently transformers can do similar tasks using purely feedforward methods.

My guess is that the scale and technology lead of DL is sufficient that it will hit AGI first, even if a more neuro way might be orders of magnitude more computationally efficient.

Where neuro AI is most useful in the near future is for embodied sensing and control, especially with limited compute or power. However, those constraints would seem to drastically curtail the potential for AGI.

comment by quetzal_rainbow · 2022-10-24T13:43:05.454Z

Yes, CNNs were brain-inspired, but attention in general and transformers in particular don't seem to be like this.