Actually, Othello-GPT Has A Linear Emergent World Representation

post by Neel Nanda · 2023-03-29

This is a link post for https://neelnanda.io/mechanistic-interpretability/othello

Contents

Overview

Introduction

Background

Naive Implications for Mechanistic Interpretability

My Findings

Takeaways

How do models represent features?

Conceptual Takeaways

Probing

Technical Setup

Results

Intervening

Citation Info

Note that this work has since been turned into a paper and published at BlackboxNLP. I think the paper version is more rigorous but much terser and less fun, and both it and this sequence of blog posts are worth reading in different ways

Epistemic Status: This is a write-up of an experiment in speedrunning research, and the core results represent ~20 hours/2.5 days of work (though the write-up took way longer). I'm confident in the main results to the level of "hot damn, check out this graph", but likely have errors in some of the finer details.

Disclaimer: This is a write-up of a personal project, and does not represent the opinions or work of my employer

This post may get heavy on jargon. I recommend looking up unfamiliar terms in my mechanistic interpretability explainer

Thanks to Chris Olah, Martin Wattenberg, David Bau and Kenneth Li for valuable comments and advice on this work, and especially to Kenneth for open sourcing the model weights, dataset and codebase, without which this project wouldn't have been possible! Thanks to ChatGPT for formatting help.

Overview

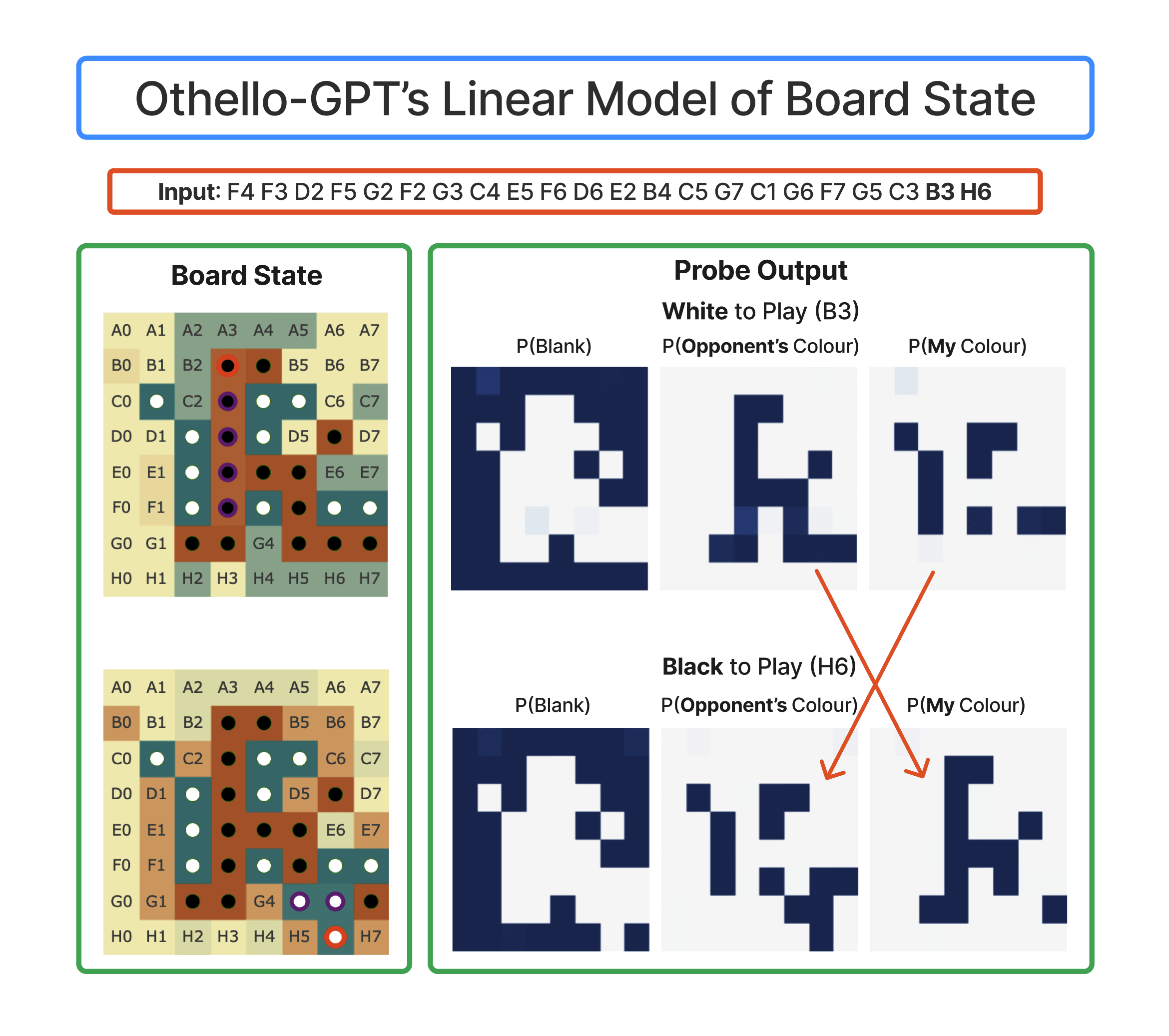

- Context: A recent paper trained a model to play legal moves in Othello by predicting the next move, and found that it had spontaneously learned to compute the full board state - an emergent world representation.

- This could be recovered by non-linear probes but not linear probes.

- We can causally intervene on this representation to predictably change model outputs, so it's telling us something real

- I find that actually, there's a linear representation of the board state!

- But that rather than "this cell is black", it represents "this cell has my colour", since the model plays both black and white moves.

- We can causally intervene with the linear probe, and the model makes legal moves in the new board!

- This is evidence for the linear representation hypothesis: that models, in general, compute features and represent them linearly, as directions in space! (If they don't, mechanistic interpretability would be way harder)

- The original paper seemed at first like significant evidence for a non-linear representation - the finding of a linear representation hiding underneath shows the real predictive power of this hypothesis!

- This (slightly) strengthens the paper's evidence that "predict the next token" transformer models are capable of learning a model of the world.

- Part 2: There's a lot of fascinating questions left to answer about Othello-GPT - I outline some key directions, and how they fit into my bigger picture of mech interp progress

- Studying modular circuits: A world model implies emergent modularity - many early circuits together compute a single world model, many late circuits each use it. What can we learn about what transformer modularity looks like, and how to reverse-engineer it?

- Prior transformer circuits work focuses on end-to-end circuits, from the input tokens to output logits. But this seems unlikely to scale!

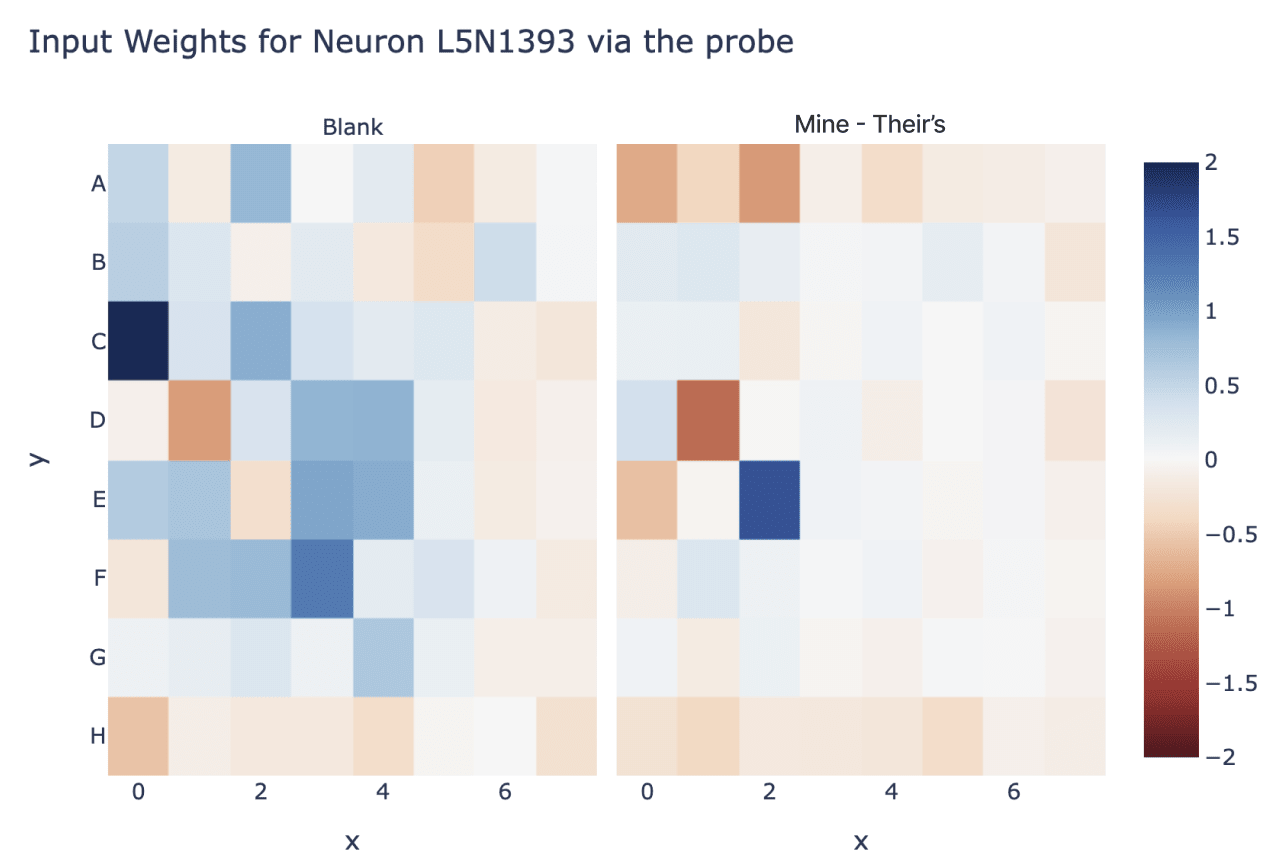

- I present some preliminary evidence reading off a neuron's function from its input weights via the probe

- Neuron interpretability and Studying Superposition: Prior work has made little progress on understanding MLP neurons. I think Othello GPT's neurons are tractable to understand, yet complex enough to teach us a lot!

- I further think this can help us get some empirical data about the Toy Models of Superposition paper's predictions

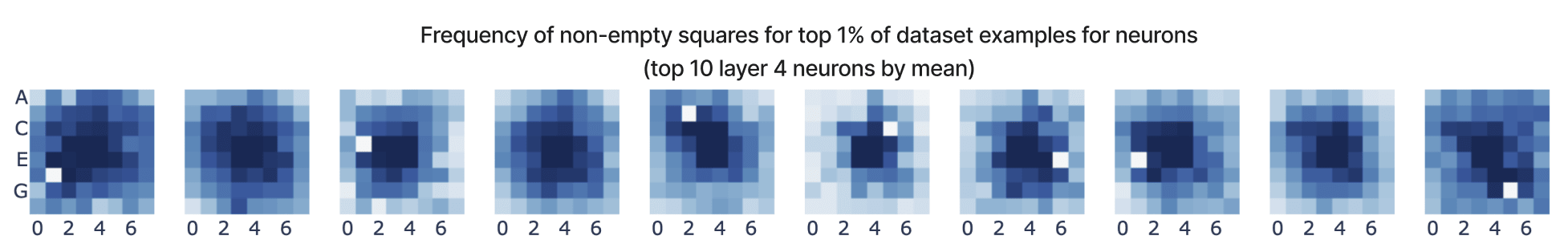

- I investigate max activating dataset examples and find seeming monosemanticity, yet deeper investigation shows it seems more complex.

- A transformer circuit laboratory: More broadly, the field has a tension between studying clean, tractable yet over-simplistic toy models and studying the real yet messy problem of interpreting LLMs - Othello-GPT is toy enough to be tractable yet complex enough to be full of mysteries, and I detail many more confusions and conjectures that it could shed light on.

- Part 3: Reflections on the research process

- I did the bulk of this project in a weekend (~20 hours total), as a (shockingly successful!) experiment in speed-running mech interp research.

- I give a detailed account of my actual research process: how I got started, what confusing intermediate results look like, and decisions made at each point

- I give some process-level takeaways on doing research well and fast.

- See the accompanying colab notebook and codebase to build on the many dangling threads!

Introduction

This piece spends a while on discussion, context and takeaways. If you're familiar with the paper, skip to my findings; skip to takeaways for my updates from this; and if you want technical results, skip to probing.

Emergent World Representations is a fascinating recent ICLR Oral paper from Kenneth Li et al, summarised in Kenneth's excellent post on the Gradient. They trained a model (Othello-GPT) to play legal moves in the board game Othello, by giving it random games (generated by choosing a legal next move uniformly at random) and training it to predict the next move. The headline result is that Othello-GPT learns an emergent world representation - despite never being explicitly given the state of the board, and just being tasked to predict the next move, it learns to compute the state of the board at each move. (Note that the point of Othello-GPT is to play legal moves, not good moves, though they also study a model trained to play good moves.)

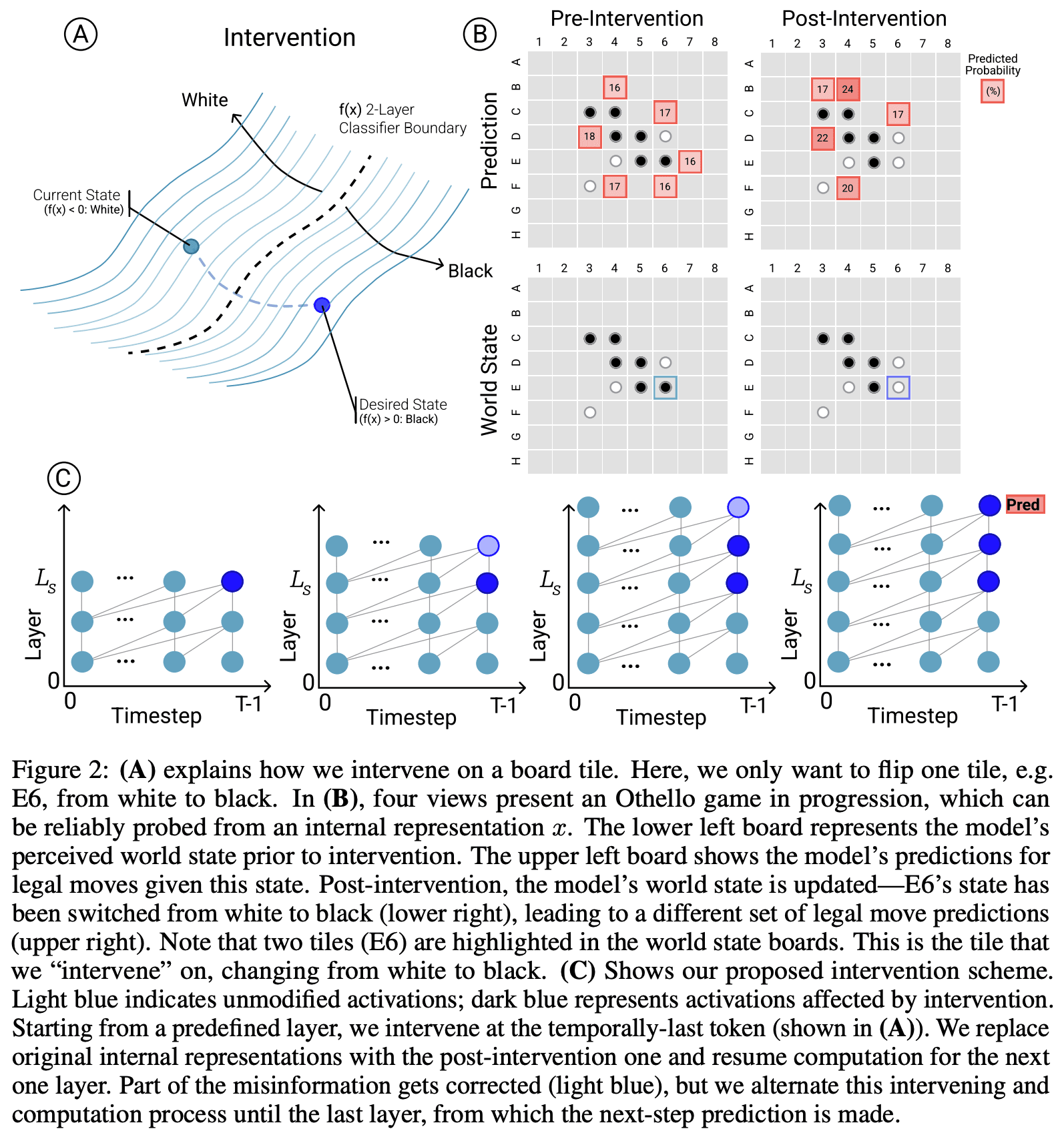

They present two main pieces of evidence. They can extract the board state from the model's residual stream via non-linear probes (a two layer ReLU MLP). And they can use the probes to causally intervene and change the model's representation of the board (by using gradient descent to have the probes output the new board state) - the model now makes legal moves in the new board state even if they are not legal in the old board, and even if that board state is impossible to reach by legal play!

I've strengthened their headline result by finding that much of their more sophisticated (and thus potentially misleading) techniques can be significantly simplified. Not only does the model learn an emergent world representation, it learns a linear emergent world representation, which can be causally intervened on in a linear way! But rather than representing "this square has a black/white piece", it represents "this square has my/their piece". The model plays both black and white moves, so this is far more natural from its perspective. With this insight, the whole picture clarifies significantly, and the model becomes far more interpretable!

Background

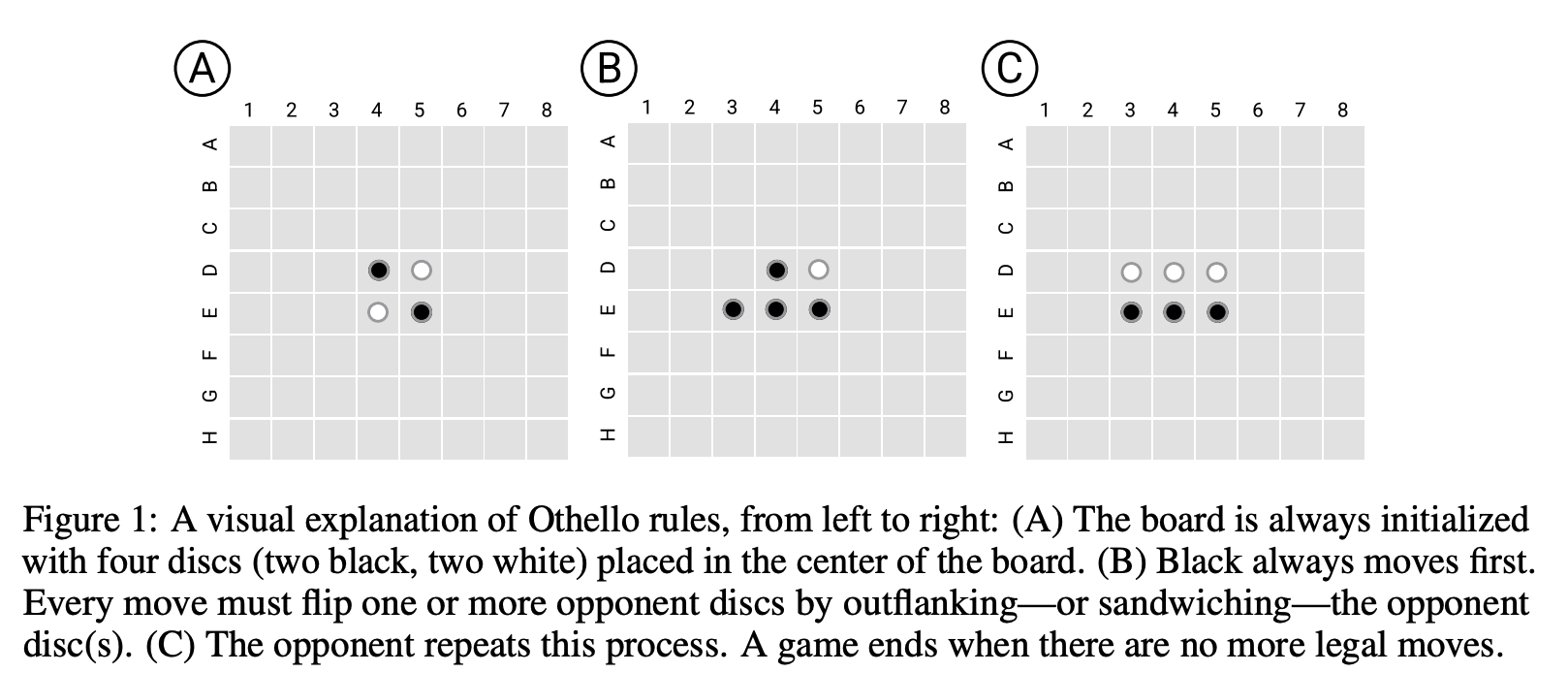

For those unfamiliar, Othello is a board game analogous to chess or go, with two players, black and white; see the rules outlined in the figure below. I found playing the AI on eOthello helpful for building intuition. A single move can change the colour of pieces far away (so long as there's a continuous vertical, horizontal or diagonal line), which means that calculating board state is actually pretty hard! (To my eyes, much harder than in chess.)

But despite the model just needing to predict the next move, it spontaneously learned to compute the full board state at each move - a fascinating result. A pretty hot question right now is whether LLMs are just bundles of statistical correlations or have some real understanding and computation! This gives suggestive evidence that simple objectives to predict the next token can create rich emergent structure (at least in the toy setting of Othello). Rather than just learning surface level statistics about the distribution of moves, it learned to model the underlying process that generated that data. In my opinion, it's already pretty obvious that transformers can do something more than statistical correlations and pattern matching, see eg induction heads, but it's great to have clearer evidence of fully-fledged world models!

For context on my investigation, it's worth analysing exactly the two pieces of evidence they had for the emergent world representation, the probes and the causal interventions, and their strengths and weaknesses.

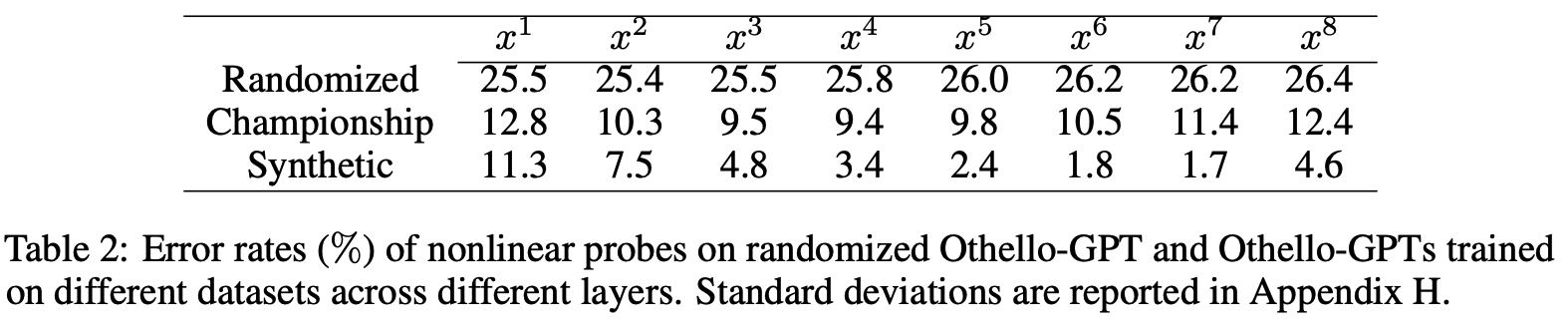

The probes give suggestive, but far from conclusive evidence. When training a probe to extract some feature from a model, it's easy to trick yourself. It's crucial to track whether the probe is just reading out the feature, or actually computing the feature itself, and reading out much simpler features from the model. In the extreme case, you could attach a much more powerful model as your "probe", and have it just extract the input moves, and then compute the board state from scratch! They found that linear probes (ie, projecting the residual stream onto some 3 learned directions for each square, corresponding to empty, black and white logits) did not work to recover board state, with an error rate of 20.4%, while the simplest non-linear probes (a two layer MLP with a single hidden ReLU layer) worked extremely well, with an error rate of 1.7%. Further (as described in their table 2, screenshot below), these non-linear probes did not work on a randomly initialised network, and worked better on some layers than others, suggesting they were learning something real from the model.
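To make the linear-probe setup concrete, here is a minimal NumPy sketch of the readout described above: three learned directions per square, giving empty/black/white logits. The function name `linear_probe_predict`, the batch size and the random stand-in weights are assumptions for illustration (only the 512-dimensional residual stream and the 64 squares come from the setting), and this is not the paper's code.

```python
import numpy as np

D_MODEL, N_SQUARES, N_CLASSES = 512, 64, 3  # classes: empty, black, white

def linear_probe_predict(resid, probe):
    """Project residual vectors onto 3 directions per square and take the argmax.

    resid: [batch, d_model] residual stream vectors
    probe: [d_model, n_squares, n_classes] learned directions
    returns: [batch, n_squares] predicted class index per square
    """
    logits = np.einsum("bd,dsc->bsc", resid, probe)
    return logits.argmax(axis=-1)

rng = np.random.default_rng(0)
resid = rng.normal(size=(4, D_MODEL))                     # stand-in activations
probe = rng.normal(size=(D_MODEL, N_SQUARES, N_CLASSES))  # stand-in, untrained probe
preds = linear_probe_predict(resid, probe)
print(preds.shape)  # (4, 64)
```

A non-linear probe would replace the single `einsum` with a hidden ReLU layer, which is exactly what gives it room to compute features rather than just read them out.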

Probes on their own can mislead, and don't necessarily tell us that the model uses this representation - the probe could be extracting some vestigial features or a side effect of some more useful computation, and give a misleading picture of how the model computes the solution. But their causal interventions make this much more compelling evidence. They intervene by a fairly convoluted process (detailed in the figure below, though you don't need to understand the details), which boils down to choosing a new board state, and applying gradient descent to the model's residual stream such that our probe thinks the model's residual stream represents the new board state. I have an immediate skepticism of any complex technique like this: when applying a powerful method like gradient descent it's so easy to wildly diverge from what the model's original functioning is like! But the fact that the model could do the non-trivial computation of converting an edited board state into a legal move post-edit is a very impressive result! I consider it very strong evidence both that the probe has discovered something real, and that the representation found by the probe is causally linked to the model's actual computation!

Naive Implications for Mechanistic Interpretability

I was very interested in this paper, because it simultaneously had the fascinating finding of an emergent world model (and I'm also generally into any good interp paper), yet something felt off. The techniques used here seemed "too" powerful. The results were strong enough that something here seemed clearly real, but my intuition is that if you've really understood a model's internals, you should just be able to understand and manipulate it with far simpler techniques, like linear probes and interventions, and it's easy to be misled by more powerful techniques.

In particular, my best guess about model internals is that the networks form decomposable, linear representations: that the model computes a bunch of useful features, and represents these as directions in activation space. See Toy Models of Superposition for some excellent exposition on this. This is decomposable because each feature can vary independently (from the perspective of the model - on the data distribution they're likely dependent), and linear because we can extract a feature by projecting onto that feature's direction (if the features are orthogonal - if we have something like superposition it's messier). This is a natural way for models to work - they're fundamentally a series of matrix multiplications with some non-linearities stuck in convenient places, and a decomposable, linear representation allows it to extract any combination of features with a linear map!
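As a toy illustration of "decomposable and linear", the sketch below stores two made-up feature values along orthonormal directions and reads them back with dot products. The directions, the feature values and the helper name `represent` are all hypothetical; with non-orthogonal directions (eg under superposition) the readout would pick up interference and no longer be exact.

```python
import numpy as np

# Two hypothetical features stored along orthonormal (but not axis-aligned) directions.
d_a = np.array([1.0, 1.0, 0.0, 0.0]) / np.sqrt(2)
d_b = np.array([1.0, -1.0, 0.0, 0.0]) / np.sqrt(2)

def represent(f_a, f_b):
    """Linear, decomposable representation: a weighted sum of feature directions."""
    return f_a * d_a + f_b * d_b

activation = represent(f_a=2.0, f_b=-3.0)

# Because the directions are orthogonal, projecting onto each one
# recovers that feature's value independently of the other.
read_a = activation @ d_a
read_b = activation @ d_b
print(read_a, read_b)
```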

Under this framework, if a feature can be found by a linear probe then the model has already computed it, and if that feature is used in a circuit downstream, we should be able to causally intervene with a linear intervention, just changing the coordinate along that feature's direction. So the fascinating finding that linear probes do not work, but non-linear probes do, suggests that either the model has a fundamentally non-linear representation of features (which it is capable of using directly for downstream computation!), or there's a linear representation of simpler and more natural features, from which the probe computes board state. My prior was on a linear representation of simpler features, but the causal intervention findings felt like moderate evidence for the non-linear representation. And the non-linear representation hypothesis would be a big deal if true! If you want to reverse-engineer a model, you need to have a crisp picture of how its computation maps onto activations and weights, and this would break a lot of my beliefs about how this correspondence works! Further, linear representations are just really convenient to reverse-engineer, and this would make me notably more pessimistic about mechanistic interpretability working.

My Findings

I'm of the opinion that the best way to become less confused about a mysterious model behaviour is to mechanistically analyse it. To zoom in on whatever features and circuits we can find, build our understanding from the bottom up, and use this to form grounded beliefs about what's actually going on. This was the source of my investigation into grokking, and I wanted to apply it here. I started by trying activation patching and looking for interpretable circuits/neurons, and I noticed a motif whereby some neurons would fire every other move, but with different parity each game. Digging further, I stumbled upon neuron 1393 in layer 5, which seemed to learn (D1==white) AND (E2==black) on odd moves, and (D1==black) AND (E2==white) on even moves.

Generalising from this motif, I found that, in fact, the model does learn a linear representation of board state! But rather than having a direction saying eg "square F5 has a black counter" it says "square F5 has one of my counters". In hindsight, thinking in terms of my vs their colour makes far more sense from the model's perspective - it's playing both black and white, and the valid moves for black become valid moves for white if you flip every piece's colour! (I've since seen this same observation in Haoxing Du's analysis of Go playing models)

If you train a linear probe on just odd/even moves (ie with black/white to play) then it gets near perfect accuracy! And it transfers reasonably well to the other moves, if you flip its output.

I speculate that their non-linear probe just learned to extract the two features of "I am playing white" and "this square has my colour" and to do an XOR of those. Fascinatingly, without the insight to flip every other representation, this is a pathological example for linear probes - the representation flips positive to negative every time, so it's impossible to recover the true linear structure!
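The XOR point can be seen in a deliberately tiny toy, sketched below. It assumes a one-dimensional "activation" that is +1 when a square holds the mover's piece and -1 when it holds the opponent's, with colours and movers encoded as ±1; these encodings are illustrative assumptions, not the model's actual activations.

```python
from itertools import product

# colour: +1 for black, -1 for white; mover: +1 if black is to play, -1 if white.
cases = []
for colour, mover in product([+1, -1], repeat=2):
    activation = colour * mover  # the "mine vs theirs" feature
    cases.append((activation, colour, mover))

def correlation(xs, ys):
    """Mean of products; sufficient here because every variable is balanced +/-1."""
    return sum(x * y for x, y in zip(xs, ys)) / len(xs)

acts = [a for a, _, _ in cases]
colours = [c for _, c, _ in cases]
movers = [m for _, _, m in cases]

# Pooled over both players, the activation carries no linear signal about colour.
print(correlation(acts, colours))  # 0.0

# Flipping the sign whenever white is to play recovers colour exactly.
flipped = [a * m for a, m in zip(acts, movers)]
print(correlation(flipped, colours))  # 1.0
```

A linear probe trained on all moves sees only the pooled case, which is why it fails, while a probe with one hidden layer can learn the product of the two features.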

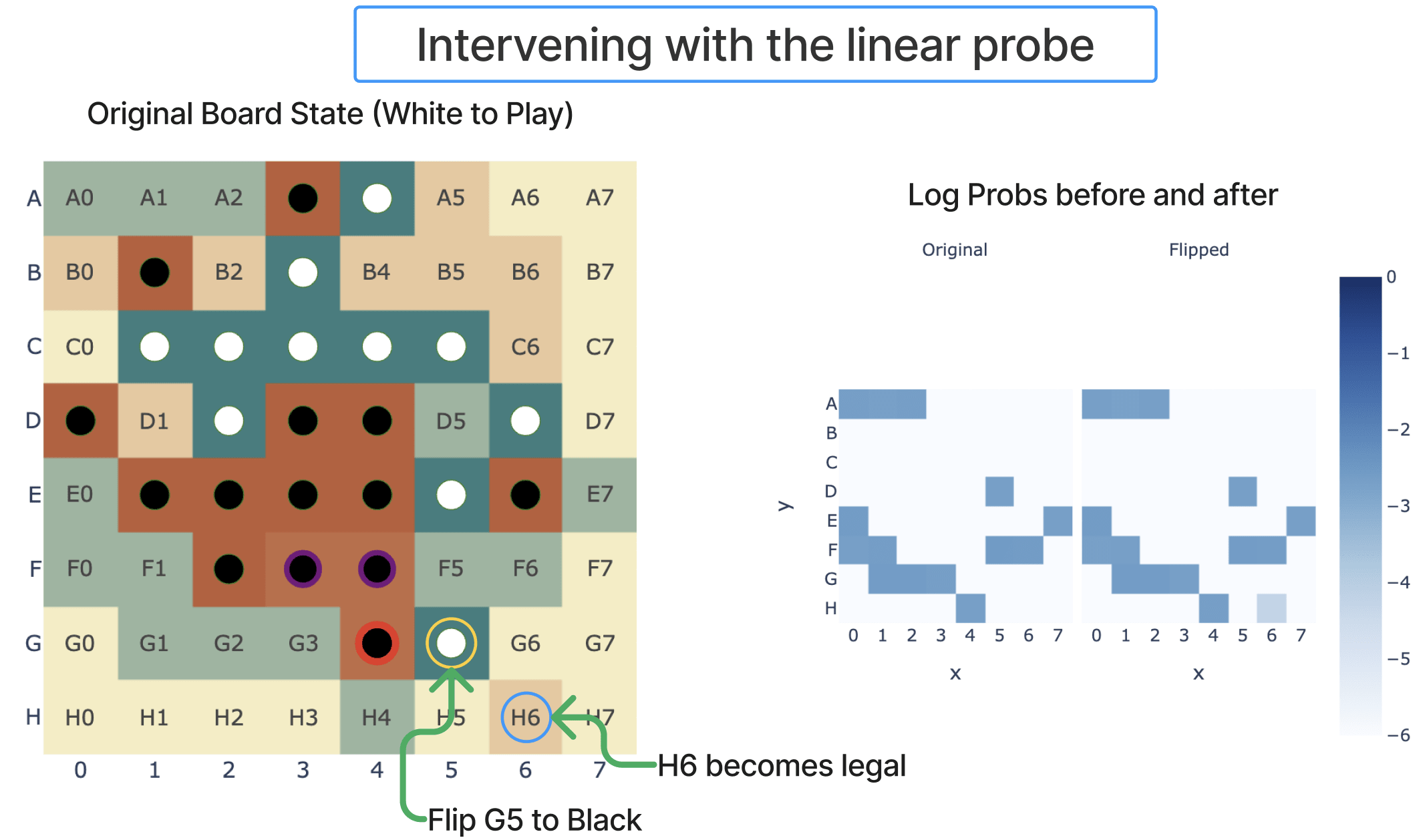

And we can use our probe to causally intervene on the model. The first thing I tried was just negating the coordinate in the direction given by the probe for a square (on the residual stream after layer 4, with no further intervention), and it just worked - see the figure below! Note that I consider this the weakest part of my investigation - on further attempts it needs some hyper-parameter fiddling and is imperfect, discussed later, and I've only looked at case studies rather than a systematic benchmark.

This project was an experiment in speed-running mech interp research, and I got all of the main results in this post over a weekend (~2.5 days/20 hours). I am very satisfied with the results of this experiment! I discuss some of my process-level takeaways, and try to outline the underlying research process in a pedagogical way - how I got started, how I got traction on the problem, and what the compelling intermediate results looked like.

I also found a lot of tantalising hints of deeper structure inside the model! For example, we can use this probe to interpret input and output weights of neurons, eg Neuron 1393 in Layer 5, which seems to represent (C0==blank) AND (D1==theirs) AND (E2==mine) (we convert the probe to two directions, blank - 0.5 * my - 0.5 * their, and my - their)

Or, if we look at the top 1% of dataset examples for some layer 4 neurons and look at the frequency by which a square is non-empty, many seem to activate when a specific square is empty! (But some neighbours are present)
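A minimal sketch of this max-activating-examples analysis, assuming you already have one neuron's activation per position and the matching board states. The function name, the array shapes and the synthetic stand-in data are assumptions for illustration; the fake neuron here is constructed to fire when square 10 is empty.

```python
import numpy as np

def nonempty_frequency_in_top_examples(neuron_acts, boards, top_fraction=0.01):
    """For the top-activating examples, how often is each square non-empty?

    neuron_acts: [n] activation of one neuron at each position
    boards: [n, 64] board states, 0 = empty, nonzero = occupied
    returns: [64] fraction of top examples in which each square is occupied
    """
    n_top = max(1, int(len(neuron_acts) * top_fraction))
    top_idx = np.argsort(neuron_acts)[-n_top:]
    return (boards[top_idx] != 0).mean(axis=0)

# Synthetic stand-in data: random boards, and a fake neuron that fires
# (plus a little noise) whenever a chosen square is empty.
rng = np.random.default_rng(0)
boards = rng.integers(0, 3, size=(10_000, 64))
target_square = 10
neuron_acts = (boards[:, target_square] == 0).astype(float)
neuron_acts += 0.01 * rng.normal(size=len(neuron_acts))

freq = nonempty_frequency_in_top_examples(neuron_acts, boards)
print(freq[target_square])  # the target square is empty in every top example
```

Reshaping `freq` to 8x8 gives the kind of per-square heatmap that makes an "activates when this square is empty" neuron stand out.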

I haven't looked hard into these, but I think there's a lot of exciting directions to better understand this model, that I outline in future work. An angle I'm particularly excited about here is moving beyond just studying "end-to-end" transformer circuits - existing work (eg indirect object identification or induction heads) tends to focus on a circuit that goes from the input tokens to the output logits, because it's much easier to interpret the inputs and outputs than any point in the middle! But our probe can act as a "checkpoint" in the middle - we understand what the probe's directions mean, and we can use this to find early circuits mapping the input moves to compute the world model given by the probe, and late circuits mapping the world model to the output logits!

More generally, the level of traction I've gotten suggests there's a lot of low hanging fruit here! I think this model could serve as an excellent laboratory to test other confusions and claims about models - it's simultaneously clean and algorithmic enough to be tractable, yet large and complex enough to be exciting and less toy. Can we find evidence of superposition? Can we find monosemantic neurons? Are all neurons monosemantic, or can we find and study polysemanticity and superposition in the wild? How do different neuron activations (GELU, SoLU, SwiGLU, etc) affect interpretability? More generally, what kinds of circuits can we find?!

Takeaways

How do models represent features?

My most important takeaway is that this gives moderate evidence for models, in practice, learning decomposable, linear representations! (And I am very glad that I don't need to throw away my frameworks for thinking about models.) Part of the purpose of writing such a long background section is to illustrate that this was genuinely in doubt! The fact that the original paper needed non-linear probes, yet could causally intervene via the probes, seemed to suggest a genuinely non-linear representation, and this could have gone either way. But I now know (and it may feel obvious in hindsight) that it was linear.

As further evidence that this was genuinely in doubt, I've since become aware of an independent discussion between Chris Olah and Martin Wattenberg (an author of the paper), where I gather that Chris pre-registered the prediction that the probe was doing computation on an underlying linear representation, while Martin thought the model learned a genuinely non-linear representation.

Models are complex and we aren't (yet!) very good at reverse-engineering them, which makes evidence for how best to think about them sparse and speculative. One of the best things we have to work with is toy models that are complex enough that we don't know in advance what gradient descent will learn, yet simple enough that we can in practice reverse-engineer them, and Othello-GPT formed an unexpectedly pure natural experiment!

Conceptual Takeaways

A further smattering of conceptual takeaways I have about mech interp from this work - these are fairly speculative, and are mostly just slight updates to beliefs I already held, but hopefully of interest!

An obvious caveat to all of the below is that this is preliminary work on a toy model, and generalising to language models is speculative - Othello is a far simpler environment than language/the real world, a far smaller state space, Othello-GPT is likely over-parametrised for good performance on this task while language models are always under-parametrised, and there's a ground truth solution to the task. I think extrapolation like this is better than nothing, but there are many disanalogies and it's easy to be overconfident!

- Mech interp for science of deep learning: A motivating belief for my grokking work is that mechanistic interpretability should be a valuable tool for the science of deep learning. If our claims about truly reverse-engineering models are true, then the mech interp toolkit should give grounded and true beliefs about models. So when we encounter mysterious behaviour in a model, mechanistic analysis should de-mystify it!

- I feel validated in this belief by the traction I got on grokking, and I feel further validated here!

- Mech interp == alien neuroscience: A pithy way to describe mech interp is as understanding the brain of an alien organism, but this feels surprisingly validated here! The model was alien and unintuitive, in that I needed to think in terms of my colour vs their colour, not black vs white, but once I'd found this new perspective it all became far clearer and more interpretable.

- Similar to how modular addition made way more sense when I started thinking in Fourier Transforms!

- Models can be deeply understood: More fundamentally, this is further evidence that neural networks are genuinely understandable and interpretable, if we can just learn to speak their language. And it makes me mildly more optimistic that narrow investigations into circuits can uncover the underlying principles that will make model internals make sense

- Further, it's evidence that as you start to really understand a model, mysteries start to dissolve, and it becomes far easier to control and edit - we went from needing to do gradient descent against a non-linear probe to just changing the coordinate along a single direction at a single activation.

- Probing is surprisingly legit: As noted, I'm skeptical by default about any attempt to understand model internals, especially without evidence from a mechanistically understood case study!

- Probing, on the face of it, seems like an exciting approach to understand what models really represent, but is rife with conceptual issues:

- Is the probe computing the feature, or is the model?

- Is the feature causally used/deliberately computed, or just an accident?

- Even if the feature does get deliberately computed and used, have we found where the feature is first computed, or did we find downstream features computed from it (and thus correlated with it)

- I was pleasantly surprised by how well linear probes worked here! I just did naive logistic regression (using AdamW to minimise cross-entropy loss) and none of these issues came up, even though eg some squares had pretty imbalanced class labels.

- In particular, even though it later turned out that the board state was fully computed by layer 4, and I trained my probe on layer 6, it still picked up on the correct features (allowing intervention at layer 4) - despite the board state being used by layers 5 and 6 to compute downstream features!

- Dropout => redundancy: Othello-GPT was, alas, trained with attention and residual dropout (because it was built on the MinGPT codebase, which was inspired by GPT-2, which used them). Similar to the backup name movers in GPT-2 Small, I found some suggestive evidence of redundancy built into the model - in particular, the final MLP layer seemed to contribute negatively to a particular logit, but would reduce this to compensate when I patched some model internal.

- Basic techniques just kinda worked?: The main tools I used in this investigation, activation patching, direct logit attribution and max activating dataset examples, basically just worked. I didn't probe hard enough to be confident they didn't mislead me at all, but they all seemed to give me genuinely useful data and hints about model internals.

- Residual models are ensembles of shallow paths: Further evidence that the residual stream is the central object of a transformer, and the meaningful paths of computation tend not to go through every layer, but heavily use the skip connections. This one is more speculative, but I often noticed that eg layer 3 and layer 4 did similar things, and layer 5 and layer 6 neurons did similar things. (Though I'm not confident there weren't subtle interactions, especially re dropout!)

- Can LLMs understand things?: A major source of excitement about the original Othello paper was that it showed a predict-the-next-token model spontaneously learning the underlying structure generating its data - the obvious inference is that a large language model, trained to predict the next token in natural language, may spontaneously learn to model the world. To the degree that you took the original paper as evidence for this, I think that my results strengthen the original paper's claims, including as evidence for this!

- My personal take is that LLMs obviously learn something more than just statistical correlations, and that this should be pretty obvious from interacting with them! (And finding actual inference-time algorithms like induction heads just reinforces this). But I'm not sure how much the paper is a meaningful update for what actually happens in practice.

- Literally the only thing Othello-GPT cares about is playing legal moves, and having a representation of the board is valuable for that, so it makes sense that it'd get a lot of investment (the probe directions alone take up 128 dimensions). But likely a bunch of dumb heuristics would be much cheaper and work OK for much worse performance - we see that the model trained to be good at Othello seems to have a much worse world model.

- Further, computing the board state is way harder than it seems at first glance! If I coded up an Othello bot, I'd have it compute the board state iteratively, updating after each move. But transformers are built to do parallel, not serial processing - they can't recurse! In just 5 blocks, it needs to simultaneously compute the board state at every position (I'm very curious how it does this!)

- And taking up 2 dimensions per square consumes 128 of the residual stream's 512 dimensions (ignoring any intermediate terms), a major investment!

- For an LLM, it seems clear that it can learn some kind of world model if it really wants to, and this paper demonstrates that principle convincingly. And it's plausible to me that for any task where a world model would help, a sufficiently large LLM will learn the relevant world model, to get that extra shred of recovered loss. But this is a fundamentally empirical question, and I'd love to see data studying real models!

- Note further that if an LLM does learn a world model, it's likely just one circuit among many and thus hard to reliably detect - I'm sure it'll be easy to generate gotchas where the LLM violates what that world model says, if only because the LLM wants to predict the next token, and it's easy to cue it to use another circuit. There's been some recent Twitter buzz about Bing Chat playing legal chess moves, and I'm personally pretty agnostic about whether it has a real model of a chess board - it seems hard to say either way (especially when models are using chain of thought for some basic recursion!).

- One of my hopes is that once we get good enough at mech interp, we'll be able to make confident statements about what's actually going on in situations like this!

Probing

Technical Setup

I use the synthetic model from their paper, and you can check out that and their codebase for the technical details. In brief, it's an 8 layer GPT-2 model, trained on a synthetic dataset of Othello games to predict the next move. The games are length 60, it receives the first 59 moves as input (ie [0:-1]) and it predicts the final 59 moves (ie [1:]). It's trained with attention dropout and residual dropout. The model has vocab size 61 - one for each square on the board (1 to 60), apart from the four center squares that are filled at the start and thus unplayable, plus a special token (0) for passing.
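The vocabulary and input/target slicing can be sketched as follows. The row-major ordering of playable squares, the letter-plus-0-indexed-column labels (chosen to match labels like C0 and E2 used in this post) and the placeholder game are assumptions for illustration; check the codebase for the exact mapping.

```python
ROWS = "ABCDEFGH"
CENTRE = {27, 28, 35, 36}  # row-major indices of the four starting squares on an 8x8 board

# Token 0 is "pass"; tokens 1..60 are assumed to map to playable squares in row-major order.
playable = [i for i in range(64) if i not in CENTRE]
token_to_square = {tok: sq for tok, sq in enumerate(playable, start=1)}

def square_label(sq):
    """Label such as 'C0' or 'E2': row letter plus 0-indexed column (an assumed convention)."""
    return f"{ROWS[sq // 8]}{sq % 8}"

vocab_size = 1 + len(token_to_square)  # pass token plus 60 playable squares

# Placeholder 60-token sequence (not a legal Othello game), just to show the slicing.
game = list(range(1, 61))
inputs, targets = game[:-1], game[1:]  # first 59 moves in, final 59 moves out

print(vocab_size, len(inputs), len(targets))  # 61 59 59
```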

I trained my probe on four million synthetic games (though way fewer would suffice); you can see the training code in tl_probing_v1.py in my repo. I trained a separate probe on even, odd and all moves. I only trained my probe on moves [5:-5] because the model seemed to do weirder things on early or late moves (eg the residual stream on the first move has ~20x the norm of every other one!) and I didn't want to deal with that. I trained them to minimise the cross-entropy loss for predicting empty, black and white, and used AdamW with lr=1e-4, weight_decay=1e-2, eps=1e-8, betas=(0.9, 0.99). I trained the probe on the residual stream after layer 6 (ie get_act_name("resid_post", 6) in TransformerLens notation). In hindsight, I should have trained on layer 4, which is the point where the board state is fully computed and starts to really be used. Note that I believe the original paper trained on the full game (including early and late moves), so my task is somewhat easier than theirs.

For each square, each probe has 3 directions, one each for blank, black and white. I convert these to two directions: a "my" direction, my_probe = black_dir - white_dir (for black to play), and a "blank" direction, blank_probe = blank_dir - 0.5 * black_dir - 0.5 * white_dir. The blank direction isn't that principled, but it seemed to work fine. You can throw away the third dimension, since softmax is translation invariant. I then normalise them to be unit vectors (since the norm doesn't matter - it just affects confidence in the probe's logits, which affects loss but not accuracy). I just did this for the black to play probe, and used these as my meaningful directions (this was somewhat hacky, but worked!)
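Here is a minimal sketch of that conversion for a single square, using random stand-in vectors in place of trained probe weights. The helper names `unit` and `probe_to_directions` are hypothetical; the two formulas are the ones given above. The last few lines check the translation-invariance point: adding the same vector to all three class directions leaves both derived directions unchanged.

```python
import numpy as np

def unit(v):
    """Scale a vector to unit norm."""
    return v / np.linalg.norm(v)

def probe_to_directions(blank_dir, black_dir, white_dir):
    """Turn three class directions for one square into unit 'my' and 'blank' directions."""
    my_probe = unit(black_dir - white_dir)
    blank_probe = unit(blank_dir - 0.5 * black_dir - 0.5 * white_dir)
    return my_probe, blank_probe

rng = np.random.default_rng(0)
blank_dir, black_dir, white_dir = rng.normal(size=(3, 512))  # stand-ins for probe weights
my_probe, blank_probe = probe_to_directions(blank_dir, black_dir, white_dir)

# Softmax depends only on differences between logits, so a common shift of all
# three directions changes nothing; the derived directions are identical.
shift = rng.normal(size=512)
my_shifted, blank_shifted = probe_to_directions(
    blank_dir + shift, black_dir + shift, white_dir + shift
)
print(np.allclose(my_probe, my_shifted), np.allclose(blank_probe, blank_shifted))
```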

Results

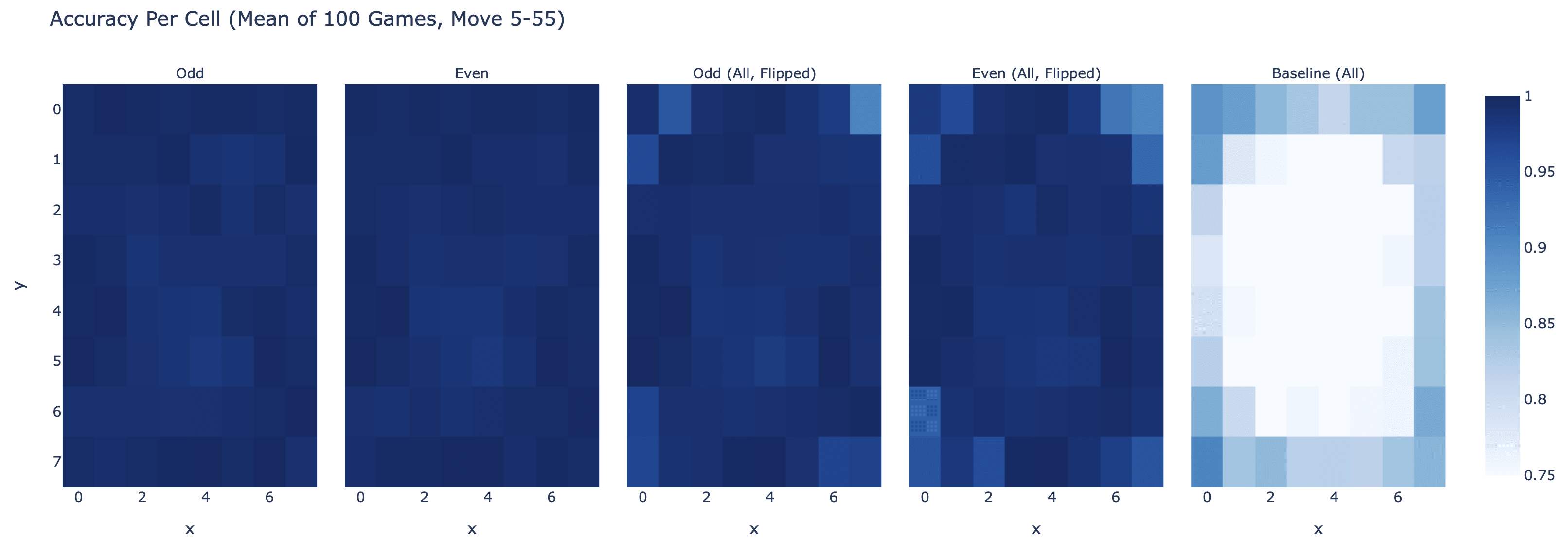

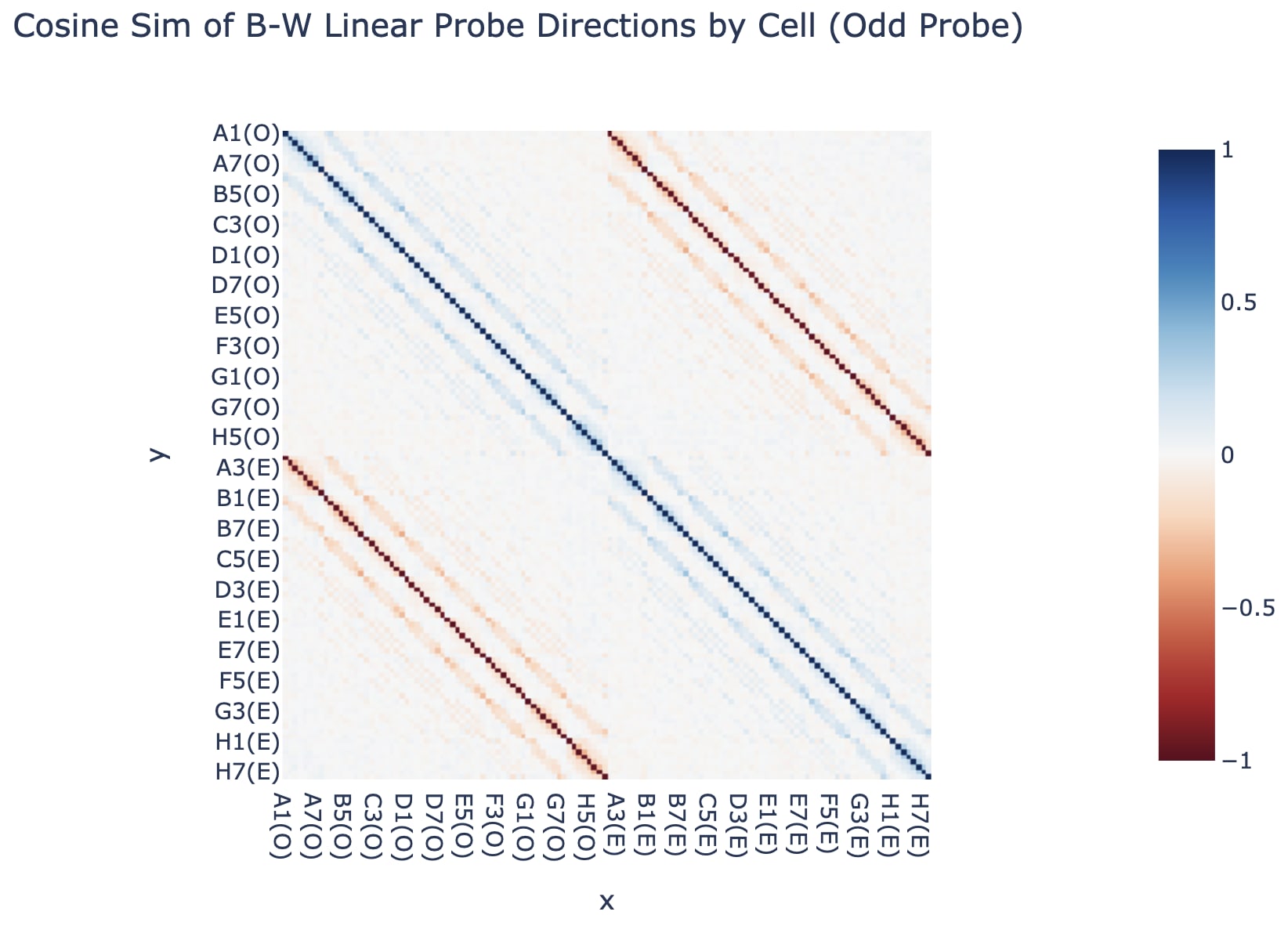

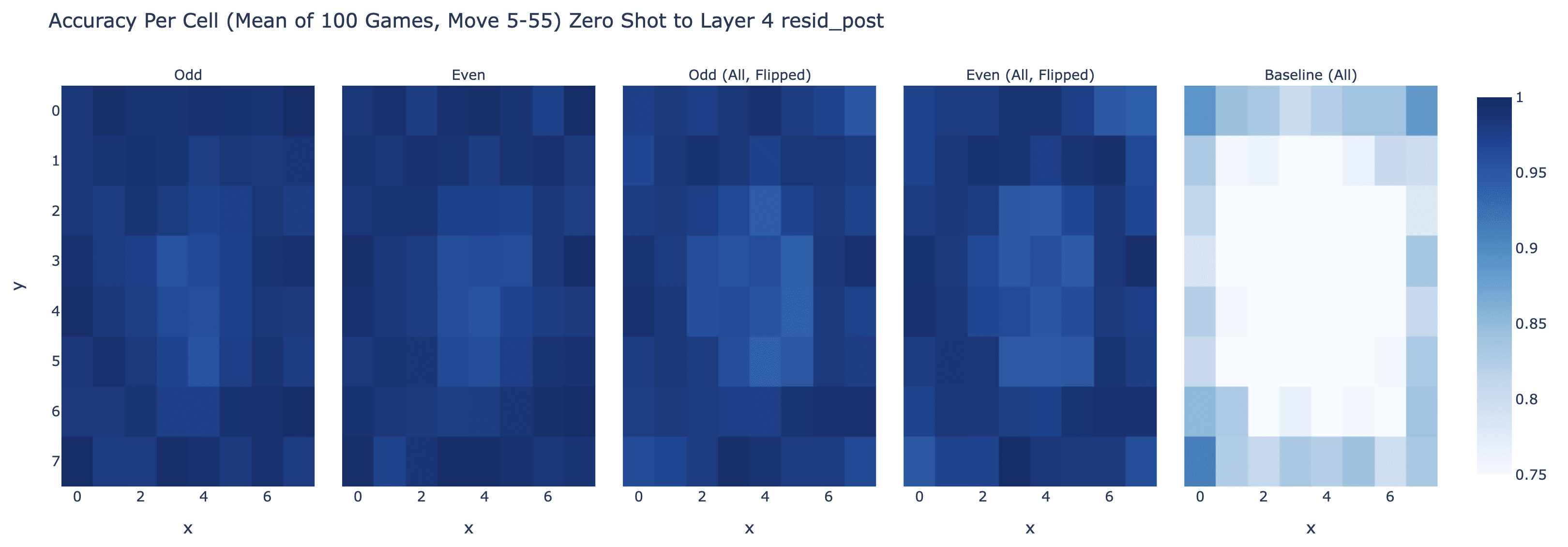

The probe works pretty great for layer 6! And odd (black to play) transfers fairly well zero shot to even (white to play) by just swapping what mine and theirs mean (with worse accuracy on the corners). (This is the accuracy taken over 100 games, so 5000 moves, only scored on the middle band of moves)

Further, if you flip either probe, it transfers well to the other side's moves, and the odd and even probes are nearly negations of each other. We convert a probe to a direction by taking the difference between the black direction and white direction. (In hindsight, it'd have been cleaner to train a single probe on all moves, flipping the labels for black to play vs white to play)
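The label flipping suggested in that hindsight note could look something like the sketch below, which relabels a board from empty/black/white to empty/mine/theirs for whoever is about to move. The integer encoding and the function name are assumptions for illustration, not what the training code does.

```python
EMPTY, BLACK, WHITE = 0, 1, 2  # assumed colour-based encoding
MINE, THEIRS = 1, 2            # assumed mover-relative encoding (EMPTY stays 0)

def to_mover_relative(board, black_to_play):
    """Map empty/black/white labels to empty/mine/theirs for the player about to move."""
    if black_to_play:
        return list(board)  # black is already "mine", white is "theirs"
    swap = {EMPTY: EMPTY, BLACK: THEIRS, WHITE: MINE}
    return [swap[square] for square in board]

board = [EMPTY, BLACK, WHITE, BLACK]
print(to_mover_relative(board, black_to_play=True))   # [0, 1, 2, 1]
print(to_mover_relative(board, black_to_play=False))  # [0, 2, 1, 2]
```

With labels in this form, a single linear probe can be trained on every move, since the target no longer flips sign with the player.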

It actually transfers zero-shot to other layers - it's pretty great at layer 4 too (but isn't as good at layer 3 or layer 7):

Intervening

My intervention results are mostly a series of case studies, and I think are less compelling and rigorous than the rest, but are strong enough that I buy them! (I couldn't come up with a principled way of evaluating this at scale, and I didn't have much time left). The following aren't cherry picked - they're just the first few things I tried, and all of them kinda worked!

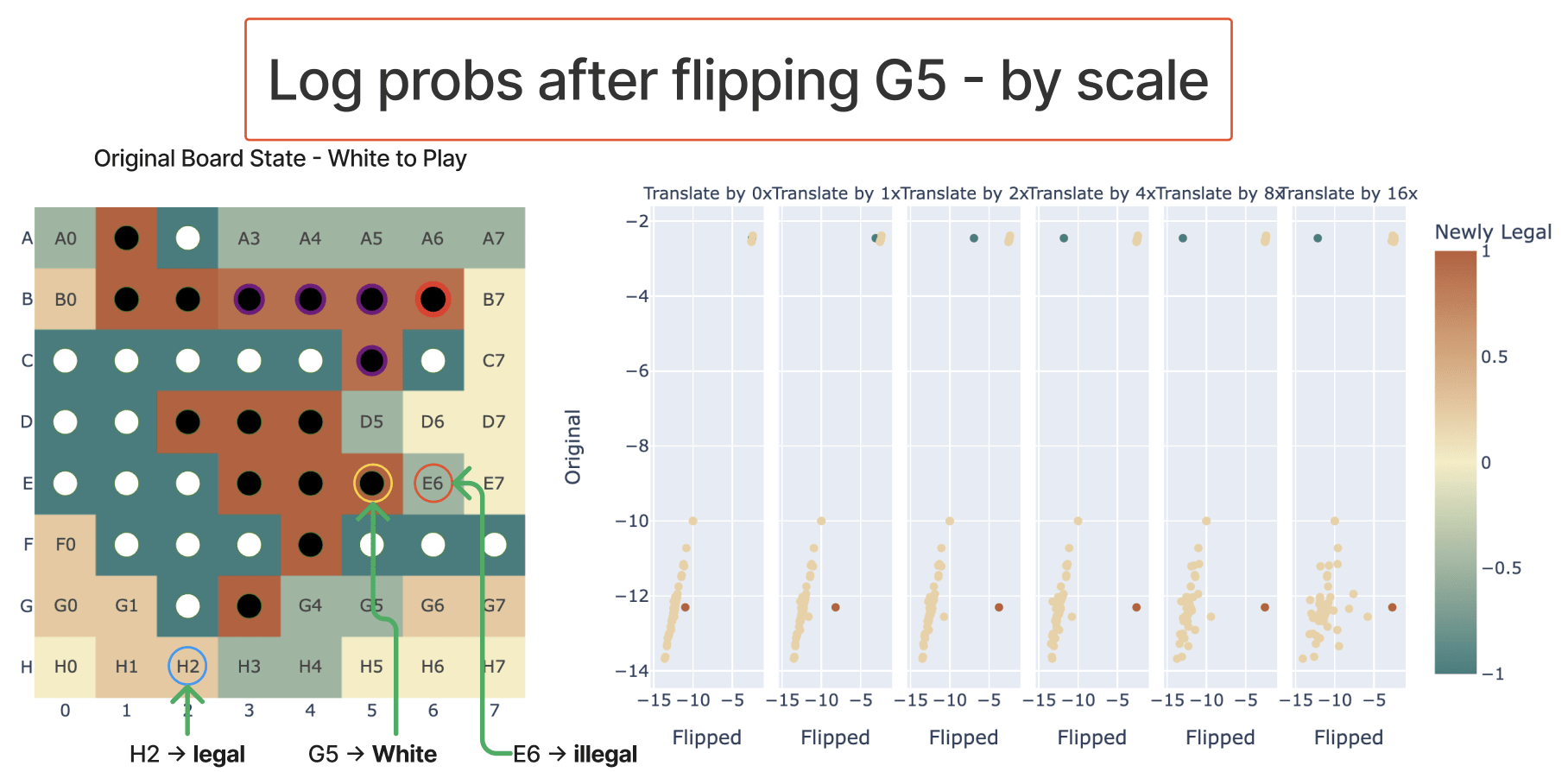

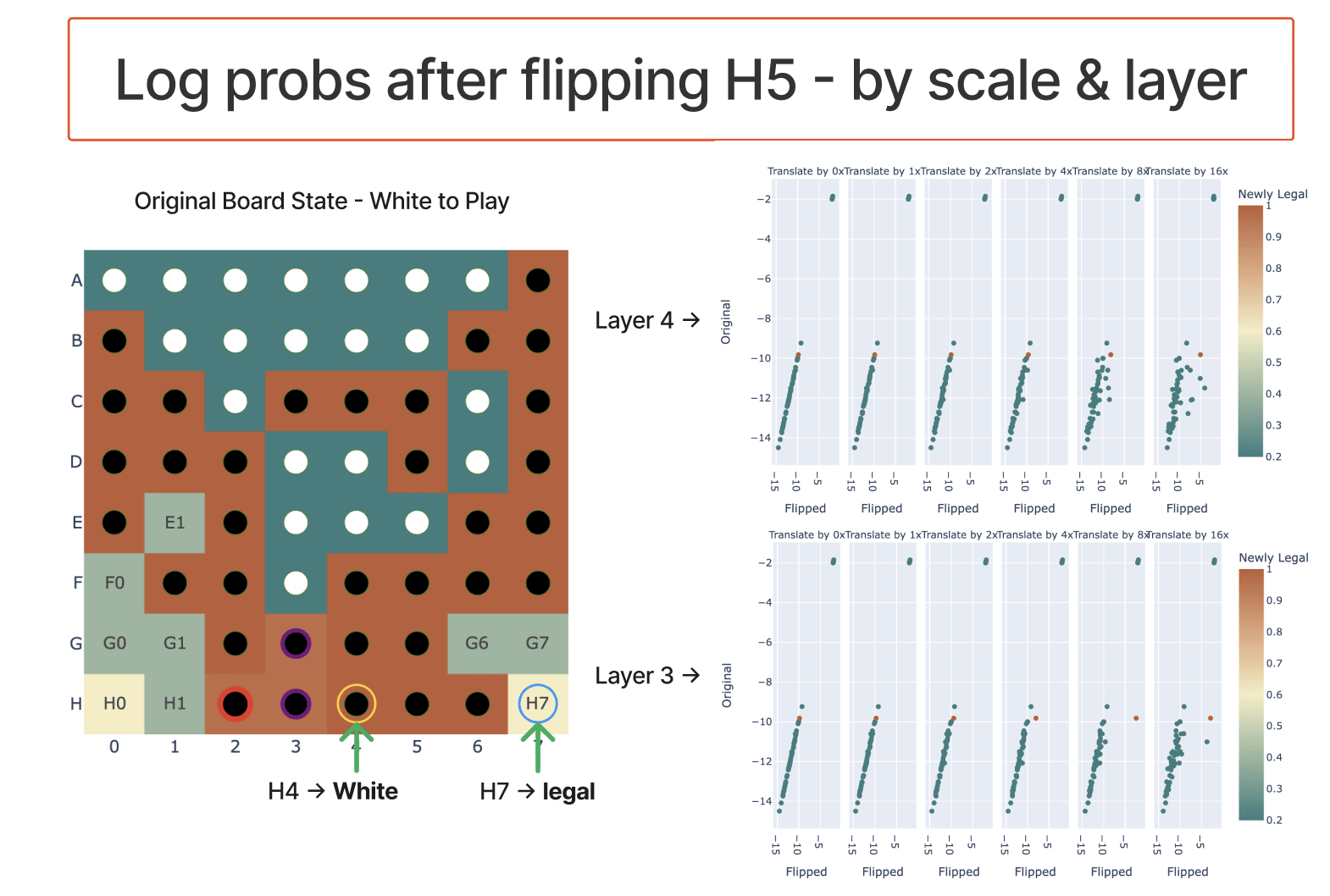

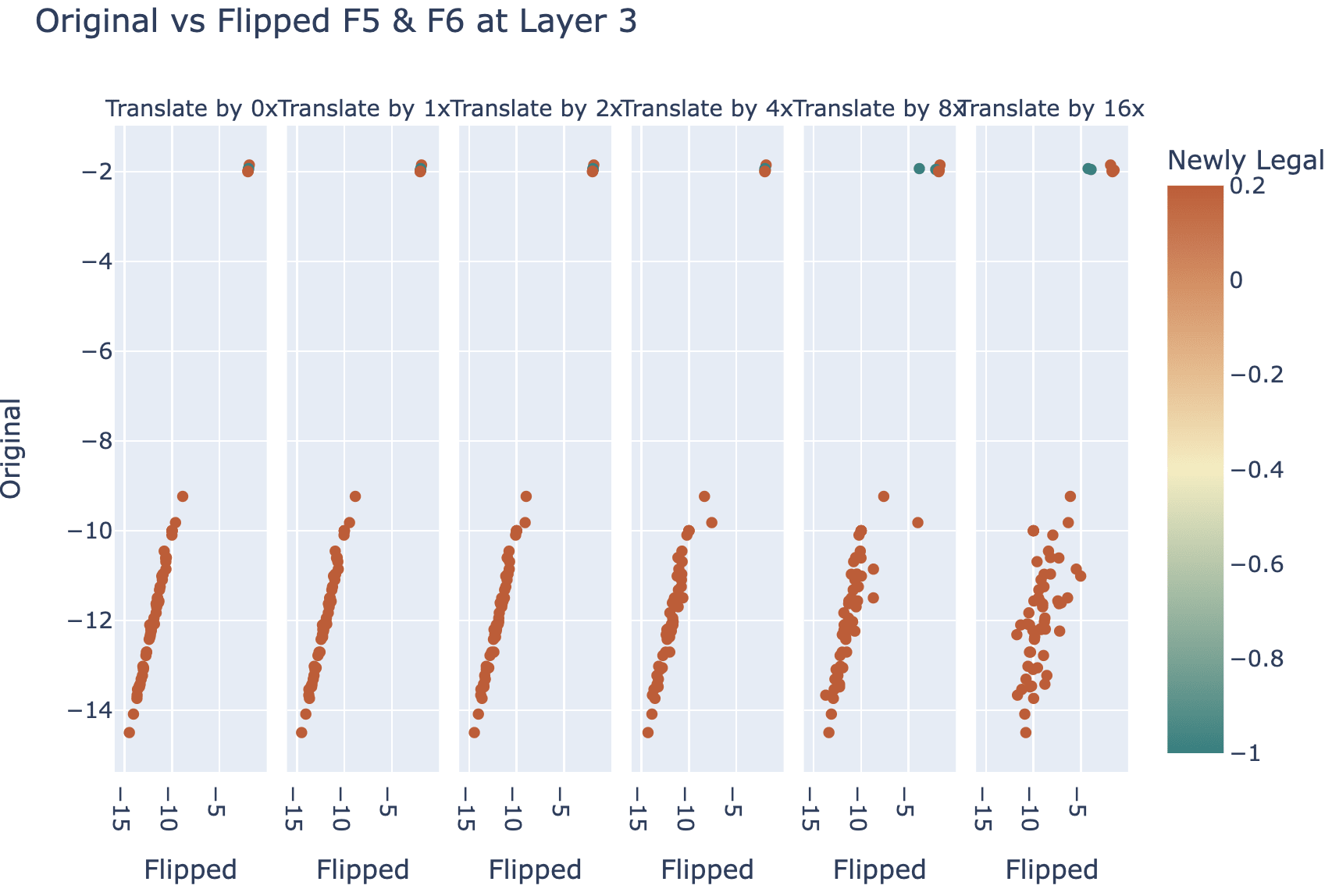

To intervene, I took the model's residual stream after layer 4 (or layer 3), took the coordinate when projecting onto my_probe, and negated that and multiplied by the hyper-parameter scale (which varied from 0 to 16).
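
The edit described above amounts to changing one coordinate of the residual stream and leaving everything orthogonal to the probe direction alone. Here is a minimal NumPy sketch under the assumption that the coordinate is set to -scale times its original value; the function name and the random stand-in tensors are hypothetical. In a real run this would be applied to the model's activations during the forward pass.

```python
import numpy as np

def flip_along_direction(resid, direction, scale=1.0):
    """Set resid's coordinate along `direction` to -scale times its old value."""
    unit = direction / np.linalg.norm(direction)
    coord = resid @ unit  # current coordinate, one per position
    # Removing (1 + scale) * coord leaves a coordinate of -scale * coord.
    return resid - (1.0 + scale) * np.outer(coord, unit)

rng = np.random.default_rng(0)
d_model = 512                             # assumed width
resid = rng.normal(size=(10, d_model))    # stand-in residual stream, 10 positions
my_dir = rng.normal(size=d_model)         # stand-in for one square's my_probe direction

edited = flip_along_direction(resid, my_dir, scale=2.0)
```

With scale=1 this is a plain negation of the coordinate, and with scale=0 it simply projects the direction out.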

My first experiment had layer 4 and scale 1 (ie just negating) and worked pretty well:

Subsequent experiments showed that the scale parameter mattered a fair bit - I speculate that if I instead looked at the absolute coefficient of the coordinate it'd work better.

On the first case where it didn't really work, I got good results by intervening at layer 3 instead - evidence that model processing isn't perfectly divided by layer, but somewhat spreads across adjacent layers when it can get away with it.

It seems to somewhat work for multiple edits - if I flip F5 and F6 in the above game to make G6 illegal, it kinda realises this, though it's a weaker effect and is jankier and more fragile:

Note that my edits do not perfectly recover performance - the newly legal logits tend to not be quite as large as the originally legal logits. To me this doesn't feel like a big deal; here are some takes on why this is fine:

- I really haven't tried to improve edit performance, and expect there's low hanging fruit to be had. Eg, I train the probe on layer 6 rather than layer 4, and I train on black and white moves separately rather than on both at once. And I am purely scaling the existing coordinate in this direction, rather than looking at its absolute value.

- Log probs cluster strongly on an unedited game - correct log probs are near exactly the same (around -2 for these games - uniform probability), incorrect log probs tend to be around -11. So even if I get from -11 to -4, that's a major impact

- I expect parallel model computation to be split across layers - in theory the model could have mostly computed board state by layer 3, use that partial result in layer 4 and finish computing it in layer 4, and use the full result later. If so, then we can't expect to get a perfect model edit.

- A final reason is that this model was trained with dropout, which makes everything (especially anything to do with model editing) messy. The model has built in redundancy, and likely doesn't have exactly one dimension per feature. (This makes anything to do with patching or editing a bit suspect and unpredictable, unfortunately)
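
To put the log prob figures from the list above on a more familiar scale, here is the arithmetic written out, assuming natural logarithms. The -2, -11 and -4 values are the illustrative ones from the text, not new measurements.

```python
import math

legal_log_prob = -2.0     # typical log prob of a legal move in these games
illegal_log_prob = -11.0  # typical log prob of an illegal move
edited_log_prob = -4.0    # illustrative log prob of a newly legal move after an edit

# A uniform distribution with log prob -2 per move spreads over about e^2 moves.
implied_legal_moves = math.exp(-legal_log_prob)
# How much more probable the move became because of the edit.
gain = math.exp(edited_log_prob - illegal_log_prob)
# How far it still falls short of an originally legal move.
shortfall = math.exp(legal_log_prob - edited_log_prob)

print(f"{implied_legal_moves:.1f} legal moves, gain x{gain:.0f}, shortfall x{shortfall:.1f}")
```

So moving from -11 to -4 multiplies the move's probability by roughly a thousand, while leaving it about 7x less likely than an originally legal move.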

Citation Info

Please cite this work as:

```
@misc{nanda2023emergent,
      title={Emergent Linear Representations in World Models of Self-Supervised Sequence Models},
      author={Neel Nanda and Andrew Lee and Martin Wattenberg},
      year={2023},
      eprint={2309.00941},
      archivePrefix={arXiv},
      primaryClass={cs.LG}
}
```

*See [post 2 here](https://www.alignmentforum.org/posts/qgK7smTvJ4DB8rZ6h/othello-gpt-future-work-i-am-excited-about)*

26 comments

Comments sorted by top scores.

comment by TurnTrout · 2023-04-19T02:54:10.340Z · LW(p) · GW(p)

Overall, I really like this post. I think it's a cool, self-contained insight with real updates for interp. I also admire how quickly you got these results. It makes me want to hack more things, quickly, and get more cool results, quickly.

Models can be deeply understood: More fundamentally, this is further evidence that neural networks are genuinely understandable and interpretable, if we can just learn to speak their language.

I agree that this is evidence, but I have some sense of "there's going to be low-hanging, truly-understandable circuits, and possibly a bunch of circuits we don't understand and can't even realize are there. And we keep doing interp work and understanding more and more of models, but often we won't know exactly what we don't know." Are you sympathetic to this concern?

(Ofc you don't need to understand a full net for interp to be amazingly useful, and other such caveats)

Also, what does "Translate by X" mean in your intervention plots?

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-04-19T20:54:29.466Z · LW(p) · GW(p)

Thanks! I also feel more optimistic now about speed research :) (I've tried similar experiments since, but with much less success - there's a bunch of contingent factors around not properly hitting flow and not properly clearing time for it though). I'd be excited to hear what happens if you try it! Though I should clarify that writing up the results took a month of random spare non-work time...

Re models can be deeply understood, yes, I think you raise a valid and plausible concern and I agree that my work is not notable evidence against. Though also, idk man, it seems basically unfalsifiable. My intuition is that there may be some threshold of "we cannot deeply interpret past this", but no one knows where it is (and most people assumed "we cannot deeply interpret at all"! Or something similar). And that every interpretability win is evidence that boundary is further on (or non-existent).

Fuzzy intuition: It doesn't distinguish between the boundary being far away vs non-existent, but IMO the correct prior before seeing mech interp work at all was to have some distribution over the point where we hit a wall, and some probability on never hitting a wall. The longer we go without hitting a wall, the higher the posterior probability on never hitting a wall should be.

Translate by X is bad notation - it means "take the coordinate in the 'mine vs theirs' direction, and set it to -X times its original value". It should really be "flip and scale by X" or something (it came from an initial iteration of the method).

comment by TurnTrout · 2023-04-15T17:56:46.861Z · LW(p) · GW(p)

Rather than just learning surface level statistics about the distribution of moves, it learned to model the underlying process that generated that data. In my opinion, it's already pretty obvious that transformers can do something more than statistical correlations and pattern matching, see eg induction heads, but it's great to have clearer evidence of fully-fledged world models!

This updated me slightly upwards on "LLMs trained on text learn to model the underlying world, without needing multimodal inputs to pin down more of the world's e.g. spatial properties." I previously had considered that any given corpus could have been generated by a large number of possible worlds, but I now don't weight this objection as highly.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-04-15T18:19:30.606Z · LW(p) · GW(p)

I previously had considered that any given corpus could have been generated by a large number of possible worlds, but I now don't weight this objection as highly.

Interesting, I hadn't seen that objection before! Can you say more? (Though maybe not if you aren't as convinced by it any more). To me, it'd be that there's many worlds but they all share some commonalities and those commonalities are modelled. Or possibly that the model separately simulates the different worlds.

Replies from: TurnTrout↑ comment by TurnTrout · 2023-04-17T22:59:05.044Z · LW(p) · GW(p)

So, first, there's an issue where the model isn't "remembering" having "seen" all of the text. It was updated by gradients taken over its outputs on the historical corpus. So there's a subtlety, such that "which worlds are consistent with observations" is a wrongly-shaped claim. (I don't think you fell prey to that mistake in OP, to be clear.)

Second, on my loose understanding of metaphysics (ie this is reasoning which could very easily be misguided), there exist computable universes which contain entities training this language model given this corpus / set of historical signals, such that this entire setup is specified by the initial state of the laws of physics. In that case, the corpus and its regularities ("dogs" and "syntax" and such) wouldn't necessarily reflect the world the agent was embedded in, which could be anything, really. Like maybe there's an alien species on a gas giant somewhere which is training on fictional sequences of tokens, some of which happen to look like "dog".

Of course, by point (1), what matters isn't the corpus itself (ie what sentences appear) but how that corpus imprints itself into the network via the gradients. And your post seems like evidence that even a relatively underspecified corpus (sequences of legal Othello moves) appears to imprint itself into the network, such that the network has a world model of the data generator (i.e. how the game works in real life).

Does this make sense? I have some sense of having communicated poorly here, but hopefully this is better than leaving your comment unanswered.

comment by Circuitrinos · 2023-09-15T21:19:24.010Z · LW(p) · GW(p)

Regarding this quote "we see that the model trained to be good at Othello seems to have a much worse world model"

What if, for LLMs trained to play games like Othello, chess, go, etc., instead of directly training models to play the best moves, we first train them to play legal moves, like in this paper, so that they construct a good world model?

Then once it has a world model, we "freeze" those weights and add on additional layers and train just those layers to play the game well.

Wouldn't this force the play-well model to include the good world model? (a model we can probe/understand).

Wouldn't that also force the play-well layers of the model to learn something much easier to probe and understand?

From there, we could potentially probe the play-well layers to learn something about what the optimal strategy of the game actually is.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-09-16T08:54:39.547Z · LW(p) · GW(p)

That might work, though you could easily end up with the final model not actually faithfully using its world model to make the correct moves - if there's more efficient/correct heuristics, there's no guarantee it'll use the expensive world model, or not just forget about it.

Replies from: gwern↑ comment by gwern · 2023-09-16T17:03:53.506Z · LW(p) · GW(p)

I would expect it to not work in the limit. All the models must converge on the same optimal solution for a deterministic perfect-information game like Othello and become value-equivalent, ignoring the full board state which is irrelevant to reward-maximizing. (You don't need to model edge-cases or weird scenarios which don't ever come up while pursuing the optimal policy, and the optimal 'world-model' can be arbitrarily tinier and unfaithful to the full true world dynamics.*) Simply hardwiring a world model doesn't change this, any more than feeding in the exact board state as an input would lead to it caring about or paying attention to the irrelevant parts of the board state. As far as the RL agent is concerned, knowledge of irrelevant board state is a wasteful bug to be worked around or eliminated, no matter where this knowledge comes from or is injected.

* I'm sure Nanda knows this but for those whom this isn't obvious or haven't seen other discussions on this point (some related to the 'simulators' debate): a DRL agent only wants to maximize reward, and only wants to model the world to the extent that maximizes reward. For a complicated world or incomplete maximization, this may induce a very rich world-model inside the agent, but the final converged optimal agent may have an arbitrarily impoverished world model. In this case, imagine a version of Othello where at the first turn, the agent may press a button labeled 'win'. Obviously, the optimal agent will learn nothing at all beyond learning 'push the button on the first move' and won't learn any world-model at all of Othello! No matter how rich and fascinating the rest of the game may be, the optimal agent neither knows nor cares.

Replies from: TurnTrout↑ comment by TurnTrout · 2023-09-17T03:00:06.214Z · LW(p) · GW(p)

All the models must converge on the same optimal solution for a deterministic perfect-information game like Othello and become value-equivalent, ignoring the full board state which is irrelevant to reward-maximizing.

Strong claim! I'm skeptical (EDIT: if you mean "in the limit" to apply to practically relevant systems we build in the future. If so,) do you have a citation for DRL convergence results relative to this level of expressivity, and reasoning for why realistic early stopping in practice doesn't matter? (Also, of course, even one single optimal policy can be represented by multiple different network parameterizations which induce the same semantics, with eg some using the WM and some using heuristics.)

I think the more relevant question is "given a frozen initial network, what are the circuit-level inductive biases of the training process?". I doubt one can answer this via appeals to RL convergence results.

(I skimmed through the value equivalence paper, but LMK if my points are addressed therein.)

a DRL agent only wants to maximize reward, and only wants to model the world to the extent that maximizes reward.

As a side note, I think this "agent only wants to maximize reward" language is unproductive (see "Reward is not the optimization target", and "Think carefully before calling RL policies 'agents'"). In this case, I suspect that your language implicitly equivocates between "agent" denoting "the RL learning process" and "the trained policy network":

As far as the RL agent is concerned, knowledge of irrelevant board state is a wasteful bug to be worked around or eliminated, no matter where this knowledge comes from or is injected.

Replies from: gwern↑ comment by gwern · 2023-09-18T15:27:05.506Z · LW(p) · GW(p)

if you mean "in the limit" to apply to practically relevant systems we build in the future.

Outside of simple problems like Othello, I expect most DRL agents will not converge fully to the peak of the 'spinning top', and so will retain traces of their informative priors like world-models.

For example, if you plug GPT-5 into a robot, I doubt it would ever be trained to the point of discarding most of its non-value-relevant world-model - the model is too high-capacity for major forgetting, and past meta-learning incentivizes keeping capabilities around just in case.

But that's not 'every system we build in the future', just a lot of them. Not hard to imagine realistic practical scenarios where that doesn't hold - I would expect that any specialized model distilled from it (for cheaper faster robotic control) would not learn or would discard much more of its non-value-relevant world-model compared to its parent, and that would have potential safety & interpretability implications. The System II distills and compiles down to a fast efficient System I. (For example, if you were trying to do safety by dissecting its internal understanding of the world, or if you were trying to hack a superior reward model, adding in safety criteria not present in the original environment/model, by exploiting an internal world model, you might fail because the optimized distilled model doesn't have those parts of the world model, even if the parent model did, as they were irrelevant.) Chess end-game databases are provably optimal & very superhuman, and yet, there is no 'world-model' or human-interpretable concepts of chess anywhere to be found in them; the 'world-model' used to compute them, whatever that was, was discarded as unnecessary after the optimal policy was reached.

I think the more relevant question is "given a frozen initial network, what are the circuit-level inductive biases of the training process?". I doubt one can answer this via appeals to RL convergence results.

Probably not, but mostly because you phrased it as inductive biases to be washed away in the limit, or using gimmicks like early stopping. (It's not like stopping forgetting is hard. Of course you can stop forgetting by changing the problem to be solved, and simply making a representation of the world-state part of the reward, like including a reconstruction loss.) In this case, however, Othello is simple enough that the superior agent has already apparently discarded much of the world-model and provides a useful example of what end-to-end reward maximization really means - while reward is sufficient to learn world-models as needed, full complete world-models are neither necessary nor sufficient for rewards.

As a side note, I think this "agent only wants to maximize reward" language is unproductive (see "Reward is not the optimization target", and "Think carefully before calling RL policies 'agents'").

I've tried to read those before, and came away very confused what you meant, and everyone who reads those seems to be even more confused after reading them. At best, you seem to be making a bizarre mishmash of confusing model-free and policies and other things best not confused and being awestruck by a triviality on the level of 'organisms are adaptation-executers and not fitness-maximizers', and at worst, you are obviously wrong: reward is the optimization target, both for the outer loop and for the inner loop of things like model-based algorithms. (In what sense does, say, a tree search algorithm like MCTS or full-blown backwards induction not 'optimize the reward'?)

Replies from: TurnTrout↑ comment by TurnTrout · 2023-09-18T16:52:30.305Z · LW(p) · GW(p)

Probably not, but mostly because you phrased it as inductive biases to be washed away in the limit, or using gimmicks like early stopping.

LLMs aren't trained to convergence because that's not compute-efficient, so early stopping seems like the relevant baseline. No?

everyone who reads those seems to be even more confused after reading them

I want to defend "Reward is not the optimization target" a bit, while also mourning its apparent lack of clarity. The above is a valid impression, but I don't think it's true. For some reason, some people really get a lot out of the post; others think it's trivial; others think it's obviously wrong, and so on. See Rohin's comment:

(Just wanted to echo that I agree with TurnTrout that I find myself explaining the point that reward may not be the optimization target a lot, and I think I disagree somewhat with Ajeya's recent post for similar reasons. I don't think that the people I'm explaining it to literally don't understand the point at all; I think it mostly hasn't propagated into some parts of their other reasoning about alignment. I'm less on board with the "it's incorrect to call reward a base objective" point but I think it's pretty plausible that once I actually understand what TurnTrout is saying there I'll agree with it.)

You write:

In what sense does, say, a tree search algorithm like MCTS or full-blown backwards induction not 'optimize the reward'?

These algorithms do optimize the reward. My post addresses the model-free policy gradient setting... [goes to check post] Oh no. I can see why my post was unclear -- it didn't state this clearly. The original post does state that AIXI optimizes its reward, and also that:

For point 2 (reward provides local updates to the agent's cognition via credit assignment; reward is not best understood as specifying our preferences), the choice of RL algorithm should not matter, as long as it uses reward to compute local updates.

However, I should have stated up-front: This post addresses model-free policy gradient algorithms like PPO and REINFORCE.

I don't know what other disagreements or confusions you have. In the interest of not spilling bytes by talking past you -- I'm happy to answer more specific questions.

comment by TurnTrout · 2023-04-18T15:38:07.863Z · LW(p) · GW(p)

Not a huge deal for the overall post, but I think your statement here isn't actually known to be strictly true:

Literally the only thing Othello-GPT cares about is playing legal moves

I think it's probably true in some rough sense, but I personally wouldn't state it confidently like that. Even if the network is supervised-trained to predict legal moves, that doesn't mean its internal goals or generalization mirrors that.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-04-19T20:48:09.401Z · LW(p) · GW(p)

Er, hmm. To me this feels like a pretty uncontroversial claim when discussing a small model on an algorithmic task like this. (Note that the model is literally trained on uniform random legal moves, it's not trained on actual Othello game transcripts). Though I would agree that eg "literally all that GPT-4 cares about is predicting the next token" is a dubious claim (even ignoring RLHF). It just seems like Othello-GPT is so small, and trained on such a clean and crisp task that I can't see it caring about anything else? Though the word care isn't really well defined here.

I'm open to the argument that I should say "Adam only cares about playing legal moves, and probably this is the only thing Othello-GPT is "trying" to do".

To be clear, the relevant argument is "there are no other tasks to spend resources on apart from "predict the next move" so it can afford a very expensive world model"

Replies from: TurnTrout↑ comment by TurnTrout · 2023-04-25T01:11:22.800Z · LW(p) · GW(p)

I'm open to the argument that I should say "Adam only cares about playing legal moves, and probably this is the only thing Othello-GPT is "trying" to do".

This statement seems fine, yeah!

(Rereading my initial comment, I regret that it has a confrontational tone where I didn't intend one. I wanted to matter-of-factly state my concern, but I think I should have prefaced with something like "by the way, not a huge deal overall, but I think your statement here isn't known to be strictly true." Edited.)

comment by lukaemon · 2024-08-07T15:57:04.042Z · LW(p) · GW(p)

In hindsight, I should have trained on layer 6, which is the point where the board state is fully computed and starts to really be used.

You mean layer 4?

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2024-08-07T21:29:25.390Z · LW(p) · GW(p)

Ah, yep, typo

comment by Awesome_Ruler_007 (neel-g) · 2023-09-18T13:55:55.697Z · LW(p) · GW(p)

we see that the model trained to be good at Othello seems to have a much worse world model.

That seems at odds with what optimization theory dictates - in the limit of compute (or even data) the representations should converge to the optimal ones. Instrumental convergence too. I don't get why any model trained on Othello-related tasks wouldn't converge to such a (useful) representation.

IMHO this point is a bit overlooked. Perhaps it might be worth investigating why simply playing Othello isn't enough? Has it to do with randomly initialized priors? I feel this could be very important, especially from a Mech Inter viewpoint - you could have different (maybe incomplete) heuristics or representations yielding the same loss. Kinda reminds me of the EAI paper which hinted that different learning rates (often) achieve the same loss but converge on different attention patterns and representations.

Perhaps there's some variable here that we're not considering/evaluating closely enough...

comment by ws27a (martin-kristiansen-1) · 2023-03-31T13:53:10.798Z · LW(p) · GW(p)

Nice work. But I wonder why people are so surprised that these models and GPT would learn a model of the world. Of course they learn a model of the world. Even the skip-gram and CBOW word vectors people trained ages ago modelled the world, in the sense that for example named entities in vector space would be highly correlated with actual spatial/geographical maps. It should be 100% assumed that these models which have many orders of magnitude more parameters are learning much more sophisticated models of the world. What that tells us about their "intelligence" is an entirely different question whatsoever. They are still statistical next token predictors, it's just the statistics are so complicated it essentially becomes a world model. The divide between these concepts is artificial.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-03-31T16:44:21.367Z · LW(p) · GW(p)

I tried to be explicit in the post that I don't personally care all that much about the world model angle - Othello-GPT clearly does form a world model, it's very clear evidence that this is possible. Whether it happens in practice is a whole other question, but it clearly does happen a bit.

They are still statistical next token predictors, it's just the statistics are so complicated it essentially becomes a world model. The divide between these concepts is artificial.

I think this undersells it. World models are fundamentally different from surface level statistics, I would argue - a world model is an actual algorithm, with causal links and moving parts. Analogous to how an induction head is a real algorithm (given a token A, search the context for previous occurrences of A, and predict that the token which came next then will come next now), while something that memorises a ton of bigrams such that it can predict B after A is not.

Replies from: martin-kristiansen-1↑ comment by ws27a (martin-kristiansen-1) · 2023-04-01T08:45:44.833Z · LW(p) · GW(p)

I think if we imagine an n-gram model where n approaches infinity and the size of the corpus we train on approaches infinity, such a model is capable of going beyond even GPT. Of course it's unrealistic, but my point simply is that surface level statistics in principle is enough to imitate intelligence the way ChatGPT does.

Of course, literally storing probabilities of n-grams is a super poorly compressed way of doing things, and ChatGPT clearly finds more efficient solutions as it moves through the loss landscape trying to minimize next token prediction error. Some of those solutions are going to resemble world models in that features seem to be disentangled from one another in ways that seem meaningful to us humans or seem to correlate with how we view the world spatially or otherwise.

But I would argue that that has likely been happening since we used multilayer perceptrons for next word prediction in the 80s or 90s. I don't think it's so obvious exactly when something is a world model and when it is not. Any neural network is an algorithm in the sense that the state of node A determines the state of node B (setting aside the randomness of dropout layers).

Any neural network is essentially a very complex decision tree. The divide that people are imagining between rule-based algorithmic following of a pattern and neural networks is completely artificial. The only difference is how we train the systems to find whatever algorithms they find.

To me, it would be interesting if ChatGPT developed an internal algorithm for playing chess (for example), such that it could apply that algorithm consistently no matter the sequence of moves being played. However, as we know, it does not do this. What might happen is that ChatGPT develops something akin to spatial awareness of the chess board that can perhaps be applied to a very limited subset of move orders in the game.

For example, it's possible that it will understand that if e3 is passive and e4 is more ambitious, then pushing the pawn further to e5 is even more ambitious. It's possible that it learns that the center of the board is important and that it uses some kind of spatial evaluation that relates to concepts like that. But we also see that its internal chess model breaks down completely when we are outside of common sequences of play. If you play a completely novel game, sooner or later, it will hallucinate an illegal move.

No iteration of GPT will ever stop doing that, it will just take longer and longer before it comes up with an illegal move. An actual chess engine can continue suggesting moves forever. For me, this points to a fundamental flaw in how GPT-like systems work and basically explains why they are not going to lead towards AGI. Optimizing merely for next word prediction cannot and will never incentivize learning robust internal algorithms for games like Chess or any other games. It will just learn algorithms that sometimes work for some cases.

I think the research community needs to start pondering how we can formulate architectures that incentivise building robust internal algorithms and world models, not just as a "lucky" side effect of gradient descent coupled with a simplistic training objective.

↑ comment by Neel Nanda (neel-nanda-1) · 2023-04-01T09:02:30.261Z · LW(p) · GW(p)

I think if we imagine an n-gram model where n approaches infinity and the size of the corpus we train on approaches infinity, such a model is capable of going beyond even GPT. Of course it's unrealistic, but my point simply is that surface level statistics in principle is enough to imitate intelligence the way ChatGPT does.

Sure, in a Chinese room style fashion, but IMO reasoning + internal models have significantly different generalisation properties, and also are what actually happen in practice in models rather than an enormous table of N-Grams. And I think "sufficient diversity of training data" seems a strong assumption, esp for much of what GPT-4 et al are used for.

More broadly, I think that world models are qualitatively different from N-Grams and there is a real distinction, even for a janky and crappy world model. The key difference is generalisation properties and the internal cognition - real algorithms are just very different from a massive lookup table! (unrelatedly, I think that GPT-4 just really does not care about learning chess, so of course it's bad at it! The loss benefit is tiny)

Replies from: martin-kristiansen-1↑ comment by ws27a (martin-kristiansen-1) · 2023-04-01T09:19:53.996Z · LW(p) · GW(p)

I am happy to consider a distinction between world models and n-gram models, I just still feel like there is a continuum of some sort if we look closely enough. n-gram models are sort of like networks with very few parameters. As we add more parameters to calculate the eventual probability in the softmax layer, at which point do the world models emerge? And when do we term them world models exactly? But I think we're on the same page with regards to the chess example. Your formulation of "GPT-4 does not care about learning chess" is spot on. And in my view that's the problem with GPT in general. All it really cares about is predicting words.

Replies from: mgm452↑ comment by mgm452 · 2023-04-01T18:51:02.347Z · LW(p) · GW(p)

Agree with ws27a that it's hard to pick a certain point in the evolution of models and state they now have a world model. But I think the focus on world models is missing the point somewhat. It makes much more sense to define understanding as the ability to predict what happens next than to define it as compression which is just an artifact of data/model limitations. In that sense, validation error for prediction "is all you need." Relatedly, I don't get why we want to "incentivise building robust internal algorithms and world models" -- if we formulate a goal-based objective instead of prediction, a model is still going to find the best way of solving the problem given its size and will compromise on world model representation if that helps to get closer to the goal. Natural intelligence does very much the same...

Replies from: martin-kristiansen-1↑ comment by ws27a (martin-kristiansen-1) · 2023-04-02T07:29:43.218Z · LW(p) · GW(p)

I agree with you, but natural intelligence seems to be set up in a way so as to incentivise the construction of subroutines and algorithms that can help solve problems, at least among humans. What I mean is that we humans invented a calculator when we realised our brains are not very good at arithmetic, and now we have this device which is sort of like a technological extension of ourselves. A proper AGI implemented in computer hardware should absolutely be able to implement a calculator by its own determination; the fact that it doesn't speaks to the ill-defined optimization criterion. If it was not optimized to predict the next word but instead towards some more global objective, it's possible it would start to do these things, including the formulation of theories and suggestions towards making the world a better place. Not as some mere summary of what humans have written about, but bottom-up from what it can gather itself. Now, how we train such systems is completely unknown right now, and not many people are even looking in that direction. Many people seem to still think that scaling up GPT-like systems or tweaking RLHF will get us there, but I don't see how it will.

Replies from: neel-nanda-1↑ comment by Neel Nanda (neel-nanda-1) · 2023-04-02T10:15:59.397Z · LW(p) · GW(p)

Idk, I feel like GPT4 is capable of tool use, and also capable of writing enough code to make its own tools.

Replies from: martin-kristiansen-1↑ comment by ws27a (martin-kristiansen-1) · 2023-04-02T12:08:20.491Z · LW(p) · GW(p)

I agree that it's capable of doing that, but it just doesn't do it. If you ask it to multiply a large number, it confidently gives you some incorrect answer a lot of the time instead of using its incredible coding skills to just calculate the answer. If it was trained via reinforcement learning to maximize a more global and sophisticated goal than merely predicting the next word correctly or avoiding linguistic outputs that some humans have labelled as good or bad, it's very possible it would go ahead and invent these tools and start using them, simply because it's the path of least resistance towards its global goal. I think the real question is what that global goal is supposed to be, and maybe we even have to abandon the notion of training based on reward signals altogether. This is where we get into very murky and unexplored territory, but it's ultimately where the research community has to start looking. Just to conclude on my own position: I absolutely believe that GPT-like systems can be one component of a fully fledged AGI, but there are other crucial parts missing currently, that we do not understand in the slightest.