AI scares and changing public beliefs

post by Seth Herd · 2023-04-06T18:51:12.831Z · 21 comments


The opportunity

I, for one, am looking forward to the next public AI scares.

There's a curious upside to the reckless deployment of powerful, complex systems: they scare people. This has the potential to shift the landscape of the public debate, and the AGI safety community should be prepared for the next time it happens. I'm not saying that we should become fear-mongers, but that we need to engage wisely when we're given those opportunities. We could easily fumble the ball badly, and we must not.

We seem to be in an interesting wild-west era of AI deployment. I hope we leave this era before it's too late. Before we do, I expect to see more scary behavior from AI systems. The early behavior of Bing Chat, documented in "Bing Chat is blatantly, aggressively misaligned", caused a media furor, and it seemed to lead some AI x-risk skeptics to take those dangers more seriously. I'd rather not concern myself with public opinion, and I've hoped that we could just leave this to the relative experts (the machine learning and safety communities), but it's looking more and more like the public is going to want to weigh in. I'm hopeful that those changes in expert opinion can continue, and that they'll trickle down into public opinion with enough fidelity to help the situation[1].

I'll mention two particular cases. Gary Marcus said he's shifting his views on AI dangers in a recent podcast (paywalled) with Sam Harris and Stuart Russell. He maintained his skepticism that LLMs constitute real intelligence, but said he'd become more concerned about the dangers, particularly because OpenAI and Microsoft deployed rapidly, pressuring Google to respond with rushed deployments of its own. I think he and similar skeptics will shift further as LangChain, AutoGPT, and other automated chain-of-thought adaptations add goals and executive function to LLMs. Another previous skeptic is Russ Roberts of EconTalk, who reaches a very different audience: older, less tech-savvy, and more conservative. He hosted Erik Hoel on a recent episode (not paywalled). Hoel does a very nice job of explaining the risks in a sensible, gentle way, though he focuses on x-risk rather than falling back on lesser near-term risks. Roberts appears to be pretty much won over, despite having been highly skeptical in past interviews on the topic. I haven't attempted anything like a systematic review of public responses, but I've noticed not just increased interest but increased credulity among skeptics.

I think we're going to see a shift in public opinion about AI development, powered in part by new scares. Those scares also create opportunities for more people in the AGI safety community to engage with the public, and we should think about those public presentations before the next scare. The AI safety community is full of highly intelligent, logical people, but on average, public relations is not our strength. It is time for those of us who want to engage the public to get better at it.

The challenge

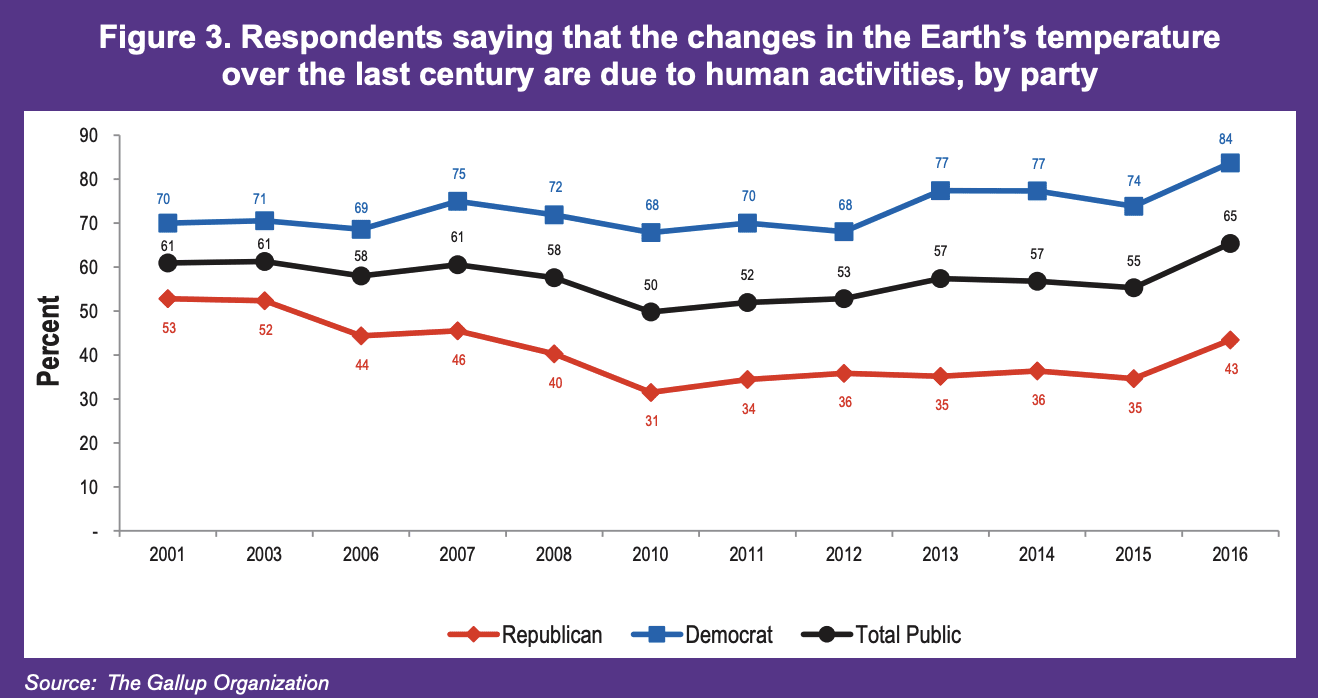

We should work out our approach now, because we need to get this right. Presenting detailed arguments forcefully is not the way to shift public opinion to your cause. The course of the climate debate is one important object lesson. As the evidence and expert opinion converged, half of the public actually became substantially more skeptical of human-caused climate change.

My main argument is this: we must not let the same polarization happen with AGI x-risk. And where it's already happening, among intellectuals, we need to reverse it. This is a much larger topic than I'm prepared to cover comprehensively; my point here is that it's something we should learn, debate, and practice.

The reasons for this polarizing shift are unclear. It's probably not the backfire effect, which appears to occur only in some situations, and perhaps mainly among the cognitively inclined, who internally generate new counterarguments. That's good news: it means presenting data and arguments can work.

I've done academic research on cognitive biases, so I feel like I have a pretty good idea of what went wrong in the climate debate, as probably do many fellow rationalists. I'd say this paradoxical effect results from motivated reasoning and confirmation bias, combined with social rewards. It is socially advantageous to share the important beliefs of those around us. Motivated reasoning subtly pushes our thinking in directions likely to earn that sort of social reward. Confirming arguments and evidence come to mind more easily and satisfy that subtle drive to believe what will benefit us, while ugh fields drive us away from disconfirming evidence and arguments.

Approaches to convincing the public

It's probably useful to have decent theories of the problem, but I don't have a clear idea of how to be persuasive in the public sphere. I've recently looked a little at practical theories of changing beliefs in real-world settings, but only a little, because learning to be persuasive has always seemed dishonest to me. I now think that's largely an inappropriate emotional hangup, because the advice that rings true has nothing to do with lying or even being manipulative. It's basically about having your audience like and trust you, and not being an asshole. It's about showing respect and empathy for the person you're talking to, not pressuring them into a quick decision, and letting them go off to think it through themselves. These techniques probably don't work if the truth isn't on your side, but that seems fine.

I wanted to get these vague, tentative conclusions out there even without good ideas about how to be persuasive. I want to do more research on that, sticking to methods that preserve honesty, and I hope more folks in this community will share their research and thoughts. We may not want to be in the business of public relations, but at least some of us sort of need to be.

I do have ideas about how to fuck up persuading people and turn them against your beliefs. Some I've acquired the hard way, some through research. Accidentally implying you think your audience are idiots is one easy way to do it. And that's tough to avoid when you're talking about something you've thought about a thousand times more than the people you're addressing. For one thing, they are idiots; we all are. Rationalism is an ideal, not an achievable goal, even for those of us who aspire to it. In addition, relative to you, your audience are particularly idiotic in the domain of AI safety. But people intuitively pick up on a lack of respect, and they're not likely to work out where it's coming from.

Overstating your certainty on various points is a great way both to imply that your audience is an idiot and to give them an easy way to rationalize that you must really be the idiot: nobody could really be that certain. "Communicating effectively under Knightian norms" helps clarify how different ways of communicating probabilistic guesses can make one person's rational best guess sound like sheer hubris to another person. Eliezer may be rational in saying we're doomed with 99% certainty under certain assumptions, but his audience isn't going to listen and think carefully while he explains all of those many assumptions. They'll just move on with their busy day and assume he's crazy.

Another factor is that not everyone makes a hobby of understanding complex new ideas quickly, and very few people belong to a social group where changing one's mind in the face of arguments and evidence is highly valued. Rationalists do have a bit of an edge in this particular area, but we're hardly immune to cognitive biases. Thinking hard about how motivated reasoning and confirmation bias play out in various areas of your own mind is one way to develop empathy for someone who's being asked to re-evaluate an important belief on the spot. (This deserves a post titled "I am an idiot". We'll see if I have the gumption to write that post.)

I think we have a big advantage here in that the public's existing beliefs about AI x-risk often aren't that strong and don't fall along existing tribal lines. The AGI safety community seems to have done a great job so far of not making enemies of the ML community, for instance. I think we need to carefully widen this particular circle, and use the time before the public's beliefs solidify to persuade them, probably through honesty, humility, and empathy.

Approaches to convincing experts

I'm repeatedly amazed by how easy it is to convince laypeople that self-aware, agentic AI presents an x-risk we should worry about, and equally amazed at how difficult it is to convince experts (those in ML or cognitive science). And the latter are the people we most need to convince, because the public (rationally) takes its cues from people it trusts who know more than it does.

In discussions, I often hear experts deploy absolutely ridiculous arguments, or worse yet, no real argument beyond "that's ridiculous." I suspect they're motivated to defend their own field, or to protect a comfortable worldview. And I get frustrated, because that attitude might well get us all killed. This has often led me to get more forceful, and to talk as though I think they're an idiot. This has predictably terrible results.

I recently had a discussion with a close friend who works in ML and has worked at DeepMind. I'd mostly avoided the topic with him until I started working directly in AGI safety, because I knew it would be a dangerous friction point, and I highly value his friendship; he's intelligent, creative, generous, and kind. The first real exchange went almost as badly as I'd expected. Then I steeled myself to practice the virtues I mentioned above: listening, staying calm and empathetic, and presenting arguments gently instead of forcefully. We managed a much better exchange that established a central crux, which I suspect is common to ML-versus-rationalist disagreements generally: timelines.

His timeline was around 30 years, or maybe never, while mine is much shorter. His position was coherent: AI x-risk is a potential problem, but it's not worth talking about because we're so far from needing to solve it, and the solutions will become clearer as we get closer. Those going on about x-risk seem like a cult that's talked itself into something ridiculous and at odds with the actual experts, probably through the same social and cognitive biases that caused the climate change polarization among conservatives. Right now we should be focusing on near-term AI risks, and the many other ills and dangers of the modern world.

I think this is a common crux of disagreement, but I'm sure it's not the only one. AI scares are reducing that disagreement. We can take advantage of that situation, but only if we get better at persuasion. I intend to do more research and practice on this skill. I hope some of you will join me. Our efforts to date are not encouraging, and look like they may produce polarization rather than steadily shift opinions. But we can do better.

[1] How public opinion will affect outcomes is a complex discussion, and I'm not ready to offer even a guess, except for the background assumption I use here: the public is going to believe something about AI safety, and it's likely better if they believe something like the truth.

21 comments


comment by Steven Byrnes (steve2152) · 2023-04-07T03:20:45.407Z

RE climate science & public outreach, one funny experience I had was reading Steve McIntyre’s blog "Climate Audit" regularly for years. The guy took an interest in paleoclimate reconstructions, and found that the academic literature (and corresponding IPCC report chapter) was full of statistically illiterate garbage. This shouldn’t be too surprising; the same could be said of a great many academic fields. But it took on a sharp edge in this field because some people got very defensive, and seemed to believe that if they conceded any mistakes, or publicly criticized anyone else within the field, then it would immediately get reported on right-wing news media and provide “fodder for skeptics” etc. (And that belief was totally true!)

I’m imagining a hypothetical scenario where, say, climate scientists retracted a chapter of the IPCC report due to unfixable methodological issues. On the one hand, I’d like to believe that knowledgeable people would increase their trust in the IPCC process as a result, and then they would tell their friends etc. On the other hand, it would totally be portrayed as a massive scandal, and ideologues would keep bringing it up for years as a reason not to believe climate science. I dunno, but I vote for honesty and integrity etc., for various reasons, even if it’s bad for opinion polls, which it might or might not be anyway. I’m not too worried about AI alignment people in this regard.

Anyway, in terms of persuasion, I’m all for extreme patience, not ridiculing & vilifying & infantilizing those you hope to win over, not underestimating inferential distances, having your own intellectual house in order, plus the various things you mention in the post :)

↑ comment by dr_s · 2023-04-07T07:06:19.406Z

seemed to have the belief that if they conceded any mistakes, or publicly criticized anyone else within the field, then it would immediately get reported on right-wing news media and provide “fodder for skeptics” etc. (And that belief was totally true!)

Yup, same problem as with vaccines. Everyone tends to downplay side effects when they happen for fear they'll stoke the anti-vaxx movement. But ultimately that sort of "lying for a good cause" thing still gets you screwed over, because people aren't so stupid as to not notice, and if they think you're biased they'll believe you even less than they should.

I get the sense that this is also part of what set up, for example, the current backlash about transition therapy, puberty blockers etc. Even though the science may all be solid, the political heft attached to it is such that it's hard to imagine that a study pushing in the other direction wouldn't risk some self-censorship, or some pushback from journals. All of this was done with the intent of normalizing the treatment as much as possible, since its adoption rates were likely suboptimal anyway, of course; but it resulted in reduced trust, and so now there's a large swath of people who feel motivated not to believe any of it. It's a pattern that comes up again and again (it happened with multiple things involving COVID too).

comment by romeostevensit · 2023-04-06T23:02:33.573Z

If we only get about five words what do we want them to be?

↑ comment by Seth Herd · 2023-04-06T23:19:23.930Z

Depending on the situation, I think you'll get a lot more than five words. If you don't, it may be best not to engage. My reading is that Twitter and similar short forms have made polarization much worse. By simplifying the topic that far, you tend to attack straw men if you're addressing those who disagree, and your arguments are liable to be so incomplete that they'll become straw men tempting the other side to attack.

That being said, I think it's useful to have compact arguments ready to hand. I wish I had the right set; it's something we should keep working on. But it's important that those arguments address the audience's reasons for skepticism in an empathetic way.

I really liked Eliezer's tweet:

10 obvious reasons that the danger from AGI is way more serious than nuclear weapons:

1) Nuclear weapons are not smarter than humanity.

2) Nuclear weapons are not self-replicating.

3) Nuclear weapons are not self-improving.

... the rest are good too...

Until I noticed the "obvious"! He's slipped in a subtle accusation that everyone who disagrees is a moron, into an otherwise pretty brilliant argument.

I think he's not realizing that, unlike him, other people don't respond to an insinuation that they're a moron by re-engaging and doubling down on being rational. And I doubt even he does that every time.

We can't insist that the whole world become rationalists. That's not going to happen. And demanding things of strangers is obnoxious.

↑ comment by dr_s · 2023-04-07T07:11:18.257Z

Until I noticed the "obvious"! He's slipped in a subtle accusation that everyone who disagrees is a moron, into an otherwise pretty brilliant argument.

I mean, did he say this explicitly, even if in a less direct way? I don't remember it. And honestly if just saying "here are obvious reasons why a certain argument is wrong" means implying everyone who agrees with the argument is a moron... I'm not sure what else can be done. He's not making it particularly personal, but if you're at the stage where any refutation of the argument will only make you more defensive, then you're already really set in it.

↑ comment by Seth Herd · 2023-04-07T17:03:21.768Z

Including that "obvious" is indicative of his attitude in general. He has a right to be frustrated, but it shows and it's pissing people off. My friends outside the rationalist community tend to find his attitude insufferable. This is why I'm saying we need either people or skills in this arena.

comment by TinkerBird · 2023-04-06T23:10:22.737Z

I, for one, am looking forward to the next public AI scares.

Same. I'm about to start writing a lot of emails to a lot of influential public figures as part of a one-man letter-writing campaign, in the hope that at least one of them takes notice and says something publicly about the problem of AI.

↑ comment by irving (judith) · 2023-04-08T07:50:09.368Z

Count me in!

comment by otto.barten (otto-barten) · 2023-04-28T07:02:20.533Z

I agree that raising awareness about AI xrisk is really important. Many people have already done this (Nick Bostrom, Elon Musk, Stephen Hawking, Sam Harris, Tristan Harris, Stuart Russell, Gary Marcus, Roman Yampolskiy (I coauthored one piece with him in Time), and Eliezer Yudkowsky as well).

I think a sensible place to start is to measure how well they did using surveys. That's what we've done here: https://www.lesswrong.com/posts/werC3aynFD92PEAh9/paper-summary-the-effectiveness-of-ai-existential-risk

More comms research from us is coming up, and I know a few others are doing the same now.

↑ comment by Seth Herd · 2023-04-28T22:45:52.433Z

I took a look at your paper, and I think it's great! My PhD was in cognitive psychology where they're pretty focused on study design, so even though I haven't done a bunch of empirical work, I do have ideas about it. No real critique of your methodology, but I did have some vague ideas about expanding it to address the potential for polarization.

comment by baturinsky · 2023-04-10T10:18:21.643Z

Probably the difference between laypeople and experts is not their understanding of the danger of strong AI, but their estimate of how far away we are from it.

↑ comment by Seth Herd · 2023-04-10T12:57:34.357Z

I think this is probably largely correct, judging from my interactions with laypeople. They have no real guess on timelines, so short ones are totally credible and part of their wide implicit estimate. This introduces a lot of uncertainty. But this somehow also results in a higher p(doom) than experts give. Recent opinion polls seem to back up this latter point.

comment by Igor Ivanov (igor-ivanov) · 2023-04-07T16:48:06.715Z

I believe we need a fire alarm.

People were scared of nuclear weapons from 1945 on, but no one restricted the arms race until the Cuban Missile Crisis in 1962.

We know for sure that the crisis really scared both the Soviet and US high commands, and the first document to restrict nukes was signed the next year, 1963.

What kind of fire alarm might it be? That is The Question.

↑ comment by Seth Herd · 2023-04-07T17:15:47.983Z

I think we'll get some more scares from systems like AutoGPT. Watching an AI think to itself, in English, is going to be powerful. And when someone hooks one up to an untuned model and asks it to think about whether and how to take over the world, I think we'll get another media event. For good reasons.

I think actually making such systems, while the core LLM is still too dumb to actually succeed at taking over the world, might be important.

↑ comment by Igor Ivanov (igor-ivanov) · 2023-04-07T17:34:17.494Z

I totally agree that it might be good to have such a fire alarm as soon as possible, and looking at how fast people make GPT-4 more and more powerful makes me think that this is only a matter of time.

comment by Igor Ivanov (igor-ivanov) · 2023-04-07T16:23:39.026Z

I think an important way to convince people of the importance of AI safety is to find proper "gateway drug" ideas: issues that already bother that person, so they are likely to accept the idea and, through it, get interested in AI safety.

For example, if a person is concerned about the rights of minorities, you might tell them about how we don't know how LLMs work, and this causes bias and discrimination, or how it will increase inequality.

If a person cares about privacy and is afraid of government surveillance, then you might tell them about how AI might make all these problems much worse.

comment by RedFishBlueFish (RedStateBlueState) · 2023-04-06T23:40:38.914Z

I'm going to quote this from an EA Forum post I just made on why simple repeated exposure to AI Safety (through e.g. media coverage) will probably do a lot to persuade people:

[T]he more people hear about AI Safety, the more seriously people will take the issue. This seems to be true even if the coverage is purporting to debunk the issue (which as I will discuss later I think will be fairly rare) - a phenomenon called the illusory truth effect. I also think this effect will be especially strong for AI Safety. Right now, in EA-adjacent circles, the argument over AI Safety is mostly a war of vibes. There is very little object-level discussion - it's all just "these people are relying way too much on their obsession with tech/rationality" or "oh my god these really smart people think the world could end within my lifetime". The way we (AI Safety) win this war of vibes, which will hopefully bleed out beyond the EA-adjacent sphere, is just by giving people more exposure to our side.

↑ comment by Seth Herd · 2023-04-07T02:20:11.954Z

This will definitely help. But any kind of dirty tricks could easily deepen the polarization with those opposed. On thinking about it more, I think this polarization is already in play. Interested intellectuals have already seen years of forceful AI doom arguments, and they dislike the whole concept on an emotional level. Similarly, those dismissals drive AGI x-risk believers (including myself) kind of nuts, and we tend to respond more forcefully, and the cycle continues.

The problem with this is that, if the public perceives AGI as dangerous, but most of those actually working in the field do not, policy will tend to follow the experts and ignore the populace. They'll put in surface-level rules that sound like they'll do something to monitor AGI work, without actually doing much. At least that's my take on much of public policy that responds to public outcry.

comment by dr_s · 2023-04-06T22:49:33.250Z

Good post! While I don't like ascribing things to conspiracies when they can be explained by simple psychology, with climate change at least we pretty much know that there have also been deliberate efforts by fossil fuel companies to muddy the waters or hide important information. Evidence of this stuff has surfaced, we know of think-tanks that have been blatantly funded, and so on. Propaganda is unfortunately also a thing. I don't know if we are to expect it on the same scale for AI too; it's a less entrenched industry, though the main operators (Google, Microsoft, Meta) are all very big, rich and powerful. They push AI, but it's not their only source of revenue and relevance. We'll see, but it's something to be wary of.

I think a big divide is also a psychological one. It's something we've seen with COVID too. Some people are just more oriented to be safety minded, to weigh future risks at a lower discount rate. I wear a mask to this day in closed spaces (especially if crowded and poorly ventilated) because I've heard reports of what Long COVID is like and even at low odds, I don't want any of that. I also am pretty willing to suffer small discomforts as I tend to just tune them out, and don't much feel peer pressure. A different mix of these traits will produce different results. To me 30 years doesn't seem nearly a long enough timeline to justify not worrying about something like X-risk. We've spent more energy trying to figure out how to bury nuclear waste for thousands of years.

Maybe one thing that's easier to convince experts of is that even if aligned, AGI would be a very hot potato politically and economically, and generally hard to control. But many people seem to just go with "well, we need to make the best of it because it's unavoidable". Perhaps one of the biggest problems we face is this sort of tech fatalism, this notion that we can't possibly even try to steer our development. I don't think it's entirely true - plenty of tech developments turned on small moments and decisions, if not on whether they would happen, in how they turned out (as for happening at all, I think the most glaring example would be Archimedes of Syracuse almost discovering calculus, but then being killed in his city's siege, and calculus was discovered only some 1800 years later). We've just never really done it on purpose. A lot of heuristics also go "trying to slow down technology sounds oppressive/reactionary" and sure, it kinda does, but everyone who pursues AGI also admits that it'd be a technology like almost nothing else before.

↑ comment by Seth Herd · 2023-04-06T23:05:56.785Z

Your points on deliberate misinformation are good ones. Whether it's deliberate or not is muddied by polarized beliefs. If you work as an exec for a big company deploying dangerous AI, you're motivated to believe it's safe. If you can manage to keep believing that, you don't even see it as misinformation when you launch an ad campaign to convince the public that it's safe.

Your recent post "AGI deployment as an act of aggression" convinced me that it will indeed be a political hot potato, and helped inspire me to write this post. My current thinking is that it probably won't be viewed as an act of aggression sufficient to prompt anything like military strikes, but it probably should be. One related thought is that we might not even know when we've deployed AGI with the power to do something that shifts the balance of power dramatically, such as easily hacking most government and communication networks. And if someone does know their new AI has that potential, they'll launch it secretly.

I agree that technology research can be controlled. We've done it, to some degree, with genetic and viral research. I'm not sure if deployment can realistically be controlled once the research is done.

comment by Rudi C (rudi-c) · 2023-04-09T10:02:21.738Z

I have two central cruxes/problems with the current safety wave:

- We must first focus on increasing notkilleveryone-ism research, and only then talk about slowing down capability. Slowing down progress is evil and undemocratic. Slowing down should be the last resort, while it is currently the first (and possibly the only) intervention that is seriously pursued.

Particular example: Yud ridicules researchers’ ability to contribute from other near fields, while spreading FUD and asking for datacenter strikes.

- LW is all too happy to support centralization of power, business monopolies, rent-seeking, censorship, and, in short, the interests of the elites and the status quo.