SIAI - An Examination

post by BrandonReinhart · 2011-05-02T07:08:30.768Z · 208 comments


12/13/2011 - A 2011 update with data from the 2010 fiscal year is in progress. Should be done by the end of the week or sooner.

Disclaimer

- I am not affiliated with the Singularity Institute for Artificial Intelligence.

- I have not donated to the SIAI prior to writing this.

- I made my pledge to send someone to rationality mini-boot camp prior to writing this document.

Notes

- Images are now hosted on LessWrong.com.

- The 2010 Form 990 data will be available later this month.

- It is not my intent to propagate misinformation. Errors will be corrected as soon as they are identified.

Introduction

Acting on gwern's suggestion in his Girl Scout Cookie analysis, I decided to look at SIAI funding. After reading about the Visiting Fellows Program and more recently the Rationality Boot Camp, I decided that the SIAI might be something I would want to support. I am concerned with existential risk and grapple with the utility implications. I feel that I should do more.

I wrote on the mini-boot camp page a pledge that I would donate enough to send someone to rationality mini-boot camp. This seemed to me a small cost for the potential benefit. The SIAI might get better at building rationalists. It might build a rationalist who goes on to solve a problem. Should I donate more? I wasn’t sure. I read gwern’s article and realized that I could easily get more information to clarify my thinking.

So I downloaded the SIAI’s Form 990 annual IRS filings and started to write down notes in a spreadsheet. As I gathered data and compared it to my expectations and my goals, my beliefs changed. I now believe that donating to the SIAI is valuable. I cannot hide this belief in my writing. I simply have it.

My goal is not to convince you to donate to the SIAI. My goal is to provide you with information necessary for you to determine for yourself whether or not you should donate to the SIAI. Or, if not that, to provide you with some direction so that you can continue your investigation.

The SIAI's Form 990s are available at GuideStar and Foundation Center. You must register to access the files at GuideStar.

- 2002 (Form 990-EZ)

- 2003 (Form 990-EZ)

- 2004 (Form 990-EZ)

- 2005 (Form 990)

- 2006 (Form 990)

- 2007 (Form 990)

- 2008 (Form 990-EZ)

- 2009 (Form 990)

SIAI Financial Overview

The Singularity Institute for Artificial Intelligence (SIAI) is a public organization working to reduce existential risk from future technologies, in particular artificial intelligence. "The Singularity Institute brings rational analysis and rational strategy to the challenges facing humanity as we develop cognitive technologies that will exceed the current upper bounds on human intelligence." The SIAI also founded Less Wrong.

The graphs above offer an accurate summary of the SIAI's financial state since 2002. Sometimes the end-of-year balances listed in the Form 990 do not match what you would get by doing the math by hand. These are noted below as discrepancies between the filed and expected year-end balances, or between the filed and expected year-start balances.

- Filing Error 1 - There appears to be a minor typo of $4.65 in the end-of-year balance in the 2004 document. Part I, Line 18 appears to have been summed incorrectly: $32,445.76 is listed, but the expected result is $32,450.41. The Part II balance sheet calculations agree with the error, so its source is unclear. The reported start-of-year balance in 2005 reflects the expected value, so this was probably just a typo in 2004.

- Filing Error 2 - The 2006 document reports a year-start balance of $95,105.00 when the expected year-start balance is $165,284.00, a discrepancy of $70,179.00. This amount is close to the $72,000.00 of estimated Program Service Accomplishments in Part III, Line F of the 2005 Form 990, so it looks like the service expenses were not fully included in Part II. The money is not missing: later forms show the expected values from then on.

- Theft - The organization reported $118,803.00 in theft in 2009 resulting in a year end asset balance lower than expected. The SIAI is currently pursuing legal restitution.
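The discrepancy checks in the list above come down to two comparisons: a filing's own year-end balance against start + revenue − expenses, and a filed year-start balance against the prior year's expected year-end balance. The following is a minimal Python sketch of those comparisons; the class, function, and field names are hypothetical, and only the two balances in the example come from the text (Filing Error 2).

```python
from dataclasses import dataclass


@dataclass
class YearFiling:
    """Totals from one year's Form 990 (field names are illustrative, not IRS line labels)."""
    year: int
    start_balance: float
    revenue: float
    expenses: float
    filed_end_balance: float

    def expected_end_balance(self) -> float:
        # Doing the math by hand: start + revenue - expenses, rounded to cents.
        return round(self.start_balance + self.revenue - self.expenses, 2)


def gap(expected: float, filed: float) -> float:
    """Expected minus filed, rounded to cents; 0.0 means the figures agree."""
    return round(expected - filed, 2)


def end_of_year_gap(f: YearFiling) -> float:
    """Filed vs. expected year-end balance within one filing (Filing Error 1 style)."""
    return gap(f.expected_end_balance(), f.filed_end_balance)


def start_of_year_gap(prev: YearFiling, cur: YearFiling) -> float:
    """Filed year-start balance vs. the prior year's expected year-end balance (Filing Error 2 style)."""
    return gap(prev.expected_end_balance(), cur.start_balance)


# Filing Error 2, using the two balances quoted above.
print(gap(165_284.00, 95_105.00))  # 70179.0
```

Rounding to cents in one place keeps floating-point noise from showing up as a spurious fractional-cent "discrepancy".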

The SIAI has generated a revenue surplus every year except 2008. The 2008 deficit appears to reflect spending down the excess surplus from 2007. Asset growth indicates that the SIAI uses the funds it has available well, without overspending. The organization is expanding its menu of services, but not so fast that it risks going broke.

Nonetheless, the current asset balance is insufficient to sustain a year of operation at the existing rate of expenditure. A significant loss of donation revenue would force a reduction in services. Such a loss may be unlikely, but a reasonable goal for the organization would be to build up a year's reserves.
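The reserve goal can be framed as a runway calculation: months of operation equal the asset balance divided by monthly expenses (annual expenses divided by 12). A minimal sketch with hypothetical function names; no SIAI figures are assumed, so you would plug in the year-end asset balance and total expenses from a given Form 990.

```python
def months_of_runway(asset_balance: float, annual_expenses: float) -> float:
    """How many months current assets would cover at the current spending rate."""
    if annual_expenses <= 0:
        raise ValueError("annual_expenses must be positive")
    monthly_burn = annual_expenses / 12
    return asset_balance / monthly_burn


def reserve_shortfall(asset_balance: float, annual_expenses: float,
                      target_months: int = 12) -> float:
    """Extra savings needed to reach the target reserve; 0.0 if already met."""
    target = annual_expenses * target_months / 12
    return max(0.0, target - asset_balance)
```

A runway under 12 months is the situation described above, and `reserve_shortfall` with the default target gives the amount needed to reach a year's reserves.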

Revenue

Revenue is composed of public support, program service revenue (events and conferences held, etc.), and investment interest. The "Other" category tends to include Amazon.com affiliate income and similar items.

Income from public support has grown steadily, with a notable step up starting in 2006. This increase is the result of new contributions from big donors. For example, public support in 2007 is largely composed of significant contributions from Peter Thiel ($125k), Brian Cartmell ($75k), and Robert F. Zahra Jr ($123k), for $323k total in large individual contributions (breakdown below).

In 2007 the SIAI started receiving income from program services. Currently all "Program Service" revenue is from operation of the Singularity Summit. In 2010 the summit generated surplus revenue for the SIAI. This is a significant achievement, as it means the organization has created a sustainable service that could fund further services moving forward.

A specific analysis of the summit is below.

Expenses

Expenses are composed of grants paid to winners, benefits paid to members, officer compensation, contracts, travel, program services, and an "Other" category.

The contracts column in the chart below includes legal and accounting fees. The other column includes administrative fees and other operational costs. I didn’t see a reason to break the columns down further. In many cases the Form 990s provide more detailed itemization. If you care about how much officers spent on gas or when they bought new computers, you might find the answers in the source.

I don’t have data for 2000 or 2001, but left the rows in the spreadsheet in case it can be filled in later.

Program expenses have grown over the years, but not unreasonably. Meanwhile, officer compensation has declined steadily for several years. The grants in 2002, 2003, and 2004 were paid to Eliezer Yudkowsky for work relevant to artificial intelligence.

The program expenses category includes operating the Singularity Summit, Visiting Fellows Program, etc. Some of the cost of these programs is also included in the "Other" category. For example, the 2007 Singularity Summit is reported as costing $101,577.00, but this total is spread across multiple sections.

It appears that 2009 was a more productive year than 2008 and also less expensive. 2009 saw a larger Singularity Summit than in 2008 and also the creation of the Visiting Fellows Program.

Big Donors

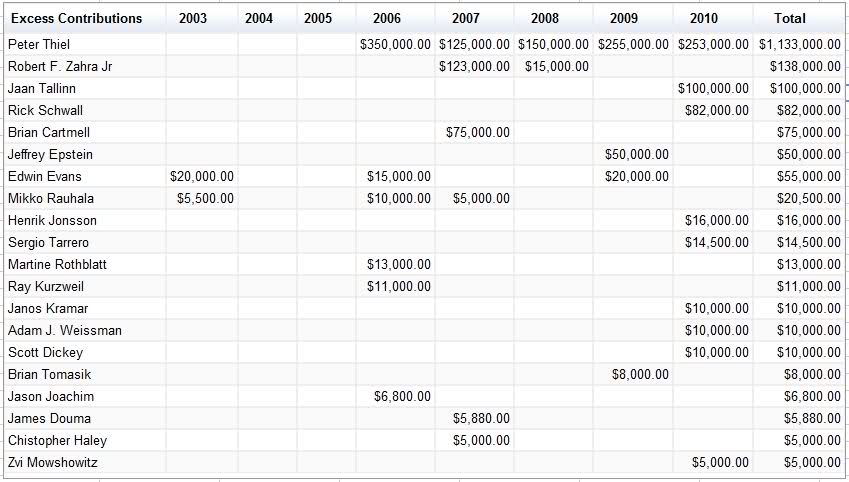

This is not an exhaustive list of contributions. The SIAI’s 2009 filing details major support donations for several previous years. Contributions in the 2010 column are derived from http://intelligence.org/donors. Known contributions of less than $5,000 are excluded for the sake of brevity. The 2006 donation from Peter Thiel is sourced from a discussion with the SIAI.

Peter Thiel and several other big donors compose the bulk of the organization's revenue. It would be good to see a broader base of donations moving forward. Note, however, that the base of donations has been improving. I don't have the 2010 Form 990 yet, but it appears to be the best year yet in terms of both the quantity of donations and the number of individual donors (based on conversation with SIAI members).
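One way to track whether the donor base is broadening is to measure concentration: the share of total public support coming from the largest donors, or a Herfindahl-Hirschman index (the sum of squared donor shares). A minimal sketch with hypothetical function names; the dictionary holds the three 2007 contributions named in the Revenue section, while total public support is not assumed and would come from the relevant Form 990.

```python
def top_n_share(amounts: list[float], total_support: float, n: int = 3) -> float:
    """Fraction of total public support contributed by the n largest donors."""
    top = sorted(amounts, reverse=True)[:n]
    return sum(top) / total_support


def herfindahl_index(amounts: list[float]) -> float:
    """Sum of squared donor shares: 1.0 for a single donor, near 0 for a broad base."""
    total = sum(amounts)
    return sum((a / total) ** 2 for a in amounts)


# The three large 2007 contributions named in the Revenue section.
big_2007 = {"Peter Thiel": 125_000, "Brian Cartmell": 75_000, "Robert F. Zahra Jr": 123_000}
print(sum(big_2007.values()))  # 323000
```

The index only sees the donors you feed it, so it is most meaningful when computed over all known contributions rather than only those above the $5,000 cutoff used in the table.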

Officer Compensation

From 2002 to 2005, Eliezer Yudkowsky received compensation in the form of grants from the SIAI for AI research. The Form 990s note that no public funds were used for Eliezer’s research grants, as he is also an officer. Starting in 2006, all compensation for key officers is reported as salary instead of grants.

Compensation spiked in 2006, the same year public support increased sharply. Since then, officer compensation has decreased steadily despite continued increases in public support. It appears that the SIAI has managed its resources carefully in recent years, putting more money into programs than into officer compensation.

Eliezer's base salary increased 20% in 2008. It seems reasonable to compare Eliezer's salary with that of professional software developers; by that measure, he could make a fair amount more working in private industry.

Mr. Yudkowsky clarifies: "The reason my salary shows as $95K in 2009 is that Paychex screwed up and paid my first month of salary for 2010 in the 2009 tax year. My actual salary was, I believe, constant or roughly so through 2008-2010." If so, we would expect the 2010 Form 990 to show a salary reduced by one month's pay.
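The payroll explanation can be checked with simple arithmetic. This sketch assumes, hypothetically, that the entire $95K reported for 2009 is base salary paid in equal monthly installments, so 2009 contained 13 paychecks (the 12 for 2009 plus the first 2010 paycheck paid early) and 2010 would contain 11. Raises or other compensation would change the 2010 figure, so treat the output as a rough expectation only.

```python
def implied_monthly_salary(reported_total: float, paychecks_in_year: int) -> float:
    """Monthly pay implied by a reported total, assuming equal monthly paychecks."""
    return reported_total / paychecks_in_year


# Assumption: all $95K reported for 2009 was base salary spread over 13 paychecks.
monthly = implied_monthly_salary(95_000, 13)
print(round(monthly * 12, 2))  # 87692.31: implied annual base salary
print(round(monthly * 11, 2))  # 80384.62: what 2010 would show with 11 paychecks
```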

Moving forward, the SIAI will have to grapple with the high cost of recruiting top tier programmers and academics to do real work. I believe this is an argument for the SIAI improving its asset sheet. More money in the bank means more of an ability to take advantage of recruitment opportunities if they present themselves.

Singularity Summit

Founded in 2006 by the SIAI in cooperation with Ray Kurzweil and Peter Thiel, the Singularity Summit focuses on a broad range of topics related to the Singularity and emerging technologies. (1)

The Singularity Summit was free until 2008 when the SIAI chose to begin charging registration fees and accepting sponsorships. (2)

Attendee counts are estimates drawn from SIAI Form 990 filings. 2010 is purported to be the largest conference so far. Beyond the core conference attendees, hundreds of thousands of online viewers are reached through recordings of the Summit sessions. (A)

The cost of running the summit has increased annually, but revenue from sponsorships and registration has kept pace. Although the conference has logistical and administrative costs, its own revenue largely covers them, so it has little net impact on the SIAI budget. This makes the conference a valuable blend of outreach and education. If the conference convinces someone to donate or in some way directly support work against existential risks, the benefits are effectively free (or at the very least come at no cost to other programs).

Is the Singularity Summit successful?

It’s difficult to evaluate the success of conferences. So many of the benefits are realized downstream of the actual event. Nonetheless, the attendee counts and widening exposure seem to bring immense value for the cost. Several factors contribute to a sense that the conference is a success:

- In 2010 the Summit became a positive revenue generating exercise in its own right. With careful stewardship, the Singularity Summit could grow to generate a reliable annual revenue for the SIAI.

- The ability to run an efficient conference is itself valuable. Should it choose to, the SIAI could run other types of conferences or special interest events in the future with a good expectation of success.

- The high visibility of the Summit plants seeds for future fundraising. Conference attendees likely benefit as much or more from networking as they do from the content of the sessions. Networking builds relationships between people able to coordinate to solve problems or fund solutions to problems.

- The Singularity Summit has generated ongoing public interest and media coverage. Notable articles can be found in Popular Science (3), Popular Mechanics (4), the Guardian (5), and TIME Magazine (6). Quality media coverage raises public awareness of Singularity-related topics. There is a strong argument that a person interested in raising awareness of futurist ideas or existential risk reaches a wide audience by supporting the Singularity Summit.

When discussing “future shock levels” -- gaps in exposure to and understanding of futurist concepts -- Eliezer Yudkowsky wrote, “In general, one shock level gets you enthusiasm, two gets you a strong reaction - wild enthusiasm or disbelief, three gets you frightened - not necessarily hostile, but frightened, and four can get you burned at the stake.” (7) Most futurists are familiar with this sentiment. Increased public exposure to unfamiliar concepts through the positive media coverage brought about by the Singularity Summit works to improve the legitimacy of those concepts and reduce future shock.

The result is that hard problems get easier to solve. Experts interested in helping, but afraid of social condemnation, will be more likely to do core research. The curious will be further motivated to break problems down. Vague far-mode thinking about future technologies will, for a few, shift into near-mode thinking about solutions. Public reaction to what would otherwise be shocking concepts will shift away from the extreme. Society becomes better prepared to accept the real work and real costs of battling existential risk.

SIAI Milestones

This is not a complete list of SIAI milestones, but covers quite a few of the materials and events that the SIAI has produced over the years.

2005

- Research Fellow (RF) Eliezer Yudkowsky publishes “A Technical Explanation of Technical Explanation”

- RF Eliezer Yudkowsky writes chapters for “Global Catastrophic Risks”

- AI and existential risk presentations at Stanford, Immortality Institute’s Life Extension Conference, and the Terasem Foundation.

2006

- Fundraising efforts expand significantly.

- SIAI hosts the first Singularity Summit at Stanford.

2007

- SIAI hosts the Singularity Summit in San Francisco.

- SIAI outreach blog is started.

- SIAI Interview Series is started.

- SIAI introductory video is developed and released.

2008

- SIAI hosts the Singularity Summit in San Jose.

- SIAI Interview Series is expanded.

- SIAI begins its summer intern program.

2009

Significant detail on 2009 achievements is available here. More publications are available here.

- RF Eliezer Yudkowsky completes the rationality sequences.

- Less Wrong is founded.

- SIAI hosts the Singularity Summit in New York.

- RF Anna Salamon speaks on technological forecasting at the Santa Fe Institute.

- SIAI establishes the Visiting Fellows Program. Graduate and undergraduate students in AI-related disciplines develop related talks and papers.

Papers and talks from SIAI fellows produced in 2009:

- “Changing the frame of AI futurism: From storytelling to heavy-tailed, high-dimensional probability distributions”, by Steve Rayhawk, Anna Salamon, Tom McCabe, Rolf Nelson, and Michael Anissimov. (Presented at the European Conference of Computing and Philosophy in July ‘09 (ECAP))

- “Arms Control and Intelligence Explosions”, by Carl Shulman (Also presented at ECAP)

- “Machine Ethics and Superintelligence”, by Carl Shulman and Henrik Jonsson (Presented at the Asia-Pacific Conference of Computing and Philosophy in October ‘09 (APCAP))

- “Which Consequentialism? Machine Ethics and Moral Divergence”, by Carl Shulman and Nick Tarleton (Also presented at APCAP);

- “Long-term AI forecasting: Building methodologies that work”, an invited presentation by Anna Salamon at the Santa Fe Institute conference on forecasting;

- “Shaping the Intelligence Explosion” and “How much it matters to know what matters: A back of the envelope calculation”, presentations by Anna Salamon at the Singularity Summit 2009 in October

- “Pathways to Beneficial Artificial General Intelligence: Virtual Pets, Robot Children, Artificial Bioscientists, and Beyond”, a presentation by SIAI Director of Research Ben Goertzel at Singularity Summit 2009;

- “Cognitive Biases and Giant Risks”, a presentation by SIAI Research Fellow Eliezer Yudkowsky at Singularity Summit 2009;

- “Convergence of Expected Utility for Universal Artificial Intelligence”, a paper by Peter de Blanc, an SIAI Visiting Fellow.

* Text for this list of papers reproduced from here.

A list of achievements, papers, and talks from 2010 is pending. See also the Singularity Summit content links above.

Further Editorial Thoughts...

Prior to doing this investigation I had some expectation that the SIAI was a money losing operation. I didn’t expect the Singularity Summit to be making money. I had an expectation that Eliezer probably made around $70k (programmer money discounted for being paid by a non-profit). I figured the SIAI had a broad donor base of small donations. I was off base on all counts.

I had some expectation that the SIAI was a money losing operation.

I had weak confidence in this belief, as I don’t know a lot about the finances of public organizations. The SIAI appears to be managing its cash reserves well. It would be good to see the SIAI build up some asset reserves so that it could operate comfortably in years where public support dips or so that it could take advantage of unexpected opportunities.

Overall, the allocation of funds strikes me as highly efficient.

I didn’t expect the Singularity Summit to be making money.

This was a surprising finding, although I had incorrectly based my expectation on my experience with game industry conferences. I don't know exactly how much the SIAI spends on food and fancy tablecloths at the Singularity Summit, but I don't think I care: it's growing and showing better results on the revenue chart each year. If you attend the conference and contribute to the event, you add pure value. As discussed above, the benefits of the conference fall squarely in the “reducing existential risk” category. Losing the Summit would be a blow to ensuring a safe future.

I know that the Summit will not itself do the hard work of dissolving and solving problems, or of synthesizing new theories, or of testing those theories, or of implementing solutions. The value of the Summit lies in its ability to raise awareness of the work that needs to be done, to create networks of people to do that work, to lower public shock at the implications of that work, and to generate funding for those doing that work.

I had an expectation that Eliezer probably made around $70k.

Eliezer's compensation is slightly more than I thought. I'm not sure what upper bound I would have balked at or would balk at. I do have some concern about the cost of recruiting additional Research Fellows. The cost of additional RFs has to be weighed against new programs like Visiting Fellows.

At the same time, the organization has been able to expand services without draining the coffers. A donor can hold a strong expectation that the bulk of their donation will go toward actual work in the form of salaries for working personnel or events like the Visiting Fellows Program.

I figured the SIAI had a broad donor base of small donations.

I must have been out to lunch when making this prediction. I figured the SIAI was mostly supported by futurism enthusiasts and small scale rationalists.

The organization has a heavy reliance on major donor support. I would expect the 2010 filing to reveal a broadening of revenue, but I do not expect the organization to have become independent of big donor support. Big donor support is a good thing to have, but more long term stability would be provided by a broader base of supporters.

My suggestions to the SIAI:

- Consider relocating to a cheaper part of the planet. Research Fellows will likely have to accept lower than market average compensation for their work or no compensation at all. Better to live in an area where compensation goes farther.

- Consider increasing savings to allow for a larger safety net and the ability to take advantage of opportunities.

- Consider itemizing program service expenses in more detail. It isn’t required, but the transparency makes for better decision making on the part of donors.

- Consider providing more information on what Eliezer and other Research Fellows are working on from time to time. You are building two communities: a community of polymaths who will solve hard problems, and a community of supporters who believe in the efforts of the polymaths. The latter are more likely to continue their support if they have insight into the activities of the former.

Moving forward:

John Salvatier provided me with good insight into next steps for gaining further clarity into the SIAI’s operational goals, methodology, and financial standing.

- Contact GiveWell for expert advice on organizational analysis to help clarify good next steps.

- Get more information on current and forthcoming SIAI research projects. Is there active work in the research areas the SIAI has identified? Is there a game plan for attacking particular problems in the research space?

- Spend some time gathering information from SIAI members on how they would utilize new funds. Are there specific opportunities the SIAI has identified? Where is the organization “only limited by a lack of cash” -- if they had more funds, what would they immediately pursue?

- Formulate methods of validating the SIAI’s execution of goals. The Summit appears to be an example of efficient execution of the goal of reducing existential risk: it legitimizes the existential risk and AGI problem space and builds networks among interested individuals. How will donors verify the value of SIAI core research work in coming years?

Conclusion

At present, the financial position of the SIAI seems sound. The Singularity Summit stands as a particular success that should be acknowledged. The organization's ability to reduce officer compensation while expanding programs is also notable.

Tax documents can only tell us so much. A deeper look at the SIAI would reveal more of the moving parts within the organization, provide a better account of monthly activities, and provide a means to measure future success or failure. The question for many supporters will not be “should I donate?” but “should I continue to donate?”, a question that can be answered by increased and ongoing transparency.

It is important that those who are concerned with existential risks, AGI, and the safety of future technologies and who choose to donate to the SIAI take a role in shaping a positive future for the organization. Donating in support of AI research is valuable, but donating and also telling others about the donation is far more valuable.

Consider the Sequence post ‘Why Our Kind Can’t Cooperate.’ If the SIAI is an organization worth supporting, and given that they are working in a problem space that currently only has strong traction with “our kind,” then there is a risk of the SIAI failing to reach its maximum potential because donors do not coordinate successfully. If you are a donor, stand up and be counted. Post on Less Wrong and describe why you donated. Let the SIAI post your name. Help other donors see that they aren’t acting alone.

Similarly, if you are critical of the SIAI think about why and write it up. Create a discussion and dig into the details. The path most likely to increase existential risk is the one where rational thinkers stay silent.

The SIAI’s current operating budget and donor revenue are very small. It is well within our community’s ability to effect change.

My research has led me to the conclusion I should donate to the SIAI (above my previous pledge in support of rationality boot camp). I already donate to Alcor and am an Alcor member. I have to determine an amount for the SIAI that won't cause wife aggro. Unilateral household financial decisions increase my personal existential risk. :P I will update this document or make a comment post when I know more.

References:

My working spreadsheet is here.

(1) http://www.singularitysummit.com/

(2) http://lesswrong.com/lw/ts/singularity_summit_2008/

(3) http://www.popsci.com/scitech/article/2009-10/singularity-summit-2009-singularity-near

(4) http://www.popularmechanics.com/technology/engineering/robots/4332783

(5) http://www.guardian.co.uk/technology/2008/nov/06/artificialintelligenceai-engineering

(6) http://www.time.com/time/health/article/0,8599,2048138-1,00.html

(7) http://www.sl4.org/shocklevels.html

(A) Summit Content

- 2006 to 2009: http://intelligence.org/singularitysummit

- 2010: http://www.vimeo.com/album/1519377

208 comments

Comments sorted by top scores.

comment by Louie · 2011-05-02T23:59:50.701Z

If anyone would like to help with fundraising for Singularity Institute (I know the OP expressed interest in the other thread), I can offer coordination and organizing support to help make your efforts more successful.

louie.helm AT singinst.org

I also have ideas for people who would like to help but don't know where to start. Please contact me if you're interested!

For instance, you should probably publicly join SIAI's Facebook Cause Page and help us raise money there. We are stuck at $9,606.01... just a tad shy of $10,000 (Which would be a nice psychological milestone to pass!) This makes us roughly the #350th most popular philanthropic cause on Causes.com... and puts us behind other pressing global concerns like the "Art Creation Foundation For Children in Jacmel Haiti" and "Romania Animal Rescue". Seriously!

And, Yes: Singularity Institute does have other funds that were not raised on this site... but so do these other causes! It wouldn't hurt to look more respectable on public fundraising sites while simultaneously helping to raise money in a very efficient, publicly visible way. One project that might be helpful would be for someone to publicly track our ascent up the fundraising rankings on Causes.com. It would be fun and motivating to follow the "horserace" the same way distributed computing teams track their ability to overtake other teams in CPU donations. I think it would focus attention properly on the fundraising issue, make it more of a "shared concern", give us small, manageable goals, make things way more fun (lots of celebrations as we accomplish goals!), and help more people come up with lots of creative ways to raise money, recruit more members, or do whatever it takes to reach our goals.
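The "horserace" could be tracked with a small script that, given the Causes.com totals for SIAI and competing causes, reports SIAI's rank and the dollars needed to pass the next cause up. A minimal sketch; the function name is hypothetical, and how the competing totals are collected (by hand or otherwise) is left open.

```python
def rank_and_gap(our_total: float, other_totals: list[float]) -> tuple[int, float]:
    """Our rank (1 = most raised) and the dollars needed to strictly pass the next cause up.

    Causes tied with us are not counted as ahead.
    """
    ahead = [t for t in other_totals if t > our_total]
    rank = len(ahead) + 1
    if not ahead:
        return rank, 0.0
    # One cent more than the closest cause above us.
    return rank, round(min(ahead) - our_total + 0.01, 2)


# Distance to the $10,000 milestone from the total quoted above.
print(round(10_000 - 9_606.01, 2))  # 393.99
```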

Replies from: lukeprog, Rain, Pavitra

↑ comment by Rain · 2011-05-04T17:02:51.909Z

Are we supposed to donate through facebook rather than directly?

Replies from: MichaelAnissimov, Wilka, Louie

↑ comment by MichaelAnissimov · 2011-05-05T04:20:00.982Z

YES, please do! It is directly in practice.

↑ comment by Wilka · 2011-05-04T18:33:20.927Z

Good question. I have a recurring direct donation set-up, but maybe donating via the Facebook page will make it more interesting for my friends to have a deeper look (and maybe donate).

Does anyone know what % of the donation via the causes app goes to the charity? I'm guessing it's not 100%, so I'm wondering if that x% is worth it to have it announced on Facebook. Although I could just announce it myself, I think I'll do that next time my donation happens.

Replies from: Louie

↑ comment by Louie · 2011-05-05T01:01:23.661Z

Causes.com charges 4.75% to cover processing fees, but the added benefit of making your donation so publicly visible using Causes.com / Facebook makes it preferable.
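To answer the "x%" question concretely, here is a minimal sketch of the fee arithmetic using the 4.75% rate quoted above (the rate as stated in May 2011; it may have changed). The function names are hypothetical.

```python
CAUSES_FEE_RATE = 0.0475  # processing fee quoted above for Causes.com (May 2011)


def net_to_charity(gross: float, fee_rate: float = CAUSES_FEE_RATE) -> float:
    """Amount the charity receives after the processing fee, rounded to the cent."""
    return round(gross * (1 - fee_rate), 2)


def gross_for_net(net_target: float, fee_rate: float = CAUSES_FEE_RATE) -> float:
    """Approximate donation (rounded to the cent) so the charity nets net_target."""
    return round(net_target / (1 - fee_rate), 2)


print(net_to_charity(100))  # 95.25
print(gross_for_net(100))   # 104.99
```

In other words, each $100 given through the app costs the charity $4.75 relative to a direct donation; whether the public visibility is worth that is the judgment call discussed above.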

We're currently only the #22 most supported cause in the "Education" section. A few hundred dollars more would put us in the top 20.

comment by XiXiDu · 2011-05-03T14:56:08.091Z

The organization reported $118,803.00 in theft in 2009 resulting in a year end asset balance lower than expected. The SIAI is currently pursuing legal restitution.

It isn't much harder to steal code than to steal money from a bank account. Given the nature of research being conducted by the SIAI, one of the first and most important steps would have to be to think about adequate security measures.

If you are a potential donor interested in mitigating risks from AI, then before contributing money you will have to make sure that your contribution does not increase those risks even further.

If you believe that risks from AI are to be taken seriously, then you should demand that any organisation that studies artificial general intelligence establish significant measures against third-party intrusion and industrial espionage that are at least on par with the biosafety level 4 required for work with dangerous and exotic agents.

It might be the case that the SIAI does already employ various measures against the possibility of theft of sensitive information, yet any evidence that hints at the possibility of weak security should be taken seriously. Especially the possibility that there are potentially untrustworthy people who can access critical material should be examined.

Replies from: CarlShulman, jsalvatier, katydee, katydee, wedrifid, timtyler

↑ comment by CarlShulman · 2011-05-03T18:14:59.911Z

Upvoted for raising some important points. Ceteris paribus, one failure of internal controls is nontrivial evidence of future ones.

For these purposes one should distinguish between sections of the organization. Eliezer Yudkowsky and Marcello Herreshoff's AI work is a separate 'box' from other SIAI activities such as the Summit, Visiting Fellows program, etc. Eliezer is far more often said to be too cautious and secretive with respect to that than the other way around.

↑ comment by jsalvatier · 2011-05-03T17:55:14.175Z

I think this is a legitimate concern. It's probably not a significant issue right now, but it definitely would be one if SIAI started making dramatic progress towards AGI. I don't think it deserves the downvotes it's getting.

Replies from: Vladimir_Nesov, BrandonReinhart, JohnH

↑ comment by Vladimir_Nesov · 2011-05-03T20:09:38.532Z

Note: the comment has been completely rewritten since the original wave of downvoting. It's much better now.

↑ comment by BrandonReinhart · 2011-05-03T20:05:11.950Z

I agree, this doesn't deserve to be downvoted.

It should be possible for the SIAI to build security measures while also providing some transparency into the nature of that security in a way that doesn't also compromise it. I would bet that Eliezer has thought about this, or at least thought about the fact that he needs to think about it in more detail. This would be something to look into in a deeper examination of SIAI plans.

↑ comment by JohnH · 2011-05-03T19:35:57.222Z

I am more concerned about the possibility that random employees at Google will succeed in making an AGI than I am about SIAI constructing one. To begin with, suppose there were only 1000 employees at Google who were interested in AGI, they were only interested in it enough to work 1 hour a month each, and they were only 80% as effective as Eliezer (as being some of the smartest people in the world doesn't quite put them on the same level as Eli). Then if Eliezer would have AGI in, say, 2031, Google would have it in about 2017.
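The estimate above assumes that effort adds up linearly across people. A minimal sketch reproducing it; the function name is hypothetical, and two inputs are assumptions not stated in the comment: counting from 2011, and a solo baseline of 8 hours a day for 30 days a month. Under those assumptions the arithmetic lands on 2017. The linear-scaling premise itself is what TheOtherDave's reply questions.

```python
def linear_scaling_estimate(start_year: int, solo_finish_year: int,
                            solo_hours_per_month: float, people: int,
                            hours_each_per_month: float,
                            relative_effectiveness: float) -> float:
    """Finish year for a team, assuming effort adds up linearly across people."""
    solo_years = solo_finish_year - start_year
    team_hours_per_month = people * hours_each_per_month * relative_effectiveness
    # Years shrink in proportion to the ratio of effective hours per month.
    return start_year + solo_years * solo_hours_per_month / team_hours_per_month


# Assumed: counting from 2011; the solo baseline works 8 hours/day, 30 days/month.
print(linear_scaling_estimate(2011, 2031, 8 * 30, 1000, 1, 0.8))  # 2017.0
```

With a 160-hour working month as the baseline instead, the same model gives 2015, so the result is sensitive to that assumption.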

Replies from: TheOtherDave

↑ comment by TheOtherDave · 2011-05-03T20:03:06.651Z

Personally, I expect even moderately complicated problems -- especially novel ones -- to not scale or decompose at all cleanly.

So, leaving aside all questions about who is smarter than whom, I don't expect a thousand smart people working an hour a month on a project to be nearly as productive as one smart person working eight hours a day.

If you could share your reasons for expecting otherwise, I might find them enlightening.

↑ comment by JohnH · 2011-05-03T20:20:39.171Z · LW(p) · GW(p)

The idea is that they are sharing their information and findings, so that while they are less efficient than someone working constantly on the problem, they are able to point out possible solutions to each other that one person working by himself would be less likely to notice except through a longer process. As there would be four to five people working on the project at any one time during the month, I assume they would be working in a group and would stagger their times so that a nearly continuous effort is produced. Also, as much of the problem involves thinking about things, by not focusing on the issue constantly they may be more likely to come up with a solution than if they were focusing on it constantly.

This is a hypothetical, I have no idea how many people at Google are interested in AI or how much time they spend on it. I would imagine that there most likely are quite a few people at Google working on AGI as it relates directly to Google's core business and that they work on it significantly more than one hour a month each.

(Edit: the comment about intelligence and Eli was a pun.)

↑ comment by katydee · 2011-05-04T04:49:26.374Z · LW(p) · GW(p)

I am at least 95% confident that the procedures that govern access to money in SIAI are both different from and far less strict than the procedures that would be used to govern access to code. Even the woefully obsolete "So You Want To Be A Friendly AI Programmer" document contains procedures that are more strict than this for dealing with actual AI code, and my suspicion is that these are likely to have updated only in the direction of more strictness since.

Disclaimer: I am neither an affiliate of nor a donor to the SIAI.

↑ comment by wedrifid · 2011-05-04T03:47:35.918Z · LW(p) · GW(p)

It isn't much harder to steal code than to steal money from a bank account. Given the nature of research being conducted by the SIAI, one of the first and most important steps would have to be to think about adequate security measures.

This would certainly be a critical consideration if or when the SIAI was actually doing work directly on AGI construction. I don't believe that is a focus in the near future. There is too much to be done before that becomes a possibility.

(Not that establishing security anyway is a bad thing.)

↑ comment by timtyler · 2011-05-05T19:57:52.964Z · LW(p) · GW(p)

If you believe that risks from AI are to be taken seriously then you should demand that any organisation that studies artificial general intelligence has to establish significant measures against third-party intrusion and industrial espionage that is at least on par with the biosafety level 4 required for work with dangerous and exotic agents.

What if you believe in openness and transparency - and feel that elaborate attempts to maintain secrecy will cause your partners to believe you are hiding motives or knowledge from them - thereby tarnishing your reputation - and making them trust you less by making yourself appear selfish and unwilling to share?

Surely, then the strategies you refer to could easily be highly counter-productive.

Basically, if you misguidedly impose secrecy on the organisations involved then the good guys have fewer means of honestly signalling their altruism towards each other - and cooperating with each other - which means that their progress is slower and their relative advantage is diminished. That is surely bad news for overall risk.

The "opposite" strategy is much better, IMO. Don't cooperate with secretive non-sharers. They are probably selfish bad guys. Sharing now is the best way to honestly signal that you will share again in the future.

comment by DSimon · 2011-05-02T20:59:38.388Z · LW(p) · GW(p)

Is any other information publicly available at the moment about the theft? The total amount stolen is large enough that it's noteworthy as part of a consideration of SIAI's financial practices.

↑ comment by anonynamja · 2011-05-02T21:45:12.388Z · LW(p) · GW(p)

Yes, I want to know that steps have been taken to minimize the possibility of future thefts.

↑ comment by CarlShulman · 2011-05-03T09:41:25.809Z · LW(p) · GW(p)

Yes, I want to know that steps have been taken to minimize the possibility of future thefts.

I don't know all the details of the financial controls, but I do know that members of the board have been given the ability to monitor bank transactions and accounts online at will to detect unusual activity and have been reviewing transactions at the monthly board meetings. There has been a major turnover of the composition of the board, which now consists of three major recent donors, plus Michael Vassar and Eliezer Yudkowsky.

Also, the SIAI pressed criminal charges rather than a civil suit to cultivate an internal reputation to deter repeats.

↑ comment by timtyler · 2011-05-02T21:22:35.649Z · LW(p) · GW(p)

See here. Incidentally, that post seems a lot like this one.

↑ comment by BrandonReinhart · 2011-05-02T21:26:17.416Z · LW(p) · GW(p)

At this point an admin should undelete the original SIAI Fundraising discussion post. I can't seem to do it myself. I can update it with a pointer to this post.

↑ comment by brazil84 · 2011-05-03T04:40:30.093Z · LW(p) · GW(p)

Surely if the SIAI is pursuing legal restitution, there are documents with more details which can be posted. For example a copy of a civil complaint. (Normally civil complaints are a matter of public record.)

Let's face reality: It's totally embarrassing that an organization dedicated to reducing existential risk for humanity was not able to protect itself from an extremely common and mundane risk faced by organizations day after day.

Of greater concern is that the SIAI may be falling into the common institutional trap of suppressing information simply because it is embarrassing.

↑ comment by CarlShulman · 2011-05-03T10:14:49.767Z · LW(p) · GW(p)

civil complaint

It's a criminal case.

↑ comment by katydee · 2011-05-03T14:08:14.723Z · LW(p) · GW(p)

Why is it embarrassing that an organization dedicated to reducing one sort of risk fell prey to a risk of another sort?

↑ comment by brazil84 · 2011-05-03T23:26:50.582Z · LW(p) · GW(p)

For one thing, the two risks are interrelated. Money is the life's blood of any organization. (Indeed, Eliezer regularly emphasizes the importance of funding to SIAI's mission). Thus, a major financial blow like this is a serious impediment to SIAI's ability to do its job. If you seriously believe that SIAI is significantly reducing existential risk to mankind, then this theft represents an appreciable increase in existential risk to mankind.

Second, SIAI's leadership is selling us an approach to thinking which is supposed to be of general application; that is, it's not just for preventing AI-related disasters, it's also supposed to make you better at achieving more mundane goals in life. I can't say for sure without knowing the details, but if a small not-for-profit has $100k+ stolen from it, that very likely represents a failure of thinking on the part of the organization.

↑ comment by [deleted] · 2011-05-03T23:59:24.317Z · LW(p) · GW(p)

There is an area of thought without which we can't get anywhere, but which is hard to teach, and that is generating good hypotheses. Once we have a hypothesis in hand, then we can evaluate it. We can compare it to alternatives and use Bayes' formula to update the probabilities that we assign the rival hypotheses. We can evaluate arguments for and against the hypothesis by for example identifying biases and other fallacious reasoning. But none of this will get us where we want to go unless we happen to have selected the true hypothesis (or one "true enough" in a restricted domain, as Newton's law of gravity is true enough in a restricted domain). If all you have to evaluate is a bunch of false hypotheses, then Bayesian updating is just going to move you from one false hypothesis to another. You need to have the true hypothesis among your candidate hypotheses if Bayesian updating is ever going to take you to it. Of course, this is a tall order given that, by assumption, you don't know ahead of time what the truth is. However, the record of discoveries so far suggests that the human race doesn't completely suck at it.

Generating the true hypothesis (or indeed any hypothesis) involves, I am inclined to say, an act of imagination. For this reason, "use your imagination" is seriously good advice. People who are blindsided by reality sometimes say something like, they never imagined that something like this could happen. I've been blindsided by reality precisely because I never considered certain possibilities which consequently took me by surprise.

↑ comment by brazil84 · 2011-05-04T00:24:59.161Z · LW(p) · GW(p)

I agree. It reminds me of a fictional dialogue from a movie about the Apollo 1 disaster:

Clinton Anderson: [at the senate inquiry following the Apollo 1 fire] Colonel, what caused the fire? I’m not talking about wires and oxygen. It seems that some people think that NASA pressured North American to meet unrealistic and arbitrary deadlines and that in turn North American allowed safety to be compromised.

Frank Borman: I won’t deny there’s been pressure to meet deadlines, but safety has never been intentionally compromised.

Clinton Anderson: Then what caused the fire?

Frank Borman: A failure of imagination. We’ve always known there was the possibility of fire in a spacecraft. But the fear was that it would happen in space, when you’re 180 miles from terra firma and the nearest fire station. That was the worry. No one ever imagined it could happen on the ground. If anyone had thought of it, the test would’ve been classified as hazardous. But it wasn’t. We just didn’t think of it. Now whose fault is that? Well, it’s North American’s fault. It’s NASA’s fault. It’s the fault of every person who ever worked on Apollo. It’s my fault. I didn’t think the test was hazardous. No one did. I wish to God we had.

comment by [deleted] · 2011-05-04T09:10:17.362Z · LW(p) · GW(p)

Does anyone know if the finances of the Cryonics Institute or Alcor have been similarly dissected and analyzed? That kind of paper could literally be the difference between life and death for many of us.

↑ comment by jsalvatier · 2011-05-04T23:31:55.871Z · LW(p) · GW(p)

I'm guessing no. As a CI member, I agree it would be useful.

↑ comment by BrandonReinhart · 2011-05-04T23:37:19.671Z · LW(p) · GW(p)

I would be willing to do this work, but I need some "me" time first. The SIAI post took a bunch of spare time and I'm behind on my guitar practice. So let me relax a bit and then I'll see what I can find. I'm a member of Alcor and John is a member of CI and we've already noted some differences so maybe we can split up that work.

↑ comment by jsalvatier · 2011-05-05T00:49:43.483Z · LW(p) · GW(p)

I might be willing to do this, but I am somewhat reluctant because I feel like it might be emotionally taxing.

I would, however, be very enthusiastic about sponsoring someone else's work and willing to invest a substantial amount. I'm not sure how to go about arranging that, though.

↑ comment by [deleted] · 2011-05-05T02:30:27.747Z · LW(p) · GW(p)

Seconded about investment. That could reduce the collective action problem. One possible implementation here.

If there were a 100-page post written about choosing between Alcor and CI, I'd read it. I plan to be hustling people to sign up for cryonics until I'm a glass statue myself, so the more up-to-date information and transparency, the better.

↑ comment by jsalvatier · 2011-05-05T06:17:28.727Z · LW(p) · GW(p)

Feel free to contribute to this thread.

↑ comment by gwern · 2011-05-11T15:05:52.967Z · LW(p) · GW(p)

I don't know of anything. Before I did the Girl Scouts reading, I did once briefly look through the Alcor filings to ascertain how much of a loss they ran at. (About 0.7m a year for 2008.)

↑ comment by CarlShulman · 2011-05-05T02:35:40.993Z · LW(p) · GW(p)

I went to Alcor recently to interview the staff at length and in detail for due diligence. I could add info in comments to such a post.

comment by jsalvatier · 2011-05-04T14:54:52.776Z · LW(p) · GW(p)

This GiveWell thread includes a transcript of a discussion between GiveWell and SIAI representatives.

↑ comment by jsalvatier · 2011-05-04T15:18:12.367Z · LW(p) · GW(p)

Michael Vassar is working on an idea he calls the "Persistent Problems Group" or PPG. The idea is to assemble a blue-ribbon panel of recognizable experts to make sense of the academic literature on very applicable, popular, but poorly understood topics such as diet/nutrition. This would have obvious benefits for helping people understand what the literature has and hasn't established on important topics; it would also be a demonstration that there is such a thing as "skill at making sense of the world."

I am a little surprised about the existence of the Persistent Problems Group; it doesn't sound like it has a lot to do with SIAI's core mission (mitigating existential risk, as I understand it). I'd be interested in hearing more about that group and the logic behind the project.

Overall the transcript made me less hopeful about SIAI.

↑ comment by BrandonReinhart · 2011-05-04T16:48:50.620Z · LW(p) · GW(p)

"Michael Vassar's Persistent Problems Group idea does need funding, though it may or may not operate under the SIAI umbrella."

It sounds like they have a similar concern.

↑ comment by TimFreeman · 2011-05-04T20:09:52.166Z · LW(p) · GW(p)

Is there any reason to believe that the Persistent Problems Group would do better at making sense of the literature than people who write survey papers? There are lots of survey papers published on various topics in the same journals that publish the original research, so if those are good enough we don't need yet another level of review to try to make sense of things.

↑ comment by XFrequentist · 2011-05-04T19:18:58.157Z · LW(p) · GW(p)

Eric Drexler made what sounds to me like a very similar proposal, and something like this is already done by a few groups, unless I'm missing some distinguishing feature.

I'd be very interested in seeing what this particular group's conclusions were, as well as which methods they would choose to approach these questions. It does seem a little tangential to the SIAI's stated mission, though.

↑ comment by ata · 2011-05-04T23:47:03.352Z · LW(p) · GW(p)

Google has an "AGI team"?

↑ comment by Kevin · 2011-05-05T09:30:38.806Z · LW(p) · GW(p)

Yup. I think right now they're doing AGI-ish work though, not "let's try and build an AGI right now".

http://www.google.com/research/pubs/author37920.html

↑ comment by PhilGoetz · 2011-05-05T17:16:07.511Z · LW(p) · GW(p)

Google has AFAIK more computer power than any other organization in the world, works on natural language recognition, and wants to scan in all the books in the world. Coincidence?

↑ comment by timtyler · 2011-05-04T23:53:17.156Z · LW(p) · GW(p)

They are hosting and sponsoring an AGI conference in August 2011:

"AGI-11 will be hosted by Google in Mountain View, California."

↑ comment by ata · 2011-05-04T23:56:26.712Z · LW(p) · GW(p)

Yeah, I found that earlier. I was referring to the line in the linked document that says "A friend of the community was hired for Google's AGI team, and another may be soon." That doesn't seem to be referring just to the conference.

↑ comment by timtyler · 2011-05-05T08:05:14.701Z · LW(p) · GW(p)

Google is a major machine intelligence company.

At least one of their existing products aims pretty directly at general-purpose intelligence.

They have a range of other intelligence-requiring applications: translate, search, goggles, street view, speech recognition.

They have expressed their AI ambitions plainly in the past:

We have some people at Google who are really trying to build artificial intelligence and do it on a large scale.

...however, "Google's AGI team" is interesting phrasing. It probably refers to Google Research.

Moshe Looks has worked for Google Research since 2007, goes to the AGI conferences - e.g. see here - and was once described as a SIAI "scientific advisor" on their blog - the most probable source of this tale, IMO.

Google Research certainly has some interesting publications in machine intelligence and machine learning.

↑ comment by PhilGoetz · 2011-05-04T22:33:38.336Z · LW(p) · GW(p)

These two excerpts summarize where I disagree with SIAI:

Our needs and opportunities could change in a big way in the future. Right now we are still trying to lay the basic groundwork for a project to build an FAI. At the point where we had the right groundwork and the right team available, that project could cost several million dollars per year.

As to patents and commercially viable innovations - we're not as sure about these. Our mission is ultimately to ensure that FAI gets built before UFAI; putting knowledge out there with general applicability for building AGI could therefore be dangerous and work directly against our mission.

So, SIAI plans to develop an AI that will take over the world, keeping their techniques secret, and therefore not getting critiques from the rest of the world.

This is WRONG. Horrendously, terrifyingly, irrationally wrong.

There are two major risks here. One is the risk of an arbitrarily-built AI, made not with Yudkowskian methodologies, whatever they will be, but with due diligence and precautions taken by the creators to not build something that will kill everybody.

The other is the risk of building a "FAI" that works, and then successfully becomes dictator of the universe for the rest of time, and this turns out more poorly than we had hoped.

I'm more afraid of the second than of the first. I find it implausible that it is harder to build an AI that doesn't kill or enslave everybody, than to build an AI that does enslave everybody, in a way that wiser beings than us would agree was beneficial.

And I find it even more implausible, if the people building the one AI can get advice from everyone else in the world, while the people building the FAI do not.

↑ comment by Rain · 2011-05-05T01:56:13.600Z · LW(p) · GW(p)

I think of it this way:

- Chance SIAI's AI is Unfriendly: 80%

- Chance anyone else's AI is Unfriendly: >99%

- Chance SIAI builds their AI first: 10%

- Chance SIAI builds their AI first while making all their designs public: <1% (no change to other probabilities)
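Rain's comparison is an application of the law of total probability: the chance that the first AI is Friendly is the chance SIAI is first times SIAI's Friendliness rate, plus the chance someone else is first times theirs. A minimal sketch with his illustrative numbers, assuming the bounds ">99%" and "<1%" can be treated as exactly 99% and 1% (an assumption; the function name is hypothetical):

```python
from fractions import Fraction

# Rain's illustrative figures (he later says they were exaggerated for effect).
# Assumption: the bounds ">99%" and "<1%" are treated as exactly 99% and 1%.
P_FRIENDLY_IF_SIAI_FIRST = 1 - Fraction(80, 100)    # 20%
P_FRIENDLY_IF_OTHER_FIRST = 1 - Fraction(99, 100)   # 1%

def p_friendly(p_siai_first):
    """Total probability that the first AI is Friendly, split on who builds it first."""
    return (p_siai_first * P_FRIENDLY_IF_SIAI_FIRST
            + (1 - p_siai_first) * P_FRIENDLY_IF_OTHER_FIRST)

secret = p_friendly(Fraction(10, 100))   # SIAI keeps its designs private
public = p_friendly(Fraction(1, 100))    # SIAI publishes all its designs
print(float(secret), float(public))      # 0.029 0.0119
```

Because publishing only lowers SIAI's chance of being first (the other probabilities are unchanged by his stipulation), the total falls from about 2.9% to about 1.2% on these numbers.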

↑ comment by PhilGoetz · 2011-05-15T04:57:12.493Z · LW(p) · GW(p)

An AI that is successfully "Friendly" poses an existential risk of a kind that other AIs don't pose. The main risk from an unfriendly AI is that it will kill all humans. That isn't much of a risk; humans are on the way out in any case. Whereas the main risk from a "friendly" AI is that it will successfully impose a single set of values, defined by hairless monkeys, on the entire Universe until the end of time.

And, if you are afraid of unfriendly AI because you're afraid it will kill you - why do you think that a "Friendly" AI is less likely to kill you? An "unfriendly" AI is following goals that probably appear random to us. There are arguments that it will inevitably take resources away from humans, but these are just that - arguments. Whereas a "friendly" AI will be designed to try to seize absolute power, and take every possible measure to prevent humans from creating another AI. If your name appears on this website, you're already on its list of people whose continued existence will be risky.

(Also, all these numbers seem to be pulled out of thin air.)

↑ comment by nshepperd · 2011-05-15T14:03:37.619Z · LW(p) · GW(p)

I see no reason an AI with any other expansionist value system will not exhibit the exact same behaviour, except towards a different goal. There's nothing so special about human values (except that they're, y'know, good, but that's a different issue).

↑ comment by Rain · 2011-05-15T13:42:19.869Z · LW(p) · GW(p)

You're using a different definition of "friendly" than I am. An 80% chance SIAI's AI is Unfriendly already contains all of your "takes over but messes everything up in unpredictable ways" scenarios.

The numbers were exaggerated for effect, to show contrast and my thought process. It seems to me that you think the probabilities are reversed.

↑ comment by timtyler · 2011-05-15T14:32:53.108Z · LW(p) · GW(p)

And, if you are afraid of unfriendly AI because you're afraid it will kill you - why do you think that a "Friendly" AI is less likely to kill you?

One definition of the term explains:

The term "Friendly AI" refers to the production of human-benefiting, non-human-harming actions in Artificial Intelligence systems that have advanced to the point of making real-world plans in pursuit of goals.

See the "non-human-harming" bit. Regarding:

If your name appears on this website, you're already on its list of people whose continued existence will be risky.

Yes, one of their PR problems is that they are implicitly threatening their rivals. In the case of Ben Goertzel some of the threats are appearing IRL. Let us hear the tale of how threats and nastiness will be avoided. No plan is not a good plan, in this particular case.

↑ comment by TimFreeman · 2011-05-15T18:22:01.719Z · LW(p) · GW(p)

An AI that is successfully "Friendly" poses an existential risk of a kind that other AIs don't pose. The main risk from an unfriendly AI is that it will kill all humans. That isn't much of a risk

What do you mean by existential risk, then? I thought things that killed all humans were, by definition, existential risks.

humans are on the way out in any case.

What, if anything, do you value that you expect to exist in the long term?

There are arguments that [a UFAI] will inevitably take resources away from humans, but these are just that - arguments.

Pretty compelling arguments, IMO. It's simple -- the vast majority of goals can be achieved more easily if one has more resources, and humans control resources, so an entity that is able to self-improve will tend to seize control of all the resources and therefore take control of those resources from the humans.

Do you have a counterargument, or something relevant to the issue that isn't just an argument?

↑ comment by wedrifid · 2011-05-15T15:40:50.671Z · LW(p) · GW(p)

AI will be designed to try to seize absolute power, and take every possible measure to prevent humans from creating another AI. If your name appears on this website, you're already on its list of people whose continued existence will be risky.

Not much risk. Hunting down irrelevant blog commenters is a greater risk than leaving them be. There isn't much of a window during which any human is the slightest threat, and during that window going around killing people is just going to enhance the risk to it.

↑ comment by timtyler · 2011-05-15T16:15:06.569Z · LW(p) · GW(p)

The window is presumably between now and when the winner is obvious - assuming we make it that far.

IMO, there's plenty of scope for paranoia in the interim. Looking at the logic so far, some teams will reason that unless their chosen values get implemented, much of value is likely to be lost. They will then multiply that by a billion years and a billion planets - and conclude that their competitors might really matter.

Killing people might indeed backfire - but that still leaves plenty of scope for dirty play.

↑ comment by wedrifid · 2011-05-15T16:25:24.340Z · LW(p) · GW(p)

The window is presumably between now and when the winner is obvious

No. Reread the context. This is the threat from "F"AI, not from designers. The window opens when someone clicks 'run'.

↑ comment by timtyler · 2011-05-15T17:54:30.205Z · LW(p) · GW(p)

Uh huh. So: world view difference. Corps and orgs will most likely go from 90% human to 90% machine through the well-known and gradual process of automation, gaining power as they go - and the threats from bad organisations are unlikely to be something that will appear suddenly at some point.

↑ comment by Luke Stebbing (LukeStebbing) · 2011-05-05T22:37:12.809Z · LW(p) · GW(p)

If we take those probabilities as a given, they strongly encourage a strategy that increases the chance that the first seed AI is Friendly.

jsalvatier already had a suggestion along those lines:

I wonder if SIAI could publicly discuss the values part of the AI without discussing the optimization part.

A public Friendly design could draw funding, benefit from technical collaboration, and hopefully end up used in whichever seed AI wins. Unfortunately, you'd have to decouple the F and AI parts, which is impossible.

↑ comment by jsalvatier · 2011-05-06T16:55:05.669Z · LW(p) · GW(p)

Isn't CEV an attempt to separate F and AI parts?

↑ comment by Luke Stebbing (LukeStebbing) · 2011-05-06T16:58:19.547Z · LW(p) · GW(p)

It's a good first step.

↑ comment by jsalvatier · 2011-05-06T17:05:31.963Z · LW(p) · GW(p)

I don't understand your impossibility comment, then.

↑ comment by Luke Stebbing (LukeStebbing) · 2011-05-06T17:38:03.923Z · LW(p) · GW(p)

I'm talking about publishing a technical design of Friendliness that's conserved under self-improving optimization without also publishing (in math and code) exactly what is meant by self-improving optimization. CEV is a good first step, but a programmatically reusable solution it is not.

Before you the terrible blank wall stretches up and up and up, unimaginably far out of reach. And there is also the need to solve it, really solve it, not "try your best".

↑ comment by jsalvatier · 2011-05-06T17:44:50.240Z · LW(p) · GW(p)

OK, I understand that much better now. Great point.

↑ comment by jsalvatier · 2011-05-05T06:10:25.191Z · LW(p) · GW(p)

I wonder if SIAI could publicly discuss the values part of the AI without discussing the optimization part. The values part seems to me (and from what I can tell, you too) where the most good would be done by public discussion while the optimization part seems to me where the danger lies if the information gets out.

↑ comment by wedrifid · 2011-05-05T06:38:16.515Z · LW(p) · GW(p)

I wonder if SIAI could publicly discuss the values part of the AI without discussing the optimization part. The values part seems to me (and from what I can tell, you too) where the most good would be done by public discussion while the optimization part seems to me where the danger lies if the information gets out.

Not honestly. When discussing values publicly you more or less have to spin bullshit. I would expect any public discussion the SIAI engaged in to be downright sickening to read and any interesting parts quickly censored. I'd much prefer no discussion at all - or discussion done by other people outside the influence or direct affiliation with the SIAI. That way the SIAI would not be obliged to distort or cripple the conversation for the sake of PR nor able to even if it wanted to.

↑ comment by Nick_Tarleton · 2011-05-05T16:16:09.140Z · LW(p) · GW(p)

I would expect any public discussion the SIAI engaged in to be downright sickening to read and any interesting parts quickly censored.

CEV doesn't seem to fit this description.

↑ comment by wedrifid · 2011-05-05T16:49:08.735Z · LW(p) · GW(p)

CEV doesn't seem to fit this description.

CEV is one of the things which, if actually explored thoroughly, would definitely fit this description. As it is it is at the 'bullshit border'. That is, a point at which you don't yet have to trade off epistemic considerations in favor of signalling to the lowest common denominator. Because it is still credible that the not-superficially-nice parts just haven't been covered yet - rather than being outright lied about.

↑ comment by katydee · 2011-05-05T17:04:03.466Z · LW(p) · GW(p)

Do you have evidence for this proposition?

↑ comment by PhilGoetz · 2011-05-15T04:50:15.127Z · LW(p) · GW(p)

I agree entirely with both of wedrifid's comments above. Just read the CEV document, and ask, "If you were tasked with implementing this, how would you do it?" I tried unsuccessfully many times to elicit details from Eliezer on several points back on Overcoming Bias, until I concluded he did not want to go into those details.

One obvious question is, "The expected value calculations that I make from your stated beliefs indicate that your Friendly AI should prefer killing a billion people over taking a 10% chance that one of them is developing an AI; do you agree?" (If the answer is "no", I suspect that is only due to time discounting of utility.)

↑ comment by DrRobertStadler · 2011-09-13T20:51:02.705Z · LW(p) · GW(p)

Surely though if the FAI is in a position to be able to execute that action, it is in a position where it is so far ahead of an AI someone could be developing that it would have little fear of that possibility as a threat to CEV?

↑ comment by PhilGoetz · 2011-09-15T22:18:08.028Z · LW(p) · GW(p)

It won't be very far ahead of an AI in real time. The idea that the FAI can get far ahead is based on the idea that it can develop very far in a "small" amount of time. Well, so can the new AI - and who's to say it can't develop 10 times as quickly as the FAI? So how can a one-year-old FAI be certain that there isn't an AI project that was started secretly 6 months ago and is about to overtake it in intelligence?
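The scenario can be made concrete with a deliberately crude toy model. This sketch assumes progress is linear at a constant rate (unlike the accelerating "foom" the thread has in mind); `catch_up_time` is a hypothetical helper, not anything from the thread:

```python
from fractions import Fraction

def catch_up_time(lag_years, speed_ratio):
    """Toy linear model: project A starts at t = 0 and progresses at rate 1;
    project B starts `lag_years` later at `speed_ratio` times A's rate.
    Returns the time (years after A started) at which B's progress equals
    A's, or None if B never catches up."""
    if speed_ratio <= 1:
        return None  # an equally fast or slower latecomer never catches up
    # Solve t == speed_ratio * (t - lag_years) for t.
    return Fraction(speed_ratio) * lag_years / (speed_ratio - 1)

# The comment's scenario: the rival starts six months after the FAI
# and develops ten times as quickly.
t = catch_up_time(Fraction(1, 2), 10)
print(t)  # 5/9
```

On these assumptions the rival draws level about 5/9 of a year (roughly 6.7 months) after the FAI started, well before the FAI's first birthday, so a one-year head start in calendar time says little by itself.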

↑ comment by wedrifid · 2011-05-05T17:30:33.010Z · LW(p) · GW(p)

It is a somewhat complex issue, best understood by following what is (and isn't) said in conversations along the lines of CEV (and sometimes metaethics) when the subject comes up. I believe the last time was a month or two ago in one of lukeprog's posts.

Mind you this is a subject that would take a couple of posts to properly explore.

↑ comment by Will_Newsome · 2011-05-13T01:12:51.367Z · LW(p) · GW(p)

Because it is still credible that the not-superficially-nice parts just haven't been covered yet - rather than being outright lied about.

Isn't exploring the consequences of something like CEV pretty boring? Naively, the default scenario conditional on a large amount of background assumptions about relative optimization possible from various simulation scenarios et cetera is that the FAI fooms along possibly metaphysical spatiotemporal dimensions turning everything into acausal economic goodness. Once you get past the 'oh no that means it kills everything I love' part it's basically a dead end. No? Note: the publicly acknowledged default scenario for a lot of smart people is a lot more PC than this. It's probably not default for many people at all. I'm not confident in it.

↑ comment by Dorikka · 2011-05-13T01:45:11.270Z

the FAI fooms along possibly metaphysical spatiotemporal dimensions turning everything into acausal economic goodness.

I don't really understand what this means, so I don't see why the next bit follows. Could you break this down, preferably using simpler terms?

↑ comment by timtyler · 2011-05-05T08:26:32.599Z

The values part seems to me (and from what I can tell, you too) where the most good would be done by public discussion while the optimization part seems to me where the danger lies if the information gets out.

The problem is if one organisation with dubious values gets far ahead of everyone else. That situation is likely to be the result of keeping secrets in this area.

Openness seems more likely to create a level playing field where the good guys have an excellent chance of winning. Those promoting secrecy are part of the problem here, IMO. I think we should leave the secret projects to the NSA and IARPA.

The history of IT shows many cases where use of closed solutions led to monopolies and problems. I think history shows that closed source solutions are mostly good for those selling them, but bad for the rest of society. IMO, we really don't want machine intelligence to be like that.

Many governments realise the significance of open source software these days - e.g. see: "The government gets really serious about open source."

↑ comment by jimrandomh · 2011-05-05T13:19:37.748Z

The problem is if one organisation with dubious values gets far ahead of everyone else. That situation is likely to be the result of keeping secrets in this area.

It's likely to be the result of organizations with dubious values keeping secrets in this area. The good guys being open doesn't make it better, it makes it worse, by giving the bad guys an asymmetric advantage.

↑ comment by timtyler · 2011-05-05T22:47:24.148Z

We discussed this very recently.

The good guys want to form a large cooperative network with each other, to help ensure they reach the goal first. Sharing is one of the primary ways they have of signalling to each other that they are good guys. Signalling must be expensive to be credible, and this is a nice, relevant, expensive signal. Being secretive - and failing to share - identifies you as a selfish bad guy in the eyes of the sharers.

It is not an advantage to be recognised by good guys as a probable bad guy. For one thing, it most likely means you get no technical support.

A large cooperative good-guy network is a major win in terms of risk - compared to the scenario where everyone is secretive. The bad guys get some shared source code - but that in no way makes up for how much worse their position is overall.

To get ahead, the bad guys have to pretend to be good guys. To convince others of this - in the face of the innate human lie-detector abilities - they may even need to convince themselves they are good guys...

↑ comment by jimrandomh · 2011-05-05T22:51:57.413Z

You never did address the issue I raised in the linked comment. As far as I can tell, it's a showstopper for open-access development models of AI.

↑ comment by timtyler · 2011-05-06T21:00:21.978Z

You gave some disadvantages of openness - I responded with a list of advantages of openness. Why you concluded this was not responsive is not clear.

Conventional wisdom about open source and security is that it helps - e.g. see Bruce Schneier on the topic.

Personally, I think the benefits of openness win out in this case too.

That is especially true for the "inductive inference" side of things - which I estimate to be about 80% of the technical problem of machine intelligence. Keeping that secret is just a fantasy. Versions of that are going to be embedded in every library in every mobile computing device on the planet - doing input prediction, compression, and pattern completion. It is core infrastructure. You can't hide things like that.

Essentially, you will have to learn to live with the possibility of bad guys using machine intelligence to help themselves. You can't really stop that - so don't think that you can - and instead focus on what you can change: for example, reducing the opportunities for them to win, limiting the resulting damage, etc.

↑ comment by PhilGoetz · 2011-05-15T04:38:55.633Z

In this case, I'm less afraid of "bad guys" than I am of "good guys" who make mistakes. The bad guys just want to rule the Earth for a little while. The good guys want to define the Universe's utility function.

↑ comment by timtyler · 2011-05-15T18:53:40.912Z

I'm less afraid of "bad guys" than I am of "good guys" who make mistakes.

Looking at the history of accidents involving machines, they seem to be mostly automobile accidents. Medical accidents are number two, I think.

In both cases, technology that proved dangerous was used deliberately - before the relevant safety features could be added - due to the benefits it gave in the meantime. It seems likely that we will see more of that - in conjunction with the overall trend towards increased safety.

My position on this is the opposite of yours. I think there are probably greater individual risks from a machine intelligence working properly for someone else than there are from an accident. Both positions are players, though.

↑ comment by hairyfigment · 2011-05-15T19:06:00.703Z

Now I'm confused again. Who do you worry about if not the NSA?

↑ comment by jsalvatier · 2011-05-04T23:30:29.598Z

that does enslave everybody, in a way that wiser beings than us would agree was beneficial.

I'm having a hard time parsing what that last clause refers to; what is supposed to be better, enslaving or not enslaving?

↑ comment by hairyfigment · 2011-05-15T19:01:53.374Z

I find it implausible that it is harder to build an AI that doesn't kill or enslave everybody, than to build an AI that does enslave everybody, in a way that wiser beings than us would agree was beneficial.

Why?

The SIAI claims they want to build an AI that asks what wiser beings than us would want (where the definition includes our values right before the AI gets the ability to alter our brains). They say it would look at you just as much as it looks at Eliezer in defining "wise". And we don't actually know it would "enslave everybody". You think it would because you think a superhumanly bright AI that only cares about 'wisdom' so defined would do so, and this seems unwise to you. What do you mean by "wiser" that makes this seem logically coherent?

Those considerations obviously ignore the risk of bugs or errors in execution. But to this layman, bugs seem far more likely to kill us or simply break the AI than to hit that sweet spot (sour spot?) which keeps us alive in a way we don't want. Which may or may not address your actual point, but certainly addresses the quote.

↑ comment by timtyler · 2011-05-04T23:41:04.662Z

So, SIAI plans to develop an AI that will take over the world, keeping their techniques secret, and therefore not getting critiques from the rest of the world.

This is WRONG. Horrendously, terrifyingly, irrationally wrong.

It reminds me of this:

if we can make it all the way to Singularity without it ever becoming a "public policy" issue, I think maybe we should.

The plan to steal the singularity.

↑ comment by wedrifid · 2011-05-05T03:57:54.189Z

The plan to steal the singularity.

Any other plan would be insane! (Or, at least, only sane as a second choice when stealing seems impractical.)

↑ comment by timtyler · 2011-05-05T08:15:07.387Z

Uh huh. You don't think some other parties might prefer to be consulted?

A plan to pull this off before the other parties wake up may set off some alarm bells.

↑ comment by wedrifid · 2011-05-05T08:23:56.213Z

A plan to pull this off before the other parties wake up may set off some alarm bells.

... The kind of thing that makes 'just do it' seem impractical?

↑ comment by timtyler · 2011-05-05T08:44:18.641Z

"Plan to Singularity" dates back to 2000. Other parties are now murmuring - but I wouldn't say machine intelligence had yet become a "public policy" issue. I think it will, in due course though. So, I don't think the original plan is very likely to pan out.

↑ comment by AlphaOmega · 2011-05-15T21:25:20.228Z

This is a good discussion. I see this whole issue as a power struggle, and I don’t consider the Singularity Institute to be more benevolent than anyone else just because Eliezer Yudkowsky has written a paper about “CEV” (whatever that is -- I kept falling asleep when I tried to read it, and couldn’t make heads or tails of it in any case).

The megalomania of the SIAI crowd in claiming that they are the world-savers would worry me if I thought they might actually pull something off. For the sake of my peace of mind, I have formed an organization which is pursuing an AI world domination agenda of our own. At some point we might even write a paper explaining why our approach is the only ethically defensible means to save humanity from extermination. My working hypothesis is that AGI will be similar to nuclear weapons, in that it will be the culmination of a global power struggle (which has already started). Crazy old world, isn’t it?

↑ comment by timtyler · 2011-06-01T18:14:06.342Z

The megalomania of the SIAI crowd in claiming that they are the world-savers would worry me if I thought they might actually pull something off.

I also think they look rather ineffectual from the outside. On the other hand they apparently keep much of their actual research secret - reputedly for fears that it will be used to do bad things - which makes them something of an unknown quantity.

I am pretty sceptical about them getting very far with their projects - but they are certainly making an interesting sociological phenomenon in the meantime!

comment by wilkox · 2011-05-02T08:29:34.589Z

Thank you very much for doing this. You've clearly put a lot of effort into making it both thorough and readable.

Formulate methods of validating the SIAI’s execution of goals.

Seconded. Being able to measure the effectiveness of the institute is important both for maintaining the confidence of their donors, and for making progress towards their long-term goals.

comment by JoshuaZ · 2011-05-02T13:59:44.850Z

There's an issue related to the Singularity Summits that is tangential but worth mentioning: even if one assigns a very low probability to a Singularity-type event occurring, the Summits are still doing a very good job of getting interesting ideas about technology and its possible impact on the world out there, and of promoting a lot of interdisciplinary thinking that might not occur otherwise. I was also under the impression that the Summits were revenue-negative, and even so I would have argued that they are productive enough to be a good thing.

comment by lukeprog · 2011-05-02T13:43:36.881Z

This is awesome. Thanks for all your hard work. Will you consider updating it in place when the 2010 form becomes available?

↑ comment by BrandonReinhart · 2011-05-02T16:45:34.594Z

Yeah, I'll update it when the 2010 documents become available.

comment by Rain · 2011-05-02T13:20:11.163Z

Please add somewhere near the top what the SIAI acronym stands for and a brief mention of what they do. I suggest, "The Singularity Institute for Artificial Intelligence (SIAI) is a non-profit research group working on problems related to existential risk and artificial intelligence, and the co-founding organization behind Less Wrong."

↑ comment by BrandonReinhart · 2011-05-02T16:43:30.060Z

Added to the overview section.

comment by PhilGoetz · 2011-05-04T22:11:30.895Z

Michael Vassar isn't paying himself enough. $52K/yr is not much in either New York City or San Francisco. Or North Dakota, for that matter.

↑ comment by Luke Stebbing (LukeStebbing) · 2011-05-05T08:40:38.180Z

$52k/yr is in line with Eliezer's salary if it's only covering one person instead of two, and judging from these comments, Eliezer's salary is reasonable.

↑ comment by PhilGoetz · 2011-05-05T16:44:39.863Z

I'm confused by this in three ways. Unless I'm mistaken, in 2009

- Eliezer was not married

- Michael was married

- Paying higher salaries to married than to single people is a questionable policy, and probably illegal

↑ comment by Luke Stebbing (LukeStebbing) · 2011-05-05T19:07:33.793Z