Preventing model exfiltration with upload limits

post by ryan_greenblatt · 2024-02-06T16:29:33.999Z · LW · GW · 22 commentsContents

Summary of key considerations Epistemic status More detailed arguments The total amount of generated tokens is probably not much larger than the number of model parameters (for an AI lab’s best model) We can probably heavily compress (text) model outputs Other approaches for limiting uploads Tracking and restricting total uploads seems relatively doable from a security perspective Even if total compressed uploads are larger than model weights, being within a moderate factor might make exfiltration via uploads hard Remaining concerns Appendix: experiment with compressing T=0 generations from weak LLMs Appendix: BOTEC for generated tokens vs parameters for GPT-6 None 22 comments

At some point in the future, AI developers will need to ensure that when they train sufficiently capable models, the weights of these models do not leave the developer’s control. Ensuring that weights are not exfiltrated seems crucial for preventing threat models related to both misalignment and misuse. The challenge of defending model weights has previously been discussed in a RAND report.

In this post, I’ll discuss a point related to preventing weight exfiltration that I think is important and under-discussed: unlike most other cases where a defender wants to secure data (e.g. emails of dissidents or source code), model weights are very large files. At the most extreme, it might be possible to set a limit on the total amount of data uploaded from your inference servers so that an attacker would be unable to exfiltrate the model weights even if they totally compromised your inference servers, while still being able to serve an API and otherwise run a normal amount of inference. If this ends up being viable, then it would be much easier to protect model weights from competent adversaries because upload limits are relatively simple to enforce. Even if it turns out that such a bandwidth limit isn’t feasible, the fact that any attacker will have to control a substantial fraction of upload bandwidth from your inference server might pose a substantial obstacle to exfiltration.

In this post:

- I make some predictions about the ratio between a model’s size and the total quantity of data that its inference servers will have to emit over the model lifetime. I conclude that the total quantity of data probably won’t be more than a few orders of magnitude larger than the size of the model for an AI lab’s most powerful AI.

- I suggest a variety of strategies to reduce the outflow bandwidth required from inference services. Most importantly, you can use a scheme involving arithmetic coding using a weak model that you are okay with being stolen. In this scheme, the weak model is trained to imitate the strong model. The weak model is present both inside and outside the inference network with the upload limit.

While I expect that the sort of proposal I discuss here is well known, there are many specific details I discuss here which I haven't seen discussed elsewhere. If you are reasonably familiar with this sort of proposal, consider just reading the “Summary of key considerations” section which summarizes the specific and somewhat non-obvious points I discuss in this post.

This proposal is written as a nearcast focused on SOTA LLMs, though I expect many of the conclusions to generalize.

Given how promising this proposal seems, I think that further investigation is warranted. The main source of uncertainty is about the ratio between the number of inference tokens generated and the number of model parameters for the key model we want to protect. There are a variety of improvements which might allow for somewhat reducing total uploads, so pursuing these could be quite leveraged if we end up in a regime where marginal reduction in uploads substantially reduces risk. The viability of this proposal depends substantially on non-public information that AI labs possess, so internal investigation by AI labs will likely be key. However, external researchers could investigate compression schemes, other approaches for reducing the total uploads, or mechanisms for very reliably and securely tracking the total amount of data uploaded. I’m excited about further investigation of this idea.

Summary of key considerations

- The total number of generated tokens from a given model might be similar to or smaller than the total number of parameters due to Chinchilla scaling laws. The ratio between generated tokens and total parameters is the main source of uncertainty in this proposal.

- Substantial compression of model outputs might be possible by compressing against a weaker model (e.g., arithmetic coding).

- We don’t need to upload prompt tokens from our data center and plausibly most tokens an LLM is run on will be prompt tokens.

- Internal usages of the model could occur in a data center which is co-located with the inference data center and we could limit uploads from the co-located data center

- Some intermediate outputs from an AI don't (typically) need to be used and thus can remain in the data center (unless flagged by a monitoring AI for further review); for instance, in best-of-N sampling outputs which aren’t selected don’t need to be uploaded and intermediate reasoning like chain-of-thought often doesn’t need to be seen by users.

- Attackers probably can’t use most of the bits uploaded from the data center for exfiltrating model weights, and we might be able to employ countermeasures that reduce the fraction of bits they can use.

Epistemic status

I'm not a security expert and I don't have a full understanding of current SOTA LLM workloads, so I'm not sure how well this proposal would work in practice. I'd be interested in hearing from people with more expertise.

This post is the product of about 4 hours of work and I’ve overall probably spent about 30-50 hours thinking about the topic and discussing the proposal with various people. I also haven’t gotten experts in the space to review this post. So, I might be missing some key considerations. However, I have talked about this proposal at a high level with various experts and otherwise well-informed people and I currently stand by my overall analysis.

My current guess is that there is about a 25% chance that this proposal can be used to relatively cheaply ensure that attackers won’t be able to extract the model weights without bypassing physical security on the data center (e.g. physically entering the data center and taking the weights out on a thumb drive). And about a 60% chance that putting non-trivial effort into compressing model generations and restricting uploads is a useful line of defense even if we can’t get strong guarantees.

I've discussed this proposal and gotten various related ideas from a variety of people (Buck Shlegeris, Beth Barnes, Fabien Roger, and others). Overall, the ideas here aren’t that original and I’m unsure exactly who came up with each of the ideas I discuss here.

Thanks to Buck Shlegeris for rewriting the introduction in addition to a variety of other help with editing.

Thanks to Fabien Roger for running the experiments in the appendix.

More detailed arguments

The total amount of generated tokens is probably not much larger than the number of model parameters (for an AI lab’s best model)

The total amount of inference tokens for a given AI lab’s most powerful model probably won't be more than an order of magnitude or two more than the total amount of tokens seen in training (and might be notably less than this). This is because if the inference tokens were much higher than this, then it would probably make sense to spend more money on training a better model. If AI labs don't optimize that much for inference costs and instead mostly optimize for AI capability, then on Chinchilla scaling, you train a model with 1/20 as many parameters as tokens seen in training. So, this might imply that inference tokens and model parameters are the same ballpark.

The situation might be even more favorable due to the fact that most tokens are read by LLMs, not generated, and we can avoid uploading these prompt tokens from the data center. We'll discuss what this looks like in more detail below.

Models using Mixture of Experts (MoE) might also make the situation more favorable as they might have relatively more parameters.

While the above reasoning applies for an AI lab’s best model, currently it’s often the case that models are trained to target a weaker level of capability and used to generate a much larger number of tokens than the model’s number of parameters. (Such models will be trained in a heavily inference optimized way rather than being trained Chinchilla-optimal. For instance, consider gpt-3.5-turbo which is probably trained on far more tokens than is Chinchilla-optimal in order to reduce inference costs.) So, using this scheme to secure the weights of relatively weaker AIs might be notably less effective or more costly. It’s unclear how important it will be to secure these relatively weaker AIs, but naively it seems at least somewhat important.

I’m quite uncertain about the ratio between model parameters and the number of generated tokens for the key AIs we want to protect. Further forecasting work seems useful here, though it’s likely the case that non-public information that AI labs have is very useful for better forecasting and thus lab internal forecasting might be more accurate.

We also do a more specific back-of-the-envelope calculation for GPT-6 in the appendix [LW · GW].

We can probably heavily compress (text) model outputs

Here's a rough sketch of how we might compress model generations for serving a model on an API:

- We have an inner data center and an outer data center. The inner data center is the one that actually generates tokens using the smart model while the outer data center decodes the generation and communicates with the user. The model weights are only present in the inner data center.

- When the outer data center receives a user query, it assigns a query ID and then sends the query information and ID into the inner data center.

- If the user query involves non-deterministic behavior (sampling at t>0), then the outer data center also sends a seed to the inner data center (we'll use this seed for our arithmetic coding compression scheme).

- The inner data center generates a response using the seed, compresses this response by using arithmetic coding with the weaker model, and then sends the compressed response along with the ID back to the outer data center. We don't need to send back the prompt tokens or the seed because the outer data center already has associated with the ID.

- The outer data center decodes the compressed response using the weaker model and then sends the decoded response to the user.

We'd train our weaker model to imitate the smart model. Ideally, we'd also train the smarter model to produce generations that require as few bits as possible (while not degrading performance too much). This involves generating responses which are both shorter and which deviate from the weaker model as little as possible (while still retaining almost as good performance) to improve compression. Tokens which are extremely predictable given the weaker model are effectively "free" in terms of compression.

(Minimizing deviation from the weaker model is probably the same or almost the same as imposing a KL penalty on the smarter model relative to the distribution of the weak model. (I think total KL might exactly correspond to the compression you get when sampling with t=1.) We'd also be training the weak model to imitate the strong model in parallel which complicates the process somewhat. (Both are optimizing to minimize this KL.) Overall, I expect there is some reasonable construction of an RL penalty which allows for nicely optimizing compression (probably directly optimizing expected bits in a way which takes into account deviating from the weak model and length) which will then allow achieving a good trade-off curve between performance and compression.)

(I think the training objective for minimizing deviation from the weaker model will be almost the same as the training objective which would be used to improve the performance of speculative decoding. And more generally, speculative decoding is very similar to the compression scheme we’re attempting to use.)

To avoid much higher latency for users, we'd want to stream this entire process (at least for some queries). This creates some additional complexity to avoid upload overhead from sending too many messages. I think this is totally manageable, see the parenthetical for more detail.

(To ensure that the additional upload overhead from sending many messages due to streaming isn’t too bad, we’d probably want to batch up a bunch of queries; above I discussed having a “query ID”, but in practice, we’ll probably want to have an ID which corresponds to a batch of queries. We could also only send a message for every 10 or so tokens generated (which only makes the user experience slightly worse). So overall, a message from the datacenter might contain a batch of queries (e.g. 128 queries) with 10 tokens per batch. If we optimistically compress to 0.1 bits per token, then this would be about 100 bits of actual content per message. If we use short 8 or 16 bit IDs (which should be possible as IDs will refer to relatively infrequent batches), then message overhead will be pretty small. Note that I’ve implicitly assumed we’re writing our own networking protocol from scratch rather than using e.g. TCP as message overhead might be an issue. (Separately, it might be fine to have a bunch of message overhead as long as we can ensure that attackers can’t use this message overhead to transmit bits. I think this is somewhat harder to ensure than just tracking the total number of bits over the wire, but also seems relatively doable.))

For our weaker model, you should imagine an open source model or a model which is proprietary but which isn't that concerning if stolen. Perhaps for applying this scheme to GPT-5, we would use GPT-4 or GPT-3.5 as our weaker model.

I’m not certain what is the exact compression scheme you want to use in the case of non-deterministic generations, but it probably looks something like arithmetic encoding and probably isn’t that bad in practice. (It might be even nicer if we’re willing to let the compression be lossy.)

I'm overall radically uncertain, but I think it seems plausible that we can compress generations using a weak model by a massive amount (e.g. 100x). Then, in this optimistic case perhaps each token would take 0.1 bits on average. We have some preliminary results in an appendix to this post.

Avoiding uploading prompt tokens is also probably a big difference.

Also, these sorts of compression schemes are relatively measurable, so iteration is tractable and we can study the problem in detail in advance.

Overall, I think this might combine toward ensuring we can upload somewhat less data than the model weights in total (though I'm unsure and this will heavily depend on money spent on inference relative to training).

Other approaches for limiting uploads

- We could ensure that lab researchers using these models (particularly using these models for huge amounts of inference) use a server which is co-located with the data center to access the model. Then, we would also restrict uploads from the co-location. So we would have an "inner data center" (has model weights), an "intermediate data center" (has approved researchers), and an "outer data center" (finally uploads generations to outside users and more generally uploads all the way to the outside world). The inner data center would have restricted uploads to both the intermediate and outer data centers and the intermediate data centers would have restricted uploads to the outer data center and the outside world. For many types of research, the data never needs to leave a local network, so this could suffice. We could also subsidize other people using the colocation if they would make heavy usage of the API in ways where the data doesn't need to leave the local network.

- We can do best of N inside the data center and only send out the final completion (though we should store all model outputs for possible future inspection and should likely monitor all outputs using another AI). (Idea from Beth Barnes)

- If we have an AI agent scaffold (or similar) and we don’t typically need to view internal reasoning (e.g. CoT), we could avoid sending out this internal reasoning most of the time. This also applies to other intermediate outputs in an agent scaffold. (Though this sort of thing probably introduces a bunch of complexity because we might want to be able to reference these intermediate outputs in later competitions which would require continuing to store them inside the datacenter and having some sort of ID.)

- In order to make it more inconvenient for an attacker to exfiltrate weights, we could regularly apply transformations to the parameters of the weights that preserve the input-output mapping implemented by the model, but which make it harder for an attacker to copy the model out piece by piece. For example, transformers are invariant to permuting the order of neurons, attention heads, and activation dimensions. It’s very fast to randomly permute the order of neurons, and if you regularly do so, an attacker will either have to canonicalize the neuron order whenever you permute them, which might be hard for the attacker to do subtly, or they’ll have to use substantially more upload bandwidth. See Gauge transformations for securing model inference for more details on this kind of scheme.

Tracking and restricting total uploads seems relatively doable from a security perspective

I don't really know that much about security, but it naively seems possible for a highly committed civilian actor to track and control total uploads from a data center in a way that is secure except for extreme attacks.

Here's my current rough understanding of how a relatively strong version of building a data center to track and restrict total uploads might work (but I expect that people more knowledgeable about security might have much better ideas):

- We build a data center with a single entrance. Everything else is thick concrete walls (or whatever makes it hardest to drill through). We also build a Faraday cage into the data center. (Existing standards for data center security are likely applicable here; I’m just summarizing the nature of the interventions from my understanding.)

- Everything enters through the single entrance: personal, electricity, our data cables, etc.

- Nothing ever leaves the data center except for personnel. We heavily search and scan (metal detector, x-ray, whatever) personnel as they leave the data center. (This still allows for non-metallic information storage devices, but forcing attackers to use more exotic approaches still seems to help substantially.) The rate at which personnel need to enter the data center for maintenance might be relatively low which could make the cost manageable.

- When personnel do work in the facility, they are heavily monitored to make it harder to hide information on their person. (E.g., all personnel must proceed in groups of 5 which all watch each other at all times.)

- We very carefully architect the infrastructure around the cables which can upload data.

- We want multiple fully distinct mechanisms for monitoring total uploaded data. This should be relatively doable by using physical splitters for the fiber optic cables and then having multiple distinct monitoring systems.

- We could build some of the monitoring mechanisms out of entirely distinct hardware manufacturing processes than what is typically used, to potentially evade hardware backdoors. (And we could keep such devices simple by avoiding internet connectivity and instead just physically attaching a display that personnel could monitor.)

- The fact that we want to do such simple things with the upload cable implies that it might be possible to build many redundant systems from scratch if that would improve security.

- We might have vastly more downloads than uploads, so if we could ensure that some cables are literally physically unidirectional that could help (I don't know how easy this is to do).

- We'd probably want to ensure that the upload capable cables are only physically capable of a limited upload rate such that uploading the entire model weights would take a long time even if monitoring and other restrictions failed.

- We need to make sure that we account for bits being transmitted via side channels (e.g. timing of messages). I think it should be relatively doable to keep side channels negligible relative to bits transmitted. This might require that we avoid the actual computers operating the cable from being compromised (rather than just ensure that at least one cable monitor avoids being compromised).

- The upload cable will need to support some traffic other than model generations (e.g. ssh), but I think this should be pretty manageable, and this traffic can be tiny in aggregate.

- The data center can be first built prior to model training. In this phase, free movement in and out is fine. After training starts, this would need to be restricted.

- If training and inference are very distinct phases, it might be better to train the model on a separate training optimized data center (which could have even more extreme upload restrictions) and then physically move the model weights to the inference data center (via whatever physical movement approaches seem most secure for very valuable but physically tiny items).

- Standard physical security approaches would be needed to secure the building (e.g. guards and cameras).

Of course, a preliminary version of this sort of scheme could be valuable even without all of these precautions.

This list is just talking about precautions aimed at narrowly tracking and restricting total uploads (while imposing minimal burden on digital access to the data center, e.g. installing packages and ssh), we’d surely also want to take at least some precautions which reduce the probability of being hacked at all.

If the upload restrictions really work out to an extent we can ensure that insane physical extraction schemes are needed, that seems like a big win.

Even if total compressed uploads are larger than model weights, being within a moderate factor might make exfiltration via uploads hard

It seems hard for an attacker to utilize a high fraction of all upload volume for weight exfiltration.

It would probably be hard for the attacker to create a huge amount of fake inference traffic over a long period even if they have heavily hacked our data center (both inner and outer) and other services.

So, the attacker might need to resort to watermarking real model generations from actual users to exfiltrate data. It seems hard to watermark outputs in a way which isn't noticeable and which allows for a high bitrate. In particular, users will look at these generations (or test other properties of these generations) and notice if they are weird or otherwise low quality. So, the attacker might only be able to harness a small fraction of the actual upload bandwidth for exfiltration without noticeably degrading performance. There might be some reasonable tests we can perform to hopefully detect watermarking.

(We might also be able to paraphrase model outputs (or similar) to make watermarking harder, but I think for this to be useful, we’d need to ensure the paraphrasing component isn’t compromised and remains secret from the attacker so it’s unclear how much value this could add. That said, current multi bit watermarking schemes which are at all robust to paraphrasing have pretty terrible bitrates [LW · GW], so this seems naively promising if we can make this work somehow.)

It seems like a pretty big footprint to have to tamper with or cause a high fraction of all generation traffic for a long time which might be hard for an attacker to do without detection even if they have effectively fully compromised all computers.

Remaining concerns

Attackers could in principle just hack the data center and then just use this for performing unmonitored generations (rather than uploading the model weights). This is probably considerably less bad than full exfiltration, but still can cause huge problems. My guess would be that you could try to make this hard to do without eventually being detected. (Separately, we have some novel ideas for making it harder to run models without monitoring or tracking, but they don't seem that compelling.)

Even if the model is big, if hackers can run arbitrary code on the data center, they can probably apply various compression schemes to the model weights. I expect this to be some constant factor. They could also try to use the data center to distill the model into a smaller model which is easier to exfiltrate, but doing this without detection seems really hard (as this will use many GPUs for a while and only some GPUs might be in training specialized arrangements). So this doesn't seem clearly easier than just training your own model unless GPUs have been made extremely scarce. (For instance, the attacker would have to set up the training run including the infra; they can use internal code, but they could probably also exfiltrate this internal code and use it elsewhere. So the only advantage of running this training on the data center will be efficiency improvements due to distillation and the stolen GPU time.)

Physical data extraction attacks remain (e.g., drilling through the walls, smuggling out storage using personnel, ensuring that when the data center is built there is a small robot that will drill out and deliver data). But forcing physical attacks seems like a big win.

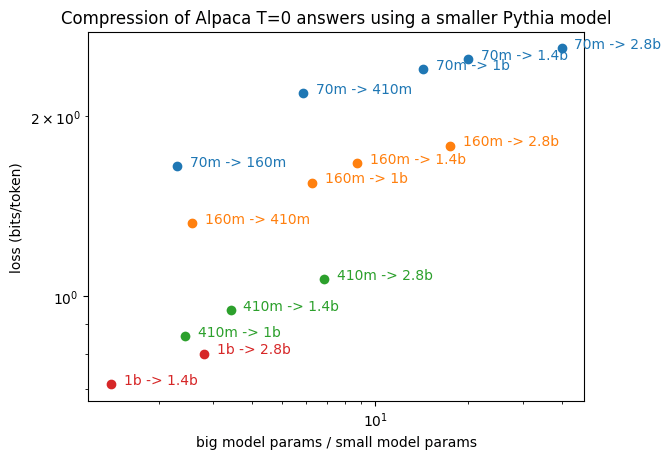

Appendix: experiment with compressing T=0 generations from weak LLMs

[The experiment in this section was run by Fabien Roger.]

To get an idea of the order of magnitude that output compression could provide, we ran the following experiment:

- Fine-tune a “big” model on Alpaca answers

- Fine-tune a “small” model on T=0 generations from the “big” model

- Measure the loss of the “small” model on T=0 generations

(All of the above steps use a separate split of the Alpaca dataset.)

Below are the results of the experiment. We find that compression works relatively well, enabling a compression below 1 bit/token. We also observe that, at a fixed gap size, the compression gets better as we scale the models, but it seems unlikely that it would enable a compression below 0.1 bits/token without further measures. One promising approach to achieve higher levels of compression would be to optimize the large model to be relatively compressible to the small model while retaining high performance.

Note that this compression problem is structurally very similar to T=0 speculative decoding.

(Note: these experiments are done with Pythia models, which were all trained on the same number of tokens, so the training FLOPs are proportional to the size of the models.)

Appendix: BOTEC for generated tokens vs parameters for GPT-6

Here is a quick back-of-the-envelope calculation for GPT-6 which should help to demonstrate why we think this upload limiting approach might be viable.

Let's suppose that GPT-4 has a compressed size of 1e12 bytes (about a TB). Each "GPT" is about 100x additional model compute, so GPT-5 would be 100x more compute than GPT-4. For Chinchilla optimal model training, GPT-5 would be (about) 10x more parameters and 10x more training time than GPT-4. Assuming the compression ratio of model weights remains the same, then GPT-5 has a compressed size of 1e13 bytes. Applying the same math to GPT-6, we get 1e14 bytes. (I'm ignoring improvements in algorithmic efficiency which could considerably reduce the number of bytes for GPT-6 in practice.)

Now, suppose literally every human on earth gets a (short-ish) book directly generated by GPT-6. (We're just using this an example of an obviously massive amount of token generation for a sense of scale.) These books would be about 100,000 tokens each (short books are perhaps 70,000 words and there are somewhat more tokens than words). So 1e5 tokens per book times about 8e9 humans is 8e14 tokens in total.

So 8e14 tokens vs 1e14 bytes.

If we can (optimistically) compress generated tokens down to 0.1 bits per tokens, then we get 8e13 bits uploaded vs 1e14 bytes of model weights which is 1e13 bytes uploaded vs 1e14 bytes of model weights.

So even with what seems like a massive amount of generation (8 billion books), the scheme can plausibly work. In practice, it's hard to predict the exact amount of output generation and we might need to protect smaller models than ones with 1e14 bytes.

Edit: Sam Altman claims that OpenAI generates around 100 billion words per day. If we assume that 10% of this is GPT-4, then this is 10 billion words per day which is about 2 trillion tokens per 6 months or 2e12 tokens. Now, let's suppose this increases by 10x for each model generation (each GPT). Then, we'd get to 2e14 tokens for GPT-6 which nicely matches the order of magnitude for the arbitrarily choice of 1 short book per human used above. (Thanks to Olli Järviniemi for prompting me to make this addition.)

22 comments

Comments sorted by top scores.

comment by Beth Barnes (beth-barnes) · 2024-04-26T23:03:13.437Z · LW(p) · GW(p)

If anyone wants to work on this or knows people who might, I'd be interested in funding work on this (or helping secure funding - I expect that to be pretty easy to do).

comment by ryan_greenblatt · 2025-01-01T04:31:46.928Z · LW(p) · GW(p)

I now think this strategy looks somewhat less compelling due to a recent trend toward smaller (rather than larger) models, particularly from OpenAI, and increased inference time compute usage creating more for an incentive for small models.

It seems likely that (e.g.) o1-mini is quite small given that it generates at 220 tokens per second(!), perhaps <50 billion active parameters based on eyeballing the chart from the article I linked earlier from Epoch. I'd guess (with very low confidence) 100 billion params overall (not including just active params). Likely something similar holds for o3-mini.

The update isn't as large as it might seem at first because users don't (typically) need to be send the reasoning tokens (or outputs from inference time compute usage more generally) which substantially reduces uploads. Indeed, the average compute per output token for o1 and o3 (counting the compute from reasoning tokens but just including tokens sent to the user as output tokens) is probably actually higher than it was for the original GPT-4 release despite these models potentially being smaller.

comment by Brendan Long (korin43) · 2024-02-07T03:47:04.093Z · LW(p) · GW(p)

I don't know the right keywords to search for it, but there's a related network security technique where you monitor your incoming/outgoing bandwidth ratio and shut things down if it suddenly changes. I think it's typically done by heuristics though.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-02-07T15:59:35.423Z · LW(p) · GW(p)

Maybe Network Behavior Anomaly Detection (NBAD)?

comment by [deleted] · 2024-02-06T16:52:32.384Z · LW(p) · GW(p)

Did you consider using hypervisors with guest OSes or VMs on a host OS?

I don't work on cybersecurity directly either but have dealt with both of these from the perspective of "how do I make it work with this security measure present". I also write the software that loads the weights to neural network accelerators and have recently worked on LLM support.

The concept either way is that a guest OS or VM have an accessible address range of memory that is mapped to them. Every memory address they see is fake, the CPU keeps a mapping table that maps the [virtual address] to the [real address]. There can be multiple levels of mapping.

The other limitation is the control bits or control opcodes for the processor itself cannot be accessed by a guest.

What this means in principal is that it doesn't matter what a guest program does, no command will access memory you don't have privileges for, and you can't give yourself those privileges.

You would keep the program that actually can see the files with the model weights, that owns the GPU or TPU driver, on the host OS or a privileged guest OS.

On a separate OS inside another guest or VM is the python stack that actually calls the apis to run the model.

Between the 2 OS there are shared memory buffers. The input token buffer gets written to a circular buffer mapped to one of these buffers, then the privileged software emits the GPU/TPU driver API calls to DMA transfer the tokens to the hardware. Same for the output path.

There's a lot of moving parts and one common security vulnerability is to try to exploit flaws in the underlying implementation such as nearby memory cells in the actual ddr.

Note that if there is a leak, it would probably be slow. Some hack like rowhammer or a similar attack that leaks bits from the CPU cache, leaks a small amount of model weights, and this gets sent somewhere and stored.

If you wanted to reduce leaks one approach would be to stop using software isolation and use hardware. Use a separate CPU on the same motherboard to host the privileged OS.

Replies from: tao-lin, ryan_greenblatt↑ comment by Tao Lin (tao-lin) · 2024-02-06T20:37:30.963Z · LW(p) · GW(p)

A core advantage of bandwidth limiting over other cybersec interventions is its a simple system we can make stronger arguments about, implemented on a simple processor, without the complexity and uncertainty of modern processors and OSes

↑ comment by ryan_greenblatt · 2024-02-06T17:06:00.481Z · LW(p) · GW(p)

I think limiting attack surface using various types of isolation seems promsing, but orthogonal to the intervention we're describing in this post.

(As far as isolation proposals (which aren't discussed in this post), I more often imagine a datacenter where we have a large number of inference machines which aren't directly connected to the internet, but instead only connect to a local area network. Then, we can have a single extremely basic machine (e.g. embedded device/FPGA which doesn't even have an OS) implement an extremely limited API to connect to the outside world to service users. This API could only implement a small number of primitives (e.g. inference, fine-tuning). This reduces the internet facing attack surface at the cost of some convenience. However, note that supply chain attacks or hardware attacks on the inference machines are still possible and attacks could communicate with these compromised machines with encoded messages via the API.)

Replies from: None, None↑ comment by [deleted] · 2024-02-06T17:32:51.958Z · LW(p) · GW(p)

Then, we can have a single extremely basic machine (e.g. embedded device/FPGA which doesn't even have an OS) implement an extremely limited API to connect to the outside world to service an API. This reduces the internet facing attack surface at the cost of some convenience.

This is an extremely good idea and you can see the physical evidence all around you of our predecessors solving a similar problem.

Have you ever noticed how every electrical panel is in a metal box, and every high power appliance is in a metal case? Every building has a gap between it and neighbors?

Its the same concept applied. Fire is just 3 ingredients but humans don't fully understand the plasma dynamics. What we do know is you can't take any chances, and you must subdivide the world with fire breaks and barriers to contain the most likely sources of ignition.

A world that keeps AI from burning out of control is one where these hardware ASICs - firewalls - guard the network interfaces for every cluster capable of hosting an AI model. This reduces how much coordination models can do with each other, and where they can escape to.

You don't just want to restrict API you want to explicitly define what systems a hosted model can communicate with. Ideally a specific session can only reach a paid user, any systems the user has mapped to it, and it does research via a cached copy of the internet not a global one, so the AI models cannot coordinate with each other. (So these are more than firewalls and have functions similar to VPN gateways)

There would have to be a "fire code" for AI.

↑ comment by [deleted] · 2024-02-06T17:20:15.963Z · LW(p) · GW(p)

I think limiting attack surface using various types of isolation seems promsing, but orthogonal to the intervention we're describing in this post.

The issue is that for a model to work it needs a lot of I/O bandwidth. You're thinking ok, if all it needs to do is emit output tokens to a user window, then if those tokens are small in size over time relative to the model weights, you can restrict uploads.

But consider how many parallel sessions there are now (millions per month) or will be in the future. Leaks could be coordinated or randomized to parts of the weights so each parallel session leaks a little more.

Everyone is using a common golden model as well, and market dynamics want in the future there to be just a few models everyone's using.

Suppose there are 10 million user sessions a month and a leak of 128 bytes per session. Model is 0.64 terrabytes. (Gpt-4 assuming it's distilled 10x for the T model). Then it takes 550 months to leak.

If there's 1 billion sessions a month it leaks in 5.5 months.

It's better than nothing.

Replies from: ryan_greenblatt, ryan_greenblatt↑ comment by ryan_greenblatt · 2024-02-06T17:23:16.167Z · LW(p) · GW(p)

See this section of the post for commentary [LW · GW].

TLDR: we actually think it's reasonably likely that the total data outflow is of comparable scale to model weights (for an AI lab's most capable model) under normal commercial operation.

Thinking these are of comparable scale is a core assumption for the upload limiting method to make sense. (Without the introduction of some more exotic approaches.)

↑ comment by ryan_greenblatt · 2024-02-06T17:47:42.998Z · LW(p) · GW(p)

Also, I added a BOTEC about viability for GPT-6 [LW · GW] showing that it naively seems like we could be fine with GPT-6 generating an entire book for every human on earth.

comment by Adam Karvonen (karvonenadam) · 2024-07-22T20:44:09.892Z · LW(p) · GW(p)

Another potential option (that could stack with text compression) is to filter outgoing data. In particular, if we only allow outgoing data to be language tokens, and we filter the outgoing data to have low perplexity as measured by a small, secret language model, then it could become very difficult to encode terabytes of model weights in a way that has low perplexity.

I discuss this idea more here. [LW · GW]

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-07-22T22:36:16.829Z · LW(p) · GW(p)

I don't think this stacks with compression - if you compress data then it is no longer low perplexity. Data which is compressed as well as you can should look random to you (rather than predictable).

I think filtering like this is strictly worse than compression for reasons I discuss in my response here [LW(p) · GW(p)].

(But I appreciate the idea!)

Replies from: karvonenadam↑ comment by Adam Karvonen (karvonenadam) · 2024-07-22T23:18:57.106Z · LW(p) · GW(p)

I think it does stack if the perplexity filter is applied before the compression step, which relies on an underlying assumption that it's easier to secure the internet connection choke point rather than the entire data center.

I will try to continue the discussion here [LW(p) · GW(p)] for simplicity.

comment by Bogdan Ionut Cirstea (bogdan-ionut-cirstea) · 2024-07-22T20:23:22.776Z · LW(p) · GW(p)

The total amount of generated tokens is probably not much larger than the number of model parameters (for an AI lab’s best model)

This Epoch analysis (Optimally Allocating Compute Between Inference and Training) suggests something similar (especially assuming Chinchilla scaling laws would keep holding):

Replies from: ryan_greenblattOur analysis indicates that AI labs should spend comparable resources on training and running inference, assuming they can flexibly balance compute between these tasks to maintain model performance.

↑ comment by ryan_greenblatt · 2024-07-22T22:38:44.496Z · LW(p) · GW(p)

Yes, though notably I must make an additional assumption that the returns to capability are such that when allocating compute you go closer to chinchilla optimal rather than trying to make inference cheaper.

As in, my argument has two steps:

- Comparable resources (matching epoch's analysis)

- Returns to capabilites imply that you want to be near chinchilla optimal rather than overtrained.

I think this is likely to not be too far off in practice, though it might lose you an order of magnitude or so.

comment by quetzal_rainbow · 2024-02-08T08:10:58.565Z · LW(p) · GW(p)

I don't quite understand: why do we need weaker model during compression? Why don't just use something like "gRPC with compression"?

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-02-08T17:14:54.124Z · LW(p) · GW(p)

We want to arithmetic code against some other AI (which will achieve much more compression than lzip or whatever). But, the very fact that we need to decode using that other AI implies that the other AI is in some data center that needs to upload a bunch to the internet. So, we want the other model to be sufficiently weak that it's not that as bad if it is stolen.

comment by Steven Byrnes (steve2152) · 2024-02-06T17:33:44.536Z · LW(p) · GW(p)

When I was a low-level employee at a defense contractor, they had lots of infosec rules & restrictions, of course, and they were onerous & annoying, of course, but they were also weird in a bunch of ways, and it only made sense to me once I figured out that most of the rules and restrictions were designed to prevent employees (or spies that had gotten into employee accounts) from exfiltrating data out of the firm, rather than preventing employees from bringing viruses into the firm.

Anyway, in regards to this post, if you can ensure that weights cannot possibly wind up on employee laptops / workstations—the same laptops / workstations that are surfing the web, using web-connected applications, etc.—then that makes your job a lot easier. But is that plausible?

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-02-06T17:46:40.050Z · LW(p) · GW(p)

Note that in this post, the scheme we're proposing doesn't necessarily involve disconnecting the inference data center from the internet, just limiting total uploads. (Though some sort of isolation like I describe in this comment [LW(p) · GW(p)] could also be worthwhile, but perhaps more costly.)

However, we are proposing that the model weights don't end up on general purpose employee workstations which aren't in a specific inference datacenter. I think this security measure should be pretty workable and is very likely to happen by default.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2024-02-06T19:53:33.723Z · LW(p) · GW(p)

I guess I was thinking, the number of bytes going from the data center to a general-purpose employee workstation could be large. For example, maybe the employee wants to run automated tests that involve lots of LLM queries, and then analyze all the output data in a jupyter notebook. Or maybe the employee wants to download the server logs and analyze them in a jupyter notebook. Or whatever. Sorry if I’m saying something stupid, feel free to ignore.

Replies from: ryan_greenblatt↑ comment by ryan_greenblatt · 2024-02-06T20:18:30.770Z · LW(p) · GW(p)

Yep, employee usage could be a high fraction of all output data. I think by default it's probably still comparable to the size of the model weights (or smaller) for same reasons noted elsewhere. (Like there doesn't seem to be a specific reason to think employee usage will cause an exception to the arguments I make elsewhere, so the total data seems probably managable given some precautions around uploading massive server logs or similar.)

If employee usage is a high fraction of overall usage, we might be able to further restrict uploads by co-locating a bunch of employee usage in an adjacent data center and applying similar safe guards to that co-located data center as I discuss here [LW · GW]. Of course, this doesn't help rule out exfiltration to the employee-usage-data-center, but we might prefer that exfiltration over arbitrary exfiltration (e.g. because we control the GPUS in the employee datacenter and we could possibly limit the number of gpus or the interconnect in that co-located data center depending on what employees need).