Is Science Slowing Down?

post by Scott Alexander (Yvain) · 2018-11-27T03:30:01.516Z · LW · GW · 77 comments

[This post was up a few weeks ago before getting taken down for complicated reasons. They have been sorted out and I’m trying again.]

Is scientific progress slowing down? I recently got a chance to attend a conference on this topic, centered around a paper by Bloom, Jones, Reenen & Webb (2018).

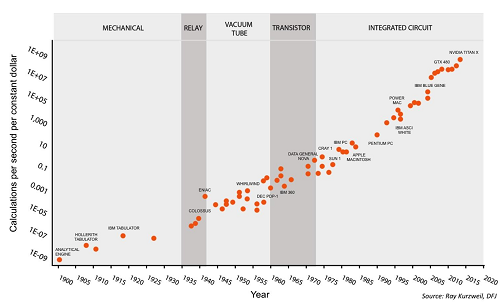

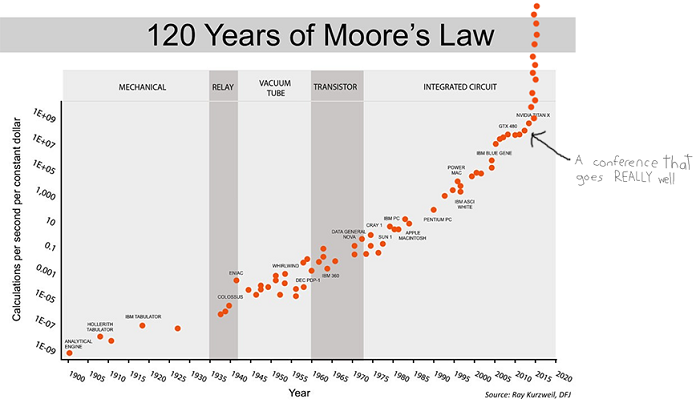

BJRW identify areas where technological progress is easy to measure – for example, the number of transistors on a chip. They measure the rate of progress over the past century or so, and the number of researchers in the field over the same period. For example, here’s the transistor data:

This is the standard presentation of Moore’s Law – the number of transistors you can fit on a chip doubles about every two years (ie grows by 35% per year). This is usually presented as an amazing example of modern science getting things right, and no wonder – it means you can go from a few thousand transistors per chip in 1971 to many million today, with the corresponding increase in computing power.
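As a quick check on the arithmetic: a two-year doubling time corresponds to a continuously compounded rate of ln(2)/2, or about 35% per year, which is the figure quoted here. Measured year over year, the same doubling works out to roughly 41%. A minimal sketch (the function names are my own, purely illustrative):

```python
import math


def continuous_rate(doubling_years: float) -> float:
    """Continuously compounded growth rate implied by a doubling time."""
    return math.log(2) / doubling_years


def annual_rate(doubling_years: float) -> float:
    """Year-over-year growth rate implied by the same doubling time."""
    return 2 ** (1 / doubling_years) - 1


print(round(continuous_rate(2), 3))  # 0.347
print(round(annual_rate(2), 3))      # 0.414
```

Either way of quoting the rate describes the same curve; the rest of the argument only needs the rate to be roughly constant.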

But BJRW have a pessimistic take. There are eighteen times more people involved in transistor-related research today than in 1971. So if in 1971 it took 1000 scientists to increase transistor density 35% per year, today it takes 18,000 scientists to do the same task. So apparently the average transistor scientist is eighteen times less productive today than fifty years ago. That should be surprising and scary.
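The calculation in that paragraph can be sketched in a few lines, assuming (as the paragraph does) that research productivity is simply the growth rate divided by the number of researchers. The 1,000-scientist baseline is the hypothetical from the text, not real headcount data, and the function name is illustrative only:

```python
def research_productivity(growth_rate: float, researchers: float) -> float:
    """Growth delivered per researcher: a crude idea-productivity measure."""
    return growth_rate / researchers


# Hypothetical headcounts from the text: 1,000 scientists in 1971, 18x that today.
productivity_1971 = research_productivity(0.35, 1_000)
productivity_today = research_productivity(0.35, 18_000)

# Same growth rate, eighteen times the people: each one looks 18x less productive.
ratio = productivity_1971 / productivity_today
print(round(ratio, 6))  # 18.0
```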

But isn’t it unfair to compare percent increase in transistors with absolute increase in transistor scientists? That is, a graph comparing absolute number of transistors per chip vs. absolute number of transistor scientists would show two similar exponential trends. Or a graph comparing percent change in transistors per year vs. percent change in number of transistor scientists per year would show two similar linear trends. Either way, there would be no problem and productivity would appear constant since 1971. Isn’t that a better way to do things?

A lot of people asked paper author Michael Webb this at the conference, and his answer was no. He thinks that intuitively, each “discovery” should decrease transistor size by a certain amount. For example, if you discover a new material that allows transistors to be 5% smaller along one dimension, then you can fit 5% more transistors on your chip whether there were a hundred there before or a million. Since the relevant factor is discoveries per researcher, and each discovery is represented as a percent change in transistor size, it makes sense to compare percent change in transistor size with absolute number of researchers.
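Webb's reasoning can be illustrated with a toy calculation, taking the text's simplification that each discovery is worth a fixed 5% more transistors regardless of the starting count. The function and the choice of seven discoveries are my own illustrative assumptions:

```python
def after_discoveries(transistors: float, discoveries: int, gain: float = 0.05) -> float:
    """Transistor count after `discoveries` improvements, each worth `gain` (5% by default)."""
    return transistors * (1 + gain) ** discoveries


# The proportional gain is the same whether the chip starts with a hundred
# transistors or a million.
small = after_discoveries(100, 7) / 100
large = after_discoveries(1_000_000, 7) / 1_000_000
print(round(small, 3), round(large, 3))  # 1.407 1.407
```

On this model, a constant number of discoveries per year yields a constant percent growth rate, which is why the paper compares percent growth against the absolute number of researchers.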

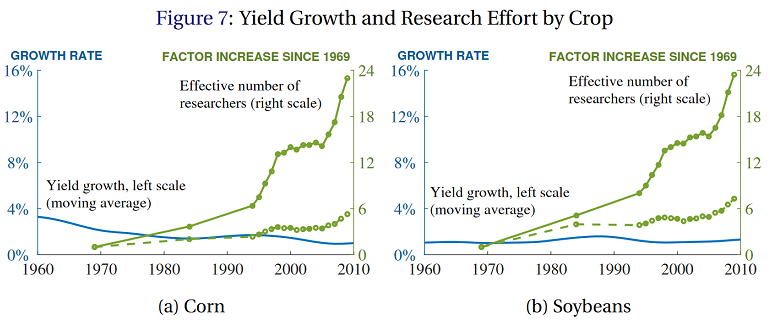

Anyway, most other measurable fields show the same pattern of constant progress in the face of exponentially increasing number of researchers. Here’s BJRW’s data on crop yield:

The solid and dashed lines are two different measures of crop-related research. Even though the crop-related research increases by a factor of 6-24x (depending on how it’s measured), crop yields grow at a relatively constant 1% rate for soybeans, and an apparently declining 3%-ish rate for corn.

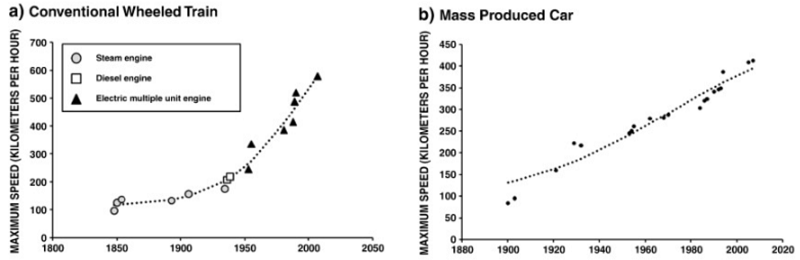

BJRW go on to prove the same is true for whatever other scientific fields they care to measure. Measuring scientific progress is inherently difficult, but their finding of constant or log-constant progress in most areas accords with Nintil’s overview of the same topic, which gives us graphs like

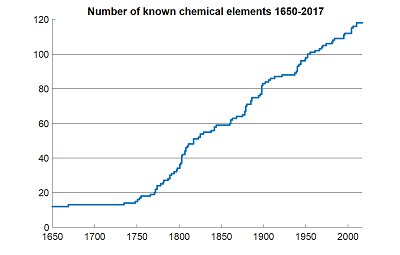

…and dozens more like it. And even when we use data that are easy to measure and hard to fake, like number of chemical elements discovered, we get the same linearity:

Meanwhile, the increase in researchers is obvious. Not only is the population increasing (by a factor of about 2.5x in the US since 1930), but the percent of people with college degrees has quintupled over the same period. The exact numbers differ from field to field, but orders of magnitude increases are the norm. For example, the number of people publishing astronomy papers seems to have grown tenfold over the past fifty years or so.

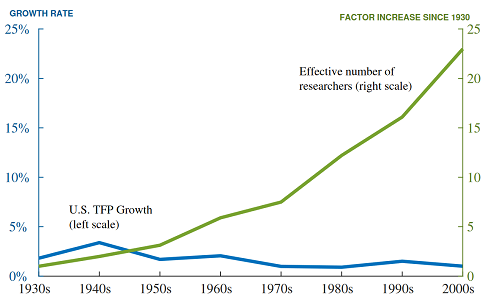

BJRW put all of this together into total number of researchers vs. total factor productivity of the economy, and find…

…about the same as with transistors, soybeans, and everything else. So if you take their methodology seriously, over the past ninety years, each researcher has become about 25x less productive in making discoveries that translate into economic growth.

Participants at the conference had some explanations for this, of which the ones I remember best are:

1. Only the best researchers in a field actually make progress, and the best researchers are already in a field, and probably couldn’t be kept out of the field with barbed wire and attack dogs. If you expand a field, you will get a bunch of merely competent careerists who treat it as a 9-to-5 job. A field of 5 truly inspired geniuses and 5 competent careerists will make X progress. A field of 5 truly inspired geniuses and 500,000 competent careerists will make the same X progress. Adding further competent careerists is useless for doing anything except making graphs look more exponential, and we should stop doing it. See also Price’s Law Of Scientific Contributions.

2. Certain features of the modern academic system, like underpaid PhDs, interminably long postdocs, endless grant-writing drudgery, and clueless funders have lowered productivity. The 1930s academic system was indeed 25x more effective at getting researchers to actually do good research.

3. All the low-hanging fruit has already been picked. For example, element 117 was discovered by an international collaboration who got an unstable isotope of berkelium from the single accelerator in Tennessee capable of synthesizing it, shipped it to a nuclear reactor in Russia where it was attached to a titanium film, brought it to a particle accelerator in a different Russian city where it was bombarded with a custom-made exotic isotope of calcium, sent the resulting data to a global team of theorists, and eventually found a signature indicating that element 117 had existed for a few milliseconds. Meanwhile, the first modern element discovery, that of phosphorus in the 1670s, came from a guy looking at his own piss. We should not be surprised that discovering element 117 needed more people than discovering phosphorus.

Needless to say, my sympathies lean towards explanation number 3. But I worry even this isn’t dismissive enough. My real objection is that constant progress in science in response to exponential increases in inputs ought to be our null hypothesis, and that it’s almost inconceivable that it could ever be otherwise.

Consider a case in which we extend these graphs back to the beginning of a field. For example, psychology started with Wilhelm Wundt and a few of his friends playing around with stimulus perception. Let’s say there were ten of them working for one generation, and they discovered ten revolutionary insights worthy of their own page in Intro Psychology textbooks. Okay. But now there are about a hundred thousand experimental psychologists. Should we expect them to discover a hundred thousand revolutionary insights per generation?

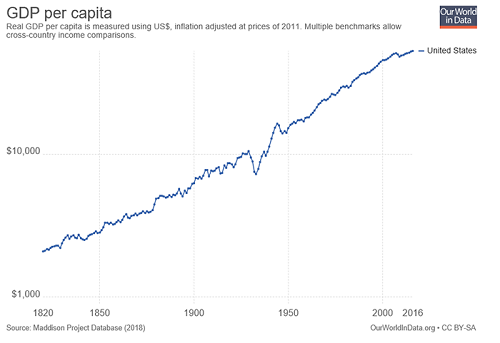

Or: the economic growth rate in 1930 was 2% or so. If it scaled with number of researchers, it ought to be about 50% per year today with our 25x increase in researcher number. That kind of growth would mean that the average person who made $30,000 a year in 2000 should make $50 million a year in 2018.
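A back-of-the-envelope check of that figure, assuming growth scales linearly with researcher count as the paragraph supposes (the function name is mine, and the inputs are just the round numbers from the text):

```python
def compound(income: float, rate: float, years: int) -> float:
    """Income after `years` of compound growth at `rate` per year."""
    return income * (1 + rate) ** years


scaled_rate = 0.02 * 25  # 2% growth scaled by a 25x increase in researchers

# $30,000 in 2000, compounded for 18 years at the scaled rate.
income_2018 = compound(30_000, scaled_rate, 18)
print(f"${income_2018:,.0f}")
```

This comes out to roughly $44 million, the same order of magnitude as the $50 million quoted. At the actual 2% rate the same person would be making a little under $43,000.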

Or: in 1930, life expectancy at 65 was increasing by about two years per decade. But if that scaled with number of biomedicine researchers, that should have increased to ten years per decade by about 1955, which would mean everyone would have become immortal starting sometime during the Baby Boom, and we would currently be ruled by a deathless God-Emperor Eisenhower.

Or: the ancient Greek world had about 1% the population of the current Western world, so if the average Greek was only 10% as likely to be a scientist as the average modern, there were only 1/1000th as many Greek scientists as modern ones. But the Greeks made such great discoveries as the size of the Earth, the distance of the Earth to the sun, the prediction of eclipses, the heliocentric theory, Euclid’s geometry, the nervous system, the cardiovascular system, etc, and brought technology up from the Bronze Age to the Antikythera mechanism. Even adjusting for the long time scale to which “ancient Greece” refers, are we sure that we’re producing 1000x as many great discoveries as they did? If we extended BJRW’s graph all the way back to Ancient Greece, adjusting for the change in researchers as civilizations rise and fall, wouldn’t it keep the same shape as it does for this century? Isn’t the real question not “Why isn’t Dwight Eisenhower immortal god-emperor of Earth?” but “Why isn’t Marcus Aurelius immortal god-emperor of Earth?”

Or: what about human excellence in other fields? Shakespearean England had 1% of the population of the modern Anglosphere, and presumably even fewer than 1% of the artists. Yet it gave us Shakespeare. Are there a hundred Shakespeare-equivalents around today? This is a harder problem than it seems – Shakespeare has become so venerable with historical hindsight that maybe nobody would acknowledge a Shakespeare-level master today even if they existed – but still, a hundred Shakespeares? If we look at some measure of great works of art per era, we find past eras giving us far more than we would predict from their population relative to our own. This is very hard to judge, and I would hate to be the guy who has to decide whether Harry Potter is better or worse than the Aeneid. But still? A hundred Shakespeares?

Or: what about sports? Here’s marathon records for the past hundred years or so:

In 1900, there were only two local marathons (eg the Boston Marathon) in the world. Today there are over 800. Also, the world population has increased by a factor of five (more than that in the East African countries that give us literally 100% of top male marathoners). Despite that, progress in marathon records has been steady or declining. Most other Olympic sports show the same pattern.

All of these lines of evidence lead me to the same conclusion: constant growth rates in response to exponentially increasing inputs is the null hypothesis. If it wasn’t, we should be expecting 50% year-on-year GDP growth, easily-discovered-immortality, and the like. Nobody expected that before reading BJRW, so we shouldn’t be surprised when BJRW provide a data-driven model showing it isn’t happening. I realize this in itself isn’t an explanation; it doesn’t tell us why researchers can’t maintain a constant level of output as measured in discoveries. It sounds a little like “God wouldn’t design the universe that way”, which is a kind of suspicious line of argument, especially for atheists. But it at least shifts us from a lens where we view the problem as “What three tweaks should we make to the graduate education system to fix this problem right now?” to one where we view it as “Why isn’t Marcus Aurelius immortal?”

And through such a lens, only the “low-hanging fruits” explanation makes sense. Explanation 1 – that progress depends only on a few geniuses – isn’t enough. After all, the Greece-today difference is partly based on population growth, and population growth should have produced proportionately more geniuses. Explanation 2 – that PhD programs have gotten worse – isn’t enough. There would have to be a worldwide monotonic decline in every field (including sports and art) from Athens to the present day. Only Explanation 3 holds water.

I brought this up at the conference, and somebody reasonably objected – doesn’t that mean science will stagnate soon? After all, we can’t keep feeding it an exponentially increasing number of researchers forever. If nothing else stops us, then at some point, 100% (or the highest plausible amount) of the human population will be researchers, we can only increase as fast as population growth, and then the scientific enterprise collapses.

I answered that the Gods Of Straight Lines are more powerful than the Gods Of The Copybook Headings, so if you try to use common sense on this problem you will fail.

Imagine being a futurist in 1970 presented with Moore’s Law. You scoff: “If this were to continue only 20 more years, it would mean a million transistors on a single chip! You would be able to fit an entire supercomputer in a shoebox!” But common sense was wrong and the trendline was right.

“If this were to continue only 40 more years, it would mean ten billion transistors per chip! You would need more transistors on a single chip than there are humans in the world! You could have computers more powerful than any today, that are too small to even see with the naked eye! You would have transistors with like a double-digit number of atoms!” But common sense was wrong and the trendline was right.

Or imagine being a futurist in ancient Greece presented with world GDP doubling time. Take the trend seriously, and in two thousand years, the future would be fifty thousand times richer. Every man would live better than the Shah of Persia! There would have to be so many people in the world you would need to tile entire countries with cityscape, or build structures higher than the hills just to house all of them. Just to sustain itself, the world would need transportation networks orders of magnitude faster than the fastest horse. But common sense was wrong and the trendline was right.
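To see what trend those round numbers imply, here is a small sketch that backs out the doubling time and annual growth rate from "fifty thousand times richer in two thousand years". It uses only the figures in the paragraph above, not any independent GDP data, and the function names are illustrative:

```python
import math


def implied_doubling_time(factor: float, years: float) -> float:
    """Years per doubling if something grows by `factor` over `years`."""
    return years / math.log2(factor)


def implied_annual_rate(factor: float, years: float) -> float:
    """Constant year-over-year growth rate giving `factor` over `years`."""
    return factor ** (1 / years) - 1


print(round(implied_doubling_time(50_000, 2_000)))      # 128
print(round(implied_annual_rate(50_000, 2_000) * 100, 2))  # 0.54
```

So the trend the imagined Greek futurist is scoffing at amounts to about half a percent a year, with one doubling every century and a quarter or so.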

I’m not saying that no trendline has ever changed. Moore’s Law seems to be legitimately slowing down these days. The Dark Ages shifted every macrohistorical indicator for the worse, and the Industrial Revolution shifted every macrohistorical indicator for the better. Any of these sorts of things could happen again, easily. I’m just saying that “Oh, that exponential trend can’t possibly continue” has a really bad track record. I do not understand the Gods Of Straight Lines, and honestly they creep me out. But I would not want to bet against them.

Grace et al’s survey of AI researchers shows that they predict AIs will start being able to do science in about thirty years, and will exceed the productivity of human researchers in every field shortly afterwards. Suddenly “there aren’t enough humans in the entire world to do the amount of research necessary to continue this trend line” stops sounding so compelling.

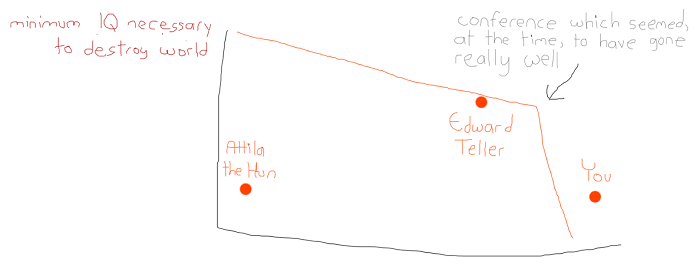

At the end of the conference, the moderator asked how many people thought that it was possible for a concerted effort by ourselves and our institutions to “fix” the “problem” indicated by BJRW’s trends. Almost the entire room raised their hands. Everyone there was smarter and more prestigious than I was (also richer, and in many cases way more attractive), but with all due respect I worry they are insane. This is kind of how I imagine their worldview looking:

I realize I’m being fatalistic here. Doesn’t my position imply that the scientists at Intel should give up and let the Gods Of Straight Lines do the work? Or at least that the head of the National Academy of Sciences should do something like that? That Francis Bacon was wasting his time by inventing the scientific method, and Fred Terman was wasting his time by organizing Silicon Valley? Or perhaps that the Gods Of Straight Lines were acting through Bacon and Terman, and they had no choice in their actions? How do we know that the Gods aren’t acting through our conference? Or that our studying these things isn’t the only thing that keeps the straight lines going?

I don’t know. I can think of some interesting models – one made up of a thousand random coin flips a year has some nice qualities – but I don’t know.

I do know you should be careful what you wish for. If you “solved” this “problem” in classical Athens, Attila the Hun would have had nukes. Remember Yudkowsky’s Law of Mad Science: “Every eighteen months, the minimum IQ necessary to destroy the world drops by one point.” Do you really want to make that number ten points? A hundred? I am kind of okay with the function mapping number of researchers to output that we have right now, thank you very much.

The conference was organized by Patrick Collison and Michael Nielsen; they have written up some of their thoughts here.

77 comments

Comments sorted by top scores.

comment by Benquo · 2018-11-27T14:02:29.076Z · LW(p) · GW(p)

This seems like it’s mixing together some extremely different things.

Fitting transistors onto a microchip is an engineering process with a straightforward outcome metric. Smoothly diminishing returns is the null hypothesis there, also known as the experience curve.

Shakespeare and Socrates, Newton and Descartes, are something more like heroes. They harvested a particular potential at a particular time, doing work that was integrated enough that it had to fit into a single person’s head.

This kind of work can’t happen until enough prep work has been done to make it tractable for a single human. Newton benefited from Ptolemy, Copernicus, Kepler, and Galileo, giving him nice partial abstractions to integrate (as well as people coming up with precursors to the Calculus). He also benefited from the analytic geometry and algebra paradigm popularized by people like Descartes and Viete. The reason he’s impressive is that he harvested the result of a unified mathematical theory of celestial and earthly mechanics well before most smart people could have - it just barely fit into the head of a genius particularly well-suited to the work, and even so he did have competition in Leibniz.

At best, the exponentially exploding number of people trying to get credit for doing vaguely physicsy things just doesn’t have much to do with the process by which we get a Newton.

But my best guess is that there’s active interference for two reasons. First, Newton had an environment where he could tune out all the noninteresting people and just listen to the interesting natural philosophers, while contemporary culture creates a huge amount of noisy imitation around any actual progress. Likewise, Shakespeare was in an environment where being a playwright was at least a little shady, while we live in a culture that valorized Shakespeare.

Second, the Athenians got even better results than the Londoners (Aeschylus, Sophocles, Euripides, Aristophanes all overlapped IIRC) by making playwriting highly prestigious but only open to the privileged few with the right connections. This strongly suggests that the limiting factor isn’t actually the number of people trying. Instead, it depends strongly on society’s ability to notice and promote the best work, which quite likely gets a lot worse once any particular field “takes off” and invites a deluge of imitators. Exponentially increasing effort actively interferes with heroic results, even as it provides more raw talent as potential heroes.

This suggests that a single global media market is terrible for intellectual progress.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2018-11-27T14:10:33.531Z · LW(p) · GW(p)

Interesting. My main thought reading the OP was simply that coordination is hard at scale [LW · GW], and this applies to intellectual progress too. You had an orders-of-magnitude increase in number of people but no change in productivity? Well, did you build better infrastructure and institutions to accommodate, or has your infrastructure for coordinating scientists largely stayed the same since the scientific revolution? In general, scaling early is terrible, [EA(p) · GW(p)] and will not be a source of value but run counter to it (and will result in massive goodharting).

Replies from: Benquo↑ comment by Benquo · 2018-11-27T15:45:52.802Z · LW(p) · GW(p)

So, should we encourage deeper schisms or other differentiation in the rationality community? Ideally not via hurt feelings so we can do occasional cross-pollination.

Replies from: Raemon, ChristianKl, Benito, Chris_Leong↑ comment by Raemon · 2018-11-28T20:13:55.280Z · LW(p) · GW(p)

My cached belief from the last time I thought about this is that progress is generally seen in small teams. This is sort of happening naturally as people (in the rationalsphere) tend to cluster into organizations, which are fairly silo'd and/or share their research informally.

This leaves you with the state of "it's hard for someone not in an org to get up to speed on what that org actually thinks", and my best guess is to build tools that are genuinely useful for smallish teams and networks to use semi-privately, but which increase the affordance for them to share things with the public (or, larger networks).

↑ comment by ChristianKl · 2018-11-29T12:16:17.487Z · LW(p) · GW(p)

I don't live in the Bay but the way Leverage Research is walled off from the rest of our community seems to be a working way of differentiation that still allows for cross-pollination.

In addition to differentiation within the Bay, differentiation by location would be another good way to have a bit of separation. Funding projects like Kocherga [LW · GW] gives us differentiation.

↑ comment by Ben Pace (Benito) · 2018-11-27T17:22:27.315Z · LW(p) · GW(p)

I confess, that strategy has not occurred to me. I generally consider improvements to infrastructure to help the group produce value, and if a group has scaled too fast too early, write it off and go elsewhere. Something more (if you'll forgive the pejorative term) destructive might be right, and perhaps I should consider those strategies more. Though in the case of the rationality community I feel I can see a lot of cheap infrastructure improvements that I think will go a long way to improving coordination of intellectual labour.

(FYI I linked to that comment because I remembered it having a bunch of points about early growth being bad, not because I felt the topic discussed was especially relevant.)

Replies from: Davidmanheim↑ comment by Davidmanheim · 2018-11-29T13:23:34.372Z · LW(p) · GW(p)

The problem with fracturing is that you lose coordination and increase duplication.

I have a more general piece that discusses scaling costs and structure for companies that I think applies here as well - https://www.ribbonfarm.com/2016/03/17/go-corporate-or-go-home/

Replies from: joshuabecker↑ comment by joshuabecker · 2018-12-10T05:59:17.867Z · LW(p) · GW(p)

It's worth considering the effects of the "exploration/exploitation" tradeoff: decreasing coordination/efficiency can increase the efficacy of search in problem space over the long run, precisely because efforts are duplicated. When efforts are duplicated, you increase the probability that someone will find the optimal solution. When everyone is highly coordinated, people all look in the same place and you can end up getting stuck in a "local optimum"---a place that's pretty good, but can't be easily improved without scrapping everything and starting over.

It should be noted that I completely buy the "lowest hanging fruit is already picked" explanation. The properties of complex search have been examined somewhat in depth by Stuart Kauffman ("nk space"). These ideas were developed with biological evolution in mind but have been applied to problem solving. In essence, he quantifies the intuition that you can improve low-quality things with a lot less search time than it takes to improve high-quality things.

These are precisely the types of spaces in which coordination/efficiency is counterproductive.

Replies from: NicholasKross↑ comment by Nicholas / Heather Kross (NicholasKross) · 2018-12-12T21:36:39.994Z · LW(p) · GW(p)

I'd be interested in more resources regarding the "low-hanging fruit" theory as related to the structure of ideaspace and how/whether nk space applies to that. Any good resources-for-beginners on Kauffman's work?

Replies from: joshuabecker↑ comment by joshuabecker · 2019-02-01T15:55:30.483Z · LW(p) · GW(p)

I read "At Home In The Universe: The Search for the Laws of Self Organization and Complexity" which is a very accessible and fun read---I am not a physicist/mathematician/biologist, etc, and it all made sense to me. The book talks about evolution, both biological and technological.

And the model described in that book has been quite commonly adapted by social scientists to study problem solving, so it's been socially validated as a good framework for thinking about scientific research.

↑ comment by Chris_Leong · 2018-11-28T11:02:02.649Z · LW(p) · GW(p)

Subreddits could achieve this without a schism

Replies from: Benquo↑ comment by Benquo · 2018-11-28T14:31:02.588Z · LW(p) · GW(p)

Academia has lots of journals but people submit across journals within a field if they don't get into their favored one. Likewise if largely overlapping groups are participating in multiple subreddits this seems more like division of labor than the kind of differentiation we saw in the classical Greek city-states.

comment by Stuart_Armstrong · 2018-12-01T18:00:51.085Z · LW(p) · GW(p)

But still? A hundred Shakespeares?

I'd wager there are thousands of Shakespeare-equivalents around today. The issue is that Shakespeare was not only talented, he was successful - wildly popular, and able to live off his writing. He was a superstar of theatre. And we can only have a limited amount of superstars, no matter how large the population grows. So if we took only his first few plays (before he got the fame feedback loop and money), and gave them to someone who had, somehow, never heard of Shakespeare, I'd wager they would find many other authors at least as good.

This is a mild point in favour of explanation 1, but it's not that the number of devoted researchers is limited, it's that the slots at the top of the research ladder are limited. In this view, any very talented individual who was also a superstar, will produce a huge amount of research. The number of very talented individuals has gone up, but the number of superstar slots has not.

Replies from: Davidmanheim, ChristianKl↑ comment by Davidmanheim · 2018-12-03T12:34:40.065Z · LW(p) · GW(p)

The model implies that if funding and prestige increased, this limitation would be reduced. And I would think we don't need prestige nearly as much as funding - even if near-top scientists were recruited and paid the way second and third string major league players in most professional sports were paid, we'd see a significant relaxation of the constraint.

Instead, the uniform wage for most professors means that even the very top people benefit from supplementing their pay with consulting, running companies on the side, giving popular lectures for money, etc. - all of which compete for time with their research.

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-03T14:06:35.006Z · LW(p) · GW(p)

Running companies on the side to commercialize their research ideas might reduce the number of papers that they output. On the other hand it might very well increase their contribution to science as a whole.

↑ comment by ChristianKl · 2018-12-01T18:45:30.707Z · LW(p) · GW(p)

It seems to me that the number of superstar slots is limited by the number of scientific fields.

If that's true the best solution would be to stop seeking international recognition for science and publish much more science in languages other than English to allow more fields to develop.

Replies from: mary-chernyshenko↑ comment by Mary Chernyshenko (mary-chernyshenko) · 2018-12-10T20:08:48.553Z · LW(p) · GW(p)

Why do you think it will lead to new fields, rather than to a Babylon of overlapping terminologies?

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-12T10:49:24.379Z · LW(p) · GW(p)

I think the scientific establishment's choice to treat Student's t-test with a cutoff at 5% as central to doing science was a very arbitrary decision. If the decision had been different, then we would have different fields.

From my perspective there seem to be plenty of possible scientific fields that aren't pursued because they aren't producing status in the existing international scientific community.

Replies from: albertbokor, mary-chernyshenko↑ comment by albertbokor · 2018-12-18T20:37:14.593Z · LW(p) · GW(p)

Kinda like if there were a civilisational Dunbar Limit? Language barriers sound like an effective means of fracturing, but would still need to be upheld somehow. The population and capital distribution per language might still be a nontrivial problem.

↑ comment by Mary Chernyshenko (mary-chernyshenko) · 2018-12-12T20:18:45.597Z · LW(p) · GW(p)

Regardless of any particular test; why do you think non-English-language-based diversification is good?

(asking because "non-English-language-based science" is currently a big problem where I live. "It's not in English" is basically the same as "it's not interesting to others".)

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-13T21:45:56.361Z · LW(p) · GW(p)

Having all science in English means that certain ontological assumptions that the English language makes get taken to be universal truths when they are particular features of the English language. Words like "be", "feel" or "imagine" are taken as if they map to something that's ontologically basic when different languages slice up the conceptual space differently. The fact that English uses the "is of identity" and doesn't use a different word than "to be" to speak about identity leads to a lot of confusion.

Given the present climate of caring more about empirics than ontology there's also little push to get better at ontology in the current scientific community.

Issues around ontology however weren't my main issue.

"It's not in English" is basically the same as "it's not interesting to others".

This basically means that all high status research is published in English and also that the system doesn't work in a way where scientific funding is coupled on the necessity that research gets produced that's interesting to someone.

This needs a language that's spoken by enough people and at some point people who use the science in other applications.

Thomas Kuhn has a criterion that a science is a field of knowledge where progress happens. That means that the way people change their topics of research is not just by listening to what's in fashion but by building upon previous work in a way that progresses. What kind of progress happens in the "non-English-language-based science" where you live?

Replies from: mary-chernyshenko, mary-chernyshenko↑ comment by Mary Chernyshenko (mary-chernyshenko) · 2018-12-14T20:37:28.234Z · LW(p) · GW(p)

Alright, if I meet that Markdown Syntax in a dark alley, we will have to talk. Have a link, instead.

https://www.facebook.com/photo.php?fbid=760692444279924&set=a.140441189638389&type=3&theater

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-14T23:30:39.426Z · LW(p) · GW(p)

DIY, Kiev style is an article about the funding situation of Ukrainian science. It seems that after the fall of the Soviet Union research funding from the government was cut drastically and prestigious science started to depend on foreign money.

When the most prestigious science depends on foreign funding the less prestigious science that's still done in Ukrainian is likely to be lower quality.

It seems like Ukrainian politicians thought that Ukraine can be independent and that fails in various forms.

Chinese science in Chinese would be a lot more likely to be successful than Ukrainian science in Ukrainian.

Replies from: mary-chernyshenko↑ comment by Mary Chernyshenko (mary-chernyshenko) · 2018-12-15T08:26:18.563Z · LW(p) · GW(p)

Let's not infer what the politicians thought, we have a rather different image of it here.

I'd like to read about Chinese science done in Chinese; I think it would be a great thing to know more about.

↑ comment by Mary Chernyshenko (mary-chernyshenko) · 2018-12-14T11:17:29.829Z · LW(p) · GW(p)

Is there a way to insert a picture? I think a graph will be more informative.

Replies from: SaidAchmiz, habryka4↑ comment by Said Achmiz (SaidAchmiz) · 2018-12-14T20:57:58.690Z · LW(p) · GW(p)

For what it’s worth, if you’re using GreaterWrong, you can click the Image button in the editor (it’s fourth from the left), and it will automatically insert the appropriate Markdown syntax for an image.

↑ comment by habryka (habryka4) · 2018-12-14T17:10:52.639Z · LW(p) · GW(p)

In case this was a technical question, you can insert pictures into comments by using the Markdown syntax:

 · 2019-12-20T20:50:54.663Z · LW(p) · GW(p)

I still endorse most of this post, but https://docs.google.com/document/d/1cEBsj18Y4NnVx5Qdu43cKEHMaVBODTTyfHBa8GIRSec/edit has clarified many of these issues for me and helped quantify the ways that science is, indeed, slowing down.

comment by Raemon · 2018-11-29T02:34:02.954Z · LW(p) · GW(p)

Curated.

While I had already been thinking somewhat about the issues raised here, this post caused me to seriously grapple with how weird the state of affairs is, and to think seriously about why it might be, and (most importantly from my perspective), what LessWrong would actually have to do differently if our goal is to actually improve scientific coordination.

Replies from: joshuabecker↑ comment by joshuabecker · 2018-12-10T05:52:21.863Z · LW(p) · GW(p)

Can you help me understand why you see this as a coordination problem to be solved? Should I infer that you don't buy the "lowest hanging fruit is already picked" explanation?

Replies from: ryan_b↑ comment by ryan_b · 2018-12-10T22:10:16.872Z · LW(p) · GW(p)

I can't speak for Raemon, but I point out that how low the fruit hangs is not a variable upon which we can act. We can act on the coordination question, regardless of anything else.

Replies from: joshuabecker↑ comment by joshuabecker · 2019-02-01T15:52:30.323Z · LW(p) · GW(p)

Sure, though "why is science slowing down" and "what should we do now" are two different questions. If the answer to "why is science slowing down" is simply that it's getting harder, then there may be absolutely nothing wrong with our coordination, and no action is required.

I'm not saying we can't do even better, but crisis-response is distinct from self-improvement.

comment by Davidmanheim · 2018-11-29T11:07:58.476Z · LW(p) · GW(p)

This seems to omit a critical and expected limitation as a process scales up in the number of people involved - communication and coordination overhead.

If there is low hanging fruit, but everyone is reaching for it simultaneously, then doubling the number of researchers won't increase the progress more than very marginally. (People with slightly different capabilities implies that the expected time to success will be the minimum of different people.) But even that will be overwhelmed by the asymptotic costs for everyone to find out that the low-hanging fruit they are looking for has been picked!

Is there a reason not to think that this dynamic is enough to explain the observed slowdown - even without assuming hypothesis 3, of no more low-hanging fruit?
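A minimal sketch of the "minimum of different people" point above. It assumes (my assumption, not something stated in the comment) that every researcher independently attacks the same piece of low-hanging fruit and that each one's time to success is drawn uniformly between a hypothetical `fastest` and `slowest` value. Under that assumption the expected time until the first success has a simple closed form, and a seeded simulation is included as a sanity check.

```python
import random


def expected_min_time(n, fastest=0.9, slowest=1.1):
    """Closed-form mean of the minimum of n independent Uniform(fastest, slowest) times."""
    return fastest + (slowest - fastest) / (n + 1)


def simulated_min_time(n, fastest=0.9, slowest=1.1, trials=20000, seed=0):
    """Monte Carlo estimate of the same quantity, as a sanity check on the formula."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        # The fruit is picked as soon as the quickest of the n researchers succeeds.
        total += min(rng.uniform(fastest, slowest) for _ in range(n))
    return total / trials


if __name__ == "__main__":
    for n in (1, 2, 4, 8, 16):
        print(n, round(expected_min_time(n), 4), round(simulated_min_time(n), 4))
```

With these illustrative numbers, going from one researcher to two shortens the expected time by only a few percent, because the time can never drop below `fastest`. A wider spread of capabilities would give a larger gain, so the conclusion depends on the assumed distribution.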

Replies from: Bucky↑ comment by Bucky · 2018-11-29T22:48:29.285Z · LW(p) · GW(p)

This is an interesting theory. I think it makes some different predictions to the low hanging fruit model.

For instance, this theory would appear to suggest that larger teams would be helpful. If Intel is not internally repeating the same research then increasing its number of researchers should increase the discovery rate. If instead a new company employs the same number of new researchers then this will have minimal effect on the world discovery rate if they repeat what Intel is doing.

A simplistic low hanging fruit explanation does not distinguish between where the extra researchers are located.

Replies from: Davidmanheim↑ comment by Davidmanheim · 2018-11-30T08:34:50.420Z · LW(p) · GW(p)

Yes, this might help somewhat, but there is an overhead / deduplication tradeoff that is unavoidable.

I discussed these dynamics in detail (i.e. at great length) on Ribbonfarm here.

The large team benefit would explain why most innovation happens near hubs / at the leading edge companies and universities, but that is explained by the other theories as well.

comment by Alephywr · 2018-12-11T02:58:12.692Z · LW(p) · GW(p)

Popper points out that successful hypotheses just need to be testable, they don't need to come from anywhere in particular. Scientists used to consistently be polymaths educated in philosophy and the classics. A lot of scientific hypotheses borrowed from reasoning cultivated in that context. Maybe it's that context that's been milked for all it's worth. Or maybe it's that more and more scientists are naive empiricists/inductionists and don't believe in the primacy of imagination anymore, and thus discount entirely other modes of thinking that might lead to the introduction of new testable hypotheses. There are a lot of possibilities besides the ones expounded on in OP.

Replies from: albertbokor↑ comment by albertbokor · 2018-12-18T20:30:38.793Z · LW(p) · GW(p)

Hmm. The field is too 'hip' thus bloated, and the more imaginative of us don't have the time for dicking around with art because of increased knowledge requirement and competition?

Replies from: ChristianKl, Alephywr↑ comment by ChristianKl · 2018-12-19T11:33:30.177Z · LW(p) · GW(p)

Nobody in their right mind who thinks critically about medicine would choose a term like placebo-blind over the more accurate placebo-masked to describe the common mechanisms of the process.

A person who cares about epistemology can take a book like The Philosophy of Evidence-based Medicine by Jeremy H. Howick which explains why placebo-blind is misleading. The term makes claims about perception when the process that's used has nothing to do with measuring the perception of patients and many patients do perceive differences between placebo and verum due to side effects of the drug.

The marketing department of Big Pharma that prefers the misleading term placebo-blind seems to win out over people who care about epistemology.

↑ comment by Alephywr · 2018-12-19T04:37:26.230Z · LW(p) · GW(p)

Not art so much as philosophy. The average scientist today literally doesn't know what philosophy is. They do things like try to speak authoritatively about epistemology of science while dismissing the entire field of epistemology. Hence you get otherwise intelligent people saying things like "We just need people who are willing to look at reality", or appeals to "common sense" or any number of other absolutely ridiculous statements.

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-19T11:39:07.113Z · LW(p) · GW(p)

That leaves the question about whether they have a good sense of what art in the sense of previous times actually is.

Art is often about playing around with phenomena that have no practical use. It allows techniques to be developed that have no immediate commercial or even scientific value. This means the capabilities can increase over time and sometimes that leads to enough capabilities to produce commercial or scientific value down the road.

A lot of what happens in HackerSpaces is art in that sense.

Replies from: Alephywr

comment by ChristianKl · 2018-11-28T17:48:30.458Z · LW(p) · GW(p)

Sigmoid curves and exponential curves look quite similar if you only see a portion of them.
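To make that concrete, here is a small sketch comparing a logistic (sigmoid) curve with the exponential it approaches well below its midpoint. The parameter names `ceiling`, `rate` and `midpoint` are illustrative choices of mine, and the logistic is only one of many possible sigmoid shapes.

```python
import math


def logistic(t, ceiling=1.0, rate=1.0, midpoint=0.0):
    """Standard logistic curve that saturates at `ceiling`."""
    return ceiling / (1.0 + math.exp(-rate * (t - midpoint)))


def matching_exponential(t, ceiling=1.0, rate=1.0, midpoint=0.0):
    """The exponential that the logistic curve approaches far below its midpoint."""
    return ceiling * math.exp(rate * (t - midpoint))


def relative_gap(t, **params):
    """Fraction by which the exponential overshoots the logistic at time t."""
    e = matching_exponential(t, **params)
    return (e - logistic(t, **params)) / e


if __name__ == "__main__":
    for t in (-8.0, -5.0, -2.0, 0.0, 2.0):
        print(t, round(relative_gap(t), 4))
```

Well before the midpoint the two curves differ by less than one percent, so data from that stretch alone cannot tell them apart. At the midpoint the exponential is already double the logistic.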

comment by ryan_b · 2018-11-27T20:13:54.008Z · LW(p) · GW(p)

Assuming the trendline cannot continue seems like the Gambler's Fallacy. Saying we can resume the efficiency of the 1930's research establishment seems like a kind of institution-level Fundamental Attribution Error.

I find the low-hanging-fruit explanation the most intuitive because I assume everything has a fundamental limit and gets harder as we approach that limit as a matter of natural law.

I'm tempted to go one step further and try to look at the value added by each additional discovery; I suspect economic intuitions would be helpful both in comparing like with like and with considering causal factors. I have a nagging suspicion that 'benefit per discovery' is largely the same concept as 'discoveries per researcher', but I am not able to articulate why.

comment by bfinn · 2020-04-16T19:51:52.110Z · LW(p) · GW(p)

I've long thought the low-hanging fruit phenomenon applies to music. You can see it at work in the history of classical music. Starting with melodies (e.g. folk songs), breakthroughs particularly in harmony generated Renaissance music, Baroque, then Classical (meaning specifically Mozart etc.), then Romantic, then a modern cult of novelty produced a flurry of new styles from the turn of the 20th century (Impressionism onwards).

But by say 1980 it's like everything had been tried, a lot of 20th century experimentation (viz. atonal) was a dead end as far as audiences were concerned, and since then it's basically been tinkering around with the discoveries that have already been made.

If it is the case (and I think it is) that the invention of 'common practice music', i.e. tonal music with tunes & harmony, is actually a discovery of what humans like to hear, then there is a genuine limit on the number of tunes and chord progressions and rhythms possible, let alone good ones. (Cf the upper limit on the number of distinct flavours or colours, hence dishes or pictures.)

I suspect there is a similar story with novels. 20th century experimentation (stream of consciousness etc.) was a dead end, and it could well be we've already discovered broadly the optimum kind of novels that can be written. No doubt some great new ones will come along, some new genres, and occasional new Shakespeares, but basically it's tinkering with an existing format - stories of a certain length with plots of various standard kinds about humans (or human-like characters).

Hence also film/TV (similar to novels). And no doubt the other art forms too.

Partly what I'm saying here is that the arts involve discoveries more than inventions: it's discovering what humans are hard-wired (by evolution) to like. The element of culture/fashion is rather limited. Once you've made the key discoveries, you can still be creative by tinkering around (recombining elements etc.) but the scope is limited. Cf Damien Hirst said some years ago that all possible pictures [i.e. all significantly different kinds of picture] had already been made. And Edward Elgar made a similar observation a century ago about the limited number of different tunes you could make.

(PS If you think this is a pessimistic view, bear in mind that in your lifetime you won't read even all the famous classic novels or hear all the highly-regarded pieces of music. So the fact there can't be an infinite supply of future ones doesn't matter. First go away and read all the books ever written, then I'll listen when you complain there's a finite limit on new ones.)

comment by Raemon · 2019-11-27T21:16:46.050Z · LW(p) · GW(p)

The concepts in this post are, quite possibly, the core question I've been thinking about for the past couple years. I didn't just get them from this post (a lot of them come up naturally in LW team discussions), and I can't quite remember how counterfactual this post was in my thinking.

But it's definitely one of the clearer writeups about a core question to people who're thinking about intellectual progress, and how to improve.

The question is murky – it's not obvious whether science is actually slowing down to me, and there are multiple plausible hypotheses which suggest different courses of action. But this post at least gave me some additional hooks for how to think about this.

comment by Jan_Kulveit · 2018-11-29T20:22:14.602Z · LW(p) · GW(p)

Big part of this follows from the

Law of logarithmic returns:

In areas of endeavour with many disparate problems, the returns in that area will tend to vary logarithmically with the resources invested (over a reasonable range).

which itself can be derived from a very natural prior about the distribution of problem difficulties, so, yes, it should be the null hypothesis.
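A rough sketch of what the law implies, assuming the simplest possible form: returns equal `scale * ln(resources / baseline)`. The function names, `scale` and `baseline` are hypothetical and not taken from any source. Inverting the relationship shows that each additional unit of return multiplies the resources required by a constant factor.

```python
import math


def returns(resources, scale=1.0, baseline=1.0):
    """Returns under an assumed pure logarithmic law."""
    return scale * math.log(resources / baseline)


def resources_needed(target_return, scale=1.0, baseline=1.0):
    """Inverse of `returns`: resources required to reach a given level of returns."""
    return baseline * math.exp(target_return / scale)


if __name__ == "__main__":
    previous = None
    for level in range(1, 6):
        needed = resources_needed(level)
        ratio = None if previous is None else needed / previous
        print(level, round(needed, 2), ratio)
        previous = needed
```

Under this assumed form, steady additive progress needs exponentially growing inputs, which is the pattern the post treats as the null hypothesis.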

comment by ChristianKl · 2018-11-29T09:17:57.388Z · LW(p) · GW(p)

Why did the Gods Of Straight Lines fail us in genome sequencing the last 6 years? What did the involved scientists do to lose their fortune?

Replies from: gwern, Philip_W↑ comment by gwern · 2018-12-08T01:34:12.349Z · LW(p) · GW(p)

The gossip I hear is that the Gods of Straight Lines continued somewhat but prices took a breather because of the Illumina quasi-monopoly (think Intel vs AMD). Several of the competitors stumbled badly for what appear to be reasons unrelated to the task itself: BGI gambled on an acquisition to develop its own sequencers which famously blew up in its face for organizational reasons, and rumor has it that 23andMe spent a ton of money on an internal effort which it eventually discarded for unknown reasons. You'll notice that after WGS prices paused for years on end, suddenly, now that other companies have begun to catch up, Illumina has begun talking about $100 WGS next year and we're seeing DTC WGS drop like a stone.

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-09T16:14:05.101Z · LW(p) · GW(p)

While you are here, do you know how closely DNA sequencing prices are linked to RNA sequencing prices?

If you could sequence RNA really cheaply, that would be great as that allows you to write RNA based on the amount of substance X that's in your probe. It might be the road to be able to measure all the hormones in the blood at the same time for cheap prices.

It might be the way to get the cheap blood testing that Theranos promised but failed to deliver.

Replies from: gwern↑ comment by gwern · 2018-12-11T00:13:29.066Z · LW(p) · GW(p)

Theranos, as I understand it, was promising blood testing of all sorts of biomarkers like blood glucose, and nothing to do with DNA. DNA sequencing is different from measuring concentration - at least in theory, you only need a single strand of DNA and you can then amplify that up arbitrary amounts (eg in PGD/embryo selection, you just suck off a cell or two from the young embryo and that's enough to work with). If you were trying to measure the nanograms of DNA per microliter, that's a bit different.

I don't know anything about RNA sequencing, since it's not relevant to anything I follow like GWASes.

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-12T10:06:37.384Z · LW(p) · GW(p)

Let's say I write a DNA chromosome that contains, in a loop, [Promoter that gets activated by Testosterone] AUG ATU GUA TAU GUA TAU GUA TAU GUA TAU GUA TAU TAG

If I put that chromosome along with the enzymes that read DNA and write RNA into a solution, it will create the matching sequence in an amount that correlates with the amount of testosterone in the solution.

If you then read all the RNA in the solution you know how much of that sequence is there and can calculate the amount of testosterone.

In addition to measuring testosterone you can easily add 300 additional substances that you measure in parallel provided you can sequence your RNA cheaply enough.

You will likely measure a reference value of a known substance as well.
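The read-out step could be sketched roughly as follows. This assumes, purely for illustration, that the number of RNA reads for each reporter sequence is directly proportional to the amount of the substance that activates it, with the same proportionality constant for every reporter. That is a much stronger assumption than the "correlates with" above. The function name, the substance labels and the numbers are all hypothetical.

```python
def estimate_concentrations(read_counts, reference_name, reference_concentration):
    """Scale each reporter's read count by a reference of known concentration.

    Assumes reads are strictly proportional to concentration, which a real
    assay would have to establish with calibration curves.
    """
    reference_reads = read_counts[reference_name]
    if reference_reads <= 0:
        raise ValueError("reference sequence was not detected")
    return {
        name: reads / reference_reads * reference_concentration
        for name, reads in read_counts.items()
        if name != reference_name
    }


if __name__ == "__main__":
    # Hypothetical counts from one sequencing run.
    counts = {"testosterone": 200, "cortisol": 50, "reference": 100}
    print(estimate_concentrations(counts, "reference", 5.0))
```

Adding more substances only adds entries to the dictionary of counts, which is the sense in which the read-out is digital and runs in parallel.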

https://www.abmgood.com/RNA-Sequencing-Service.html suggests that Illumina machines can also be somehow used for RNA but I don't know whether they are exactly the same machines.

If you actually want to understand how the genes do what they do it will be vital to be good at measuring how they affect the various hormones in the body.

The kind of technology that Theranos and other blood testing currently use is analog in the signals. RNA on the other hand allows for something like digital data that you can read out of a biological system when you put something in that system that can write RNA.

comment by Bridgett Kay (bridgett-kay) · 2018-11-28T20:26:17.377Z · LW(p) · GW(p)

I'm also partial to the low hanging fruit explanation. Unfortunately, it seems to me we can really only examine progress on already established fields. Much harder to tell if there is much left to discover outside of established fields- the opportunities to make big discoveries that establish whole new fields of study. This is where the undiscovered, low hanging fruit would be, I think.

comment by cousin_it · 2018-11-27T14:43:45.415Z · LW(p) · GW(p)

constant growth rates in response to exponentially increasing inputs is the null hypothesis

Yeah, that would be big if true. How sure are you that it's exponential and not something else like quadratic? All your examples are of the form "inputs grow faster than returns", nothing saying it's exponential.

Replies from: Bucky↑ comment by Bucky · 2018-11-27T16:38:25.248Z · LW(p) · GW(p)

Toy model: Say we are increasing knowledge towards a fixed maximum and for each man hour of work we get a fixed fraction of the distance closer to that maximum. Then exponentially increasing inputs are required to maintain a constant growth rate.

If I was throwing darts blind at a wall with a line on it and measured the closest I got to the line then the above toy model applies. I realise this is a rather cynical interpretation of scientific progress!

If the progress in a field doesn't depend on how much you know but how much you have left to find out then this pattern seems like a viable null hypothesis. Of course the data will add information but I'm not allowed to take the data into account when choosing my null.

EDIT: More generally, a fixed maximum knowledge is not strictly required. We still require exponentially varying inputs if the potential maximum increases linearly as we gain more knowledge. Think Zeno’s Achilles and the tortoise.

Replies from: Bucky, ChristianKl↑ comment by Bucky · 2018-11-30T13:22:56.687Z · LW(p) · GW(p)

Ok, I'm an idiot, this model doesn't predict exponentially increasing required inputs in time - the model predicts exponentially increasing required inputs against man-hours worked.

The relationship between required inputs and time is hyperbolic.
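Here is one way to check that correction numerically. It reads the toy model as: each man-hour closes a fixed `fraction` of the remaining gap to the maximum, and the goal is a constant absolute amount of progress per unit time (`progress_rate`). That reading, and all the parameter names and values, are my assumptions.

```python
import math


def input_rate_at_time(t, progress_rate=1.0, fraction=0.5, initial_gap=10.0):
    """Man-hours per unit time needed at time t: hyperbolic in t."""
    remaining = initial_gap - progress_rate * t
    if remaining <= 0:
        raise ValueError("the maximum has already been reached")
    return progress_rate / (fraction * remaining)


def cumulative_hours_at_time(t, progress_rate=1.0, fraction=0.5, initial_gap=10.0):
    """Total man-hours spent by time t (the integral of the rate above)."""
    remaining = initial_gap - progress_rate * t
    if remaining <= 0:
        raise ValueError("the maximum has already been reached")
    return math.log(initial_gap / remaining) / fraction


def input_rate_at_hours(hours, progress_rate=1.0, fraction=0.5, initial_gap=10.0):
    """The same required rate, written against man-hours worked: exponential."""
    return progress_rate * math.exp(fraction * hours) / (fraction * initial_gap)


if __name__ == "__main__":
    for t in (0.0, 3.0, 6.0, 9.0):
        hours = cumulative_hours_at_time(t)
        print(t, round(input_rate_at_time(t), 4), round(input_rate_at_hours(hours), 4))
```

The two rate functions agree at every point, so the required input is exponential in man-hours worked and hyperbolic in time, diverging as the remaining gap goes to zero.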

↑ comment by ChristianKl · 2018-11-29T12:19:29.500Z · LW(p) · GW(p)

When thinking like this it's worthwhile to ask what's meant by "field". Is for example nutrition science currently a field? Was domestic science a field?

What would be required to have a field that deals with the effect of eating on the body?

Replies from: Bucky

comment by Ben Pace (Benito) · 2019-11-27T20:55:44.286Z · LW(p) · GW(p)

This post posed the question very clearly, and laid out a bunch of interesting possible hypotheses to explain the data. I think it's an important question for humanity and also comes up regularly in my thinking about how to help people do research on questions like AI alignment and human rationality.

comment by Nick Maley (nick-maley) · 2018-12-13T01:24:24.983Z · LW(p) · GW(p)

Maybe it's not a law of straight lines, it's a law of exponentially diminishing returns, and maybe it applies to any scientific endeavour. What we are doing in science is developing mathematical representations of reality. The reality doesn't change, but our representations of it become ever more accurate, in an asymptotic fashion. What about Physics? In 2500 years we go from naive folk physics to Democritus, to Ptolemy, Galileo and Copernicus, to Newton, then Clerk Maxwell, Einstein, Schrodinger, Feynman and then the Standard Model, at every stage getting more and more accurate and complete. We now have a physics that is (as Sean Carroll points out) to all intents and purposes perfectly accurate in all its predictions for ALL physical phenomena happening at Terrestrial and Solar neighborhood scale. We can even measure accurately the collisions between black holes over a distance of a billion light years. Order of magnitude improvements in the scope and accuracy of such a physics might just be logically impossible. So enormous effort is invested by some very bright people to make relatively small improvements in theory. So this is a low hanging fruit argument, but predicated on the assumption that theories in a mature science will progressively approach an asymptote of possible accuracy and completeness.

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-14T11:49:27.586Z · LW(p) · GW(p)

We now have a physics that is (as Sean Carroll points out) to all intents and purposes perfectly accurate in all its predictions for ALL physical phenomena happening at Terrestrial and Solar neighborhood scale.

That's not really true. Predicting the weather of tomorrow is a problem of physical prediction but we don't have perfectly accurate predictions.

It's interesting to have a physics community that sees itself so narrowly that it wouldn't consider that a physical phenomenon.

Replies from: albertbokor↑ comment by albertbokor · 2018-12-18T20:15:54.486Z · LW(p) · GW(p)

Interesting proposition. I always thought that predicting stuff like weather was more of a question of incomplete data/ too many factors (is precise nanotech like this?). I mean we might get results by a compromise between a ton of measurement devices (internet of things style) and some useful statistical approximations, but the computational need to accuracy ratio seems a bit much.

[sidenote:]Also, the post made me think about having little to no idea about career choice, and gave me the idea of working on this very question, on a higher level (mathematics of human resources, I guess)... fucking overdue coding assignments, though. And my CS program in general.

Replies from: ChristianKl↑ comment by ChristianKl · 2018-12-19T11:24:38.733Z · LW(p) · GW(p)

The useful questions of physics that stay unsolved seem to be about complex systems, as we live in a world where most of the interesting phenomena aren't due to individual particles but due to complex systems.

Replies from: albertbokor↑ comment by albertbokor · 2018-12-19T12:04:30.537Z · LW(p) · GW(p)

Hmm. I want to look up how the guys did thermodynamics and such. I doubt I could think of anything new, but using these tools in all sorts of different scenarios might be of use.

comment by paperoli · 2018-12-04T11:33:02.841Z · LW(p) · GW(p)

If you assume that the human "soul" mass cannot be increased over time, does this problem make more sense? Population increase just causes an increase in the proportion of NPC's, while discoveries require something transcendental.

Replies from: albertbokor, TheWakalix↑ comment by albertbokor · 2018-12-18T20:20:23.168Z · LW(p) · GW(p)

Interesting idea. I intuit it as a more continuous problem (despite being a narcissist myself) as living in increasing sized groups dilutes the pool on an individual level, too much communication required to stay up to date and similar stuff. Kinda like a civilizational Dunbar Limit. (As an aunicornist in world view, feel this more akin to sorting somehow. You can't get below a certain O(f(n)) efficiency per added units).

↑ comment by TheWakalix · 2018-12-07T15:24:43.873Z · LW(p) · GW(p)

I get that this is probably in jest, but I am viscerally not okay with suggestions that some people aren't actually people (at least without very strong evidence), and I don't think that's an unusual trait.

Can we... not say things that make us seem like an alt-right cesspool to the average viewer, unless it's actually true and necessary?

(I'm not saying that "things that sound alt-right" are inherently bad, but there is a social cost to saying things like this, and I think we shouldn't accept that cost if we only get bad jokes out of it.)

Replies from: SaidAchmiz, ChristianKl↑ comment by Said Achmiz (SaidAchmiz) · 2018-12-07T15:45:30.808Z · LW(p) · GW(p)

Strongly downvoted for totally unnecessary politicization. Grandparent refers to concepts from religion, philosophy, and roleplaying games. If you perceive some obscure political-faction dog-whistling in perfectly ordinary comments, that is hardly a reason for other commenters to contort themselves to avoid setting off your politics detector.

(I mean, consider generalizing this principle. Is anything that happens to be a shibboleth, no matter how contrived, of some faction, no matter how irrelevant, now off-limits? This is tantamount to surrendering control of what language, and even what concepts, we use, to every Tom, Dick, and Harry whose only accomplishment is posting clickbait political screeds on the Internet.)

Replies from: TheWakalix, TheWakalix↑ comment by TheWakalix · 2018-12-08T02:29:37.669Z · LW(p) · GW(p)

I think you are misunderstanding me, and I’d like to clarify some things.

I did not read the great-grandparent as a dog whistle. I did not and do not think that paperoli’s use of the term NPC indicates that they are alt-right. I think that it will indicate to other people that they are alt-right.

My politics-detector is not being used to directly indicate “do I like this?” to me. Rather, I am using it as a proxy for “will people in general dislike this?”, combined with the knowledge that certain political standpoints are very costly to seem to hold.

I only brought up the political aspect because I saw the alt-right as being relevant to LW in that we should be trying, with some nonzero amount of effort, to avoid being associated strongly with them. As a result, I considered “avoid comments like this” to be a net positive action. I am now less certain that it’s worth the cost, but I think it was worth stating that the alternative (ignoring terminological overlap) does have a cost.

Edit: and the Visceral Repulsion and the Terminological Overlap referred to different parts of the great-grandparent - “some people don’t have souls” and “NPC” respectively.

↑ comment by TheWakalix · 2018-12-08T00:20:58.181Z · LW(p) · GW(p)

This isn’t politicization. It’s already politicized. I’m responding to the existing politicization of the word “NPC”.

I will note that I made two distinct points in the grandparent, and failed to distinguish them enough. The content alone caused my visceral repulsion, while the similarity to the alt-right caused my instrumental desire to avoid seeming more like the alt-right. This is motivated by the current association between LW and the alt-right, which I worry could become as significant in shaping outsiders’ views as the Phyg term did. Perhaps this is unlikely, but I’d rather not risk it for the sake of a joke.

In particular, it’s not my politics detector I’m worried about. It’s that this could contribute to LW setting off outsiders’ politics detectors.

And we don’t have to worry about expunging every shibboleth because we only need to do this for relatively well-known shibboleths of movements that we are considered to be associated with but which we would rather not be. It’s not that steep of a slippery slope.

If we’re talking about politics, my standpoint is pragmatic, not moral. I don’t think it’s fair that we should avoid terms found in hated screeds. I think it’s instrumentally optimal to do so.

Replies from: TheWakalix↑ comment by TheWakalix · 2018-12-08T02:13:23.901Z · LW(p) · GW(p)

Are the downvotes for the weasel reasoning around “politicization”, or for incorrectly asserting that LW is at risk of being tarred with “alt-right”, or for overestimating the harmfulness of being tarred with “alt-right”, or something I haven’t thought of? I am trying to see where the disagreement is, but I only see mutual misunderstanding.

In a nutshell, where’s the disagreement? What specific things do you think I am incorrect about? I would like to engage with your beliefs but I don’t know where they differ from mine.

Replies from: clone of saturn, habryka4↑ comment by clone of saturn · 2018-12-08T04:35:33.618Z · LW(p) · GW(p)

I think panicky preemptive denials of being alt-right are much more likely to associate us with them than the odd comment using the term "NPC," and furthermore, I think truthseeking is already more than impossible enough without constantly taking your eyes off the ball to worry about what various political factions will think. If you're that worried about people thinking you're alt-right, I'd suggest commenting under a single-use pseudonym.

Replies from: TheWakalix↑ comment by TheWakalix · 2018-12-08T17:47:44.506Z · LW(p) · GW(p)

You’ve still misunderstood. I’m worried about LW being associated with the alt-right because of the terminological overlap. I’m not concerned about being personally tarred with that.

I agree that this might be something that is counterproductive and itself harmful to discuss, though. And since it seems that people are aware and thinking about this, I don’t have much of a reason to ring the alarm bell anymore. I won’t continue this or bring it up elsewhere in public comments.

↑ comment by habryka (habryka4) · 2018-12-08T02:25:12.817Z · LW(p) · GW(p)

I have a bunch of thoughts, but don't currently have the time to write them down. Just letting you know that I am planning to write a comment about this sometime in the next few days (and feel free to ping me if I don't).

↑ comment by ChristianKl · 2018-12-07T22:44:58.491Z · LW(p) · GW(p)

This notion of NPC is older in our community than the term alt-right.

Replies from: TheWakalix, albertbokor↑ comment by TheWakalix · 2018-12-08T00:05:39.664Z · LW(p) · GW(p)

I know that. Most people don’t.

My post combined two points: I think that is Viscerally Repulsive because of the content, and I think it’s Harmful For LW because of the similarity to the alt-right. I don’t think I separated the two enough. I understand that LW didn’t borrow the term from the alt-right, and I don’t think it would be a bad thing even if it had. But using the same terminology as the alt-right is costly, and I don’t think we should pay those costs for the sake of bad jokes.

(If anyone thinks I’m backpedaling, notice that I said this in the grandparent, just not spelled out as much.)

↑ comment by albertbokor · 2018-12-18T20:26:32.386Z · LW(p) · GW(p)

What definitions do we generally use?