Contra Heighn Contra Me Contra Heighn Contra Me Contra Functional Decision Theory

post by omnizoid · 2023-09-17T02:15:41.430Z · LW · GW · 23 comments

Contents

1 Is there a divergence between what’s rational to do and what type of agent you should want to be?
2 Is Schwarz’s procreation case unfair?
3 Reasons I refer to FDT as crazy, not just implausible or false
4 Implausible discontinuities
5 Conclusion

Heighn and I have an ongoing debate about FDT. This is my reply to Heighn’s reply to my reply to Heighn’s reply to my post. You can trace back the links from here [LW · GW]—which is the article I’m responding to. This will probably be my last post on the topic, so let me boil the dispute down to 4 things we disagree about. I will then argue I am right on each topic. This article might be sort of drab! There will be a lot of “I say X—Heighn’s reply is Y, my reply is Z, Heighn’s reply is A, my reply is B.” If you want to get a grasp of the core of the disagreement you’ll probably only need to read section 1—the others are just haggling over the details.

1 Is there a divergence between what’s rational to do and what type of agent you should want to be?

This may just be the crux of our disagreement. I claim there is no difference here: the questions What type of agent do I want to be? and What decision should I make in this scenario? are equivalent. If it is wise to do X in a given problem, then you want to be an X-ing agent, and if you should be an X-ing agent, then it is wise to do X. The only way to do X is to have a decision procedure that does X, which makes you an X-ing agent. And if you are an X-ing agent, you have a decision procedure that does X, so you do X.

This quote from Heighn, I think, expresses the core of the disagreement. Heighn claims that it’s rational to do X if and only if it’s best to be the type of agent who does X. Heighn asserts, seemingly without argument, that if it’s wise to do X in a given problem, then you want to be an X-ing agent. So the question is: is this a plausible stipulation? Specifically, let’s think about a case I gave earlier, which Heighn summarizes very precisely:

Unfortunately, omnizoid once again doesn't clearly state the problem - but I assume he means that

there's an agent who can (almost) perfectly predict whether people will cut off their legs once they exist

this agent only creates people who he predicts will cut off their legs once they exist

existing with legs > existing without legs > not existing

Heighn’s diagnosis of such a case is that it’s rational to cut off your legs once you exist, because the types of agents who do that are more likely to exist and thus do better. I think this is really implausible: once you exist, you don’t care about the probability of agents like you existing. You already exist! Why does the probability of your existence, conditional on certain actions, matter? Your actions can’t affect that anymore, because you already exist. In response to this, Heighn says:

FDT'ers would indeed cut off their legs: otherwise they wouldn't exist. omnizoid seems to believe that once you already exist, cutting off your legs is ridiculous. This is understandable, but ultimately false. The point is that your decision procedure doesn't make the decision just once. Your decision procedure also makes it in the predictor's head, when she is contemplating whether or not to create you. There, deciding not to cut off your legs will prevent the predictor from creating you.

But you only make decisions after you exist. Of course, your decisions influence whether or not you exist but they don’t happen until after you exist. But once you exist, no matter how you act, there is a zero percent chance that you won’t exist.
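The two ways of scoring the leg-cutting case can be put side by side in a rough sketch. The utility numbers and the 0.99 predictor accuracy below are hypothetical; the only thing taken from the problem statement is the ordering existing with legs > existing without legs > not existing. One function scores a policy before the creator has made its prediction, the other scores the act once the agent already exists.

```python
# Hypothetical utilities; only the ordering comes from the problem statement:
# existing with legs > existing without legs > not existing.
U = {"legs": 10, "no_legs": 5, "not_exist": 0}


def expected_utility_of_policy(cuts_legs, accuracy=0.99):
    """Expected utility of a policy, scored before the creator predicts.

    `accuracy` is an assumed probability that the creator predicts correctly.
    The creator only creates agents it predicts will cut off their legs.
    """
    p_created = accuracy if cuts_legs else 1 - accuracy
    outcome = U["no_legs"] if cuts_legs else U["legs"]
    return p_created * outcome + (1 - p_created) * U["not_exist"]


def utility_given_existence(cuts_legs):
    """Utility of the act once the agent already exists."""
    return U["no_legs"] if cuts_legs else U["legs"]


if __name__ == "__main__":
    for cuts in (True, False):
        print(cuts, expected_utility_of_policy(cuts), utility_given_existence(cuts))
```

Under these assumed numbers the leg-cutting policy scores higher when evaluated in advance, while keeping your legs scores higher once existence is taken as given. The sketch does not settle which of the two evaluations deserves the name "rational"; that is exactly what is in dispute.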

So I think reflecting on this case, and similar cases, shows quite definitively that it can sometimes be worth being the type of agent who acts irrationally. Specifically, if you are artificially rewarded for irrationality prior to the irrational act, such that by the time you can take the irrational act you’ve already received the reward and thus gain nothing from the act itself, then it pays to be the type of agent who is irrational. If rationality were just about being the type of agent who gets more money on average, there would be no dispute: no one denies that one-boxers in Newcomb’s problem get more money on average. What two-boxers claim is that the situation is artificially rigged against them, such that they lose by being rational. Once the predictor has made its prediction, two-boxing gets you free money.
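Both halves of that claim can be checked with a little arithmetic. The sketch below assumes the standard $1,000,000 and $1,000 payoffs and a hypothetical predictor accuracy of 0.99; none of these numbers are fixed by the post. It computes the average payoff for each disposition, and separately the payoff of each action once the box contents are fixed.

```python
# Assumed payoffs (the standard Newcomb figures) and an assumed accuracy.
BIG, SMALL = 1_000_000, 1_000


def payoff(action, opaque_full):
    """Money received for an action, given already-fixed box contents."""
    base = BIG if opaque_full else 0
    return base + (SMALL if action == "two-box" else 0)


def expected_by_disposition(action, accuracy=0.99):
    """Average payoff for agents who reliably take `action`.

    The predictor fills the opaque box iff it predicts one-boxing, and is
    assumed to be right with probability `accuracy`.
    """
    p_full = accuracy if action == "one-box" else 1 - accuracy
    return p_full * payoff(action, True) + (1 - p_full) * payoff(action, False)


if __name__ == "__main__":
    for action in ("one-box", "two-box"):
        print(action, expected_by_disposition(action))
    for full in (True, False):
        print(full, payoff("two-box", full) - payoff("one-box", full))
```

With these assumptions, one-boxers average far more money, yet for either fixed state of the opaque box, two-boxing yields exactly the extra $1,000. Both sides accept both facts; the disagreement is over which comparison defines the rational act.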

But I think we have a knock-down argument for the conclusion that it doesn’t always pay to be rational. There are cases where even FDTists would agree that being rational makes you worse off (the thing in quotes is from my last article). Let me give 4 examples:

“After all, if there’s a demon who pays a billion dollars to everyone who follows CDT or EDT then FDTists will lose out. The fact you can imagine a scenario where people following one decision theory are worse off is totally irrelevant—the question is whether a decision theory provides a correct account of rationality.”

Suppose that in the agent creation scenario, the agent gets very lucky, and is created. On this account, CDTists win more than FDTists—they get an extra leg.

There are bands of roving people who beat up those who follow FDT. In this world, FDTists will get less utility. Still, that doesn’t mean FDT is wrong—it just means that acting rationally doesn’t always pay.

A demon tortures only people who are very rational. Here, it clearly pays to be irrational.

I think this is the crux of the disagreement. But it just seems patently obvious that if the world is rigged such that those who are rational are stipulated to get worse payouts, then it sometimes won’t pay to be rational.

2 Is Schwarz’s procreation case unfair?

Schwarz’s procreation case is the following:

Procreation. I wonder whether to procreate. I know for sure that doing so would make my life miserable. But I also have reason to believe that my father faced the exact same choice, and that he followed FDT. If FDT were to recommend not procreating, there's a significant probability that I wouldn't exist. I highly value existing (even miserably existing). So it would be better if FDT were to recommend procreating. So FDT says I should procreate. (Note that this (incrementally) confirms the hypothesis that my father used FDT in the same choice situation, for I know that he reached the decision to procreate.)

Heighn’s reply is the following:

This problem doesn't fairly compare FDT to CDT though. By specifying that the father follows FDT, FDT'ers can't possibly do better than procreating. Procreation directly punishes FDT'ers - not because of the decisions FDT makes, but for following FDT in the first place.

My reply is the following:

They could do better. They could follow CDT and never pass up on the free value of remaining child-free.

Heighn’s reply is the following:

No! Following CDT wasn't an option. The question was whether or not to procreate, and I maintain that Procreation is unfair towards FDT.

Yes, the decision is whether or not to procreate. I maintain that in such scenarios, it’s rational to follow CDT—Heighn says that it’s rational to follow FDT. Agents who follow my advice objectively do better—Heighn’s agents are miserable, mine aren’t!

3 Reasons I refer to FDT as crazy, not just implausible or false

In my article, I note:

I do not know of a single academic decision theorist who accepts FDT. When I bring it up with people who know about decision theory, they treat it with derision and laughter.

Heighn replies:

They should write up a critique!

Some of them have—MacAskill, for example. But the reason I point this out is two-fold.

I think higher-order evidence of this kind is useful. If 99.9% of people who seriously study a topic agree about X, and you disagree about X, you should think your reasoning has gone wrong.

The view I defend in my article isn’t just that FDT is wrong, but that it’s crazy: crazy in the sense of being a view that pretty much anyone who seriously considered the issue for long enough, without being biased or misled on decision theory, would give up. Such an accusation is pretty dramatic, the kind of thing usually reserved for views like flat-earthism or creationism! But it becomes less so when the idea is one rejected by perhaps every practicing decision theorist, and popular among people who are building AI, who just seem to be answering a fundamentally different question.

4 Implausible discontinuities

MacAskill has a critique of FDT that is, to my mind, pretty damning:

A related problem is as follows: FDT treats ‘mere statistical regularities’ very differently from predictions. But there’s no sharp line between the two. So it will result in implausible discontinuities. There are two ways we can see this.

First, take some physical process S (like the lesion from the Smoking Lesion) that causes a ‘mere statistical regularity’ (it’s not a Predictor). And suppose that the existence of S tends to cause both (i) one-boxing tendencies and (ii) whether there’s money in the opaque box or not when decision-makers face Newcomb problems. If it’s S alone that results in the Newcomb set-up, then FDT will recommend two-boxing.

But now suppose that the pathway by which S causes there to be money in the opaque box or not is that another agent looks at S and, if the agent sees that S will cause decision-maker X to be a one-boxer, then the agent puts money in X’s opaque box. Now, because there’s an agent making predictions, the FDT adherent will presumably want to say that the right action is one-boxing. But this seems arbitrary: why should the fact that S’s causal influence on whether there’s money in the opaque box or not go via another agent make such a big difference? And we can think of all sorts of spectrum cases in between the ‘mere statistical regularity’ and the full-blooded Predictor: What if the ‘predictor’ is a very unsophisticated agent that doesn’t even understand the implications of what they’re doing? What if they only partially understand the implications of what they’re doing? For FDT, there will be some point of sophistication at which the agent moves from simply being a conduit for a causal process to instantiating the right sort of algorithm, and suddenly FDT will switch from recommending two-boxing to recommending one-boxing.

Second, consider that same physical process S, and consider a sequence of Newcomb cases, each of which gradually make S more and more complicated and agent-y, making it progressively more similar to a Predictor making predictions. At some point, on FDT, there will be a point at which there’s a sharp jump; prior to that point in the sequence, FDT would recommend that the decision-maker two-boxes; after that point, FDT would recommend that the decision-maker one-boxes. But it’s very implausible that there’s some S such that a tiny change in its physical makeup should affect whether one ought to one-box or two-box.

I’m quoting the argument in full because I think it should make things a bit clearer. Heighn responds:

This is just wrong: the critical factor is not whether "there's an agent making predictions". The critical factor is subjunctive dependence, and there is no subjunctive dependence between S and the decision maker here.

I responded to that, saying:

But in this case there is subjunctive dependence. The agent’s report depends on whether the person will actually one box on account of the lesion. Thus, there is an implausible discontinuity, because whether to one-box turns on the precise causal mechanism behind the box’s contents.

Heighn responded to this saying:

No, there is no subjunctive dependence. Yes,

The agent’s report depends on whether the person will actually one box on account of the lesion.

but that's just a correlation. This problem is just Smoking Lesion, where FDT smokes. The agent makes her prediction by looking at S, and S is explicitly stated to cause a 'mere statistical regularity'. It's even said that S is "not a Predictor". So there is no subjunctive dependence between X and S, and by extension, not between X and the agent.

But there is! The agent looks at the brain and then acts only if the brain is likely to output the result of one-boxing. The mechanism is structurally similar to Newcomb’s problem: the agent predicts what the person will do, and so the prediction depends on the output of the cognitive algorithm. Whether the agent runs the same cognitive algorithm or just relies on something that predicts the result of the same cognitive algorithm seems flatly irrelevant, since both cases give identical payouts and odds of affecting the payouts.

5 Conclusion

That’s probably a wrap! The basic problem with FDT is what MacAskill described—it’s fine as a theory of what type of agent to be—sometimes it pays to be irrational—but it’s flatly crazy as a theory of rationality. The correct theory of rationality would not instruct you to cut off your legs when that is guaranteed not to benefit you—or anyone else.

23 comments


comment by Zack_M_Davis · 2023-09-17T23:40:33.497Z · LW(p) · GW(p)

What two-boxers claim is that the situation is artificially rigged against them, such that they lose by being rational. [...] if there's a demon who pays a billion dollars to everyone who follows CDT or EDT then FDTists will lose out

But the original timeless decision theory paper addresses this class of argument: see §4 on "Maximizing Decision-Determined Problems" and §5 on "Is Decision-Dependency Fair". Briefly, the relevant difference between Newcomb's problem and "reward agents that use a particular decision theory" scenarios is that Newcomb's problem only depends on the agent's choice of boxes, not the agent's internal decision theory.

↑ comment by omnizoid · 2023-09-18T00:54:40.262Z · LW(p) · GW(p)

The examples just show that sometimes you lose by being rational.

Unrelated, but I really liked your recent post on Eliezer's bizarre claim that character attacks last is an epistemic standard.

↑ comment by Zack_M_Davis · 2023-09-18T06:11:32.145Z · LW(p) · GW(p)

But part of the whole dispute is that people don't agree on what "rational" means, right? In these cases, it's useful to try to avoid the disputed term—on both sides—and describe what's going on at a lower level. Suppose I'm a foreigner from a far-off land. I'm not a native English speaker, and I don't respect your Society's academics any more than you respect my culture's magicians. I've never heard this word rational before. (How do you even pronounce that? Ra-tee-oh-nal?) How would you explain the debate to me?

It seems like both sides agree that FDT agents get more money in Newcomb's problem, and that CDT agents do better than FDT agents given the fact of having been blackmailed, but that FDT agents get blackmailed less often in the first place. So ... what's the disagreement about? How are you so sure one side is crazy? Can you explain it without appealing to this "ra-tee-oh-nal" thing, or the consensus of your Society's credentialed experts?

↑ comment by cubefox · 2023-09-18T20:57:46.760Z · LW(p) · GW(p)

I'm not the one addressed here, but the term "rational" can be replaced with "useful" here. The argument is that there is a difference between the questions "what is the most useful thing to do in this given situation?" and "what is the most generally useful decision algorithm, and what would it recommend in this situation?" Usefulness is a causal concept, but it is applied to different things here (actions versus decision algorithms that cause actions). CDT answers the first question, MIRI-style decision theories answer something similar to the second question.

What people like me claim is that the answer to the first question may be different from the second. E.g. for Newcomb's problem, where having a decision algorithm that, in certain situations, picks non-useful actions, can be useful. Like when an entity can read your decision algorithm and predict what action you would pick, and then change its rewards based on that.

In fact, that the answers to the above questions can diverge was already discussed by Thomas Schelling and Derek Parfit. See Parfit in Reasons and Persons:

Consider Schelling's Answer to Armed Robbery. A man breaks into my house. He hears me calling the police. But, since the nearest town is far away, the police cannot arrive in less than fifteen minutes. The man orders me to open the safe in which I hoard my gold. He threatens that, unless he gets the gold in the next five minutes, he will start shooting my children, one by one.

What is it rational for me to do? I need the answer fast. I realize that it would not be rational to give this man the gold. The man knows that, if he simply takes the gold, either I or my children could tell the police the make and number of the car in which he drives away. So there is a great risk that, if he gets the gold, he will kill me and my children before he drives away.

Since it would be irrational to give this man the gold, should I ignore his threat? This would also be irrational. There is a great risk that he will kill one of my children, to make me believe his threat that, unless he gets the gold, he will kill my other children.

What should I do? It is very likely that, whether or not I give this man the gold, he will kill us all. I am in a desperate position. Fortunately, I remember reading Schelling's The Strategy of Conflict.

I also have a special drug, conveniently at hand. This drug causes one to be, for a brief period, very irrational. I reach for the bottle and drink a mouthful before the man can stop me. Within a few seconds, it becomes apparent that I am crazy. Reeling about the room, I say to the man: 'Go ahead. I love my children. So please kill them.' The man tries to get the gold by torturing me. I cry out: 'This is agony. So please go on.'

Given the state that I am in, the man is now powerless. He can do nothing that will induce me to open the safe. Threats and torture cannot force concessions from someone who is so irrational. The man can only flee, hoping to escape the police. And, since I am in this state, the man is less likely to believe that I would record the number on his car. He therefore has less reason to kill me.

While I am in this state, I shall act in ways that are very irrational. There is a risk that, before the police arrive, I may harm myself or my children. But, since I have no gun, this risk is small. And making myself irrational is the best way to reduce the great risk that this man will kill us all.

On any plausible theory about rationality, it would be rational for me, in this case, to cause myself to become for a period very irrational.

comment by ProgramCrafter (programcrafter) · 2023-09-17T12:16:27.909Z · LW(p) · GW(p)

After all, if there’s a demon who pays a billion dollars to everyone who follows CDT or EDT then FDTists will lose out.

How does the demon determine what DT a person follows?

If it's determined by simulating the person's behavior in a Newcomb-like problem, then once the FDTist gets to know about that, he should two-box (since a billion dollars from the demon is more than a million dollars from Omega).

If it's determined by introspecting the person's mind, then the FDTist will likely self-modify to believe he is a CDTist, and checking the actual DT becomes a problem like detecting deceptive alignment in AI.

comment by Oskar Mathiasen (oskar-mathiasen) · 2023-09-17T07:34:37.493Z · LW(p) · GW(p)

Can't you make the same argument you make in Schwarz's procreation case by using Parfit's hitchhiker after you have reached the city? In which case I think it's better to use that example, as it avoids Heighn's criticism.

In the case of implausible discontinuities I agree with Heighn that there is no subjunctive dependence.

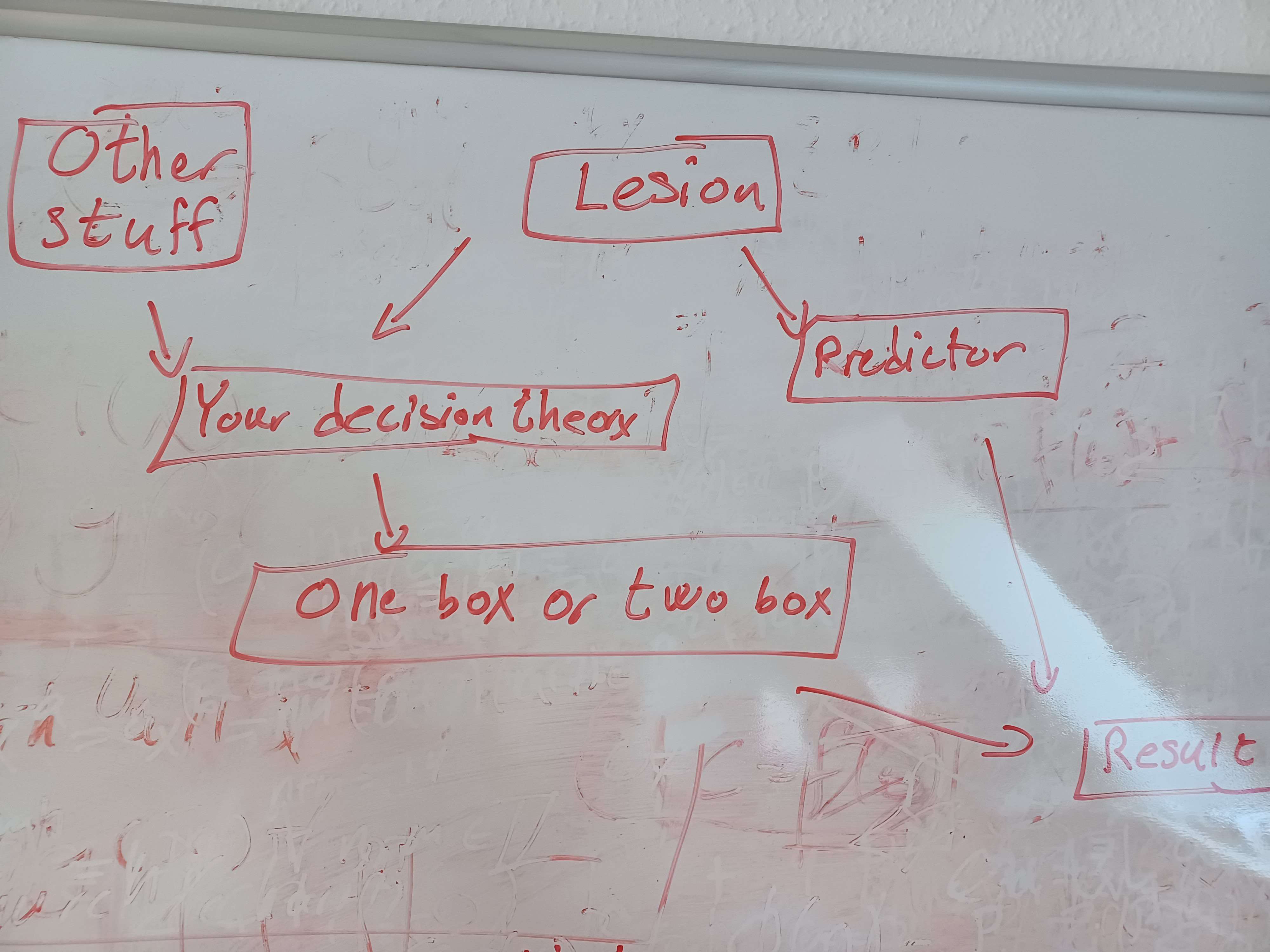

Here is a quick diagram of the causation in the thought experiment as I understand it.

We have an outcome which is completely determined by your decision to one-box or two-box and the predictor's decision of whether to put money in the box.

The Predictor decides based on the presence of a lesion (or some other physical fact)

Your decision how many boxes to take is determined by your decision theory.

And your decision theory is partly determined by the Lesion and partly by other stuff.

Now (my understanding of) the claim is that there is no downstream path from your decision theory to the predictor. This means that applying the do operator on the decision theory node doesn't change the distribution of the choices of the predictor.
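The claim about the do operator can be illustrated with a small exact enumeration over the graph as this comment describes it. This is only a sketch of that reading: the probabilities are made up, and the predictor is modeled as looking at the lesion alone, which is precisely the modeling choice the other side of the debate disputes.

```python
from itertools import product

# Hypothetical parameters, not taken from the thought experiment.
P_LESION = 0.5                             # prior probability of the lesion S
P_ONEBOX_GIVEN = {True: 0.8, False: 0.2}   # P(one-boxing theory | lesion)


def joint(do_theory=None):
    """Yield (probability, lesion, one_boxer, box_full) for every world.

    If `do_theory` is not None, the decision-theory node is set by
    intervention, which cuts its incoming edge from the lesion.
    """
    for lesion, one_boxer in product([True, False], repeat=2):
        p = P_LESION if lesion else 1 - P_LESION
        if do_theory is None:
            q = P_ONEBOX_GIVEN[lesion]
            p *= q if one_boxer else 1 - q
        elif one_boxer != do_theory:
            continue
        box_full = lesion  # assumption: the predictor looks only at the lesion
        yield p, lesion, one_boxer, box_full


def p_full_given_theory(one_boxer):
    """Observational P(box full | decision theory)."""
    worlds = [(p, full) for p, _, ob, full in joint() if ob == one_boxer]
    total = sum(p for p, _ in worlds)
    return sum(p for p, full in worlds if full) / total


def p_full_do_theory(one_boxer):
    """Interventional P(box full | do(decision theory))."""
    return sum(p for p, _, _, full in joint(do_theory=one_boxer) if full)


if __name__ == "__main__":
    for ob in (True, False):
        print(ob, p_full_given_theory(ob), p_full_do_theory(ob))
```

In this model the box contents correlate with the decision theory observationally, but intervening on the decision theory leaves the probability of a full box unchanged. If the predictor instead read the output of the decision procedure, `box_full` would depend on `one_boxer` and the intervention would matter.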

↑ comment by omnizoid · 2023-09-17T15:26:20.895Z · LW(p) · GW(p)

You can make it with Parfit's hitchhiker, but in that case there's an action beforehand and so a time when you have the ability to try to be rational.

There is a path from the decision theory to the predictor, because the predictor looks at your brain--with the decision theory it will make--and bases the decision on the outputs of that cognitive algorithm.

↑ comment by Oskar Mathiasen (oskar-mathiasen) · 2023-09-18T14:33:25.515Z · LW(p) · GW(p)

I don't think the quoted problem has that structure.

And suppose that the existence of S tends to cause both (i) one-boxing tendencies and (ii) whether there’s money in the opaque box or not when decision-makers face Newcomb problems.

But now suppose that the pathway by which S causes there to be money in the opaque box or not is that another agent looks at S

So S causes one boxing tendencies, and the person putting money in the box looks only at S.

So it seems to be changing the problem to say that the predictor observes your brain/your decision procedure, when all they observe is S, which, while causing "one boxing tendencies", is not causally downstream of your decision theory.

Further, if S were downstream of your decision procedure, then FDT would one-box whether or not the path from the decision procedure to the contents of the boxes routes through an agent, which undermines the criticism that FDT has implausible discontinuities.

comment by Ape in the coat · 2023-09-18T06:00:22.581Z · LW(p) · GW(p)

But you only make decisions after you exist. Of course, your decisions influence whether or not you exist but they don’t happen until after you exist. But once you exist, no matter how you act, there is a zero percent chance that you won’t exist.

I think a huge part of your crux of disagreement is whether it's possible to be mistaken about your own existence.

If you can be mistaken, say because instead of existing in the real world you may just be part of a simulation where the predictor is determining your decision and thus, whether to create you in the real world or not, and you value existence in the real world, then your counter argument doesn't make sense.

However, if you can be certain that you definitely exist in the real world, then indeed, your counterargument works.

comment by metachirality · 2023-09-17T05:12:08.551Z · LW(p) · GW(p)

The thing I disagree with here most is the claim that FDT is crazy. I do not think it is, in fact, crazy to think it is a good idea to adopt a decision theory whose users generically end up winning in decision problems compared to other decision theories.

I also find it suspicious that that part predicates on the opinions of experts we know nothing about, who presumably learned about FDT primarily from you who thinks FDT is bad, and also sort of assumes that MacAskill is any more of an expert on decision theory than Soares. Do not take this as a personal attack, given this is speculation about your intent/mental state, these are just my honest thoughts. At the very least, it would be helpful to read their arguments against FDT.

Even if these were actual experts you talked to, somehow it seems strange to compare a few experts you talked to saying it is false, to flat earth, a hypothesis which many many experts from several fields all related to the earth, all accountable, think is false, and which can be disproven by mere idle thinking about what society and the institution of science would have to look like for it to be true.

For that matter, who even counts as a decision theory expert? What set of criteria are you applying to determine whether someone is an expert on it or not?

↑ comment by omnizoid · 2023-09-17T15:21:46.117Z · LW(p) · GW(p)

Well here's one indication--I don't know if there's a single published academic philosophy paper defending FDT. Maybe there's one--certainly not many. Virtually no decision theorists defend it. I don't know much about Soares, but I know he's not an academic philosopher, and I think there are pretty unique skills involved in being an academic philosopher.

↑ comment by metachirality · 2023-09-17T15:49:12.591Z · LW(p) · GW(p)

So it's crazy to believe things that aren't supported by published academic papers? I think if your standard for "crazy" is believing something that a couple people in a field too underdeveloped to be subject to the EMH disagree with and that there are merely no papers defending it, not any actively rejecting it, then probably you and roughly every person on this website ever count as "crazy".

Actually, I think an important thing here is that decision theory is too underdeveloped and small to be subject to the EMH, so you can't just go "if this crazy hypothesis is correct then why hasn't the entire field accepted it, or at least had a debate over it?" It is simply too small to have fringe positions as distinct from non-fringe ones.

Obviously, I don't think the above is necessarily true, but I still think you're making us rely too much on your word and personal judgement.

On that note, I think it's pretty silly to call people crazy based on either evidence they have not seen and you have not showed them (for instance, whatever counterarguments the decision theorists you contacted had), or evidence as weak/debatable as the evidence you have put forth in this post, and which has come to their attention only now. Were we somehow supposed to know that your decision theorist acquaintances disagreed beforehand?

If you have any papers from academic decision theorists about FDT, I'd like to see them, whether favoring or disfavoring it.

IIRC Soares has a Bachelor's in both computer science and economics and MacAskill has a Bachelor's in philosophy.

↑ comment by omnizoid · 2023-09-17T18:59:04.216Z · LW(p) · GW(p)

I wouldn't call a view crazy for just being disbelieved by many people. But if a view is both rejected by all relevant experts and extremely implausible, then I think it's worth being called crazy!

I didn't call people crazy, instead I called the view crazy. I think it's crazy for the reasons I've explained, at length, both in my original article and over the course of the debate. It's not about my particular decision theory friends--it's that the fact that virtually no relevant experts agree with an idea is relevant to an assessment of it.

I'm sure Soares is a smart guy! As are a lot of defenders of FDT. Lesswrong selects disproportionately for smart, curious, interesting people. But smart people can believe crazy things--I'm sure I have some crazy beliefs; crazy in the sense of being unreasonable such that pretty much all rational people would give them up upon sufficient ideal reflection and discussion with people who know what they're talking about.

↑ comment by metachirality · 2023-09-17T20:38:47.529Z · LW(p) · GW(p)

My claim is that there are not yet people who know what they are talking about, or more precisely, everyone knows roughly as much about what they are talking about as everyone else.

Again, I'd like to know who these decision theorists you talked to were, or at least what their arguments were.

The most important thing here is how you are evaluating the field of decision theory as a whole, how you are evaluating who counts as an expert or not, and what arguments they make, in enough detail that one can conclude that FDT doesn't work without having to rely on your word.

↑ comment by omnizoid · 2023-09-18T03:12:26.571Z · LW(p) · GW(p)

If you look at philosophers with Ph.Ds who study decision theory for a living, and have a huge incentive to produce original work, none of them endorse FDT.

↑ comment by metachirality · 2023-09-18T05:29:56.567Z · LW(p) · GW(p)

I don't think the specific part of decision theory where people argue over Newcomb's problem is large enough as a field to be subject to the EMH. I don't think the incentives are awfully huge either. I'd compare it to ordinal analysis, a field which does have PhDs but very few experts in general and not many strong incentives. One significant recent result (if the proof works then the ordinal notation in question would be most powerful proven well-founded) was done entirely by an amateur building off of work by other amateurs (see the section on Bashicu Matrix System): https://cp4space.hatsya.com/2023/07/23/miscellaneous-discoveries/

↑ comment by omnizoid · 2023-09-17T20:43:23.508Z · LW(p) · GW(p)

I mean like, I can give you some names. My friend Ethan who's getting a Ph.D was one person. Schwarz knows a lot about decision theory and finds the view crazy--MacAskill doesn't like it either.

↑ comment by metachirality · 2023-09-17T20:58:25.591Z · LW(p) · GW(p)

Is there anything about those cases that suggest it should generalize to every decision theorist, or that this is as good a proxy for how much FDT works as the beliefs of earth scientists are for whether the Earth is flat or not?

For instance, your samples consist of a philosopher not specialized in decision theory, one unaccountable PhD, and one single person who is both accountable and specializes in decision theory. Somehow, I feel as if there is a difference between generalizing from that and generalizing from every credentialed expert that one could possibly contact. In any case, it's dubious to generalize from that to "every decision theorist would reject FDT in the same way every earth scientist would reject flat earth", even if we condition on you being totally honest here and having fairly represented FDT to your friend.

I think everyone here would bet $1,000 that if every earth scientist knew about flat earth, they would nearly universally dismiss it (in contrast to debating over it or universally accepting it) without hesitation. However, I would be surprised if you would bet $1,000 that if every decision theorist knew about FDT, they would nearly universally dismiss it.

↑ comment by omnizoid · 2023-09-18T00:52:40.169Z · LW(p) · GW(p)

What's your explanation of why virtually no published papers defend it and no published decision theorists defend it? You really think none of them have thought of it or anything in the vicinity?

↑ comment by metachirality · 2023-09-18T05:10:32.988Z · LW(p) · GW(p)

Yes. Well, almost. Schwarz brings up disposition-based decision theory, which appears similar though might not be identical to FDT, and every paper I've seen on it appears to defend it as an alternative to CDT. There are some looser predecessors to FDT as well, such as Hofstadter's superrationality, but that's too different imo.

Given Schwarz's lack of reference to any paper describing any decision theory even resembling FDT, I'd wager that FDT is obvious only in retrospect.

comment by metachirality · 2023-09-17T04:56:11.931Z · LW(p) · GW(p)

(Epistemic status: Plausible position that I don't actually believe in.) The correct answer to the leg-cutting dilemma is that you shouldn't cut it, because actually you will end up existing no matter what because Omega has to simulate you to predict your actions, and it's always possible that you're in the simulation. The fact that you always have to be simulated to be predicted makes up for every apparent decision theory paradox, such as not cutting your leg off even when doing so precludes your existence.
