CFAR: Progress Report & Future Plans

post by Adam Scholl (adam_scholl) · 2019-12-19T06:19:58.948Z · LW · GW · 5 comments

Context: This is the first in a series of year-end updates from CFAR. This post mainly describes what CFAR did over the past two years, and what it plans to do next year; other posts in the series will describe more about its mission, financials, and impact.

(12/29/19: Edited to mention CFAR's mistakes regarding Brent, and our plans to publicly release our handbook).

Progress in 2018 and 2019

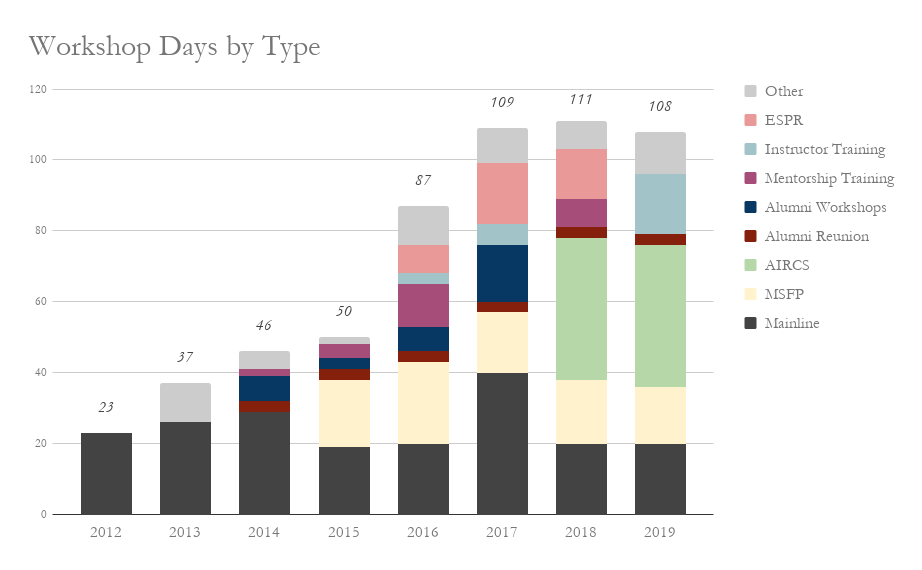

For the last three years, CFAR held its total number of workshop-days[1] roughly constant:

But the distribution of workshops has changed considerably. Most notably, in 2018 we started co-running AIRCS with MIRI, and since then we’ve been allocating roughly ⅓ of our staff time[2] to this project.[3]

AIRCS

AI Risk for Computer Scientists (AIRCS) workshops are designed to recruit and train programmers for roles doing technical alignment research. The program name isn’t perfect: while most attendees are programmers, it also accepts mathematicians, physicists, and others with significant technical skill.

We co-run these workshops with MIRI—the staff is mostly a mix of current and former CFAR and MIRI staff, and the curriculum is roughly half rationality material and half object-level content about the alignment problem. So far, MIRI has hired six AIRCS graduates (more are in the pipeline). While these workshops are designed primarily to benefit MIRI’s research programs, the curriculum includes a variety of other perspectives (e.g. Paul Christiano’s IDA), and AIRCS graduates have also subsequently accepted positions at other alignment orgs (e.g. FHI, Ought, and the OpenAI alignment team).

We’re excited about these workshops. So far, 12 AIRCS graduates have subsequently taken jobs or internships doing technical safety research,[4] and from what we can tell, AIRCS played a non-trivial causal role[5] in 9 of these outcomes. AIRCS also seems to help participants learn to reason better about the alignment problem, and to give MIRI greater familiarity with candidates (thus hopefully improving the average quality of hires).

Overall, we’ve been impressed by the results of this program so far. We’re also excited about the curricular development that’s occurred at AIRCS, parts of which have been making their way into our curriculum for other programs.

European Workshops

Another notable strategic change over this period was that we decided to start running many more workshops in Europe. In total, 23% of the workshop days CFAR ran or co-ran in 2018 and 2019 occurred in Europe. These programs included three mainlines, a mentorship training, one leg of our instructor training, a workshop at FHI featuring members of their new Research Scholars Program, and ESPR 2018.[6]

Aside from ESPR and the FHI workshop, all of these programs were held in the Czech Republic. This is partly because we’re excited about the Czech rationalist/EA scene, and partly because the Czech Association for Effective Altruism does a fantastic job of helping us with ops support, making it logistically easier for us to run workshops abroad.

In 2019, we spun off ESPR (the European Summer Program on Rationality) into a new independent organization run by Jan Kulveit, Senior Research Scholar in FHI’s Research Scholars Program and one of the initial organizers of the Czech rationality/EA community. We sent Elizabeth Garrett to help with the transition, but Jan organized and ran the program independently, and from what we can tell the program went splendidly.

Instructor Training

We ran our second-ever instructor training program this year, led by Eli Tyre and Brienne Yudkowsky. This program seems to me to have gone well. I think this is probably partly because the quality of the cohort was high, and partly because Eli and Brienne’s curriculum seemed to manage (to a surprising-to-all-of-us degree) to achieve its aim of helping people do generative, original seeing-type thinking and research. Going forward, we’ll be grateful and proud to have members of this cohort as guest instructors.

Internal Improvements

CFAR’s internal processes and culture seem to me to have improved markedly over the past two years. We made a number of administrative changes—e.g. we improved our systems for ops, budgets, finances, and internal coordination, and acquired a permanent venue, which reduces the financial cost and the time cost of running workshops. And after spending significant time investigating our mistakes with regard to Brent, we reformed our hiring, admissions and conduct policies, to reduce the likelihood such mistakes reoccur.

It also seems to me that our internal culture and morale improved over this time. Compared with two years ago, my impression is that our staff trust and respect each other more—that there’s more camaraderie, and more sense of shared strategic orientation.

Plans for 2020

From the outside, I expect CFAR’s strategy will look roughly the same next year as it has for the past two years. We plan to run a similar distribution of workshops, to keep recruiting and training technical alignment researchers (especially for/with MIRI, although we do try to help other orgs with recruiting too, where possible), and to continue propagating epistemic norms intended to help people reason more sanely about existential risk—to develop the skills required to notice, rather than ignore, crucial considerations; to avoid taking rash actions with large effect but unclear sign; to model and describe even subtle intuitions with rigor.

So far, I think this strategy has proved impactful. I’m proud of what our team has accomplished, both in terms of measurable impact on the AI safety landscape (e.g. counterfactual additional researchers), and in terms of more difficult to measure effects on the surrounding culture (e.g. the propagation of the term “crux”).

That said, I think CFAR still struggles with some pretty basic stuff. For example:

- Historically, I think CFAR has been really quite bad at explaining its goals, strategy, and mechanism of impact, not just to funders and to EA at large, but even to each other. I regularly encounter people who, even after extensive interaction with CFAR, have seriously mistaken impressions about what CFAR is trying to achieve. I think this situation is partly due to our impact model being somewhat unusually difficult to describe (especially the non-career-change-related parts), but mostly due to us having done a poor job of communicating.[7]

- Our staff burn out regularly. Working at CFAR is intense: during workshops, staff spend nearly all their waking hours working, and they typically spend about ⅓ of their workdays at workshops. Between workshops and traveling, it can be hard to e.g. spend enough time with loved ones. And since many-person events are by nature, I think, somewhat unpredictable, our staff semi-regularly have to stay up late into the night fixing some sudden problem or another. Of the 13 long-term employees who have left CFAR to date, 5 reported leaving at least in substantial part due to burnout. Even aside from concerns about burnout, our current workshop load is sufficiently high that our staff have little time left to learn, improve, and become better at their jobs. We’re exploring a variety of strategies intended to mitigate these problems. For example, we’re considering working in three 3-month sprints next year, and collectively taking one-month sabbaticals between sprints to learn, work on personal projects, and recharge.

- We have limited ability to track the effects of our workshops. Sometimes the effects are obvious, like when people tell us they changed careers because of a workshop; other times we have strong reason to suspect an effect exists (e.g. many participants reporting that somehow, the workshop caused them to feel comfortable seriously considering working on alignment), but we’re sufficiently unclear about the details of the mechanism of impact that it’s difficult to know how exactly to improve. And even in the clearest-cut cases, it’s hard to confidently estimate counterfactuals: neither we nor the participants, I think, have a great ability to predict what would have occurred instead in a world where they’d never encountered CFAR.

In 2020, we plan to devote significant effort toward trying to mitigate these and similar problems. Assuming we can find a suitable candidate, one step we hope to take is hiring someone to work alongside Dan Keys to help us gather and analyze more data. We’re also forming an outside advisory board. This board won’t have formal decision-making power, but it will have a mandate to review (and publish public reports on) our progress. This board is an experiment, which by default will last one year (although we may disband it sooner if it turns out to cost too much time, or to incentivize us to Goodhart, etc). But my guess is that both their advice, and the expectation that we’ll regularly explain our strategy and decisions to them, will cause CFAR to become more strategically oriented (and hopefully more legibly so!) over the coming year.

(We're also planning to publicly release our workshop handbook, which we've previously only given to alumni. Our reservations about distributing it more widely have faded over time, many people have asked us for a public version, and we’ve become increasingly interested in getting more public criticism and engagement with our curriculum. So we decided to run the experiment.)

We’re hiring, and fundraising!

From what I can tell, CFAR’s impact so far has been high. For example, of the 66 technical alignment researchers at MIRI, CHAI, FHI, and the DeepMind and OpenAI alignment teams[8], 38 have attended a workshop of some sort, and workshops seem to have had some non-trivial causal role in at least 15 of these careers.

I also suspect CFAR has other forms of impact—for example, that our curriculum helps people reason more effectively about existential risk, that AIRCS and MSFP give a kind of technical education into alignment research that is both valuable and still relatively rare, and that the community-building effects of our programs probably cause useful social connections to form that we wouldn’t generally hear about. Our evidence on these points is less legible, but personally, my guess would be that non-career-change-type impacts like these account for something like half of CFAR’s total impact.

While our workshops have, in expectation, helped cause additional research hires to other technical safety orgs, and one might reasonably be enthusiastic about CFAR for reasons aside from the career changes it’s helped cause, I do think our impact has disproportionately benefited MIRI’s research programs. So if you think MIRI’s work is low-value, I think it makes sense to decrease your estimate of CFAR’s impact accordingly. But especially if MIRI research is one of the things you’d like to see continue to grow, I think CFAR represents one of the best available bets for turning dollars (and staff time) into existential risk reduction.

CFAR could benefit from additional money (and staff) to an unusual degree this year. We are not in urgently dire straits in either regard—we have about 12 months of runway, accounting for pending grant payments, and our current staff size is sufficient to continue running lots of workshops. But our staff size is considerably smaller than it was in 2017 and 2018, and we expect that we could fairly easily turn additional staff into additional workshop output. Also, our long-term sources of institutional funding are uncertain, which is making it harder than usual for us to make long-term plans and commitments, and increases the portion of time we spend trying to raise funds relative to running/improving workshops. So I expect CFAR would benefit from additional dollars and staff somewhat more than in recent years. If you’d like to support us, donate here—and if you’re interested in applying for a job, reach out!

[1] This total includes both workshops we co-run (like AIRCS and MSFP) and workshops we run ourselves (like mainlines and mentorship workshops).

[2] CFAR’s allocation of staff time by goal roughly approximates its distribution of workshops by type, since most of its non-workshop staff time is spent pursuing goals instrumental to running workshops (preparing/designing classes, doing admissions, etc).

[3] Together with MSFP, this means about 50% of our workshop output over the past two years has been focused specifically on recruiting and training people for roles in technical safety research.

[4] Of these, three eventually left these positions (two were internships, one was a full-time job) and are not currently employed as alignment researchers, although we expect some may take similar positions again in the future. We also expect additional existing AIRCS alumni may eventually find their way into alignment research; past programs of this sort have often had effects that were a couple years delayed.

[5] More details on these estimates coming soon in our 2019 Impact Data document.

[6] The Czech Association for Effective Altruism also hosted a European CFAR Alumni Reunion in 2018, attended by 83 people.

[7] I think our name probably makes this problem worse: people sometimes hear us as attempting to claim something like omni-domain competence, and/or assume that our mission is to make the population at large more rational in general. In fact, our curricular aims are much narrower: to propagate a particular set of epistemic norms among a particular set of people. We plan to explore ways of resolving this confusion next year, including by changing our name if necessary.

[8] “Technical alignment researchers” is a fuzzy category, and obviously includes people outside these five organizations; this group just struck me as a fairly natural, clearly-delineated category.

5 comments

comment by Vaniver · 2019-12-19T19:31:57.107Z · LW(p) · GW(p)

> Historically, I think CFAR has been really quite bad at explaining its goals, strategy, and mechanism of impact, not just to funders and to EA at large, but even to each other. I regularly encounter people who, even after extensive interaction with CFAR, have seriously mistaken impressions about what CFAR is trying to achieve. I think this situation is partly due to our impact model being somewhat unusually difficult to describe (especially the non-career-change-related parts), but mostly due to us having done a poor job of communicating.

When I wrote this comment on T-shaped organizations, CFAR was one of the main examples in my mind.

comment by romeostevensit · 2019-12-19T06:57:16.695Z · LW(p) · GW(p)

> Our staff burn out regularly.

It seems to me like one of the issues would be an underestimate of the number of ops people needed. Adding one marginal ops person to an event seems like an easy test? This might give bad data if the org is bad at hierarchy, thus increasing coordination costs as a function of something more like the number of edges in a complete graph instead of more like an n-ary tree with n like 4 or 5.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2019-12-19T07:22:31.755Z · LW(p) · GW(p)

This is a good guess on priors, but in my experience (Oct 2015 - Oct 2018, including taking over the role of a previous burnout, and also leaving fairly burnt), it has little to do with ops capacity or ops overload.

↑ comment by Gordon Seidoh Worley (gworley) · 2019-12-21T00:12:22.558Z · LW(p) · GW(p)

My experience suggests that "extra ops people" isn't likely a good guess on priors and your experience is far more typical; more often when this happens an organization has cultural problems that cannot be fixed by adding people, and that instead require careful organizational transformation to change the organization's culture such that frequent burnout is not the sort of thing that happens.

↑ comment by Timothy Telleen-Lawton (erratim) · 2019-12-19T20:51:23.173Z · LW(p) · GW(p)

+1 (I'm the Executive Director of CFAR)