We run the Center for Applied Rationality, AMA

post by AnnaSalamon · 2019-12-19T16:34:15.705Z · 324 comments


CFAR recently launched its 2019 fundraiser, and to coincide with that, we wanted to give folks a chance to ask us about our mission, plans, and strategy. Ask any questions you like; we’ll respond to as many as we can from 10am PST on 12/20 until 10am PST the following day (12/21).

Topics that may be interesting include (but are not limited to):

- Why we think there should be a CFAR;

- Whether we should change our name to be less general;

- How running mainline CFAR workshops does/doesn't relate to running "AI Risk for Computer Scientists" type workshops. Why we both do a lot of recruiting/education for AI alignment research and wouldn't be happy doing only that.

- How our curriculum has evolved. How it relates to and differs from the Less Wrong Sequences. Where we hope to go with our curriculum over the next year, and why.

Several CFAR staff members will be answering questions, including: me, Tim Telleen-Lawton, Adam Scholl, and probably various others who work at CFAR. However, we will try to answer with our own individual views (because individual speech is often more interesting than institutional speech, and certainly easier to do in a non-bureaucratic way on the fly), and we may give more than one answer to questions where our individual viewpoints differ from one another's!

(You might also want to check out our 2019 Progress Report and Future Plans. And we'll have some other posts out across the remainder of the fundraiser, from now til Jan 10.)

[Edit: We're out of time, and we've allocated most of the reply-energy we have for now, but some of us are likely to continue slowly dribbling out answers from now til Jan 2 or so (maybe especially to replies, but also to some of the q's that we didn't get to yet). Thanks to everyone who participated; I really appreciate it.]


comment by Ben Pace (Benito) · 2019-12-20T21:56:55.464Z

I feel like one of the most valuable things we have on LessWrong is a broad, shared epistemic framework, ideas with which we can take steps through concept-space together and reach important conclusions more efficiently than other intellectual spheres (e.g. ideas about decision theory, ideas about overcoming coordination problems, etc.). I believe all of the founding staff of CFAR had read the Sequences, were versed in things like what it means to ask where you got your bits of evidence from and that correctly updating on the evidence has a formal meaning, and had absorbed a model of Eliezer's law-based approach to reasoning about your mind and the world.

In recent years, when I've been at CFAR events, I generally feel like at least 25% of attendees probably haven't read The Sequences, aren't part of this shared epistemic framework, and don't have an understanding of that law-based approach, and that they don't have a felt need to cache out their models of the world into explicit reasoning and communicable models that others can build on. I also have felt this way increasingly about CFAR staff over the years (e.g. it's not clear to me whether all current CFAR staff have read The Sequences). And to be clear, I think if you don't have a shared epistemic framework, you often just can't talk to each other very well about things that aren't highly empirical, certainly not at the scale of more than like 10-20 people.

So I've been pretty confused about why Anna and other staff haven't seemed to think this is very important when designing the intellectual environment at CFAR events. I'm interested to know how you think about this.

I certainly think a lot of valuable introspection and modelling work still happens at CFAR events, I know I personally find it useful, and I think that e.g. CFAR has done a good job in stealing useful things from the circling people (I wrote about my positive experiences circling here). But my sense for a number of the attendees is that even if they keep introspecting and finding out valuable things about themselves, 5 years from now they will not have anything to add to our collective knowledge-base (e.g. by writing a LW sequence that LWers can understand and get value from), even to a LW audience who considers all Bayesian evidence admissible even if it's weird or unusual, because they were never trying to think in a way that could be communicated in that fashion. The Gwerns and the Wei Dais and the Scott Alexanders of the world won't have learned anything from CFAR's exploration.

As an example of this, Val (who was a cofounder but doesn't work at CFAR any more) seemed genuinely confused when Oli asked for third-party verifiable evidence for the success of Val's ideas about introspection. Oli explained that there was a lemons problem (i.e. information asymmetry) when Val claimed that a mental technique had changed his life radically, when all of the evidence he offered was of the kind "I feel so much better" and "my relationships have massively improved" and so on. (See Scott's Review of All Therapy Books for more of what I mean here, though I think this is a pretty standard idea.) He seemed genuinely confused why Oli was asking for third-party verifiable evidence, and seemed genuinely surprised that claims like "This last September, I experienced enlightenment. I mean to share this as a simple fact to set context" would be met with a straight "I don't believe you." This was really worrying to me, and it's always been surprising to me that this part of him fit naturally into CFAR's environment and that CFAR's natural antibodies weren't kicking against it hard.

To be clear, I think several of Val's posts in that sequence were pretty great (e.g. The Intelligent Social Web is up for the 2018 LW review, and you can see Jacob Falkovich's review on how the post changed his life), and I've personally had some very valuable experiences with Val at CFAR events, but I expect, had he continued in this vein at CFAR, that over time Val would just stop being able to communicate with LWers, and drift into his own closed epistemic bubble, and to a substantial degree pull CFAR with him. I feel similarly about many attendees at CFAR events, although fewer since Val left. I never talked to Pete Michaud very much, and while I think he seemed quite emotionally mature (I mean that sincerely) he seemed primarily interested in things to do with authentic relating and circling, and again I didn't get many signs that he understood why building explicit models or a communal record of insights and ideas was important, and because of this it was really weird to me that he was executive director for a few years.

To put it another way, I feel like CFAR has in some ways given up on the goals of science, and moved toward the goals of a private business, whereby you do some really valuable things yourself when you're around, and create a lot of value, but all the knowledge you gain about building a company, about your market, about markets in general, and more, isn't very communicable, and isn't passed on in the public record for other people to build on (e.g. compare how all scientists are in a race to be first to add their ideas to the public domain, whereas Apple primarily makes everyone sign NDAs and doesn't let out any information other than releasing their actual products, and I expect Apple will take most of their insights to the grave).

↑ comment by AnnaSalamon · 2019-12-21T11:14:44.867Z

This is my favorite question of the AMA so far (I said something similar aloud when I first read it, which was before it got upvoted quite this highly, as did a couple of other staff members). The things I personally appreciate about your question are: (1) it points near a core direction that CFAR has already been intending to try moving toward this year (and probably across near-subsequent years; one year will not be sufficient); and (2) I think you asking it publicly in this way (and giving us an opportunity to make this intention memorable and clear to ourselves, and to parts of the community that may help us remember) will help at least some with our moving there.

Relatedly, I like the way you lay out the concepts.

Your essay (I mean, “question”) is rather long, and has a lot of things in it; and my desired response sure also has a lot of things in it. So I’m going to let myself reply via many separate discrete small comments because that’s easier.

(So: many discrete small comments upcoming.)

↑ comment by AnnaSalamon · 2019-12-21T14:16:54.136Z

Ben Pace writes:

In recent years, when I've been at CFAR events, I generally feel like at least 25% of attendees probably haven't read The Sequences, aren't part of this shared epistemic framework, and don't have any understanding of that law-based approach, and that they don't have a felt need to cache out their models of the world into explicit reasoning and communicable models that others can build on.

The “many alumni haven't read the Sequences” part has actually been here since very near the beginning (not the initial 2012 minicamps, but the very first paid workshops of 2013 and later). (CFAR began in Jan 2012.) You can see it in our old end-of-2013 fundraiser post, where we wrote “Initial workshops worked only for those who had already read the LW Sequences. Today, workshop participants who are smart and analytical, but with no prior exposure to rationality -- such as a local politician, a police officer, a Spanish teacher, and others -- are by and large quite happy with the workshop and feel it is valuable.” We didn't name this explicitly in that post, but part of the hope was to get the workshops to work for a slightly larger/broader/more cognitively diverse set than the set for whom the original Sequences in their written form tended to spontaneously "click".

As to the “aren’t part of this shared epistemic framework” -- when I go to e.g. the alumni reunion, I do feel there are basic pieces of this framework at least that I can rely on. For example, even on contentious issues, 95%+ of alumni reunion participants seem to me to be pretty good at remembering that arguments should not be like soldiers, that beliefs are for true things, etc. -- there is to my eyes a very noticeable positive difference between the folks at the alumni reunion and unselected-for-rationality smart STEM graduate students, say (though STEM graduate students are also notably more skilled than the general population at this, and though both groups fall short of perfection).

Still, I agree that it would be worthwhile to build more common knowledge and [whatever the “values” analog of common knowledge is called] supporting “a felt need to cache out their models of the world into explicit reasoning and communicable models that others can build on” and that are piecewise-checkable (rather than opaque masses of skills that are useful as a mass but hard to build across people and time). This particular piece of culture is harder to teach to folks who are seeking individual utility, because the most obvious payoffs are at the level of the group and of the long-term process rather than at the level of the individual (where the payoffs to e.g. goal-factoring and murphyjitsu are located). It also pays off more in later-stage fields and less in the earliest stages of science within preparadigm fields such as AI safety, where it’s often about shower thoughts and slowly following inarticulate hunches. But still.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2019-12-21T09:45:07.272Z

that CFAR's natural antibodies weren't kicking against it hard.

Some of them were. This was a point of contention in internal culture discussions for quite a while.

(I am not currently a CFAR staff member, and cannot speak to any of the org's goals or development since roughly October 2018, but I can speak with authority about things that took place from October 2015 up until my departure at that time.)

↑ comment by AnnaSalamon · 2019-12-21T13:43:44.260Z

Agreed

↑ comment by Adam Scholl (adam_scholl) · 2019-12-22T04:26:03.622Z

Yeah, I predict that if one showed Val or Pete the line about fitting naturally into CFAR’s environment without triggering antibodies, they would laugh hard and despairingly. There was definitely friction.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-22T05:59:28.309Z

What did this friction lead to (what changes in CFAR’s output, etc.)?

↑ comment by AnnaSalamon · 2019-12-21T13:42:41.379Z

Ben Pace writes:

“... The Gwerns and the Wei Dais and the Scott Alexanders of the world won't have learned anything from CFAR's exploration.”

I’d like to distinguish two things:

1. Whether the official work activities CFAR staff are paid for will directly produce explicit knowledge in the manner valued by Gwern etc.

2. Whether that CFAR work will help educate people who later produce explicit knowledge themselves in the manner valued by Gwern etc., and who wouldn't have produced that knowledge otherwise.

#1 would be useful but isn’t our primary goal (though I think we’ve done more than none of it). #2 seems like a component of our primary goal to me (“scientists” or “producers of folks who can make knowledge in this sense” isn’t all we’re trying to produce, but it’s part of it), and is part of what I would like to see us strengthen over the coming years.

To briefly list our situation with respect to whether we are accomplishing #2 (according to me):

- There are in fact a good number of AI safety scientists in particular who seem to me to produce knowledge of this type, and who give CFAR some degree of credit for their present tendency to do this.

- On a milder level, while CFAR workshops do not themselves teach most of the Sequences’ skills (which would exceed four days in length, among other difficulties), we do try to nudge participants into reading the Sequences (by referencing them with respect at the workshop, by giving all mainline participants and AIRCS participants paper copies of “How to Actually Change Your Mind” and HPMOR, and by explicitly claiming they are helpful for various things).

- At the same time, I do think we should make Sequences-style thinking a more explicit element of the culture spread by CFAR workshops, and of the culture that folks can take for granted at e.g. alumni reunions (although it is there nevertheless to quite an extent).

(I edited this slightly to make it easier to read after Kaj had already quoted from it.)

↑ comment by Adam Scholl (adam_scholl) · 2019-12-22T11:26:23.819Z

I think a crisp summary here is: CFAR is in the business of helping create scientists, more than the business of doing science. Some of the things it makes sense to do to help create scientists look vaguely science-ish, but others don't. And this sometimes causes people to worry (understandably, I think) that CFAR isn't enthused about science, or doesn't understand its value.

But if you're looking to improve a given culture, one natural move is to explore that culture's blindspots. And I think exploring those blindspots is often not going to look like an activity typical of that culture.

An example: there's a particular bug I encounter extremely often at AIRCS workshops, but rarely at other workshops. I don't yet feel like I have a great model of it, but it has something to do with not fully understanding how words have referents at different levels of abstraction. It's the sort of confusion that I think reading A Human's Guide to Words often resolves in people, and which results in people asking questions like:

- "Should I replace [my core goal x] with [this list of "ethical" goals I recently heard about]?"

- "Why is the fact that I have a goal a good reason to optimize for it?"

- "Are propositions like 'x is good' or 'y is beautiful' even meaningful claims?"

When I encounter this bug I often point to a nearby tree, and start describing it at different levels of abstraction. The word "tree" refers to a bunch of different related things: to a member of an evolutionarily-related category of organisms, to the general sort of object humans tend to emit the phonemes "tree" to describe, to this particular mid-sized physical object here in front of us, to the particular arrangement of particles that composes the object, etc. And it's sensible to use the term "tree" anyway, as long as you're careful to track which level of abstraction you're referring to with a given proposition—i.e., as long as you're careful to be precise about exactly which map/territory correspondence you're asserting.

This is obvious to most science-minded people. But it's often less obvious that the same procedure, with the same carefulness, is needed to sensibly discuss concepts like "goal" and "good." Just as it doesn't make sense to discuss whether a given tree is "strong" without distinguishing between "in terms of its likelihood of falling over" or "in terms of its molecular bonds," it doesn't make sense to discuss whether a goal is "good" without distinguishing between e.g. "relative to societal consensus" or "relative to your current preferences" or "relative to the preferences you might come to have given more time to think."

This conversation often seems to help resolve the confusion. At some point, I may design a class about this, so that more such confusions can be resolved. But I expect that if I do, some of the engineers in the audience will get nervous, since it will look an awful lot like a philosophy class! (I already get this objection regularly one-on-one.) That is, I expect some may wonder whether the AIRCS staff, who claim to be running workshops for engineers, are actually more enthusiastic about philosophy than engineering.

We're not. Academic philosophy, at least, strikes me as an unusually unproductive field with generally poor epistemics. I don't want to turn the engineers into philosophers—I just want to use a particular helpful insight from philosophy to patch a bug which, for whatever reason, seems to commonly afflict AIRCS participants.

CFAR faces this dilemma a lot. For example, we spent a bunch of time circling for a while, and this made many rationalists nervous—was CFAR as an institution, which claimed to be running workshops for science-minded, sequences-reading, law-based-reasoning-enthused rationalists, actually more enthusiastic about woo-laden authentic relating games?

We weren't. But we looked around, and noticed that lots of the promising people around us seemed particularly bad at extrospection (i.e., at simulating the felt senses of their conversational partners in their own minds). This seemed worrying, among other reasons because early-stage research intuitions (e.g. about which lines of inquiry feel exciting to pursue) often seem to be stored sub-verbally. So we looked to specialists in extrospection for a patch.

↑ comment by Rob Bensinger (RobbBB) · 2019-12-22T15:14:00.209Z

I felt a "click" in my brain reading this comment, like an old "something feels off, but I'm not sure what" feeling about rationality techniques finally resolving itself.

If this comment were a post, and I were in the curating-posts business, I'd curate it. The demystified concrete examples of the mental motion "use a tool from an unsciencey field to help debug scientists" are super helpful.

↑ comment by Matt Goldenberg (mr-hire) · 2019-12-22T15:38:44.454Z

Just want to second that I think this comment is particularly important. There's a particular bug where I can get inoculated against a whole class of useful rationality interventions that don't match my smell for "rationality intervention", but the whole reason they're a blindspot in the first place is because of that smell... or something.

↑ comment by Rob Bensinger (RobbBB) · 2019-12-22T15:17:29.545Z

I feel like this comment should perhaps be an AIRCS class -- not on meta-ethics, but on 'how to think about what debugging your brain is, if your usual ontology is "some activities are object-level engineering, some activities are object-level science, and everything else is bullshit or recreation"'. (With meta-ethics addressed in passing as a concrete example.)

↑ comment by Ben Pace (Benito) · 2019-12-24T23:48:54.791Z

Thanks, that was really helpful. I continue to have a sense of disagreement that this is the right way to do things, so I’ll try to point to some of that. Unfortunately my comment here is not super focused, though I am just trying to say a single thing.

I recently wrote down a bunch of my thoughts about evaluating MIRI, and I realised that I think MIRI has gone through alternating phases of internal concentration and external explanation, in a way that feels quite healthy to me.

Here is what I said:

In the last 2-5 years I endorsed donating to MIRI (and still do), and my reasoning back then was always of the type "I don't understand their technical research, but I have read a substantial amount of the philosophy and worldview that was used to successfully pluck that problem out of the space of things to work on, and think it is deeply coherent and sensible and it's been surprisingly successful in figuring out AI is an x-risk, and I expect to find it is doing very sensible things in places I understand less well." Then, about a year ago, MIRI published the Embedded Agency sequence, and for the first time I thought "Oh, now I feel like I have an understanding of what the technical research is guided by, and what it's about, and indeed, this makes a lot of sense." My feelings have rarely been changed by reading ongoing research papers at MIRI, which were mostly just very confusing to me. They all seemed individually interesting, but I didn't see the broader picture before Embedded Agency.

I continued:

So, my current epistemic state is something like this: Eliezer and Benya and Patrick and others spent something like 4 MIRI-years hacking away at research, and I didn't get it. Finally Scott and Abram made some further progress on it, and crystalised it into an explanation I actually felt I sorta get. And most of the time I spent trying to understand their work in the meantime was wasted effort on my part, and quite plausibly wasted effort on their part. I remember that time they wrote up a ton of formal-looking papers for the Puerto Rico conference, to be ready in case a field suddenly sprang around them... but then nobody really read them or built on them. So I don't mind if, in the intervening 3-4 years, they again don't really try to explain what they're thinking about to me, until a bit of progress and good explanation comes along. They'll continue to write things about the background worldview, like Security Mindset, and Rocket Alignment, and Challenges to Christiano's Capability Amplification Proposal, and all of the beautiful posts that Scott and Abram write, but overall focus on getting a better understanding of the problem by themselves.

I think the output of this pattern is perhaps the primary way I evaluate whether I think MIRI is making progress.

I'll just think aloud a little bit more on this topic:

- Writing by CFAR staff, for example a lot of comments on this post by Adam, Anna, Luke, Brienne, and others, are one of the primary ways I update my model of how these people are thinking, and how much it feels interesting and promising to me. I’m not talking about “major conclusions” or “rigorously evidenced results”, but just stuff like the introspective data Luke uses when evaluating whether he’s making progress (I really liked that comment), or Dan saying what posts are top of his mind that he would write to LW. Adam’s comments about looking for blind spots and Anna’s comment about tacit/explicit are even more helpful, but the difference between their comments and Luke/Dan’s comments isn’t as large as between Luke/Dan’s comments and nothing.

- It’s not that I don’t update on in-person tacit skills and communication; of course that’s a major factor I use when thinking about who to trust and in what way. But especially as I’m thinking of groups of people, over many years, doing research, I’m increasingly interested in whether they’re able to make records of their thoughts that communicate well with each other: how much writing they do. This kind of more legible tracking of ideas and thought is pretty key to me. This is in part because I think I personally would have a very difficult time doing research without good, long-term, external working memory, and in part because of some of my models of the difficulty of coordinating groups.

- Adam’s answer above is both intrinsically interesting and very helpful for discussing this topic. But when I asked myself if it felt sufficient to me, I said no. It matters to me a lot whether I expect CFAR to try hard to do the sort of translational work into explicit knowledge at some point down the line, like MIRI has successfully done multiple times and explicitly intends to do in future. CFAR and CFAR alumni explore a lot of things that typically signal having lost contact with scientific materialism and standards for communal, public evidence like “enlightenment”, “chakras”, “enneagram”, and “circling”. I think walking out into the hinterlands and then returning with the gold that was out there is great, and I’ve found one of those five things quite personally valuable - on a list that was selected for being the least promising on surface appearances - and I have a pretty strong “Rule Thinkers In, Not Out” vibe around that. But if CFAR never comes back and creates the explicit models, then it’s increasingly looking like 5, 10, 20 years of doing stuff that looks (from the outside) similar to most others who have tried to understand their own minds, and who have largely lost a hold of reality. The important thing is that I can’t tell from the outside whether this stuff turned out to be true. This doesn’t mean that you can’t either (from the inside), but I do think there’s often a surprising pairing where accountability and checkability end up actually being one of the primary ways you find out for yourself whether what you’ve learned is actually true and real.

- Paul Graham has a line, where he says startups should “take on as much technical debt as they can, and no more”. He’s saying that “avoiding technical debt” is not a virtue you should aspire to, and that you should let that slide in service of quickly making a product that people love, while of course admitting that there is some boundary line that if you cross, is just going to stop your system from working. If I apply that idea to research and use Chris Olah’s term “research debt”, the line would be “you should take on as much research debt as possible, and no more”. I don’t think all of CFAR’s ideas should be explicit, or have rigorous data tracking, or have randomised controlled trials, or be cached out in the psychological literature (which is a mess), and it’s fine to spend many years going down paths that you can’t feasibly justify to others. But, if you’re doing research, trying to take difficult steps in reasoning, I think you need to come back at some point and make a thing that others can build on. I don’t know how many years you can keep going without coming back, but I'd guess like 5 is probably as long as you want to start with.

- I guess I should’ve said this earlier, but there are also few things as exciting for me as when CFAR staff write their ideas about rationality into posts. I love reading Brienne’s stuff and Anna’s stuff, and I liked a number of Duncan’s things (Double Crux, Buckets and Bayes, etc). (It’s plausible this is more of my motivation than I let on, although I stand by everything I said above as true.) I think many other LessWrongers are also very excited about such writing. I assign some probability that this itself is a big enough reason for CFAR to want to write more (growth and excitement have many healthy benefits for communities).

- As part of CFAR’s work to research and develop an art of rationality (as I understand it, CFAR staff think of part of the work as being research, e.g. Brienne’s comment below), if it were an explicit goal to translate many key insights into explicit knowledge, then I would feel far safer and more confident about the many parts that seem, on first and second checking, clearly wrong. If it isn’t, then I feel much more ‘all at sea’.

- I’m aware that there are more ways of providing your thinking with good feedback loops than “explaining your ideas to the people who read LW”. You can find other audiences. You can have smaller groups of people you trust and to whom you explain the ideas. You can have testable outcomes. You can just be a good enough philosopher to set your own course through reality and not look to others for whether it makes sense to them. But from my perspective, without blogposts like those Anna and Brienne have written in the past, I personally am having a hard time telling whether a number of CFAR’s focuses are on the right track.

I think I’ll say something similar to what Eliezer said at the bottom of his public critique of Paul's work, and mention that from my epistemic vantage point, even given my disagreements, I think CFAR has had and continues to have surprisingly massive positive effects on the direction and agency of me and others trying to reduce x-risk, and I think that they should definitely be funded to do all the stuff they do.

↑ comment by Adam Scholl (adam_scholl) · 2019-12-27T07:44:14.153Z

Ben, to check before I respond: would a fair summary of your position be, "CFAR should write more in public, e.g. on LessWrong, so that A) it can have better feedback loops, and B) more people can benefit from its ideas"?

↑ comment by Adam Scholl (adam_scholl) · 2019-12-22T11:26:41.995Z

(To be clear, the above is an account of why I personally feel excited about CFAR having investigated circling. I think this also reasonably describes the motivations of many staff, and of CFAR's behavior as an institution. But CFAR struggles with communicating research intuitions, too; I think in this case these intuitions did not propagate fully among our staff, and that as a result we did employ a few people for a while whose primary interest in circling seemed to me to be more like "for its own sake," and who sometimes discussed it in ways which felt epistemically unhealthy to me. I think people correctly picked up on this as worrying, and I don't want to suggest that didn't happen; just that there is, I think, a sensible reason why CFAR as an institution tends to investigate local blindspots by searching for non-locals with a patch, thereby alarming locals about our epistemic allegiance.)

↑ comment by Wei Dai (Wei_Dai) · 2019-12-22T22:57:25.391Z

Philosophy strikes me as, on the whole, an unusually unproductive field full of people with highly questionable epistemics.

This is kind of tangential, but I wrote Some Thoughts on Metaphilosophy [LW · GW] in part to explain why we shouldn't expect philosophy to be as productive as other fields. I do think it can probably be made more productive, by improving people's epistemics, their incentives for working on the most important problems, etc., but the same can be said for lots of other fields.

I certainly don’t want to turn the engineers into philosophers

Not sure if you're saying that you personally don't have an interest in doing this, or that it's a bad idea in general, but if the latter, see Counterintuitive Comparative Advantage [LW · GW].

↑ comment by Adam Scholl (adam_scholl) · 2019-12-23T00:55:30.511Z · LW(p) · GW(p)

I have an interest in making certain parts of philosophy more productive, and in helping some alignment engineers gain some specific philosophical skills. I just meant I'm not in general excited about making the average AIRCS participant's epistemics more like that of the average professional philosopher.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-22T14:20:09.913Z · LW(p) · GW(p)

I was preparing to write a reply to the effect of “this is the most useful comment about what CFAR is doing and why that’s been posted on this thread yet” (it might still be, even)—but then I got to the part where your explanation takes a very odd sort of leap.

But we looked around, and noticed that lots of the promising people around us seemed particularly bad at extrospection—i.e., at simulating the felt senses of their conversational partners in their own minds.

It’s entirely unclear to me what this means, or why it is necessary / desirable. (Also, it seems like you’re using the term ‘extrospection’ in a quite unusual way; a quick search turns up no hits for anything like the definition you just gave. What’s up with that?)

This seemed worrying, among other reasons because early-stage research intuitions (e.g. about which lines of inquiry feel exciting to pursue) often seem to be stored sub-verbally.

There… seems to be quite a substantial line of reasoning hidden here, but I can’t guess what it is. Could you elaborate?

So we looked to specialists in extrospection for a patch.

Is there some reason to consider the folks who purvey (as you say) “woo-laden authentic relating games” to be ‘specialists’ here? What are some examples of their output, that is relevant to … research intuitions? (Or anything related?)

In short: I’m an engineer (my background is in computer science), and I’ve also studied philosophy. It’s clear enough to me why certain things that look like ‘philosophy’ can be, and are, useful in practice (despite agreeing with you that philosophy as a whole is, indeed, “an unusually unproductive field”). And we do, after all, have the Sequences, which is certainly philosophy if it’s anything (whatever else it may also be).

But I don’t at all see what the case for the usefulness of ‘circling’ and similar woo might be. Your comment makes me more worried, not less, on the whole; no doubt I am not alone. Perhaps elaborating a bit on my questions above might help shed some light on these matters.

↑ comment by Eli Tyre (elityre) · 2019-12-24T23:05:34.332Z · LW(p) · GW(p)

Is there some reason to consider the folks who purvey (as you say) “woo-laden authentic relating games” to be ‘specialists’ here? What are some examples of their output, that is relevant to … research intuitions? (Or anything related?)

I'm speaking for myself here, not any institutional view at CFAR.

When I'm looking at maybe-experts, woo-y or otherwise, one of the main things that I'm looking at is the nature and quality of their feedback loops.

When I think about how, in principle, one would train good intuitions about what other people are feeling at any given moment, I reason "well, I would need to be able to make predictions about that, and get immediate, reliable feedback about if my predictions are correct." This doesn't seem that far off from what Circling is. (For instance, "I have a story that you're feeling defensive" -> "I don't feel defensive, so much as righteous. And...There's a flowering of heat in my belly.")

Circling does not seem like a perfect training regime, to my naive sensors, but if I imagine a person engaging in Circling for 5000 hours, or more, it seems pretty plausible that they would get increasingly skilled along a particular axis.

This makes it seem worthwhile training with masters in that domain, to see what skills they bring to bear. And I might find out that some parts of the practice which seemed off the mark from my naive projection of how I would design a training environment, are actually features, not bugs.

This is in contrast to say, "energy healing". Most forms of energy healing do not have the kind of feedback loop that would lead to a person acquiring skill along a particular axis, and so I would expect them to be "pure woo."

For that matter, I think a lot of "Authentic Relating" seems like a much worse training regime than Circling, for a number of reasons, including that AR (ironically) seems to incentivize people, more often than Circling does, to share warm and nice-sounding but less-than-true sentiments.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-25T01:00:19.152Z · LW(p) · GW(p)

When I think about how, in principle, one would train good intuitions about what other people are feeling at any given moment, I reason “well, I would need to be able to make predictions about that, and get immediate, reliable feedback about if my predictions are correct.” This doesn’t seem that far off from what Circling is. (For instance, “I have a story that you’re feeling defensive” → “I don’t feel defensive, so much as righteous. And...There’s a flowering of heat in my belly.”)

Why would you expect this feedback to be reliable…? It seems to me that the opposite would be the case.

(This is aside from the fact that even if the feedback were reliable, the most you could expect to be training is your ability to determine what someone is feeling in the specific context of a Circling, or Circling-esque, exercise. I would not expect that this ability—even were it trainable in such a manner—would transfer to other situations.)

Finally, and speaking of feedback loops, note that my question had two parts—and the second part (asking for relevant examples of these purported experts’ output) is one which you did not address. Relatedly, you said:

This makes it seem worthwhile training with masters in that domain, to see what skills they bring to bear.

But are they masters?

Note the structure of your argument (which structure I have seen repeated quite a few times, in discussions of this and related topics, including in other sub-threads on this post). It goes like this:

1. There is a process P which purports to output X.

2. On the basis of various considerations, I expect that process P does indeed output X, and indeed that process P is very good at outputting X.

3. …

4. I now conclude that process P does output X, and does so quite well.

5. Having thus concluded, I will now adopt process P (since I want X).

But there’s a step missing, you see. Step 3 should be:

3. Let me actually check to see whether process P in fact does output X (and how well it does so).

So, in this case, you have marshaled certain considerations—

When I think about how, in principle, one would train good intuitions … I reason … if I imagine a person engaging in Circling for 5000 hours, or more, it seems pretty plausible that …

—and on the basis of this thinking, reasoning, imagining, and seeming, have concluded, apparently, that people who’ve done a lot of Circling are “masters” in the domain of having “good intuitions about what other people are feeling at any given moment”.

But… are they? Have you checked?

Where is the evidence?

↑ comment by Eli Tyre (elityre) · 2019-12-25T03:12:20.807Z · LW(p) · GW(p)

I'm going to make a general point first, and then respond to some of your specific objections.

General point:

One of the things that I do, and that CFAR does, is trawl through the existing bodies of knowledge (or purported existing bodies of knowledge), that are relevant to problems that we care about.

But there's a lot of that in the world, and most of it is not very reliable. My response was only meant to point at a heuristic that I use in assessing those bodies of knowledge, and in weighing which ones to prioritize and engage with further. I agree that this heuristic on its own is insufficient for certifying a tradition or a body of knowledge as correct, or reliable, or anything.

And yes, you need to do further evaluation work before adopting a procedure. In general, I would recommend against adopting a new procedure as a habit, unless it is concretely and obviously providing value. (There are obviously some exceptions to this general rule.)

Specific points:

Why would you expect this feedback to be reliable…? It seems to me that the opposite would be the case.

On the face of it, I wouldn't assume that it is reliable, but a priori I don't have a strong reason to assume that it isn't.

A posteriori, my experience being in Circles is that there is sometimes an incentive to obscure what's happening for you in a circle, but that, at least with skilled facilitation, there is usually enough trust in the process that this doesn't happen. This is helped by the fact that there are many degrees of freedom in one's response: I might say, "I don't want to share what's happening for me" or "I notice that I don't want to engage with that."

I could be typical minding, but I don't expect most people to lie outright in this context.

(This is aside from the fact that even if the feedback were reliable, the most you could expect to be training is your ability to determine what someone is feeling in the specific context of a Circling, or Circling-esque, exercise. I would not expect that this ability—even were it trainable in such a manner—would transfer to other situations.)

That seems like a reasonable hypothesis.

I'm not sure it's a crux, insofar as if something works well in circling, you can intentionally import the circling context. That is, if you find that you can in fact transfer intuitions, process fears, track what's motivating a person, etc., effectively in the circling context, an obvious next step might be to try to do this on topics that you care about, in the circling context, e.g. Circles on X-risk.

In practice it seems to be a little bit of both: I've observed people build skills in circling that they apply in other contexts, and also their other contexts do become more circling-y.

Finally, and speaking of feedback loops, note that my question had two parts—and the second part (asking for relevant examples of these purported experts’ output) is one which you did not address.

Sorry, I wasn't really trying to give a full response to your question, just dropping in with a little "here's how I do things."

You're referring to this question?

What are some examples of their output, that is relevant to … research intuitions? (Or anything related?)

I expect there's some talking past each other going on, because this question seems surprising to me.

Um. I don't think there are examples of their output with regard to research or research intuitions. The Circlers aren't trying to do that, even a little. They're a funny subculture that engages a lot with an interpersonal practice, with the goals of fuller understanding of self and deeper connections with others (roughly; I'm not sure that they would agree that those are the goals).

But they do pass some of my heuristic checks for "something interesting might be happening here." So I might go investigate and see what skill there is over there, and how I might be able to re-purpose that skill for other goals that I care about.

Sort of like (I don't know) if I was a biologist in an alternative world, and I had an inkling that I could do population simulations on a computer, but I don't know anything about computers. So I go look around and I see who does seem to know about computers. And I find a bunch of hobbyists who are playing with circuits and making very simple video games, and have never had a thought about biology in their lives. I might hang out with these hobbyists and learn about circuits and making simple computer games, so that I can learn skills for making population simulations.

This analogy doesn't quite hold up, because it's easier to verify that the hobbyists are actually successfully making computer games, and to verify that their understanding of circuits reflects standard physics. The case of the Circlers is less clear-cut, because it is less obvious that they are doing anything real, and because their own models of what they are doing and how are a lot less grounded.

But I think the basic relationship holds up, noting that figuring out which groups of hobbyists are doing real things is much trickier.

Maybe to say it clearly: I don't think it is obvious, or a slam dunk, or definitely the case (and if you don't think so then you must be stupid or misinformed) that "Circling is doing something real." But also, I have heuristics that suggest that Circling is more interesting than a lot of woo.

In terms of evidence that makes me think Circling is interesting (which, again, I don't expect to be compelling to everyone):

- Having decent feedback loops.

- Social evidence: A lot of people around me, including Anna, think it is really good.

- Something like "universality". (This is hand-wavy) Circling is about "what's true", and has enough reach to express or to absorb any way of being or any way the world might be. This is in contrast to many forms of woo, which have an ideology baked into them that reject ways the world could be a priori, for instance that "everything happens for a reason". (This is not to say that Circling doesn't have an ideology, or a metaphysics, but it is capable of holding more than just that ideology.)

- Circling is concerned with truth, and getting to the truth. It doesn't reject what's actually happening in favor of a nicer story.

- I can point to places where some people seem much more socially skilled, in ways that relate to circling skill.

- Pete is supposedly good at detecting lying.

- The thing I said about picking out people who "seemed to be doing something", and turned out to be circlers.

- Somehow people do seem to cut past their own bullshit in circles, in a way that seems relevant to human rationality.

- I've personally had some (few) meaningful realizations in Circles

I think all of the above are much weaker evidence than...

- "I did x procedure, and got y, large, externally verifiable result",

or even,

- "I did v procedure, and got u, specific, good (but hard to verify externally) result."

These days, I generally tend to stick to doing things that are concretely and fairly obviously (if only to me) having good immediate effects. If there aren't pretty immediate, obvious effects, then I won't bother much with it. And I don't think circling passes that bar (for me at least). But I do think there are plenty of reasons to be interested in circling, for someone who isn't following that heuristic strongly.

I also want to say, while I'm giving a sort-of-defense of being interested in circling, that I'm, personally, only a little interested.

I've done ~1000 hours of Circling retreats, for personal reasons rather than research reasons (though admittedly the two are often entangled). I think I learned a few skills, which I could have learned faster if I had known what I was aiming for. My ability to connect / be present with (some) others improved a lot. I think I also damaged something psychologically, which took 6 months to repair.

Overall, I concluded it was fine, but I would have done better to train more specific and goal-directed skills like NVC. Personally, I'm more interested in other topics, and other sources of knowledge.


↑ comment by Eli Tyre (elityre) · 2019-12-25T03:26:36.702Z · LW(p) · GW(p)

Some sampling of things that I'm currently investigating / interested in (mostly not for CFAR), and sources that I'm using:

- Power and propaganda
  - reading the Dictator's Handbook and some of the authors' other work
  - reading Kissinger's books
  - rereading Samo's draft
  - some "evil literature" (an example of which is "things Brent wrote")
  - thinking and writing

- Disagreement resolution and conversational mediation
  - I'm currently looking into some NVC materials
  - lots and lots of experimentation and iteration

- Focusing, articulation, and aversion processing
  - Mostly iteration with lots of notes.
  - Things like PJ EBY's excellent ebook.
  - Reading other materials from the Focusing Institute, etc.

- Ego and what to do about it
  - Byron Katie's The Work (I'm familiar with this from years ago; it has an epistemic core (one key question is "Is this true?"), and PJ EBY mentioned using this process with clients.)
  - I might check out Eckhart Tolle's work again (which I read as a teenager)

- Learning
  - Mostly iteration as I learn things on the object level, right now, but I've read a lot on deliberate practice and study methodology, and have learned general learning methods from mentors in the past.
  - Talking with Brienne.
  - Part of this project will probably include a lit review on spacing effects and consolidation.

- General rationality and stuff:
  - reading Artificial Intelligence: A Modern Approach
  - reading David Deutsch's The Beginning of Infinity
  - rereading IQ and Human Intelligence
  - The Act of Creation
  - Old Michael Vassar talks on YouTube
  - Thinking about the different kinds of knowledge creation, and how rigorous argument (mathematical proof, engineering schematics) works.

I mostly read a lot of stuff, without a strong expectation that it will be right.

↑ comment by Howie Lempel (howie-lempel) · 2019-12-25T16:16:38.196Z · LW(p) · GW(p)

Thanks for writing this up. Added a few things to my reading list and generally just found it inspiring.

Things like PJ EBY's excellent ebook.

FYI - this link goes to an empty shopping cart. Which of his books did you mean to refer to?

The best links I could find quickly were:

↑ comment by Eli Tyre (elityre) · 2019-12-26T00:56:49.548Z · LW(p) · GW(p)

↑ comment by Howie Lempel (howie-lempel) · 2019-12-25T16:06:19.471Z · LW(p) · GW(p)

I think I also damaged something psychologically, which took 6 months to repair.

I've been pretty curious about the extent to which circling has harmful side effects for some people. If you felt like sharing what this was, the mechanism that caused it, and/or how it could be avoided I'd be interested.

I expect, though, that this is too sensitive/personal so please feel free to ignore.

↑ comment by Eli Tyre (elityre) · 2019-12-26T00:58:24.359Z · LW(p) · GW(p)

It's not sensitive so much as context-heavy, and I don't think I can easily go into it in brief. I do think it would be good if we had a better way to propagate different people's experiences of things like Circling.

↑ comment by Eli Tyre (elityre) · 2019-12-24T23:12:52.590Z · LW(p) · GW(p)

Oh, and as a side note, I have twice in my life had a short introductory conversation with a person, noticed that something unusual or interesting was happening (without having any idea what), and then found out subsequently that the person I was talking with had done a lot of circling.

The first person was Pete, who I had a conversation with shortly after EAG 2015, before he came to work for CFAR. The other was an HR person at a tech company that I was cajoled into interviewing at, despite not really having any relevant skills.

I would be hard-pressed to say exactly what was interesting about those conversations: something like "the way they were asking questions was...something. Probing? Intentional? Alive?" Those words really don't capture it, but whatever was happening, I had a detector that pinged "something about this situation is unusual."

↑ comment by Eli Tyre (elityre) · 2020-12-12T07:44:27.455Z · LW(p) · GW(p)

Coming back to this, I think I would describe it as "they seemed like they were actually paying attention", which was so unusual as to be noteworthy.

↑ comment by Adam Scholl (adam_scholl) · 2019-12-23T00:29:50.263Z · LW(p) · GW(p)

Said, I appreciate your point that I used the term "extrospection" in a non-standard way; I think you're right. The way I've heard it used, which is probably idiosyncratic local jargon, is to reference the theory of mind analog of introspection: "feeling, yourself, something of what the person you're talking with is feeling." You obviously can't do this perfectly, but I think many people find that e.g. it's easier to gain information about why someone is sad, and about how it feels for them to be currently experiencing this sadness, if you use empathy/theory of mind/the thing I think people are often gesturing at when they talk about "mirror neurons," to try to emulate their sadness in your own brain. To feel a bit of it, albeit an imperfect approximation of it, yourself.

Similarly, I think it's often easier for one to gain information about why e.g. someone feels excited about pursuing a particular line of inquiry, if one tries to emulate their excitement in one's own brain. Personally, I've found this empathy/emulation skill quite helpful for research collaboration, because it makes it easier to trade information about people's vague, sub-verbal curiosities and intuitions about e.g. "which questions are most worth asking."

Circlers don't generally use this skill for research. But it is the primary skill, I think, that circling is designed to train, and my impression is that many circlers have become relatively excellent at it as a result.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-23T01:05:10.058Z · LW(p) · GW(p)

… something like the theory of mind analog of introspection: something like “feeling, yourself, something of what the person you’re talking with is feeling.” You obviously can’t do this perfectly, but I think many people find that e.g. it’s easier to gain information about why someone is sad, and about how it feels for them to be currently experiencing this sadness, if you use empathy/theory of mind/the thing I think people are often gesturing at when they talk about “mirror neurons,” to try to emulate their sadness in your own brain. To feel a bit of it, albeit an imperfect approximation of it, yourself.

Hmm. I see, thanks.

Now, you say “You obviously can’t do this perfectly”, but it seems to me a dubious proposition even to suggest that anyone (to a first approximation) can do this at all. Even introspection is famously unreliable; the impression I have is that many people think that they can do the thing that you call ‘extrospection’[1], but in fact they can do no such thing, and are deluding themselves. Perhaps there are exceptions—but however uncommon you might intuitively think such exceptions are, they are (it seems to me) probably a couple of orders of magnitude less common than that.

Similarly, I think it’s often easier for one to gain information about why e.g. someone feels excited about pursuing a particular line of inquiry, if one tries to emulate their excitement in one’s own brain. Personally, I’ve found this empathy/emulation skill quite helpful for research collaboration, because it makes it easier to trade information about people’s vague, sub-verbal curiosities and intuitions about e.g. “which questions are most worth asking.”

Do you have any data (other than personal impressions, etc.) that would show or even suggest that this has any practical effect? (Perhaps, examples / case studies?)

[1] By the way, it seems to me like coming up with a new term for this would be useful, on account of the aforementioned namespace collision.

↑ comment by Adam Scholl (adam_scholl) · 2019-12-23T04:07:53.358Z · LW(p) · GW(p)

Thanks for spelling this out. My guess is that there are some semi-deep cruxes here, and that they would take more time to resolve than I have available to allocate at the moment. If Eli someday writes that post about the Nisbett and Wilson paper [LW(p) · GW(p)], that might be a good time to dive in further.

↑ comment by ChristianKl · 2019-12-26T00:27:57.254Z · LW(p) · GW(p)

To do good UX you need to understand the mental models that your users have of your software. You can do that by doing a bunch of explicit A/B tests or you can do that by doing skilled user interviews.

A person who doesn't do skilled user interviews will project a lot of their own mental models of how the software is supposed to work onto users who might have other mental models.

There are a lot of things about how humans relate to the world around them that they normally don't share with other people. People with a decent amount of self-awareness know how they themselves reason, but they don't know how other people reason at the same level of detail.

Circling is about creating an environment where things can be shared that normally aren't. While it's theoretically possible that people lie, it feels good to share one's intimate experience in a safe environment and be understood.

At one LWCW where I led two circles, there was a person who was in both and who afterwards said "I thought I was the only person who does X in two cases where I now know that other people also do X".

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-26T01:39:19.975Z · LW(p) · GW(p)

Do you claim that people who have experience with Circling are better at UX design? I would like some evidence for this claim, if so.

↑ comment by ChristianKl · 2019-12-26T10:56:48.276Z · LW(p) · GW(p)

My main claim is that the activity of doing user interviews is very similar to the experience of doing Circling.

As far as the claim goes of getting better at UX design: UX of things were mental habits matter a lot. It's not as relevant to where you place your buttons but it's very relevant to designing mental intervention in the style that CFAR does.

Evidence is great, but we have few controlled studies of Circling.

↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-26T14:58:28.376Z · LW(p) · GW(p)

My main claim is that the activity of doing user interviews is very similar to the experience of doing Circling.

This is not an interesting claim. Ok, it’s ‘very similar’. And what of it? What follows from this similarity? What can we expect to be the case, given this? Does skill at Circling transfer to skill at conducting user interviews? How, precisely? What specific things do you expect we will observe?

Evidence is great, but we have few controlled studies of Circling.

So… we don’t have any evidence for any of these claims, in other words?

As far as the claim goes of getting better at UX design: UX of things were mental habits matter a lot. It’s not as relevant to where you place your buttons but it’s very relevant to designing mental intervention in the style that CFAR does.

I don’t think I quite understand what you’re saying, here (perhaps due to a typo or two). What does the term ‘UX’ even mean, as you are using it? What does “designing mental intervention” have to do with UX?

↑ comment by Matt Goldenberg (mr-hire) · 2019-12-22T15:50:29.573Z · LW(p) · GW(p)

Not a CFAR staff member, but particularly interested in this comment.

It’s entirely unclear to me what this means, or why it is necessary / desirable.

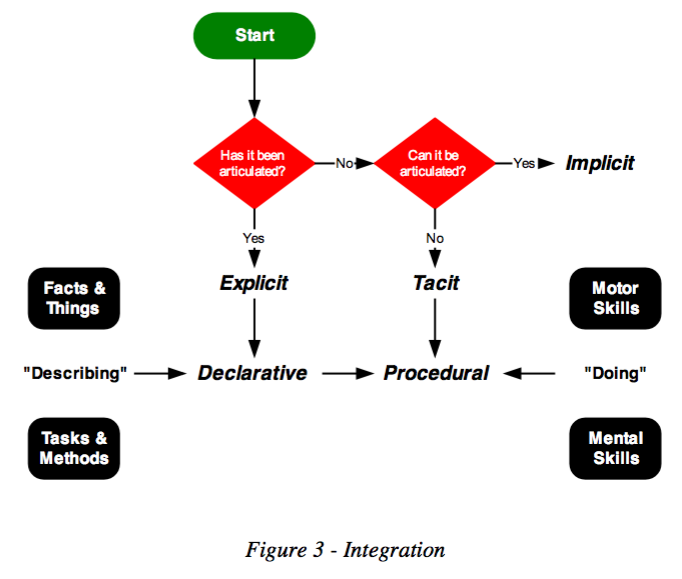

One way to frame this would be getting really good at learning tacit knowledge.

Is there some reason to consider the folks who purvey (as you say) “woo-laden authentic relating games” to be ‘specialists’ here?

One way would be to interact with one of them, notice "hey, this person is really good at this" and then inquire as to how they got so good. This is my experience with seasoned authentic relaters.

Another way would be to realize there's a hole in understanding related to intuitions, and then start searching around for "people who are claiming to be really good at understanding others' intuitions"; this might lead you to run into someone as described above, and then to check whether they are indeed good at the thing.

But I don’t at all see what the case for the usefulness of ‘circling’ and similar woo might be.

Let's say that as a designer, you wanted to impart your intuition of what makes good design. Would you rather have:

1. A newbie designer who has spent hundreds of hours of deliberate practice understanding and being able to transfer models of how someone is feeling/relating to different concepts, and being able to model them in their own mind.

2. A newbie designer who hasn't done that.

To me, that's the obvious use case for circling. I think there's also a bunch of obvious benefits on a group level to being able to relate to people better as well.


↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-22T16:28:58.145Z · LW(p) · GW(p)

One way to frame this would be getting really good at learning tacit knowledge.

Is there some reason to believe that being good at “simulating the felt senses of their conversational partners in their own minds” (whatever this means—still unclear to me) leads to being “really good at learning tacit knowledge”? In fact, is there any reason to believe that being “really good at learning tacit knowledge” is a thing?

One way would be to interact with one of them, notice “hey, this person is really good at this” and then inquire as to how they got so good. This is my experience with seasoned authentic relaters.

Hmm, so in your experience, “seasoned authentic relaters” are really good at “simulating the felt senses of their conversational partners in their own minds”—is that right? If so, then the followup question is: is there some way for me to come into possession of evidence of this claim’s truth, without personally interacting with many (or any) “seasoned authentic relaters”?

Another way would be to realize there’s a hole in understanding related to intuitions

Can you say more about how you came to realize this?

Let’s say that as a designer, you wanted to impart your intuition of what makes good design. Would you rather have:

A newbie designer who has spent hundreds of hours of deliberate practice understanding and being able to transfer models of how someone is feeling/relating to different concepts, and being able to model them in their own mind.

A newbie designer who hasn’t done that.

Well, my first step would be to stop wanting that, because it is not a sensible (or, perhaps, even coherent) thing to want.

However, supposing that I nevertheless persisted in wanting to “impart my intuition”, I would definitely rather have #2 than #1. I would expect that having done what you describe in #1 would hinder, rather than help, the accomplishment of this sort of goal.

↑ comment by Matt Goldenberg (mr-hire) · 2019-12-22T16:51:46.478Z · LW(p) · GW(p)

Is there some reason to believe that being good at “simulating the felt senses of their conversational partners in their own minds” (whatever this means—still unclear to me) leads to being “really good at learning tacit knowledge”?

This requires some model of how intuitions work. One model I like to use is to think of "intuition" as a felt sense or aesthetic that relates to hundreds of little associations you're picking up from a particular situation.

If I'm able to quickly get a sense, in my own mind, of what it feels like for you (i.e., get that same felt sense or aesthetic feel when looking at what you're looking at), and to use circling-like tools to tease out which parts of the environment most contribute to that aesthetic feel, I can quickly create similar associations in my own mind and thus develop similar intuitions.

If so, then the followup question is: is there some way for me to come into possession of evidence of this claim’s truth, without personally interacting with many (or any) “seasoned authentic relaters”?

Possibly you could update by hearing many other people who have interacted with seasoned authentic relaters stating they believe this to be the case.

Can you say more about how you came to realize this?

I mean, to me this was just obvious, seeing for instance how little the rationalists I interact with emphasize things like deliberate practice relative to things like conversation and explicit thinking. I'm not sure how CFAR recognized it.

However, supposing that I nevertheless persisted in wanting to “impart my intuition”, I would definitely rather have #2 than #1. I would expect that having done what you describe in #1 would hinder, rather than help, the accomplishment of this sort of goal.

I think this is a coherent stance if you think the general "learning intuitions" skill is impossible. But imagine if it weren't, would you agree that training it would be useful?

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-22T21:10:47.489Z · LW(p) · GW(p)

This requires some model of how intuitions work. One model I like to use is […]

Hmm. It’s possible that I don’t understand what you mean by “felt sense”. Do you have a link to any discussion of this term / concept?

That aside, the model you have sketched seems implausible to me; but, more to the point, I wonder what rent it pays? Perhaps it might predict, for example, that certain people might be really good at learning tacit knowledge, etc.; but then the obvious question becomes: fair enough, and how do we test these predictions?

In other words, “my model of intuitions predicts X” is not a sufficient reason to believe X, unless those predictions have been borne out somehow, or the model validated empirically, or both. As always, some examples would be useful.

Possibly you could update by hearing many other people who have interacted with seasoned authentic relaters stating they believe this to be the case.

It is not clear to me whether this would be evidence (in the strict Bayesian sense); is it more likely that the people from whom I have heard such things would make these claims if they were true than otherwise? I am genuinely unsure, but even if the answer is yes, the odds ratio is low; if evidence, it’s a very weak form thereof.

Conversely, if this sort of thing is the only form of evidence put forth, then that itself is evidence against, as it were!

I mean, to me this was just obvious, seeing for instance how little the rationalists I interact with emphasize things like deliberate practice relative to things like conversation and explicit thinking. I’m not sure how CFAR recognized it.

Hmm, I am inclined to agree with your observation re: deliberate practice. It does seem puzzling to me that the solution to the (reasonable) view “intuition is undervalued, and as a consequence deliberate practice is under-emphasized” would be “let’s try to understand intuition, via circling etc.” rather than “let’s develop intuitions, via deliberate practice, whereupon the results will speak for themselves, and this will also lead to improved understanding”. (Corollary question: have the efforts made toward understanding intuitions yielded an improved emphasis on deliberate practice, and have the results thereof been positive and obvious?)

I think this is a coherent stance if you think the general “learning intuitions” skill is impossible. But imagine if it weren’t, would you agree that training it would be useful?

Indeed, I would, but notice that what you’re asking is different than what you asked before.

In your earlier comment, you asked whether I would find it useful (in the hypothetical “newbie designer” situation) to be dealing with someone who had undertaken a lot of “deliberate practice understanding and being able to transfer models of how someone is feeling/relating to different concepts, and being able to model them in their own mind”.

Now, you are asking whether I judge “training … the general ‘learning intuitions’ skill” to be useful.

Your questions imply that these are the same thing. But (even in the hypothetical case where there is such a thing as the latter) they are not!

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2019-12-22T23:32:06.065Z · LW(p) · GW(p)

The Wikipedia article on Gendlin's Focusing has a section trying to describe felt sense; leaving out the specific part about "the body", the first part says:

"Gendlin gave the name "felt sense" to the unclear, pre-verbal sense of "something"—the inner knowledge or awareness that has not been consciously thought or verbalized",

Which is fairly close to my use of it here.

That aside, the model you have sketched seems implausible to me; but, more to the point, I wonder what rent it pays? Perhaps it might predict, for example, that certain people might be really good at learning tacit knowledge, etc.; but then the obvious question becomes: fair enough, and how do we test these predictions?

One thing it might predict is that there are ways to train the transfer of intuition, from both the teaching and learning side of things, and that by teaching them people get better at picking up intuitions.

Hmm, I am inclined to agree with your observation re: deliberate practice. It does seem puzzling to me that the solution to the (reasonable) view “intuition is undervalued, and as a consequence deliberate practice is under-emphasized”

I do believe CFAR at one point was teaching deliberate practice and calling it "turbocharged training". However, if one is really interested in intuition and thinks it's useful, the next obvious step is to ask "ok, I have this blunt instrument for teaching intuition called deliberate practice, can we use an understanding of how intuitions work to improve upon it?"

Your questions imply that these are the same thing. But (even in the hypothetical case where there is such a thing as the latter) they are not!

Good catch; this assumes that my simplified model of how intuitions work is at least partly correct. If the felt sense you get from a particular situation doesn't relate to intuition, or if it's impossible for one human being to get better at feeling what another is feeling, then these are not equivalent. I happen to think both are true.

Replies from: SaidAchmiz, Benito↑ comment by Said Achmiz (SaidAchmiz) · 2019-12-23T01:14:49.608Z · LW(p) · GW(p)

[Gendlin’s definition]

I see, thanks.

One thing it might predict is that there are ways to train the transfer of intuition, from both the teaching and learning side of things, and that by teaching them people get better at picking up intuitions.

Well, my question stands. That is a prediction, sure (if a vague one), but now how do we test it? What concrete observations would we expect, and which are excluded, etc.? What has actually been observed? I’m talking specifics, now; data or case studies—but in any case very concrete evidence, not generalities!

I do believe CFAR at one point was teaching deliberate practice and calling it “turbocharged training”. However, if one is really interested in intuition and thinks it’s useful, the next obvious step is to ask “ok, I have this blunt instrument for teaching intuition called deliberate practice, can we use an understanding of how intuitions work to improve upon it?”

Yes… perhaps this is true. Yet in this case, we would expect to continue to use the available instruments (however blunt they may be) until such time as sharper tools are (a) available, and (b) have been firmly established as being more effective than the blunt ones. But it seems to me like neither (a) (if I’m reading your “at one point” comment correctly), nor (b), is the case here?

Really, what I don’t think I’ve seen, in this discussion, is any of what I, in a previous comment, referred to as “the cake”. This continues to trouble me!

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2019-12-23T03:08:50.225Z · LW(p) · GW(p)

I suspect the CFARians have more delicious cake for you, as I haven't put that much time into circling, and the related connection skills I worked on more than a decade ago have atrophied since.

Things I remember:

- much quicker connection with people

- there were a few things, like exercise, that I wasn't passionate about but wanted to be. After talking with people who were passionate about them, I was able to become passionate about those things myself

- I was able to more quickly learn social cognitive strategies by interacting with others who had them.

↑ comment by philh · 2019-12-23T10:19:29.472Z · LW(p) · GW(p)

To suggest something more concrete... would you predict that if an X-ist wanted to pass a Y-ist's ITT, they would have more success if the two of them sat down to circle beforehand? Relative to doing nothing, and/or relative to other possible interventions like discussing X vs Y? For values of X and Y like Democrat/Republican, yay-SJ/boo-SJ, cat person/dog person, MIRI's approach to AI/Paul Christiano's approach?

It seems to me that (roughly speaking) if circling was more successful than other interventions, or successful on a wider range of topics, that would validate its utility. Said, do you agree?

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2019-12-23T14:25:58.091Z · LW(p) · GW(p)

Yes, although I expect the utility of circling over other methods to be dependent on the degree to which the ITT is based on intuitions.

↑ comment by Ben Pace (Benito) · 2019-12-22T23:57:39.792Z · LW(p) · GW(p)

I always think of 'felt sense' as, not just pre-verbal intuitions, but intuitions associated with physical sensations, be they in my head, shoulders, stomach, etc.

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2019-12-23T00:11:41.648Z · LW(p) · GW(p)

I think that Gendlin thinks all pre-verbal intuitions are represented with physical sensations.

I don't agree with him but still use the felt-sense language in these parts because rationalists seem to know what I'm talking about.

Replies from: adam_scholl, mr-hire↑ comment by Adam Scholl (adam_scholl) · 2019-12-23T01:02:12.390Z · LW(p) · GW(p)

Yeah, same; I think this term has experienced some semantic drift, which is confusing. I meant to refer to pre-verbal intuitions in general, not just ones accompanied by physical sensation.

↑ comment by Matt Goldenberg (mr-hire) · 2019-12-23T02:54:44.734Z · LW(p) · GW(p)

Also in particular - felt sense refers to the qualia related to intuitions, rather than the intuitions themselves.

Replies from: adam_scholl↑ comment by Adam Scholl (adam_scholl) · 2019-12-23T03:59:31.263Z · LW(p) · GW(p)

(Unsure, but I'm suspicious that the distinction between these two things might not be clear).

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2019-12-23T14:43:08.751Z · LW(p) · GW(p)

Yes, I think there's a distinction here. The semantic content of "My intuition is that Design A is better than Design B" refers to how the intuition "caches out" in terms of decisions. This contrasts with the felt sense, which always seems to refer to what the intuition is like "from the inside": for example, a sense of unease when looking at Design A, and of rightness when looking at Design B.

I feel like using the word "intuition" can refer to both the latter and the former, whereas when I say "felt sense" it always refers to the latter.

↑ comment by Howie Lempel (howie-lempel) · 2019-12-22T15:08:02.915Z · LW(p) · GW(p)

"For example, we spent a bunch of time circling for a while"

Does this imply that CFAR now spends substantially less time circling? If so and there's anything interesting to say about why, I'd be curious.

Replies from: adam_scholl↑ comment by Adam Scholl (adam_scholl) · 2019-12-23T00:42:06.911Z · LW(p) · GW(p)

CFAR does spend substantially less time circling now than it did a couple years ago, yeah. I think this is partly because Pete (who spent time learning about circling when he was younger, and hence found it especially easy to notice the lack of circling-type skill among rationalists, much as I spent time learning about philosophy when I was younger and hence found it especially easy to notice the lack of philosophy-type skill among AIRCS participants) left, and partly I think because many staff felt like their marginal skill returns from circling practice were decreasing, so they started focusing more on other things.

↑ comment by Kaj_Sotala · 2019-12-21T14:12:45.835Z · LW(p) · GW(p)

Whether CFAR staff (qua CFAR staff, as above) will help educate people who later themselves produce explicit knowledge in the manner valued by Gwern, Wei Dai, or Scott Alexander, and who wouldn’t have produced (as much of) that knowledge otherwise.

This seems like a good moment to publicly note that I probably would not have started writing my multi-agent sequence without having a) participated in CFAR's mentorship training and b) had conversations with/about Val and his posts.

↑ comment by AnnaSalamon · 2019-12-22T05:14:09.435Z · LW(p) · GW(p)

With regard to whether our staff has read the sequences: five have, and have been deeply shaped by them; two have read about a third, and two have read little. I do think it’s important that our staff read them, and we decided to run this experiment with sabbatical months next year in part to ensure our staff had time to do this over the coming year.

↑ comment by orthonormal · 2019-12-20T23:59:38.114Z · LW(p) · GW(p)

I honestly think, in retrospect, that the linchpin of early CFAR's standard of good shared epistemics was probably Critch.

Replies from: AnnaSalamon↑ comment by AnnaSalamon · 2019-12-21T18:10:28.904Z · LW(p) · GW(p)